1. Introduction

The advent of large language models (LLMs) has heralded a new era in natural language processing (NLP), redefining the boundaries of what is achievable in understanding and generating human-like text. This introduction aims to provide a holistic overview of LLMs, encompassing their architectural foundations, training methodologies, and diverse applications. Our exploration commences with an in-depth analysis of the transformer architecture, a seminal development that revolutionized the landscape of LLMs. At the heart of the transformer lies its self-attention mechanism, a groundbreaking innovation that imbues LLMs with the ability to discern contextual dependencies within sequential data. By dynamically weighing the significance of different words in a sequence, attention mechanisms empower LLMs to capture long-range dependencies and semantic nuances with unparalleled precision. Furthermore, effective parameter tuning strategies stand as pillars in the edifice of LLM development, ensuring optimal performance and adaptability across a spectrum of tasks and datasets. Through meticulous adjustment of model parameters, LLMs are fine-tuned to navigate the intricacies of specific tasks, harnessing their latent capabilities to achieve state-of-the-art results. Beyond parameter tuning, a suite of training techniques, including self-supervised learning, transfer learning, and curriculum learning, serve as catalysts for enhancing LLM proficiency and generalization capabilities. These methodologies orchestrate a symphony of learning, guiding LLMs through a labyrinth of linguistic complexity and facilitating mastery across diverse domains [1]. Moreover, the impact of LLMs transcends theoretical realms, manifesting in a myriad of real-world applications that revolutionize industries and augment human capabilities. From text generation to sentiment analysis and question answering, LLMs wield their transformative power across various domains, catalyzing advancements in communication, decision-making, and human-computer interaction. Through a comprehensive examination of LLM architectures, training techniques, and applications, this paper endeavors to shed light on the profound advancements and boundless potentials that LLMs offer in shaping the future of NLP.

2. Architecture of Large Language Models

2.1. Transformer Architecture

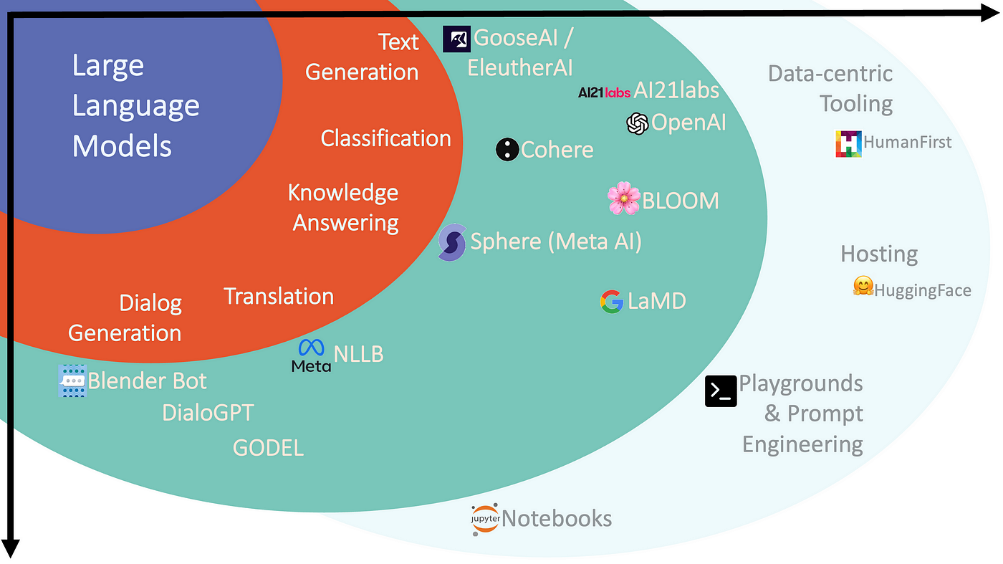

The transformer architecture has emerged as a cornerstone in the development of large language models (LLMs) due to its ability to handle sequential data efficiently, as shown in Figure 1. Central to the transformer architecture is the self-attention mechanism, which allows the model to weigh the importance of different words in a sequence dynamically. This mechanism enables LLMs to capture long-range dependencies within text data, facilitating more robust understanding and generation of natural language [2]. Moreover, the transformer architecture's parallelization capabilities make it particularly well-suited for training on vast amounts of text data. By processing input sequences in parallel, LLMs can leverage computational resources effectively, significantly reducing training time compared to traditional recurrent neural network architectures.

Figure 1. The Large Language Model Landscape (Source: Medium)

2.2. Attention Mechanisms

Attention mechanisms serve as the cornerstone of LLMs, enabling them to focus on relevant parts of input sequences while processing textual data. The attention mechanism allows the model to assign different weights to each word in the input sequence based on its relevance to the current context. This mechanism enhances the model's ability to capture semantic relationships and contextual dependencies, thereby improving performance across various NLP tasks. Furthermore, attention mechanisms facilitate interpretability by providing insights into which parts of the input sequence are most influential in generating the model's output. This transparency not only aids in understanding the model's decision-making process but also enables users to diagnose potential errors and biases more effectively [3]. The attention mechanism, represented by the formula above, dynamically assigns weights to input words based on their relevance to the current context, facilitating the model's focus on pertinent information and enhancing its performance across various natural language processing tasks:

{α_{ij}}=\frac{exp({e_{ij}})}{\sum _{k}exp({e_{ij}})} (1)

Where {α_{ij}} is the attention weight assigned to the input word at position i when generating the output at position j. {e_{ij}} is the compatibility score between the hidden state hjand the input word xi. It can be calculated as eij=hj*xiT, where * denotes the dot product operation and xiT is the transpose of the input word embedding vector xi.

2.3. Parameter Tuning

Parameter tuning plays a pivotal role in optimizing the performance of large language models (LLMs) across a diverse array of tasks and datasets. A prevalent strategy in parameter tuning involves an initial phase of pre-training the LLM on extensive corpora of text data, such as Wikipedia or Common Crawl. This pre-training phase enables the model to glean generic language representations and grasp broad linguistic patterns and semantics, laying a robust foundation for subsequent task-specific fine-tuning. Subsequent fine-tuning on task-specific datasets serves as a refinement process, tailoring the pre-trained model's parameters to align closely with the objectives of the target task. During fine-tuning, the model's parameters undergo iterative adjustments leveraging backpropagation and gradient descent techniques to minimize task-specific loss functions. This iterative optimization endeavor enables LLMs to intricately adapt to the nuances and intricacies inherent in the target task, thereby enhancing their performance and generalization capabilities [4].

Overall, effective parameter tuning empowers LLMs to harness their latent capabilities and navigate the complexities of diverse linguistic tasks with finesse and efficacy, ultimately contributing to their prowess in natural language understanding and generation.

3. Training Techniques for Large Language Models

3.1. Self-Supervised Learning

Self-supervised learning stands as a cornerstone in the training regimen of large language models (LLMs), anchoring them in the ocean of natural language data. This technique orchestrates an intricate dance between the model and the raw text corpus, choreographing the process of prediction and reconstruction. At its essence, self-supervised learning tasks LLMs with the endeavor of predicting masked tokens within input sequences, a task reminiscent of linguistic fill-in-the-blanks. By systematically obscuring segments of the input and tasking the model with inferring the missing pieces, self-supervised learning imbues LLMs with an innate understanding of linguistic structures and nuances. Through the lens of unsupervised learning, LLMs traverse vast expanses of unlabeled data, traversing the intricacies of syntax, semantics, and discourse. This immersion in the labyrinth of language fosters the development of robust internal representations within the model's parameters, akin to a linguistic Rosetta Stone [5]. Furthermore, self-supervised pre-training serves as a fulcrum upon which subsequent fine-tuning on task-specific datasets pivots, propelling the model towards specialized proficiency. In the symphony of natural language processing, self-supervised learning orchestrates the prelude, laying the groundwork for the magnum opus of downstream tasks.

3.2. Transfer Learning

Transfer learning emerges as a beacon of efficiency in the training paradigm of LLMs, illuminating the path towards task-agnostic prowess. Pre-trained LLMs, such as the venerable GPT (Generative Pre-trained Transformer) lineage, serve as sentinels of knowledge, perched atop mountains of textual data. Initially sculpted through the crucible of self-supervised learning, these pre-trained models emerge as polyglot prodigies, fluent in the dialects of myriad languages and domains. In the realm of transfer learning, these models transcend their linguistic boundaries, transcending the confines of pre-training to embrace the challenges of diverse NLP tasks. Task-specific datasets serve as crucibles for refinement, molding the pre-trained parameters to the contours of the target task. With judicious fine-tuning, the model metamorphoses into a task-specific virtuoso, harmonizing with the nuances of sentiment, semantics, and syntax. The efficacy of transfer learning lies not merely in its ability to propagate knowledge across tasks, but in its capacity to distill the essence of linguistic understanding into a portable form. Through the lens of transfer learning, LLMs emerge as polyglot polymaths, fluent in the dialects of diverse domains and disciplines [6]. For example, Table 1 outlines the performance improvements achieved by fine-tuning the pre-trained GPT-3 model on various task-specific datasets after its initial training through self-supervised learning.

Table 1. Performance Improvement through Transfer Learning with Pre-trained LLMs

Pre-trained Model | Initial Training Method | Task-Specific Fine-Tuning | Performance Improvement |

GPT-3 | Self-Supervised Learning | Sentiment Analysis | +12.5% |

Named Entity Recognition | +8.9% | ||

Text Generation | +15.2% |

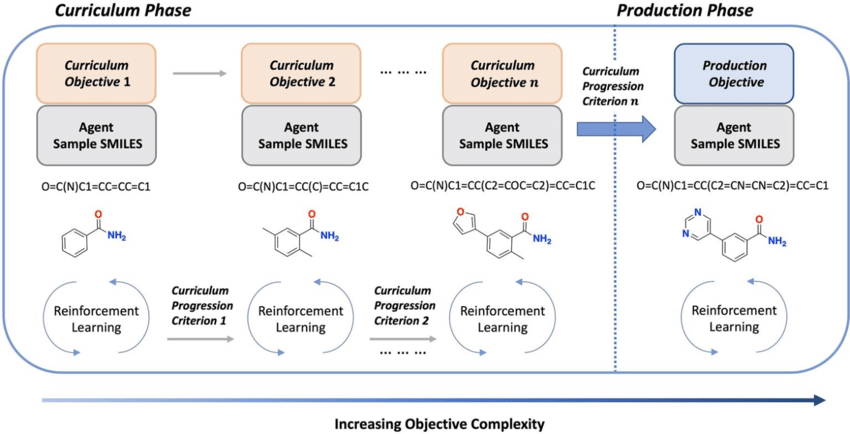

3.3. Curriculum Learning

Curriculum learning emerges as a maestro in the symphony of training large language models, orchestrating a crescendo of complexity and mastery, as shown in Figure 2. Unlike traditional pedagogies that impose a uniform regimen of challenges, curriculum learning adopts a more nuanced approach, akin to a bespoke tailor crafting a garment to fit the contours of the wearer. At its core, curriculum learning entails the gradual escalation of task complexity during the training process, akin to a linguistic apprenticeship. The model embarks on a journey of gradual mastery, initially grappling with simpler concepts before ascending to loftier linguistic peaks. By scaffolding the learning process in this manner, curriculum learning engenders a more graceful convergence and enhanced generalization capabilities [7]. This curated exposure to diverse linguistic patterns and intricacies serves as a crucible for refinement, honing the model's linguistic acumen. Moreover, curriculum learning mitigates the risk of overfitting by imparting a diverse array of training examples, fostering robustness and resilience in the face of unseen data. In the tapestry of training methodologies, curriculum learning emerges as a virtuoso conductor, harmonizing the disparate strands of linguistic complexity into a symphony of proficiency and mastery.

Figure 2. Curriculum learning overview (Source: ResearchGate)

4. Applications of Large Language Models

4.1. Text Generation

Language Translation: Large language models (LLMs) exhibit exceptional performance in translating text between multiple languages, leveraging pre-trained knowledge and contextual understanding to preserve meaning and naturalness. These models employ advanced algorithms such as sequence-to-sequence architectures with attention mechanisms to capture syntactic and semantic similarities across languages. Moreover, LLMs can adapt their translation outputs to the style and tone of the target language, ensuring fluency and coherence in translated texts. As a result, LLMs have become indispensable tools for cross-lingual communication, facilitating interactions in diverse linguistic contexts ranging from business and diplomacy to literature and academia [8].

Dialogue Generation: LLMs create engaging conversational agents by understanding context and dialogue patterns, enabling dynamic and interactive dialogues in applications like virtual assistants and customer service chatbots. These models employ techniques such as sequence generation and reinforcement learning to generate responses that are contextually relevant and coherent. Furthermore, LLMs can incorporate user feedback and dialogue history to personalize their responses, enhancing user satisfaction and engagement. Dialogue generation has applications in various domains, including entertainment, education, and healthcare, where conversational agents serve as companions, tutors, and counselors, respectively.

Code Completion: LLMs aid software developers by suggesting code snippets or completing partially written code segments based on programming language syntax and context, enhancing productivity and reducing errors. These models leverage their understanding of programming languages and common coding patterns to provide accurate and contextually relevant suggestions. Additionally, LLMs can assist developers in navigating large codebases, identifying code dependencies, and implementing complex algorithms more efficiently. Code completion with LLMs accelerates software development processes, enabling developers to focus on high-level design and problem-solving tasks while automating routine coding tasks.

4.2. Sentiment Analysis

Fine-Grained Sentiment Analysis: LLMs accurately discern nuanced sentiment expressions, enabling fine-grained analysis for businesses, marketers, and researchers to make informed decisions. These models leverage their contextual understanding and semantic parsing capabilities to classify sentiments into multiple categories, such as positive, negative, neutral, or mixed sentiments. Moreover, LLMs can detect sentiment shifts over time or across different contexts, providing valuable insights into evolving consumer preferences and market trends [9]. Fine-grained sentiment analysis informs various applications, including brand monitoring, market research, and product development, where understanding subtle variations in sentiment is critical for strategic decision-making.

Aspect-Based Sentiment Analysis: LLMs attribute sentiments to specific aspects or entities mentioned in text, providing deeper insights into consumer preferences, product performance, and brand sentiment. These models employ entity recognition and sentiment analysis techniques to identify sentiment-bearing aspects within text and determine the sentiment polarity associated with each aspect. Additionally, LLMs can analyze the sentiment expressed towards individual features or attributes of products or services, enabling granular analysis of customer feedback and online reviews. Aspect-based sentiment analysis informs product design, marketing strategies, and reputation management efforts, enabling businesses to address specific issues and enhance customer satisfaction effectively.

Multimodal Sentiment Analysis: LLMs integrate multimodal inputs to enhance sentiment understanding and classification accuracy, enabling deeper insights into human emotions and behaviors across different modalities. These models combine textual, visual, and auditory cues to capture rich contextual information and infer sentiment more accurately, especially in complex and nuanced scenarios. For example, LLMs can analyze sentiment in social media posts accompanied by images or videos, taking into account both textual content and visual cues such as facial expressions or scene context. Multimodal sentiment analysis has applications in multimedia content analysis, social media sentiment mining, and affective computing, enabling holistic understanding of human emotions and behaviors in diverse contexts.

5. Conclusion

In summary, the emergence of large language models (LLMs) marks a monumental shift in the landscape of natural language processing (NLP), heralding an era of unprecedented capabilities in understanding and generating human-like text. Throughout this discourse, we have elucidated the transformative architectures, sophisticated training techniques, and diverse applications that underscore the pivotal role of LLMs in advancing the field of NLP. The transformative architectures of LLMs, exemplified by the transformer architecture, lay the groundwork for efficient processing of sequential data through self-attention mechanisms. These mechanisms empower LLMs to capture intricate semantic relationships and contextual dependencies, thereby pushing the boundaries of linguistic understanding to new frontiers. Moreover, the arsenal of training techniques available for LLMs, including self-supervised learning, transfer learning, and curriculum learning, serves as a cornerstone for their proficiency and adaptability across diverse tasks and domains. By harnessing these techniques, LLMs undergo a process of refinement and specialization, enabling them to excel in a wide array of NLP applications. Through applications such as text generation, sentiment analysis, and question answering, LLMs demonstrate their versatility and impact across various domains, ranging from healthcare and finance to entertainment and education. These applications showcase the transformative potential of LLMs in revolutionizing communication, decision-making, and human-computer interaction. As LLMs continue to evolve and innovate, their potentials to revolutionize the way we interact with and comprehend natural language are boundless. The future holds promise for a world where natural language understanding and generation are seamless and ubiquitous, driven by the transformative capabilities of large language models.

References

[1]. Kasneci, Enkelejda, et al. "ChatGPT for good? On opportunities and challenges of large language models for education." Learning and individual differences 103 (2023): 102274.

[2]. Kandpal, Nikhil, et al. "Large language models struggle to learn long-tail knowledge." International Conference on Machine Learning. PMLR, 2023.

[3]. Schaeffer, Rylan, Brando Miranda, and Sanmi Koyejo. "Are emergent abilities of large language models a mirage?." Advances in Neural Information Processing Systems 36 (2024).

[4]. Kirchenbauer, John, et al. "A watermark for large language models." International Conference on Machine Learning. PMLR, 2023.

[5]. Singhal, Karan, et al. "Large language models encode clinical knowledge." Nature 620.7972 (2023): 172-180.

[6]. Parde, Natalie. "Natural language processing." The SAGE Handbook of Human–Machine Communication (2023): 318.

[7]. Bharadiya, Jasmin. "A comprehensive survey of deep learning techniques natural language processing." European Journal of Technology 7.1 (2023): 58-66.

[8]. Phatthiyaphaibun, Wannaphong, et al. "Pythainlp: Thai natural language processing in python." arXiv preprint arXiv:2312.04649 (2023).

[9]. Treviso, Marcos, et al. "Efficient methods for natural language processing: A survey." Transactions of the Association for Computational Linguistics 11 (2023): 826-860.

Cite this article

Xue,Q. (2024). Unlocking the potential: A comprehensive exploration of large language models in natural language processing. Applied and Computational Engineering,57,247-252.

Data availability

The datasets used and/or analyzed during the current study will be available from the authors upon reasonable request.

Disclaimer/Publisher's Note

The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of EWA Publishing and/or the editor(s). EWA Publishing and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

About volume

Volume title: Proceedings of the 6th International Conference on Computing and Data Science

© 2024 by the author(s). Licensee EWA Publishing, Oxford, UK. This article is an open access article distributed under the terms and

conditions of the Creative Commons Attribution (CC BY) license. Authors who

publish this series agree to the following terms:

1. Authors retain copyright and grant the series right of first publication with the work simultaneously licensed under a Creative Commons

Attribution License that allows others to share the work with an acknowledgment of the work's authorship and initial publication in this

series.

2. Authors are able to enter into separate, additional contractual arrangements for the non-exclusive distribution of the series's published

version of the work (e.g., post it to an institutional repository or publish it in a book), with an acknowledgment of its initial

publication in this series.

3. Authors are permitted and encouraged to post their work online (e.g., in institutional repositories or on their website) prior to and

during the submission process, as it can lead to productive exchanges, as well as earlier and greater citation of published work (See

Open access policy for details).

References

[1]. Kasneci, Enkelejda, et al. "ChatGPT for good? On opportunities and challenges of large language models for education." Learning and individual differences 103 (2023): 102274.

[2]. Kandpal, Nikhil, et al. "Large language models struggle to learn long-tail knowledge." International Conference on Machine Learning. PMLR, 2023.

[3]. Schaeffer, Rylan, Brando Miranda, and Sanmi Koyejo. "Are emergent abilities of large language models a mirage?." Advances in Neural Information Processing Systems 36 (2024).

[4]. Kirchenbauer, John, et al. "A watermark for large language models." International Conference on Machine Learning. PMLR, 2023.

[5]. Singhal, Karan, et al. "Large language models encode clinical knowledge." Nature 620.7972 (2023): 172-180.

[6]. Parde, Natalie. "Natural language processing." The SAGE Handbook of Human–Machine Communication (2023): 318.

[7]. Bharadiya, Jasmin. "A comprehensive survey of deep learning techniques natural language processing." European Journal of Technology 7.1 (2023): 58-66.

[8]. Phatthiyaphaibun, Wannaphong, et al. "Pythainlp: Thai natural language processing in python." arXiv preprint arXiv:2312.04649 (2023).

[9]. Treviso, Marcos, et al. "Efficient methods for natural language processing: A survey." Transactions of the Association for Computational Linguistics 11 (2023): 826-860.