1. Introduction

Artificial intelligence has fundamentally transformed knowledge management processes, revolutionizing the ways in which knowledge is acquired, developed, and disseminated in the 21st century [1,2]. This transformation has been dramatically accelerated by the advent of large language models, exemplified by ChatGPT. These models, representing a significant advancement in computational technology [3], possess the capability to generate novel, contextually relevant, and semantically meaningful content in response to various inputs and instructions. Their remarkable versatility enables them to address queries across diverse domains [4,5], establishing them as invaluable tools in numerous sectors, including education, scientific research, and healthcare. Studies have documented an exponential growth in both the user base and application scope of intelligent assistants, with users reporting enhanced productivity, improved work efficiency, and increased task satisfaction [3,4,6]. This rapid expansion in both the breadth and frequency of intelligent assistant utilization underscores the pressing need to comprehensively understand the underlying mechanisms of human-machine interaction.

The evolution of human knowledge co-creation models has paralleled advancements in computational technology. Initially, knowledge creation was exclusively a human-to-human process. Subsequently, the advent of knowledge management systems and knowledge bases introduced machines as auxiliary tools in the knowledge creation process. Currently, we are witnessing a paradigm shift where intelligent assistants are transitioning from auxiliary tools to primary participants in knowledge creation [5,7]. These intelligent assistants, powered by large language models, exhibit sophisticated language comprehension and generation capabilities that transcend basic information retrieval and instruction execution, enabling them to engage in complex problem-solving, creative generation, and knowledge creation processes [5]. Within this emerging human-machine collaborative framework, intelligent assistants serve not merely as information providers but as active partners in knowledge creation through interactive dialogue. This transformation, spearheaded by intelligent assistants, represents a fundamental disruption of traditional human-centric knowledge co-creation paradigms, heralding the emergence of a novel human-machine knowledge co-creation model. While preliminary investigations have been conducted [8], a comprehensive theoretical framework remains undeveloped. Thus, there is an urgent need to thoroughly examine the key variables, underlying mechanisms, and theoretical foundations of this nascent human-machine knowledge co-creation model to advance our understanding of knowledge co-creation mechanisms in the digital age.

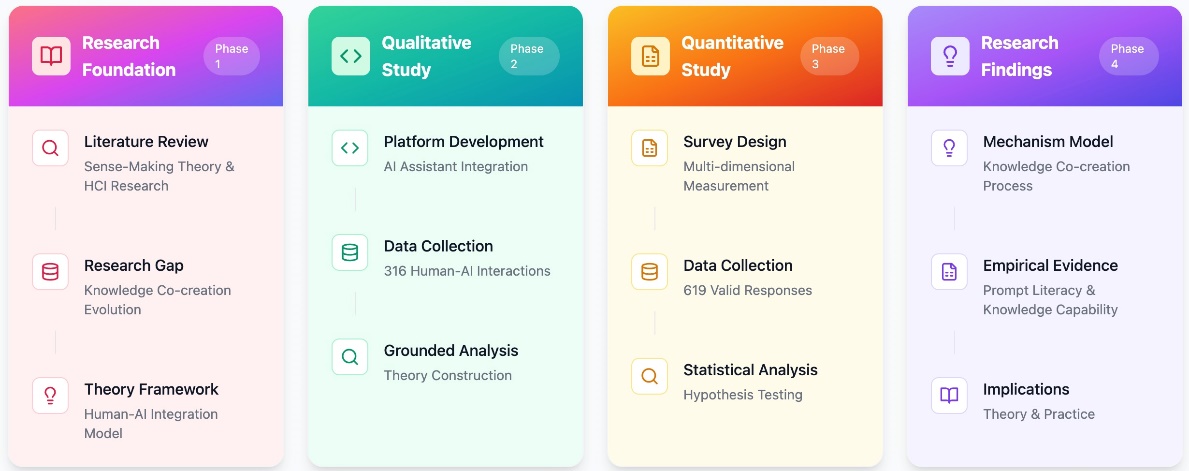

To address these research imperatives, we employ a mixed-methodology approach grounded in Sense-Making Theory to investigate the human-intelligent assistant interaction process. The study’s methodological framework, depicted in Figure 1, encompasses four sequential phases: research foundation establishment, qualitative investigation, quantitative analysis, and findings synthesis, collectively facilitating the systematic construction and validation of a human-machine knowledge co-creation model. Initially, we developed a bespoke human-machine interaction platform to systematically collect interaction data from recruited participants. Through rigorous application of Grounded Theory methodology to these interaction logs and supplementary materials, we derived a comprehensive model of human-machine knowledge co-creation. Subsequently, we formulated testable hypotheses based on this theoretical model, developed measurement instruments for key variables, and conducted empirical validation to assess the model’s robustness and validity.

Figure 1. Research framework

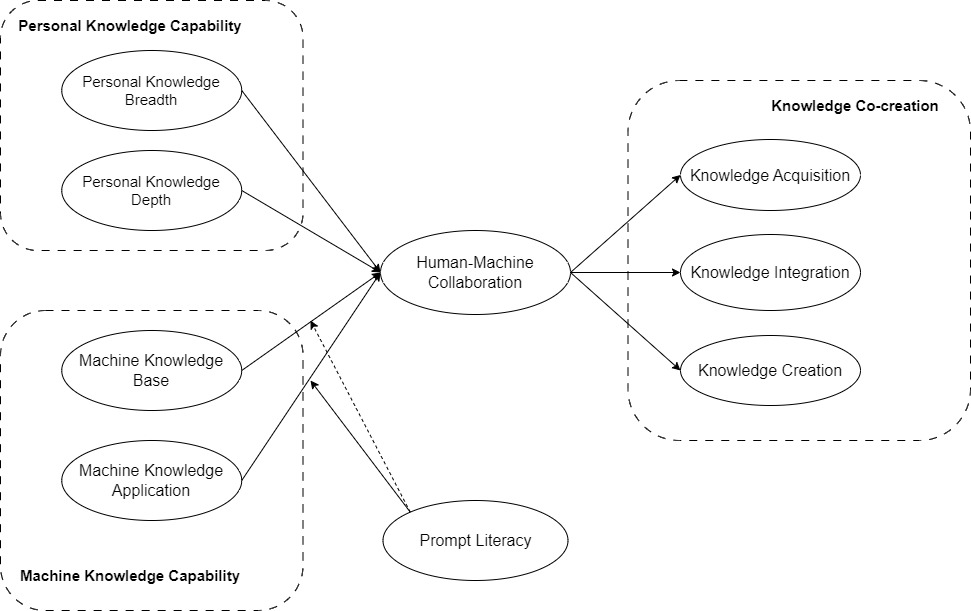

This research advances theoretical understanding in several significant ways. Primarily, it extends the application of Sense-Making Theory to illuminate the human-machine knowledge co-creation process, providing an innovative theoretical framework for comprehending cognitive mechanisms in human-machine interaction. Secondly, while extant literature has predominantly offered preliminary qualitative insights into human-machine interaction processes [8], our study develops a comprehensive theoretical architecture that integrates multiple dimensions: personal knowledge capability, machine knowledge capability, human-machine collaboration, prompt literacy, and knowledge co-creation. This integrated model establishes a systematic theoretical foundation for understanding the dynamics of knowledge creation in human-machine interaction contexts. Furthermore, we introduce and empirically validate prompt literacy as a critical construct in human-machine knowledge co-creation. This theoretical extension significantly broadens the conceptual boundaries of information literacy research by incorporating technical elements of prompt engineering into user-centered research paradigms, thereby offering novel perspectives on the determinants of effective human-machine interaction.

2. Literature review

2.1. Sense-making theory

Sense-Making Theory posits that individuals develop knowledge, interpretations, and understanding of their environment through sustained dialogic interactions. The sense-making process comprises three fundamental elements: cues, frames, and the interconnections between them. Cues constitute environmental stimuli that catalyze individuals’ motivation to comprehend situations, while frames represent structured knowledge repositories encompassing rules and values that guide understanding. Meaning emerges when individuals establish cognitive linkages between frames and cues [9-12]. Zhang et al. synthesized existing sense-making models, emphasizing that sense-making represents a knowledge construction process, whether undertaken individually or collectively. Despite variations across different models, they share a common foundational pattern: an iterative cycle between information search and meaningful structure creation [9]. Contemporary scholars have increasingly applied Sense-Making Theory to elucidate theoretical underpinnings in human-machine interaction research: Qian and Fang [13] investigated demand interpretation through a sense-making lens to address algorithm aversion; Gero et al. [14] enhanced large language model system design using Sense-Making Theory principles; and Li et al. [8] conducted qualitative analyses of knowledge workers’ sense-making processes in human-machine interactions. Building upon this theoretical foundation, our research conceptualizes human-intelligent assistant knowledge co-creation as a sense-making process, wherein multiple rounds of environmentally-informed interactions between humans and intelligent assistants facilitate the reconstruction of personal knowledge structures. This theoretical framework provides valuable insights into the mechanisms underlying human-machine knowledge co-creation.

2.2. Current status of human-machine interaction research

Contemporary research in human-machine interaction primarily encompasses two distinct domains [15-20]. The first domain focuses on human-machine collaboration in physical systems, predominantly addressing industrial and manufacturing applications designed to enhance professional task accomplishment [15-18]. The integration of deep learning methodologies into natural language processing has catalyzed significant advancements in natural language understanding, speech recognition, and gesture interpretation, leading to the development of intelligent systems across diverse production and service contexts [19]. These investigations primarily aim to optimize human-machine system performance through systematic improvements in operational design and development [21]. The second domain examines human-machine collaborative relationships, encompassing critical areas such as human-machine trust [22,23], algorithm aversion [22,24], and collaborative dynamics [20]. This research stream emphasizes the intricate interplay between technological systems and human behavioral, cognitive, and social dimensions. While existing literature has established a robust foundation for understanding human-machine collaboration, it has predominantly concentrated on machine utilization rather than co-creation processes. Consequently, our research adopts a knowledge-centric perspective to investigate the mechanisms underlying human-intelligent assistant interaction, specifically examining the processes through which humans and intelligent machines collaboratively generate knowledge.

2.3. Evolution of knowledge co-creation models

The evolution of knowledge co-creation models has progressed through three distinct developmental stages. The initial stage, characterized by traditional knowledge creation, is exemplified by Ikujiro Nonaka’s seminal SECI model. This framework conceptualizes knowledge creation as an iterative cycle through four modes: socialization, externalization, combination, and internalization, with new knowledge emerging from the dynamic interplay between explicit and tacit knowledge [25]. Within this theoretical framework, knowledge creation is exclusively human-centric, with knowledge generation and transformation occurring through sustained interpersonal communication and practice.

The second stage represents technology-assisted knowledge creation, marked by the emergence of information systems and knowledge management platforms. These technological innovations have substantially enhanced inter-human knowledge creation processes. The implementation of these technological tools has not only significantly reduced the operational costs of knowledge management processes but has also catalyzed theoretical advancements in knowledge management research [26]. During this phase, while knowledge creation remained fundamentally human-centered, technological tools began serving as auxiliary facilitators, marking machines’ initial entry into the knowledge creation process.

The third stage introduces human-machine collaborative knowledge creation, characterized by the advancement of artificial intelligence technologies. Intelligent assistants exemplify this evolution, demonstrating sophisticated capabilities in context comprehension, human-like response generation, and deep user interaction. Hu et al.’s research demonstrates how intelligent assistants establish comprehensive platforms for knowledge acquisition, sharing, and integration [5]. Feng et al. further elucidate intelligent assistants’ dual role in facilitating knowledge transformation and enabling more effective, personalized learning experiences [7]. This transformation signifies intelligent machines’ emergence as active participants in knowledge creation. However, the underlying mechanisms of this process remain largely unexplored, necessitating comprehensive investigation to enhance and enrich knowledge creation theory.

In conclusion, knowledge co-creation models have evolved to their current third-stage manifestation: the human-machine knowledge co-creation paradigm. In this contemporary context, machines have transcended their auxiliary role to become active participants in knowledge creation. While existing research has examined intelligent assistants’ functional roles, the fundamental mechanisms underlying human-machine knowledge co-creation remain inadequately explored. Our research addresses this gap by analyzing the comprehensive process of human-machine knowledge creation in the digital era, employing Sense-Making Theory as a robust theoretical foundation. This investigation not only addresses a critical research gap in human-machine interaction but also provides valuable theoretical and practical insights for optimizing human-machine collaboration systems and enhancing knowledge co-creation effectiveness.

3. Grounded research design

3.1. Introduction to experimental platform

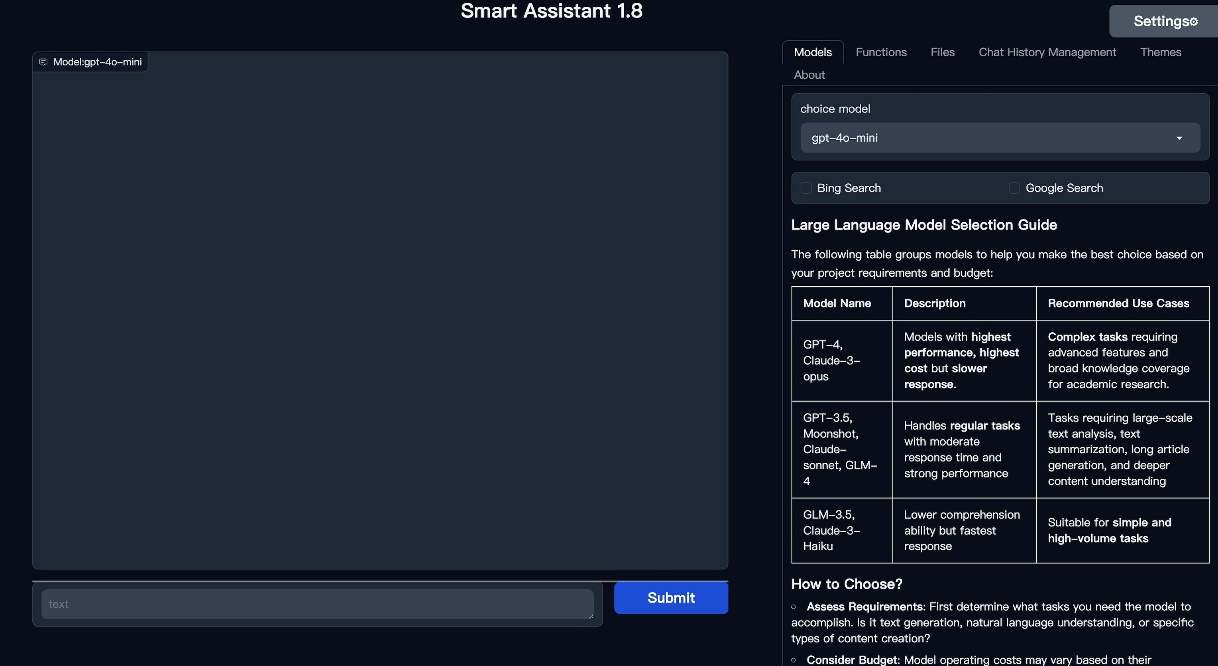

To empirically investigate the underlying mechanisms of human-intelligent assistant knowledge co-creation, we developed a proprietary research platform (Figure 2). The system, hosted on Tencent Cloud infrastructure with a registered domain, was launched on April 11, 2024. Developed independently using the Gradio framework, the platform implements a modular architectural design that ensures both scalability and customizability. The system’s core functionality is built around a multi-model fusion architecture that integrates several state-of-the-art large language models, including Claude and GPT-4. This design enables users to leverage different models for diverse task requirements through a unified interface.

Figure 2. Interface of AI assistant platform

In contrast to conventional web-based large language model implementations, our platform exhibits enhanced capabilities in contextual understanding and task processing adaptability. The system architecture integrates both real-time internet-based information retrieval and local knowledge base comprehension functionalities, facilitating sophisticated domain-specific knowledge extraction. A key architectural component is the advanced dialogue management system, which maintains persistent contextual memory to support cumulative knowledge construction and multiturn reasoning processes. Through iterative user interactions, the platform demonstrates adaptive optimization of both problem comprehension and task decomposition capabilities, progressively developing user-specific assistance paradigms. This sophisticated interaction framework not only facilitates the execution of complex tasks but also establishes an optimal experimental environment for investigating cognitive mechanisms in human-machine collaborative processes.
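The combination of a unified multi-model interface and persistent contextual memory described above can be illustrated with a minimal sketch. This is not the platform's actual code: the class `DialogueManager`, the helper `echo_backend`, and the names `model_a` and `model_b` are hypothetical, and the dummy backends stand in for calls to hosted language models.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# A message is a (role, text) pair; a backend maps the full history to a reply.
# Real backends would call a hosted model; these are hypothetical stand-ins.
Message = Tuple[str, str]
Backend = Callable[[List[Message]], str]


@dataclass
class DialogueManager:
    """Routes each user turn to a named backend and keeps one shared history."""
    backends: Dict[str, Backend]
    history: List[Message] = field(default_factory=list)

    def ask(self, model_name: str, prompt: str) -> str:
        if model_name not in self.backends:
            raise KeyError(f"unknown model: {model_name}")
        self.history.append(("user", prompt))
        # The backend receives every earlier turn, which is what allows
        # multi-turn refinement of the same task.
        reply = self.backends[model_name](self.history)
        self.history.append(("assistant", reply))
        return reply


def echo_backend(tag: str) -> Backend:
    """Build a dummy backend that reports how many user turns it has seen."""
    def respond(history: List[Message]) -> str:
        user_turns = sum(1 for role, _ in history if role == "user")
        return f"[{tag}] reply to turn {user_turns}"
    return respond


manager = DialogueManager(backends={
    "model_a": echo_backend("A"),
    "model_b": echo_backend("B"),
})
first = manager.ask("model_a", "My crawler is slow. How can I improve it?")
second = manager.ask("model_b", "Please add progress printing.")
print(first)
print(second)
```

Because the history is held by the manager rather than by any single backend, switching models mid-dialogue does not discard earlier context. Whether the platform itself works this way is an assumption.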

3.2. Data collection

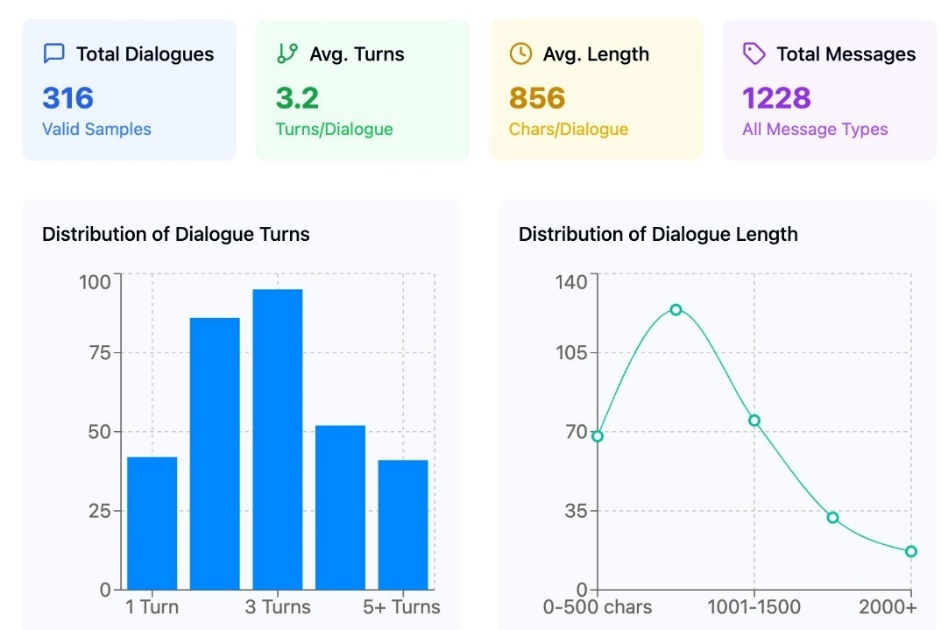

For this empirical investigation, we collected a comprehensive dataset comprising 316 carefully selected user-intelligent assistant interactions from the platform, encompassing user prompts and textual exchanges totaling 810,000 characters. To supplement our primary data, we also gathered secondary materials including user evaluations from external platforms and relevant articles on intelligent assistants. This methodological approach primarily relies on first-hand interaction data to construct our theoretical framework, with supplementary materials serving to enhance the robustness and generalizability of our findings.

The analysis of our dialogue corpus revealed distinct interaction patterns (Figure 3). Within the 316 validated dialogue samples, we documented 1,228 discrete message exchanges. The interactions exhibited an average of 3.2 conversational turns per dialogue, with a mean dialogue length of 856 characters. The turn frequency distribution indicated a predominance of 2-3 turn exchanges, while approximately 40 interactions extended beyond 5 turns, suggesting that users typically engaged in focused, goal-oriented interactions rather than extended conversational exchanges. Examination of dialogue length distribution revealed noteworthy patterns: the modal category occurred in the 501-1000 character range, followed by a consistent diminution in frequency as length increased, with relatively few exchanges exceeding 2000 characters. This distribution pattern suggests that while users predominantly engaged in moderate-length interactions, a subset of exchanges developed into more extensive discussions, potentially reflecting more complex problem-solving scenarios or detailed knowledge-sharing sessions.

Figure 3. Statistical distribution of human-machine dialogue characteristics
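Descriptive statistics of this kind (turns per dialogue, total length, and a binned length distribution) could be computed along the following lines. The toy corpus, the bin edges other than the 501-1000 range named in the text, and the definition of a turn as one question-answer pair are all assumptions made for illustration. They are not the study's data or procedure.

```python
from collections import Counter
from statistics import mean

# Hypothetical toy corpus: each dialogue is a list of (question, answer) turns.
dialogues = [
    [("How can I check memory usage?", "Use free -h or top.")],
    [("My crawler is slow.", "Try batching requests."),
     ("Add progress printing.", "Added a progress bar."),
     ("Add it to each loop.", "Done for all loops.")],
    [("What is AI?", "AI refers to systems that perform tasks."),
     ("Give an example.", "Speech recognition is one example.")],
]

# Assumed character bins; only the 501-1000 range is named in the text.
LENGTH_BINS = [(0, 500), (501, 1000), (1001, 2000), (2001, None)]


def char_length(dialogue):
    """Total characters across all questions and answers in one dialogue."""
    return sum(len(q) + len(a) for q, a in dialogue)


def length_bin(n_chars):
    """Return the label of the bin that contains n_chars."""
    for low, high in LENGTH_BINS:
        if n_chars >= low and (high is None or n_chars <= high):
            return f"{low}+" if high is None else f"{low}-{high}"
    raise ValueError(f"negative length: {n_chars}")


turns = [len(d) for d in dialogues]
mean_turns = mean(turns)
turn_distribution = Counter(turns)
length_distribution = Counter(length_bin(char_length(d)) for d in dialogues)

print("turns per dialogue:", turns)
print("mean turns:", mean_turns)
print("length bins:", dict(length_distribution))
```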

3.3. Grounded theory method

3.3.1. Open coding

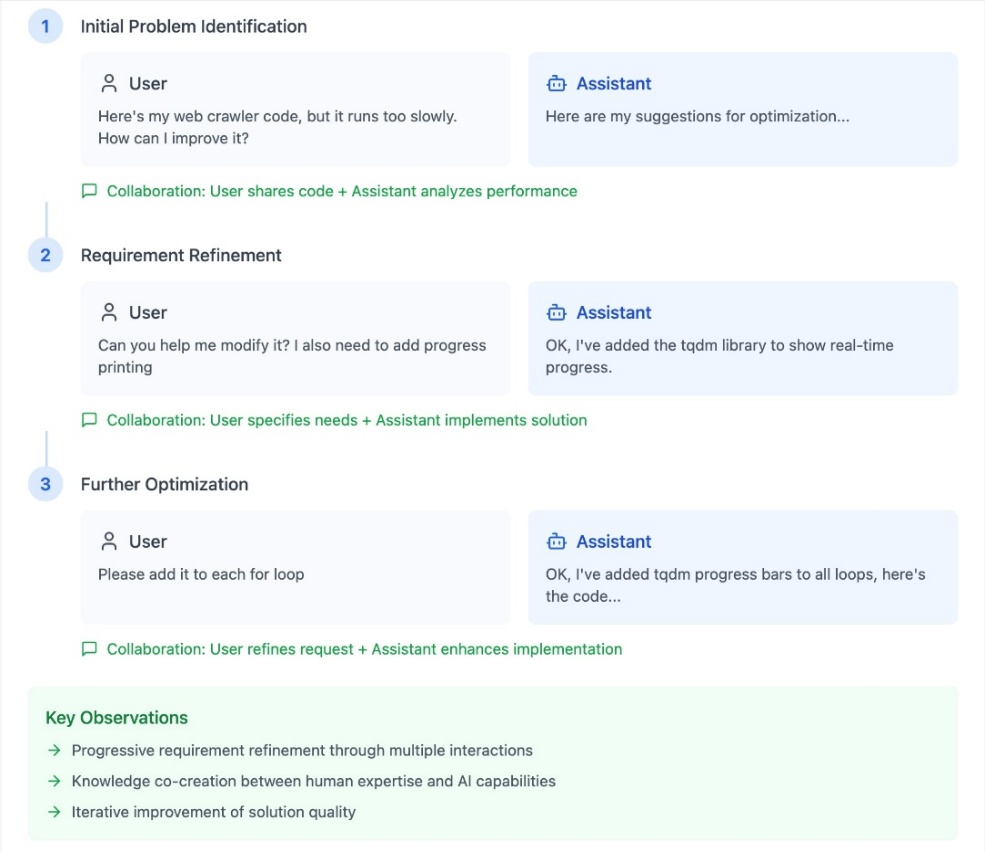

In the open coding phase, we employed a systematic approach to analyze the raw data and identify initial concepts. The coding process was conducted by two independent groups, each comprising three graduate researchers (master’s and doctoral candidates) in business administration. These groups performed parallel open coding on the 316 interaction transcripts. Through the constant comparative method, the coding results from both groups were integrated to generate preliminary conceptual categories. To illustrate the open coding process, Figure 4 presents a representative analysis of a web crawler optimization dialogue, demonstrating our approach to initial concept identification and categorization.

Figure 4. Examples of human-machine interaction process analysis

The open coding process began with thorough examination of our first-hand interaction transcripts, each containing detailed user-intelligent assistant dialogues. Team members conducted line-by-line analysis to identify potential conceptual indicators. For example, in the dialogue presented in Figure 4, we traced the progression of interaction patterns from initial problem framing through iterative requirement refinement to solution optimization. Our open coding procedure followed three systematic steps. First, researchers independently analyzed the raw data to generate initial analytical notes. Second, these preliminary observations were synthesized into conceptual codes through collaborative intra-group analysis. Finally, these conceptual codes were organized into higher-level categories. The relationship between raw data excerpts, conceptual codes, and emergent categories is detailed in Table 1. Following intra-group analysis, we conducted inter-group comparative sessions to integrate and refine the coding schemes. This iterative coding process, involving multiple rounds of independent coding and group discussion, enhanced the reliability and theoretical sensitivity of our findings.

Table 1. Examples of open coding results

| Raw Data | Insights | Initial Concepts | Categories |
|---|---|---|---|
| Q: How can I check my server’s memory usage? A: To check server memory usage, you can use the following commands: | The intelligent assistant possesses server-related knowledge and can correctly answer user questions | Server usage knowledge | A1 Knowledge Base |
| Q: Linux “anzhao minianaconda” A: Installing minianaconda is a good choice...Here are the detailed steps: ... | The intelligent assistant possesses software installation knowledge and can correctly answer related questions | Software installation knowledge | A1 Knowledge Base |
| Q: I’m using Alibaba Cloud, how to ensure access to port 8777? A: On Alibaba Cloud, you need to configure security group rules… | The intelligent assistant possesses website related knowledge and can correctly answer related questions | Website knowledge | A1 Knowledge Base |
| … | … | … | … |
| Q: Linux “anzhao miniana-conda” A: Installing mini-anaconda is a good choice...Here are the detailed steps: ... | In this interaction, the user meant “install” but typed “anzhao miniana-conda”. The intelligent assistant correctly understood the user’s intention and provided the appropriate response | “Install” semantic understanding | A2 Knowledge Application Ability |
| Q: How can I check my server’s memory usage? A: To check server memory usage, you can use the following commands: | For unique user questions, the intelligent assistant needs both relevant knowledge and the ability to apply this knowledge to solve specific user needs | Server knowledge application ability | A2 Knowledge Application Ability |
| Q: Please polish this paragraph in academic English and mark the changes... A: “The questionnaire commenced on May 22, 2023, and… | In this interaction, the intelligent assistant understood Chinese semantics and polished the content in English as requested | English language ability | A2 Knowledge Application Ability |
| … | … | … | … |
| Q1: Here’s my web crawler code, but it runs too slowly. How can I improve it? A1: ...Here are my suggestions: ... Q2: Can you help me modify it? I also need to add progress printing A2: OK, I’ve added the tqdm library to show real-time progress. Q3: Please add it to each for loop A3: OK, I’ve added tqdm progress bars to all loops, here’s the code: ... | Through multiple rounds of interaction, the intelligent assistant gradually understands and meets the user’s specific needs for crawler code modification, providing increasingly appropriate responses | Crawler code demand collaboration | A5 Human-machine Demand Collaboration |
| Q1: (Stata code) A1: Based on your Stata code snippet... Q2: (Data) How to convert from wide format to long format? A2: Based on your data frame... Q3: variable Country num contains all missing values A3: If Country num variable contains all missing values after executing reshape long command. | Through multiple rounds of interaction, the intelligent assistant helps users solve Stata code problems step by step, with knowledge from both parties being integrated and enhanced during the communication process | Stata code knowledge collaboration process | A6 Human-machine Knowledge Collaboration |
| Q1: Linux “anzhao mini anaconda” A1: Installing mini anaconda is a good choice... Q2: I made a mistake and didn’t add it to PATH A2: If you didn’t add Miniconda to PATH during installation, you can do it manually. Here are the detailed steps: ... | Analysis of the interaction reveals that users combined their Miniconda installation knowledge with relevant knowledge provided by the intelligent assistant, and through providing practical information, helped the intelligent assistant better understand the user’s problems, thus providing more in-depth solutions | Software installation knowledge collaboration | A6 Human-machine Knowledge Collaboration |
| … | … | … | … |
| Q: What is artificial intelligence? A: Artificial Intelligence (AI) refers to... | User recognized their knowledge gap in AI definition and acquired external knowledge through interaction with the intelligent assistant | “Artificial Intelligence” definition acquisition | A8 Knowledge Acquisition |
| Q: Do you know about the openparse library? A: Yes, OpenParse is an open-source toolkit for natural language processing... | User recognized their knowledge gap about the OpenParse Python library and acquired external knowledge through interaction with the intelligent assistant | Python library information acquisition | A8 Knowledge Acquisition |
| Q: Please introduce me to “Blossoms Shanghai” A: “Blossoms Shanghai” is a popular TV series that tells stories of Shanghai’s concessions... | User recognized their knowledge gap about this TV series and acquired external knowledge through interaction with the intelligent assistant | TV series information acquisition | A8 Knowledge Acquisition |
| … | … | … | … |

3.3.2. Axial Coding

Following open coding, we proceeded with axial coding to systematically explore relationships among emergent concepts and reassemble the data into a coherent theoretical framework. Through this analytical process, we sought to identify core phenomena and their interrelationships by examining the dimensions and properties of initial categories. The integration of our 12 preliminary categories led to the identification of five core theoretical categories: machine knowledge capability, personal knowledge capability, human-machine collaboration, knowledge co-creation, and prompt literacy, as shown in Table 2.

Table 2. Axial Coding and Explanations

| Main Categories | Sub-categories | Explanations |
|---|---|---|
| Machine Knowledge Capability | A1 Machine Knowledge Base | The extensive and in-depth knowledge system learned by intelligent assistants through vast amounts of data |
| | A2 Machine Knowledge Application | The ability of intelligent assistants to flexibly apply their knowledge base to solve problems and complete tasks in specific contexts |
| Personal Knowledge Capability | A3 Personal Knowledge Breadth | The range of diverse knowledge an individual possesses across multiple domains |
| | A4 Personal Knowledge Depth | The deep understanding and expertise an individual possesses in specific domains |
| Human-machine Collaboration | A5 Human-machine Demand Collaboration | Refers to the process where intelligent assistants accurately understand and meet user needs, while users effectively express their needs and utilize assistant functions. Through continuous interaction, both parties optimize their collaboration mode to achieve more precise need fulfillment. |
| | A6 Human-machine Knowledge Collaboration | Refers to the complementarity and integration between users and intelligent assistants in knowledge exchange and creation processes. Both parties expand their knowledge structures through knowledge sharing and integration. |
| Knowledge Co-creation | A7 Knowledge Acquisition | Users acquire needed knowledge and information from intelligent assistants based on their requirements |
| | A8 Knowledge Integration | After users acquire knowledge, intelligent assistants help them organize and analyze this knowledge. This may include explaining complex concepts, integrating similar information, etc. |
| | A9 Knowledge Creation | Based on integrated knowledge, intelligent assistants and users jointly generate new insights or knowledge. This may include innovative solutions, new theories, or valuable insights. |
| Prompt Literacy | A10 Background Prompting | Users provide context and relevant details of current tasks or problems, helping intelligent assistants provide more targeted support |
| | A11 Knowledge Prompting | Users provide or specify professional information in specific domains, enabling intelligent assistants to more accurately match and answer with relevant knowledge |
| | A12 Behavioral Prompting | Guide users in next steps or provide choices, helping them complete tasks more efficiently |
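The category structure in Table 2 can also be written as a simple lookup table, which makes it easy to check that the 12 sub-categories roll up into five main categories. The sketch below is only an illustrative encoding of the table. The names `AXIAL_CODING` and `main_category_of` are hypothetical and are not part of the study's tooling.

```python
# Main category -> sub-categories, transcribed from Table 2.
AXIAL_CODING = {
    "Machine Knowledge Capability": [
        "A1 Machine Knowledge Base",
        "A2 Machine Knowledge Application",
    ],
    "Personal Knowledge Capability": [
        "A3 Personal Knowledge Breadth",
        "A4 Personal Knowledge Depth",
    ],
    "Human-machine Collaboration": [
        "A5 Human-machine Demand Collaboration",
        "A6 Human-machine Knowledge Collaboration",
    ],
    "Knowledge Co-creation": [
        "A7 Knowledge Acquisition",
        "A8 Knowledge Integration",
        "A9 Knowledge Creation",
    ],
    "Prompt Literacy": [
        "A10 Background Prompting",
        "A11 Knowledge Prompting",
        "A12 Behavioral Prompting",
    ],
}


def main_category_of(sub_code: str) -> str:
    """Look up the main category for a sub-category code such as 'A6'."""
    for main, subs in AXIAL_CODING.items():
        # Compare the whole code token so that 'A1' does not match 'A10'.
        if any(sub.split()[0] == sub_code for sub in subs):
            return main
    raise KeyError(sub_code)


n_main = len(AXIAL_CODING)
n_sub = sum(len(subs) for subs in AXIAL_CODING.values())
print(n_main, "main categories,", n_sub, "sub-categories")
print("A6 belongs to:", main_category_of("A6"))
```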

3.3.3. Grounded theory research results

Through intensive analysis of the interaction data, our grounded theory investigation revealed five main categories with 12 subcategories, yielding four key theoretical mechanisms.

First, our analysis demonstrated that knowledge capabilities of both human users and intelligent assistants function as critical determinants of knowledge co-creation outcomes. Specifically: 1) The knowledge repository and application capabilities of intelligent assistants emerged as fundamental enablers of effective knowledge co-creation. This was particularly evident in technical domains, where the assistant’s domain expertise and adaptive application of knowledge significantly influenced interaction quality, as observed in server management and programming consultations. 2) User knowledge dimensions—both breadth and depth—exhibited substantial impact on co-creation processes. Users possessing diverse knowledge backgrounds and deep domain expertise consistently demonstrated more sophisticated and productive engagement patterns with intelligent assistants.

Second, the analysis revealed human-machine collaboration as the core mediating mechanism in knowledge co-creation, manifesting in two distinct dimensions. The first dimension, demand collaboration, encompasses the progressive refinement of mutual understanding, where intelligent assistants iteratively align with user requirements while users increasingly optimize their utilization of system capabilities. This was exemplified in web crawler optimization scenarios, where multiple interaction cycles led to increasingly precise solution development. The second dimension, knowledge collaboration, represents the synergistic integration of human and machine knowledge bases. This was particularly evident in statistical programming contexts, where sustained dialogue facilitated the fusion of complementary expertise to address complex technical challenges.

Third, our findings identified prompt literacy as a significant moderating factor in human-machine collaboration effectiveness. Users demonstrating advanced prompt engineering skills—particularly in articulating task parameters and contextual information—achieved notably higher levels of collaborative success. This effect was clearly demonstrated in software installation scenarios, where detailed error context provision led to more precise technical solutions.

Finally, the analysis revealed a progressive deepening of knowledge co-creation through three distinct phases: a) Knowledge acquisition, where users obtain novel information from the intelligent assistant, exemplified in conceptual inquiries about artificial intelligence; b) Knowledge integration, where assistants facilitate the incorporation of new knowledge into existing cognitive frameworks, observed in discussions of complex programming paradigms; c) Knowledge creation, characterized by the emergence of novel insights and innovative solutions through sustained collaborative interaction, particularly evident in algorithm optimization scenarios.

4. Research hypotheses


4.1. Knowledge capability and human-machine collaboration

Sense-making theory serves as a crucial theoretical cornerstone for conceptualizing the human-machine knowledge co-creation process. Foundational research indicates that sense-making comprises two fundamental components: internal and external representations [27,28]. Within the context of human-machine interaction, our study, informed by grounded research findings, conceptualizes personal knowledge capability as internal representation and machine knowledge capability as its external counterpart.

By incorporating schema theory [29-31], we frame the human-machine knowledge co-creation process as a dynamic interplay of knowledge accumulation, adjustment, and reorganization. Within this framework, human-machine collaboration emerges as the pivotal mechanism, manifesting through distinct developmental stages as illustrated in Table 3.

Table 3. Process stages of HM collaborative knowledge co-creation

| Stage | Description |
|---|---|
| Accumulation | Driven by needs, individuals begin to interact with intelligent assistants. At this stage, personal knowledge capability (internal representation) starts to assimilate machine knowledge capability (external representation) [32]. For example, users begin to receive and understand information provided by the intelligent assistant. |
| Adjustment | As interaction deepens, human-machine collaboration begins to form. This is reflected in users and intelligent assistants gradually clarifying needs and their knowledge structures beginning to adapt and adjust to each other during the interaction process [31]. |
| Reorganization | Through continuous human-machine collaboration, users’ knowledge structures undergo reorganization, thereby achieving knowledge co-creation. This may manifest as the acquisition of new knowledge, integration of existing knowledge, or generation of entirely new insights. |

Rather than following a linear trajectory, this process exhibits an upward spiral pattern. The depth of human-machine collaboration intensifies with increasing interactions, leading to corresponding enhancements in knowledge co-creation outcomes. Drawing from prior case studies and synthesizing literature on sense-making and schema theories, we posit that enhanced personal and machine knowledge capabilities facilitate more effective knowledge structure supplementation and correction through human-machine collaboration. Pontis and Blandford’s investigation into knowledge capabilities’ effects on sense-making revealed that both domain expertise and general knowledge levels significantly influence knowledge structure adjustment processes [32]. Building upon organizational knowledge base theory [33], we differentiate personal knowledge capability into two dimensions: breadth and depth. Similarly, informed by case study findings, we categorize machine knowledge capability into knowledge base and knowledge application components. Based on our analysis, we propose:

H1: Personal knowledge capability has a positive effect on human-machine collaboration

H1a: Personal knowledge breadth has a positive effect on human-machine collaboration

H1b: Personal knowledge depth has a positive effect on human-machine collaboration

H2: Machine knowledge capability has a positive effect on human-machine collaboration

H2a: Machine knowledge base has a positive effect on human-machine collaboration

H2b: Machine knowledge application has a positive effect on human-machine collaboration

4.2. Human-machine collaboration and knowledge co-creation

Through iterative interactions between personal and machine knowledge capabilities, the alignment and refinement of knowledge structures culminate in a new knowledge framework, marking the completion of the knowledge co-creation process. Our case study-derived theoretical model divides this knowledge co-creation process into three distinct stages: acquisition, integration, and creation.

The initial stage, knowledge acquisition, emerges primarily from structured information seeking behaviors [34]. Our grounded research corroborates this finding, revealing that knowledge expansion through interaction with intelligent assistants constitutes the fundamental and most prevalent activity in human-machine interactions.

The second stage encompasses knowledge integration, wherein individuals engage in cognitive processes of idea clarification, model conceptualization, comparative analysis between existing and new models, and ultimately, model synthesis [10,35]. Our grounded analysis demonstrates that users maintain continuous dialogue with intelligent assistants to refine their understanding, enabling the assistants to systematically organize, synthesize, and propose novel frameworks and action strategies.

In the final stage, as interactions become more sophisticated, intelligent assistants facilitate the continuous refinement and augmentation of the knowledge framework, enabling co-creators to derive meaningful insights. This culminates in various outcomes, including task-specific methodologies, novel knowledge constructs, or tacit knowledge generation, thereby completing the knowledge creation cycle. Based on these observations, we propose:

H3: Human-machine collaboration has a positive effect on knowledge co-creation

H3a: Human-machine collaboration has a positive effect on knowledge acquisition

H3b: Human-machine collaboration has a positive effect on knowledge integration

H3c: Human-machine collaboration has a positive effect on knowledge creation

4.3. The moderating effect of prompt literacy

Research by Zhang, Pontis, and colleagues demonstrates that the provision of contextual background information enhances sense-making effectiveness [9,32]. In human-intelligent assistant interactions, prompt engineering serves as a mechanism for conveying essential contextual information, encompassing the co-creator’s environmental context, the intended role of the intelligent assistant, and the desired response format. This contextual enrichment facilitates the intelligent assistant’s comprehension of co-creator requirements, thereby enhancing human-machine collaborative outcomes. Based on these insights, we propose:

H4: Prompt literacy moderates the positive relationship between machine knowledge capability and human-machine collaboration

H4a: Prompt literacy moderates the positive relationship between machine knowledge base and human-machine collaboration

H4b: Prompt literacy moderates the positive relationship between machine knowledge application and human-machine collaboration

Figure 5 presents our integrated research model, synthesizing findings from our grounded theory investigation and comprehensive literature review.

Figure 5. Model framework diagram

5. Verification of human-machine knowledge co-creation mechanism

5.1. Research procedure and sample

To validate the questionnaire’s reliability and validity and implement necessary refinements, we conducted a pilot study with 318 participants who had prior experience with intelligent assistants. Participants were recruited through the Wenjuanxing platform, with the sample size determined based on the questionnaire’s variable structure. Initial data analysis confirmed the instrument’s reliability, while exploratory factor analysis revealed nine distinct components that aligned precisely with our theoretical constructs, thereby establishing the measurement instrument’s construct validity.

Following the successful pilot phase, we administered the refined questionnaire in the main study. We recruited 672 new participants through the Wenjuanxing platform, all with intelligent assistant experience. After eliminating responses with insufficient completion times and those indicating no prior intelligent assistant usage, our final sample comprised 619 valid questionnaires. The demographic characteristics of the sample are presented below.

Table 4. Descriptive statistics of survey sample

Characteristic | Category | Frequency | Percentage (%) |

Gender | Male | 295 | 47.7 |

 | Female | 324 | 52.3 |

Age | Under 18 | 89 | 14.4 |

 | 19-30 | 239 | 38.6 |

 | 31-45 | 148 | 23.9 |

 | 46-59 | 115 | 18.6 |

 | Over 60 | 28 | 4.5 |

Education | High School or Below | 152 | 24.6 |

 | Associate Degree | 185 | 29.9 |

 | Bachelor’s Degree | 204 | 33.0 |

 | Master’s Degree | 66 | 10.7 |

 | Doctoral Degree | 12 | 1.9 |

Frequency of Using Intelligent Assistants | Multiple Times per Day | 133 | 21.5 |

 | Once per Day | 236 | 38.1 |

 | Several Times per Week | 142 | 22.9 |

 | Several Times per Month | 73 | 11.8 |

 | Rarely | 35 | 5.7 |

 | Never | 0 | 0.0 |
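As a quick consistency check on Table 4, the percentages can be recomputed from the reported frequencies and the final sample size of 619. The sketch below is illustrative only; the dictionary layout and the function name `percentages` are assumptions introduced here, not part of the study’s analysis.

```python
# Frequencies copied from Table 4; N is the final valid sample reported in the text.
N = 619
table4 = {
    "Gender": {"Male": 295, "Female": 324},
    "Age": {"Under 18": 89, "19-30": 239, "31-45": 148, "46-59": 115, "Over 60": 28},
    "Education": {"High School or Below": 152, "Associate": 185, "Bachelor's": 204,
                  "Master's": 66, "Doctoral": 12},
    "Usage frequency": {"Multiple Times per Day": 133, "Once per Day": 236,
                        "Several Times per Week": 142, "Several Times per Month": 73,
                        "Rarely": 35, "Never": 0},
}


def percentages(counts, n):
    """Return each category's share of n as a percentage rounded to one decimal."""
    return {category: round(100 * count / n, 1) for category, count in counts.items()}


for characteristic, counts in table4.items():
    # Each characteristic should account for every valid questionnaire.
    total = sum(counts.values())
    print(characteristic, "total =", total, percentages(counts, N))
```

Each characteristic’s frequencies sum to 619, and the recomputed percentages agree with the table to one decimal place.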

5.2. Questionnaire design and measurement

To establish measurement reliability and validity, we adapted established scales from prior research, employing the back-translation method for non-English instruments and modifying items to align with our research context. All constructs were measured using 7-point Likert scales (1 = “strongly disagree” to 7 = “strongly agree”). The measurement scales were operationalized as follows:

Personal Knowledge Capability: We adapted Lyu et al.’s [37] scales to assess personal knowledge breadth and depth.

Machine Knowledge Capability: Drawing from Yagoda and Gillan’s [38] conceptualization and Chi et al.’s [39] robot performance (RP) measurement, we developed scales for machine knowledge base and machine knowledge application.

Human-Machine Collaboration: Scale items were adapted from Chi et al.’s [39] Service Robot Facilitator (SRF) measurement.

Knowledge Co-creation: This construct was measured using three subscales: knowledge acquisition (adapted from Lyles and Salk [40]), knowledge integration (based on Basaglia et al.’s [41] IT knowledge integration capability scale), and knowledge creation (synthesized from Sarwat and Abbas’s [42] personal knowledge creation ability scale and Hoon Song et al.’s [43] organizational knowledge creation scale).

Prompt Literacy: We developed this scale by adapting Chi et al.’s [39] Robot Use Self-Efficacy (RUSE) measurement, incorporating recent conceptualizations of prompt engineering as an emerging information literacy competency [44–48].

5.3. Mechanism verification

5.3.1. Reliability and validity tests

We assessed the measurement model’s psychometric properties using SPSS for reliability analysis and AMOS for confirmatory factor analysis. Table 5 presents the detailed results. All items demonstrated satisfactory factor loadings (> 0.7), and each construct exhibited strong internal consistency, with Cronbach’s α and composite reliability (CR) values exceeding 0.8. Additionally, the average variance extracted (AVE) values were all above the recommended threshold of 0.5. These results collectively support the reliability and convergent validity of our measurement instrument.

Table 5. Reliability and convergent validity results

Variable | Items | Loading | Cronbach’s α | CR | AVE |

Personal Knowledge Breadth (PKB) | I can understand and discuss topics across different disciplines. | 0.768 | 0.841 | 0.832 | 0.6228 |

 | I often explore knowledge areas not directly related to my specialty. | 0.788 | | | |

 | I can connect knowledge from different fields to solve problems. | 0.811 | | | |

Personal Knowledge Depth (PKD) | I can understand and apply complex concepts in my field. | 0.766 | 0.835 | 0.8266 | 0.6138 |

 | I can critically evaluate new theories or methods in my field. | 0.801 | | | |

 | I frequently study cutting-edge topics in my field. | 0.783 | | | |

Machine Knowledge Base (MKB) | The intelligent assistant can provide basic knowledge across multiple disciplines. | 0.798 | 0.831 | 0.8263 | 0.6132 |

 | The intelligent assistant’s answers usually contain accurate factual information. | 0.782 | | | |

 | The intelligent assistant can explain complex concepts or theories. | 0.769 | | | |

Machine Knowledge Application (MKA) | The intelligent assistant can flexibly apply knowledge to specific problems. | 0.79 | 0.821 | 0.8097 | 0.5866 |

 | The intelligent assistant can integrate knowledge from multiple fields to solve complex problems. | 0.742 | | | |

 | The intelligent assistant can adjust its knowledge application based on specific contexts. | 0.765 | | | |

Human-machine Match (HM) | Information provided by the intelligent assistant is usually highly relevant to my needs. | 0.788 | 0.835 | 0.8257 | 0.6125 |

 | My interaction with the intelligent assistant improves as the dialogue deepens. | 0.804 | | | |

 | The intelligent assistant can understand and adapt to my knowledge level and expression. | 0.755 | | | |

Knowledge Acquisition (KA) | Through interaction with the intelligent assistant, I gained new knowledge or information. | 0.799 | 0.838 | 0.8338 | 0.6259 |

 | The intelligent assistant helped me quickly understand unfamiliar fields. | 0.804 | | | |

 | Interaction with the intelligent assistant sparked my interest in further learning. | 0.77 | | | |

Knowledge Integration (KI) | The intelligent assistant helps me connect new knowledge with existing knowledge. | 0.789 | 0.849 | 0.8458 | 0.6465 |

 | Through discussions with the intelligent assistant, I better understand relationships between complex concepts. | 0.817 | | | |

 | The intelligent assistant helps me build a more systematic knowledge framework. | 0.806 | | | |

Knowledge Creation (KC) | Interaction with the intelligent assistant inspired new ideas. | 0.826 | 0.828 | 0.8244 | 0.6106 |

 | The intelligent assistant helped me discover new problem-solving perspectives. | 0.744 | | | |

 | Through collaboration with the intelligent assistant, I can generate innovative solutions. | 0.772 | | | |

Prompt Literacy (PL) | I clearly express my task objectives and background to the intelligent assistant. | 0.802 | 0.825 | 0.8341 | 0.6263 |

 | I adjust my questioning method based on the quality of the intelligent assistant’s responses. | 0.796 | | | |

 | I provide necessary technical terms or domain-specific information to the intelligent assistant. | 0.776 | | | |
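The CR and AVE columns in Table 5 can be reproduced from the standardized loadings. The sketch below assumes the conventional formulas (AVE as the mean squared loading, CR as the squared sum of loadings divided by that quantity plus the summed error variances 1 − λ²); the text does not state which formulas were used, and the function names are illustrative.

```python
def average_variance_extracted(loadings):
    """AVE: mean of the squared standardized factor loadings."""
    return sum(l ** 2 for l in loadings) / len(loadings)


def composite_reliability(loadings):
    """CR: (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances).

    Assumes standardized loadings, so each item's error variance is 1 - loading^2.
    """
    squared_sum = sum(loadings) ** 2
    error_variance = sum(1 - l ** 2 for l in loadings)
    return squared_sum / (squared_sum + error_variance)


# Loadings for Personal Knowledge Breadth (PKB), copied from Table 5.
pkb_loadings = [0.768, 0.788, 0.811]

print(round(average_variance_extracted(pkb_loadings), 4))  # 0.6228
print(round(composite_reliability(pkb_loadings), 3))       # 0.832
```

Under these assumptions, the computed values match the PKB row of Table 5, and the same two functions can be applied to the other constructs.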

5.3.2. Main effects test

We employed structural equation modeling in AMOS to examine the main effects, with path parameters estimated by maximum likelihood. The model showed acceptable fit: χ²/df = 3.457 (within the commonly accepted range of 2-5); RMSEA = 0.063 (below the conventional 0.08 cutoff); GFI = 0.874 and AGFI = 0.846 (both above the 0.8 threshold); and CFI = 0.908 (above the 0.9 cutoff). Taken together, these indices support the model’s empirical adequacy. Table 6 presents the detailed path analysis results.

Table 6. Path analysis results

Hypothesis | Path | Coefficient | SE | CR | P-value | Result |

H1a | Personal Knowledge Breadth → Human-machine Match | 0.326 | 0.042 | 6.887 | *** | Supported |

H1b | Personal Knowledge Depth → Human-machine Match | 0.331 | 0.043 | 6.928 | *** | Supported |

H2a | Machine Knowledge Base → Human-machine Match | 0.24 | 0.042 | 5.188 | *** | Supported |

H2b | Machine Knowledge Application → Human-machine Match | 0.148 | 0.043 | 3.247 | *** | Supported |

H3a | Human-machine Match → Knowledge Acquisition | 0.499 | 0.057 | 9.512 | *** | Supported |

H3b | Human-machine Match → Knowledge Integration | 0.471 | 0.055 | 9.083 | *** | Supported |

H3c | Human-machine Match → Knowledge Creation | 0.472 | 0.057 | 9.107 | *** | Supported |

Path analysis results reveal significant positive relationships in our hypothesized model. Personal knowledge depth and breadth both demonstrate significant positive effects on human-machine collaboration (p < 0.001), supporting H1a and H1b. Similarly, machine knowledge base and machine knowledge application exhibit significant positive influences on human-machine collaboration (p < 0.001), confirming H2a and H2b. Furthermore, human-machine collaboration shows significant positive effects on all three knowledge co-creation outcomes: acquisition, integration, and creation (p < 0.001), validating H3a, H3b, and H3c. Collectively, all path coefficients in the main effects model demonstrate statistical significance, providing comprehensive support for hypotheses H1, H2, and H3.

5.3.3. Moderation effect test

To examine the moderation effects, we conducted multi-group analyses in AMOS using a median split to categorize prompt literacy into high and low groups. We employed nested model comparisons with three increasingly constrained models:

M0: Unconstrained model (baseline)

M1: Constrained factor loadings

M2: Constrained factor loadings and path coefficients
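The median split used to form the two prompt-literacy groups can be sketched as follows. This is a minimal illustration, not the study’s procedure: the respondent IDs and scores are hypothetical, and assigning scores equal to the median to the low group is an assumption, since the text does not say how ties were handled.

```python
from statistics import median


def median_split(scores):
    """Split respondents into high and low groups around the sample median.

    scores maps a respondent ID to that respondent's mean prompt-literacy score.
    Assumption: scores equal to the median are placed in the low group.
    """
    cutoff = median(scores.values())
    groups = {"high": [], "low": []}
    for respondent, score in scores.items():
        groups["high" if score > cutoff else "low"].append(respondent)
    return cutoff, groups


# Hypothetical mean scores on the three prompt-literacy items (7-point scale).
example_scores = {"r1": 3.0, "r2": 5.67, "r3": 4.33, "r4": 6.0, "r5": 2.67, "r6": 5.0}

cutoff, groups = median_split(example_scores)
print(cutoff, groups)
```

The two resulting groups would then be fitted as separate samples in the multi-group models M0 to M2.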

All three models demonstrated satisfactory fit indices. Sequential model comparisons revealed a nonsignificant chi-square difference between M0 and M1 (Δχ² = 0.299, p > 0.05), supporting measurement invariance. However, the comparison between M1 and M2 yielded a significant chi-square difference (p < 0.001), indicating the presence of moderation effects.

Further examination of specific paths revealed that the critical ratio for the “Machine Knowledge Base → Human-Machine Collaboration” relationship (CR = 1.647, p > 0.05) was non-significant, suggesting that prompt literacy does not moderate this relationship. Conversely, the critical ratio for the “Machine Knowledge Application → Human-Machine Collaboration” path (CR = 1.978, p < 0.05) was significant, indicating that prompt literacy significantly moderates the relationship between machine knowledge application and human-machine collaboration.
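The significance judgments above follow from treating each critical ratio as a standard normal z statistic, so that |CR| > 1.96 corresponds to p < 0.05 in a two-tailed test. The sketch below makes that conversion explicit; the normal approximation and the function name `two_tailed_p` are assumptions for illustration, not a description of AMOS internals.

```python
import math


def two_tailed_p(critical_ratio):
    """Two-tailed p-value for a critical ratio treated as a standard normal z."""
    return math.erfc(abs(critical_ratio) / math.sqrt(2))


# Critical ratios for the between-group path differences reported in the text.
reported = [
    ("Machine Knowledge Base -> Human-Machine Collaboration", 1.647),
    ("Machine Knowledge Application -> Human-Machine Collaboration", 1.978),
]

for path, cr in reported:
    p = two_tailed_p(cr)
    print(f"{path}: CR = {cr}, p = {p:.3f}, significant at 0.05: {p < 0.05}")
```

Under this approximation, the first path falls above the 0.05 level and the second just below it, consistent with the reported conclusions.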

6. Conclusion and discussion

6.1. Research conclusions

This research investigated the knowledge co-creation process at the individual level, employing Sense-Making Theory as a theoretical foundation and utilizing a mixed-method approach combining grounded theory methodology and empirical validation. Through systematic analysis of human-intelligent assistant interactions, we identified several key mechanisms underlying the knowledge co-creation process.

Our primary finding reveals that human-machine collaboration constitutes the fundamental mechanism driving knowledge co-creation. This collaboration manifests through two distinct dimensions: human-machine demand alignment and knowledge synthesis. Throughout iterative interactions, we observed a progressive enhancement in the intelligent assistant’s ability to comprehend user requirements, while users demonstrated increasing capacity to assimilate and integrate the assistant’s knowledge into their existing cognitive frameworks. This bidirectional collaborative process facilitates profound knowledge co-creation, yielding outcomes across three hierarchical levels: knowledge acquisition, knowledge integration, and knowledge creation.

Furthermore, our analysis demonstrates that knowledge capability serves as the cornerstone of effective human-machine collaboration. The empirical results establish that both individual and machine knowledge capabilities exert significant positive influences on collaborative outcomes. Enhanced individual knowledge capability enables more precise and targeted inquiries, while superior machine knowledge capability facilitates more sophisticated and comprehensive knowledge support.

A notable finding concerns the role of prompt literacy in moderating the relationship between machine knowledge application and human-machine collaboration. The data suggest that users with advanced prompt literacy are better able to articulate their requirements and optimize their interactions, thereby leveraging the intelligent assistant’s knowledge capabilities more effectively. By contrast, prompt literacy did not significantly moderate the relationship between machine knowledge base and human-machine collaboration.

This investigation contributes substantial insights to our understanding of human-machine knowledge co-creation mechanisms, particularly emphasizing the critical roles of human-machine collaboration, knowledge capabilities, and prompt literacy. These findings both advance theoretical frameworks for understanding human-intelligent assistant interaction and provide actionable guidelines for enhancing collaborative effectiveness in knowledge-intensive contexts.

6.2. Theoretical contributions

This study makes several significant theoretical contributions. Foremost, it extends the theoretical boundaries of Sense-Making Theory into the domain of human-machine interaction. While traditional Sense-Making Theory has predominantly addressed human-human interactions and basic computational program interactions [9], our research advances its application to encompass sophisticated human-intelligent assistant interactions. By conceptualizing personal knowledge capability and machine knowledge capability as internal and external representations respectively, and integrating Sense-Making Theory with empirically derived human-machine knowledge co-creation processes, we establish a novel theoretical framework for understanding cognitive mechanisms in human-machine interaction.

Additionally, our research substantially advances beyond the preliminary qualitative findings of previous studies on human-machine interaction processes through the lens of Sense-Making Theory [8]. We empirically validate the centrality of human-machine collaboration in knowledge co-creation and develop a comprehensive theoretical model that synthesizes multiple dimensions: personal knowledge capability, machine knowledge capability, human-machine collaboration, prompt literacy, and knowledge co-creation. This integrated model not only delineates the pathways through which knowledge capabilities influence collaborative processes but also explicates the mechanisms by which human-machine collaboration facilitates the progressive evolution of knowledge from initial acquisition to sophisticated creation. The result is a systematic theoretical framework that substantially enhances our understanding of knowledge creation dynamics in human-machine interaction.

Furthermore, this study makes a distinctive contribution by empirically validating prompt literacy as a moderating variable in human-machine knowledge co-creation. While previous research has identified prompt literacy as an emergent component of information literacy, our investigation establishes its role in moderating the relationship between machine knowledge application and human-machine collaboration. This finding bridges the technical domain of prompt engineering with user-centered research paradigms, offering novel insights into the factors that shape successful human-machine interaction outcomes.

6.3. Practical implications

Our comprehensive investigation into human-intelligent assistant interactions and the validated model of knowledge co-creation mechanisms yields significant practical implications, particularly for stakeholders in the content economy. Drawing from our theoretical findings, we present strategic recommendations across two key domains:

For Traditional Content Production Organizations:

Our research indicates a paradigm shift in content generation processes. The emergence of human-machine collaborative knowledge co-creation fundamentally transforms content production from a linear, human-resource-dependent model to an integrated, dynamic ecosystem. We propose the following strategic initiatives:

1. Strategic Process Redesign: Organizations must fundamentally reimagine their content production workflows, implementing sophisticated AI integration strategies. This transformation extends beyond superficial AI adoption, necessitating the development of comprehensive human-machine collaborative frameworks that optimize the synergy between human creativity and AI capabilities.

2. Evolution of Quality Assessment Frameworks: Contemporary content evaluation protocols require substantial revision to accurately capture the value generated through human-machine collaboration. Organizations should implement multidimensional evaluation systems that incorporate metrics for content originality, intellectual depth, operational efficiency, and scalability potential.

3. Systematic Development of Prompt Engineering Competencies: Our findings emphasize the critical role of prompt literacy in enhancing collaborative effectiveness. Organizations should institute structured training programs and knowledge-sharing platforms to cultivate advanced prompt engineering capabilities among their workforce.

For Individual Creators and Small Production Teams:

Our research highlights a pivotal trend: future content creation excellence will be increasingly defined by proficiency in AI collaboration. We recommend the following strategic adaptations:

1. Prompt Literacy Enhancement: Given the demonstrated positive moderating effect of prompt literacy on the relationship between machine knowledge application and human-machine collaboration, mastery of prompt engineering techniques should be prioritized as a fundamental professional competency.

2. Role Evolution: Within the human-machine collaborative paradigm, creators must transition from traditional content production roles to become strategic content architects and creative directors. This evolution demands enhanced capabilities in strategic analysis and cross-disciplinary knowledge synthesis.

3. Continuous Technological Adaptation: The progressive nature of knowledge co-creation, evolving from basic to sophisticated levels, necessitates ongoing professional development. Creators must maintain dynamic knowledge structures aligned with rapidly advancing technological capabilities.

6.4. Limitations and future directions

While this research advances our understanding of human-machine knowledge co-creation mechanisms, several limitations warrant acknowledgment and suggest promising avenues for future investigation. A primary methodological limitation concerns the scope of data collection. The reliance on interaction data from a single intelligent assistant platform potentially introduces platform-specific biases and may constrain the generalizability of our findings. This single-source data collection approach may not adequately capture the diverse characteristics and impacts of various intelligent assistant systems on knowledge co-creation processes. Future research would benefit from adopting a comprehensive multi-platform sampling strategy. Such cross-platform comparative analyses could illuminate universal patterns in human-machine knowledge co-creation while elucidating the role of platform-specific characteristics in shaping co-creation outcomes, ultimately yielding more robust and generalizable conclusions.

A second theoretical limitation lies in the study’s predominantly individual-level focus, which necessarily excludes team and organizational dynamics from consideration. Given that knowledge innovation in professional contexts typically occurs through collective processes, future investigations should expand their analytical scope to encompass team and organizational dimensions. Such research could explore the cascading effects of human-machine collaboration on collective knowledge innovation processes across organizational hierarchies. This multi-level analysis approach would not only enhance the theoretical framework of human-machine knowledge co-creation but also provide actionable insights for organizations seeking to leverage artificial intelligence technologies for collective innovation advancement. Researchers might employ hierarchical modeling techniques to simultaneously examine variables at individual, team, and organizational levels, thereby constructing a more comprehensive theoretical framework.

Finally, while our study establishes prompt literacy as a significant moderating variable, the underlying mechanisms through which it influences human-machine collaboration remain incompletely understood. This presents a crucial opportunity for future research to conduct more granular analyses of prompt literacy’s operational dynamics. Subsequent investigations could employ mixed-method research designs to systematically examine how various dimensions of prompt literacy impact the quality and efficiency of human-machine interactions. Specifically, controlled experimental studies could be designed to manipulate different prompt engineering strategies and assess their differential effects on knowledge co-creation processes and outcomes. Such research would contribute to both theoretical understanding and practical applications in optimizing human-machine collaboration.

References

[1]. Jarrahi, M. H., Askay, D., Eshraghi, A., & Smith, P. (2023). Artificial intelligence and knowledge management: A partnership between human and AI. Business Horizons, 66(1), 87–99. https://doi.org/10.1016/j.bushor.2022.03.002

[2]. Sharma, A. (2023). Artificial intelligence for sense making in survival supply chains. International Journal of Production Research, 0(0), 1–24. https://doi.org/10.1080/00207543.2023.2221743

[3]. Feuerriegel, S., Hartmann, J., Janiesch, C., & Zschech, P. (2024). Generative AI. Business & Information Systems Engineering, 66(1), 111–126. https://doi.org/10.1007/s12599-023-00834-7

[4]. Stokel-Walker, C., & Van Noorden, R. (2023). What ChatGPT and generative AI mean for science. Nature, 614(7947), 214–216. https://doi.org/10.1038/d41586-023-00340-6

[5]. Hu, X., Tian, Y., Nagato, K., Nakao, M., & Liu, A. (2023). Opportunities and challenges of ChatGPT for design knowledge management. Procedia CIRP, 119, 21–28. https://doi.org/10.1016/j.procir.2023.05.001

[6]. Chan, C. K. Y., & Lee, K. K. W. (2023). The AI generation gap: Are Gen Z students more interested in adopting generative AI such as ChatGPT in teaching and learning than their Gen X and millennial generation teachers? Smart Learning Environments, 10(1), 60. https://doi.org/10.1186/s40561-023-00269-3

[7]. Feng, C. (Mitsu), Botha, E., & Pitt, L. (2024). From HAL to GenAI: Optimize chatbot impacts with CARE. Business Horizons. Advance online publication. https://doi.org/10.1016/j.bushor.2024.04.012

[8]. Li, L. (2023). Everyday AI sensemaking of freelance knowledge workers. In Companion Publication of the 2023 Conference on Computer Supported Cooperative Work and Social Computing (pp. 452–454). Association for Computing Machinery. https://doi.org/10.1145/3584931.3608924

[9]. Zhang, P., & Soergel, D. (2014). Towards a comprehensive model of the cognitive process and mechanisms of individual sensemaking. Journal of the Association for Information Science and Technology, 65(9), 1733–1756. https://doi.org/10.1002/asi.23125

[10]. Odden, T. O. B., & Russ, R. S. (2019). Defining sensemaking: Bringing clarity to a fragmented theoretical construct. Science Education, 103(1), 187–205. https://doi.org/10.1002/sce.21452

[11]. Norman, D. A., & Bobrow, D. G. (1975). On the role of active memory processes in perception and cognition (Technical Report No. 76-02). Center for Human Information Processing, University of California, San Diego.

[12]. Russell, D. M., Stefik, M. J., Pirolli, P., & Card, S. K. (1993). The cost structure of sensemaking. In Proceedings of the INTERACT ’93 and CHI ’93 Conference on Human Factors in Computing Systems (pp. 269–276). Association for Computing Machinery. https://doi.org/10.1145/169059.169209

[13]. Qian, X., & Fang, Y. (2024). Mitigating algorithm aversion through sensemaking? A revisit to the explanation-seeking process. PACIS 2024 Proceedings.

[14]. Gero, K. I., Swoopes, C., Gu, Z., Kummerfeld, J. K., & Glassman, E. L. (2024). Supporting sensemaking of large language model outputs at scale. Proceedings of the CHI Conference on Human Factors in Computing Systems (pp. 1–21). Association for Computing Machinery. https://doi.org/10.1145/3613904.3642139

[15]. Kanarik, K. J., Osowiecki, W. T., Lu, Y. (Joe), Talukder, D., Roschewsky, N., Park, S. N., Kamon, M., Fried, D. M., & Gottscho, R. A. (2023). Human–machine collaboration for improving semiconductor process development. Nature, 616(7958), 707–711. https://doi.org/10.1038/s41586-023-05773-7

[16]. Pizoń, J., & Gola, A. (2023). Human–machine relationship—Perspective and future roadmap for Industry 5.0 solutions. Machines, 11(2), 203. https://doi.org/10.3390/machines11020203

[17]. Ren, M., Chen, N., & Qiu, H. (2023). Human-machine collaborative decision-making: An evolutionary roadmap based on cognitive intelligence. International Journal of Social Robotics, 15(7), 1101–1114. https://doi.org/10.1007/s12369-023-01020-1

[18]. Sowa, K., Przegalinska, A., & Ciechanowski, L. (2021). Cobots in knowledge work: Human–AI collaboration in managerial professions. Journal of Business Research, 125, 135–142. https://doi.org/10.1016/j.jbusres.2020.11.038

[19]. Sowa, K., & Przegalinska, A. (2020). Digital coworker: Human-AI collaboration in work environment, on the example of virtual assistants for management professions. In A. Przegalinska, F. Grippa, & P. A. Gloor (Eds.), Digital transformation of collaboration (pp. 179–201). Springer. https://doi.org/10.1007/978-3-030-48993-9_13

[20]. Cao, S., Jiang, W., Wang, J., & Yang, B. (2024). From man vs. machine to man + machine: The art and AI of stock analyses. Journal of Financial Economics, 160, 103910. https://doi.org/10.1016/j.jfineco.2024.103910

[21]. Hoc, J.-M. (2000). From human–machine interaction to human–machine cooperation. Ergonomics, 43(7), 833–843. https://doi.org/10.1080/001401300409044

[22]. Lin, H., Han, J., Wu, P., Wang, J., Tu, J., Tang, H., & Zhu, L. (2023). Machine learning and human-machine trust in healthcare: A systematic survey. CAAI Transactions on Intelligence Technology. Advance online publication. https://doi.org/10.1049/cit2.12268

[23]. Xue, C., Zhang, H., & Cao, H. (2024). Multi-agent modelling and analysis of the knowledge learning of a human-machine hybrid intelligent organization with human-machine trust. Systems Science & Control Engineering, 12(1), 2343301. https://doi.org/10.1080/21642583.2024.2343301

[24]. Reich, T., Kaju, A., & Maglio, S. J. (n.d.). How to overcome algorithm aversion: Learning from mistakes. Journal of Consumer Psychology. Advance online publication. https://doi.org/10.1002/jcpy.1313

[25]. Nonaka, I., Takeuchi, H., & Umemoto, K. (1996). A theory of organizational knowledge creation. International Journal of Technology Management, 11(7–8), 833–845.

[26]. Al-Emran, M., Mezhuyev, V., Kamaludin, A., & Shaalan, K. (2018). The impact of knowledge management processes on information systems: A systematic review. International Journal of Information Management, 43, 173–187. https://doi.org/10.1016/j.ijinfomgt.2018.08.001

[27]. Zhang, J. (1997). The nature of external representations in problem solving. Cognitive Science, 21(2), 179–217.

[28]. Richardson, M., & Ball, L. J. (2009). Internal representations, external representations and ergonomics: Towards a theoretical integration. Theoretical Issues in Ergonomics Science, 10(4), 335–376. https://doi.org/10.1080/14639220802368872

[29]. Piaget, J. (1976). Piaget’s theory. In B. Inhelder, H. H. Chipman, & C. Zwingmann (Eds.), Piaget and his school (pp. 11–23). Springer. https://doi.org/10.1007/978-3-642-46323-5_2

[30]. Rumelhart, D. E., & Norman, D. A. (1976). Accretion, tuning and restructuring: Three modes of learning (Report No. 7602). Center for Human Information Processing, University of California, San Diego.

[31]. Vosniadou, S., & Brewer, W. F. (1987). Theories of knowledge restructuring in development. Review of Educational Research, 57(1), 51–67. https://doi.org/10.3102/00346543057001051

[32]. Chi, M. T. (2009). Three types of conceptual change: Belief revision, mental model transformation, and categorical shift. In S. Vosniadou (Ed.), International handbook of research on conceptual change (pp. 89–110). Routledge.

[33]. Pontis, S., & Blandford, A. (2016). Understanding “influence”: An empirical test of the Data-Frame Theory of Sensemaking. Journal of the Association for Information Science and Technology, 67(4), 841–858. https://doi.org/10.1002/asi.23427

[34]. Katila, R., & Ahuja, G. (2002). Something old, something new: A longitudinal study of search behavior and new product introduction. Academy of Management Journal, 45(6), 1183–1194. https://doi.org/10.5465/3069433

[35]. Qu, Y., & Furnas, G. W. (2008). Model-driven formative evaluation of exploratory search: A study under a sensemaking framework. Information Processing & Management, 44(2), 534–555.

[36]. Shen, J., & Linn, M. C. (2011). A technology-enhanced unit of modeling static electricity: Integrating scientific explanations and everyday observations. International Journal of Science Education, 33(12), 1597–1623. https://doi.org/10.1080/09500693.2010.514012

[37]. Lyu, C., Yang, J., Zhang, F., Teo, T. S. H., & Mu, T. (2020). How do knowledge characteristics affect firm’s knowledge sharing intention in interfirm cooperation? An empirical study. Journal of Business Research, 115, 48–60. https://doi.org/10.1016/j.jbusres.2020.04.045

[38]. Yagoda, R. E., & Gillan, D. J. (2012). You want me to trust a ROBOT? The development of a human–robot interaction trust scale. International Journal of Social Robotics, 4(3), 235–248. https://doi.org/10.1007/s12369-012-0144-0

[39]. Chi, O. H., Jia, S., Li, Y., & Gursoy, D. (2021). Developing a formative scale to measure consumers’ trust toward interaction with artificially intelligent (AI) social robots in service delivery. Computers in Human Behavior, 118, 106700. https://doi.org/10.1016/j.chb.2021.106700

[40]. Lyles, M. A., & Salk, J. E. (1996). Knowledge acquisition from foreign parents in international joint ventures: An empirical examination in the Hungarian context. Journal of International Business Studies, 27(5), 877–903. https://doi.org/10.1057/palgrave.jibs.8490155

[41]. Basaglia, S., Caporarello, L., Magni, M., & Pennarola, F. (2010). IT knowledge integration capability and team performance: The role of team climate. International Journal of Information Management, 30(6), 542–551. https://doi.org/10.1016/j.ijinfomgt.2010.04.003

[42]. Sarwat, N., & Abbas, M. (2020). Individual knowledge creation ability: Dispositional antecedents and relationship to innovative performance. European Journal of Innovation Management, 24(5), 1763–1781. https://doi.org/10.1108/EJIM-05-2020-0198

[43]. Song, J. H., Uhm, D., & Yoon, S. W. (2011). Organizational knowledge creation practice: Comprehensive and systematic processes for scale development. Leadership & Organization Development Journal, 32(3), 243–259. https://doi.org/10.1108/01437731111123906

[44]. Han, Z., & Battaglia, F. (2024). Transforming challenges into opportunities: Leveraging ChatGPT’s limitations for active learning and prompt engineering skill. The Innovation.

[45]. Walter, Y. (2024). Embracing the future of artificial intelligence in the classroom: The relevance of AI literacy, prompt engineering, and critical thinking in modern education. International Journal of Educational Technology in Higher Education, 21(1), 15. https://doi.org/10.1186/s41239-024-00448-3

[46]. Lo, L. S. (2023). The art and science of prompt engineering: A new literacy in the information age. Internet Reference Services Quarterly, 27(4), 203–210. https://doi.org/10.1080/10875301.2023.2227621

[47]. Ortolan, P. (2023). Optimizing prompt engineering for improved generative AI content.

[48]. Johnson, M., Albizri, A., Harfouche, A., & Fosso-Wamba, S. (2022). Integrating human knowledge into artificial intelligence for complex and ill-structured problems: Informed artificial intelligence. International Journal of Information Management, 64, 102479. https://doi.org/10.1016/j.ijinfomgt.2022.102479

Cite this article

Yang, X., Hao, Y., & Guo, J. (2025). Mind meets machine: How do humans and AI assistants co-create knowledge? Journal of Applied Economics and Policy Studies, 18(3), 19–35.

Data availability

The datasets used and/or analyzed during the current study will be available from the authors upon reasonable request.

Disclaimer/Publisher's Note

The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of EWA Publishing and/or the editor(s). EWA Publishing and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

About volume

Journal: Journal of Applied Economics and Policy Studies

© 2024 by the author(s). Licensee EWA Publishing, Oxford, UK. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license. Authors who publish this series agree to the following terms:

1. Authors retain copyright and grant the series right of first publication with the work simultaneously licensed under a Creative Commons Attribution License that allows others to share the work with an acknowledgment of the work's authorship and initial publication in this series.

2. Authors are able to enter into separate, additional contractual arrangements for the non-exclusive distribution of the series's published version of the work (e.g., post it to an institutional repository or publish it in a book), with an acknowledgment of its initial publication in this series.

3. Authors are permitted and encouraged to post their work online (e.g., in institutional repositories or on their website) prior to and during the submission process, as it can lead to productive exchanges, as well as earlier and greater citation of published work (See Open access policy for details).
