1. Introduction

In today's low-altitude security landscape, effective drone detection technology is crucial. Illegal drone intrusions have recently drawn significant media attention. For example, The New York Times reported a drone incident at the site of a presidential speech, which raised serious concerns among security agencies [1]. The event exposed vulnerabilities in public safety and underscored the urgent need for advanced drone detection technologies to prevent similar occurrences. Similarly, The Guardian reported a drone that disrupted a football match, forcing the game to halt and endangering spectators and players. Such incidents emphasize the importance of drone detection for ensuring public safety and countering potential threats [2].

Current drone detection technologies rely predominantly on radar, acoustic, and Doppler-based signal processing. These methods capture a drone's flight trajectory, speed, and emitted signals for identification and tracking [3]. However, ongoing advances in drone technology, combined with increasingly complex flight environments and interference, pose significant challenges to existing detection methods [4]. For instance, smaller and faster drones are harder to detect with radar, while electromagnetic interference and building obstructions in urban settings further complicate detection [5]. As unmanned aerial vehicles (UAVs) are increasingly recognized as threats to both civilian and military targets, more robust UAV detection systems are needed, and such systems are still under development. Among the proposed methods, passive radio frequency sensing has shown considerable promise.

In this study, we use an open radio frequency (RF) dataset to investigate UAV detection with Convolutional Neural Networks (CNNs). We compare CNNs with a deep neural network (DNN) and with traditional machine learning models, namely random forest, AdaBoost, and XGBoost. The CNN achieves accuracies of 99.93% for detecting UAVs, 93.30% for classifying UAV types, and 76.43% for categorizing UAV modes. It outperforms all other models on the two classification tasks, whereas on binary detection every model exceeds 99.9% accuracy and the differences are negligible.

This paper applies CNNs, which are best known for image recognition, to RF data for detecting UAVs. It compares CNNs with a DNN and with traditional machine learning models such as random forest, AdaBoost, and XGBoost, providing a side-by-side analysis of different detection methods. The experiments use an open RF dataset containing signals from several drone models, a valuable resource for training and testing UAV detection models. The results indicate the potential of deep learning for RF-based UAV detection, particularly for the harder tasks of UAV type and mode classification.

2. Related Work

2.1. Radio Frequency Sensing

A deep learning-based method for monitoring the radio spectrum in UAV communication is detailed in [6]. The authors developed a spectrum dataset to simulate UAV communication environments and introduced a novel labeling method. They utilized detection networks to extract information on signal presence and location within the spectrum and employed decision-level fusion to integrate results from multiple nodes. The system's performance was assessed using accuracy, recall, and F1 score metrics across different signal-to-noise ratios and modulation types. The method proved highly effective for wireless radio spectrum monitoring in complex electromagnetic environments, demonstrating its adaptability to scenarios involving multiple receivers across extensive areas. This approach provides a valuable deep learning solution for radio monitoring in UAV communication.
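The fusion rule of [6] is not specified here. As a minimal sketch of decision-level fusion, assuming each receiving node reports a binary occupied/free decision per spectrum channel, a k-out-of-n vote declares a channel occupied when at least k nodes agree. The helper name `fuse_k_out_of_n` and the channel layout are hypothetical.

```python
import numpy as np

def fuse_k_out_of_n(node_decisions, k):
    """Decision-level fusion by k-out-of-n voting (hypothetical helper).

    node_decisions: array-like of shape (n_nodes, n_channels) with 0/1
    per-node presence decisions. Returns a boolean array of shape
    (n_channels,) that is True where at least k nodes reported a signal.
    """
    decisions = np.asarray(node_decisions, dtype=bool)
    if not 1 <= k <= decisions.shape[0]:
        raise ValueError("k must be between 1 and the number of nodes")
    votes = decisions.sum(axis=0)  # number of nodes reporting each channel
    return votes >= k

# Three receivers observing four channels (toy data)
reports = [[1, 0, 1, 0],
           [1, 1, 0, 0],
           [1, 0, 1, 1]]
fused = fuse_k_out_of_n(reports, k=2)  # majority vote: channels 0 and 2 occupied
```

Setting k = 1 gives an OR rule (sensitive, more false alarms) and k = n an AND rule (conservative); a majority vote sits between the two.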

In [7], a learning-based framework for low-cost UAV detection in China is presented. The authors detect UAVs and associated devices within WiFi range by exploiting the attributes of their video-streaming traffic. The framework operates in three stages: WiFi data sniffing and preprocessing, UAV classification, and UAV database maintenance. Classifying traffic traces by source MAC address reduces feature complexity and computational demands. The accompanying dataset covers both video-streaming and non-video-streaming UAV modes, which enables the detection of both standard and stealthy UAVs. The framework runs on a compact, low-cost portable device with a built-in CPU and WiFi chipset, allowing invading UAVs to be detected at an early stage. Evaluated on real-world datasets, it proved effective and adaptable across various scenarios, complementing the spectrum monitoring method of [6].
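Grouping sniffed frames by source MAC address turns a mixed capture into one trace per device, so each trace can be classified independently. The sketch below illustrates only that grouping step; the input tuple format and the three summary features (frame count, mean frame size, frame rate) are hypothetical and are not the feature set used in [7].

```python
from collections import defaultdict

def per_mac_features(packets):
    """Group sniffed WiFi frames by source MAC and summarize each trace.

    packets: iterable of (timestamp_s, src_mac, size_bytes) tuples.
    Returns {mac: {"packets": int, "mean_size": float, "rate_pps": float}}.
    The features are illustrative only.
    """
    traces = defaultdict(list)
    for ts, mac, size in packets:
        traces[mac].append((ts, size))

    features = {}
    for mac, records in traces.items():
        records.sort()  # order each trace by timestamp
        times = [ts for ts, _ in records]
        sizes = [size for _, size in records]
        duration = times[-1] - times[0]
        features[mac] = {
            "packets": len(records),
            "mean_size": sum(sizes) / len(sizes),
            # frames per second over the trace; 0 when there is a single frame
            "rate_pps": (len(records) - 1) / duration if duration > 0 else 0.0,
        }
    return features

frames = [
    (0.0, "aa:aa:aa:aa:aa:01", 1000),  # hypothetical video-streaming device
    (0.5, "aa:aa:aa:aa:aa:01", 1400),
    (1.0, "aa:aa:aa:aa:aa:01", 1200),
    (0.2, "aa:aa:aa:aa:aa:02", 60),    # hypothetical low-traffic device
]
summary = per_mac_features(frames)
```

A per-device summary like this keeps the classifier input small, which is the reduction in feature complexity the paragraph above refers to.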

2.2. Audio Detection

In [8], the authors introduce a deep learning-based drone detection method that combines the Wavelet Scattering Transform with One-Dimensional Convolutional Neural Networks (1D-CNNs): the scattering transform extracts features from the drone's acoustic signal, and a 1D-CNN classifies them. A notable contribution of this research is its focus on audio detection as a complement to traditional radio frequency-based approaches. The authors highlight the potential of audio signals as an effective detection mechanism, particularly in complex electromagnetic environments, where audio can add an extra layer of surveillance and security.

In [9], a novel audio detection technique for unmanned aerial vehicles (UAVs) is presented, employing transformer-based methods. The researchers developed an acoustic anomaly detection system, similar to a stethoscope for drones, which listens for irregular sounds associated with UAV operations. They compiled an extensive dataset of UAV sounds that reflects real-world environments and used a sophisticated labeling technique to identify anomalies. By leveraging transformer networks, the system effectively analyzes audio signals to detect and locate anomalies with high precision. Performance was evaluated using a range of metrics, including accuracy, recall, and F1 score, across various operational conditions. This study highlights the significant potential of audio detection for improving UAV safety and reliability, particularly in complex acoustic environments.

2.3. Vision Detection

The rapid advancement of drone technology has brought significant convenience but has also introduced new security risks. Traditional video surveillance often fails to detect small or distant drones, highlighting the need for more effective solutions. Researchers are therefore exploring new detection technologies, among which electro-optical detection shows considerable promise.

Research presented in [10] focuses on electro-optical detection, using computer vision and deep learning to identify small UAVs. The study evaluates several versions of the YOLO (You Only Look Once) object detection model on UAV images. A notable contribution is its analysis of how well these models support safe and autonomous UAV operations, in terms of detection accuracy, speed, and reliability. Like other electro-optical methods, the approach detects UAVs from the light captured by a camera rather than from the drone's emissions.
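The post-processing used in [10] is not described here. YOLO-family detectors commonly finish with greedy non-maximum suppression (NMS), which keeps the highest-scoring box and discards overlapping boxes whose intersection over union (IoU) exceeds a threshold. A minimal sketch, assuming boxes are given as (x1, y1, x2, y2) corners and an illustrative threshold of 0.5:

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def non_max_suppression(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: return indices of kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    while order:
        best = order.pop(0)
        keep.append(best)
        # drop remaining boxes that overlap the kept box too much
        order = [i for i in order if iou(boxes[best], boxes[i]) < iou_threshold]
    return keep

# Two overlapping candidates for one drone and one separate candidate
candidates = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
confidences = [0.9, 0.8, 0.7]
kept = non_max_suppression(candidates, confidences)  # keeps boxes 0 and 2
```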

In [11], electro-optical detection technology is divided into passive and active types. Passive electro-optical detection senses infrared radiation emitted by the drone (for example, heat from its motors) or visible light reflected from it. Because it emits nothing, it is hard to detect, but it is vulnerable to weather and environmental conditions. Active electro-optical detection illuminates the drone with laser or infrared light and analyzes the reflected signals. This enables long-range, high-precision detection but requires emitting equipment, which may reveal the operator's position.

2.4. Radar Detection

Radar is a key technology for drone detection and navigation and offers distinct advantages over other sensors such as cameras and lidars, notably robustness to weather conditions. This subsection reviews two studies on radar in drone systems: frequency-modulated continuous-wave (FMCW) radar for altitude measurement and navigation, and frequency-agile radar for target detection and radar cross-section (RCS) analysis.

[12] presents a drone system equipped with a 77-GHz FMCW radar for altitude measurement and ground reflection analysis. The radar generates range profiles, and a post-processing algorithm estimates the altitude above ground level by locating the strongest ground reflection. To counter free-space path loss, which attenuates distant reflections, the study proposes a range compensation method that improves measurement accuracy and helps distinguish ground reflections from other objects. The system shows promise for navigation and landing assistance, providing reliable altitude information across various environmental conditions.
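The altitude-estimation idea can be illustrated with a short sketch. It assumes a sawtooth chirp, for which a beat frequency f_b maps to range R = c f_b T_c / (2B) (chirp duration T_c, bandwidth B), and a simple range compensation that adds 10 n log10(R) dB to the profile before picking the peak. The exponent n = 2, the minimum range, and the helper names are our assumptions, not the compensation method of [12].

```python
import numpy as np

C = 3e8  # speed of light (m/s)

def beat_to_range(f_beat, bandwidth, chirp_time):
    """Standard FMCW relation for a sawtooth chirp: R = c * f_b * T_c / (2 * B)."""
    return C * f_beat * chirp_time / (2.0 * bandwidth)

def estimate_altitude(profile_db, ranges, exponent=2.0, min_range=0.5):
    """Pick the strongest reflection after range compensation (assumed R**exponent loss)."""
    valid = ranges >= min_range  # skip near-zero ranges where log10 blows up
    compensated = profile_db[valid] + 10.0 * exponent * np.log10(ranges[valid])
    return ranges[valid][np.argmax(compensated)]

# Synthetic range profile: ground at 20 m, a weaker nearby object at 2 m
ranges = np.linspace(0.1, 50.0, 500)                  # 0.1 m bins
power = np.full_like(ranges, 1e-6)                    # noise floor
power[199] = 1.0 / ranges[199] ** 2                   # ground (reflectivity 1)
power[19] = 0.05 / ranges[19] ** 2                    # small object (reflectivity 0.05)
profile_db = 10.0 * np.log10(power)

raw_peak = ranges[np.argmax(profile_db)]              # uncompensated: picks the 2 m object
altitude = estimate_altitude(profile_db, ranges)      # compensated: picks the ground
```

Without compensation, the nearby object wins purely because it is closer; scaling by range restores the ground return as the strongest peak, which is the motivation for range compensation in the paragraph above.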

The effects of frequency agility on radar detection performance using RCS data from different drones are investigated in [13]. The statistical analysis of integrated RCS data indicates that the Gamma distribution effectively models the variations caused by frequency agility. The study also evaluates the performance of an incoherent square-law detector with both measured and simulated data, demonstrating good correlation between the two. The findings suggest that frequency agility reduces RCS fluctuations, thereby improving detection performance at high signal-to-noise ratio (SNR) levels.
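The effect can be reproduced qualitatively with a Monte Carlo sketch of an incoherent square-law detector. Instead of the Gamma model fitted to measured RCS data in [13], it substitutes the textbook exponential RCS models: a single RCS draw per dwell (Swerling I-like, fixed frequency) versus an independent draw per pulse (Swerling II-like), standing in for the decorrelation that frequency agility produces. Unit noise power and all parameter values are assumptions.

```python
import math
import numpy as np

def square_law_threshold(n_pulses, pfa):
    """Threshold for a sum of n noise-only square-law samples (unit noise power).

    Each |noise|^2 is Exp(1), so the sum is Gamma(n, 1) with survival function
    exp(-x) * sum_{k=0}^{n-1} x^k / k!. Solve survival(x) = pfa by bisection.
    """
    def survival(x):
        term, total = 1.0, 1.0
        for k in range(1, n_pulses):
            term *= x / k
            total += term
        return math.exp(-x) * total

    lo, hi = 0.0, 10.0 * n_pulses + 100.0
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if survival(mid) > pfa:
            lo = mid
        else:
            hi = mid
    return hi

def detection_probability(snr_db, n_pulses, pfa, agile, trials=20000, seed=0):
    """Monte Carlo detection probability of an incoherent square-law detector."""
    rng = np.random.default_rng(seed)
    snr = 10.0 ** (snr_db / 10.0)
    if agile:  # RCS decorrelated pulse to pulse
        rcs = rng.exponential(1.0, size=(trials, n_pulses))
    else:      # RCS constant over the dwell
        rcs = np.repeat(rng.exponential(1.0, size=(trials, 1)), n_pulses, axis=1)
    noise = (rng.standard_normal((trials, n_pulses))
             + 1j * rng.standard_normal((trials, n_pulses))) / np.sqrt(2.0)
    echoes = np.sqrt(snr * rcs) + noise
    statistic = np.sum(np.abs(echoes) ** 2, axis=1)  # incoherent integration
    return float(np.mean(statistic > square_law_threshold(n_pulses, pfa)))

pd_fixed = detection_probability(15.0, n_pulses=10, pfa=1e-4, agile=False)
pd_agile = detection_probability(15.0, n_pulses=10, pfa=1e-4, agile=True)
```

At this high per-pulse SNR the agile case detects more reliably because independent RCS draws rarely fade together, which agrees with the high-SNR finding of [13]; the gap is expected to shrink at low SNR.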

Table 1 presents a summary of advantages and disadvantages of UAV detection technologies.

Table 1: Summary of advantages and disadvantages of UAV detection technologies.

| Detection Technology | Advantages | Disadvantages |
|---|---|---|
| Radio Frequency Sensing | High recognition accuracy; low cost; low computational complexity; easy deployment. | Requires training data; drone models and manufacturers are difficult to identify. |
| Audio Detection | Robust to noise and signal deformation; suitable for real-time applications on resource-constrained devices. | Requires training data; low accuracy in noisy environments. |
| Vision Detection | Good performance against clear backgrounds; high accuracy with a good balance between accuracy and speed. | Poor performance at long range and against harsh backgrounds; high computational cost. |
| Radar Detection | Weather resistant; enhanced detectability of drones. | Requires precise frequency-hopping control; susceptible to interference; complex system design. |

3. Dataset Description

This paper uses a dataset of drone WiFi signals containing 227 segments [14] to explore the feasibility of using radio frequency signals for drone detection and identification. The dataset covers three drone models (Bebop, AR Drone, and Phantom) and distinguishes between control modes: off, on and connected, hovering, flying, and flying with video recording. The data were collected with an NI USRP-2943R radio frequency receiver and LabVIEW software. Each recording lasts either 5.25 s or 10.25 s, depending on whether a drone is present, and the raw data across the 227 segments total 40 GB.
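The three tasks form a label hierarchy: 2 detection classes, 4 type classes (no drone plus three drone models), and 10 mode classes. The sketch below derives all three label sets from a single code per recording. It assumes the five-digit binary unique identifier (BUI) layout we believe the dataset of [14] uses (one presence digit, two type digits, two mode digits) and the code list shown; both are assumptions to verify against the dataset documentation.

```python
# Hypothetical BUI codes, assumed layout:
#   digit 0     -> drone present (1) or background (0)
#   digits 1-2  -> drone type (00 Bebop, 01 AR Drone, 10 Phantom)
#   digits 3-4  -> flight mode
BUI_CODES = [
    "00000",                             # background, no drone
    "10000", "10001", "10010", "10011",  # Bebop, modes 1-4
    "10100", "10101", "10110", "10111",  # AR Drone, modes 1-4
    "11000",                             # Phantom, mode 1
]

def task_labels(bui):
    """Map one BUI code to its (detection, type, mode) label strings."""
    return bui[0], bui[:3], bui

def label_index(codes, level):
    """Assign contiguous integer class ids for one task (0=detection, 1=type, 2=mode)."""
    keys = sorted({task_labels(code)[level] for code in codes})
    return {key: i for i, key in enumerate(keys)}

detection_ids = label_index(BUI_CODES, 0)
type_ids = label_index(BUI_CODES, 1)
mode_ids = label_index(BUI_CODES, 2)
```

Deriving all labels from one code keeps the three tasks consistent: a recording labeled with a given mode automatically carries the matching type and detection labels.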

4. Methodology Description

This paper uses the CNN, a deep learning model well suited to grid-structured data. CNNs are particularly noted for their strong performance in image and temporal signal processing [16]. Below we outline the main components of the CNN model and their role in RF-based UAV detection and classification.

Firstly, the convolutional layer, the core of the CNN, extracts local features by applying convolutional kernels to the input data. These features are progressively combined in the deeper layers of the network to form more complex and abstract representations. Secondly, the pooling layer reduces the dimensionality of the features output by the convolutional layer, which decreases computational load while retaining essential information [17]. This reduction is crucial for improving the model's efficiency and robustness.
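The convolution and pooling operations described above can be illustrated on a single-channel 1-D signal. This NumPy sketch is a simplified illustration with a hand-picked kernel: real layers learn many kernels and operate on multi-channel inputs. It shows max pooling as one common pooling choice, plus global average pooling, the variant the CNN in Section 5 uses.

```python
import numpy as np

def conv1d_valid(signal, kernel, bias=0.0):
    """1-D 'valid' convolution as used in CNNs (cross-correlation, kernel not flipped)."""
    x = np.asarray(signal, dtype=float)
    k = np.asarray(kernel, dtype=float)
    out_len = len(x) - len(k) + 1
    return np.array([np.dot(x[i:i + len(k)], k) + bias for i in range(out_len)])

def relu(z):
    return np.maximum(z, 0.0)

def max_pool1d(x, size=2):
    """Non-overlapping max pooling; trailing samples that do not fill a window are dropped."""
    n = len(x) // size
    return np.asarray(x[: n * size]).reshape(n, size).max(axis=1)

def global_average_pool(x):
    """Collapse a feature map to its mean, as global average pooling does per channel."""
    return float(np.mean(x))

signal = [0, 1, 2, 3, 2, 1, 0]   # toy input
edge_kernel = [1, 0, -1]         # responds to falling slopes
feature_map = relu(conv1d_valid(signal, edge_kernel))
pooled = max_pool1d(feature_map)
summary = global_average_pool(feature_map)
```

The kernel fires only on the falling half of the signal, and pooling shrinks the five-sample feature map to two values (max pooling) or one (global average pooling), which is the dimensionality reduction described above.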

Subsequently, the fully connected layer integrates the features extracted by the convolutional and pooling layers into high-dimensional feature vectors used for classification or regression. Activation functions introduce non-linearity, which greatly increases the network's expressive power. Finally, for multi-class classification, the softmax layer converts the output-layer scores into a probability distribution over the classes, and the class with the highest probability is taken as the prediction.
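A minimal NumPy sketch of these final stages, with toy weights chosen only for illustration: a ReLU activation, a fully connected layer y = Wx + b, and a numerically stable softmax whose largest probability gives the predicted class.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

def dense(x, weights, bias):
    """Fully connected layer: y = W x + b."""
    return weights @ x + bias

def softmax(logits):
    """Numerically stable softmax: subtracting the max leaves the result unchanged."""
    z = np.asarray(logits, dtype=float)
    exp = np.exp(z - z.max())
    return exp / exp.sum()

features = np.array([0.5, -1.0, 2.0])  # e.g. pooled CNN features (toy values)
W = np.array([[1.0, 0.0, 0.5],          # 3 features -> 2 classes (toy weights)
              [0.0, 1.0, -0.5]])
b = np.zeros(2)

probs = softmax(dense(relu(features), W, b))
predicted_class = int(np.argmax(probs))
```

Subtracting the maximum logit avoids overflow for large scores (for example, logits of 1000) without changing the resulting probabilities.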

5. Experiments

The experiments were conducted in Python with the TensorFlow framework. The deep neural network (DNN) model includes two dense layers, each with 100 neurons and ReLU activations, followed by two dropout layers with a dropout rate of 0.2, and an output layer. The CNN model consists of two convolutional layers with a kernel size of 3, each with 100 filters and ReLU activations. It also includes two batch normalization layers, two dropout layers with a dropout rate of 0.2, a global average pooling layer, and an output layer.

For the UAV detection task, the models output two classes; for UAV type classification, four classes; and for UAV mode classification, ten classes. Training uses the Adam optimizer with categorical cross-entropy loss. Early stopping monitors the validation loss and halts training if it does not improve for 20 consecutive epochs. The models are evaluated and compared using two metrics: accuracy and the F1 score.
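The configuration above can be sketched in Keras as follows. This is a hedged reconstruction, not the authors' code: the input shapes, the placement of each dropout layer directly after its dense or convolutional block, "valid" padding, the softmax output activation, and `restore_best_weights=True` are our assumptions.

```python
import numpy as np
import tensorflow as tf

def build_dnn(input_dim, num_classes):
    """DNN baseline: two 100-unit ReLU dense layers with 0.2 dropout (order assumed)."""
    return tf.keras.Sequential([
        tf.keras.layers.Input(shape=(input_dim,)),
        tf.keras.layers.Dense(100, activation="relu"),
        tf.keras.layers.Dropout(0.2),
        tf.keras.layers.Dense(100, activation="relu"),
        tf.keras.layers.Dropout(0.2),
        tf.keras.layers.Dense(num_classes, activation="softmax"),
    ])

def build_cnn(input_len, num_classes):
    """CNN: two Conv1D(100, 3) blocks with batch norm and dropout, then global average pooling."""
    return tf.keras.Sequential([
        tf.keras.layers.Input(shape=(input_len, 1)),  # one channel per sample (assumed)
        tf.keras.layers.Conv1D(100, kernel_size=3, activation="relu"),
        tf.keras.layers.BatchNormalization(),
        tf.keras.layers.Dropout(0.2),
        tf.keras.layers.Conv1D(100, kernel_size=3, activation="relu"),
        tf.keras.layers.BatchNormalization(),
        tf.keras.layers.Dropout(0.2),
        tf.keras.layers.GlobalAveragePooling1D(),
        tf.keras.layers.Dense(num_classes, activation="softmax"),
    ])

def compile_for_training(model):
    """Adam optimizer with categorical cross-entropy, as stated in the text."""
    model.compile(optimizer=tf.keras.optimizers.Adam(),
                  loss="categorical_crossentropy",
                  metrics=["accuracy"])
    return model

# Stop when validation loss has not improved for 20 consecutive epochs
early_stop = tf.keras.callbacks.EarlyStopping(
    monitor="val_loss", patience=20, restore_best_weights=True)

cnn = compile_for_training(build_cnn(input_len=64, num_classes=10))  # mode task
```

Training would then call `cnn.fit(x_train, y_train_onehot, validation_data=(x_val, y_val_onehot), epochs=..., callbacks=[early_stop])`, where the data arrays and epoch budget are placeholders. Categorical cross-entropy expects one-hot targets; integer labels would instead pair with `sparse_categorical_crossentropy`.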

Accuracy is the most straightforward metric for classification problems. It measures the proportion of correctly predicted instances (both true positives and true negatives) among all instances. In the context of UAV detection, high accuracy indicates that the model correctly identifies the presence of UAVs, classifies UAV types, and categorizes UAV modes. However, accuracy does not reveal the type of errors made (e.g., false positives vs. false negatives) and can be misleading when the class distribution is imbalanced.

The F1 score is the harmonic mean of precision and recall, F1 = 2PR / (P + R), and therefore balances the two. It is particularly useful when the class distribution is imbalanced. A high F1 score indicates that the model distinguishes well between different UAV types and modes, which is important for targeted security measures.
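Both metrics can be computed in a few lines. The paper does not state how per-class F1 scores are averaged in the multi-class tasks; this sketch assumes an unweighted (macro) average, and the helper names are ours.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def per_class_f1(y_true, y_pred):
    """F1 per class, using F1 = 2PR/(P+R) = 2TP / (2TP + FP + FN)."""
    labels = sorted(set(y_true) | set(y_pred))
    scores = {}
    for c in labels:
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        scores[c] = 2 * tp / denom if denom else 0.0  # guard against an empty class
    return scores

def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 scores (macro averaging, assumed)."""
    scores = per_class_f1(y_true, y_pred)
    return sum(scores.values()) / len(scores)

y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 1, 1, 1, 2, 0]
acc = accuracy(y_true, y_pred)   # 4 of 6 correct
f1 = macro_f1(y_true, y_pred)    # mean of 0.5, 0.8 and 2/3
```

Macro averaging weights every class equally, so a poorly recognized rare class (for example, one flight mode) pulls the score down even when overall accuracy stays high.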

6. Discussion

Table 2 summarizes the evaluation results of the machine learning and deep learning models on three tasks: UAV detection, UAV type classification, and UAV mode classification. Detecting the presence of a UAV is notably easier than classifying its type or mode: every model exceeds 99.9% accuracy on detection, which is sufficient for many applications and highlights the effectiveness of radio frequency data for UAV detection. Type and mode classification, by contrast, leave considerable room for improvement; the best mode classification accuracy is only 76.43%.

Table 2: Evaluation results.

| Model | Detection Accuracy | Detection F1 Score | Type Accuracy | Type F1 Score | Mode Accuracy | Mode F1 Score |
|---|---|---|---|---|---|---|
| Random Forest | 0.9991 | 0.9985 | 0.8952 | 0.9260 | 0.6888 | 0.6528 |
| AdaBoost | 1.0000 | 1.0000 | 0.4357 | 0.3568 | 0.2786 | 0.1280 |
| XGBoost | 0.9998 | 0.9996 | 0.9278 | 0.9500 | 0.7328 | 0.7050 |
| DNN | 0.9991 | 0.9985 | 0.9020 | 0.9300 | 0.7480 | 0.6996 |
| CNN | 0.9993 | 0.9989 | 0.9330 | 0.9528 | 0.7643 | 0.7146 |
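Because several models score within a fraction of a percentage point of each other, the ranking in Table 2 is easy to misread. The short script below, a hypothetical helper that only re-reads the table values, finds the best model in each column and the margin between two models.

```python
COLUMNS = ("detection_acc", "detection_f1", "type_acc", "type_f1", "mode_acc", "mode_f1")

# Values copied from Table 2
RESULTS = {
    "Random Forest": (0.9991, 0.9985, 0.8952, 0.9260, 0.6888, 0.6528),
    "AdaBoost":      (1.0000, 1.0000, 0.4357, 0.3568, 0.2786, 0.1280),
    "XGBoost":       (0.9998, 0.9996, 0.9278, 0.9500, 0.7328, 0.7050),
    "DNN":           (0.9991, 0.9985, 0.9020, 0.9300, 0.7480, 0.6996),
    "CNN":           (0.9993, 0.9989, 0.9330, 0.9528, 0.7643, 0.7146),
}

def best_model_per_column(results):
    """Name of the highest-scoring model for each metric column."""
    best = {}
    for i, column in enumerate(COLUMNS):
        best[column] = max(results, key=lambda model: results[model][i])
    return best

def margin(results, model_a, model_b, column):
    """Score of model_a minus score of model_b in one column."""
    i = COLUMNS.index(column)
    return results[model_a][i] - results[model_b][i]

best = best_model_per_column(RESULTS)
cnn_vs_xgb_mode = margin(RESULTS, "CNN", "XGBoost", "mode_acc")  # about +3.15 points
```

The CNN leads every classification column, while AdaBoost tops both detection columns by a margin of less than 0.1 percentage points.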

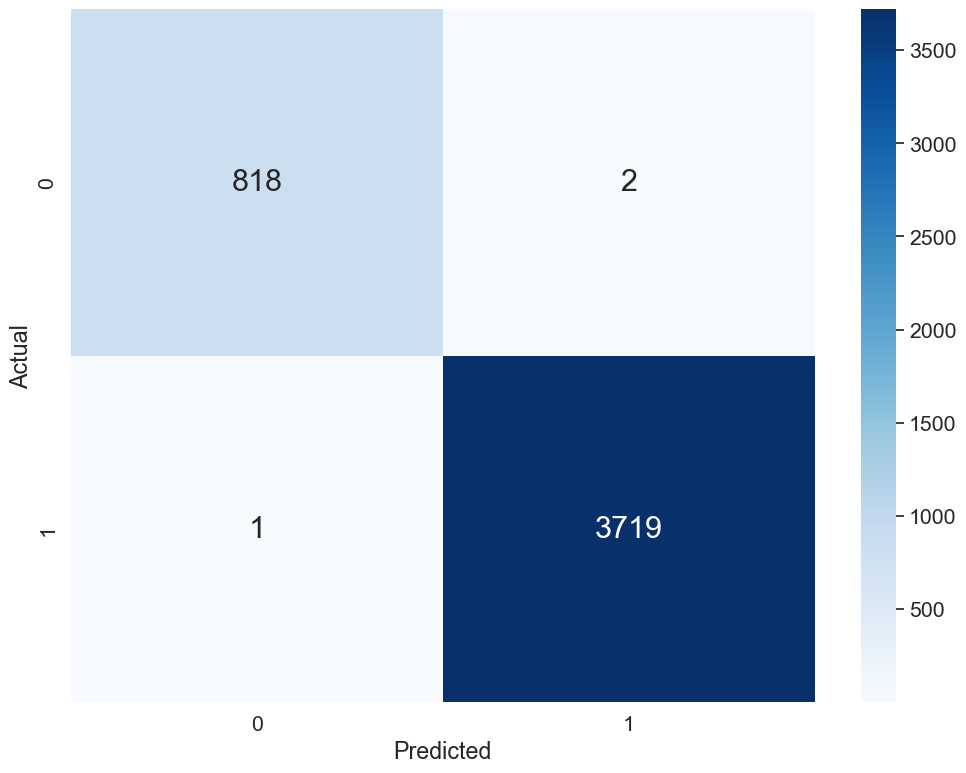

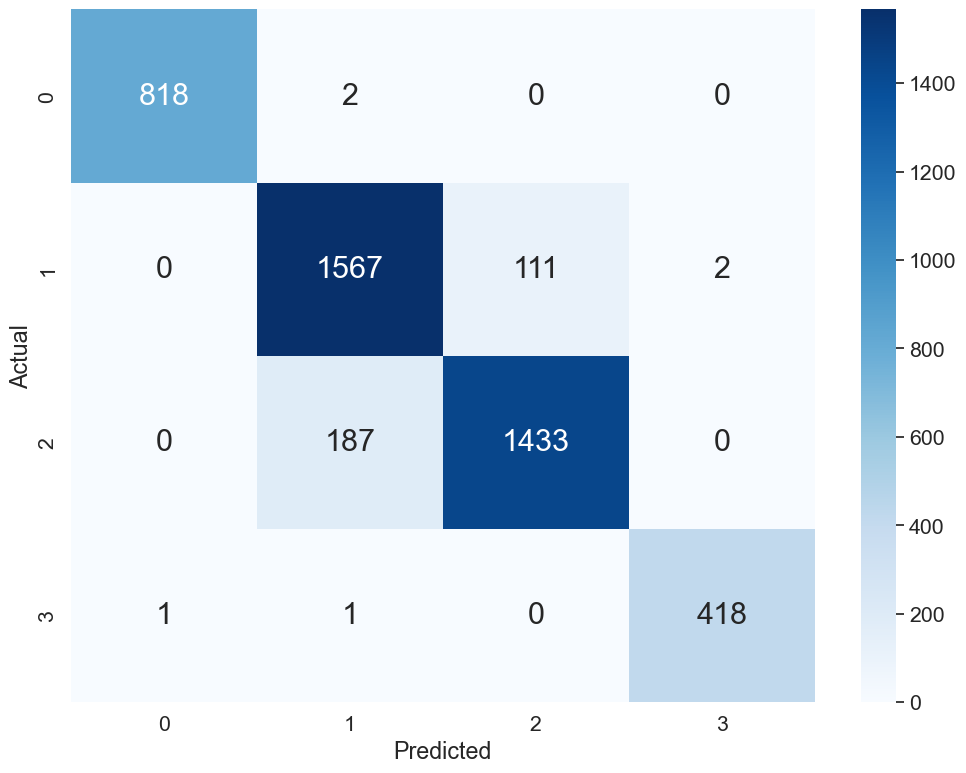

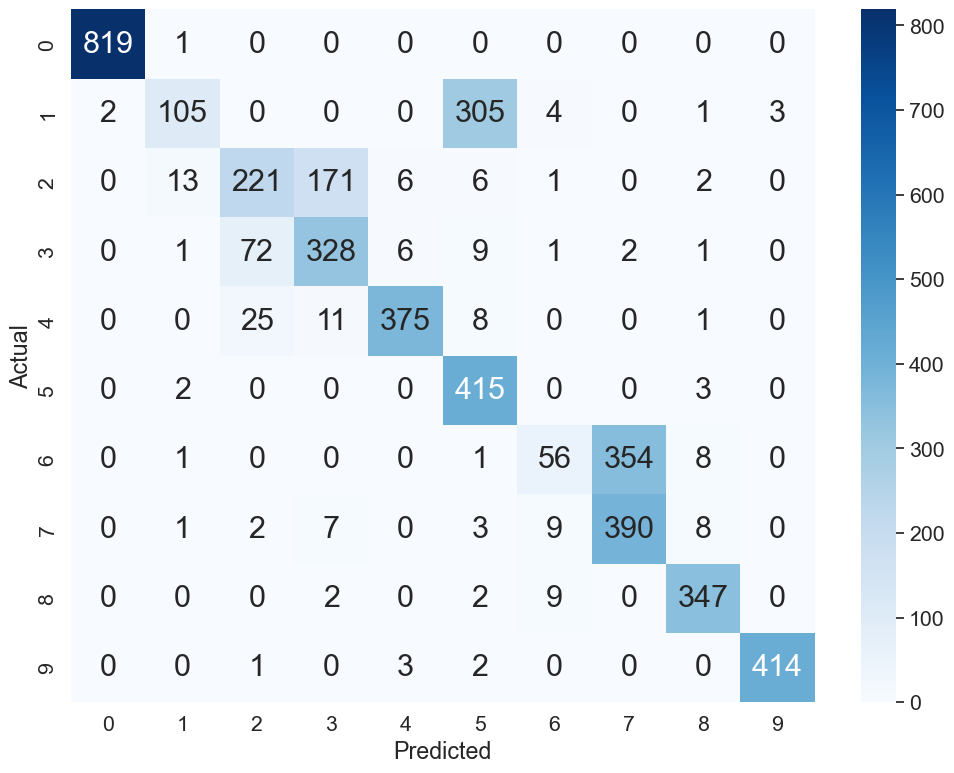

The data in Table 2 clearly indicate that CNNs outperform DNNs and other machine learning models, achieving the highest accuracy rates: 99.93% for UAV detection, 93.30% for UAV type classification, and 76.43% for UAV mode classification. The superior performance of the CNN is further illustrated in Figure 1, which presents confusion matrices showing the CNN’s effectiveness across various detection and classification tasks.

(a) UAV detection.

(b) UAV type classification.

(c) UAV mode classification.

Figure 1: Confusion matrices for CNN.

7. Conclusion

In this research, we used an open radio frequency dataset to investigate UAV detection using CNNs. The CNN achieved accuracies of 99.93% for UAV detection, 93.30% for classifying UAV types, and 76.43% for categorizing UAV modes. It outperformed random forest, AdaBoost, XGBoost, and a DNN on both classification tasks, while on binary detection all models exceeded 99.9% accuracy, so the differences there are negligible.

Several future research directions are proposed to advance UAV detection technology. The first direction involves multi-modal fusion to enhance detection accuracy. This approach aims to develop advanced algorithms that integrate multiple data sources—such as RF signals, visual data, and acoustic signals—to improve overall UAV detection performance [18, 19, 20]. The second direction focuses on real-time UAV detection and classification using edge computing. This research aims to design and implement systems that leverage edge computing to minimize latency and reduce computational load, enabling more efficient real-time detection [21, 22]. The third direction is adaptive learning and generalization in UAV detection. This area seeks to develop deep learning models that can adapt to new UAV types and behaviors without requiring extensive retraining, thus improving flexibility and scalability [23].

References

[1]. Jiang W, Han H, He M, et al. Network simulation tools for unmanned aerial vehicle communications: A survey[J]. International Journal of Communication Systems, e5878.

[2]. Jianping W, Guangqiu Q, Chunming W, et al. Federated learning for network attack detection using attention-based graph neural networks[J]. Scientific Reports, 2024, 14(1): 19088.

[3]. Jiang W, Han H, Zhang Y, et al. Federated split learning for sequential data in satellite–terrestrial integrated networks[J]. Information Fusion, 2024, 103: 102141.

[4]. Zhang Y, Xu S, Zhang L, et al. Short-term multi-step-ahead sector-based traffic flow prediction based on the attention-enhanced graph convolutional LSTM network (AGC-LSTM)[J]. Neural Computing and Applications, 2024: 1-20.

[5]. Jiang W, Han H, He M, et al. ML-based pre-deployment SDN performance prediction with neural network boosting regression[J]. Expert Systems with Applications, 2024, 241: 122774.

[6]. Hou C, Fu D, Zhou Z, et al. A Deep Learning-Based Multi-Signal Radio Spectrum Monitoring Method for UAV Communication[J]. Drones, 2023, 7(8): 511.

[7]. Bi L, Xu Z X, Yang L. Low-cost UAV detection via WiFi traffic analysis and machine learning[J]. Scientific Reports, 2023, 13(1): 20892.

[8]. Ali M, Nathwani K. Exploiting Wavelet Scattering Transform & 1D-CNN for Unmanned Aerial Vehicle Detection[J]. IEEE Signal Processing Letters, 2024.

[9]. Anidjar O H, Barak A, Ben-Moshe B, et al. A stethoscope for drones: Transformers-based methods for UAVs acoustic anomaly detection[J]. IEEE Access, 2023, 11: 33336-33353.

[10]. Dewangan V, Saxena A, Thakur R, et al. Application of image processing techniques for UAV detection using deep learning and distance-wise analysis[J]. Drones, 2023, 7(3): 174.

[11]. Wang H, Wang X, Zhou C, et al. Low in resolution, high in precision: UAV detection with super-resolution and motion information extraction[C]//ICASSP 2023-2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). IEEE, 2023: 1-5.

[12]. Başpınar Ö O, Omuz B, Öncü A. Detection of the Altitude and On-the-Ground Objects Using 77-GHz FMCW Radar Onboard Small Drones[J]. Drones, 2023, 7(2): 86.

[13]. Rosamilia M, Aubry A, Balleri A, et al. Radar Detection Performance Via Frequency Agility Using Measured UAVs RCS Data[J]. IEEE Sensors Journal, 2023.

[14]. Allahham M H D S, Al-Sa'd M F, Al-Ali A, et al. DroneRF dataset: A dataset of drones for RF-based detection, classification and identification[J]. Data in Brief, 2019, 26: 104313.

[15]. Al-Sa'd M F, Al-Ali A, Mohamed A, et al. RF-based drone detection and identification using deep learning approaches: An initiative towards a large open source drone database[J]. Future Generation Computer Systems, 2019, 100: 86-97.

[16]. Krizhevsky A, Sutskever I, Hinton G E. ImageNet classification with deep convolutional neural networks[C]//Advances in Neural Information Processing Systems. 2012: 1097-1105.

[17]. Simard P Y, Steinkraus D, Platt J C. Best practices for convolutional neural networks applied to visual document analysis[C]//ICDAR. IEEE, 2003, 3: 958-962.

[18]. He M, Jiang W, Gu W. TriChronoNet: Advancing electricity price prediction with Multi-module fusion[J]. Applied Energy, 2024, 371: 123626.

[19]. Xia Z, Liu Y, Wang X, et al. Infrared and Visible Image Fusion via Hybrid Variational Model[J]. IEICE Transactions on Information and Systems, 2024, 107(4): 569-573.

[20]. Yang B, Wang X, Xing Y, et al. Modality Fusion Vision Transformer for Hyperspectral and LiDAR Data Collaborative Classification[J]. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 2024.

[21]. Zhou Z, Bao Z, Jiang W, et al. Latent vector optimization-based generative image steganography for consumer electronic applications[J]. IEEE Transactions on Consumer Electronics, 2024.

[22]. Jiang W, Zhang Y, Han H, et al. Mobile traffic prediction in consumer applications: a multimodal deep learning approach[J]. IEEE Transactions on Consumer Electronics, 2024.

[23]. Lu Y, Wang W, Bai R, et al. Hyper-relational interaction modeling in multi-modal trajectory prediction for intelligent connected vehicles in smart cities[J]. Information Fusion, 2024: 102682.

Cite this article

Chen, J. (2024). UAV Detection using Convolutional Neural Networks and Radio Frequency Data. Applied and Computational Engineering, 100, 129-136.

Data availability

The datasets used and/or analyzed during the current study will be available from the authors upon reasonable request.

Disclaimer/Publisher's Note

The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of EWA Publishing and/or the editor(s). EWA Publishing and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

About volume

Volume title: Proceedings of the 5th International Conference on Signal Processing and Machine Learning

© 2024 by the author(s). Licensee EWA Publishing, Oxford, UK. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license. Authors who publish this series agree to the following terms:

1. Authors retain copyright and grant the series right of first publication with the work simultaneously licensed under a Creative Commons Attribution License that allows others to share the work with an acknowledgment of the work's authorship and initial publication in this series.

2. Authors are able to enter into separate, additional contractual arrangements for the non-exclusive distribution of the series's published version of the work (e.g., post it to an institutional repository or publish it in a book), with an acknowledgment of its initial publication in this series.

3. Authors are permitted and encouraged to post their work online (e.g., in institutional repositories or on their website) prior to and during the submission process, as it can lead to productive exchanges, as well as earlier and greater citation of published work (See Open access policy for details).
