1. Introduction

As global climate change intensifies, the importance of accurate weather forecasting has escalated, impacting agriculture, energy management, environmental protection, and daily life. The precision of weather predictions is crucial for socio-economic development and the overall quality of life. Traditional forecasting methods, relying on physical models and statistical approaches, often struggle to handle the complexities of nonlinear and time-varying features, particularly under the uncertainties posed by climate change.

Deep learning has recently emerged as a promising way to improve weather prediction accuracy. Convolutional Neural Networks (CNNs) capture spatial patterns, while Recurrent Neural Networks (RNNs) and Long Short-Term Memory networks (LSTMs) are designed for time series data and model the temporal dependencies in meteorological variables. Despite notable progress in predicting rainfall and temperature trends, single-model approaches often falter when faced with high-dimensional, multi-scale weather data. This has led researchers to explore hybrid models, particularly the CNN-LSTM combination, to enhance prediction performance.

This study specifically focuses on analyzing and predicting historical temperature data, utilizing a CNN-LSTM hybrid model. The research involves a comprehensive workflow that includes data preprocessing, exploratory analysis, and model training, demonstrating the hybrid model's strong performance in prediction accuracy and stability. By effectively addressing challenges such as missing data and the complexities of high-dimensional meteorological information, this research showcases the potential of deep learning technologies in weather forecasting.

2. Related Work

Time series forecasting has traditionally relied on models like Autoregressive Moving Average (ARMA) and Autoregressive Integrated Moving Average (ARIMA), known for their effectiveness with linear and stationary data in fields such as economic forecasting and energy consumption. However, these methods are limited by their linear assumptions, struggling with nonlinear and time-varying datasets [1]. In response, Recurrent Neural Networks (RNNs) and Long Short-Term Memory (LSTM) networks emerged, capturing long-term dependencies in sequential data, and proving useful in areas like speech recognition. Yet, LSTMs can encounter challenges with intricate local patterns and overfitting, especially in noisy environments [2].

To enhance performance, hybrid models combining Convolutional Neural Networks (CNNs) and LSTMs have been developed, capitalizing on CNNs' ability to extract local features while utilizing LSTMs for temporal modeling. This CNN-LSTM architecture has shown promise in various applications, including precipitation forecasting, though it introduces computational complexity and overfitting risks, particularly with high-dimensional data [3].

Deep learning techniques have increasingly been incorporated into weather forecasting, especially in regions with complex climates, and have been reported to improve the accuracy of temperature and weather predictions. Hybrid architectures have also been applied outside meteorology: Ranjan et al. introduced a hybrid neural network for predicting traffic congestion [4]. Zhang et al. employed a CNN-LSTM model for long-term global temperature forecasting and reported enhanced prediction accuracy [5]. Huang and Kuo applied CNN-LSTM to PM2.5 concentration forecasting, achieving superior results compared to other machine learning methods [6]. Wang et al. explored CNN-LSTM for short-term storm surge prediction, finding that the hybrid model outperformed traditional approaches such as Support Vector Regression [7].

Overall, the integration of CNNs and LSTMs has marked a significant advancement in time series forecasting, particularly in meteorological applications, by effectively managing complex data patterns and improving prediction accuracy. These developments offer valuable insights for future research and practical applications in various domains, including climate change mitigation and urban planning [8].

3. Predicting Temperature Model

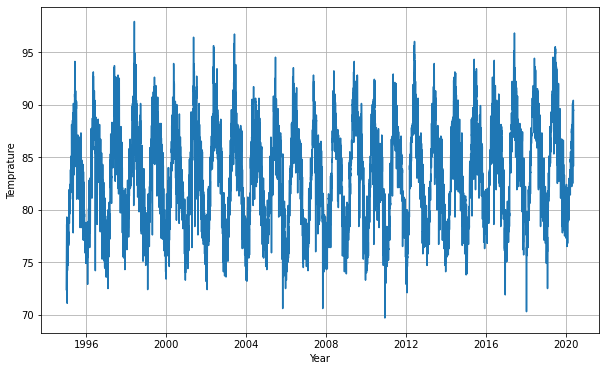

3.1. Data

This dataset primarily consists of eight fields: Region, Country, State, City, Month, Day, Year, and AvgTemperature. The dataset covers multiple regions, with North America accounting for 54%, Europe for 13%, and other regions for 33%. This distribution indicates that the amount of data from North America is significantly higher than that from other regions. The dataset does not specify individual countries in detail, but it can be inferred that the majority of data originates from North America and Europe. This diversity may provide a comparative basis for climate characteristics across different regions.

The data is primarily concentrated in the state of Texas, which represents 4% of the total. Data from other states or regions is classified as "Other," accounting for 46%. This may reflect a regional preference in data collection, which should be considered in the analysis.

In terms of cities, data has been collected from a total of 321 cities and regions. The Month variable records the observation months, which helps in analyzing seasonal climate changes and temperature trends. Data from different months can reveal inter-annual temperature variation patterns. The Day variable records the specific observation dates, providing a foundation for analyzing daily temperature fluctuations. This variable, when combined with the Month variable, aids in understanding temperature changes within the season.

Figure 1: Structure of CNN-LSTM Model
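As a minimal sketch of how the Month, Day, and Year fields described in Section 3.1 could be combined for seasonal analysis, the snippet below builds a chronological series and averages AvgTemperature per calendar month. The sample rows, their values, and the helper names `to_series` and `monthly_means` are hypothetical and are not taken from the study's dataset or code.

```python
from collections import defaultdict
from datetime import date

# Hypothetical sample rows that reuse the dataset's field names.
# The values are illustrative only and do not come from the study.
rows = [
    {"City": "A", "Month": 7, "Day": 1, "Year": 2019, "AvgTemperature": 80.0},
    {"City": "A", "Month": 1, "Day": 2, "Year": 2019, "AvgTemperature": 42.0},
    {"City": "A", "Month": 1, "Day": 1, "Year": 2019, "AvgTemperature": 40.0},
]


def to_series(records):
    """Return (date, temperature) pairs sorted chronologically."""
    pairs = [
        (date(r["Year"], r["Month"], r["Day"]), r["AvgTemperature"])
        for r in records
    ]
    return sorted(pairs)


def monthly_means(series):
    """Average temperature per calendar month, pooled over all years."""
    buckets = defaultdict(list)
    for day, temperature in series:
        buckets[day.month].append(temperature)
    return {month: sum(v) / len(v) for month, v in sorted(buckets.items())}


series = to_series(rows)
means = monthly_means(series)
```

Pooling by calendar month exposes the seasonal cycle; grouping by Year instead would expose year-to-year variation.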

3.2. CNN-LSTM Model

Table 1 summarizes the architecture of the model, detailing each layer's output shape and associated parameters. The Input_layer has a shape of (None, 60, 1), accepting sequences of length 60 with a single feature, and contains no parameters. The first Conv1D layer, with 60 filters, produces an output shape of (None, 56, 60) and introduces 360 parameters for convolutional operations. Two LSTM layers, each with 60 units, maintain an output shape of (None, 60, 60) and consist of 24,840 parameters each, enabling the capture of long-term dependencies in the data. The first Dense layer reduces the output to 30 units, resulting in a shape of (None, 30) with 1,830 parameters. The second Dense layer further decreases this to 10 units, producing an output shape of (None, 10) and incorporating 310 parameters. The final Dense layer outputs a single prediction with 11 parameters. Lastly, the Lambda layer applies a scaling transformation to the output, maintaining the shape (None, 1) and containing no additional parameters. Overall, this table provides a comprehensive overview of the model's structure and how each layer contributes to processing and predicting time series data [9].

Table 1: All the layers with specific parameters.

| Layer | Output Shape | Parameters |
|---|---|---|
| Input_layer | (None, 60, 1) | 0 |
| Conv1D (filters=60) | (None, 56, 60) | 360 |
| LSTM (units=60) | (None, 60, 60) | 24840 |
| LSTM (units=60) | (None, 60, 60) | 24840 |
| Dense (units=30) | (None, 30) | 1830 |
| Dense (units=10) | (None, 10) | 310 |
| Dense (units=1) | (None, 1) | 11 |
| Lambda | (None, 1) | 0 |
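The Conv1D and Dense entries in Table 1 can be checked with the standard parameter-count formulas. The sketch below assumes a kernel size of 5, a stride of 1, no padding, and bias terms in every layer; the helper names are illustrative and not part of the study's code. The LSTM layers are left out of this sketch.

```python
def conv1d_params(kernel_size, in_channels, filters):
    """Weights (kernel_size * in_channels per filter) plus one bias per filter."""
    return kernel_size * in_channels * filters + filters


def conv1d_out_len(steps, kernel_size):
    """Output length for stride 1 and no padding (assumed here)."""
    return steps - kernel_size + 1


def dense_params(in_units, out_units):
    """One weight per input/output pair plus one bias per output unit."""
    return in_units * out_units + out_units


conv_params = conv1d_params(kernel_size=5, in_channels=1, filters=60)
conv_len = conv1d_out_len(steps=60, kernel_size=5)
dense_counts = [
    dense_params(60, 30),  # first Dense layer, fed by 60 LSTM units
    dense_params(30, 10),  # second Dense layer
    dense_params(10, 1),   # output layer
]
```

Under these assumptions the sketch yields 360 parameters and an output length of 56 for the Conv1D layer, and 1,830, 310, and 11 parameters for the three Dense layers, in line with Table 1.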

The first Conv1D layer utilizes 60 convolutional filters, each with a kernel size of 5. By applying the ReLU activation function, this layer introduces non-linearity into the model, enabling it to efficiently extract local features from the input sequence. The input consists of 60 time steps, each containing one feature, making this layer essential for identifying initial patterns in the data. The second Conv1D layer continues the feature extraction process, maintaining 60 filters to refine and capture higher-level features. The ReLU activation function ensures that the model can recognize more complex patterns within the time series data.
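To make the local feature extraction concrete, the following is a minimal pure-Python sketch of a single convolutional filter with kernel size 5 followed by ReLU, applied to a 60-step sequence. The kernel weights and the input are hypothetical, and stride 1 with no padding is assumed; a trained layer would learn 60 such kernels.

```python
def relu(x):
    """Rectified linear unit: pass positive values, clamp the rest to zero."""
    return x if x > 0.0 else 0.0


def conv1d_relu(sequence, kernel, bias=0.0):
    """Slide one kernel over the sequence (stride 1, no padding), then apply ReLU."""
    k = len(kernel)
    outputs = []
    for start in range(len(sequence) - k + 1):
        window = sequence[start:start + k]
        activation = sum(w * x for w, x in zip(kernel, window)) + bias
        outputs.append(relu(activation))
    return outputs


# Hypothetical input: 60 time steps of a steadily rising signal.
sequence = [float(i) for i in range(60)]
# Hypothetical kernel that responds to a rise across a 5-step window.
kernel = [-1.0, 0.0, 0.0, 0.0, 1.0]

rising = conv1d_relu(sequence, kernel)
falling = conv1d_relu(sequence[::-1], kernel)
```

With this kernel, the rising sequence produces a constant positive response over 56 positions, while the falling sequence is clamped to zero by ReLU, which is how a single filter can act as a detector for one local pattern.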

Following the convolutional layers, the model includes two LSTM layers, each comprising 60 units. These layers are crucial for capturing long-term dependencies within the input sequence, as they process the output from the previous layers. Both LSTM layers return sequences, preserving the temporal information necessary for subsequent processing. The LSTM architecture enables the model to understand the intricate relationships between data points over time, enhancing its predictive capabilities.
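The model's input shape of (None, 60, 1) implies that the temperature series is cut into windows of 60 consecutive steps with one feature each. The sketch below shows one way such windows could be built; pairing each window with the next value as the target is an assumption made here for illustration, and `make_windows` is a hypothetical helper rather than the authors' preprocessing code.

```python
def make_windows(series, window=60):
    """Split a series into (window, 1)-shaped inputs and next-step targets."""
    inputs, targets = [], []
    for start in range(len(series) - window):
        # Wrap each value in a list so every time step carries one feature.
        inputs.append([[value] for value in series[start:start + window]])
        # Assumed target: the value immediately after the window.
        targets.append(series[start + window])
    return inputs, targets


# Hypothetical series of 100 readings.
series = [float(i) for i in range(100)]
inputs, targets = make_windows(series)
```

A series of 100 readings yields 40 windows here, each of 60 steps with a single feature.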

4. Results

The choice of loss function strongly influences the prediction accuracy of the model. This study uses Mean Absolute Error (MAE) as the primary loss function and as the measure of the model's performance. MAE is the average of the absolute differences between predicted and actual values, so it measures the magnitude of the errors and ignores their direction. This makes MAE well suited to tasks where the objective is to minimize overall prediction error, regardless of whether individual errors are positive or negative.
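For reference, the standard definition of MAE can be written as follows. The symbols are notation introduced here rather than taken from the original text: $n$ is the number of predictions, $y_i$ the observed temperature, and $\hat{y}_i$ the corresponding prediction.

```latex
\mathrm{MAE} = \frac{1}{n} \sum_{i=1}^{n} \left| y_i - \hat{y}_i \right|
```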

MAE is also easy to interpret because it is expressed in the same units as the predicted values. For example, if the predictions are in degrees Celsius, the MAE is also in degrees Celsius, so practitioners can directly gauge how closely the model's predictions match actual temperature readings. This clarity is valuable in practical applications, where stakeholders need to understand model performance in tangible terms.

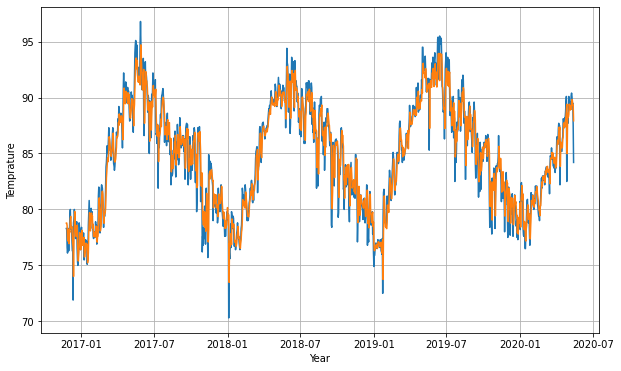

Figure 2: The prediction curve and test curve.

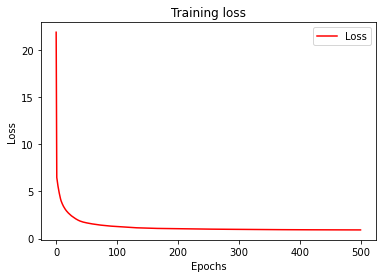

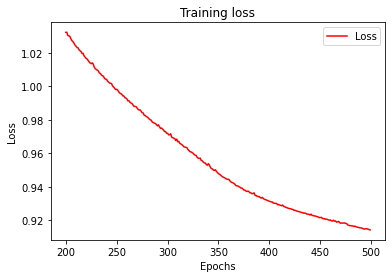

This study examines the model's convergence by plotting the loss function's variation curve during the training process. The results indicate that the Mean Absolute Error (MAE) experiences a rapid decline from over 20 in the early stages of training, quickly approaching a value near 1. This sharp decrease illustrates the model's effective learning mechanism, as it swiftly adapts to the underlying patterns present in the training data. Following this initial phase, the MAE stabilizes, ultimately reaching a value close to 0.90. This stability is crucial, as it not only signifies that the model has successfully learned the significant features and trends within the dataset but also reflects an enhanced prediction accuracy. The consistent reduction in the loss function throughout training highlights the model's robustness and reliability in capturing the complexities of the temperature data, thereby reinforcing its effectiveness in weather forecasting. Overall, the convergence observed in the loss function illustrates the model's capacity to generalize well to unseen data, which is a critical aspect of its performance.

Figure 3: Training Loss curve.

The results demonstrate that the CNN-LSTM model performs well on these metrics, with high prediction accuracy and stability. The prediction curve's high similarity to the test curve further supports the model's effectiveness in temperature prediction.

Figure 4: Training Loss - Zoomed In curve.

The performance comparison of various models for predicting historical temperature data, as outlined in Table 2, highlights the advantages of utilizing more sophisticated approaches in deep learning.

Linear Regression serves as the baseline model, achieving a variance of 0.682, an R² score of 0.623, and a Mean Absolute Error (MAE) of 2.125. These scores indicate that a linear model captures only part of the structure in the temperature data.

The Convolutional Neural Network (CNN) improves upon linear regression, with a variance of 0.756 and an R² score of 0.791. The MAE decreases to 1.536, demonstrating the effectiveness of CNNs in capturing local features of the temperature data. This is a clear gain in accuracy, although the CNN still lacks the temporal processing capabilities needed for comprehensive time series forecasting.

Table 2: Comparison of Model Performance

| Model | Variance | R² Score | MAE |
|---|---|---|---|
| Linear Regression | 0.682 | 0.623 | 2.125 |
| CNN | 0.756 | 0.791 | 1.536 |
| LSTM | 0.873 | 0.890 | 1.018 |
| CNN-LSTM | 0.927 | 0.901 | 0.901 |

Long Short-Term Memory (LSTM) networks further enhance prediction performance, achieving a variance of 0.873 and an R² score of 0.890. The MAE drops to 1.018, underscoring the LSTM's strength in modeling sequential data and capturing long-term dependencies. This is a marked improvement over both linear regression and the CNN, highlighting the LSTM's suitability for time series forecasting.

The CNN-LSTM hybrid model achieves the best metrics, with a variance of 0.927 and an R² score of 0.901. The MAE is further reduced to 0.901, about 58% lower than the linear regression baseline (2.125) and about 11% lower than the LSTM alone (1.018), confirming the model's effectiveness in addressing both spatial and temporal aspects of the temperature data.
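The metrics in Table 2 can be sketched in a few lines of Python. MAE and R² follow their standard definitions. The "Variance" column is not defined in the text, so treating it as the explained variance score is only an assumption made for this illustration. The sample values are hypothetical and chosen so that every prediction is off by exactly one unit.

```python
def mean(values):
    return sum(values) / len(values)


def variance(values):
    """Population variance."""
    m = mean(values)
    return mean([(v - m) ** 2 for v in values])


def mae(y_true, y_pred):
    """Mean Absolute Error, in the same units as the data."""
    return mean([abs(t - p) for t, p in zip(y_true, y_pred)])


def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    m = mean(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - m) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


def explained_variance(y_true, y_pred):
    """Assumed meaning of the 'Variance' column: 1 - Var(residuals) / Var(y)."""
    residuals = [t - p for t, p in zip(y_true, y_pred)]
    return 1.0 - variance(residuals) / variance(y_true)


# Hypothetical readings and predictions with a constant +1 bias.
y_true = [10.0, 12.0, 14.0, 16.0]
y_pred = [11.0, 13.0, 15.0, 17.0]

scores = {
    "mae": mae(y_true, y_pred),
    "r2": r2_score(y_true, y_pred),
    "explained_variance": explained_variance(y_true, y_pred),
}
```

In this example the MAE is 1.0 and R² is 0.8, while the explained variance score is 1.0 because a constant bias leaves the residuals with zero variance. The three measures respond to different kinds of error, so they are best read together.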

5. Conclusions

This study implemented a CNN-LSTM hybrid model to predict historical temperature data, demonstrating the efficacy of deep learning techniques in weather forecasting. The hybrid model combined the strengths of Convolutional Neural Networks (CNNs) for spatial feature extraction and Long Short-Term Memory (LSTM) networks for capturing temporal dependencies, resulting in high prediction accuracy and stability. The use of Mean Absolute Error (MAE) as the loss function provided a clear and interpretable evaluation of the model's performance.

The results indicated that the CNN-LSTM model excelled at handling complex meteorological data, addressing key challenges such as missing values and high-dimensionality. The strong alignment between the prediction curve and the actual test data highlighted the model's reliability and its potential for broader applications in climate prediction.

These findings offer valuable insights for sectors such as agriculture, energy management, and urban planning. Future research could explore the application of this model to other regions with similar weather variability and extend its use to predict additional weather-related phenomena, further enhancing the capabilities of meteorological forecasting in the context of global climate change.

References

[1] Y. LeCun, Y. Bengio, and G. Hinton, "Deep learning," Nature, vol. 521, pp. 436-444, 2015.

[2] S. Hochreiter and J. Schmidhuber, "Long short-term memory," Neural Comput., vol. 9, no. 8, pp. 1735-1780, 1997.

[3] L. Ma and S. Tian, "A Hybrid CNN-LSTM Model for Aircraft 4D Trajectory Prediction," IEEE Access, vol. 8, pp. 134668-134680, 2020.

[4] N. Ranjan, S. Bhandari, H. P. Zhao, H. Kim, and P. Khan, "City-Wide Traffic Congestion Prediction Based on CNN, LSTM and Transpose CNN," IEEE Access, vol. 8, pp. 81606-81620, 2020. https://doi.org/10.1109/ACCESS.2020.2991462

[5] Zhang Yu, He Qingxia, and Zeng Shiyi, "Research on Global Temperature Prediction Based on CNN-LSTM Model," Progress in Applied Mathematics, vol. 13, no. 1, pp. 302-312, 2024.

[6] Huang Chao, Li Qiaoping, Xie Yijun, et al., "Application of Machine Learning Methods in Summer Precipitation Prediction in Hunan Province," Transactions of Atmospheric Sciences, vol. 45, no. 2, pp. 191-202, 2022.

[7] B. Wang, S. Liu, B. Wang, et al., "Multi-step ahead short-term predictions of storm surge level using CNN and LSTM network," Acta Oceanol. Sin., vol. 40, pp. 104-118, 2021.

[8] A. Ahmed and J. A. S. Alalana, "Temperature prediction using LSTM neural network," in Proc. IEEE 9th International Conference on Electronics (ICEL), 2020, pp. 210-215.

[9] H. Wang et al., "Temperature forecasting using deep learning methods: A comprehensive review," Energy Reports, vol. 6, pp. 232-245, 2020.

Cite this article

Gong,Y.;Zhang,Y.;Wang,F.;Lee,C. (2024). Deep Learning for Weather Forecasting: A CNN-LSTM Hybrid Model for Predicting Historical Temperature Data. Applied and Computational Engineering,99,168-174.

Data availability

The datasets used and/or analyzed during the current study will be available from the authors upon reasonable request.


About volume

Volume title: Proceedings of the 5th International Conference on Signal Processing and Machine Learning

© 2024 by the author(s). Licensee EWA Publishing, Oxford, UK. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.
