1. Introduction

With the rapid development of autonomous driving, UAVs, and robotics, the accuracy and real-time performance of environmental sensing have become increasingly important. In these applications, effectively identifying, tracking, and avoiding obstacles is key to ensuring safe and efficient operation [1]. Traditional single-sensor approaches, while effective in certain scenarios, face various limitations. For example, camera performance degrades significantly in low light or inclement weather, while lidar provides accurate depth information but can experience data loss in complex environments [2]. Multi-sensor fusion technology has emerged to address these problems [3].

By combining information from different sensors, multi-sensor fusion technology generates a more comprehensive and reliable model of the environment. These sensors may include cameras [4], lidar [5], ultrasonic sensors, and inertial measurement units (IMUs) [6]. By fusing their data, the system can leverage the strengths of each sensor while compensating for the shortcomings of any single one. For example, lidar provides high-precision three-dimensional spatial data, while cameras capture visual details. In urban environments, this fusion improves both the accuracy of obstacle detection and the robustness of the system under complex and dynamic conditions.

In recent years, multi-sensor fusion technology has made significant progress in many fields. The fusion of LiDAR and cameras has been widely used in self-driving cars to achieve efficient environment sensing and dynamic obstacle avoidance. In the field of unmanned aerial vehicles, combining data from vision sensors and IMUs helps systems navigate and avoid obstacles more accurately. In addition, many studies have sought to further improve the performance and adaptability of sensor fusion by using intelligent algorithms such as deep learning and reinforcement learning [7].

The purpose of this paper is to discuss the application status and future development direction of multi-sensor fusion technology in dynamic obstacle avoidance. By analyzing the current research results and technical challenges, this paper will summarize how multi-sensor fusion technology can improve the performance of dynamic obstacle avoidance systems, and discuss the application of intelligent algorithms and future research directions [8].

The remainder of this article is organized as follows. Section 2 details single-sensor navigation approaches. Section 3 explores existing multi-sensor fusion schemes. Section 4 analyzes the basic concepts and classification of dynamic obstacle avoidance. Section 5 discusses how multi-sensor fusion technology improves dynamic obstacle avoidance. Section 6 looks ahead to future research directions and technology trends. Finally, Section 7 summarizes the full text and outlines the prospects for future applications.

2. Single-sensor based navigation

Many different types of sensors are used in mobile navigation, including vision sensors, lidar, inertial measurement units (IMUs), and ultra-wideband (UWB) devices. Besides these widely used sensors, other technologies such as Wi-Fi and Bluetooth are also available. Single-sensor navigation is an important research direction in navigation systems, in which a single sensor is used to complete tasks such as environment perception, path planning, and localization. This section analyzes several typical single-sensor navigation methods in detail, together with their advantages, disadvantages, and application scenarios.

2.1. Vision Sensor Navigation

Vision sensors are commonly used for single-sensor navigation and mainly include monocular cameras, stereo cameras, and RGB-D cameras. Monocular cameras realize environment map construction and localization by extracting and matching feature points. Methods like ORB-SLAM and LSD-SLAM perform well in indoor and small-scale environments but are affected by illumination and lack direct depth information [9]. Stereo cameras capture the same scene from two or more viewpoints and recover 3D information through feature point matching. They are widely used in mobile robot navigation but have higher computational complexity and are limited in environments with few feature points. RGB-D cameras combine a regular camera and a depth sensor to capture color and depth information, and solutions such as RGB-D SLAM V2 and DVO-SLAM [10] are available. However, RGB-D cameras are susceptible to interference in strong lighting, which can reduce their depth measurement accuracy.

2.2. LiDAR Navigation

LiDAR calculates the distance to an object by emitting a laser pulse and measuring the time until its reflection returns, enabling environmental modeling and localization. There are two types of LiDAR navigation: 2D and 3D.

2D LiDAR is mainly used for real-time navigation in small-scale environments, with algorithms such as GMapping and Hector SLAM. Although these methods can work in real time, they cannot obtain the height information of objects, which limits their application when building 3D maps.

3D LiDAR is able to construct real-time 3D environment maps by collecting depth information at different heights. Typical 3D LiDAR navigation methods include IMLS-SLAM and LOAM [11]. Compared with 2D LiDAR, 3D LiDAR is more widely used in autonomous driving and robot navigation, especially for its excellent obstacle detection ability and positioning accuracy. However, LiDAR data carries little semantic information, and it is difficult to obtain the color and boundary information of objects in the environment [11].
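The time-of-flight ranging principle described at the start of this subsection can be sketched in a few lines. The function name and the idealized assumption of a single clean echo are illustrative only; real LiDAR units also handle multiple returns, timing jitter, and intensity thresholds.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_range_m(round_trip_time_s: float) -> float:
    """Convert a laser pulse's round-trip time into a one-way distance.

    The pulse travels to the target and back, so the path is halved.
    """
    if round_trip_time_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0
```

Under this model, a round-trip time of one microsecond corresponds to a target roughly 150 m away, which shows why LiDAR timing electronics need sub-nanosecond resolution to reach centimeter-level accuracy.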

2.3. Inertial Measurement Unit (IMU) Navigation

An inertial measurement unit (IMU) typically consists of a three-axis gyroscope and a three-axis accelerometer, capable of measuring the angular velocity and acceleration of an object in real time. IMUs are commonly used to estimate the state of motion over short periods of time: integrating angular velocity yields orientation, and integrating acceleration twice yields displacement. However, a major disadvantage of IMUs is that measurement errors accumulate through this integration, so navigation accuracy decreases when they are used for long periods. Therefore, IMUs are often used as auxiliary sensors in combination with other sensors, such as the fusion method LIO with LiDAR [12] and the fusion method MSCKF with cameras [13].
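The error accumulation described above can be illustrated with a minimal one-dimensional dead-reckoning sketch. The bias value, sample rate, and function name are assumptions chosen for illustration, not parameters of any particular IMU.

```python
def integrate_accel(accels, dt):
    """Double-integrate acceleration samples (m/s^2) taken every dt seconds.

    Returns the final (velocity, position), starting from rest at the origin.
    """
    velocity = 0.0
    position = 0.0
    for a in accels:
        velocity += a * dt         # first integration: acceleration -> velocity
        position += velocity * dt  # second integration: velocity -> position
    return velocity, position


# A stationary IMU with an assumed constant accelerometer bias of 0.01 m/s^2,
# sampled at 100 Hz. The true position stays at zero the whole time.
bias, dt = 0.01, 0.01
_, drift_10s = integrate_accel([bias] * 1000, dt)    # about 0.5 m after 10 s
_, drift_100s = integrate_accel([bias] * 10000, dt)  # about 50 m after 100 s
```

Because position error grows roughly with the square of elapsed time, a tenfold longer run produces about a hundredfold larger drift, which is why an external reference such as LiDAR or a camera is needed to bound the error.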

3. Multi-sensor fusion

3.1. Principle of multi-sensor fusion

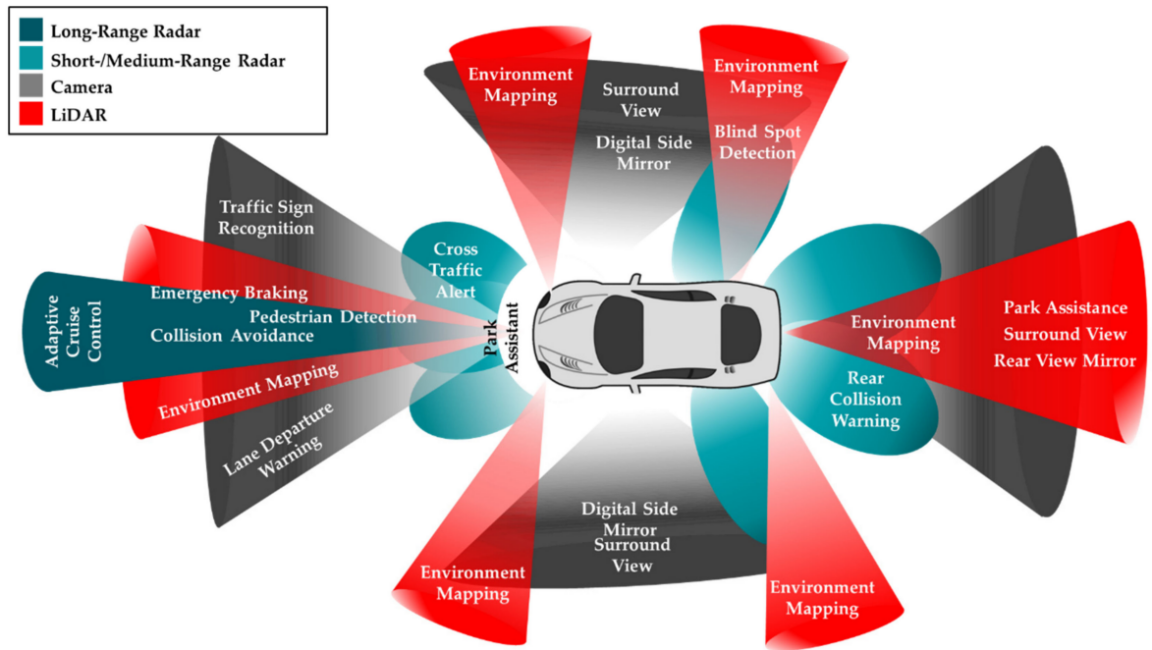

Multi-sensor fusion technology integrates data from different sensors to comprehensively perceive the environment. The core idea is to leverage complementary advantages and compensate for individual shortcomings to improve the accuracy and robustness of autonomous driving systems. In autonomous driving, common sensors include LiDAR, cameras, IMUs, GPS, and radar, each providing different information, as shown in Figure 1 [14]. By fusing this data, the system can map the environment accurately and localize itself in real time. There are three main levels of multi-sensor fusion: data-level (highest information and complexity), feature-level (moderate information and lower complexity), and decision-level (minimal information but high system flexibility). Extended Kalman filtering (EKF) and factor graph optimization are common data fusion methods. EKF suits nonlinear systems, while factor graph optimization enables global optimization by constructing graph models [15].

Figure 1. An example of the type and positioning of sensors in an automated vehicle [14].
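As a rough illustration of the filtering idea behind EKF-based fusion, the following sketch implements the linear, one-dimensional special case of a Kalman filter. The scenario (a motion increment from odometry fused with a direct position measurement), the function names, and the noise values are assumptions made for illustration. An EKF would additionally linearize nonlinear motion and measurement models around the current estimate.

```python
def kf_predict(x, p, u, q):
    """Prediction step: move the estimate by the motion increment u.

    x: position estimate, p: its variance, q: process noise variance.
    """
    return x + u, p + q


def kf_update(x, p, z, r):
    """Update step: blend the prediction with a position measurement z.

    r is the measurement noise variance. The Kalman gain k weights the
    measurement more heavily when the prediction is uncertain.
    """
    k = p / (p + r)
    return x + k * (z - x), (1.0 - k) * p


# One fusion cycle with assumed values.
x, p = 0.0, 1.0
x, p = kf_predict(x, p, u=1.0, q=0.1)  # motion sensor says we moved 1 m
x, p = kf_update(x, p, z=1.2, r=0.5)   # range sensor says we are at 1.2 m
```

After the update, the variance is smaller than both the predicted variance and the measurement variance, which is the sense in which fusion improves on either source alone.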

3.2. Fusion strategies for different sensors

In an autonomous driving system, the fusion strategy chosen for different sensors directly affects the performance of SLAM (simultaneous localization and mapping) and dynamic obstacle avoidance. The fusion of LiDAR and cameras is one of the most common combinations. LiDAR provides high-precision 3D point cloud data through laser ranging, but it is less effective when dealing with fast-moving objects. Cameras capture a wealth of visual information and are especially useful for object recognition and classification. However, cameras are sensitive to lighting conditions and do not perform well in very dark or very bright environments. Fusing data from these two sensors can significantly improve the system's environmental perception capabilities, especially in complex urban environments: by combining the precise distance information of LiDAR with the visual features captured by cameras, the system can build a more accurate and comprehensive environment map [16].

The fusion of IMU and LiDAR also plays a key role in improving the real-time performance and interference resistance of SLAM systems. The IMU provides high-frequency acceleration and angular velocity data, which helps to update the estimate of the vehicle's motion state in real time and to reduce jitter and drift in LiDAR data. For example, in a UAV SLAM system, IMU data can be used to correct the point cloud data generated by LiDAR, ensuring stable positioning and navigation of the UAV in complex and dynamic environments [17]. The fusion of GPS and vision sensors focuses on improving the global positioning accuracy of the system. When GPS signals are weak or subject to interference, vision sensors can help the system localize accurately by identifying fixed landmarks, thereby enhancing the adaptability of the system in complex environments.

In addition, sensor data can be fused through tight or loose coupling. In a tightly coupled system, sensor data is fused directly at a low level, for example by jointly processing raw sensor data through factor graph optimization to generate more accurate maps and location estimates. Tightly coupled systems make full use of the complementary information of the sensors and reduce error, but their computational complexity is high. Loosely coupled systems process each sensor independently and then fuse the results at a high level. They lose more information but are more computationally efficient, which makes them better suited to application scenarios with strict real-time requirements [18].
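One simple way to picture loose coupling is inverse-variance weighting of estimates that each sensor pipeline has already produced on its own. The sketch below assumes independent, unbiased estimates with known variances; the function name and the numbers are illustrative, and practical loosely coupled systems often use a filter rather than this one-shot formula.

```python
def fuse_estimates(estimates):
    """Fuse independent (value, variance) estimates of the same quantity.

    Each estimate is weighted by the inverse of its variance, so more
    certain sensors contribute more to the fused result.
    Returns (fused_value, fused_variance).
    """
    weights = [1.0 / variance for _, variance in estimates]
    total = sum(weights)
    fused = sum(w * value for w, (value, _) in zip(weights, estimates)) / total
    return fused, 1.0 / total


# Assumed example: a LiDAR pipeline reports 12.0 m (variance 1.0) and a
# vision pipeline reports 10.0 m (variance 4.0) for the same obstacle.
distance, variance = fuse_estimates([(12.0, 1.0), (10.0, 4.0)])
```

Here the fused distance lies much closer to the LiDAR value because its variance is four times smaller. A tightly coupled design would instead feed the raw measurements of both sensors into one joint optimization.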

3.3. Application of multi-sensor fusion in SLAM

The application of multi-sensor fusion technology in SLAM has been verified in multiple autonomous driving systems. The following are a few specific application cases to demonstrate the practical application of multi-sensor fusion technology in SLAM and dynamic obstacle avoidance.

3.3.1. Application of LiDAR and vision sensor fusion in SLAM

The fusion of LiDAR and vision sensors is one of the mainstream solutions in SLAM technology. LiDAR generates high-resolution 3D point clouds with accurate distance measurements, while vision sensors capture rich texture and color information; combining the two enables more accurate maps of the environment. In a typical SLAM system, the point cloud data provided by LiDAR is supplemented and corrected by vision sensors, which allows the system to cope with the varied characteristics of changing urban environments. For example, by using LiDAR to accurately detect the location of buildings, pedestrians, and other vehicles on complex urban roads, and cameras to recognize traffic signs and lights, SLAM systems are able to generate more reliable maps of the environment and provide more accurate localization [16].

3.3.2. Application of IMU and LiDAR fusion in UAV SLAM

The combination of IMU and LiDAR plays a crucial role in UAV SLAM. UAVs need to respond to complex dynamic environments in real time during flight, and the high-frequency motion data provided by the IMU can help correct errors in the LiDAR data, ensuring the accuracy of the point cloud. For example, in a highly dynamic environment, the IMU can help the SLAM system quickly adjust its positioning results by measuring the acceleration and angular velocity of the drone in real time. This fusion strategy not only improves the real-time performance of the UAV SLAM system, but also enhances its robustness in complex environments, enabling it to maintain stable performance in various flight missions [17].

3.3.3. Application of GPS and vision sensor fusion in high-precision navigation

The fusion of GPS and vision sensors plays an important role in enhancing the global positioning capability of SLAM systems. In environments where GPS signals are unstable, such as urban canyons and forests, vision sensors can help correct GPS positioning errors by identifying fixed landmarks in the environment, thereby providing more accurate location information. For example, in autonomous driving applications, the vehicle can obtain its initial position through GPS and then use the image data captured by the vision sensor to recognize surrounding landmarks, further improving the positioning accuracy. Through this fusion strategy, SLAM systems are able to provide reliable global positioning and maintain a high level of navigation performance in a variety of complex environments [15].

4. Dynamic obstacle avoidance

According to the way obstacle avoidance decisions are made, dynamic obstacle avoidance algorithms can be divided into three categories: rule-based algorithms, optimization-based algorithms, and learning-based algorithms.

4.1. Rule-Based Algorithms

Rule-based algorithms often rely on predefined rules or models to perform obstacle avoidance decisions by analyzing information in the environment. The advantage of this type of algorithm is that it is simple to calculate, and it is suitable for application scenarios with high real-time requirements. However, rule-based algorithms often lack flexibility and struggle to cope with complex and dynamic environments [19].

4.1.1. Artificial potential field method

The artificial potential field (APF) method is a classical rule-based obstacle avoidance algorithm. It treats the device as a "charged particle": obstacles in the environment exert a "repulsive force" on it, and the target point exerts an "attractive force". The device determines its direction of movement from the resultant of these forces, thus avoiding obstacles while moving towards the target. However, the artificial potential field method suffers from the local minimum problem: where the attractive and repulsive forces cancel out, the device may fall into a "dead zone" from which it cannot move forward [20].
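The force computation described above can be sketched as follows. This uses one common textbook form of the attractive and repulsive terms; the gain values, the influence radius, and the function name are assumptions for illustration rather than the formulation of any specific cited work.

```python
import math


def apf_force(pos, goal, obstacles, k_att=1.0, k_rep=1.0, influence=2.0):
    """Resultant 2D force on a robot at pos in an artificial potential field.

    The goal attracts with a force proportional to distance. Each obstacle
    closer than `influence` repels with a force that grows as the robot
    approaches it and vanishes at the edge of the influence radius.
    """
    fx = k_att * (goal[0] - pos[0])
    fy = k_att * (goal[1] - pos[1])
    for ox, oy in obstacles:
        dx, dy = pos[0] - ox, pos[1] - oy
        d = math.hypot(dx, dy)
        if 0.0 < d < influence:
            magnitude = k_rep * (1.0 / d - 1.0 / influence) / (d * d)
            fx += magnitude * dx / d  # unit vector pointing away from obstacle
            fy += magnitude * dy / d
    return fx, fy


# Local minimum: an obstacle sits directly between robot and goal, and with
# this assumed gain the repulsion exactly cancels the attraction.
stuck = apf_force((0, 0), (2, 0), [(1, 0)], k_rep=4.0)  # -> (0.0, 0.0)
```

The last line reproduces the "dead zone" in miniature: the resultant force is zero even though the robot has not reached the goal, so a pure gradient-following controller would stop there.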

4.1.2. Virtual force field method

The virtual force field (VFF) method is an improved version of the artificial potential field method. It generates a virtual force field for the area around each obstacle and adjusts the obstacle avoidance force according to the distance to the obstacle: the closer the obstacle, the greater the repulsion. By improving the potential field model, the virtual force field method reduces the occurrence of the local minimum problem, but it still cannot completely solve the path planning problem in complex environments [21].

4.2. Optimization-based algorithms

Optimization-based algorithms achieve obstacle avoidance by seeking the globally optimal path. This type of algorithm usually searches and evaluates multiple candidate paths and selects the best one for avoiding obstacles. Optimization-based algorithms are often able to achieve better obstacle avoidance than rule-based algorithms, especially in complex environments.

4.2.1. Ant colony algorithm

Ant colony optimization (ACO) is an optimization algorithm based on swarm intelligence that simulates how foraging ants mark paths with pheromones. In obstacle avoidance, the ant colony algorithm uses virtual "ants" to search for the optimal path in the environment and refines the path selection over multiple iterations. The ant colony algorithm copes well with the global path planning problem, but its computational complexity is high, which is a particular drawback in dynamic environments that require real-time calculation and optimization [22].
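The pheromone-guided path choice can be made concrete with the transition rule used in the classic Ant System formulation, in which an ant picks its next node with probability proportional to pheromone strength and a heuristic desirability such as inverse distance. The sketch below covers only this rule and the evaporation-and-deposit update; the parameter values and function names are illustrative assumptions.

```python
def transition_probabilities(pheromone, heuristic, alpha=1.0, beta=2.0):
    """Probability of an ant moving to each candidate next node.

    pheromone and heuristic map node -> positive value. alpha and beta set
    the relative influence of pheromone versus heuristic desirability.
    """
    scores = {
        node: (pheromone[node] ** alpha) * (heuristic[node] ** beta)
        for node in pheromone
    }
    total = sum(scores.values())
    return {node: score / total for node, score in scores.items()}


def update_pheromone(pheromone, deposits, evaporation=0.5):
    """Evaporate existing pheromone, then add what the ants deposited."""
    return {
        node: (1.0 - evaporation) * level + deposits.get(node, 0.0)
        for node, level in pheromone.items()
    }
```

With equal pheromone on two candidate nodes and heuristic values of 1.0 and 2.0, the second node is chosen four times as often when beta is 2. Repeating selection and pheromone update over many ants and iterations is what makes the method costly in fast-changing scenes.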

4.2.2. Genetic algorithms

The genetic algorithm (GA) is an optimization algorithm based on evolutionary principles. It simulates the process of natural selection, retaining the fittest candidate paths in each generation for further iterative optimization. GA has strong global search ability in obstacle avoidance problems, but, like the ant colony algorithm, it has high computational complexity and poor real-time performance, so it is usually combined with other algorithms in practical applications [23].
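A minimal sketch of the selection, crossover, and mutation loop is given below for paths represented as lists of 2D waypoints. For brevity the fitness is path length only; a practical planner would add a collision penalty, which is omitted here. All function names, the population handling, and the mutation scale are assumptions made for illustration.

```python
import math
import random


def path_length(path):
    """Total Euclidean length of a waypoint path (lower is fitter)."""
    return sum(math.dist(a, b) for a, b in zip(path, path[1:]))


def crossover(parent_a, parent_b, cut):
    """Single-point crossover: head of one parent, tail of the other."""
    return parent_a[:cut] + parent_b[cut:]


def mutate(path, rng, sigma=0.5):
    """Randomly perturb one interior waypoint; start and goal stay fixed."""
    child = list(path)
    if len(child) > 2:
        i = rng.randrange(1, len(child) - 1)
        x, y = child[i]
        child[i] = (x + rng.gauss(0.0, sigma), y + rng.gauss(0.0, sigma))
    return child


def evolve(population, rng, generations=50):
    """Keep the fitter half each generation and refill it with offspring.

    Assumes at least four paths of equal length sharing start and goal.
    """
    for _ in range(generations):
        population = sorted(population, key=path_length)
        elite = population[: len(population) // 2]
        children = []
        while len(elite) + len(children) < len(population):
            a, b = rng.sample(elite, 2)
            cut = rng.randrange(1, len(a))
            children.append(mutate(crossover(a, b, cut), rng))
        population = elite + children
    return min(population, key=path_length)
```

Because the fitter half always survives, the best path never gets worse from one generation to the next. The many fitness evaluations per generation are the source of the real-time cost noted above.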

4.2.3. Particle swarm optimization

The particle swarm optimization (PSO) algorithm simulates the foraging behavior of bird flocks to search for the globally optimal path. Each "particle" represents a candidate path, and the algorithm improves the path progressively through the exchange of information between the particles. PSO performs well in continuous optimization problems and can quickly find good solutions in obstacle avoidance tasks [24].
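The exchange of information between particles is usually expressed through the standard velocity and position update shown below. The symbols are introduced here for illustration: $x_i^t$ and $v_i^t$ are the position and velocity of particle $i$ at iteration $t$, $p_i$ is the best position that particle has found so far, $g$ is the best position found by the whole swarm, $w$ is the inertia weight, $c_1$ and $c_2$ are acceleration coefficients, and $r_1, r_2$ are random numbers drawn uniformly from $[0, 1]$.

```latex
\begin{aligned}
v_i^{t+1} &= w\, v_i^{t} + c_1 r_1 \left(p_i - x_i^{t}\right) + c_2 r_2 \left(g - x_i^{t}\right), \\
x_i^{t+1} &= x_i^{t} + v_i^{t+1}.
\end{aligned}
```

The second term pulls each particle towards its own best experience and the third towards the swarm's best, which is how a good path found by one particle spreads to the others.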

4.3. Learning-based algorithms

Learning-based algorithms mainly rely on machine learning and reinforcement learning techniques to train models through data, so that the system can learn obstacle avoidance strategies autonomously. This type of algorithm is suitable for dealing with complex and dynamic environments, and is highly adaptive and flexible.

4.3.1. Deep learning

Deep learning uses multi-layered neural network models to learn and identify complex features in the environment, such as the type, location, and movement trends of obstacles. Through the multi-modal data obtained by sensors such as cameras and lidars, deep learning algorithms can achieve high-precision obstacle detection and path planning. However, the training of deep learning models relies on a large amount of annotated data, and the computational complexity of the model is high, which poses certain challenges in terms of real-time performance [25].

4.3.2. Reinforcement learning

Reinforcement learning (RL) enables the system to learn obstacle avoidance strategies autonomously through continuous interaction with the environment. Common algorithms, such as Q-learning and deep Q-networks (DQN), update the action-value function based on environmental feedback, so that the system can continuously optimize obstacle avoidance decisions in a complex dynamic environment [26]. Reinforcement learning is highly adaptable and able to respond to changes in obstacles in real time, making it particularly suitable for highly dynamic scenarios. However, reinforcement learning algorithms converge slowly and can take a significant amount of training time.
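The value update at the heart of tabular Q-learning can be sketched in a few lines. The state and action labels, the reward value, and the learning parameters below are assumptions for illustration; DQN replaces the table with a neural network but follows the same target.

```python
from collections import defaultdict


def q_update(q, state, action, reward, next_state, actions,
             alpha=0.1, gamma=0.9):
    """Apply one Q-learning update and return the new Q(state, action).

    q maps (state, action) -> value. alpha is the learning rate and gamma
    the discount factor applied to the best value of the next state.
    """
    best_next = max(q[(next_state, a)] for a in actions)
    td_target = reward + gamma * best_next
    q[(state, action)] += alpha * (td_target - q[(state, action)])
    return q[(state, action)]


# Assumed example: moving "right" from cell s0 brushes an obstacle
# (reward -1) and lands in s1, where the best known action is worth 1.0.
q_table = defaultdict(float)
q_table[("s1", "left")] = 1.0
new_value = q_update(q_table, "s0", "right", -1.0, "s1", ["left", "right"])
```

Each update moves the estimate only a fraction alpha of the way towards the target, which is one reason convergence requires many interactions with the environment.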

5. The improvement of dynamic obstacle avoidance by multi-sensor fusion technology

5.1. Ways to improve obstacle avoidance performance through multi-sensor fusion

5.1.1. Data fusion improves the accuracy of obstacle detection

Multi-sensor fusion technology greatly improves the accuracy of obstacle detection by combining data from different sensors such as LiDAR, cameras, radar, and IMUs. LiDAR provides three-dimensional depth information, cameras capture visual details, and radar works reliably in bad weather. Fusing this data provides a more comprehensive understanding of the environment. Through the fusion of multi-source data, the system can overcome the noise and uncertainty of a single sensor and improve the accuracy of obstacle detection and path planning [27]. For example, in autonomous driving, the fusion of LiDAR and camera data enables more accurate identification of pedestrians and vehicles and supports safe driving in complex urban environments.

5.1.2. Reduce the limitations of a single sensor and improve system robustness

The limitations of a single sensor in harsh environments are obvious: cameras perform poorly in poor lighting, and LiDAR may not provide accurate data in fog or heavy rain. Multi-sensor fusion can compensate for these shortcomings by prioritizing the most suitable sensors under different conditions [28]. For example, in low-light environments, LiDAR can provide ranging capabilities to complement cameras, while radar can stably detect obstacles in bad weather, ensuring that the system remains highly robust in complex conditions.

5.2. Challenges and future development directions of fusion algorithms

5.2.1. Computational complexity and real-time system problems

Although multi-sensor fusion improves system performance, it also increases computational complexity and makes real-time operation harder to achieve. Processing large amounts of data from multiple high-resolution sensors can put enormous pressure on system resources, especially in applications that require real-time decision-making, such as autonomous driving [29]. Fusion algorithms based on deep learning achieve outstanding detection accuracy, but their computational overhead is large and their real-time performance is limited. Therefore, researchers have developed edge computing and distributed processing architectures, which offload a portion of the preprocessing tasks to edge devices to reduce latency and lighten the burden on the central processing unit.

5.2.2. Application of hardware acceleration and edge computing technology in fusion algorithms

In response to real-time demands, hardware acceleration has become a key solution for multi-sensor fusion systems. Hardware such as GPUs and FPGAs greatly speeds up data processing by executing tasks in parallel. GPUs are particularly efficient at deep learning inference, while FPGAs provide flexible acceleration at lower power consumption for mobile or embedded systems. At the same time, edge computing technology reduces data transmission latency and improves the system's ability to respond quickly to environmental changes by processing sensor data locally [30]. Combined with hardware acceleration, edge computing helps maintain real-time performance under high data throughput.

5.3. Future Directions

5.3.1. Adaptive fusion algorithms and smart sensors

In the future, multi-sensor fusion systems will be more intelligent, and adaptive algorithms will enable the system to dynamically adjust the weights of different sensors according to the environment. For example, the system can automatically adjust the data fusion ratio of LiDAR and camera based on ambient lighting conditions, enhancing the ability to cope with complex and dynamic environments. This adaptive fusion will greatly improve the flexibility and robustness of the system.
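The lighting-dependent weighting mentioned above could look roughly like the sketch below. Everything in it is hypothetical: the linear confidence ramp, the lux thresholds, the fixed LiDAR confidence, and the function names are placeholders for whatever a real adaptive fusion algorithm would learn or calibrate.

```python
def camera_confidence(lux, dark_lux=10.0, bright_lux=400.0):
    """Hypothetical confidence in camera data as a function of illuminance.

    Ramps linearly from 0 in darkness to 1 in good light.
    """
    if lux <= dark_lux:
        return 0.0
    if lux >= bright_lux:
        return 1.0
    return (lux - dark_lux) / (bright_lux - dark_lux)


def fuse_range(lidar_range_m, camera_range_m, lux, lidar_confidence=1.0):
    """Blend LiDAR and camera range estimates using lighting-aware weights."""
    w_camera = camera_confidence(lux)
    total = lidar_confidence + w_camera
    return (lidar_confidence * lidar_range_m + w_camera * camera_range_m) / total
```

In darkness the camera weight drops to zero and the fused range equals the LiDAR range, while in good light both sensors contribute equally. A full system would apply the same idea to more sensors and more conditions, such as reducing LiDAR confidence in fog.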

5.3.2. More efficient algorithms with low-power hardware

With the increasing demand for real-time performance and energy efficiency, future sensor fusion systems will rely on more efficient algorithms and low-power hardware. Lightweight deep learning models, low-power sensors, and low-power processors will enable systems to operate efficiently in resource-constrained scenarios such as drones and portable robots. Advancements in these technologies will pave the way for the popularization of sensor fusion in a variety of applications.

6. Future outlook

6.1. Future trends in multi-sensor fusion technology

With the continuous progress of science and technology, the future multi-sensor fusion technology will achieve significant improvements in sensor performance, data fusion algorithms, and the application of intelligent algorithms.

6.1.1. Advances in sensor technology

Future sensors will have higher resolution and lower power consumption, which will greatly improve the overall performance of multi-sensor fusion systems. High-resolution sensors such as lidar and cameras will provide more detailed environmental information, while low-power sensors will extend the battery life of mobile devices, making drones, robots, and other devices more widely used in complex scenarios [31].

6.1.2. Optimization of data fusion algorithms

Future fusion algorithms will be more efficient and able to handle larger datasets while maintaining high accuracy and real-time performance. Through the further application of intelligent algorithms such as deep learning and reinforcement learning, the fusion system will be able to autonomously learn and optimize how data from different sensors is fused. For example, in complex environments, adaptive fusion algorithms can dynamically adjust the weights of different sensors according to environmental changes, ensuring that the obstacle avoidance system can plan paths and make decisions accurately in real time [32].

6.2. Innovative direction of dynamic obstacle avoidance technology

In the future, dynamic obstacle avoidance technology will focus on applications in more dynamic and complex environments. Autonomous driving, drones, and intelligent robots still face challenges in complex environments such as highly dynamic urban traffic, complex terrain, or bad weather. To this end, the development of dynamic obstacle avoidance technology will focus on the following aspects:

6.2.1. Ability to adapt to more complex environments

Future obstacle avoidance systems must be able to cope with more complex and dynamic environments, especially in the fields of autonomous driving and intelligent robotics. Integrating high-precision sensors and intelligent algorithms, the obstacle avoidance system will have the ability to deal with unexpected situations and rapidly changing obstacles. For example, multimodal fusion combining lidar and cameras can enable the system to react quickly when a pedestrian or vehicle suddenly appears [33].

6.2.2. Continuous optimization of intelligent learning algorithms

The continuous optimization of intelligent algorithms such as reinforcement learning and deep learning will promote the development of dynamic obstacle avoidance technology in a more efficient direction. In the future, intelligent algorithms will be better at dealing with unknown or complex dynamic factors in the environment, making autonomous driving or robotic systems more adaptable and able to autonomously adjust obstacle avoidance strategies in uncertain or complex scenarios. In addition, the continuous iteration and optimization of intelligent algorithms will improve the computing efficiency of the system, reduce energy consumption, and ensure real-time performance [34].

7. Conclusion

Multi-sensor fusion technology has shown great potential in dynamic obstacle avoidance. By fusing data from multiple sensors (such as lidar, camera, and radar), the system can improve the accuracy of obstacle detection, enhance environmental perception, and achieve more accurate path planning in dynamic and complex environments. Existing technological breakthroughs have significantly improved dynamic obstacle avoidance capabilities in the fields of autonomous driving, unmanned aerial vehicles (UAVs), and intelligent robots. Multi-sensor fusion technology provides strong support for obstacle avoidance systems, especially in dealing with bad weather, changeable obstacles, and the processing of diverse perception information.

However, despite advances in technology, challenges remain. Technical bottlenecks such as computational complexity, real-time problems, and high energy consumption need to be further solved. At the same time, with the increase of the types and number of sensors, how to effectively fuse the data of different sensors and optimize the computational efficiency and decision-making speed of the algorithm is still the focus of future research. In addition, the robustness of the system in dynamic environments also needs to be continuously optimized, especially in dealing with emergencies and complex scenarios.

In the future, with the continuous progress of sensor technology and the further optimization of intelligent algorithms, multi-sensor fusion technology will show its wide potential in more application scenarios. Autonomous driving, intelligent robotics and drone technology will benefit from more intelligent and adaptive dynamic obstacle avoidance systems that can perform tasks more efficiently in complex environments. With the development of edge computing, hardware acceleration, and adaptive algorithms, future multi-sensor fusion technology will be able to achieve higher accuracy, lower power consumption, and more intelligent real-time obstacle avoidance, providing strong technical support for various automation systems.

References

[1]. Qazi S, Khawaja BA, Farooq QU. IoT-equipped and AI-enabled next generation smart agriculture: A critical review, current challenges and future trends. IEEE Access. 2022;10:21219-35.

[2]. Davison AJ. Real-time simultaneous localisation and mapping with a single camera. In: Proceedings Ninth IEEE International Conference on Computer Vision. IEEE; 2003. p. 1403-10.

[3]. Yeong DJ, Velasco-Hernandez G, Barry J, Walsh J. Sensor and sensor fusion technology in autonomous vehicles: A review. Sensors. 2021;21(6):2140.

[4]. Mur-Artal R, Montiel JM, Tardos JD. ORB-SLAM: A versatile and accurate monocular SLAM system. IEEE Trans Robot. 2015;31(5):1147-63.

[5]. Kohlbrecher S, Von Stryk O, Meyer J, Klingauf U. A flexible and scalable SLAM system with full 3D motion estimation. In: 2011 IEEE International Symposium on Safety, Security, and Rescue Robotics. IEEE; 2011. p. 155-60.

[6]. Huang G. Visual-inertial navigation: A concise review. In: 2019 International Conference on Robotics and Automation (ICRA). IEEE; 2019. p. 9572-82.

[7]. Adiuku N, Avdelidis NP, Tang G, et al. Improved Hybrid Model for Obstacle Detection and Avoidance in Robot Operating System Framework (Rapidly Exploring Random Tree and Dynamic Windows Approach). Sensors. 2024;24(7):2262.

[8]. Engel J, Schöps T, Cremers D. LSD-SLAM: Large-scale direct monocular SLAM. In: European Conference on Computer Vision. Cham: Springer International Publishing; 2014. p. 834-49.

[9]. Trejos K, Rincón L, Bolaños M, et al. 2D SLAM algorithms characterization, calibration, and comparison considering pose error, map accuracy as well as CPU and memory usage. Sensors. 2022;22(18):6903.

[10]. Wang J, Wang S. Preparation, modification and environmental application of biochar: A review. J Clean Prod. 2019;227:1002-22.

[11]. Xu W, Zhang F. Fast-LIO: A fast, robust lidar-inertial odometry package by tightly-coupled iterated Kalman filter. IEEE Robot Autom Lett. 2021;6(2):3317-24.

[12]. Al-Madani B, Orujov F, Maskeliūnas R, et al. Fuzzy logic type-2 based wireless indoor localization system for navigation of visually impaired people in buildings. Sensors. 2019;19(9):2114.

[13]. Xiao F. Multi-sensor data fusion based on the belief divergence measure of evidences and the belief entropy. Inf Fusion. 2019;46:23-32.

[14]. Yeong DJ, Velasco-Hernandez G, Barry J, et al. Sensor and sensor fusion technology in autonomous vehicles: A review. Sensors. 2021;21(6):2140.

[15]. Frosi M, Matteucci M. ART-SLAM: Accurate real-time 6DOF lidar SLAM. IEEE Robot Autom Lett. 2022;7(2):2692-9.

[16]. Chen W, Lin X, Lee J, et al. 5G-advanced toward 6G: Past, present, and future. IEEE J Sel Areas Commun. 2023;41(6):1592-619.

[17]. Dang X, Rong Z, Liang X. Sensor fusion-based approach to eliminating moving objects for SLAM in dynamic environments. Sensors. 2021;21(1):230.

[18]. Li A, Cao J, Li S, et al. Map construction and path planning method for a mobile robot based on multi-sensor information fusion. Appl Sci. 2022;12(6):2913.

[19]. Lv J, Qu C, Du S, et al. Research on obstacle avoidance algorithm for unmanned ground vehicle based on multi-sensor information fusion. Math Biosci Eng. 2021;18(2):1022-39.

[20]. Li Y, Zhang R. A Dynamic SLAM Algorithm Based on Lidar-Vision Fusion. In: 2022 6th International Conference on Electronic Information Technology and Computer Engineering. 2022. p. 812-5.

[21]. Li L, Yuan J, Sun H, et al. An SLAM algorithm based on laser radar and vision fusion with loop detection optimization. J Phys Conf Ser. 2022;2203(1):012029.

[22]. Canh TN, Nguyen TS, Quach CH, et al. Multisensor data fusion for reliable obstacle avoidance. In: 2022 11th International Conference on Control, Automation and Information Sciences (ICCAIS). IEEE; 2022. p. 385-90.

[23]. Tao Q, Sang H, Guo H, et al. Research on multi-AGVs scheduling based on genetic particle swarm optimization algorithm. In: 2021 40th Chinese Control Conference (CCC). IEEE; 2021. p. 1814-9.

[24]. Xue J, Shen B. Dung beetle optimizer: A new meta-heuristic algorithm for global optimization. J Supercomput. 2023;79(7):7305-36.

[25]. Yang Y, Chen Z. Optimization of dynamic obstacle avoidance path of multirotor UAV based on ant colony algorithm. Wirel Commun Mob Comput. 2022;2022(1):1299434.

[26]. Hama Rashid DN, Rashid TA, Mirjalili S. ANA: Ant nesting algorithm for optimizing real-world problems. Mathematics. 2021;9(23):3111.

[27]. Li A, Cao J, Li S, et al. Map construction and path planning method for a mobile robot based on multi-sensor information fusion. Appl Sci. 2022;12(6):2913.

[28]. Nabati R, Harris L, Qi H. CFTrack: Center-based radar and camera fusion for 3D multi-object tracking. In: 2021 IEEE Intelligent Vehicles Symposium Workshops (IV Workshops). IEEE; 2021. p. 243-8.

[29]. Rawashdeh NA, Bos JP, Abu-Alrub NJ. Drivable path detection using CNN sensor fusion for autonomous driving in the snow. In: Autonomous Systems: Sensors, Processing, and Security for Vehicles and Infrastructure 2021. SPIE; 2021. p. 36-45.

[30]. Long N, Yan H, Wang L, et al. Unifying obstacle detection, recognition, and fusion based on the polarization color stereo camera and LiDAR for the ADAS. Sensors. 2022;22(7):2453.

[31]. Li Y, Deng J, Zhang Y, et al. EZFusion: A close look at the integration of LiDAR, millimeter-wave radar, and camera for accurate 3D object detection and tracking. IEEE Robot Autom Lett. 2022;7(4):11182-9.

[32]. Broedermann T, Sakaridis C, Dai D, et al. HRFuser: A multi-resolution sensor fusion architecture for 2D object detection. In: 2023 IEEE 26th International Conference on Intelligent Transportation Systems (ITSC). IEEE; 2023. p. 4159-66.

[33]. Wang L, Zhang X, Li J, et al. Multi-modal and multi-scale fusion 3D object detection of 4D radar and LiDAR for autonomous driving. IEEE Trans Veh Technol. 2022;72(5):5628-41.

[34]. Liang T, Xie H, Yu K, et al. BEVFusion: A simple and robust LiDAR-camera fusion framework. Adv Neural Inf Process Syst. 2022;35:10421-34.

Cite this article

Chen, L. (2024). Dynamic Obstacle Avoidance Technology Based on Multi-Sensor Fusion in Autonomous Driving. Applied and Computational Engineering, 111, 131-140.

Data availability

The datasets used and/or analyzed during the current study will be available from the authors upon reasonable request.

Disclaimer/Publisher's Note

The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of EWA Publishing and/or the editor(s). EWA Publishing and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

About volume

Volume title: Proceedings of CONF-MLA 2024 Workshop: Mastering the Art of GANs: Unleashing Creativity with Generative Adversarial Networks

© 2024 by the author(s). Licensee EWA Publishing, Oxford, UK. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license. Authors who publish in this series agree to the following terms:

1. Authors retain copyright and grant the series right of first publication with the work simultaneously licensed under a Creative Commons Attribution License that allows others to share the work with an acknowledgment of the work's authorship and initial publication in this series.

2. Authors are able to enter into separate, additional contractual arrangements for the non-exclusive distribution of the series's published version of the work (e.g., post it to an institutional repository or publish it in a book), with an acknowledgment of its initial publication in this series.

3. Authors are permitted and encouraged to post their work online (e.g., in institutional repositories or on their website) prior to and during the submission process, as it can lead to productive exchanges, as well as earlier and greater citation of published work (see Open access policy for details).
