1. Introduction

Music therapy is a personalized therapeutic practice led by a trained music therapist that uses musical elements such as rhythm and harmony to address mental, emotional, physical, social, and cognitive needs [1]. Lyric discussion is one of the methods music therapists use to help clients explore emotions, self-expression, and difficult feelings through guided discussion of meaningful song lyrics. One of its therapeutic objectives is to give clients a way to express and discuss difficult emotions through the song [2]. This motivates our research question: how can machine learning, and BERT models in particular, support personalized music therapy by classifying songs into emotions based on the sentiment of their lyrics?

2. Related Work

2.1. Music Classification and Music Therapy

Research in music classification has primarily focused on classifying music by genre. Works such as Poria et al., Elbir and Aydin, Ma, and Dhyani focus on classifying music into genres and discuss diverse methodologies and techniques for improving applicability [3-6]. Music emotion recognition has also been explored using a variety of approaches with an emphasis on audio feature extraction, as shown in the works of Jamdar et al., Panda et al., and Na and Yong [7-9].

In music therapy, recent research has highlighted the potential of personalized music recommendations based on emotional states. For instance, Modran et al. [10] explored emotion classification and prediction of the therapeutic effects of music based on individual preferences, mood, and solfeggio frequencies, aiming to assist music therapists in personalized treatment. Shen [11] introduced a particle swarm optimization-based emotion classification model to analyze the impact of interactive music appreciation on college students' mental health. However, these approaches predominantly target monolingual datasets and have not been applied in linguistically diverse contexts.

2.2. Models in Text Classification

Various models have been explored for music emotion recognition. For instance, Agrawal et al. [12] use a transformer-based XLNet model to improve music emotion recognition through lyrics analysis, with applications to emotion-based playlist generation and recommendation systems. Among transformer-based models, BERT (Bidirectional Encoder Representations from Transformers) has demonstrated strong performance in NLP tasks such as text classification. The use of pre-trained language models, as shown by Devlin et al. [13], has significantly reduced the need for extensive labeled datasets. Variants of BERT, such as RoBERTa and DistilBERT, have been fine-tuned for domain-specific tasks, further extending their adaptability. In the context of music classification, Pizarro et al. [14] used DistilBERT for genre classification, success prediction, and release year estimation. Closest to the purpose of our work, Revathy et al. [15] developed an emotion classification model, LyEmoBERT, that analyzes song lyrics to predict one of four emotions: happy, sad, relaxed, or angry. However, these studies often neglect the challenges posed by multilingual and code-switched texts, which are prevalent in global music datasets.

2.3. Contribution of This Work

While prior studies in music classification and emotion recognition have made significant strides, they predominantly focus on monolingual datasets and audio features. Our work addresses the multilingual aspect by classifying emotions from song lyrics across multiple languages using the BERT model, designed to handle the complexities of multilingual datasets, offering a solution that bridges the gap between linguistic diversity and emotion recognition in music. Introducing a multilingual perspective expands the potential applications of emotion-aware music recommendation systems and music therapy tools.

3. Methodology

3.1. Data Collection

To conduct sentiment analysis on Chinese and English song lyrics, we collected relevant data from the following sources:

3.1.1. Chinese Lyrics Sources

Chinese lyrics were gathered from Spotify to obtain a diverse representation of language and emotional expression within the dataset. A total of 150,000 lyrics were collected, covering a wide variety of musical styles and emotional types.

3.1.2. English Lyrics Sources

We used a dataset from GitHub, which holds over 1,000 English song entries with the variables artist, genre, title, album, year, and lyrics [16]. For our project, we used only the lyrics variable.

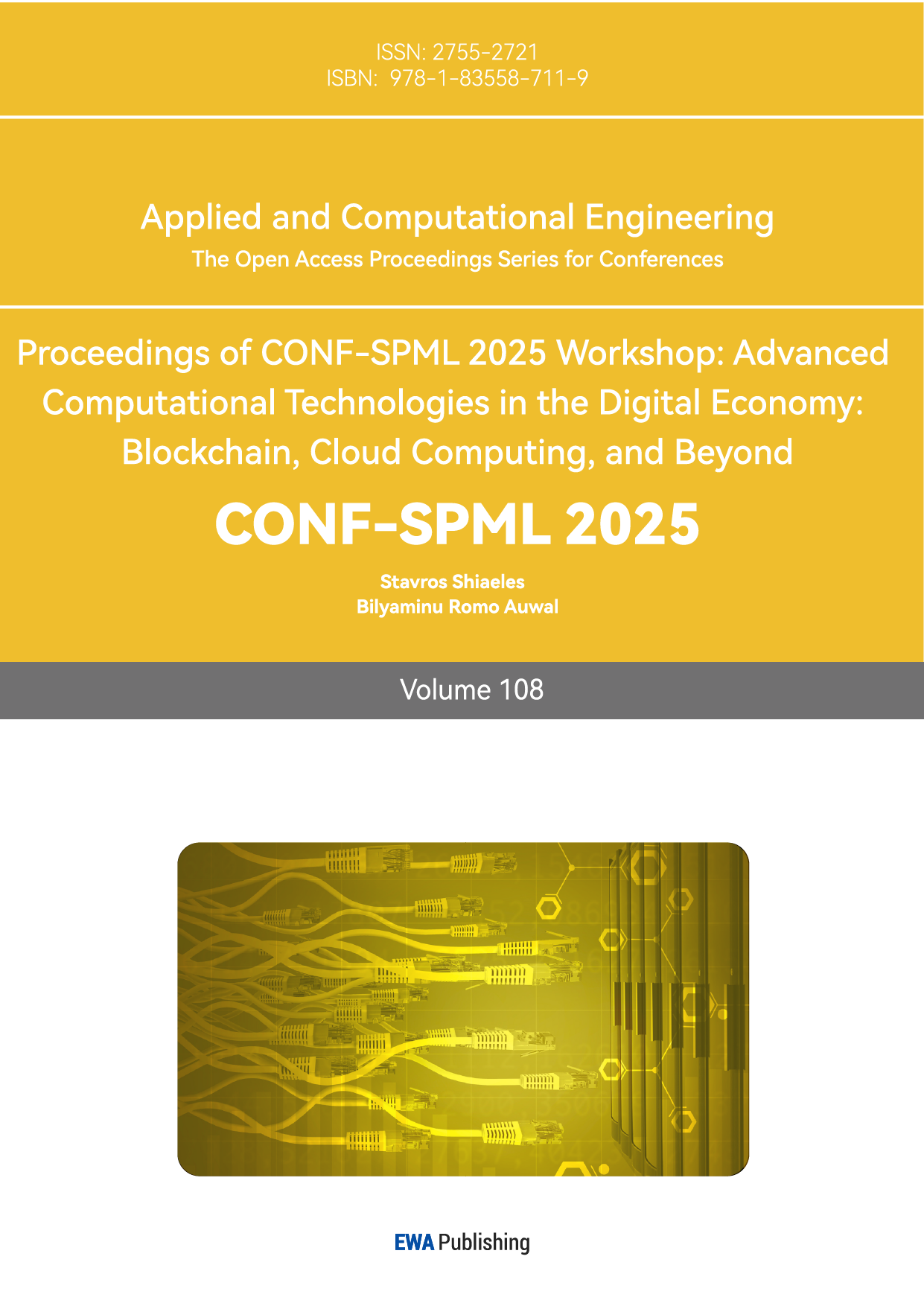

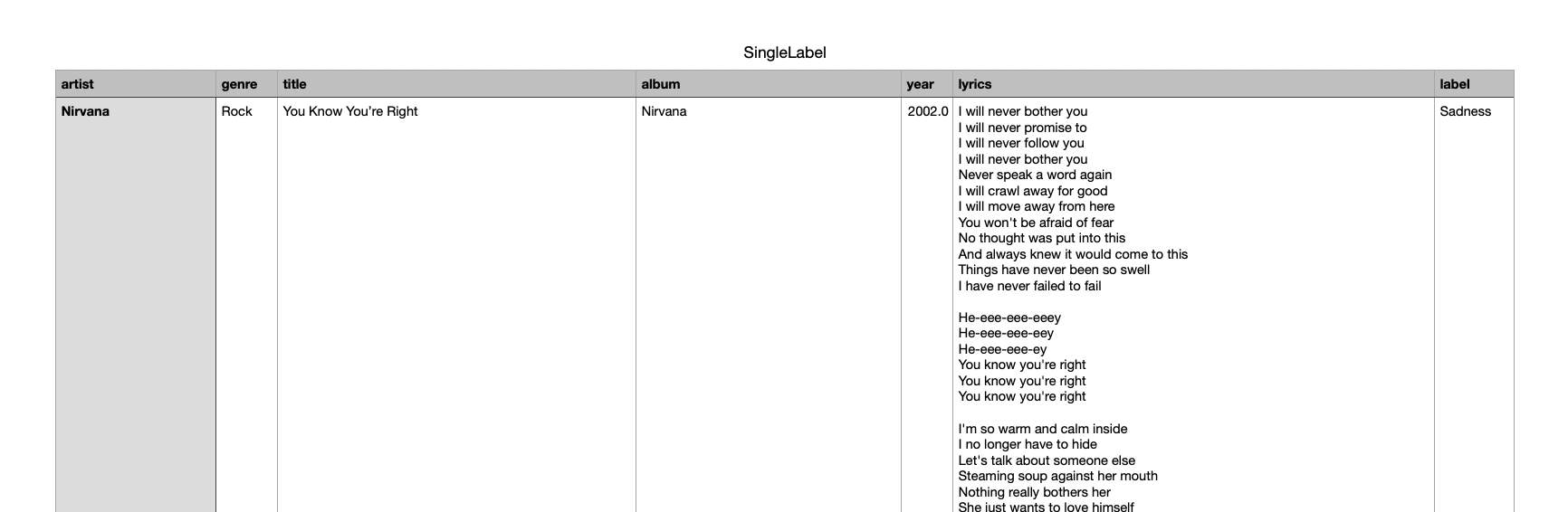

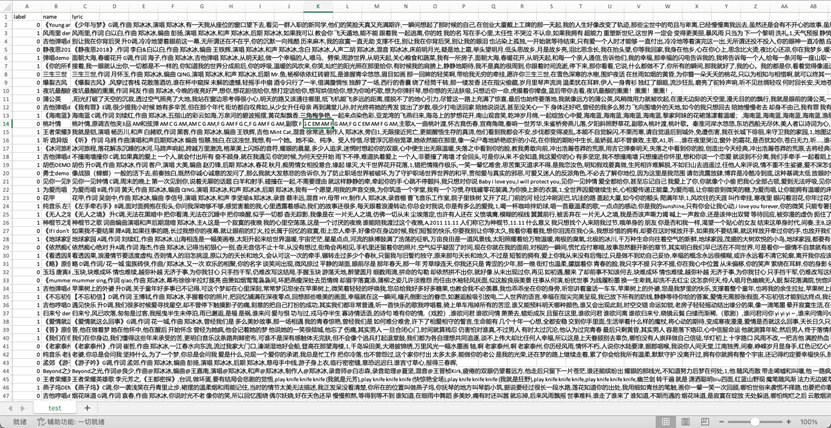

In “SingleLabel” data, as shown in Figure 1, the songs are annotated with emotion labels in 3 categories: sadness, tenderness, and tension. In “OriginalAnnotation” data, as shown in Figure 2, the songs are annotated with Amazement, Calmness, Joyful activation, Nostalgia, Power, Sadness, Solemnity, Tenderness, and Tension.

Figure 1: Single Labeled English Data

Figure 2: Multi Labeled English Data

3.2. Data Preprocessing [1]

Single Labeled English lyrics are tokenized using a BERT tokenizer, which transforms them into token sequences and attention masks and truncates each lyric to at most 512 tokens (the maximum length). Each emotion label (sadness, tenderness, tension) is converted into a numerical label (0, 1, or 2).
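The label encoding and length limit described above can be sketched in plain Python. This is a minimal illustration, not the authors' code: the label order in `LABEL_TO_ID`, the helper names, and the padding ID of 0 (the `[PAD]` ID in the standard `bert-base-uncased` vocabulary) are assumptions, and a real BERT tokenizer additionally keeps the closing `[SEP]` token when it truncates.

```python
# Assumed label order; the text only states that the three labels map to 0, 1, 2.
LABEL_TO_ID = {"sadness": 0, "tenderness": 1, "tension": 2}
MAX_LEN = 512  # maximum sequence length stated in the text
PAD_ID = 0     # assumed padding ID


def encode_label(label):
    """Map an emotion name to its integer class ID."""
    return LABEL_TO_ID[label.strip().lower()]


def pad_or_truncate(token_ids, max_len=MAX_LEN, pad_id=PAD_ID):
    """Return (input_ids, attention_mask), each exactly max_len long."""
    kept = token_ids[:max_len]                      # drop tokens beyond the limit
    n_pad = max_len - len(kept)                     # how many pad slots remain
    input_ids = kept + [pad_id] * n_pad
    attention_mask = [1] * len(kept) + [0] * n_pad  # 1 = real token, 0 = padding
    return input_ids, attention_mask
```

The attention mask lets the model ignore padded positions, so short and long lyrics can share one fixed input size.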

For Multi Labeled English lyrics, the emotional labels are binarized (1 for presence of emotion type, 0 for absence) across 9 classes: Amazement, Calmness, Joyful activation, Nostalgia, Power, Sadness, Solemnity, Tenderness, and Tension. A new data frame is created with two columns: the song lyrics (text) and their corresponding binarized labels (labels). The lyrics are tokenized using the DistilBertTokenizer, which converts text into input IDs and attention masks. The maximum token length is set to 128 to ensure uniform input size.
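As a rough illustration of the binarization step, the sketch below turns a list of annotated emotions into a nine-element 0/1 vector. The column order follows the order in which the text lists the classes; the function name `binarize` is hypothetical.

```python
# The nine classes, in the order the text lists them (assumed column order).
EMOTIONS = [
    "Amazement", "Calmness", "Joyful activation", "Nostalgia", "Power",
    "Sadness", "Solemnity", "Tenderness", "Tension",
]


def binarize(active_emotions):
    """Return a multi-hot vector: 1 where the emotion is present, else 0."""
    active = set(active_emotions)
    unknown = active - set(EMOTIONS)
    if unknown:
        raise ValueError(f"unknown emotion labels: {sorted(unknown)}")
    return [1 if emotion in active else 0 for emotion in EMOTIONS]


# Example: a song annotated with Nostalgia and Sadness.
example_vector = binarize(["Sadness", "Nostalgia"])
```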

The collected Chinese lyrics underwent several preprocessing steps:

• Data Cleaning: Noise elements such as platform watermarks and advertisements were removed to focus the sentiment analysis on the lyrical content.

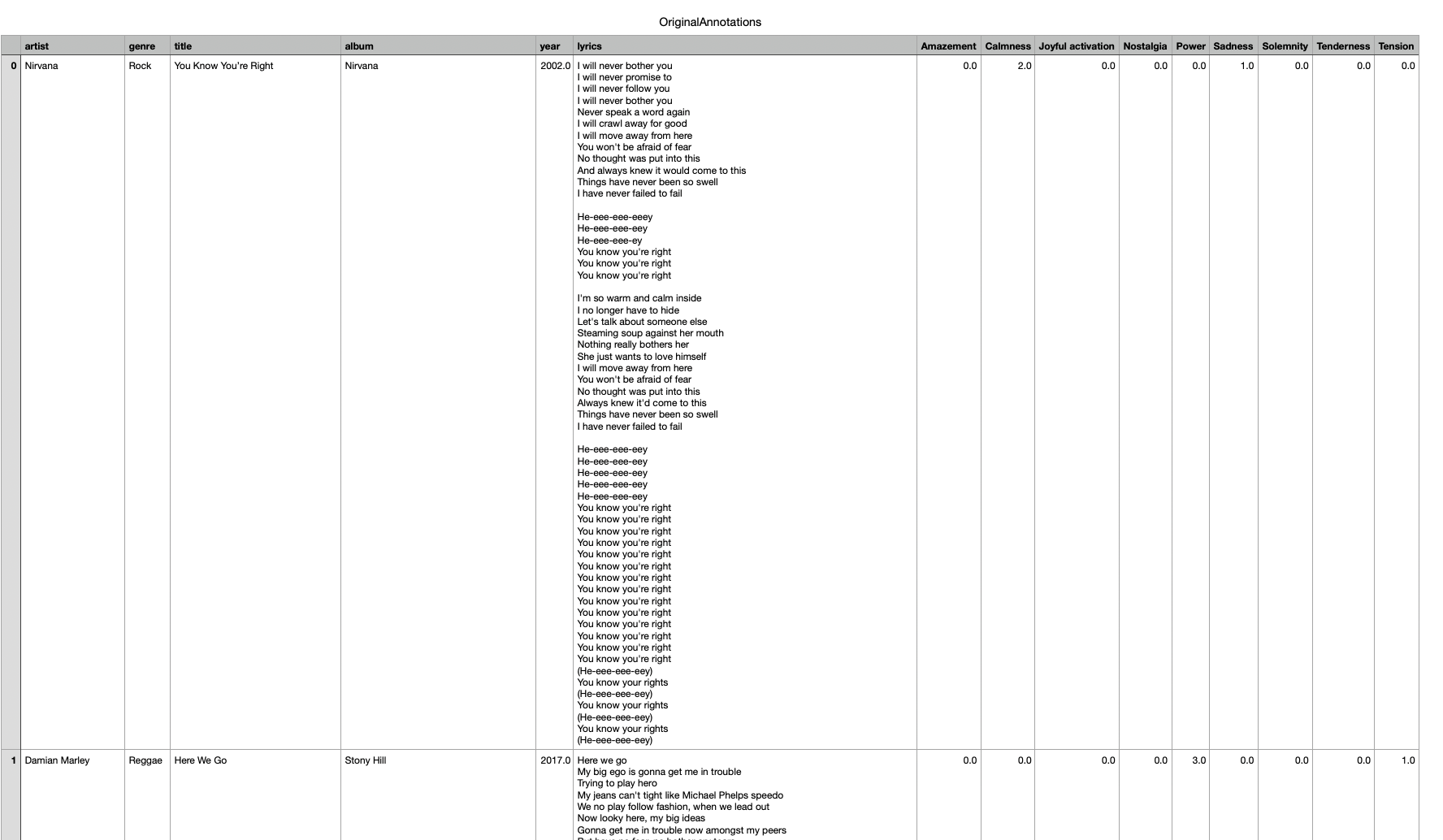

• MySQL Storage: The cleaned data (raw lyrics) were stored in a MySQL database for effective management (Figure 3). The storage allowed for easy retrieval, manipulation, and updates. The specific steps included:

1. Storing cleaned lyrics in a MySQL database, using an appropriate schema to save both Chinese and English lyrics along with metadata (e.g., song title, artist, language type).

2. Database fields included: id (song ID), name (song title), singer (artist), lyric (lyrical content), and label (emotion label).

Figure 3: MySQL Storage

• Data Export: Lyrics were exported in the required format for deep learning training using SQL queries. Data was exported in CSV format, with each line containing the lyrical content and corresponding sentiment label (Figure 4).

Figure 4: Data Export

• Tokenization: Lyrics were tokenized using Jieba to enable word-level processing for model training.

• Stopword Removal: Irrelevant words, such as common Chinese particles and English articles, were removed using a stopword list.
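The storage and export steps above can be sketched as follows. To keep the example self-contained, Python's built-in `sqlite3` module stands in for MySQL; the table name `songs`, the sample rows, and the helper `export_csv` are hypothetical, while the column names follow the database fields listed above.

```python
import csv
import io
import sqlite3

# In-memory SQLite database used here as a stand-in for the MySQL store.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE songs ("
    "id INTEGER PRIMARY KEY, name TEXT, singer TEXT, lyric TEXT, label TEXT)"
)

# Placeholder rows; real entries would hold cleaned lyrics and their labels.
rows = [
    (1, "Song A", "Artist A", "placeholder lyric one", "sadness"),
    (2, "Song B", "Artist B", "placeholder lyric, with a comma", "tension"),
]
conn.executemany("INSERT INTO songs VALUES (?, ?, ?, ?, ?)", rows)


def export_csv(connection):
    """Export (lyric, label) pairs as CSV text, one song per line."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["lyric", "label"])
    query = "SELECT lyric, label FROM songs ORDER BY id"
    for lyric, label in connection.execute(query):
        writer.writerow([lyric, label])  # csv quotes fields containing commas
    return buffer.getvalue()


csv_text = export_csv(conn)
```

Using the `csv` module rather than joining strings by hand matters for lyrics, which often contain commas and quotation marks.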

3.3. Tools and Models

3.3.1. BERT

Since Transformer models cannot process raw text directly, we use the BERT tokenizer to convert the text input into numbers that the model can process. This process is called tokenization, and it is responsible for:

• Splitting the input into words, subwords, or symbols (like punctuation) that are called tokens

• Mapping each token to an integer ID

• Adding additional inputs that may be useful to the model

The resulting list of IDs for each sentence is converted to tensors and passed to BERT.
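The three tokenization steps can be illustrated with a toy greedy longest-match subword tokenizer in the style of WordPiece. This is a sketch under stated assumptions: the nine-entry vocabulary and its IDs are invented for the example (real BERT vocabularies hold roughly 30,000 entries with different IDs), and the function names are hypothetical.

```python
import re

# Toy vocabulary, invented for this example.
VOCAB = {"[PAD]": 0, "[UNK]": 1, "[CLS]": 2, "[SEP]": 3,
         "sing": 4, "##ing": 5, "a": 6, "song": 7, "!": 8}


def wordpiece(word):
    """Greedy longest-match-first split of one word into subword tokens."""
    pieces, start = [], 0
    while start < len(word):
        end, match = len(word), None
        while start < end:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate  # marks a word-internal piece
            if candidate in VOCAB:
                match = candidate
                break
            end -= 1
        if match is None:
            return ["[UNK]"]                  # no piece fits: whole word unknown
        pieces.append(match)
        start = end
    return pieces


def encode(text, max_len=8):
    """Step 1: split; step 2: map to IDs; step 3: add mask and padding."""
    words = re.findall(r"\w+|[^\w\s]", text.lower())
    body = [piece for word in words for piece in wordpiece(word)]
    tokens = ["[CLS]"] + body[:max_len - 2] + ["[SEP]"]
    ids = [VOCAB[token] for token in tokens]
    n_pad = max_len - len(ids)
    return {"tokens": tokens,
            "input_ids": ids + [VOCAB["[PAD]"]] * n_pad,
            "attention_mask": [1] * len(ids) + [0] * n_pad}


encoded = encode("Singing a song!")
```

Here "singing" is not in the vocabulary as a whole word, so it is split into `sing` and `##ing`; the attention mask is the "additional input" that marks which positions hold real tokens.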

3.3.2. Deep Learning Models

We employed several deep-learning models for sentiment analysis:

• Convolutional Neural Network (CNN): Primarily used for extracting local emotional features from shorter sentences.

• Long Short-Term Memory (LSTM): Excels at processing long textual sequences, capturing emotional flows and long-term dependencies.

• Gated Recurrent Unit (GRU): Similar to LSTM but with a simplified structure for efficient computation, suitable for large lyric datasets.

• GRU + CNN Combination Model: Integrates GRU's sequential modeling capability with CNN's feature extraction to enhance sentiment classification accuracy.
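To make the "simplified structure" of the GRU concrete, the following sketch computes a single GRU time step in plain Python. It uses two gates (update and reset) where an LSTM uses three gates plus a separate cell state. The parameter layout, the names, and the gate convention (`h = (1 - z) * h_prev + z * h_cand`) are assumptions; libraries differ on which side of the interpolation `z` multiplies, and this is not the authors' implementation.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


def gru_step(x, h_prev, p):
    """One GRU time step.

    x      : input vector at this time step
    h_prev : previous hidden state
    p      : dict with W_g (input weights), U_g (hidden weights) and
             b_g (bias) for each g in {"z", "r", "h"}
    """
    def affine(gate, hidden):
        wx = matvec(p["W_" + gate], x)
        uh = matvec(p["U_" + gate], hidden)
        return [a + b + c for a, b, c in zip(wx, uh, p["b_" + gate])]

    z = [sigmoid(a) for a in affine("z", h_prev)]         # update gate
    r = [sigmoid(a) for a in affine("r", h_prev)]         # reset gate
    reset_hidden = [ri * hi for ri, hi in zip(r, h_prev)]
    h_cand = [math.tanh(a) for a in affine("h", reset_hidden)]  # candidate state
    # Interpolate between the old state and the candidate state.
    return [(1 - zi) * hi + zi * ci for zi, hi, ci in zip(z, h_prev, h_cand)]
```

In a GRU + CNN combination, the sequence of hidden states produced by repeated calls to such a step would typically be passed to convolution and pooling layers before classification.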

3.3.3. GRU + CNN Embedding

To improve context understanding of lyrics, we introduced the GRU + CNN model. Its bidirectional encoder captures nuanced emotional expressions from the lyrical context, making it particularly effective for multilingual sentiment analysis. The model's pre-trained universal language understanding capability allows it to handle translated lyrics efficiently, preserving emotional information.

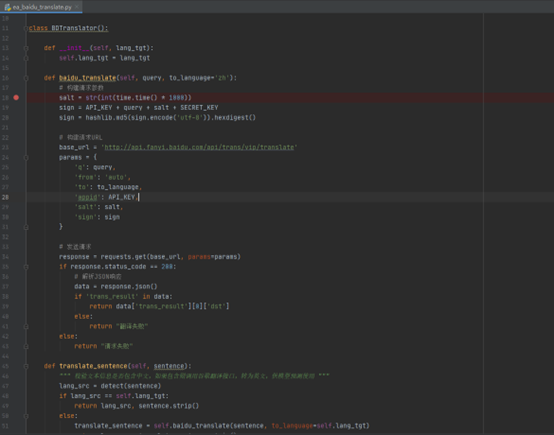

3.4. Translation Processing

For handling Chinese lyrics, we utilized the Baidu Translate API, which leverages neural network translation technology for efficient and accurate translations, ensuring the retention of original emotional content. The API call code for translation is shown in Figure 5.

Figure 5: API Call Code for Translation

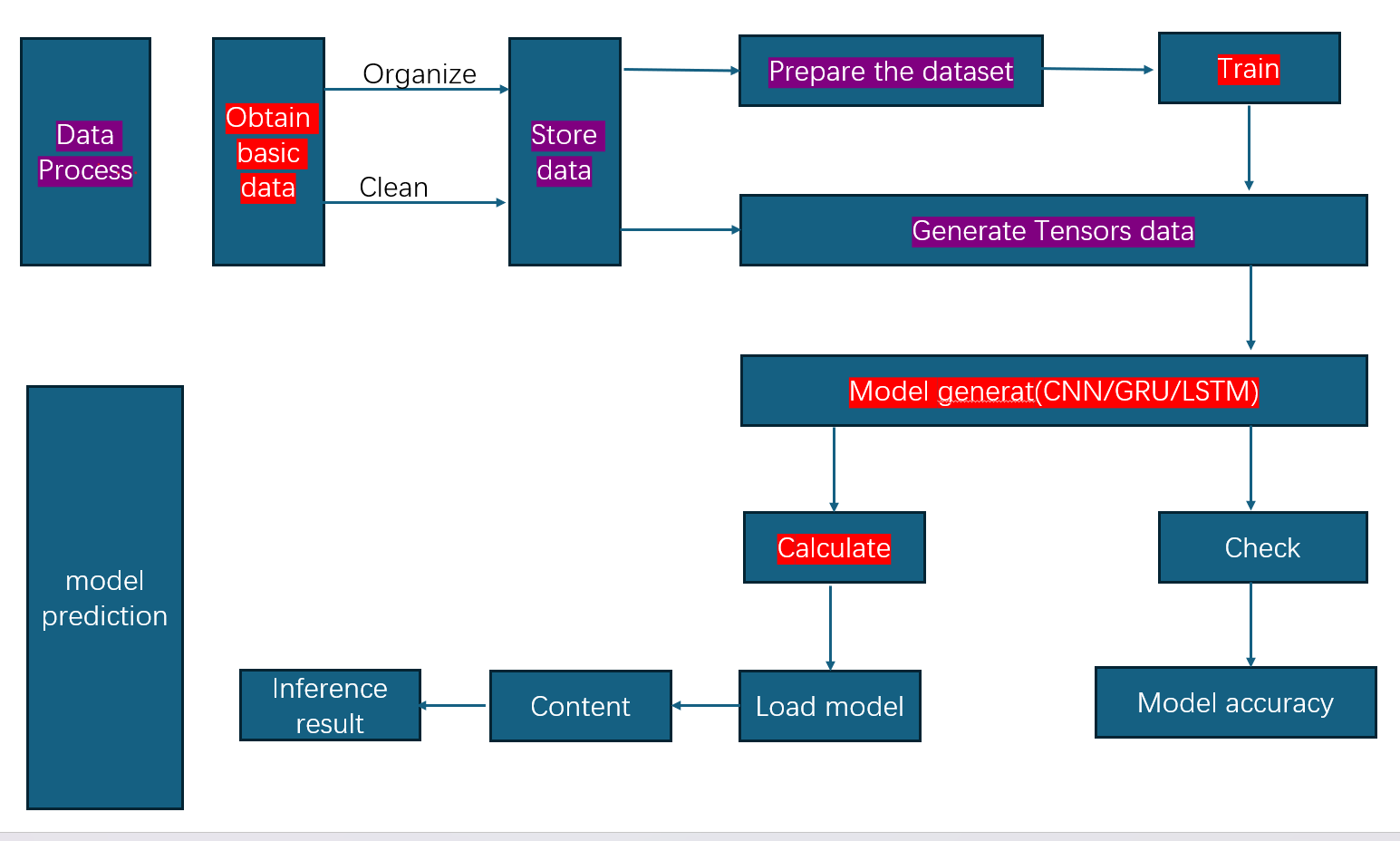

Figure 6: Flowchart of Sentiment Analysis

The flowchart in Figure 6 illustrates the sentiment analysis workflow. First, the data is cleaned and processed into tensors for model use. Models such as CNN, GRU, or LSTM are then trained and validated for sentiment classification, and finally produce inference results.

4. Experiments and Evaluation

4.1. English Lyrics

4.1.1. Single Labeled

We used a pre-trained BERT model, which learns contextual relationships between words in the lyrics. After BERT processes a lyric, the pooled output (a single vector representing the whole lyric) is passed through a dropout layer (to reduce overfitting) and a final linear layer that classifies the lyric into one of the three emotion classes.

For Training and Evaluation, we utilized the following:

• Optimizer: AdamW optimizer with a learning rate scheduler to adjust the learning rate over time

• Loss Function: Cross-entropy loss is used to measure the difference between the predicted and actual labels

• Training process: In each epoch, lyrics and their corresponding labels are fed to the model, and backpropagation with gradient descent is used to minimize the loss function

• Validation: After each epoch, the model is evaluated on a separate validation dataset, tracking accuracy and loss to monitor overfitting and generalization
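For reference, the cross-entropy loss named in the list above can be computed from the classifier's raw outputs (logits) as in this minimal sketch. The function names are illustrative; in practice a deep learning framework's built-in loss would be used.

```python
import math


def softmax(logits):
    """Convert logits to probabilities; subtracting the max avoids overflow."""
    peak = max(logits)
    exps = [math.exp(value - peak) for value in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits, target):
    """Negative log-probability assigned to the true class index."""
    return -math.log(softmax(logits)[target])


def mean_cross_entropy(batch_logits, targets):
    """Average loss over a batch, the quantity minimized during training."""
    losses = [cross_entropy(l, t) for l, t in zip(batch_logits, targets)]
    return sum(losses) / len(losses)
```

With three classes and uninformative logits such as `[0, 0, 0]`, the loss equals ln 3 (about 1.0986), a useful baseline when reading training curves: a loss near this value means the model is doing no better than guessing uniformly.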

4.1.2. Multi Labeled data

We used pre-trained DistilBERT as the base to extract features from the lyrics. On top of DistilBERT, a custom classification head is added, consisting of:

• A pre-classifier linear layer

• A dropout layer to prevent overfitting

• A final classifier layer that outputs logits for the nine emotion categories

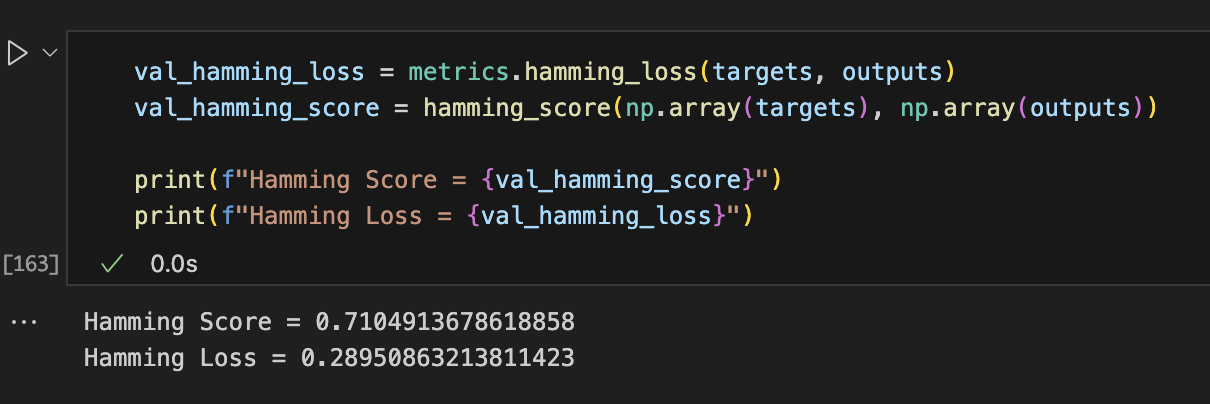

After the training, we used the Hamming Score to measure the performance.
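The text does not define the Hamming score, so the sketch below uses one common definition as an assumption: for each song, the number of labels that are both true and predicted divided by the number that are true or predicted, averaged over songs. Some sources instead use 1 minus the Hamming loss. The 0.5 sigmoid threshold and the function names are also assumptions.

```python
import math


def predict_labels(logits, threshold=0.5):
    """Apply a sigmoid to each logit and threshold it to a 0/1 label."""
    return [1 if 1.0 / (1.0 + math.exp(-v)) >= threshold else 0 for v in logits]


def hamming_score(y_true, y_pred):
    """Mean per-sample |true AND pred| / |true OR pred| over 0/1 label vectors."""
    scores = []
    for true_row, pred_row in zip(y_true, y_pred):
        both = sum(1 for t, p in zip(true_row, pred_row) if t == 1 and p == 1)
        either = sum(1 for t, p in zip(true_row, pred_row) if t == 1 or p == 1)
        # If nothing is true and nothing is predicted, count it as a perfect match.
        scores.append(1.0 if either == 0 else both / either)
    return sum(scores) / len(scores)
```

Unlike exact-match accuracy, this score gives partial credit when a song's predicted label set overlaps with, but does not equal, its annotated set.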

4.2. Chinese Lyrics

To validate the effectiveness of this methodology, we translated the bilingual lyric dataset and input it into CNN, LSTM, GRU, and GRU + CNN models for training. Model performance was assessed using the following metrics:

• Data Size: Length of the text.

• Accuracy: Proportion of correctly classified results.

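Experimental results indicated that, at the larger data size, the GRU + CNN model achieved the highest accuracy in sentiment classification of translated lyrics (78.58%), narrowly ahead of LSTM (78.53%), GRU (78.47%), and CNN (78.32%). At the smaller data size, GRU scored highest (73.90%) and GRU + CNN second (73.57%). Table 1 compares the GRU + CNN model with the other models.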

Table 1: Comparison [3]

| Neural Network | Size | Accuracy (%) |
| --- | --- | --- |
| CNN | 1,000 | 72.75 |
| LSTM | 1,000 | 72.87 |
| GRU | 1,000 | 73.90 |
| GRU + CNN | 1,000 | 73.57 |
| CNN | 10,000 | 78.32 |
| LSTM | 10,000 | 78.53 |
| GRU | 10,000 | 78.47 |
| GRU + CNN | 10,000 | 78.58 |

Based on the comparison results in Table 1, we selected the GRU + CNN model as the final predictive model.

5. Results and Discussion

5.1. English Lyrics

As Table 2 and Figure 7 show, the BERT-based sentiment classification model achieved moderate performance on the Single Labeled dataset across the three sentiment classes "sadness," "tenderness," and "tension." The overall accuracy was 67%, meaning about two-thirds of the 116 test samples were correctly classified. Recall varied widely by class, from 0.84 for sadness to 0.38 for tension.

Table 2: Single Labeled English Data

| Class | Precision | Recall | F1-score | Support |
| --- | --- | --- | --- | --- |
| sadness | 0.68 | 0.84 | 0.75 | 63 |
| tenderness | 0.62 | 0.55 | 0.58 | 29 |
| tension | 0.75 | 0.38 | 0.50 | 24 |
| accuracy | - | - | 0.67 | 116 |
| macro avg | 0.68 | 0.59 | 0.61 | 116 |
| weighted avg | 0.68 | 0.67 | 0.66 | 116 |
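The summary rows of Table 2 can be reproduced from its per-class rows, which also shows how the macro and weighted averages differ. The sketch below uses only the rounded values printed in the table, so it is an approximate consistency check rather than a recomputation from raw predictions.

```python
# Per-class (precision, recall, f1, support) as printed in Table 2.
rows = {
    "sadness": (0.68, 0.84, 0.75, 63),
    "tenderness": (0.62, 0.55, 0.58, 29),
    "tension": (0.75, 0.38, 0.50, 24),
}

total_support = sum(r[3] for r in rows.values())

# Macro average: every class counts equally, regardless of size.
macro = [sum(r[i] for r in rows.values()) / len(rows) for i in range(3)]

# Weighted average: each class counts in proportion to its support.
weighted = [sum(r[i] * r[3] for r in rows.values()) / total_support
            for i in range(3)]

# F1 is the harmonic mean of precision and recall.
f1_from_pr = {name: 2 * p * r / (p + r) for name, (p, r, _, _) in rows.items()}

macro_rounded = [round(v, 2) for v in macro]
weighted_rounded = [round(v, 2) for v in weighted]
```

The macro recall (0.59) sits well below the weighted recall (0.67) because the largest class, sadness, is also the one the model recalls best; the weighted recall coincides with the overall accuracy.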

For the DistilBERT model applied to the Multi Labeled data, we used the Hamming score as the evaluation metric; the model achieved a score of 71%.

Figure 7: Single Labeled English Data

5.2. Chinese Lyrics

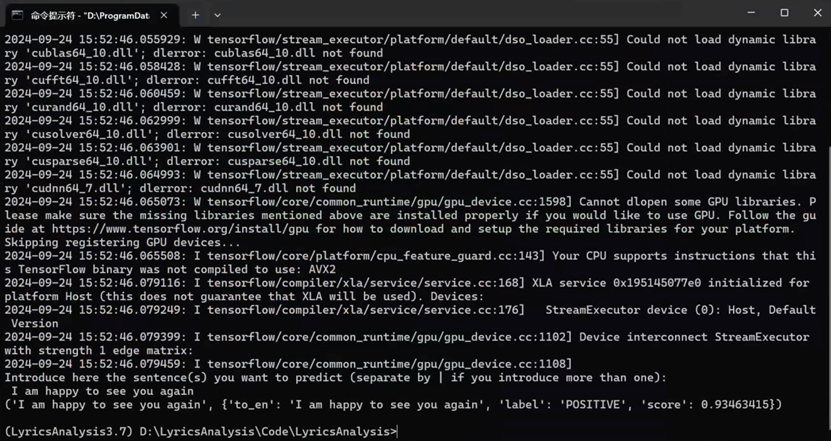

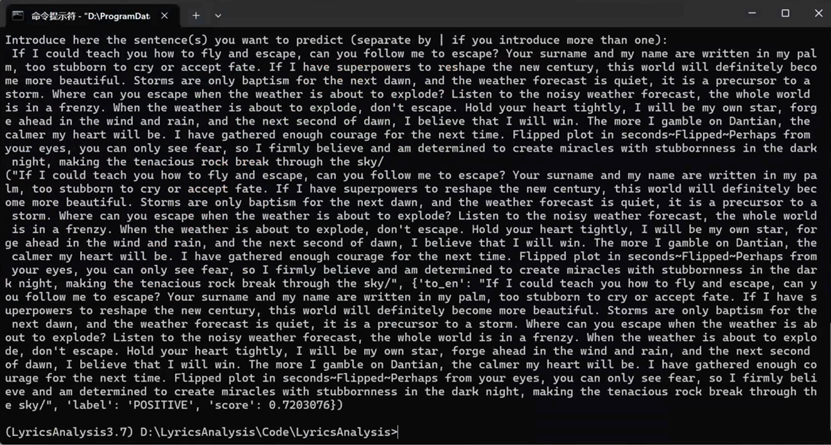

Figure 8: Short Sentences in English

Figure 9: Long Sentences in English

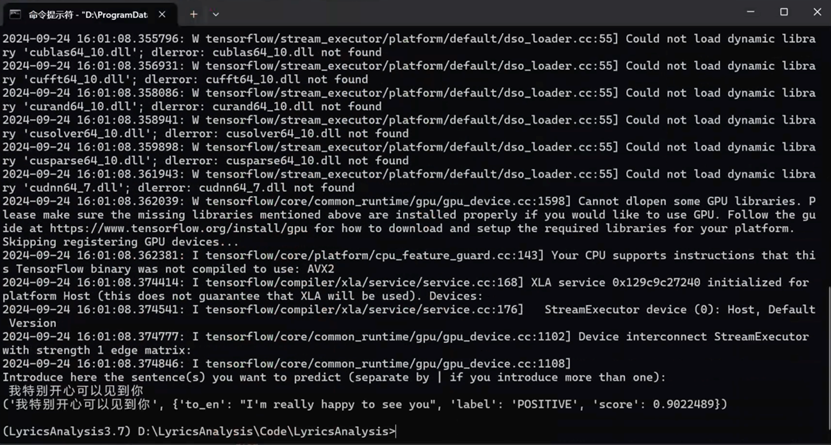

Figure 10: Short Sentences in Chinese

As shown in Figures 8-10, the experimental outcomes demonstrate that the inclusion of a translation processing module significantly enhances cross-lingual sentiment analysis accuracy. Particularly in handling complex emotional expressions and semantic transformations, the GRU + CNN model exhibited robust emotional capture capabilities. While CNN and LSTM excel in single-language sentiment analysis, the GRU + CNN model proved more advantageous in multilingual contexts. Moreover, the GRU + CNN model maintained stable performance for both long and short sentences. [3]

6. Conclusion

This research demonstrates the effectiveness of using BERT models for emotion classification in multilingual song lyrics, with a focus on enhancing personalized music therapy practices. The GRU + CNN hybrid model proved to be the most effective in accurately classifying emotions across both English and Chinese datasets, outperforming traditional models like CNN and LSTM, particularly in cross-lingual contexts. These findings suggest that advanced deep learning techniques can significantly contribute to improving sentiment analysis in multilingual settings. Future work could explore expanding the emotion categories, integrating more diverse multilingual datasets, and refining the models further to improve classification accuracy and real-world applicability in therapeutic practices.

Acknowledgement

Yiyang Hong and Yuqi Xue contributed equally to this work and should be considered co-first authors.

References

[1]. Cleveland Clinic. “Music Therapy: What Is It, Types & Treatment.” Cleveland Clinic, Cleveland Clinic, 18 July 2023, my.clevelandclinic.org/health/treatments/8817-music-therapy.

[2]. Tiemann, Julie. “Music Therapy Intervention Series: Lyric Discussion.” Metro Music Makers, 25 Nov. 2019, www.metromusicmakers.com/2019/11/music-therapy-intervention-series-lyric-discussion/.

[3]. Poria, S., Gelbukh, A., Hussain, A., Bandyopadhyay, S., & Howard, N. (2013). Music Genre Classification: A Semi-supervised Approach. Mexican Conference on Pattern Recognition.

[4]. Elbir, Ahmet, and Nizamettin Aydin. 2020. “Music Genre Classification and Music Recommendation by Using Deep Learning.” Electronics Letters, March. https://doi.org/10.1049/el.2019.4202.

[5]. Ma, Xundong. 2023. “Music Genre Classification Based on Machine Learning Methods.” Highlights in Science, Engineering and Technology 34 (February): 168–75. https://doi.org/10.54097/hset.v34i.5443.

[6]. Ritika Dhyani. 2023. “Song Classification Using Machine Learning.” International Journal for Research in Applied Science and Engineering Technology 11 (4): 3760–64. https://doi.org/10.22214/ijraset.2023.50890.

[7]. Jamdar, Adit, Jessica Abraham, Karishma Khanna, and Rahul Dubey. 2015. “Emotion Analysis of Songs Based on Lyrical and Audio Features.” International Journal of Artificial Intelligence & Applications 6 (3): 35–50. https://doi.org/10.5121/ijaia.2015.6304.

[8]. Panda, Renato, Bruno Rocha, and Rui Pedro Paiva. 2015. “Music Emotion Recognition with Standard and Melodic Audio Features.” Applied Artificial Intelligence 29 (4): 313–34. https://doi.org/10.1080/08839514.2015.1016389.

[9]. Na, Wang, and Fang Yong. 2022. “Music Recognition and Classification Algorithm Considering Audio Emotion.” Scientific Programming 2022 (January): e3138851. https://doi.org/10.1155/2022/3138851.

[10]. Modran, Horia Alexandru, Tinashe Chamunorwa, Doru Ursuțiu, Cornel Samoilă, and Horia Hedeșiu. 2023. “Using Deep Learning to Recognize Therapeutic Effects of Music Based on Emotions.” Sensors 23 (2): 986. https://doi.org/10.3390/s23020986.

[11]. Shen, Qiangwei. 2023. “The Influence of Music Teaching Appreciation on the Mental Health of College Students Based on Multimedia Data Analysis.” PeerJ Computer Science 9 (September): e1589–89. https://doi.org/10.7717/peerj-cs.1589.

[12]. Agrawal, Yudhik, Ramaguru Guru Ravi Shanker, and Vinoo Alluri. 2021. “Transformer-Based Approach towards Music Emotion Recognition from Lyrics.” Lecture Notes in Computer Science, 167–75. https://doi.org/10.1007/978-3-030-72240-1_12.

[13]. Devlin, Jacob, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. 2019. “BERT: Pre-Training of Deep Bidirectional Transformers for Language Understanding.” ArXiv.org. May 24, 2019. https://doi.org/10.48550/arXiv.1810.04805.

[14]. Martinez, Servando Pizarro, Moritz Zimmermann, Miguel Serkan Offermann, and Florian Reither. 2024. “Exploring Genre and Success Classification through Song Lyrics Using DistilBERT: A Fun NLP Venture.” ArXiv (Cornell University), July. https://doi.org/10.48550/arxiv.2407.21068.

[15]. Revathy, V R, Anitha S Pillai, and Fatemah Daneshfar. 2023. “LyEmoBERT: Classification of Lyrics’ Emotion and Recommendation Using a Pre-Trained Model.” Procedia Computer Science 218 (January): 1196–1208. https://doi.org/10.1016/j.procs.2023.01.098.

[16]. Imdiptanu. 2019. “GitHub - Imdiptanu/Lyrics-Emotion-Detection: Single-Label and Multi-Label Classifiers to Detect Emotions in Lyrics Achieved 0.65 and 0.82 F1 Scores Respectively.” GitHub. 2019. https://github.com/imdiptanu/lyrics-emotion-detection/tree/master.

Cite this article

Hong,Y.;Xue,Y. (2025). Emotion Classification through Song Lyrics in Multi-Languages with Bert. Applied and Computational Engineering,108,59-68.

Data availability

The datasets used and/or analyzed during the current study will be available from the authors upon reasonable request.


About volume

Volume title: Proceedings of the 5th International Conference on Signal Processing and Machine Learning

© 2024 by the author(s). Licensee EWA Publishing, Oxford, UK. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.
