1. Introduction

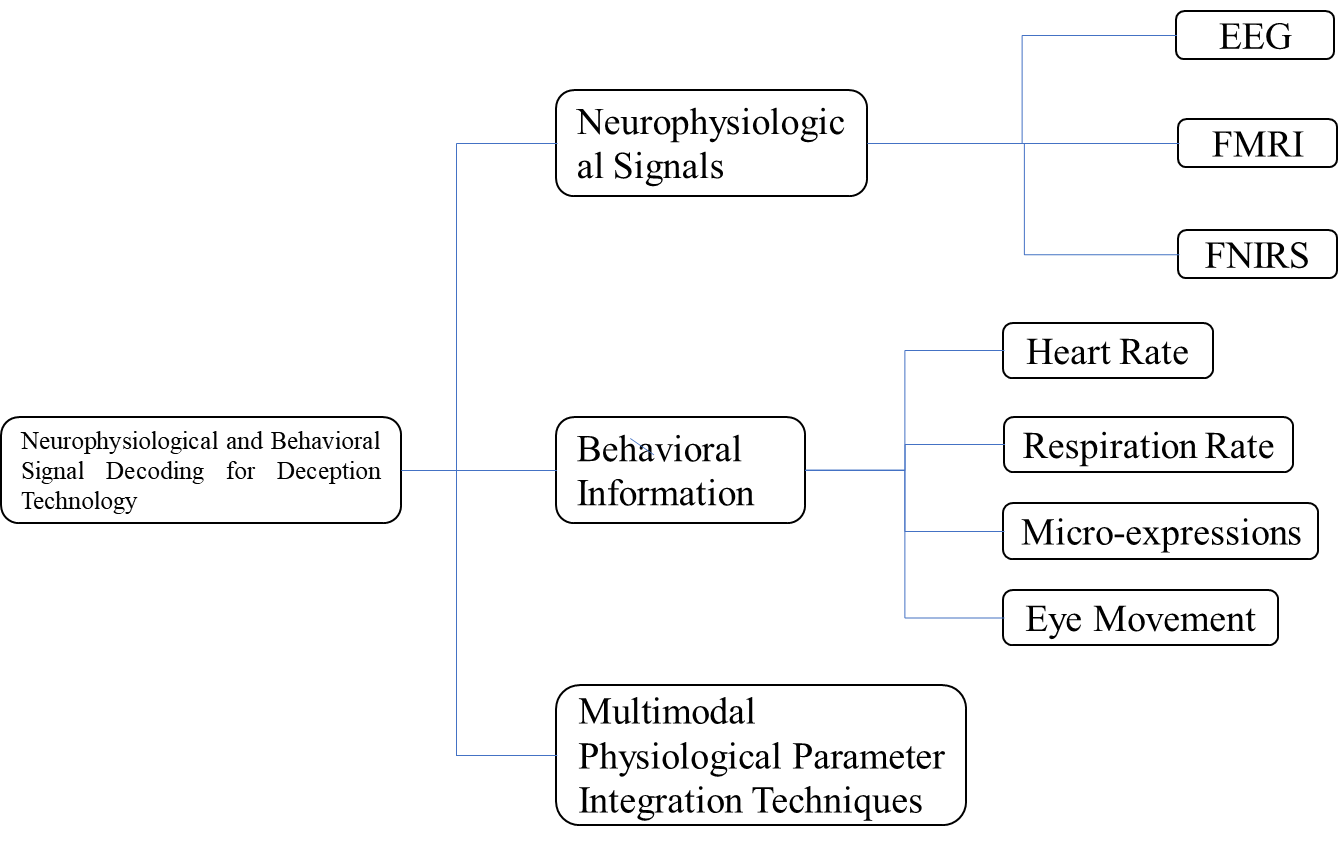

Lie detection is in high demand in fields such as judicial interrogation, national security, and psychological assessment. However, traditional lie detection technologies such as the polygraph rely on peripheral physiological signals like heart rate and respiration rate, which are susceptible to individual differences, environmental interference, and countermeasures, limiting their accuracy. In recent years, rapid advances in neuroscience and artificial intelligence have driven innovation in lie detection. Neurophysiological signals (e.g., EEG, fMRI, fNIRS) shed light on the mechanisms of lying by revealing cognitive conflict in the brain (e.g., the P300 wave), prefrontal cortex activation (BOLD signals), and hemodynamic changes. Behavioral physiological signals (e.g., heart rate, respiration rate, eye movement patterns, and micro-expressions) compensate for the limited scene adaptability of neuroimaging by quantifying autonomic stress responses, emotional fluctuations, and cognitive load. Multimodal fusion combines the high temporal resolution of neural signals such as EEG with the dynamic information carried by behavioral physiological signals (e.g., EEG + eye movement, fNIRS + heart rate) and uses deep learning models (e.g., LSTM networks and cross-modal attention mechanisms) to improve detection accuracy and robustness. Nevertheless, challenges remain, including the high cost of neuroimaging equipment, environmental interference with behavioral physiological signals, and the heterogeneity of multimodal data.
To address these challenges, this paper systematically reviews progress in lie detection research based on neurophysiological and behavioral physiological signals (Figure 1), focusing on technological breakthroughs in multimodal fusion and artificial-intelligence-driven approaches, with the aim of providing theoretical support and technical pathways for developing high-precision, practical lie detection systems.
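The cross-modal attention mechanisms mentioned above can be illustrated with a minimal NumPy sketch of scaled dot-product attention in which EEG time windows act as queries over eye-tracking windows. The feature sizes, the random (untrained) projection matrices `w_q`, `w_k`, `w_v`, and the synthetic data are assumptions for illustration; this is not a specific published model.

```python
import numpy as np

def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    # Subtract the row maximum for numerical stability before exponentiating.
    z = x - x.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def cross_modal_attention(eeg, eye, w_q, w_k, w_v):
    """EEG time steps (queries) attend over eye-movement time steps (keys/values).

    eeg: (T_eeg, d_eeg); eye: (T_eye, d_eye). The projection matrices map both
    modalities into a shared d_k-dimensional space (hypothetical, untrained here).
    """
    q = eeg @ w_q                                # (T_eeg, d_k)
    k = eye @ w_k                                # (T_eye, d_k)
    v = eye @ w_v                                # (T_eye, d_k)
    scores = q @ k.T / np.sqrt(q.shape[-1])      # scaled dot-product similarity
    weights = softmax(scores, axis=-1)           # each EEG step's weights sum to 1
    return weights @ v                           # eye features re-aligned to EEG steps

rng = np.random.default_rng(0)
eeg = rng.standard_normal((50, 32))   # 50 EEG windows x 32 features (synthetic)
eye = rng.standard_normal((20, 6))    # 20 eye-tracking windows x 6 features (synthetic)
d_k = 16
w_q = rng.standard_normal((32, d_k))
w_k = rng.standard_normal((6, d_k))
w_v = rng.standard_normal((6, d_k))
fused = cross_modal_attention(eeg, eye, w_q, w_k, w_v)
print(fused.shape)  # (50, 16)
```

In a trained model the projections would be learned jointly with a classifier (for example, an LSTM over the fused sequence); here they are random, so only the data flow is meaningful.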

Figure 1: Lie detection technology based on neurophysiological and behavioral physiological signals.

2. Lie Detection Technology Based on Neurophysiological Signal Decoding

Neurophysiology, the study of the functions of the nervous system and their relationship to behavior, focuses directly on the activity patterns of the brain and nervous system. Its measurement techniques, including electroencephalography (EEG), functional magnetic resonance imaging (fMRI), functional near-infrared spectroscopy (fNIRS), and electrocardiography (ECG), each offer a distinct perspective that helps researchers understand the complex psychological mechanisms behind lying. This section reviews the technical principles, current applications, and research advances of neurophysiological methods in lie detection.

2.1. EEG

The application of EEG to lie detection began in the 1970s, when Lykken [1] recorded participants' EEG signals during truthful and false statements, providing early evidence of the potential of brain waves for lie detection. In the early 1990s, Lawrence A. Farwell and Emanuel Donchin showed that the P300 component of the event-related potential (ERP) could reveal concealed information, work that underlies the concept of "brain fingerprinting" [2]. In the late 2000s, Abootalebi et al. [3] proposed a new EEG feature-extraction approach for P300-based lie detection, using multi-channel EEG to study differences in brain waves between truthful and false statements. Saini et al. [4] then applied machine learning to EEG signals, using a hybrid combination of features to improve lie detection classification accuracy. Hefron et al. [5] showed that long short-term memory (LSTM) networks can model temporal dependencies in EEG for cognitive workload estimation, an approach that carries over to the time-series analysis of deception-related EEG. Jafari Deligani et al. (2021) [6] fused EEG with fNIRS signals in a mutual-information-based hybrid classification framework, advancing multimodal fusion for complex scenarios. Through these successive studies, EEG-based lie detection has matured from P300 waveform analysis to the integration of deep learning and multimodal fusion, laying a foundation for real-time, accurate lie detection systems.
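The probe-versus-irrelevant comparison underlying P300-based concealed information tests can be sketched as follows: the trial-averaged ERP amplitude in a post-stimulus window is compared between probe (crime-relevant) and irrelevant stimuli, with bootstrap resampling of trials to judge how consistently the probe response is larger. The 300–600 ms window, 250 Hz sampling rate, single channel, and synthetic data are assumptions, and this is an illustrative sketch rather than the exact procedure of any study cited above.

```python
import numpy as np

def mean_window_amplitude(epochs, times, t_start=0.3, t_end=0.6):
    """Trial-average single-channel epochs (n_trials x n_samples) and return the
    mean ERP amplitude inside the assumed P300 window [t_start, t_end] seconds."""
    erp = epochs.mean(axis=0)
    mask = (times >= t_start) & (times <= t_end)
    return float(erp[mask].mean())

def bootstrap_probe_vs_irrelevant(probe, irrelevant, times, n_boot=1000, seed=0):
    """Fraction of bootstrap resamples (trials drawn with replacement) in which
    the probe P300 amplitude exceeds the irrelevant P300 amplitude."""
    rng = np.random.default_rng(seed)
    wins = 0
    for _ in range(n_boot):
        p = probe[rng.integers(0, len(probe), size=len(probe))]
        i = irrelevant[rng.integers(0, len(irrelevant), size=len(irrelevant))]
        if mean_window_amplitude(p, times) > mean_window_amplitude(i, times):
            wins += 1
    return wins / n_boot

# Synthetic single-channel data (assumed 250 Hz, -0.2 to 0.8 s epochs, microvolts).
fs = 250
times = np.arange(-0.2, 0.8, 1 / fs)
rng = np.random.default_rng(1)
p300 = 8.0 * np.exp(-0.5 * ((times - 0.45) / 0.08) ** 2)   # positive bump near 450 ms
probe = p300 + rng.normal(0.0, 5.0, size=(30, times.size))
irrelevant = rng.normal(0.0, 5.0, size=(120, times.size))
score = bootstrap_probe_vs_irrelevant(probe, irrelevant, times)
print(f"P(probe > irrelevant) = {score:.3f}")
```

A score near 1 suggests the probe items elicited a larger P300 than the irrelevant items; decision thresholds in practice are study-specific.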

2.2. fMRI

Functional magnetic resonance imaging (fMRI), which reflects brain activity through blood-oxygen-level-dependent (BOLD) signals, has been widely applied in lie detection research. fMRI entered neuroscience research in the 1990s and was first used in a lie detection experiment in 2002, when Langleben et al. [7] found that activity in the prefrontal cortex and cingulate gyrus was significantly enhanced during lying, demonstrating the potential of fMRI for lie detection. In 2005, Ganis et al. [8] compared brain activity patterns during lying and truthful responses and showed that lying has distinctive activation characteristics, further promoting the use of fMRI in this field. However, fMRI has low temporal resolution, because the BOLD response peaks several seconds after the underlying neural activity, which limits its use for real-time lie detection. In the 2010s, researchers began combining fMRI with other physiological signals (such as heart rate and skin conductance) to form multimodal lie detection systems, which have been reported to improve accuracy over single-technique approaches. More recently, researchers have also examined how experimental design, task complexity, and emotional factors affect fMRI signals, offering new perspectives for optimizing lie detection systems. Nevertheless, fMRI still faces challenges in lie detection, including high cost, poor portability, and complex data processing.
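A standard way to analyze event-related fMRI data, such as lie versus truth trials, is a general linear model (GLM): each condition's event onsets are convolved with a hemodynamic response function (HRF), and the resulting regressors are fitted to a voxel's time series by least squares. The minimal sketch below assumes a double-gamma HRF with common SPM-style shape parameters, a 2 s repetition time, and synthetic data; it is not the analysis pipeline of the studies cited above.

```python
import math
import numpy as np

def double_gamma_hrf(tr, duration=32.0):
    """Double-gamma HRF sampled every `tr` seconds: a gamma peak (shape 6) minus
    a scaled gamma undershoot (shape 16, ratio 1/6). These are common SPM-style
    defaults, assumed here; the result is normalized to a peak of 1."""
    t = np.arange(0.0, duration, tr)
    peak = t ** 5 * np.exp(-t) / math.gamma(6)
    undershoot = t ** 15 * np.exp(-t) / math.gamma(16)
    h = peak - undershoot / 6.0
    return h / h.max()

def event_regressor(onsets, n_scans, hrf):
    """Place a unit impulse at each onset scan and convolve with the HRF."""
    sticks = np.zeros(n_scans)
    sticks[list(onsets)] = 1.0
    return np.convolve(sticks, hrf)[:n_scans]

tr, n_scans = 2.0, 200
hrf = double_gamma_hrf(tr)
lie = event_regressor(range(5, n_scans, 20), n_scans, hrf)
truth = event_regressor(range(15, n_scans, 20), n_scans, hrf)
X = np.column_stack([lie, truth, np.ones(n_scans)])   # design matrix with intercept

# Synthetic voxel time series with a stronger response to lie trials (assumed).
rng = np.random.default_rng(0)
y = 1.5 * lie + 0.5 * truth + 100.0 + rng.normal(0.0, 0.2, n_scans)
beta, *_ = np.linalg.lstsq(X, y, rcond=None)
lie_minus_truth = beta[0] - beta[1]                    # contrast [1, -1, 0]
print(beta.round(2), round(lie_minus_truth, 2))
```

A positive lie minus truth contrast in a prefrontal voxel would correspond to the enhanced activity during lying described above; real analyses also model scanner drift, head motion, and autocorrelated noise.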

2.3. fNIRS

Functional near-infrared spectroscopy (fNIRS) evaluates neural activity by monitoring changes in cortical blood oxygenation. Because it is portable and non-invasive, it is better suited than fMRI to use in diverse environments. The underlying technique dates to 1977, when Jöbsis [9] demonstrated noninvasive near-infrared monitoring of cerebral oxygenation, laying the technical foundation for later research. By the 1990s, advances in instrumentation had led researchers to regard fNIRS as a potential lie detection tool. In the late 2000s, Tian et al. [10] applied fNIRS in deception experiments and found that the oxygenated hemoglobin (HbO) level in the prefrontal cortex increased significantly during lying, supporting the effectiveness of fNIRS for lie detection. Researchers subsequently began combining fNIRS with other physiological signals; for example, Jafari Deligani et al. [6] fused fNIRS with EEG, exploiting the mutual information between the two modalities to improve classification accuracy. As the technology developed, the flexibility and adaptability of fNIRS in various lie detection scenarios were explored further. Although fNIRS is portable and non-invasive, its lower spatial resolution and its sensitivity to scalp and skull thickness can affect signal stability, and multimodal combination, while improving accuracy, increases the complexity of data analysis. fNIRS therefore still faces technical challenges in lie detection.
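fNIRS converts light-intensity changes measured at two or more wavelengths into concentration changes of oxygenated (HbO) and deoxygenated (HbR) hemoglobin via the modified Beer–Lambert law, ΔOD(λ) = d · DPF(λ) · [ε_HbO(λ) ΔHbO + ε_HbR(λ) ΔHbR], where d is the source–detector distance and DPF is the differential pathlength factor. With two wavelengths this is a 2 × 2 linear system. In the sketch below the extinction coefficients are illustrative placeholders rather than tabulated values, and the 3 cm distance and DPF of 6 are assumptions.

```python
import numpy as np

# ILLUSTRATIVE placeholder extinction coefficients (1/(mM*cm)); rows = two
# wavelengths (a shorter one where HbR absorbs more, a longer one where HbO
# absorbs more), columns = [HbO, HbR]. Replace with tabulated values.
EPSILON = np.array([[0.6, 1.5],
                    [1.1, 0.7]])

def delta_od(i_baseline, i_task):
    """Change in optical density, -log10(I_task / I_baseline), per wavelength."""
    return -np.log10(np.asarray(i_task, dtype=float) / np.asarray(i_baseline, dtype=float))

def mbll_inverse(d_od, distance_cm=3.0, dpf=(6.0, 6.0), epsilon=EPSILON):
    """Solve the modified Beer-Lambert law for (dHbO, dHbR) in mM:
    d_od[i] = distance * dpf[i] * (eps[i, 0] * dHbO + eps[i, 1] * dHbR)."""
    path = distance_cm * np.asarray(dpf, dtype=float)   # effective path length per wavelength
    a = epsilon * path[:, None]                         # scale each row by its path length
    d_hbo, d_hbr = np.linalg.solve(a, np.asarray(d_od, dtype=float))
    return float(d_hbo), float(d_hbr)

# Forward-simulate a lie-like prefrontal response (HbO up, HbR slightly down), then invert.
true_change = np.array([0.002, -0.0005])                # mM, synthetic
path = 3.0 * np.array([6.0, 6.0])
simulated_od = (EPSILON * path[:, None]) @ true_change
print(mbll_inverse(simulated_od))                       # recovers approximately (0.002, -0.0005)
```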

3. Lie Detection Technology Based on Behavioral Information Decoding

Heart rate, respiration rate, eye movements, and micro-expressions have also been studied extensively in lie detection research and practice. Each reflects lie-related cognitive and emotional changes through a different physiological indicator, and together they have become an important part of lie detection technology.

3.1. Heart Rate

In the 1960s, Thackray and Orne [11] found that deception could trigger significant heart rate acceleration, helping establish heart rate, alongside skin conductance and respiration, as a core parameter in lie detection. In the 1980s, Kircher and Raskin [12] compared human and computerized evaluations of polygraph data, an early step toward algorithmic analysis of heart rate and other polygraph channels. In the 1990s, with advances in computing, research shifted toward real-time processing and algorithmic improvements, such as using artificial neural networks (ANNs) to analyze deceptive patterns in heart rate time series. Over the past 20 years, the rapid development of machine learning and multimodal fusion has been accompanied by new sensing approaches: Pavlidis et al. [13] used thermal imaging of the face to detect deception-related physiological changes without contact, and Verkruysse et al. [14] showed in 2008 that plethysmographic signals, from which heart rate can be derived, can be recorded remotely with an ordinary camera under ambient light, laying the groundwork for non-contact, heart-rate-based lie detection. In recent years, the popularity of wearable devices has made real-time collection and analysis of heart rate data more convenient, driving civilian applications of lie detection technology [15].
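Remote heart-rate measurement of the kind Verkruysse et al. [14] demonstrated rests on the fact that the pulse subtly modulates skin color, so heart rate can be read off the dominant frequency of a plethysmographic trace, such as the spatially averaged green channel of a face video. The sketch below finds that spectral peak within an assumed 0.7–4 Hz cardiac band; the frame rate, band limits, and synthetic trace are assumptions, and practical remote-PPG systems add face tracking, detrending, and motion compensation.

```python
import numpy as np

def heart_rate_bpm(ppg, fs, f_lo=0.7, f_hi=4.0):
    """Heart rate as the dominant spectral peak of a PPG trace inside an assumed
    cardiac band (0.7-4 Hz, i.e. 42-240 beats per minute)."""
    x = np.asarray(ppg, dtype=float)
    x = x - x.mean()                                        # remove the DC level
    spectrum = np.abs(np.fft.rfft(x * np.hanning(x.size)))  # taper to reduce leakage
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    band = (freqs >= f_lo) & (freqs <= f_hi)
    return float(freqs[band][np.argmax(spectrum[band])] * 60.0)

# Synthetic 20 s trace at 30 frames/s: 1.2 Hz pulse (72 bpm) plus slow drift and noise.
fs = 30.0
t = np.arange(600) / fs
rng = np.random.default_rng(0)
trace = (0.5 * np.sin(2 * np.pi * 1.2 * t)
         + 0.3 * np.sin(2 * np.pi * 0.1 * t)
         + rng.normal(0.0, 0.1, t.size))
print(heart_rate_bpm(trace, fs))
```

With 20 s of data the frequency resolution is 0.05 Hz (3 bpm); longer windows or spectral interpolation give finer estimates.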

3.2. Respiration Rate

Research from the 1960s indicated that breathing becomes more rapid and shallow during emotional tension or lying, laying the foundation for the use of respiration rate in lie detection. By the 1980s, with the development of multi-parameter physiological signal analysis, Raskin and colleagues proposed that combining respiration rate, heart rate, and skin conductance could identify deception more accurately [16]. In the 1990s, researchers such as Kircher introduced computer-based analysis, making automatic processing of respiration signals possible and improving the efficiency and accuracy of lie detection [16]. In the early 2000s, machine learning algorithms such as support vector machines and neural networks were introduced into lie detection, and respiration features were used to build more complex classification models. Over the past 20 years, advances in sensor technology and data analysis have clarified the relationship between respiration changes and emotion or psychological stress, making respiration a standard component of modern lie detection.
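The "rapid and shallow" breathing pattern described above can be quantified from a respiration-belt trace as a rate (breaths per minute) and a depth proxy. The sketch below estimates rate from upward zero crossings and depth from the peak-to-trough range; the sampling rate, synthetic signals, and the simple depth measure are assumptions rather than a standard polygraph scoring rule.

```python
import numpy as np

def breaths_per_minute(resp, fs):
    """Respiration rate from upward zero crossings of a mean-removed breathing
    trace (e.g. a chest-belt signal), with linear interpolation between samples."""
    x = np.asarray(resp, dtype=float)
    x = x - x.mean()
    idx = np.where((x[:-1] < 0) & (x[1:] >= 0))[0]      # sample just before each upward crossing
    if idx.size < 2:
        raise ValueError("need at least two complete breaths")
    frac = -x[idx] / (x[idx + 1] - x[idx])               # sub-sample crossing position
    crossing_times = (idx + frac) / fs
    return float(60.0 / np.diff(crossing_times).mean())  # 60 s / mean breath period

def breath_depth(resp):
    """Crude depth proxy: peak-to-trough range of the trace (arbitrary units)."""
    x = np.asarray(resp, dtype=float)
    return float(x.max() - x.min())

fs = 25.0
t = np.arange(1500) / fs                                # 60 s of synthetic belt data
calm = np.sin(2 * np.pi * 0.25 * t + 0.3)               # 15 breaths/min, full depth
tense = 0.5 * np.sin(2 * np.pi * 0.4 * t + 0.3)         # 24 breaths/min, shallower
print(breaths_per_minute(calm, fs), breaths_per_minute(tense, fs))
print(breath_depth(calm), breath_depth(tense))
```

Zero crossings are sensitive to noise, so real traces would normally be low-pass filtered before this step.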

3.3. Eye Movements

Eye-tracking was first introduced into lie detection research in the 1970s, building on the work of Hess and Polt [17], who in 1960 related pupil size to the interest value of visual stimuli and thereby provided a theoretical basis for later eye-based lie detection. In the 1990s, eye-tracking technology matured and began to be widely used in lie detection experiments. Bradley et al. [18] later showed that pupil size reflects emotional arousal and autonomic nervous system activation, further supporting the use of pupil measures in lie detection. From the 2000s onward, artificial intelligence and big data have made eye-movement-based lie detection more automated and precise, and researchers have built machine learning models on eye-movement features. Relatedly, Alnæs et al. [19] showed that pupil size tracks the mental effort deployed during multiple-object tracking and predicts activity in the dorsal attention network and the locus coeruleus, offering a new perspective on pupil-based indices of cognitive load for lie detection. Today, eye movement is an important signal in modern lie detection, especially in multimodal physiological analysis, where its role is becoming more prominent.
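Pupil measures such as those studied by Bradley et al. [18] and Alnæs et al. [19] are usually analyzed as task-evoked responses: the pupil diameter after stimulus onset minus a pre-stimulus baseline. The sketch below computes this for one trial; the 0.5 s baseline, the 0.5–2.5 s response window, the 60 Hz sampling rate, and marking blinks as NaN are assumptions.

```python
import numpy as np

def task_evoked_pupil_response(pupil, fs, onset_s, baseline_s=0.5, window_s=(0.5, 2.5)):
    """Mean pupil diameter in a post-onset window minus the mean of the
    pre-onset baseline (subtractive baseline correction) for one trial.
    Missing samples (e.g. blinks) are assumed to be coded as NaN and ignored."""
    pupil = np.asarray(pupil, dtype=float)
    onset = int(round(onset_s * fs))
    baseline = pupil[onset - int(round(baseline_s * fs)):onset]
    start = onset + int(round(window_s[0] * fs))
    stop = onset + int(round(window_s[1] * fs))
    return float(np.nanmean(pupil[start:stop]) - np.nanmean(baseline))

fs = 60.0
trace = np.full(300, 3.0)        # 5 s of pupil diameter at 3.0 mm (synthetic)
trace[60:] += 0.3                # 0.3 mm dilation from stimulus onset at 1.0 s
trace[120:126] = np.nan          # a short blink, marked as missing
print(task_evoked_pupil_response(trace, fs, onset_s=1.0))
```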

3.4. Micro-Expressions

Micro-expressions, a concept introduced by Ekman and Friesen in 1969, are brief, usually involuntary facial expressions [20]. In the 1980s, Ekman and other researchers began exploring micro-expressions as an auxiliary cue for detecting lies, and Frank and Ekman later showed that the ability to detect deceit generalizes across different types of high-stakes lies [21]. In the 1990s, advances in computer vision led researchers to experiment with automating micro-expression detection. In the 21st century, spontaneous micro-expression databases were established [22], and automated recognition methods, later including deep learning, improved the accuracy and efficiency of micro-expression analysis [23]. In recent years, micro-expression analysis has been applied to real-time lie detection and emotion analysis, especially in security and psychological assessment. Multimodal lie detection systems that combine facial expression recognition with physiological signals have further expanded the use of micro-expressions. As covert yet informative cues, micro-expressions have become an important component of modern lie detection technology.
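Recognizing micro-expressions from the apex frame, the moment of peak facial change, is the idea behind Liong et al. [23]. As a simplified stand-in for their feature-based apex spotting, the sketch below picks the frame whose mean absolute pixel difference from the onset frame is largest. Pre-aligned grayscale face crops and the synthetic clip are assumptions; practical systems use more robust descriptors such as optical flow or local texture features.

```python
import numpy as np

def spot_apex_frame(frames):
    """Index of the frame whose mean absolute pixel difference from the onset
    (first) frame is largest. frames: (n_frames, height, width), pre-aligned."""
    frames = np.asarray(frames, dtype=float)
    diffs = np.abs(frames - frames[0]).mean(axis=(1, 2))
    return int(np.argmax(diffs))

# Synthetic 10-frame clip: a small mouth-corner patch brightens, then relaxes.
intensity = [0, 1, 2, 4, 6, 8, 10, 7, 3, 1]
clip = np.zeros((10, 32, 32))
for k, v in enumerate(intensity):
    clip[k, 20:24, 8:12] = v
print(spot_apex_frame(clip))  # 6
```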

4. Conclusion

Over the past 20 years, multimodal physiological signal fusion has brought significant progress to lie detection. By combining various physiological signals (such as EEG, fMRI, heart rate, and electrodermal activity), researchers have improved detection accuracy, particularly with the help of deep learning and advanced data analysis. Fusion methods developed for the closely related tasks of stress and emotion recognition illustrate this trend: Radhika Kuttala and Ramanathan Subramanian [24] proposed a multimodal hierarchical CNN feature-fusion method that performed strongly in stress detection, and Xiaowei Zhang et al. [25] introduced an emotion recognition method for multimodal physiological signals based on regularized deep kernel-machine fusion that substantially improved recognition accuracy. Such fusion methods continue to drive technological development. With further breakthroughs in artificial intelligence and real-time data processing, multimodal fusion is expected to play an increasingly important role in lie detection, supporting more accurate and reliable methods.
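Fusion methods are often grouped into feature-level (early) and decision-level (late) approaches; a minimal sketch of both baselines follows. Early fusion standardizes and concatenates per-modality features before a single classifier, while late fusion combines per-modality classifier outputs. The modality names, feature counts, synthetic data, and fusion weights are hypothetical, and hierarchical CNN or kernel-machine fusion is considerably more elaborate than either baseline.

```python
import numpy as np

def early_fusion(features):
    """Feature-level fusion: z-score each modality's (n_trials, d_m) matrix so
    no modality dominates by scale, then concatenate along the feature axis."""
    blocks = []
    for name in sorted(features):                      # fixed modality order
        x = np.asarray(features[name], dtype=float)
        blocks.append((x - x.mean(axis=0)) / (x.std(axis=0) + 1e-8))
    return np.concatenate(blocks, axis=1)

def late_fusion(probabilities, weights):
    """Decision-level fusion: weighted average of per-modality P(deceptive)."""
    total = sum(weights[m] for m in probabilities)
    return sum(weights[m] * probabilities[m] for m in probabilities) / total

rng = np.random.default_rng(0)
fused = early_fusion({
    "eeg": rng.normal(0.0, 20.0, (40, 8)),          # e.g. band powers (synthetic)
    "heart_rate": rng.normal(75.0, 8.0, (40, 2)),   # e.g. mean HR, HRV (synthetic)
    "pupil": rng.normal(3.5, 0.4, (40, 3)),         # e.g. dilation features (synthetic)
})
print(fused.shape)  # (40, 13)
p = late_fusion({"eeg": 0.8, "heart_rate": 0.6, "pupil": 0.7},
                {"eeg": 0.5, "heart_rate": 0.2, "pupil": 0.3})
print(round(p, 2))  # 0.73
```

Early fusion lets a classifier learn cross-modal interactions but needs time-aligned features; late fusion tolerates missing or asynchronous modalities at the cost of ignoring those interactions.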

The development of lie detection technology shows a trend from contact-based to non-contact methods and from single physiological signals to multimodal fusion. Heart rate, respiration rate, eye movements, and micro-expressions each provide distinctive physiological cues. Non-contact technologies have made monitoring more flexible and natural, but their accuracy in complex environments still needs improvement. Future lie detection will increasingly rely on multimodal fusion, which integrates multiple physiological signals to capture an individual's physiological and emotional changes more comprehensively. Artificial intelligence and machine learning will play a key role, advancing lie detection toward higher precision and greater robustness and, ultimately, more reliable systems.

References

[1]. Lykken, D. T. (1974). Psychology and the lie detector industry. American Psychologist, 29(10), 725–739. https://doi.org/10.1037/h0037441

[2]. Farwell, L. A., & Donchin, E. (1991). The truth will out: Interrogative polygraphy ("lie detection") with event-related brain potentials. Psychophysiology, 28(5), 531–547. https://doi.org/10.1111/j.1469-8986.1991.tb01990.x

[3]. Abootalebi, V., Moradi, M. H., & Khalilzadeh, M. A. (2009). A new approach for EEG feature extraction in P300-based lie detection. Computer Methods and Programs in Biomedicine, 94(1), 48–57. https://doi.org/10.1016/j.cmpb.2008.10.001

[4]. Saini, N., Bhardwaj, S., & Agarwal, R. (2020). Classification of EEG signals using a hybrid combination of features for lie detection. Neural Computing and Applications, 32(8), 2465–2474. https://doi.org/10.1007/s00521-019-04078-z

[5]. Hefron, R. G., Borghetti, B. J., Christensen, J. C., & Schubert Kabban, C. M. (2017). Deep long short-term memory structures model temporal dependencies improving cognitive workload estimation. Pattern Recognition Letters, 94, 96–104. https://doi.org/10.1016/j.patrec.2017.05.020

[6]. Jafari Deligani, R., Borgheai, S. B., McLinden, J., & Shahriari, Y. (2021). Multimodal fusion of EEG-fNIRS: A mutual information-based hybrid classification framework. Biomedical Optics Express, 12, 1635–1650.

[7]. Langleben, D. D., et al. (2002). Brain activity during simulated deception: An event-related functional magnetic resonance study. NeuroImage, 15(3), 1122–1131.

[8]. Ganis, G., et al. (2005). Classifying spatial patterns of brain activity with machine learning methods: Application to lie detection. NeuroImage.

[9]. Jöbsis, F. F. (1977). Noninvasive, infrared monitoring of cerebral and myocardial oxygen sufficiency and circulatory parameters. Science, 198(4323), 1264–1267. https://doi.org/10.1126/science.929199

[10]. Tian, F., Sharma, V., Kozel, F. A., & Liu, H. (2009). Functional near-infrared spectroscopy to investigate hemodynamic responses to deception in the prefrontal cortex. Brain Research, 1303, 120–130. https://doi.org/10.1016/j.brainres.2009.09.085

[11]. Thackray, R. I., & Orne, M. T. (1968). A comparison of physiological indices in detection of deception. Psychophysiology, 4(3), 329–339.

[12]. Kircher, J. C., & Raskin, D. C. (1988). Human versus computerized evaluations of polygraph data in a laboratory setting. Journal of Applied Psychology, 73(2), 291–302. https://doi.org/10.1037/0021-9010.73.2.291

[13]. Pavlidis, I., Eberhardt, N. L., & Levine, J. A. (2002). Seeing through the face of deception. Nature, 415(6867), 35. https://doi.org/10.1038/415035a

[14]. Verkruysse, W., Svaasand, L. O., & Nelson, J. S. (2008). Remote plethysmographic imaging using ambient light. Optics Express, 16(26), 21434–21445. https://doi.org/10.1364/oe.16.021434

[15]. Kircher, J. C., & Raskin, D. C. (1988). Human versus computerized evaluations of polygraph data in a laboratory setting. Journal of Applied Psychology, 73(2), 291–302. https://doi.org/10.1037/0021-9010.73.2.291

[16]. Kircher, J. C., & Raskin, D. C. (1988). Human versus computerized evaluations of polygraph data in a laboratory setting. Journal of Applied Psychology, 73(2), 291–302.

[17]. Hess, E. H., & Polt, J. M. (1960). Pupil size as related to interest value of visual stimuli. Science, 132(3423), 349–350. https://doi.org/10.1126/science.132.3423.349

[18]. Bradley, M. M., Miccoli, L., Escrig, M. A., & Lang, P. J. (2008). The pupil as a measure of emotional arousal and autonomic activation. Psychophysiology, 45(4), 602–607. https://doi.org/10.1111/j.1469-8986.2008.00654.x

[19]. Alnæs, D., Sneve, M. H., Espeseth, T., Endestad, T., van de Pavert, S. H., & Laeng, B. (2014). Pupil size signals mental effort deployed during multiple object tracking and predicts brain activity in the dorsal attention network and the locus coeruleus. Journal of Vision, 14(4), 1. https://doi.org/10.1167/14.4.1

[20]. Ekman, P. (2009). Telling lies: Clues to deceit in the marketplace, politics, and marriage. W. W. Norton & Co.

[21]. Frank, M. G., & Ekman, P. (1997). The ability to detect deceit generalizes across different types of high-stake lies. Journal of Personality and Social Psychology, 72(6), 1429–1439.

[22]. Li, X., Pfister, T., Huang, X., Zhao, G., & Pietikäinen, M. (2013). A spontaneous micro-expression database: Inducement, collection, and baseline. IEEE International Conference and Workshops on Automatic Face and Gesture Recognition (FG), 1–6.

[23]. Liong, S. T., See, J., Wong, K., & Phan, R. C. W. (2019). Less is more: Micro-expression recognition from video using apex frame. Signal Processing: Image Communication, 62, 82–92.

[24]. Kuttala, R., & Subramanian, R. (2020). Multimodal hierarchical CNN feature fusion for stress detection. IEEE Transactions on Affective Computing, 11(2), 321–332.

[25]. Zhang, X., et al. (2021). Emotion recognition from multimodal physiological signals using a regularized deep fusion of kernel machine. IEEE Transactions on Cybernetics, 51(9), 4386–4399. https://doi.org/10.1109/TCYB.2020.2987575

Cite this article

Chen, C. (2025). Research on Lie Detection Technology Based on Neurophysiological and Behavioral Signal Decoding. Applied and Computational Engineering, 119, 55–60.

Data availability

The datasets used and/or analyzed during the current study will be available from the authors upon reasonable request.

Disclaimer/Publisher's Note

The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of EWA Publishing and/or the editor(s). EWA Publishing and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

About volume

Volume title: Proceedings of the 3rd International Conference on Software Engineering and Machine Learning

© 2024 by the author(s). Licensee EWA Publishing, Oxford, UK. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license. Authors who publish this series agree to the following terms:

1. Authors retain copyright and grant the series right of first publication with the work simultaneously licensed under a Creative Commons Attribution License that allows others to share the work with an acknowledgment of the work's authorship and initial publication in this series.

2. Authors are able to enter into separate, additional contractual arrangements for the non-exclusive distribution of the series's published version of the work (e.g., post it to an institutional repository or publish it in a book), with an acknowledgment of its initial publication in this series.

3. Authors are permitted and encouraged to post their work online (e.g., in institutional repositories or on their website) prior to and during the submission process, as it can lead to productive exchanges, as well as earlier and greater citation of published work (See Open access policy for details).
