1. Introduction

1.1. Research background

According to the seventh national census in 2020 [1], China's population aged 65 and above has reached 190 million, accounting for 13.5% of the total population. In addition, the China Disabled Persons' Federation states that about 24.12 million people have lower limb disabilities, while the penetration rate of assistive devices is less than 10% [2]. Wheelchairs, as assistive devices, make daily life more convenient for the elderly and disabled. The electric wheelchair improves on the conventional one: it is more maneuverable, needs no caregiver to operate it, and does not need to be propelled by the upper limbs. As a result, assistive intelligent wheelchairs have become a focus of discussion and research in society and the scientific community [3].

Because a wheelchair essentially takes over the function of the user's lower limbs, its safety matters as much as its mobility. Ordinary wheelchairs require users to have some driving experience, to react quickly to their environment, and to stay highly concentrated while driving. However, most users are elderly or disabled; they often cannot control the wheelchair accurately and have difficulty meeting these requirements, which can lead to collisions and falls [4]. Obstacle avoidance is therefore particularly important for smart wheelchairs. Here, sensors act like a second pair of eyes for the user: they anticipate hazards and trigger evasive action, providing active protection when the user cannot react correctly to the environment.

1.2. Current status of sensor research

In 1986, the first intelligent wheelchair was invented in the United Kingdom; it could determine its position and orientation by combining radio frequency identification and GPS [5]. The TAO intelligent wheelchair, developed by a Canadian company, added Light Detection and Ranging (LiDAR) to sense the surroundings and detect obstacles along the way. Later, an American team further improved performance by introducing vision sensors and ultrasonic sensors [5, 6]. The wheelchair built under the 863 project of the Chinese Academy of Sciences was the pioneering work in this field in China and achieved obstacle avoidance through vision sensors [5]. China has since developed intelligent wheelchairs incorporating vision, ultrasonic and laser technologies, moving from catching up to leading the field [7]. Figure 1 shows the NavChair developed at the University of Michigan, and Figure 2 shows the Jiaolong wheelchair developed at Shanghai Jiao Tong University.

Figure 1. UMich: Navchair [6].

Figure 2. SJTU: Jiaolong [7].

Mainstream wheelchairs on the market use roughly three types of sensors: visible light sensors, invisible light sensors, and acoustic sensors [8]. In the author's view, most previous literature focuses on multi-sensor integration and the corresponding algorithms, while few studies compare the available sensor types. Therefore, drawing on the references and related product information, this paper describes the working methods and principles of acoustic, visible light, and invisible light sensors in wheelchair operation. The following sensors are selected, and their advantages and limitations are analyzed and compared: passive binocular vision systems (visible light); LiDAR, infrared ranging modules (triangulation and TOF methods), and structured light (invisible light); and ultrasonic distance measurement modules (acoustic).

Monocular cameras are mostly used for image acquisition rather than distance measurement, so at this stage they are rarely used for ranging on wheelchairs. Some TOF-based infrared modules, such as the HJ-IR2, are often used in obstacle avoidance systems, but they raise an alarm when a preset threshold is crossed rather than measuring distance and outputting data. They are therefore considered to have no distance measurement function and are not covered in this paper either.

2. Introduction to the principles of sensors

2.1. Three TOF distance measurement sensors

TOF, or time of flight, measures the time between the emission of a wave or particle and its detection after it bounces back from an object. Combining this time with the propagation speed gives the distance.

All the ultrasonic distance measurement modules selected in this paper are triggered through an I/O port. Once triggered, the module automatically sends eight 40 kHz square-wave pulses and listens for the returning ultrasonic waves. If an echo returns, a signal is output through the Echo pin to the I/O port, and the duration of this signal is the time from emission to return of the ultrasonic waves [9]. From the time difference T between emission and reception, the distance D (in m) between the wheelchair and the obstacle can be found:

\( D=\frac{a×(T-t)}{2} \) (1)

*a is the speed of sound, generally taken as 340 m/s; t is the reaction time of the receiver. The factor of 2 accounts for the round trip, because T covers the path to the obstacle and back.
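As a minimal sketch of this calculation, the Python snippet below converts an Echo pulse duration into a distance. It assumes that T covers the full round trip, so the path length is halved, and that the receiver reaction time t defaults to zero. The function name and the sample pulse width are illustrative and are not taken from any module's datasheet.

```python
SPEED_OF_SOUND_M_S = 340.0  # value used in the text; it varies with temperature


def echo_to_distance_m(echo_s: float, reaction_s: float = 0.0,
                       speed_m_s: float = SPEED_OF_SOUND_M_S) -> float:
    """Return the obstacle distance in metres for a measured Echo duration.

    echo_s     -- time from emission to reception (T), in seconds
    reaction_s -- receiver reaction time (t), in seconds (assumed 0 by default)
    """
    flight_s = echo_s - reaction_s
    if flight_s < 0:
        raise ValueError("echo duration must not be shorter than the reaction time")
    # The pulse travels to the obstacle and back, so halve the path length.
    return speed_m_s * flight_s / 2.0


if __name__ == "__main__":
    # Hypothetical 5 ms echo pulse.
    print(f"{echo_to_distance_m(5.0e-3):.3f} m")
```

On a real module the Echo duration would come from a timer or input-capture peripheral; that part depends on the microcontroller and is left out here.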

LiDAR is similar in principle to ultrasonic ranging, except that the signal travels at the speed of light. Multi-line LiDAR has multiple transmitters and receivers arranged in the vertical direction; as the transmitter rotates, multiple lines of reflected light are obtained, providing a three-dimensional point cloud for subsequent obstacle avoidance. The more lines, the more complete the recovered surface contour of the object, but also the more data to process and the higher the hardware requirements. Single-line LiDAR has only one line in the vertical plane and therefore cannot range in the vertical direction, which is why it can only output the distribution of obstacles in a two-dimensional plane.
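To illustrate what such a two-dimensional output looks like, the sketch below converts one single-line scan, given as (angle, range) pairs, into Cartesian points in the sensor frame. The scan format, the axis convention (x forward, y to the left) and the sample readings are assumptions made for illustration; they are not the output format of any particular LiDAR.

```python
import math
from typing import Iterable, List, Tuple


def scan_to_points(scan: Iterable[Tuple[float, float]],
                   max_range_m: float) -> List[Tuple[float, float]]:
    """Convert (angle_deg, range_m) readings to (x, y) points in metres.

    Readings that are non-positive or at/beyond max_range_m are treated
    as "no return" and skipped.
    """
    points = []
    for angle_deg, range_m in scan:
        if not (0.0 < range_m < max_range_m):
            continue
        theta = math.radians(angle_deg)
        points.append((range_m * math.cos(theta), range_m * math.sin(theta)))
    return points


if __name__ == "__main__":
    # Hypothetical readings: straight ahead, to the left, and one out of range.
    sample = [(0.0, 2.0), (90.0, 1.0), (45.0, 10.0)]
    for x, y in scan_to_points(sample, max_range_m=4.0):
        print(f"x={x:.2f} m, y={y:.2f} m")
```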

Infrared ranging module (TOF method): The infrared ranging module also uses the TOF method and can be understood simply as a single-line LiDAR that does not scan but measures distance along one fixed direction. Because it has a single fixed beam, it can only output the distance to one obstacle at a time. Light pulses are emitted along the transmitting light path, reach the surface of the object to be measured, and are scattered in all directions. The receiving light path collects part of the scattered light energy and converts it into a photocurrent, which is fed to the return signal processing circuit; after processing, an electrical pulse is obtained that stops the timing unit.

2.2. Three geometric distance measurement sensors

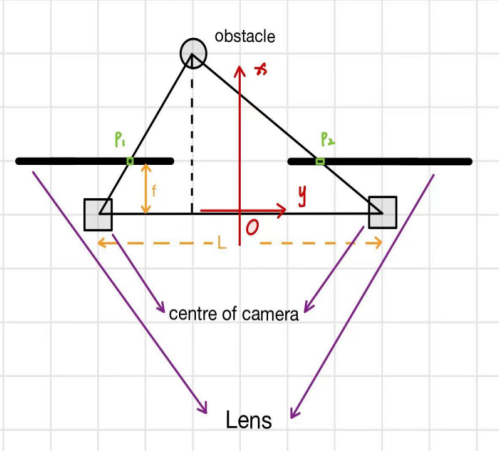

The binocular vision system is more complex. In general terms, it uses two cameras and solves a triangle to find the direction and position of an obstacle. The principle by which a binocular vision system measures obstacle distance is shown in Figure 3:

Figure 3. Principle of the triangulation method.

\( f \) is the focal length of the camera, L is the distance between the two cameras, and P1(y1,z1) and P2(y2,z2) are the imaging coordinates of the obstacle in the two cameras. By the similar-triangle property, the coordinates of the obstacle Ob (X,Y,Z) can be found from the following formulas:

\( X=\frac{Lf}{{y_{1}}-{y_{2}}} \) (2)

\( Y=\frac{L({y_{1}}+{y_{2}})}{2({y_{1}}-{y_{2}})} \) (3)

\( Z=\frac{L({z_{1}}+{z_{2}})}{2({y_{1}}-{y_{2}})} \) (4)
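A minimal sketch of Equations (2) and (3) follows. It assumes that y1 and y2 are expressed in the same length unit as f (for example, pixel index multiplied by pixel size), and it leaves out the z-axis for brevity. The function name and the numbers in the example are hypothetical.

```python
from typing import Tuple


def triangulate_xy(y1: float, y2: float, baseline: float,
                   focal_length: float) -> Tuple[float, float]:
    """Return (X, Y) of the obstacle from the two image coordinates.

    All arguments share one length unit (e.g. metres). X is the forward
    distance from Equation (2); Y is the lateral offset from Equation (3).
    """
    disparity = y1 - y2
    if disparity == 0:
        # Zero disparity means the point is effectively at infinity.
        raise ValueError("zero disparity: distance cannot be triangulated")
    x = baseline * focal_length / disparity
    y = baseline * (y1 + y2) / (2.0 * disparity)
    return x, y


if __name__ == "__main__":
    # Hypothetical rig: 0.12 m baseline, 4 mm focal length,
    # image coordinates of +0.3 mm and -0.1 mm.
    X, Y = triangulate_xy(0.3e-3, -0.1e-3, baseline=0.12, focal_length=4e-3)
    print(f"X = {X:.2f} m, Y = {Y:.3f} m")
```

The explicit zero-disparity check matters in practice: as the disparity shrinks, X grows without bound, so distant obstacles are the hardest to range.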

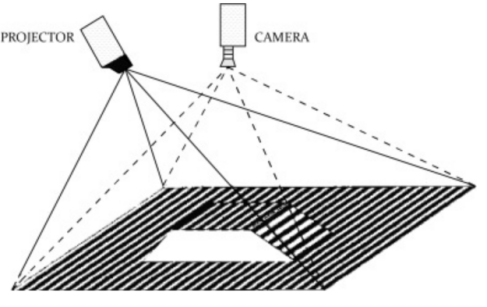

Equations (2)-(4) show that the forward (perpendicular) distance between the obstacle and the wheelchair is X (f is small and can be ignored). To simplify the calculation, the data in the z-axis direction can be ignored.

The principle of structured light distance measurement is shown in Figure 4.

Figure 4. Structured light distance measurement principle[10].

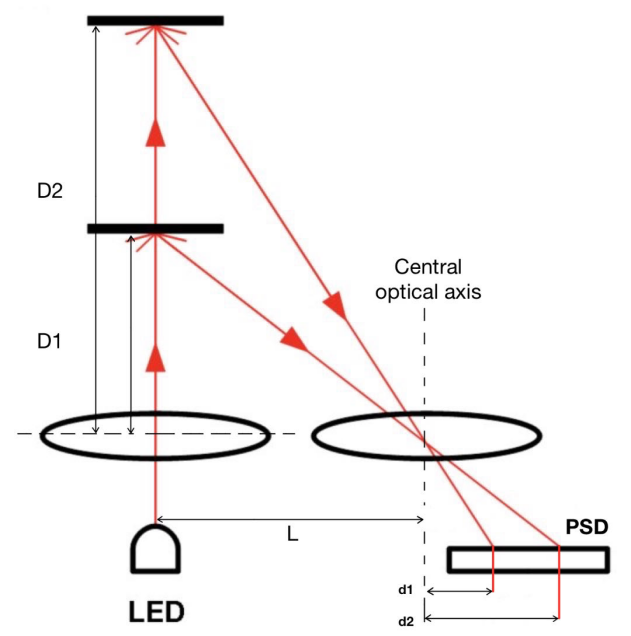

The square on the left is a structured light projector, which usually emits an infrared laser through a diffraction grating mounted in front of it, so that the laser forms a structured pattern (a dot lattice or lines). A CCD camera photographs how the pattern is bent by the object's surface; the bent stripes are demodulated to obtain the phase, which is then converted into the distance of the obstacle [10]. The triangulation-based infrared ranging module also uses the triangulation method. Unlike structured light, it emits infrared light that does not pass through a diffraction grating. The reflected beam falls on a position-sensitive detector (PSD), whose conductivity depends on the position where the beam lands [11]. The distance measurement principle of the triangulation-based infrared ranging module is shown in Figure 5, with the corresponding formula below:

Figure 5. Principle of the infrared ranging module [11].

\( D=\frac{f×(L+d)}{d} \) (5)

* \( f \) is the focal length of the camera.

As can be seen, the three TOF distance sensors are simpler in principle; they all derive the distance from the wave propagation time. The other three sensors require geometric analysis, matrix transformations and a large number of operations to derive the coordinates and distance of the obstacle. In addition, some vision sensing algorithms also need to recognize the direction of obstacles in order to choose the most appropriate avoidance behaviour.

3. Sensor performance analysis

3.1. TOF distance measurement sensors

3.1.1. Performance analysis. Ultrasonic sensor: This paper selects three ultrasonic sensors of different brands that are widely used in wheelchair obstacle avoidance; their performance parameters are shown in Table 1:

Table 1. Ultrasonic distance measurement modules.

| Name | Field of view (degrees) | Distance (cm) | Precision |
| --- | --- | --- | --- |
| HC-SR04 | [-15, 15] | [2, 450] | 5% |
| CS100A | [-15, 15] | [2, 800] | 0.1 cm + 1% |
| US-016A | [-15, 15] | [2, 560] | 0.3 cm + 1% |
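To make the precision column easier to compare, the sketch below evaluates a worst-case error at a given distance. It assumes that an entry such as "0.1 cm + 1%" means a fixed offset plus a fraction of the measured distance, and that "5%" is purely proportional; this reading is an interpretation of Table 1 rather than a statement from the datasheets, and the class name is illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Precision:
    fixed_cm: float   # constant part of the error, in cm
    relative: float   # proportional part, as a fraction of the distance

    def worst_case_cm(self, distance_cm: float) -> float:
        """Worst-case error in cm under the fixed-plus-proportional reading."""
        return self.fixed_cm + self.relative * distance_cm


# Values transcribed from Table 1.
MODULES = {
    "HC-SR04": Precision(0.0, 0.05),
    "CS100A": Precision(0.1, 0.01),
    "US-016A": Precision(0.3, 0.01),
}

if __name__ == "__main__":
    for name, spec in MODULES.items():
        print(f"{name}: +/-{spec.worst_case_cm(200.0):.1f} cm at 200 cm")
```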

LiDAR: The motor carrying the LiDAR transmitter rotates at a fixed frequency. The range of a LiDAR depends on the reflectivity of the measured surface: it reaches its maximum when the reflectivity is above 90% (white) and decreases as the reflectivity decreases. The parameters of three LiDARs of different brands are shown in Table 2:

Table 2. Light detection and ranging.

| Name | Scan period (ms) | Field of view (degrees) | Distance (cm) | Distance error (cm) |
| --- | --- | --- | --- | --- |
| UBG-04LX-F01 | 28 | 240 | [6, 400] | 1% over (100, 400) |
| KILS-F31 | 67 | 270 | [5, 500/800] | 3 |
| KELi-LS2 | 40 | 270 | [2, 500/1000] | 2 |

Infrared ranging module (TOF): This paper examines two of the most common brands on the market; their parameters are given in Table 3:

Table 3. Infrared ranging module(TOF).

| Name | Distance (cm) | Distance error (cm) | Field of view (degrees) |
| --- | --- | --- | --- |
| GY-TOF10M | [15, 1000] | ±5 over [15, 300]; ±1.5% over [300, 1000] | 2 |
| DFRobot(0.2~12) | [20, 1200] | ±5 over [20, 350]; ±1.5% over [350, 1200] | 2 |

3.1.2. Mechanical properties. In this paper, mechanical properties refer specifically to the dimensions, mass and price of each sensor. Data for the most frequently used product in each sensor category are shown in Table 4:

Table 4. Comparison of mechanical properties of sensors based on TOF method.

| Sensor type | Ultrasonic | LiDAR | IRM (TOF) |
| --- | --- | --- | --- |
| Name | HC-SR04 | UBG-04LX-F01 | GY-TOF10M |
| Length (mm) | 45 | 60 | 26 |
| Width (mm) | 20 | 75 | 16 |
| Height (mm) | 15 | 45 | 12.5 |
| Mass (g) | 12.5 | 260 | 5 |
| Price (¥) | 4.4 | 2899 | 89 |

3.2. Geometric distance sensor

3.2.1. Performance analysis. Binocular vision system: This paper takes three products of different brands as examples. Their performance is shown in Table 5:

Table 5. Binocular vision system.

| Name | Baseline (cm) | Max resolution | Pixel size (µm) | Field of view H x V (degrees) | Depth range (cm) | Precision | Frame rate (fps) |
| --- | --- | --- | --- | --- | --- | --- | --- |
| ZED 2 | 12 | 1280 x 720 | 2 | 110 x 70 | [30, 2000] | 1% at 3 m, 5% at 15 m | 60 |
| Bumblebee 2 firewire | 12 | 1032 x 766 | 4.65 | 97 x 66 | [30, 2000] | 0.1% at 3 m, 10% at 15 m | 20 |
| Mynt eye D1000-50/color | 12 | 1920 x 1280 | NA | 64 x 38 | [50, 1000] | <2% | 60 |

Structured light: This paper examines three structured light ranging sensors, which use Light Coding to read the projected infrared pattern and obtain depth information from the deformation of the pattern. Their parameters can be found in Table 6:

Table 6. Structured light.

| Name | Max resolution | Field of view H x V (degrees) | Distance (cm) | Precision |
| --- | --- | --- | --- | --- |
| D435 | 1280 x 720 | 87 x 58 | [28, 300] | <2% at 2 m |
| Kinect V1 | 640 x 480 | 57 x 43 | [40, 450] | <5% |
| Orbbec Astra | 1280 x 1024 | 58.4 x 45.5 | [60, 800] | ±(1~3) mm at 1 m |

Infrared ranging module (triangulation): Few products are available online and their information is difficult to find, so this paper lists only products whose key parameters could be found (from Sharp and KODENSHI) in Table 7:

Table 7. Infrared ranging module(triangulation).

| Name | Distance (cm) | Precision (cm) | Output voltage (V) | Working voltage (V) |
| --- | --- | --- | --- | --- |
| GP2Y0A02YK0F | [20, 150] | <0.2 [12] | 2.8 | 5.5 |
| ORA1L03-A0 | [10, 150] | <1 | 3.5 | 7 |

3.2.2. Mechanical properties. Representative products of the three triangulation sensors are listed in Table 8:

Table 8. Comparison of mechanical properties of sensors based on triangulation method.

| Sensor type | Binocular vision | Structured light | IRM (triangulation) |
| --- | --- | --- | --- |
| Name | ZED 2 | RealSense D435 | GP2Y0A02YK0F |
| Length (mm) | 175 | 90 | 37 |
| Width (mm) | 30 | 25 | 13 |
| Height (mm) | 33 | 25 | 21.6 |
| Mass (g) | 166 | 210 | 4.8 |
| Price (¥) | About 3255 | About 2276.5 | 22.7 |

4. Limitations analysis and advantages comparison

4.1. The limitations of sensors based on invisible light

For LiDAR and the infrared ranging module (TOF), it is very important to synchronize the transmitter and receiver clocks and to determine the receiver response time. Because light travels so fast, the measured distance will differ significantly from the actual distance if the emission and reception times are poorly calibrated [13]. The demanding time-synchronization hardware therefore makes both light-based distance sensors more expensive than ultrasonic distance measurement modules. In addition, the use of infrared-based sensors is limited in three ways. Firstly, if the reflectivity of the environment is low, as with a dark wall, the maximum detection distance is reduced; if the range is already short and the wheelchair is moving fast, a collision may occur because the braking distance is insufficient. Secondly, when objects with high transparency, such as glass doors, are present, the infrared light may pass straight through or be refracted, resulting in large errors or no return at all, which can be a major hazard for wheelchair users who are visually impaired. Lastly, adverse weather such as heavy rain, snow and fog can degrade distance measurement [14].
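The sensitivity to timing can be made concrete with a short calculation. The sketch below assumes a simple round-trip TOF model, in which a timing error Δt produces a distance error of v·Δt/2, and compares light with sound. The function name and the chosen timing errors are illustrative.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0
SPEED_OF_SOUND_M_S = 340.0  # value used in the text


def range_error_m(timing_error_s: float, speed_m_s: float) -> float:
    """Distance error caused by a timing error in a round-trip TOF measurement."""
    # The signal covers the distance twice, so the error is halved.
    return speed_m_s * timing_error_s / 2.0


if __name__ == "__main__":
    for label, speed in (("light", SPEED_OF_LIGHT_M_S),
                         ("sound", SPEED_OF_SOUND_M_S)):
        for dt_s in (1e-9, 1e-6):
            err = range_error_m(dt_s, speed)
            print(f"{label}: {dt_s:.0e} s timing error -> {err:.3e} m")
```

Under this model a 1 ns timing error already corresponds to roughly 15 cm for light, while the same error is negligible for sound, which is consistent with the stricter timing hardware that optical TOF sensors need.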

The structured light method is affected more strongly by bright light and highly reflective objects: excessively bright light and double images caused by reflection make the projected pattern less visible, so structured light cameras do not perform as well outdoors as other sensors. It is also generally more costly and slower to respond than other sensors because of the extensive steps and calculations required, which are also limitations of the infrared ranging module (triangulation).

4.2. The limitations of sensors based on ultrasound

For ultrasonic distance measurement sensors, the speed of sound depends on temperature according to:

\( a=\sqrt{γRT} \) (6)

\( a \) is the speed of sound, \( T \) is the absolute temperature (not the time difference of Equation (1)), and \( γ \) and \( R \) are constants. Therefore, the sensor needs to be re-calibrated when the ambient temperature changes by more than ±10℃. Because ultrasonic waves have a large scattering angle and poor directionality, the echo is weak in complex surroundings and over long distances, and the sensor often shows large errors there. There is also a minimum range, because the signal that travels directly from the transmitter to the receiver must be rejected as an error. In addition, objects that are too small or made of sound-absorbing materials do not reflect ultrasound effectively and therefore do not produce valid results [9].
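The effect of temperature can be estimated with a short sketch of Equation (6). It assumes dry air, with γ = 1.4 and R ≈ 287 J/(kg·K) as the specific gas constant; these constants, the function names and the example temperatures are assumptions added for illustration and do not come from the cited sources.

```python
import math

GAMMA_AIR = 1.4             # adiabatic index of dry air (assumed)
R_AIR_J_PER_KG_K = 287.0    # specific gas constant of dry air (approximate)


def speed_of_sound_m_s(temp_c: float) -> float:
    """Speed of sound from Equation (6), with T converted to kelvin."""
    return math.sqrt(GAMMA_AIR * R_AIR_J_PER_KG_K * (temp_c + 273.15))


def uncalibrated_error_cm(true_distance_cm: float, calib_temp_c: float,
                          actual_temp_c: float) -> float:
    """Ranging error when the module keeps using the calibration-temperature speed."""
    a_cal = speed_of_sound_m_s(calib_temp_c)
    a_act = speed_of_sound_m_s(actual_temp_c)
    # The echo time is set by the actual speed; the reported distance uses a_cal.
    return true_distance_cm * (a_cal / a_act - 1.0)


if __name__ == "__main__":
    print(f"a(20 C) = {speed_of_sound_m_s(20.0):.1f} m/s")
    err = uncalibrated_error_cm(400.0, calib_temp_c=20.0, actual_temp_c=30.0)
    print(f"error at 400 cm after a 10 C rise: {err:.1f} cm")
```

A negative result means the module under-reports the distance: warmer air carries the echo back sooner than the calibrated speed assumes.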

4.3. The limitation of sensors based on visible light

A binocular vision sensor is a passive vision sensor that requires external light (usually at least 0.1 lux), so it does not work properly at night. In addition, correcting the radial and tangential distortion of the two lenses requires a large number of matrix operations, which is demanding on the software. Finally, to fit the size of the wheelchair, the sensor cannot be made large, which limits its baseline length; the baseline in turn determines the maximum measuring range and accuracy.
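The link between baseline and accuracy can be sketched from Equation (2). Differentiating X = Lf/(y1 - y2) with respect to the disparity gives a first-order depth error of about X²·δ/(L·f) for a disparity error δ. The snippet below evaluates this approximation; the focal length and the one-pixel disparity error are hypothetical values chosen for illustration.

```python
def depth_error_m(distance_m: float, baseline_m: float,
                  focal_length_m: float, disparity_error_m: float) -> float:
    """First-order depth error obtained by differentiating X = L*f/(y1 - y2)."""
    return distance_m ** 2 * disparity_error_m / (baseline_m * focal_length_m)


if __name__ == "__main__":
    focal_length_m = 4e-3      # hypothetical focal length
    pixel_m = 2e-6             # one 2 um pixel of disparity error (assumed)
    for baseline_m in (0.06, 0.12):
        for distance_m in (1.0, 3.0):
            err = depth_error_m(distance_m, baseline_m, focal_length_m, pixel_m)
            print(f"L={baseline_m:.2f} m, X={distance_m:.0f} m "
                  f"-> error of about {err * 100:.2f} cm")
```

In this approximation the error grows with the square of the distance and shrinks in proportion to the baseline, so halving the baseline doubles the depth error at a given range.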

4.4. Advantages comparison

Each type of sensor has its own specific applications. As far as application scenarios are concerned, all of the sensors except the binocular camera can work at night. In addition, because they require less computation, sensors using the TOF method respond faster, which makes them more suitable for complex road conditions and fast-changing environments [15].

In terms of measuring targets, LiDAR performs a planar scan and can quickly locate obstacles at a distance. The structured light method is accurate for objects within 1 m of the wheelchair and can be used to detect obstacles on the ground near the front of the wheelchair. The binocular camera has a larger field of view and can be used together with the ultrasonic distance module to detect obstacles above the wheelchair, such as tree branches and door frames; it can also be mounted directly at the front of the wheelchair for better obstacle avoidance planning.

Both infrared ranging modules are very small and can therefore be installed almost anywhere on the wheelchair without taking up much space or adding significant weight. Although the detection field of view of each such sensor is small, this can be compensated for by using more sensors. A LiDAR often has a field of view of 270 degrees and, if its view is not obstructed, can detect the entire front of an obstacle. It is therefore used to maximum advantage when mounted in the centre of the backrest of the wheelchair.
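How many narrow sensors are needed to make up for a small field of view can be estimated with a simple count. The sketch below assumes identical sensors fanned out from roughly the same point, with an optional angular overlap between neighbours; the function name, the overlap parameter and the 180-degree target are illustrative assumptions.

```python
import math


def sensors_needed(target_fov_deg: float, sensor_fov_deg: float,
                   overlap_deg: float = 0.0) -> int:
    """Number of identical sensors, fanned out side by side, to cover a target angle.

    overlap_deg is the angular overlap between neighbouring sensors (assumed).
    """
    if not 0.0 <= overlap_deg < sensor_fov_deg:
        raise ValueError("overlap must be non-negative and smaller than the sensor FOV")
    if target_fov_deg <= sensor_fov_deg:
        return 1
    step_deg = sensor_fov_deg - overlap_deg
    return 1 + math.ceil((target_fov_deg - sensor_fov_deg) / step_deg)


if __name__ == "__main__":
    # Fields of view taken from Tables 1 and 3; the 180-degree target is hypothetical.
    print("ultrasonic (30 deg):", sensors_needed(180.0, 30.0))
    print("infrared TOF (2 deg):", sensors_needed(180.0, 2.0))
```

The count rises quickly for very narrow beams, which is one reason a single wide-angle LiDAR can be preferable when full frontal coverage is required.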

5. Conclusion

This paper analyses six common sensors on the market, lists their limitations and draws out their advantages. Each of the six has its own strengths and weaknesses. The focus of research into intelligent wheelchair obstacle avoidance systems is how to choose the most efficient sensor for a particular need and how to combine the six sensors so that obstacles are measured accurately while the cost of the wheelchair stays within a reasonable range. In recent years, the cost of higher-technology distance sensors such as LiDAR and binocular depth cameras has fallen gradually as they are produced domestically, so manufacturers can use these sensors while keeping wheelchair costs within reasonable limits. For lower-cost sensors such as ultrasonic distance modules, increasing their number to ensure effective avoidance has become the norm in today's market. It is worth investigating how to optimize performance and reduce the number of sensors by combining them with other kinds of sensors. As technology develops, the performance of each sensor can be expected to improve significantly, so that anyone who needs one can afford a wheelchair with excellent obstacle avoidance capability at a reasonable price.

References

[1]. Wei, Y., et al. Looking at the population development of China in the new era from the seventh census data. J. Xi'an University of Finance and Economics, 2021, 34 (05): 107-121

[2]. Meng, F. Xu, L. Analysis of aging under China's national development strategy. Industrial Science and Technology Innovation, 2020, 30.

[3]. Liang, D., et al. Research status and development trends of mobile robots. Science and Technology Information, 2014, 09.

[4]. Zhang, D. Research on intelligent obstacle avoidance and control strategy of wheelchair robot for safe use. Shenyang University of Technology, 2022. 000295.

[5]. Bai, C. Research on motion control of unmanned wheelchair based on multi-sensor information fusion. Shandong University of Technology, 2022. 000262.

[6]. Simpson R. C., Levine S. P. Automatic adaptation in the NavChair Assistive Wheelchair Navigation System. IEEE Transactions on Rehabilitation Engineering, 1999, 7(4): 452-463.

[7]. https://robotics.sjtu.edu.cn/cpyy/142.html.

[8]. Tian S. Research and implementation of path planning for intelligent wheelchairs based on multi sensor fusion. Tianjin University of Science and Technology, MA thesis. 2021, 2: 2-3.

[9]. Wang G. Research on ultrasonic distance sensor. Heilongjiang University, 2014, 10:20-23.

[10]. Sam V. J., Joris J. J. Dirckx, Real-time structured light profilometry: a review. 2016, 87: 18-31.

[11]. Do Y, Kim J. Infrared Range Sensor Array for 3D Sensing in Robotic Applications. International Journal of Advanced Robotic Systems. 2013, 10(4).

[12]. Ngoc-Thang B., et al. Meas. Sci. Technol. 2022, 33: 075001.

[13]. Luo Z. Research on key technologies of single line omnidirectional Lidar system. Shenzhen University, MA thesis. 2019,01:11.

[14]. Yang F., et al. Simulation analysis of the impact of adverse weather on the performance of frequency modulated continuous wave lidar. Laser and Infrared, 2023,53 (05): 663-669.

[15]. Hu Y. Research on interaction system of mobile service robot for helping the elderly and the disabled based on gesture recognition. Nanjing University of Posts and Telecommunications, 2016, 02: 9.

Cite this article

Wang,C. (2024). Comparative analysis of obstacle avoidance sensors based on assistive intelligent wheel chair. Applied and Computational Engineering,31,9-18.

Data availability

The datasets used and/or analyzed during the current study will be available from the authors upon reasonable request.


About volume

Volume title: Proceedings of the 2023 International Conference on Machine Learning and Automation

© 2024 by the author(s). Licensee EWA Publishing, Oxford, UK. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.
