1. Introduction

With the rapid development of Internet technology, social media platforms have become ubiquitous and have profoundly changed how information is disseminated. They remove the temporal and spatial constraints of traditional information transmission, allowing information to reach every corner of the world almost instantly, and have thus become an important platform for people to obtain information, share ideas and interact with others. However, the rapid expansion of social media has also fueled the proliferation of disinformation, including false news, misleading data, altered images and videos, and exaggerated or one-sided narratives. Disinformation is highly misleading and provocative. It not only distorts public perception and affects individual decision-making but also threatens public safety and social order [1], posing serious hidden risks to the online environment and to social development.

In view of the dangers of disinformation, the World Health Organization and the United Nations issued a joint statement in 2020 calling on people to prevent the spread of false health information by sharing accurate health information [2]. As the online information environment grows increasingly complex, there is an urgent need for public governance of disinformation through social correction mechanisms that make the information society more inclusive. Studies have shown that corrections by social media users reduce the public’s acceptance and dissemination of disinformation [3]. In a social media environment where disinformation is rampant, users are not only receivers of information but also key nodes in its dissemination. Whether users verify disinformation or suspected disinformation, and which factors affect their verification behavior, remain insufficiently explored. Clarifying the factors that influence social media users’ intention to verify disinformation will help curb its spread and promote the high-quality development of the online information ecosystem.

Current academic research on disinformation focuses mainly on its concept [4], detection and recognition techniques [5, 6], propagation mechanisms [7, 8], and risks and governance [9, 10]. Although scholars have studied disinformation systematically and from multiple angles, several key issues require further exploration. Users’ information behaviors toward disinformation, such as dissemination [11], sharing [12] and response [13], have received insufficient attention, and research on disinformation verification behavior in particular remains limited. The few relevant studies mainly analyze user behavior in a specific disinformation context from the perspective of “information verification”. For example, Torres et al. [14] built a news verification behavior model based on cognition and trust theories and found that news communicators’ sharing perception, risk awareness of fake news, source credibility and sharing intention jointly affect the information verification behavior of social network users; by comparing the information dissemination and verification behavior of social media users in India and the United States, Sharma et al. [15] found that information quality and information category significantly affect users’ sharing and verification of information. Most existing studies have used structural equation modeling (SEM) to examine how variables such as attitude, subjective norm, perceived behavioral control, perceived organizational support, trust, information literacy, social media literacy, verification behavior, fear of missing out and risk perception influence social media users’ verification behavior [16-21].

Among these variables, the most frequently studied are the three components of the theory of planned behavior (TPB): attitude, subjective norm and perceived behavioral control. Many scholars have used TPB and its extensions to study the information behavior of social media users. For example, Pundir et al. [18] extended TPB with three variables: consciousness, knowledge and measure anxiety; Alwreikat [19] combined TPB with perceived severity in an empirical study of users’ intention to share disinformation. However, the original TPB framework is not sufficient to fully explain individual behavioral intention across different contexts [22]. It is therefore necessary to extend the model by integrating other variables or theories.

Most previous studies have examined the verification of social media disinformation from a single perspective and have rarely considered it from the dual perspectives of rationality and morality, or self-interest and altruism. In addition, the intersection of “digitization” and “aging” has given rise to new characteristics of the times, and users from different digital generations may behave differently toward social media disinformation. However, existing research focuses mainly on “digital natives” [23, 24] and lacks comparisons across digital generations. This paper therefore integrates the theory of planned behavior and the norm activation model into a comprehensive theoretical framework, examines the mechanism driving social media users’ disinformation verification intention from this dual (rational and moral) perspective, and compares digital generations, in order to inform disinformation governance strategies and targeted incentives that encourage users to proactively verify disinformation.

2. Theoretical Foundation

2.1. Theory of Planned Behavior

The Theory of Planned Behavior (TPB), proposed by Ajzen as an extension of the Theory of Reasoned Action (TRA), is one of the most influential theories of the attitude-behavior relationship in social psychology. Ajzen observed that individual behavior is not entirely under volitional control but also depends on the abilities and resources an individual has for performing the behavior. He therefore added perceived behavioral control to the theory of reasoned action, yielding the widely used theory of planned behavior [25].

The core idea of TPB is that behavioral intention explains a substantial share of the variance in actual behavior, and that intention is jointly determined by attitude toward the behavior (AT), subjective norm (SN) and perceived behavioral control (PBC). From an information-processing perspective, the theory uses an expectancy-value model to explain the general decision-making process underlying individual behavior, which makes it applicable to behaviors that involve deliberation and planning. Since its introduction, TPB has been widely applied in psychology [26], economics [27], management [28] and environmental ecology [29], and its explanatory power has been well supported in studies of user information behavior.

The more favorable an individual’s attitude toward a behavior, and the stronger the subjective norm and perceived behavioral control, the stronger the intention to perform that behavior. Although the three predictors are conceptually independent, they sometimes share a common belief base and are therefore often correlated. Moreover, their relative importance in predicting intention varies across behaviors and contexts.
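As a compact summary of the expectancy-value logic described above, Ajzen’s formulation can be sketched as follows. Here $b_i$ is the strength of a behavioral belief and $e_i$ the evaluation of its outcome, $n_j$ a normative belief and $m_j$ the motivation to comply with that referent, and $c_k$ a control belief and $p_k$ the perceived power of that control factor. The weights $w_1$ to $w_3$ are estimated empirically, which is how their relative importance can vary across behaviors and contexts; the additive intention equation is the simplified form usually estimated with regression or SEM, not a statement of how this study specifies its own model.

```latex
\begin{aligned}
AT &\propto \sum_{i} b_i\, e_i, \qquad
SN \propto \sum_{j} n_j\, m_j, \qquad
PBC \propto \sum_{k} c_k\, p_k,\\
BI &= w_1\, AT + w_2\, SN + w_3\, PBC
\end{aligned}
```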

2.2. Norm Activation Model

The Norm Activation Model (NAM), proposed by Schwartz in 1977 to explain altruistic behavior, aims to predict individuals’ prosocial behavioral intention [30]. Prosocial behavior refers to behavior intended to help others, including various forms of helping, sharing and cooperation [31]. The model holds that moral norms are activated only when certain conditions are met: individuals must clearly recognize the consequences of their behavior and feel responsible for performing it. NAM therefore proposes three antecedents of prosocial behavior: Awareness of Consequences (AC), Ascription of Responsibility (AR) and Personal Norm (PN).

NAM holds that individual prosocial behavior is driven by personal norms, which lead individuals to evaluate a behavior against their own internal values and moral standards; the activation of personal norms depends mainly on the joint effect of awareness of consequences and ascription of responsibility. Early applications of NAM focused on prosocial behaviors such as unpaid blood donation and volunteering [32]. Later, scholars argued that pro-environmental behaviors also benefit others and are thus prosocial, and began applying NAM to pro-environmental behaviors such as green travel, purchasing environmentally friendly products and recycling [31], demonstrating the applicability and explanatory power of NAM in prosocial behavior research. Disinformation verification protects others from harm, keeps them from making wrong decisions based on disinformation, and reduces the adverse effects of disinformation, so it also falls within the category of prosocial behavior. We therefore expect NAM to explain the intention to verify disinformation well.

2.3. Combining the Theory of Planned Behavior and the Norm Activation Model

Several studies have shown that combining TPB with NAM is effective, including in the context of prosocial behavior [33-35]. The two theories differ in three ways. First, NAM emphasizes concern for others, with altruism taking precedence over self-interest, whereas TPB emphasizes personal utility; under NAM, a person may regard a behavior positively because it benefits others, even if the behavior is not necessary for themselves. Second, NAM focuses on internal (personal) norms, whereas TPB focuses on external (social) norms. Third, TPB emphasizes the role of perceived behavioral control in behavior, whereas NAM does not.

Research that relies on a single theory to explore how behavioral intention is formed is inevitably incomplete and constrained. Because factors from both theories can affect behavioral intention, building a more systematic and comprehensive model that integrates them has become a research trend.

3. Research Hypotheses and Model

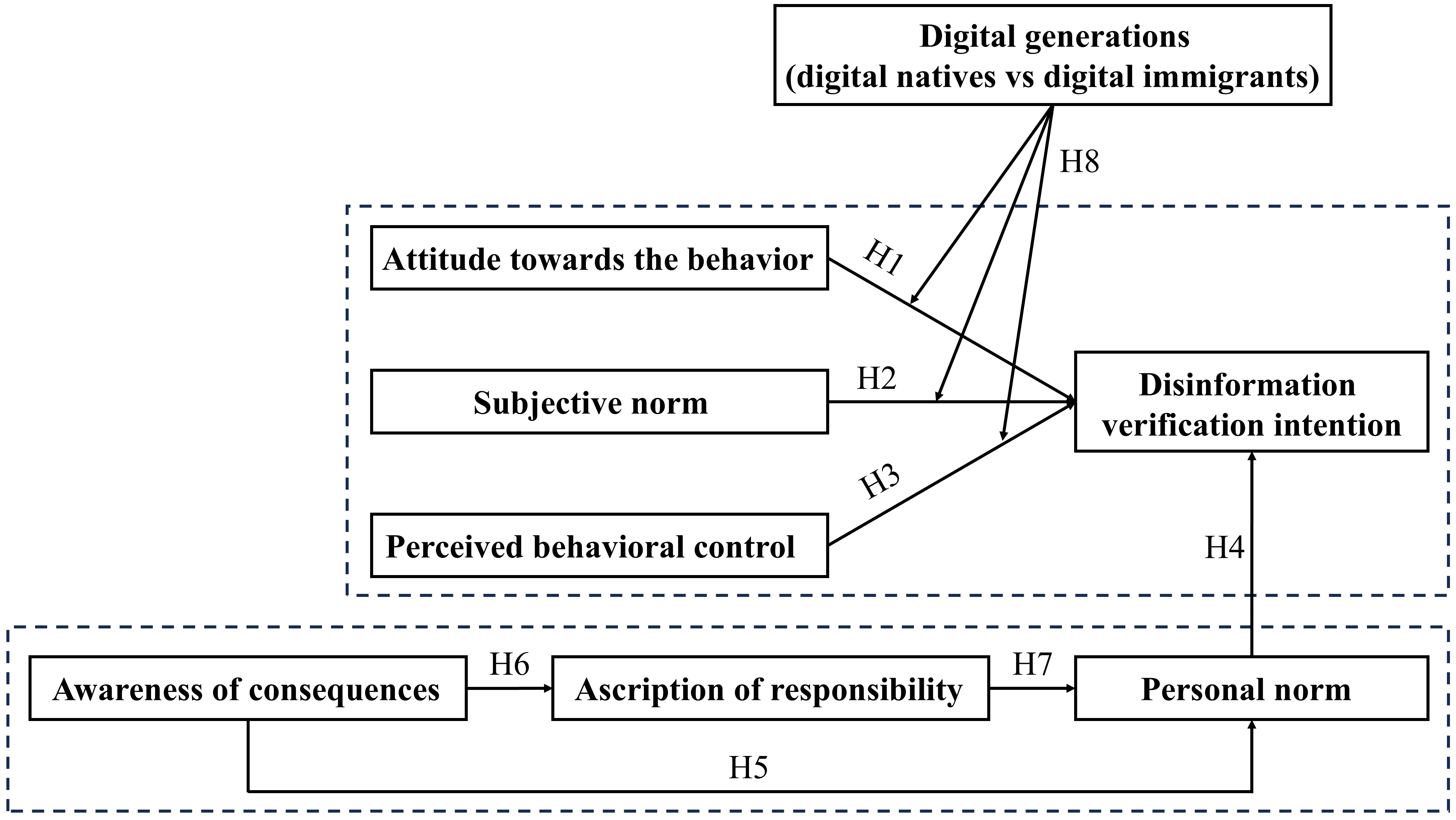

TPB and NAM are both important theories in prosocial behavior research. TPB emphasizes the role of rationality in individual decision-making and reflects self-interest, whereas NAM emphasizes moral motivation and reflects altruism. Disinformation verification itself has both altruistic and self-interested aspects, so neither theory alone can fully explain the mechanism driving social media users’ verification behavior. This study therefore constructs an integrated TPB-NAM model (Figure 1) to provide a more systematic perspective on how social media users’ intention to verify disinformation is formed.

Figure 1. Research model based on the TPB-NAM integration framework.

3.1. Relevant Hypotheses of TPB

In TPB, attitude is one of the effective predictors of behavioral intention. In this study, attitude refers to the degree to which social media users view disinformation verification positively or negatively. When users believe that verifying disinformation helps them improve their own information literacy, avoid being misled and maintain the quality of information on social networks, they are more likely to intend to verify it. Scholars in China and abroad have examined the relationship between attitude and behavioral intention. For example, Pundir et al. [18] found empirically that users’ attitude toward verifying social media information before sharing significantly affects their intention to verify information, and Ding et al. [36] found that a positive attitude toward verifying COVID-19 rumors was positively correlated with the intention to identify rumors. Based on this, we propose the following hypothesis:

H1: Attitude towards the behavior positively affects social media users’ disinformation verification intention.

Subjective norm reflects the influence of social pressure and others’ expectations on individual behavior. In this study, subjective norm refers to the social pressure social media users perceive to verify disinformation, arising either from others’ expectations (e.g., their organization expects them to verify disinformation) or from observing others’ behavior (e.g., seeing friends and colleagues verify disinformation on social media). On social media, users’ behavior is often shaped by the people and the social environment around them. If a user’s social circle places great importance on verifying disinformation, or public opinion advocates active information verification, the user will feel pressure and expectations that prompt them to verify disinformation. Ding et al. [36] found a positive correlation between subjective norm and the intention to verify COVID-19 rumors, and Karnowski et al. [37] found that subjective norm positively affected social media users’ news sharing intention. Based on this research, we propose the following hypothesis:

H2: Subjective norm positively affects social media users’ intention to verify disinformation.

Perceived behavioral control is another key factor in TPB; it reflects a person’s perceived control over their ability to perform a behavior. In this study, perceived behavioral control refers to how easy or difficult social media users perceive it to be to verify disinformation, which is mainly reflected in their assessment of their own ability and resources for doing so. If users feel they have sufficient knowledge, skills and access to verify disinformation, they will be more confident and motivated to verify it. Previous studies have found a correlation between perceived behavioral control and the intention to engage in information security behavior [38, 39]. Applying TPB to social media users’ disinformation verification intention, we propose the following hypothesis:

H3: Perceived behavioral control positively affects the intention of social media users to verify disinformation.

3.2. Relevant Hypotheses of NAM

Personal norm refers to an individual’s perceived moral obligation to perform a specific behavior. It is a sense of personal moral obligation formed by internalizing moral norms, legal provisions and even unwritten norms, and it is an important driver of individual prosocial behavior [40]. NAM holds that once personal norm is activated, the individual is prompted to act prosocially [41]. As an intrinsic motivation, personal norm can stimulate social media users’ sense of responsibility and mission and prompt them to actively verify disinformation. In addition, the studies of Zhao et al. [42] and Tang et al. [43] confirmed that personal norm is an important factor in individuals’ behavior against disinformation, with stronger personal norms encouraging anti-rumor behavior. Verifying disinformation can be regarded as everyone’s responsibility and obligation: the stronger an individual’s personal norm, the stronger their sense of moral obligation to verify disinformation, and the more likely they are to do so. Therefore, we propose the following hypothesis:

H4: Personal norm positively affects social media users’ disinformation verification intention.

Awareness of consequences refers to an individual’s understanding of the negative consequences that failing to perform a specific behavior may have for others or for other things. According to NAM, awareness of consequences has an indirect positive effect on prosocial behavior through personal norm. Many studies have confirmed that awareness of consequences positively affects personal norm. For example, Harland et al. [44] and Zhang et al. [41] studied household environmental protection behavior and employees’ energy-saving behavior in organizations, respectively, and found that awareness of consequences positively affected personal norm. Tang et al. [43] found that perceived consequences significantly and positively affected individuals’ personal norm against disinformation. The spread of disinformation may have various adverse consequences, such as disrupting social order, causing cognitive confusion, degrading the quality of health information and undermining media credibility. When individuals are aware of these consequences, their sense of moral obligation to verify disinformation may be stronger. Therefore, we propose the following hypothesis:

H5: Awareness of consequences positively affects personal norm.

Ascription of responsibility refers to an individual’s sense of being responsible for the adverse consequences of not performing a specific behavior. It is an important factor in individual disinformation verification behavior, and existing studies mainly treat it as a mediator between awareness of consequences and personal norm. For example, Zhao et al. [42] investigated social media users’ rumor-countering behavior in crisis situations and found that awareness of consequences positively affected ascription of responsibility, which in turn positively affected personal norm; Rui et al. [45] found that awareness of consequences can activate personal norm by strengthening ascription of responsibility, which in turn triggers individual COVID-19 mitigation behavior. When individuals realize that failing to verify disinformation will have negative consequences for others, they tend to attribute responsibility to themselves and develop a stronger sense of responsibility. This sense of responsibility reflects the individual’s moral foundation and may further activate personal norm. Therefore, we propose the following hypotheses:

H6: Awareness of consequences positively affects ascription of responsibility.

H7: Ascription of responsibility has a positive impact on personal norm.

3.3. Moderating Effect of Digital Generations

In 2001, Prensky [46] proposed the concepts of digital natives and digital immigrants: digital natives were born and raised after the emergence of the digital network society and generally have relatively strong information technology skills, whereas digital immigrants were born before that era and adopted digital technologies later in life. In Western scholarship, digital natives are mostly defined as those born after 1980. However, because digital technology developed on a different timeline in China, Chinese scholars generally take 1994, the year China gained access to the Internet, as the dividing line between digital generations, and this study adopts the same division. Scholars agree that groups born in the same period tend to share similar ideas and behaviors, while groups from different periods show relatively stable differences [47]. With the spread of the mobile Internet, digital immigrants have become an important part of today’s online information society, so clarifying how the intention to verify social media disinformation differs between digital generations has practical value. By reviewing the literature on digital natives’ use of online media, Lusk [48] found that their online behavior differed from that of digital immigrants. Other studies have likewise shown that value orientations and behavior differ across generations, and generation is often used as a moderating variable. Based on this, we propose the following hypothesis:

H8: Digital generations play a moderating role in the effect of attitude towards the behavior, subjective norm and perceived behavioral control on the intention to verify disinformation.
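As a sketch, hypotheses H1 to H7 can be summarized as structural equations over the latent variables; the coefficient symbols $\gamma$ and $\beta$ and the disturbances $\zeta$ are notation introduced here, not taken from the study. H1 to H7 predict that every coefficient is positive, and the seven paths are the ones reported later in Table 5. For H8, the line below assumes a multi-group formulation in which the TPB paths may differ by digital generation; the section itself does not say how H8 is tested.

```latex
\begin{aligned}
AR &= \gamma_{1}\,AC + \zeta_{1} && \text{(H6)}\\
PN &= \gamma_{2}\,AC + \beta_{1}\,AR + \zeta_{2} && \text{(H5, H7)}\\
VI &= \beta_{2}\,AT + \beta_{3}\,SN + \beta_{4}\,PBC + \beta_{5}\,PN + \zeta_{3} && \text{(H1 to H4)}\\
&\beta_{k}^{(\text{immigrants})} \neq \beta_{k}^{(\text{natives})} \ \text{for at least one } k \in \{2, 3, 4\} && \text{(H8)}
\end{aligned}
```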

4. Research Design

4.1. Questionnaire Design

The questionnaire was designed on the basis of a literature review and expert consultation. It consists of two parts: the first collects respondents’ basic information, and the second measures the factors influencing social media users’ disinformation verification behavior, with each item serving as an observed indicator of a variable in the theoretical model. To ensure accurate and valid measurement, the scale items were adapted from established scales in existing research and modified for the context of this study. The scales are shown in Table 1. All items were measured on a five-point Likert scale, from 1 (“strongly disagree”) to 5 (“strongly agree”).

Table 1. Scale items and literature sources.

| Variables | Item | Item wording | Source |
|---|---|---|---|
| Attitude towards the behavior (AT) | AT1 | I think disinformation verification is a meaningful behavior. | Ajzen [25] and Fishbein [49] |
| | AT2 | I think disinformation verification is a necessary behavior. | |
| | AT3 | I believe that disinformation verification helps to improve the network information security environment. | |
| Subjective Norm (SN) | SN1 | My relatives and friends approve of my verifying disinformation. | Ajzen [25] and Fishbein [49] |
| | SN2 | My group or organization advocates verifying disinformation. | |
| | SN3 | If my colleagues/classmates intend to verify disinformation, I may also intend to do so. | |
| Perceived Behavioral Control (PBC) | PBC1 | It is easy for me to verify disinformation on social media. | Ajzen [25] and Fishbein [49] |
| | PBC2 | Whether I verify disinformation on social media is entirely up to me. | |
| | PBC3 | I feel that I have full control over verifying disinformation on social media. | |
| Awareness of Consequences (AC) | AC1 | I worry that if I do not verify disinformation, others will be misled. | Van der Werff and Steg [50] |
| | AC2 | I am worried that my failure to verify disinformation will have a negative impact on people around me. | |
| | AC3 | If I do not prevent the spread of disinformation, it may exacerbate people’s negative emotions. | |
| | AC4 | If I do not prevent the spread of disinformation, it may have adverse effects. | |
| Ascription of Responsibility (AR) | AR1 | The verification of disinformation is mainly the responsibility of the government and relevant departments. | Steg and De Groot [31] |
| | AR2 | I share a common responsibility to verify disinformation. | |
| | AR3 | Not verifying disinformation is a personal choice and has nothing to do with responsibility. | |
| Personal Norm (PN) | PN1 | I have an obligation to prevent the spread of disinformation. | Van der Werff and Steg [50] |
| | PN2 | I should verify disinformation. | |
| | PN3 | I have an obligation to advise people around me to verify disinformation. | |
| | PN4 | Not verifying disinformation makes me feel guilty. | |
| Verification Intention (VI) | VI1 | When I find information on social media, I check whether it is complete and comprehensive. | Flanagin and Metzger [51] |
| | VI2 | When I find information on social media, I look for other ways to verify it. | |
| | VI3 | When I find information on social media, I consider the author’s purpose in posting it online. | |
| | VI4 | When I find information on social media, I check whether it is up to date. | |

4.2. Data Collection

In the formal survey, the questionnaire was created on the Questionnaire Star platform, and data were collected through snowball referrals among acquaintances and the platform’s data mart service. A total of 600 questionnaires were collected. To balance the digital immigrant and digital native samples for the purposes of this study, 492 valid questionnaires were retained after screening, with a 1:1 ratio of digital natives to digital immigrants. Questionnaires were excluded mainly because respondents had no experience using social media, failed the screening questions, or answered carelessly (e.g., the completion time was too short or the answers to consecutive items were identical).
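The screening rules above can be sketched as a validity filter followed by a generation split. This is a minimal sketch under stated assumptions: the `Response` fields, the 60-second minimum completion time, treating “identical answers” as the same answer on every scale item, classifying respondents born in or after 1994 as digital natives, and balancing by keeping the first k respondents of each group are all hypothetical choices, since the section reports neither its exact cut-offs nor how the 1:1 balance was reached.

```python
from dataclasses import dataclass

# Hypothetical cut-offs: the study does not report its exact thresholds.
MIN_SECONDS = 60.0
NATIVE_FROM_YEAR = 1994  # assumed: born in or after 1994 -> digital native


@dataclass
class Response:
    birth_year: int
    uses_social_media: bool
    passed_screening: bool
    seconds: float          # completion time
    likert: list[int]       # answers to the scale items, coded 1-5


def is_valid(r: Response) -> bool:
    """Apply the exclusion reasons listed in Section 4.2."""
    if not r.uses_social_media or not r.passed_screening:
        return False
    if r.seconds < MIN_SECONDS:        # answered too fast
        return False
    if len(set(r.likert)) == 1:        # straight-lining: one answer everywhere
        return False
    return True


def generation(r: Response) -> str:
    return "native" if r.birth_year >= NATIVE_FROM_YEAR else "immigrant"


def screen_and_balance(responses: list[Response]):
    """Keep valid responses, then trim both groups to the same size (1:1)."""
    valid = [r for r in responses if is_valid(r)]
    natives = [r for r in valid if generation(r) == "native"]
    immigrants = [r for r in valid if generation(r) == "immigrant"]
    k = min(len(natives), len(immigrants))
    return natives[:k], immigrants[:k]
```

Trimming by list order is only one way to reach a 1:1 ratio; random subsampling within the larger group would avoid any dependence on response order.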

4.3. Data Analysis

4.3.1. Reliability and Validity Test

Before estimating the structural equation model (SEM), the reliability and validity of the scale were tested using SPSS 27.0 and AMOS 27.0. As shown in Table 2, the Cronbach’s α values of all variables exceed 0.7, meeting the acceptance criterion. Composite reliability (CR) values range from 0.786 to 0.844, above the 0.7 threshold, indicating good reliability. All factor loadings exceed 0.6, and the average variance extracted (AVE) of the latent variables ranges from 0.520 to 0.626, meeting the minimum requirement of 0.5 and indicating good convergent validity. Table 3 reports the discriminant validity test: the square root of each latent variable’s AVE is greater than the absolute value of its correlations with the other latent variables, indicating good discriminant validity. In summary, the measurement instrument passed the reliability and validity tests.

Table 2. Reliability and validity analysis.

| Variables | Item | Factor loading | Cronbach’s α | CR | AVE |
|---|---|---|---|---|---|
| AT | AT1 | 0.734 | 0.804 | 0.806 | 0.580 |
| | AT2 | 0.771 | | | |
| | AT3 | 0.779 | | | |
| SN | SN1 | 0.783 | 0.784 | 0.786 | 0.551 |
| | SN2 | 0.756 | | | |
| | SN3 | 0.684 | | | |
| PBC | PBC1 | 0.771 | 0.807 | 0.807 | 0.583 |
| | PBC2 | 0.730 | | | |
| | PBC3 | 0.788 | | | |
| AC | AC1 | 0.751 | 0.844 | 0.844 | 0.575 |
| | AC2 | 0.742 | | | |
| | AC3 | 0.754 | | | |
| | AC4 | 0.785 | | | |
| AR | AR1 | 0.799 | 0.833 | 0.834 | 0.626 |
| | AR2 | 0.794 | | | |
| | AR3 | 0.780 | | | |
| PN | PN1 | 0.708 | 0.834 | 0.840 | 0.567 |
| | PN2 | 0.790 | | | |
| | PN3 | 0.793 | | | |
| | PN4 | 0.718 | | | |
| VI | VI1 | 0.713 | 0.820 | 0.812 | 0.520 |
| | VI2 | 0.720 | | | |
| | VI3 | 0.681 | | | |
| | VI4 | 0.765 | | | |
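The reliability statistics in Table 2 follow standard formulas, sketched below for standardized loadings $\lambda_i$: CR $= (\sum\lambda_i)^2 / [(\sum\lambda_i)^2 + \sum(1-\lambda_i^2)]$ and AVE $= \sum\lambda_i^2 / k$, with Cronbach’s α computed from raw item scores. This is an illustration, not the study’s SPSS/AMOS procedure, and the CR formula assumes uncorrelated measurement errors. Applied to the published AT loadings it gives roughly 0.805 and 0.580, close to the reported 0.806 and 0.580; the small CR gap is consistent with the loadings being rounded to three decimals. α cannot be recomputed here because the raw responses are not published.

```python
from statistics import variance


def composite_reliability(loadings):
    """CR from standardized loadings, assuming uncorrelated errors."""
    s = sum(loadings)
    error = sum(1 - l * l for l in loadings)  # error variance of each item
    return s * s / (s * s + error)


def average_variance_extracted(loadings):
    return sum(l * l for l in loadings) / len(loadings)


def cronbach_alpha(items):
    """items: k lists of item scores, one list per item, n respondents each."""
    k = len(items)
    totals = [sum(scores) for scores in zip(*items)]  # scale total per respondent
    item_var = sum(variance(col) for col in items)
    return k / (k - 1) * (1 - item_var / variance(totals))


at_loadings = [0.734, 0.771, 0.779]  # AT1-AT3 from Table 2
print(round(composite_reliability(at_loadings), 3),
      round(average_variance_extracted(at_loadings), 3))
```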

Table 3. Discriminant validity test results.

| Variables | AT | SN | PBC | AC | AR | PN | VI |
|---|---|---|---|---|---|---|---|
| AT | 0.762 | | | | | | |
| SN | 0.419 | 0.742 | | | | | |
| PBC | 0.453 | 0.453 | 0.764 | | | | |
| AC | 0.451 | 0.594 | 0.490 | 0.758 | | | |
| AR | 0.278 | 0.312 | 0.438 | 0.401 | 0.791 | | |
| PN | 0.400 | 0.421 | 0.525 | 0.586 | 0.363 | 0.753 | |
| VI | 0.655 | 0.454 | 0.658 | 0.542 | 0.385 | 0.637 | 0.721 |

Note: the number on the diagonal is the square root value of the latent variable AVE, and the number below the diagonal is the correlation coefficient between the latent variables.
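The discriminant validity rule applied to Table 3 (the Fornell-Larcker criterion) can be checked mechanically: each correlation must be smaller in absolute value than the square roots of the AVEs of both constructs involved. The sketch below uses the AVE values from Table 2 and the correlations from Table 3; the function and variable names are illustrative.

```python
import math

constructs = ["AT", "SN", "PBC", "AC", "AR", "PN", "VI"]
ave_values = {"AT": 0.580, "SN": 0.551, "PBC": 0.583, "AC": 0.575,
              "AR": 0.626, "PN": 0.567, "VI": 0.520}
# Below-diagonal correlations from Table 3: row "X" lists corr(X, Y)
# for every construct Y that precedes X in `constructs`.
lower = {
    "SN": [0.419],
    "PBC": [0.453, 0.453],
    "AC": [0.451, 0.594, 0.490],
    "AR": [0.278, 0.312, 0.438, 0.401],
    "PN": [0.400, 0.421, 0.525, 0.586, 0.363],
    "VI": [0.655, 0.454, 0.658, 0.542, 0.385, 0.637],
}


def fornell_larcker_violations(names, ave, lower):
    """Return (row, col, r) where |r| is not below both square roots of AVE."""
    violations = []
    for row in names[1:]:
        for col, r in zip(names, lower[row]):
            limit = min(math.sqrt(ave[row]), math.sqrt(ave[col]))
            if abs(r) >= limit:
                violations.append((row, col, r))
    return violations


print(fornell_larcker_violations(constructs, ave_values, lower) or "criterion met")
```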

4.3.2. Model Fit Test

Model fit indicates how well the theoretical model is consistent with the data. Fit was assessed with absolute fit indices (CMIN/DF, RMSEA, GFI, AGFI), incremental fit indices (CFI, IFI, TLI) and parsimonious fit indices (PGFI, PNFI). As shown in Table 4, all indices meet their recommended thresholds, indicating acceptable model fit.

Table 4. Model fit test results.

| Index category | Fit index | Recommended threshold | Model value | Assessment |
|---|---|---|---|---|
| Absolute fit indices | CMIN/DF | < 3.0 | 2.245 | ideal |
| | RMSEA | < 0.08 | 0.050 | ideal |
| | GFI | > 0.9 | 0.918 | ideal |
| | AGFI | > 0.8 | 0.897 | ideal |
| Incremental fit indices | CFI | > 0.9 | 0.943 | ideal |
| | IFI | > 0.9 | 0.944 | ideal |
| | TLI | > 0.9 | 0.935 | ideal |
| Parsimonious fit indices | PGFI | > 0.5 | 0.731 | ideal |
| | PNFI | > 0.5 | 0.782 | ideal |
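Because Table 4 reports only the ratio CMIN/DF, RMSEA can be cross-checked from it with the usual point-estimate formula $\mathrm{RMSEA} = \sqrt{\max(\chi^2 - df, 0)/(df\,(N-1))} = \sqrt{\max(\chi^2/df - 1, 0)/(N-1)}$. The sketch assumes the model was fitted to all $N = 492$ valid responses and uses $N - 1$; some programs use $N$ instead, which here changes the result only beyond the third decimal. With these inputs it returns about 0.050, matching the reported value.

```python
import math


def rmsea_from_ratio(cmin_df: float, n: int) -> float:
    """Point-estimate RMSEA from chi2/df and sample size N (N - 1 convention)."""
    # sqrt(max(chi2 - df, 0) / (df * (N - 1))) rewritten in terms of chi2/df
    return math.sqrt(max(cmin_df - 1.0, 0.0) / (n - 1))


print(f"{rmsea_from_ratio(2.245, 492):.3f}")
```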

4.3.3. Common Method Bias

Common method bias refers to artificial systematic covariation caused by the same data source or rater, the same measurement environment, the item context, or characteristics of the items themselves. It is common in empirical research, especially in self-report data, and when severe it may undermine the accuracy of the results. Because this study collected data only through questionnaires, common-source bias may be present. Harman’s single-factor test was therefore used to check whether common method bias in the sample is serious. The largest single factor explains 33.78% of the variance, below the 50% threshold, indicating no serious common method bias, so the data can be used in subsequent analyses.
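Harman’s test is usually run as an unrotated exploratory factor analysis of all items; when principal components are extracted from the item correlation matrix, the share of variance captured by the first component equals its eigenvalue divided by the number of items. The pure-Python sketch below assumes that principal-components variant (principal axis factoring would give a somewhat different figure) and finds the largest eigenvalue by power iteration; the example matrix is illustrative, not study data. Under the same assumption, and with the 24 items in Table 1, the reported 33.78% corresponds to a first eigenvalue of roughly 8.1.

```python
import math


def largest_eigenvalue(matrix, iterations=1000, tol=1e-12):
    """Power iteration for the dominant eigenvalue of a symmetric PSD matrix."""
    n = len(matrix)
    # Unequal starting weights make it unlikely the start is orthogonal
    # to the dominant eigenvector (e.g. for negatively correlated items).
    v = [1.0 / (i + 1) for i in range(n)]
    lam = 0.0
    for _ in range(iterations):
        w = [sum(matrix[i][j] * v[j] for j in range(n)) for i in range(n)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
        # Rayleigh quotient v^T A v for the unit vector v
        av = [sum(matrix[i][j] * v[j] for j in range(n)) for i in range(n)]
        new_lam = sum(a * b for a, b in zip(v, av))
        if abs(new_lam - lam) < tol:
            return new_lam
        lam = new_lam
    return lam


def first_factor_share(corr):
    """Variance share of the first unrotated component of a correlation matrix."""
    # The trace of a correlation matrix equals the number of items.
    return largest_eigenvalue(corr) / len(corr)


example = [[1.0, 0.5, 0.5], [0.5, 1.0, 0.5], [0.5, 0.5, 1.0]]
print(f"{first_factor_share(example):.2%}")
```

Under the decision rule in the text, a share below 50% is read as no serious common method bias.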

4.3.4. Model Hypothesis Testing

Based on the theoretical model, a structural equation model was constructed and its paths were tested in AMOS 27.0. Table 5 shows the results. Of hypotheses H1 to H7, all except H2 are supported, because the path from subjective norm to verification intention is not significant. Specifically, social media users’ attitude toward the behavior, perceived behavioral control and personal norm significantly and positively affect their intention to verify disinformation; awareness of consequences significantly and positively affects ascription of responsibility and personal norm; and ascription of responsibility significantly and positively affects personal norm. Subjective norm has no significant effect on the intention to verify disinformation.

Table 5. Path coefficient test results.

| Path | Standardized path coefficient | S.E. | C.R. | P | Significance |
|---|---|---|---|---|---|
| AC→AR | 0.431 | 0.064 | 7.845 | *** | significant |
| AC→PN | 0.562 | 0.055 | 9.413 | *** | significant |
| AR→PN | 0.124 | 0.042 | 2.368 | 0.018 | significant |
| AT→VI | 0.388 | 0.041 | 6.948 | *** | significant |
| SN→VI | 0.013 | 0.044 | 0.244 | 0.807 | non-significant |
| PBC→VI | 0.324 | 0.037 | 5.797 | *** | significant |
| PN→VI | 0.345 | 0.040 | 6.972 | *** | significant |

Note: *** p < 0.001.
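In AMOS output, C.R. (critical ratio) is the unstandardized estimate divided by its standard error, and P is an approximate two-tailed probability from the standard normal distribution. This is why C.R. in Table 5 cannot be reproduced by dividing the standardized coefficient by S.E. The short sketch below recovers the reported P values from C.R. under that normal approximation; for AR→PN and SN→VI it gives about 0.018 and 0.807.

```python
import math


def two_tailed_p(cr: float) -> float:
    """Two-tailed p-value for a critical ratio treated as a standard normal z."""
    return math.erfc(abs(cr) / math.sqrt(2))


for path, cr in [("AR->PN", 2.368), ("SN->VI", 0.244), ("AC->PN", 9.413)]:
    print(path, f"{two_tailed_p(cr):.3f}")
```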

4.3.5. Mediation Effect Test

The bootstrap method was used to test the mediating effects between awareness of consequences and disinformation verification intention. Following the recommendation of Preacher et al., the number of bootstrap resamples was set to 1000 and the confidence level to 95%; an effect is considered significant when its confidence interval does not contain 0. As shown in Table 6, the direct, indirect and total effects of social media users’ awareness of consequences on verification intention are all significant, indicating that personal norm partially mediates this relationship. Table 7 reports the chain mediation results. The total effect of awareness of consequences on disinformation verification intention is 0.342. The chain indirect effect through ascription of responsibility and then personal norm is 0.016, accounting for 4.68% of the total effect; the simple indirect effect through ascription of responsibility is 0.0306 (8.95%); and the simple indirect effect through personal norm is 0.1247 (36.46%). All three indirect paths are significant: awareness of consequences → ascription of responsibility → verification intention, awareness of consequences → personal norm → verification intention, and awareness of consequences → ascription of responsibility → personal norm → verification intention.
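The percentile bootstrap logic can be sketched for a single mediator (X → M → Y, e.g. AC → PN → VI): resample respondents with replacement, re-estimate the indirect effect a × b each time with ordinary least squares, and read off the 2.5th and 97.5th percentiles. This is a minimal sketch, not the software the study used (which the section does not name); it works on observed scores, ignores covariates, and is exercised only on synthetic data. Extending it to the chain model in Table 7 would add AR as a second mediator.

```python
import random


def _mean(values):
    return sum(values) / len(values)


def slope(x, y):
    """OLS slope of y on x (path a when y is the mediator)."""
    mx, my = _mean(x), _mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx


def mediator_coef(x, m, y):
    """Coefficient of m in the OLS regression y ~ x + m (path b)."""
    mx, mm, my = _mean(x), _mean(m), _mean(y)
    sxx = sum((a - mx) ** 2 for a in x)
    smm = sum((b - mm) ** 2 for b in m)
    sxm = sum((a - mx) * (b - mm) for a, b in zip(x, m))
    sxy = sum((a - mx) * (c - my) for a, c in zip(x, y))
    smy = sum((b - mm) * (c - my) for b, c in zip(m, y))
    # Solve the 2x2 normal equations for the m coefficient (Cramer's rule).
    return (sxx * smy - sxm * sxy) / (sxx * smm - sxm ** 2)


def indirect_effect(x, m, y):
    return slope(x, m) * mediator_coef(x, m, y)


def bootstrap_indirect(x, m, y, resamples=1000, level=0.95, seed=1):
    """Point estimate and percentile bootstrap CI for the indirect effect a*b."""
    rng = random.Random(seed)
    n = len(x)
    draws = []
    for _ in range(resamples):
        idx = [rng.randrange(n) for _ in range(n)]  # resample respondents
        draws.append(indirect_effect([x[i] for i in idx],
                                     [m[i] for i in idx],
                                     [y[i] for i in idx]))
    draws.sort()
    k = round((1 - level) / 2 * resamples)  # draws cut from each tail
    return indirect_effect(x, m, y), draws[k], draws[resamples - 1 - k]
```

As in the text, the indirect effect is judged significant when the returned interval excludes 0.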

Table 6. Analysis of the mediating role of personal norm between awareness of consequences and verification intention.

Effect types | Effect values | Standard error | Bootstrap 95% CI lower limit | Bootstrap 95% CI upper limit | Proportion of total effect |

Direct effect | 0.1926 | 0.0323 | 0.1290 | 0.2561 | 56.32% |

Indirect effect | 0.1494 | 0.0283 | 0.0962 | 0.2088 | 43.68% |

Total effect | 0.3420 | 0.0306 | 0.2819 | 0.4021 | |

Table 7. Chain mediating effect analysis.

Paths | Effect values | Standard error | Bootstrap 95% CI lower limit | Bootstrap 95% CI upper limit | Proportion of total effect |

Total effect | 0.3420 | 0.0306 | 0.2819 | 0.4021 | |

Direct effect: AC→VI | 0.1707 | 0.0329 | 0.1061 | 0.2353 | 49.91% |

Total indirect effect | 0.1713 | 0.0300 | 0.1146 | 0.2317 | 50.09% |

ind1: AC→AR→VI | 0.0306 | 0.0119 | 0.0087 | 0.0552 | 8.95% |

ind2: AC→PN→VI | 0.1247 | 0.0262 | 0.0770 | 0.1800 | 36.46% |

ind3: AC→AR→PN→VI | 0.0160 | 0.0052 | 0.0070 | 0.0273 | 4.68% |
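The effect decomposition in Tables 6 and 7 can be checked with simple arithmetic: in a linear mediation model the total effect equals the direct effect plus the sum of the specific indirect effects, and each proportion is an effect divided by the total effect. The sketch below uses plain Python with values copied from the two tables; the dictionary keys are only labels.

```python
TOTAL = 0.3420  # total effect of AC on VI (Tables 6 and 7)


def share(effect: float, total: float = TOTAL) -> float:
    """Effect as a percentage of the total effect, rounded to two decimals."""
    return round(100 * effect / total, 2)


# Table 6: single mediator (personal norm)
table6 = {"direct": 0.1926, "indirect": 0.1494}

# Table 7: chain mediation model
table7 = {
    "direct: AC->VI": 0.1707,
    "ind1: AC->AR->VI": 0.0306,
    "ind2: AC->PN->VI": 0.1247,
    "ind3: AC->AR->PN->VI": 0.0160,
}

for table in (table6, table7):
    # Direct effect plus all indirect effects should recover the total effect
    # (the tolerance allows for four-decimal rounding in the published tables).
    assert abs(sum(table.values()) - TOTAL) < 1e-3

shares6 = {name: share(value) for name, value in table6.items()}
shares7 = {name: share(value) for name, value in table7.items()}
shares7["total indirect"] = share(TOTAL - table7["direct: AC->VI"])
print(shares6)
print(shares7)
```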

4.3.6. Difference Test of Digital Generations in Each Dimension

To test for differences between digital generations in each dimension, this study coded digital immigrants as 1 and digital natives as 2 and conducted an independent-samples t-test on each dimension. As Table 8 shows, digital natives and digital immigrants differ significantly in six dimensions: attitude toward the behavior, subjective norm, perceived behavioral control, awareness of consequences, personal norm, and verification intention, with digital natives scoring higher in each. The difference in ascription of responsibility is not significant (p = 0.161).

Table 8. Differences between digital generations in each dimension (independent-samples t-tests).

Variables | Digital generations | N | Mean | Standard deviation | t value | p value | Comparison result |

AT | digital immigrants | 246 | 3.949 | 0.913 | -3.083 | 0.002 | digital natives > digital immigrants |

AT | digital natives | 246 | 4.192 | 0.830 | | | |

SN | digital immigrants | 246 | 3.643 | 0.850 | -4.406 | <0.001 | digital natives > digital immigrants |

SN | digital natives | 246 | 3.985 | 0.869 | | | |

PBC | digital immigrants | 246 | 3.340 | 0.945 | -5.661 | <0.001 | digital natives > digital immigrants |

PBC | digital natives | 246 | 3.803 | 0.869 | | | |

AC | digital immigrants | 246 | 3.635 | 0.853 | -2.777 | 0.006 | digital natives > digital immigrants |

AC | digital natives | 246 | 3.858 | 0.931 | | | |

AR | digital immigrants | 246 | 3.276 | 1.063 | -1.402 | 0.161 | / |

AR | digital natives | 246 | 3.406 | 0.993 | | | |

PN | digital immigrants | 246 | 3.797 | 0.872 | -2.930 | 0.004 | digital natives > digital immigrants |

PN | digital natives | 246 | 4.009 | 0.722 | | | |

VI | digital immigrants | 246 | 3.896 | 0.707 | -4.849 | <0.001 | digital natives > digital immigrants |

VI | digital natives | 246 | 4.188 | 0.625 | | | |
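Because both groups have 246 respondents, the t values in Table 8 can be reproduced from the reported means and dispersions alone. The sketch below does this in plain Python under two assumptions: the dispersion column holds sample standard deviations (only this reading reproduces the reported t values), and p-values are approximated with the standard normal distribution rather than the exact t distribution with 490 degrees of freedom. Small discrepancies from Table 8 reflect rounding of the published means.

```python
import math


def pooled_t(mean1, sd1, n1, mean2, sd2, n2):
    """Student's independent-samples t statistic (pooled variance) and its degrees of freedom."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se_diff = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean1 - mean2) / se_diff, df


def two_sided_p_normal(t):
    """Two-sided p-value from the standard normal approximation (adequate for df near 490)."""
    return math.erfc(abs(t) / math.sqrt(2))


N = 246  # respondents per group
# (mean, SD) for digital immigrants (coded 1) and digital natives (coded 2), from Table 8
TABLE8 = {
    "AT": ((3.949, 0.913), (4.192, 0.830)),
    "SN": ((3.643, 0.850), (3.985, 0.869)),
    "PBC": ((3.340, 0.945), (3.803, 0.869)),
    "AC": ((3.635, 0.853), (3.858, 0.931)),
    "AR": ((3.276, 1.063), (3.406, 0.993)),
    "PN": ((3.797, 0.872), (4.009, 0.722)),
    "VI": ((3.896, 0.707), (4.188, 0.625)),
}

results = {}
for name, ((m1, s1), (m2, s2)) in TABLE8.items():
    t, df = pooled_t(m1, s1, N, m2, s2, N)
    results[name] = (round(t, 2), df, round(two_sided_p_normal(t), 3))

for name, (t, df, p) in results.items():
    print(f"{name}: t({df}) = {t}, p ~ {p}")
```

With equal group sizes the pooled (Student) and Welch statistics coincide, so the choice between them does not matter here; an exact p-value would need the t distribution, for example from SciPy.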

4.3.7. Moderating Effect Test

In empirical research, a moderating effect is usually tested by introducing the product of the independent variable and the moderator (independent variable × moderator) as an interaction term; when these variables are latent, a latent interaction structural equation model is estimated. A significant path coefficient for the interaction term indicates that moderation exists. This study examines whether digital generation moderates the effects of attitude toward the behavior, subjective norm, and perceived behavioral control on verification intention. As Tables 9-11 show, only the interaction between perceived behavioral control and digital generation is significant (effect = 0.1268, p = 0.0279); the interactions with attitude toward the behavior (p = 0.0667) and subjective norm (p = 0.2119) are not. H8 is therefore partially supported. Table 12 reports the conditional effect of perceived behavioral control on verification intention in each group: the effect is 0.4373 (t = 10.33) for digital natives and 0.3105 (t = 7.99) for digital immigrants, so perceived behavioral control translates into verification intention more strongly among digital natives. This may be because digital natives grew up in the digital age: they are more familiar with digital technology, acquire and process information faster, have wider social circles, and have been shaped by modern education.
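The interaction-term logic can be illustrated with an observed-score regression. This is a simplified sketch, not the latent interaction SEM mentioned above: it uses simulated data (hypothetical names `pbc`, `group`, and `vi`, with a true interaction coefficient set to 0.3), codes the moderator 1/2 as in Section 4.3.6, fits ordinary least squares with NumPy, and reads moderation off the t statistic of the product term.

```python
import numpy as np

rng = np.random.default_rng(7)
n_per_group = 246
group = np.repeat([1.0, 2.0], n_per_group)   # 1 = digital immigrants, 2 = digital natives
n = group.size
pbc = rng.normal(3.6, 0.9, size=n)           # simulated perceived behavioral control
noise = rng.normal(0.0, 0.5, size=n)
# Simulated outcome whose PBC slope depends on group: slope = 0.2 + 0.3 * group
vi = 1.5 + 0.2 * pbc - 0.3 * group + 0.3 * pbc * group + noise

# Design matrix: intercept, predictor, moderator, and their product (the interaction term)
X = np.column_stack([np.ones(n), pbc, group, pbc * group])
coefs, *_ = np.linalg.lstsq(X, vi, rcond=None)

# Conventional OLS standard errors: sigma^2 * (X'X)^-1
resid = vi - X @ coefs
sigma2 = resid @ resid / (n - X.shape[1])
se = np.sqrt(np.diag(sigma2 * np.linalg.inv(X.T @ X)))
t_values = coefs / se

b_interaction, t_interaction = coefs[3], t_values[3]
# Simple slope of PBC in each group: b_PBC + b_interaction * group_code
simple_slopes = {code: coefs[1] + coefs[3] * code for code in (1, 2)}
print(f"interaction = {b_interaction:.3f} (t = {t_interaction:.2f}), slopes = {simple_slopes}")
```

A significant product-term coefficient means the slope of PBC differs between the two groups; the simple slopes make the direction of that difference explicit.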

Table 9. Moderating effect of digital generation on the relationship between attitude toward the behavior and verification intention.

Variable type | Variable | Effect | SE | t | p | LLCI | ULCI |

Constant | | 2.7537 | 0.3719 | 7.4054*** | <0.0001 | 2.0231 | 3.4844 |

Independent variable | AT | 0.2437 | 0.0906 | 2.6910** | 0.0074 | 0.0658 | 0.4217 |

Moderator | Digital generation | -0.2483 | 0.2464 | -1.0079 | 0.3140 | -0.7324 | 0.2357 |

Interaction term | AT × digital generation | 0.1084 | 0.0590 | 1.8377 | 0.0667 | -0.0075 | 0.2244 |

Dependent variable: verification intention.

Table 10. Moderating effect of digital generation on the relationship between subjective norm and verification intention.

Variable type | Variable | Effect | SE | t | p | LLCI | ULCI |

Constant | | 3.2170 | 0.4010 | 8.0226*** | <0.0001 | 2.4291 | 4.0048 |

Independent variable | SN | 0.1345 | 0.1053 | 1.2775 | 0.2020 | -0.0724 | 0.3414 |

Moderator | Digital generation | -0.1119 | 0.2586 | -0.4329 | 0.6653 | -0.6200 | 0.3961 |

Interaction term | SN × digital generation | 0.0827 | 0.0661 | 1.2500 | 0.2119 | -0.0473 | 0.2126 |

Dependent variable: verification intention.

Table 11. Moderating effect of digital generation on the relationship between perceived behavioral control and verification intention.

Variable type | Variable | Effect | SE | t | p | LLCI | ULCI |

Constant | | 3.1936 | 0.3165 | 10.0917*** | <0.0001 | 2.5718 | 3.8154 |

Independent variable | PBC | 0.1837 | 0.0886 | 2.0750* | 0.0385 | 0.0098 | 0.3577 |

Moderator | Digital generation | -0.3345 | 0.2133 | -1.5684 | 0.1174 | -0.7536 | 0.0845 |

Interaction term | PBC × digital generation | 0.1268 | 0.0575 | 2.2058* | 0.0279 | 0.0139 | 0.2397 |

Dependent variable: verification intention.

Table 12. Conditional effect of perceived behavioral control on verification intention for each digital generation.

Digital generation | Effect | SE | t | p | LLCI | ULCI |

Digital immigrants | 0.3105 | 0.0389 | 7.9853*** | <0.0001 | 0.2341 | 0.3870 |

Digital natives | 0.4373 | 0.0423 | 10.3317*** | <0.0001 | 0.3542 | 0.5205 |
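The conditional effects in Table 12 follow directly from the Table 11 coefficients if the moderator enters the regression with its raw 1/2 coding from Section 4.3.6 (digital immigrants = 1, digital natives = 2). That coding is an assumption here, but it reproduces the reported values exactly. Standard errors of the conditional effects would also require the covariance between the two coefficients, which the tables do not report, so the sketch computes point estimates only.

```python
# Coefficients copied from Table 11 (dependent variable: verification intention).
B_PBC = 0.1837          # coefficient of perceived behavioral control
B_INTERACTION = 0.1268  # coefficient of PBC x digital generation

GROUP_CODES = {"digital immigrants": 1, "digital natives": 2}


def conditional_effect(group_code: int) -> float:
    """Simple slope of PBC on VI at a given moderator value."""
    return B_PBC + B_INTERACTION * group_code


effects = {name: round(conditional_effect(code), 4) for name, code in GROUP_CODES.items()}
print(effects)  # {'digital immigrants': 0.3105, 'digital natives': 0.4373}
```

The gap between the two simple slopes equals the interaction coefficient, which is why a significant interaction in Table 11 corresponds to a larger effect for digital natives in Table 12.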

5. Conclusion

Against the background of widespread disinformation, this study reveals, from the perspective of digital generations, the mechanism through which social media users form the intention to verify disinformation, and examines their verification behavior from multiple dimensions. By integrating TPB and NAM, it divides the motivation for users' disinformation verification behavior into "egoism" and "altruism" and constructs a research model on that basis. The questionnaire results show that social media users' attitude toward the behavior, perceived behavioral control, and personal norm have significant positive effects on their intention to verify disinformation. Awareness of consequences has significant positive effects on ascription of responsibility and personal norm, and ascription of responsibility has a significant positive effect on personal norm. Personal norm partially mediates the relationship between awareness of consequences and the intention to verify disinformation. More broadly, awareness of consequences influences verification intention through ascription of responsibility and personal norm along three paths: the simple mediating effect of ascription of responsibility, the simple mediating effect of personal norm, and the chain mediating effect of ascription of responsibility → personal norm. In addition, the difference tests show that digital natives and digital immigrants differ significantly in six dimensions: attitude toward the behavior, subjective norm, perceived behavioral control, awareness of consequences, personal norm, and verification intention.

Digital generation moderates the relationship between perceived behavioral control and the intention to verify disinformation: the effect of perceived behavioral control on verification intention is stronger among digital natives than among digital immigrants. In general, both the "egoistic" and "altruistic" motives of social media users affect their intention to verify disinformation, and the relevant perceptions and intentions differ significantly between digital generations. This study explores the driving mechanism of disinformation verification intention among social media users who hold both kinds of motives, and provides empirical support for applying the integrated TPB and NAM model to research on user information behavior. In practice, it offers a reference, from the perspective of digital generations, for mobilizing users' intention to verify disinformation through the interplay among attitude toward the behavior, subjective norm, perceived behavioral control, awareness of consequences, ascription of responsibility, and personal norm.

References

[1]. Li, L., Hou, L., Deng, S. (2022). Research on influencing factors of network misinformation transmission behaviors in public health emergencies: A case study of Weibo misinformation during the COVID-19. Library and Information Service, 66(9), 4-13.

[2]. Melki, J., Tamim, H., Hadid, D., Farhat, S., Makki, M., Ghandour, L., Hitti, E. (2022). Media exposure and health behavior during pandemics: The mediating effect of perceived knowledge and fear on compliance with COVID-19 prevention measures. Health Communication, 37(5), 586-596.

[3]. Van der Meer, T.G., Jin, Y. (2020). Seeking formula for misinformation treatment in public health crises: The effects of corrective information type and source. Health Communication, 35(5), 560-575.

[4]. Vraga, E.K., Bode, L. (2020). Defining misinformation and understanding its bounded nature: Using expertise and evidence for describing misinformation. Political Communication, 37(1), 136-144.

[5]. Englmeier, K. (2021). The role of text mining in mitigating the threats from fake news and misinformation in times of corona. Procedia Computer Science, 181, 149-156.

[6]. Kumari, R., Ashok, N., Ghosal, T., Ekbal, A. (2022). What the fake? Probing misinformation detection standing on the shoulder of novelty and emotion. Information Processing & Management, 59(1), 102740.

[7]. Cho, J.-H., Rager, S., O’Donovan, J., Adali, S., Horne, B.D. (2019). Uncertainty-based false information propagation in social networks. ACM Transactions on Social Computing, 2(2), 1-34.

[8]. Yang, L., Wang, J., Gao, C., Li, T. (2019). A crisis information propagation model based on a competitive relation. Journal of Ambient Intelligence and Humanized Computing, 10(8), 2999-3009.

[9]. Amoruso, M., Anello, D., Auletta, V., Cerulli, R., Ferraioli, D., Raiconi, A. (2020). Contrasting the spread of misinformation in online social networks. Journal of Artificial Intelligence Research, 69, 847-879.

[10]. Li, J., Chang, X. (2023). Combating misinformation by sharing the truth: A study on the spread of fact-checks on social media. Information Systems Frontiers, 25(4), 1479-1493.

[11]. Oh, H.J., Lee, H. (2019). When do people verify and share health rumors on social media? The effects of message importance, health anxiety, and health literacy. Journal of Health Communication, 24(11), 837-847.

[12]. Xiang, M., Guan, T., Lin, M., Xie, Y., Luo, X., Han, M., Lv, K. (2023). Configuration path study of influencing factors on health information-sharing behavior among users of online health communities: Based on SEM and fsQCA methods. Healthcare, 11(12), 1789.

[13]. Tokita, C.K., Aslett, K., Godel, W.P., Sanderson, Z., Tucker, J.A., Nagler, J., Persily, N., Bonneau, R. (2024). Measuring receptivity to misinformation at scale on a social media platform. PNAS Nexus, 3(10), pgae396.

[14]. Torres, R., Gerhart, N., Negahban, A. (2018). Epistemology in the era of fake news: An exploration of information verification behaviors among social networking site users. ACM SIGMIS Database: The DATABASE for Advances in Information Systems, 49(3), 78-97.

[15]. Sharma, A., Kapoor, P.S. (2022). Message sharing and verification behaviour on social media during the COVID-19 pandemic: A study in the context of India and the USA. Online Information Review, 46(1), 22-39.

[16]. Khan, M.L., Idris, I.K. (2019). Recognise misinformation and verify before sharing: A reasoned action and information literacy perspective. Behaviour & Information Technology, 38(12), 1194-1212.

[17]. Aoun Barakat, K., Dabbous, A., Tarhini, A. (2021). An empirical approach to understanding users' fake news identification on social media. Online Information Review, 45(6), 1080-1096.

[18]. Pundir, V., Devi, E.B., Nath, V. (2021). Arresting fake news sharing on social media: A theory of planned behavior approach. Management Research Review, 44(8), 1108-1138.

[19]. Alwreikat, A. (2022). Sharing of misinformation during COVID-19 pandemic: Applying the theory of planned behavior with the integration of perceived severity. Science & Technology Libraries, 41(2), 133-151.

[20]. Bautista, J.R., Zhang, Y., Gwizdka, J. (2022). Predicting healthcare professionals’ intention to correct health misinformation on social media. Telematics and Informatics, 73, 101864.

[21]. Chen, L., Fu, L. (2022). Let's fight the infodemic: The third-person effect process of misinformation during public health emergencies. Internet Research, 32(4), 1357-1377.

[22]. Bosnjak, M., Ajzen, I., Schmidt, P. (2020). The theory of planned behavior: Selected recent advances and applications. Europe's Journal of Psychology, 16(3), 352-356.

[23]. Benaissa Pedriza, S. (2023). Disinformation perception by digital and social audiences: Threat awareness, decision-making and trust in media organizations. Encyclopedia, 3(4), 1387-1400.

[24]. Jiang, B., Wang, D. (2024). Perception of misinformation on social media among Chinese college students. Frontiers in Psychology, 15, 1416792.

[25]. Ajzen, I. (1991). The theory of planned behavior. Organizational Behavior and Human Decision Processes, 50(2), 179-211.

[26]. Puzzo, G., Prati, G. (2024). Psychological correlates of e-waste recycling intentions and behaviors: A meta-analysis. Resources, Conservation and Recycling, 204, 107462.

[27]. Tanveer, U., Sahara, S.N.A., Kremantzis, M., Ishaq, S. (2025). Integrating circular economy principles into a modified theory of planned behaviour: Exploring customer intentions and experiences with collaborative consumption on Airbnb. Socio-Economic Planning Sciences, 98, 102136.

[28]. Wu, W., Yu, L. (2022). How does personal innovativeness in the domain of information technology promote knowledge workers’ innovative work behavior? Information & Management, 59(6), 103688.

[29]. Huang, Y., Aguilar, F., Yang, J., Qin, Y., Wen, Y. (2021). Predicting citizens’ participatory behavior in urban green space governance: Application of the extended theory of planned behavior. Urban Forestry & Urban Greening, 61, 127110.

[30]. Schwartz, S.H. (1977). Normative influences on altruism. Advances in Experimental Social Psychology, 10, 221-279.

[31]. De Groot, J.I., Steg, L. (2009). Morality and prosocial behavior: The role of awareness, responsibility, and norms in the norm activation model. The Journal of Social Psychology, 149(4), 425-449.

[32]. Steg, L., De Groot, J. (2010). Explaining prosocial intentions: Testing causal relationships in the norm activation model. British Journal of Social Psychology, 49(4), 725-743.

[33]. Teisl, M.F., Noblet, C.L., Rubin, J. (2009). The psychology of eco-consumption. Journal of Agricultural & Food Industrial Organization, 7(2), 1-29.

[34]. Fu, W., Zhou, Y., Li, L., Yang, R. (2021). Understanding household electricity-saving behavior: Exploring the effects of perception and cognition factors. Sustainable Production and Consumption, 28, 116-128.

[35]. Ding, Z., Wen, X., Zuo, J., Chen, Y. (2023). Determinants of contractor’s construction and demolition waste recycling intention in China: Integrating theory of planned behavior and norm activation model. Waste Management, 161, 213-224.

[36]. Ding, X., Zhang, X., Fan, R., Xu, Q., Hunt, K., Zhuang, J. (2022). Rumor recognition behavior of social media users in emergencies. Journal of Management Science and Engineering, 7(1), 36-47.

[37]. Karnowski, V., Leonhard, L., Kümpel, A.S. (2018). Why users share the news: A theory of reasoned action-based study on the antecedents of news-sharing behavior. Communication Research Reports, 35(2), 91-100.

[38]. Lebek, B., Uffen, J., Neumann, M., Hohler, B., Breitner, M.H. (2014). Information security awareness and behavior: A theory-based literature review. Management Research Review, 37(12), 1049-1092.

[39]. Johnson, D.P. (2017). How attitude toward the behavior, subjective norm, and perceived behavioral control affects information security behavior intention, Walden University.

[40]. Han, H. (2014). The norm activation model and theory-broadening: Individuals' decision-making on environmentally-responsible convention attendance. Journal of Environmental Psychology, 40(4), 462-471.

[41]. Zhang, Y., Wang, Z., Zhou, G. (2013). Antecedents of employee electricity saving behavior in organizations: An empirical study based on norm activation model. Energy Policy, 62(11), 1120-1127.

[42]. Zhao, L., Yin, J., Song, Y. (2016). An exploration of rumor combating behavior on social media in the context of social crises. Computers in Human Behavior, 58(5), 25-36.

[43]. Tang, Z., Miller, A.S., Zhou, Z., Warkentin, M. (2022). Understanding rumor combating behavior on social media. Journal of Computer Information Systems, 62(6), 1112-1124.

[44]. Harland, P., Staats, H., Wilke, H.A. (2007). Situational and personality factors as direct or personal norm mediated predictors of pro-environmental behavior: Questions derived from norm-activation theory. Basic and Applied Social Psychology, 29(4), 323-334.

[45]. Rui, J.R., Yuan, S., Xu, P. (2022). Motivating COVID-19 mitigation actions via personal norm: An extension of the norm activation model. Patient Education and Counseling, 105(7), 2504-2511.

[46]. Prensky, M. (2001). Digital natives, digital immigrants part 1. On the Horizon, 9(5), 1-6.

[47]. Wey Smola, K., Sutton, C.D. (2002). Generational differences: Revisiting generational work values for the new millennium. Journal of Organizational Behavior, 23(4), 363-382.

[48]. Lusk, B. (2010). Digital natives and social media behaviors: An overview. Prevention Researcher, 17(5), 3-6.

[49]. Fishbein, M., Hennessy, M., Kamb, M., Bolan, G.A., Hoxworth, T., Iatesta, M., Rhodes, F., Zenilman, J.M., Project RESPECT Study Group (2001). Using intervention theory to model factors influencing behavior change: Project RESPECT. Evaluation & the Health Professions, 24(4), 363-384.

[50]. Van der Werff, E., Steg, L. (2015). One model to predict them all: Predicting energy behaviours with the norm activation model. Energy Research & Social Science, 6, 8-14.

[51]. Flanagin, A.J., Metzger, M.J. (2000). Perceptions of internet information credibility. Journalism & Mass Communication Quarterly, 77(3), 515-540.

Cite this article

Zhao, Y.; Zhang, S. (2025). Research on Social Media Users’ Disinformation Verification Intention from the Perspective of Digital Generations. Journal of Applied Economics and Policy Studies, 17, 38-49.

Data availability

The datasets used and/or analyzed during the current study will be available from the authors upon reasonable request.

Disclaimer/Publisher's Note

The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of EWA Publishing and/or the editor(s). EWA Publishing and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

About volume

Journal: Journal of Applied Economics and Policy Studies

© 2024 by the author(s). Licensee EWA Publishing, Oxford, UK. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license. Authors who publish this series agree to the following terms:

1. Authors retain copyright and grant the series right of first publication with the work simultaneously licensed under a Creative Commons Attribution License that allows others to share the work with an acknowledgment of the work's authorship and initial publication in this series.

2. Authors are able to enter into separate, additional contractual arrangements for the non-exclusive distribution of the series's published version of the work (e.g., post it to an institutional repository or publish it in a book), with an acknowledgment of its initial publication in this series.

3. Authors are permitted and encouraged to post their work online (e.g., in institutional repositories or on their website) prior to and during the submission process, as it can lead to productive exchanges, as well as earlier and greater citation of published work (See Open access policy for details).
