1. Introduction

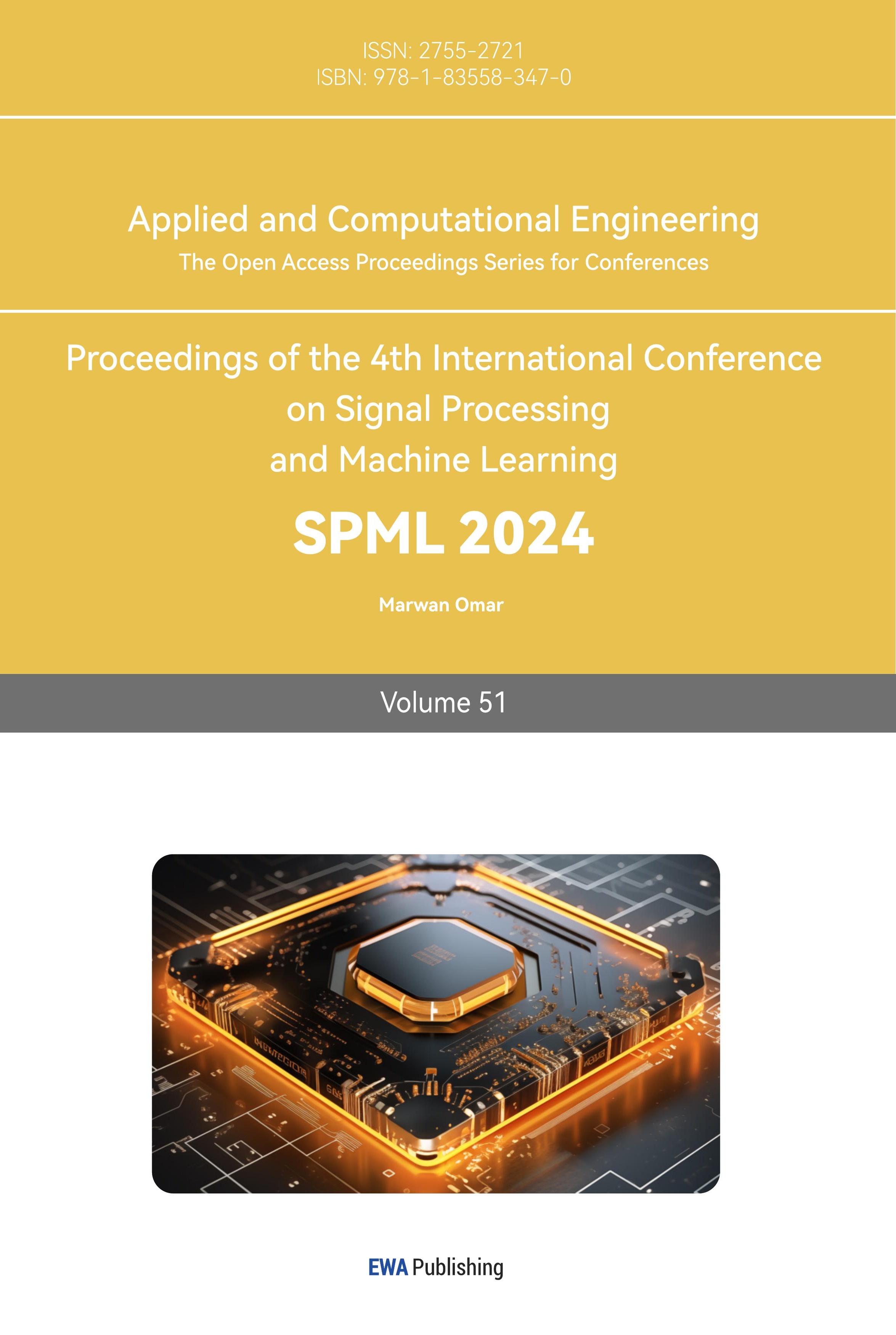

In recent years, the adoption of miniaturized, cost-effective drones has surged across diverse domains, including urban planning and management, disaster monitoring, and virtual tours of attractions. Unlike traditional data acquisition methods such as orthophotography, oblique photography uses drones equipped with five cameras positioned at different viewing angles. This configuration captures building images from one vertical and four oblique perspectives, which makes the resulting model more realistic and fills the traditional gap in portraying the structure and texture of building facades [1]. However, constraints such as flight altitude and shooting angle can still cause loss of data and structural information. Integrating techniques such as Building Information Modeling (BIM) and close-range photography can mitigate these losses and support the construction of more detailed 3D models. Such integration offers useful insights for urban informatization and has both theoretical and practical value, contributing to the progress of smart cities and to data capture in sectors such as agriculture and surveying. An example of oblique photogrammetric data acquisition is shown in Figure 1.

Figure 1. Tilt photogrammetric acquisition data [2]

2. Typical methods and principles

2.1. Basic modeling process

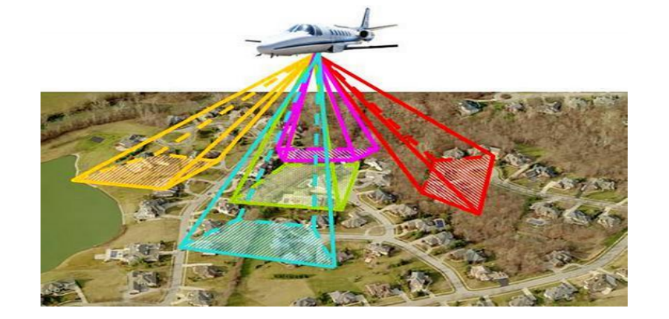

Figure 2. Modeling process (Photo/Picture credit: Original).

The usual UAV oblique photography modeling process is shown in Figure 2 and can be divided into four parts. First, data are acquired with drones. Second, feature points are extracted from the images and matched. Third, the building point cloud is reconstructed. Finally, the real-scene 3D model is generated.

2.2. Data acquisition

The route must be planned before the flight. The observation area is treated as a rectangle and extended beyond its boundary, normally by 75% of the flight altitude. The image overlap should be set to about 80% [2]. To capture general information about the building, drones often fly cross-coverage network routes. Because building roofs have few undulations, orthophotography with a larger overlap is used for the top, while oblique photography is used on the sides, with the camera angle adjusted flexibly to cover the façade [3].
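The planning figures above (about 80% overlap, an extension of 75% of the altitude) translate into simple arithmetic. The following is a minimal sketch, not a flight-planning tool: the function names, the ground footprint of one image, and the choice to apply the extension on both ends of the rectangle are assumptions made for illustration and do not come from [2] or [3].

```python
import math


def exposure_spacing(footprint_m, overlap):
    """Distance between neighbouring exposures for a given fractional overlap."""
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    return footprint_m * (1.0 - overlap)


def extended_side(side_m, altitude_m, extension_ratio=0.75):
    """Side length of the survey rectangle after extension.

    Assumption: the extension (75% of the altitude by default) is applied
    at both ends of the side.
    """
    return side_m + 2.0 * extension_ratio * altitude_m


def flight_line_count(extent_m, line_spacing_m):
    """Number of parallel flight lines needed to span extent_m."""
    return math.ceil(extent_m / line_spacing_m) + 1


if __name__ == "__main__":
    footprint = 100.0  # hypothetical ground footprint of one image, in metres
    altitude = 100.0   # hypothetical flight altitude, in metres
    spacing = exposure_spacing(footprint, 0.80)
    extent = extended_side(200.0, altitude)
    print(spacing, extent, flight_line_count(extent, spacing))
```

A higher overlap shrinks the spacing linearly, so the number of exposures and flight lines grows quickly as the overlap approaches 100%.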

2.3. Image matching

Image matching is a key step in 3D modeling, and its accuracy directly determines the quality of the building model. The scale-invariant feature transform (SIFT) detects key points that are invariant to scale and computes distinctive descriptors for them, but it cannot reliably match points across large affine differences. Compared with SIFT, affine-SIFT (ASIFT) covers all parameters of the affine transform, which addresses the large differences in viewing angle between drone images [4]. It has two parts: first, the possible camera poses are sampled multiple times to obtain a series of simulated affine images; then the SIFT algorithm is applied to complete feature matching.

2.3.1. Construct an affine model

The ASIFT algorithm starts from image transformations and simulates the affine distortions produced by different viewing angles. A is the basic affine transformation matrix:

$$A=\lambda\begin{bmatrix}\cos\psi & -\sin\psi\\ \sin\psi & \cos\psi\end{bmatrix}\begin{bmatrix}t & 0\\ 0 & 1\end{bmatrix}\begin{bmatrix}\cos\varphi & -\sin\varphi\\ \sin\varphi & \cos\varphi\end{bmatrix} \tag{1}$$

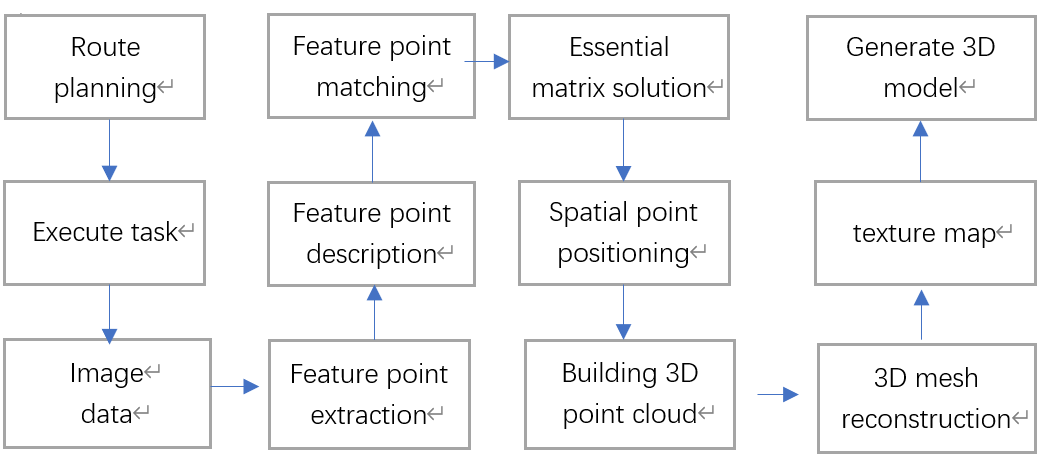

In this matrix, λ is the scaling (zoom) factor of the camera, ψ is the rotation angle of the camera about its optical axis, and t and 1 are the two eigenvalues of the diagonal tilt matrix. The viewing direction of the camera is described by the angles φ ∈ [0, π] and θ = arccos(1/t). The affine transformation model is shown in Figure 3 [5].

Figure 3. Geometric affine model [5]

In Figure 3, the UAV camera is drawn as a quadrilateral in the upper right corner. U represents the object being viewed, λ is the scaling factor, φ and θ are the observation angles, and ψ is the rotation of the camera about its optical axis.
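To make Equation (1) concrete, the sketch below composes A from its three factors in plain Python. It is an illustrative sketch only: the function names are hypothetical, angles are assumed to be in radians, and the tilt is obtained from the latitude through t = 1/cos θ, which is the inverse of the relation θ = arccos(1/t) given above.

```python
import math


def rotation(angle):
    """2x2 rotation matrix for an angle in radians."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c, -s], [s, c]]


def matmul2(a, b):
    """Product of two 2x2 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def tilt_from_latitude(theta):
    """Tilt t corresponding to latitude theta, from theta = arccos(1/t)."""
    return 1.0 / math.cos(theta)


def affine_matrix(lam, psi, t, phi):
    """A = lam * R(psi) * diag(t, 1) * R(phi), as in Equation (1)."""
    tilt = [[t, 0.0], [0.0, 1.0]]
    product = matmul2(rotation(psi), matmul2(tilt, rotation(phi)))
    return [[lam * value for value in row] for row in product]


if __name__ == "__main__":
    t = tilt_from_latitude(math.radians(60.0))
    for row in affine_matrix(1.0, 0.0, t, math.radians(30.0)):
        print(row)
```

Because both rotations have determinant 1, the determinant of A is λ²t, which gives a quick sanity check on any matrix built this way.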

2.3.2. SIFT matching

In image matching, the first task is to build a scale space to enrich the extracted information. The scale space is obtained by convolving the original image with Gaussian functions of varying scale; adjacent levels are subtracted to form the difference of Gaussians, and a Taylor series expansion of the difference of Gaussians is used to interpolate the position of each extremum. Candidate points with low contrast are removed, leaving the more accurate ones. The neighborhood centered on each feature point is then sampled, and a gradient histogram is used to determine the gradient direction of the feature point. Next, a 16×16 pixel window centered on the feature point is divided into sixteen 4×4 sub-windows, and an 8-bin gradient histogram is computed for each, giving 16 × 8 = 128 values that form a 128-dimensional feature descriptor. Descriptors are built for both images, and matching is completed by comparing them, with the Euclidean distance as the similarity measure.
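The final matching step can be sketched as a nearest-neighbor search over descriptors using the Euclidean distance. The sketch below is a brute-force illustration, not the implementation used in the cited work. The function name is hypothetical, and the ratio test (accepting a match only when the best distance is clearly smaller than the second best) is an added assumption borrowed from common SIFT practice rather than something stated in the text.

```python
import math


def match_descriptors(desc_a, desc_b, ratio=0.8):
    """Match each descriptor in desc_a to its nearest neighbour in desc_b.

    Returns a list of (index_in_a, index_in_b) pairs. A pair is kept only
    if the best Euclidean distance is below ratio * second-best distance
    (assumed ratio test); with a single candidate the match is accepted.
    """
    matches = []
    for i, da in enumerate(desc_a):
        dists = sorted((math.dist(da, db), j) for j, db in enumerate(desc_b))
        if not dists:
            continue
        if len(dists) == 1 or dists[0][0] < ratio * dists[1][0]:
            matches.append((i, dists[0][1]))
    return matches


if __name__ == "__main__":
    # Toy 2-D vectors stand in for 128-dimensional SIFT descriptors.
    image_a = [[0.0, 0.0], [10.0, 10.0]]
    image_b = [[10.0, 11.0], [0.0, 1.0], [50.0, 50.0]]
    print(match_descriptors(image_a, image_b))
```

The function works for vectors of any length, so real 128-dimensional descriptors can be passed in unchanged; for large images a spatial index would replace the brute-force sort.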

2.4. Point cloud reconstruction

UAV image sequences are used to reconstruct buildings in three dimensions. The pose information of the UAV camera at the time of shooting provides spatial coordinates in the real environment. The fundamental matrix is solved from the matched feature points, and the essential matrix and the camera extrinsic parameters are then calculated from it. With these, the three-dimensional points can be located, yielding a three-dimensional scene and a 3D point cloud reconstructed from the images.
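The step from the fundamental matrix to the essential matrix can be illustrated with the standard relation E = K₂ᵀ F K₁, where K₁ and K₂ are the camera intrinsic matrices. The sketch below assumes the convention x₂ᵀ F x₁ = 0 for matched pixel coordinates in homogeneous form; the function names are hypothetical, and the intrinsic matrix in the example is made up for illustration.

```python
def transpose(m):
    """Transpose of a matrix stored as nested lists."""
    return [list(row) for row in zip(*m)]


def matmul(a, b):
    """Product of two matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def essential_from_fundamental(F, K1, K2=None):
    """E = K2^T F K1; K2 defaults to K1 when one camera took both images."""
    if K2 is None:
        K2 = K1
    return matmul(transpose(K2), matmul(F, K1))


def epipolar_residual(F, x1, x2):
    """x2^T F x1 for homogeneous pixel coordinates; near 0 for a good match."""
    Fx1 = [sum(F[i][k] * x1[k] for k in range(3)) for i in range(3)]
    return sum(x2[i] * Fx1[i] for i in range(3))


if __name__ == "__main__":
    # Hypothetical intrinsics and a fundamental matrix for a sideways shift.
    K = [[800.0, 0.0, 320.0], [0.0, 800.0, 240.0], [0.0, 0.0, 1.0]]
    F = [[0.0, 0.0, 0.0], [0.0, 0.0, -1.0 / 800.0], [0.0, 1.0 / 800.0, 0.0]]
    print(essential_from_fundamental(F, K))
    print(epipolar_residual(F, [420.0, 280.0, 1.0], [620.0, 280.0, 1.0]))
```

The epipolar residual is also a convenient filter: matches with a large residual are inconsistent with the estimated geometry and can be discarded before the points are located in space.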

However, when point cloud matching is performed on the original building images, factors such as the photographed object itself, image quality, and matching errors can introduce outliers that lie far above or below the true surface. Therefore, outliers should be removed when filtering the original building point cloud so that it meets practical requirements [6].
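One common way to remove such outliers is a statistical filter based on nearest-neighbor distances. The sketch below is an assumption about how this step could be done, not the filter used in [6]: each point's mean distance to its k nearest neighbors is compared with the global mean plus a multiple of the standard deviation. The function name and the default parameters are hypothetical, and the brute-force search is only suitable for small clouds.

```python
import math
import statistics


def remove_outliers(points, k=3, std_ratio=2.0):
    """Drop points whose mean k-nearest-neighbour distance is unusually large.

    points: sequence of (x, y, z) tuples.
    A point is kept if its mean distance to its k nearest neighbours is at
    most mean + std_ratio * standard deviation over the whole cloud.
    """
    if len(points) <= k:
        return list(points)
    mean_knn = []
    for i, p in enumerate(points):
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        mean_knn.append(sum(dists[:k]) / k)
    threshold = statistics.mean(mean_knn) + std_ratio * statistics.pstdev(mean_knn)
    return [p for p, d in zip(points, mean_knn) if d <= threshold]


if __name__ == "__main__":
    # A small regular block of points plus one point far above it.
    cloud = [(x, y, z) for x in range(3) for y in range(3) for z in range(3)]
    cloud.append((0, 0, 100))
    print(len(cloud), len(remove_outliers(cloud)))
```

For production-size clouds the pairwise search would be replaced by a spatial index such as a k-d tree, but the acceptance rule stays the same.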

2.5. 3D real scene information generation

For 3D scene reconstruction, triangular mesh reconstruction and texture mapping are used to achieve clear structure and vivid color, respectively, so that the model reflects the real world. Buildings can be represented by irregular triangular networks. The preceding image processing yields a high-density point cloud; the denser the point cloud, the denser the triangular network built from it and the more realistic the 3D model. Texture mapping then adds the color and appearance of the building to the three-dimensional mesh.

3. Challenges

3.1. Data flaws

Because buildings occlude one another and the flight height and angle are restricted, the information collected by drones is incomplete. In particular, the middle and lower parts of buildings are prone to holes and texture smearing, which cause geometric and texture distortion and thus reduce the completeness and accuracy of the model.

A feasible solution is to combine close-range photography with oblique photography. Sun et al. [7] first used close-range photography to obtain high-resolution images of the sides and bottom of the structure under test, and then used oblique photography to capture the main frame of the structure. After merging the two data sets, matching the images with the SIFT algorithm, and performing aerial triangulation, they constructed a high-precision 3D model. The accuracy of the model reaches the centimeter level, which meets the requirements of apparent disease detection [7].

3.2. Insufficient precision

Road design faces technical difficulties such as heavy traffic volume, complex traffic organization during construction, and small construction areas, so designers need to take many factors into consideration [8]. Oblique images collected by drones suffer from large local deformation, sparse control points for network adjustment, and blind spots in areas where the shape of surface attachments changes abruptly. As a result, the accuracy of the image products is low, and they are difficult to apply directly to road design [9].

The emergence of BIM technology provides new solutions to these problems. BIM focuses on precision and can model an individual, small three-dimensional component in detail.

When BIM is applied to municipal road design, the three-dimensional real-scene data obtained by drone oblique photography serve as the basic 3D data for the BIM design. This combination can quickly produce reasonable three-dimensional design solutions.

4. Application

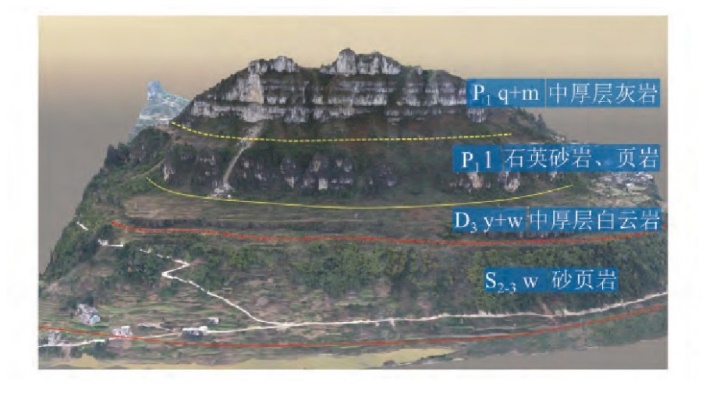

4.1. Investigation of high slope rock structure

In actual investigations of slope rock mass, traditional manual measurement is inefficient, risky, and heavily restricted by the environment. Conventional 3D laser scanning also suffers from limited viewing angles and large errors in special and complex terrain. In contrast, surveying with drone oblique photography improves both safety and efficiency.

The rock mass structural information obtained by drones is relatively comprehensive and visualizes well. Both the inclination (dip) angle and direction of a structural plane and information such as the spacing between structural planes can be obtained directly, which provides basic data for the subsequent evaluation of rock mass stability [10], as shown in Figure 4.

Figure 4. Stratums and Distribution in Rockfall Source Area [10]
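The inclination angle and direction of a structural plane mentioned in this section can be derived from the plane's normal vector once the plane has been fitted to the point cloud. The sketch below shows that geometry only and is not the procedure of [10]. It assumes a coordinate system with x pointing east, y pointing north, and z pointing up, and the function name is hypothetical.

```python
import math


def dip_and_direction(normal):
    """Dip angle and dip direction (degrees) of a plane from its normal.

    Assumed axes: x = east, y = north, z = up.
    Dip is the angle between the plane and the horizontal (0 to 90).
    Dip direction is the azimuth, clockwise from north, toward which the
    plane slopes downward; it is undefined (returned as 0) for a flat plane.
    """
    nx, ny, nz = normal
    length = math.sqrt(nx * nx + ny * ny + nz * nz)
    if length == 0.0:
        raise ValueError("normal vector must be non-zero")
    if nz < 0:  # use the upward-pointing normal
        nx, ny, nz = -nx, -ny, -nz
    dip = math.degrees(math.acos(min(1.0, nz / length)))
    direction = math.degrees(math.atan2(nx, ny)) % 360.0
    return dip, direction


if __name__ == "__main__":
    print(dip_and_direction((1.0, 0.0, 1.0)))    # plane sloping down to the east
    print(dip_and_direction((0.0, -1.0, -1.0)))  # downward normal is flipped first
```

The horizontal projection of the upward normal points in the downslope direction, which is why the azimuth is taken from the x and y components of the normal.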

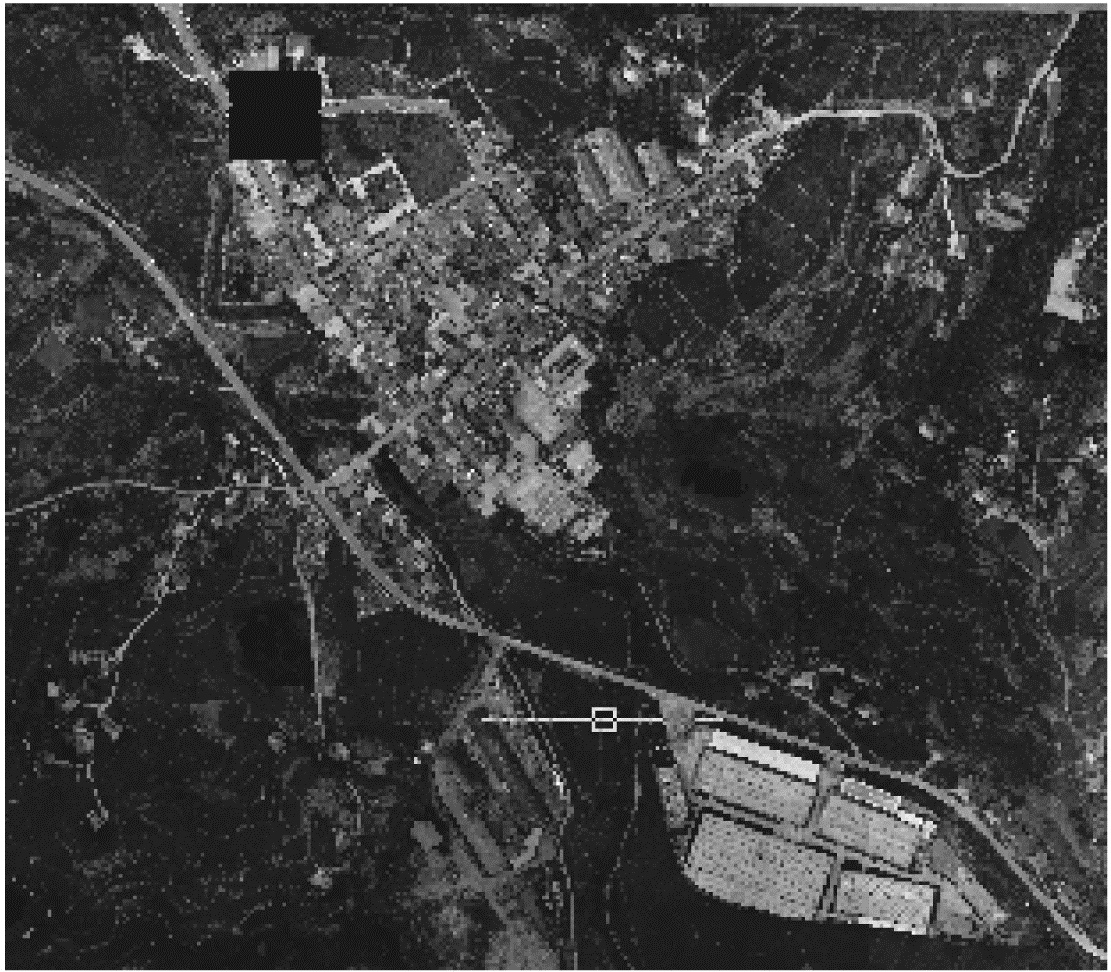

4.2. Rural modeling

UAV oblique photogrammetry data can also be used for regional analysis based on a three-dimensional model of a rural area [11]. Unlike traditional 3D surveying, this method can easily capture the corners of houses without being obstructed by the eaves, so it is well suited to collecting house boundary points [12]. In addition, planners can view terrain and landscape conditions more intuitively and use software to accurately measure the positions and areas of buildings and structures on the model, such as schools, hospitals, and other public service facilities, which also saves time. The topography of the area can be measured on the model to produce a digital line graphic. This map reduces the workload of early planning analysis and facilitates subsequent rural planning and construction, as shown in Figure 5.

Figure 5. Study area images [11]
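Once house boundary points have been picked on the model, the footprint area follows from the shoelace formula. The sketch below is a generic illustration and is not tied to any particular software mentioned in [11] or [12]. It assumes the boundary points are given in order around the outline, in planar coordinates with metres as the unit, and the function name is hypothetical.

```python
def polygon_area(vertices):
    """Area of a simple polygon from ordered (x, y) boundary points.

    Uses the shoelace formula; the result is independent of whether the
    points are listed clockwise or counter-clockwise.
    """
    n = len(vertices)
    if n < 3:
        return 0.0
    twice_area = 0.0
    for i in range(n):
        x1, y1 = vertices[i]
        x2, y2 = vertices[(i + 1) % n]
        twice_area += x1 * y2 - x2 * y1
    return abs(twice_area) / 2.0


if __name__ == "__main__":
    # Hypothetical L-shaped house outline, coordinates in metres.
    outline = [(0, 0), (10, 0), (10, 4), (6, 4), (6, 8), (0, 8)]
    print(polygon_area(outline))
```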

5. Conclusion

UAV oblique photography modeling is an emerging auxiliary modeling tool, valued for its multi-angle data collection and high efficiency, and it is applied across many domains. It integrates well with BIM, laser scanning, and other advanced technologies, which further extends its reach. This integration is valuable in sectors including transportation and agriculture, where it supports the construction of detailed three-dimensional models of real-world scenes or serves as a foundation for comprehensive data analysis. The technology is also expected to contribute to future urban and rural development, for example in region-specific detection tasks and plant growth simulation. Its adaptability and precision, together with its ability to complement other tools, make it an important asset for spatial analysis and modeling now and in the future.

References

[1]. Sun, Z. (2023). Fine 3D modeling of buildings based on UAV (Doctoral dissertation, Xi'an Technological University). https://doi.org/10.27391/d.cnki.gxagu.2023.000363

[2]. Ren, J., Chen, X., & Zheng, Z. (2019). Future prospects of UAV tilt photogrammetry technology. IOP Conference Series: Materials Science and Engineering, 612(3), 032023. IOP Publishing.

[3]. Chen, W., & Zhang, J. (2020). Research on 3D modelling based on UAV tilt photogrammetry with KQCAM5 swing tilt camera. Journal of Physics: Conference Series, 1650(3), 032156. IOP Publishing.

[4]. Park, J., Kim, P., Cho, Y. K., et al. (2019). Framework for automated registration of UAV and UGV point clouds using local features in images. Automation in Construction, 98, 175-182.

[5]. Gao, J., & Sun, Z. (2022). An Improved ASIFT Image Feature Matching Algorithm Based on POS Information. Sensors, 22(20), 7749.

[6]. Guo, Q., Liu, H., Hassan, F. M., et al. (2021). Application of UAV tilt photogrammetry in 3D modeling of ancient buildings. International Journal of System Assurance Engineering and Management, 1-13.

[7]. Sun, B., Mo, C., Zhang, Y., et al. (2023). Application of close and oblique photography in apparent disease detection of arch bridge. Remote Sensing Information, 38(02), 26-32. https://doi.org/10.20091/j.cnki.1000-3177.2023.02.004

[8]. Xiang, S., Zhao, J., Xu, R., et al. (2019). Application of UAV oblique photography and BIM technology in municipal road design. Highway, 64(07), 192-195.

[9]. Lin, G., Sun, Z., Xiao, B., et al. (2021). Research on road design integrating UAV, GIS, BIM technology. Highway, 66(03), 23-26.

[10]. Zhen, Y., Xu, Q., Liu, Q., et al. (2020). Application of UAV oblique photogrammetry in structural surface investigation of slope rock mass. Journal of Wuhan University (Information Science Edition), 45(11), 1739-1746. https://doi.org/10.13203/j.whugis20200077

[11]. Wen, J., Zhu, B., Chen, J., et al. (2023). Application of UAV oblique photography technology in rural construction. Bulletin of Surveying and Mapping, 2023(S1), 101-104+110. https://doi.org/10.13474/j.cnki.11-2246.2023.0521

[12]. Feng, W., Dandan, L., & Qiang, C. (2020). Discussion on the Application of UAV Oblique Photography in the Registration of Rural Housing and Real Estate Integration. In 2020 IEEE 5th International Conference on Intelligent Transportation Engineering (ICITE) (pp. 290-295). IEEE. https://doi.org/10.1109/ICITE50838.2020.9231411

Cite this article

Hou, L. (2024). Three-dimensional modeling and application based on UAV tilt photogrammetry technology. Applied and Computational Engineering, 51, 146-151.

Data availability

The datasets used and/or analyzed during the current study will be available from the authors upon reasonable request.


About volume

Volume title: Proceedings of the 4th International Conference on Signal Processing and Machine Learning

© 2024 by the author(s). Licensee EWA Publishing, Oxford, UK. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.
