1. Introduction

In recent years, artificial intelligence (AI) technology has proliferated, and its applications in psychology have expanded accordingly. AI systems that employ methods such as machine learning, natural language processing (NLP), and computer vision are introducing new capabilities in psychological assessment, therapy, and research. Integrating AI into these areas offers significant opportunities to augment human expertise and improve outcomes. However, it also raises complex ethical, legal, and social issues that require careful scrutiny. This paper reviews current and emerging applications of AI in key areas of psychology, examining both their notable advantages and the risks and limitations that warrant careful attention.

The growing capabilities of AI are driving a paradigm shift in psychological practice and research. In therapeutic settings, NLP-based chatbots give individuals new ways to engage in cognitive behavioral therapy (CBT) [1]. Machine learning algorithms can help identify mental health disorders by evaluating digital biomarkers collected from smartphones and wearable devices. AI analysis of large datasets can support psychologists in developing evidence-based treatment plans tailored to an individual patient's symptoms and history. Automated analysis of emotions and psychological states in text and facial expressions opens new avenues for psychological research. Taken together, AI-enabled tools hold considerable promise for making mental healthcare more accessible and personalized. However, integrating these tools into such sensitive domains raises ethical dilemmas, including risks to privacy, transparency, and accountability, and the potential dehumanization of care. It is equally important to understand the limits of AI in capturing the many subtleties of human psychology. This paper surveys the major prospects and problems in six domains: chatbots for delivering CBT, algorithms for detecting mental health disorders, AI-assisted treatment planning, the profiling and targeting of psychological demographics, sentiment analysis for understanding and predicting patient behavior, and AI-driven influence on consumer psychology.
By analyzing the advantages, ethical concerns, limitations, and biases associated with these applications, this study offers a balanced perspective intended to support the informed incorporation of AI into psychology.

2. Psychological AI

AI's ability to automate tasks, augment human work, and sift through vast amounts of information has led to its rapid expansion into psychology. However, its use raises concerns about ethics, confidentiality, liability, and societal effects. The following sections examine six areas in which AI can be applied in psychology, weighing the major benefits and drawbacks of each.

2.1. Using Chatbots to Provide Cognitive Behavioral Therapy (CBT)

As technology progresses, a growing number of healthcare professionals are using chatbots and conversational agents to deliver mental health services that are cost-effective, convenient, and easily accessible. These programmable agents use NLP techniques to communicate with users [1]. Their primary aims are to support individuals experiencing mental health concerns and to help them reach specialized professionals. Because they allow people to receive mental health support from their own homes, they make therapy more accessible and affordable for a broader population. NLP enables these agents to interpret human language, so they can tailor each interaction and respond more precisely to users' questions. This lets individuals communicate and obtain information in a more natural way, which makes the technology increasingly valuable to mental healthcare providers.
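To make the idea of NLP-driven CBT dialogue more concrete, the sketch below shows a deliberately simple, rule-based version in Python. It assumes that a few common cognitive distortions can be spotted with keyword patterns and answered with a Socratic reframing prompt. The pattern lists, prompt wording, and function names (`detect_distortions`, `respond`) are hypothetical illustrations; this is not how Tess [1] or any other product works, and a deployed system would rely on trained language models and clinical oversight.

```python
import re

# Hypothetical keyword patterns for three cognitive distortions often discussed in CBT.
# A production chatbot would use trained NLP models instead of hand-written rules.
DISTORTION_PATTERNS = {
    "all_or_nothing": re.compile(r"\b(always|never|nothing|everything)\b", re.IGNORECASE),
    "catastrophizing": re.compile(r"\b(disaster|ruined|worst|terrible)\b", re.IGNORECASE),
    "labeling": re.compile(r"\bi(?:'m| am) (?:a )?(failure|loser|worthless|stupid)\b", re.IGNORECASE),
}

# Illustrative Socratic prompts inviting the user to re-examine the thought.
REFRAME_PROMPTS = {
    "all_or_nothing": "You used an absolute word. Can you think of one exception?",
    "catastrophizing": "What is the most likely outcome, rather than the worst one?",
    "labeling": "Does that label describe who you are, or one thing that happened?",
}

DEFAULT_PROMPT = "Thank you for sharing. What thought went through your mind just then?"


def detect_distortions(message: str) -> list[str]:
    """Return the names of all distortion patterns found, in dictionary order."""
    return [name for name, pattern in DISTORTION_PATTERNS.items() if pattern.search(message)]


def respond(message: str) -> str:
    """Reply with the prompt for the first detected distortion, else a neutral prompt."""
    found = detect_distortions(message)
    return REFRAME_PROMPTS[found[0]] if found else DEFAULT_PROMPT


if __name__ == "__main__":
    for text in ["I always mess things up", "I'm a failure", "Work was busy today"]:
        print(f"User: {text}\nBot:  {respond(text)}")
```

Keeping detection rules and response wording in separate dictionaries means clinicians could review or revise the prompts without touching the matching logic.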

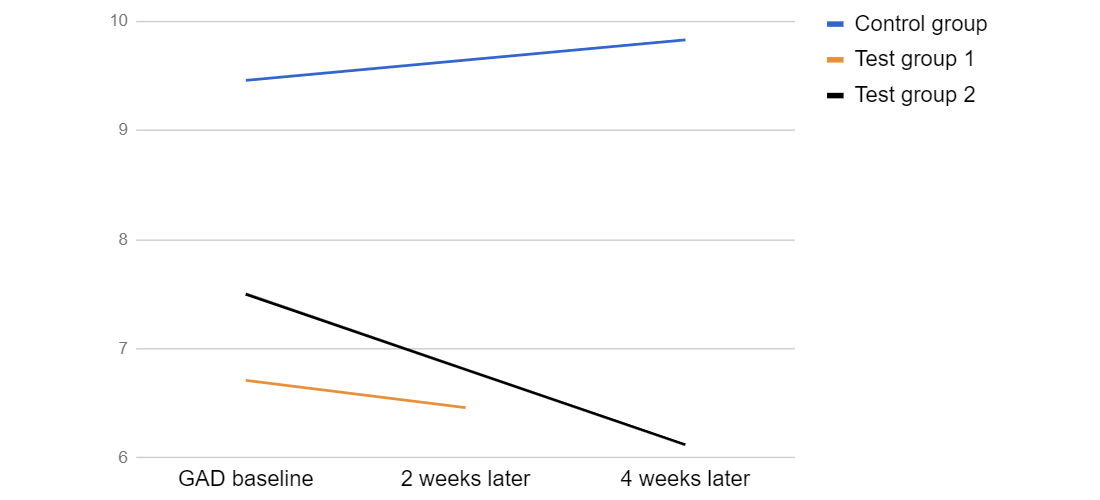

As Figure 1 shows, depression levels diverged notably between the two groups: participants in the test group showed a significant decrease in depressive symptoms over the course of the study, whereas those in the information-only control group showed an increase. These findings suggest that using a chatbot can help alleviate symptoms of depression [1].

Figure 1. Change in depression [1]
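The comparison in Figure 1 rests on within-person change scores. As a hedged illustration of that arithmetic, the Python sketch below computes the mean post-minus-pre change for each group and the difference between groups. All scores are invented for illustration and are not data from [1]; the trial itself relied on formal statistical testing rather than this descriptive calculation.

```python
from statistics import mean


def mean_change(pre: list[float], post: list[float]) -> float:
    """Mean within-person change (post minus pre); negative values mean symptoms fell."""
    if len(pre) != len(post):
        raise ValueError("pre and post must contain one score per participant")
    return mean(after - before for before, after in zip(pre, post))


# Invented scores on a hypothetical depression scale (higher = more severe); NOT data from [1].
test_pre, test_post = [14, 12, 16, 10], [10, 9, 12, 9]
control_pre, control_post = [13, 11, 15, 12], [14, 12, 15, 13]

test_change = mean_change(test_pre, test_post)           # (-4 - 3 - 4 - 1) / 4 = -3
control_change = mean_change(control_pre, control_post)  # (1 + 1 + 0 + 1) / 4 = 0.75
difference = test_change - control_change                # -3.75: the chatbot group improved more

print(f"chatbot group: {test_change}, control group: {control_change}, difference: {difference}")
```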

This approach can improve the efficiency and quality of treatment while reducing costs, helping mental healthcare providers deliver more comprehensive and personalized care that supports patients in reaching their goals. Chatbots and conversational agents are rapidly gaining popularity in mental healthcare. Compared with face-to-face consultations, these platforms offer convenient access to therapy and counseling, including for people in remote areas who might otherwise have no access to mental health services. They also allow anonymous conversations, which can reassure individuals who feel uneasy discussing their mental health with a trained professional. Together, these features have the potential to improve the treatment process and its outcomes. Yet although AI-based advising systems are increasingly popular, doubts remain about their capacity to understand emotions and human behavior, and they are prone to giving biased advice because of limitations in their training data [2].

Further research is needed to establish the reliability and impartiality of AI-powered counseling services. Although AI may provide valuable care, it remains limited in its ability to replicate human emotional relationships, and individuals seeking individualized support have expressed concern about whether AI can fully grasp the multifaceted nature of human emotions. Chatbot therapy may offer less personalized attention and empathetic understanding than a human therapist, so choosing AI-driven therapy over human therapists raises ethical dilemmas. Issues of privacy, confidentiality, and potential misuse of AI are equally relevant here. Evaluating emerging AI capabilities in mental health therefore requires weighing their advantages against the ethical complexities of their implementation.

2.2. Detecting Mental Health Disorders

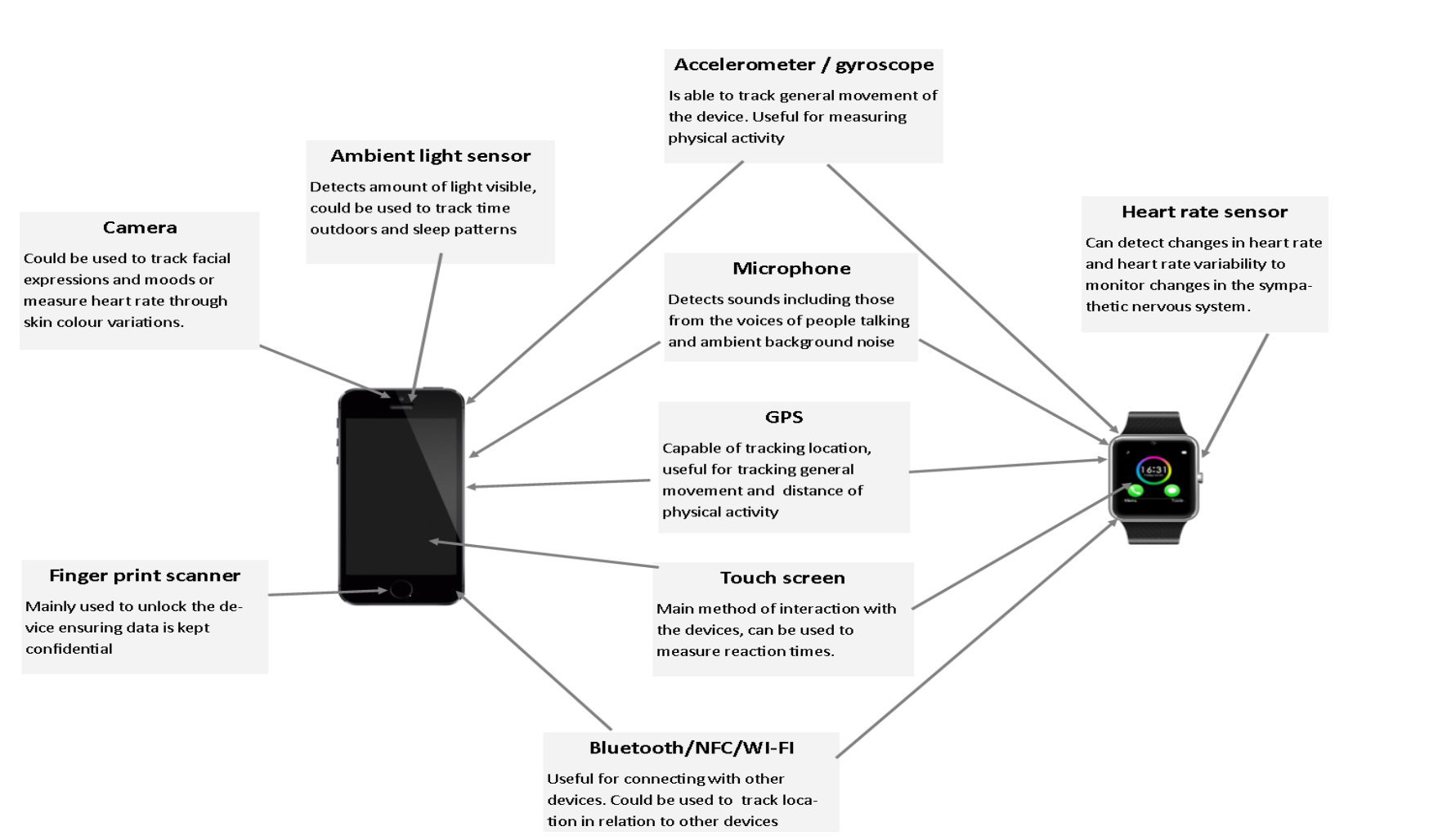

By analyzing large volumes of real-world behavioral data, AI can detect signals associated with conditions such as depression and post-traumatic stress disorder (PTSD) [3]. Machine learning has become a popular means of assessing digital footprints from sources such as social media, smartphones, and wearables, and this approach has proven effective for detecting and monitoring mental health conditions, as depicted in Figure 2 [4].

Figure 2. Sensors on smartphones and smartwatches are being used to track mental health [4].

Research has shown that linguistic patterns in online forums and text exchanges can provide insight into mental health problems such as depression and suicidal ideation [5]. Phone usage metadata, particularly declining communication activity, has been associated with predicting relapse in schizophrenia [6]. Data collected by wearable sensors, including sleep patterns, activity levels, and speech patterns, may contain early signs of emerging anxiety and mood disorders [7]. Machine learning models can combine information from a wide range of such digital biomarkers to infer an individual's mental state. This approach, known as "digital phenotyping," gives researchers and practitioners detailed, up-to-date information about mental health [8] and reduces reliance on subjective self-reports gathered during infrequent office visits. Applying AI to individuals' everyday digital footprints enables ongoing monitoring of conditions, lowers barriers to accessing care, and supports both population-level screening and individualized treatment.

Despite this potential, deploying digital phenotyping ethically is a delicate and complex issue. Some individuals may withhold consent for the passive collection of data from their devices for mental health screening. Predictive models that rely solely on data patterns risk overdiagnosis if they are not clinically validated, and flawed algorithms that absorb societal biases raise concerns about discrimination. Addressing these problems requires transparency, accountability, and oversight procedures [2].
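One simple way to turn passively collected signals into a monitoring rule is to compare each day against the person's own baseline. The Python sketch below assumes three hypothetical daily features (outgoing calls, step count, hours of sleep) and flags a day when any feature deviates from the personal mean by more than a chosen number of standard deviations. The feature names and the threshold of 2.0 are assumptions; this is a generic baseline-deviation rule, not the method used in [6] or [7], and a flag is meant to prompt clinical follow-up rather than serve as a diagnosis.

```python
from statistics import mean, stdev

# Hypothetical passively sensed daily features; real studies use many more signals.
FEATURES = ("outgoing_calls", "steps", "sleep_hours")


def zscores(history: list[dict], today: dict) -> dict:
    """Standardize today's values against the same person's baseline days."""
    scores = {}
    for feature in FEATURES:
        values = [day[feature] for day in history]
        mu, sd = mean(values), stdev(values)  # stdev needs at least two baseline days
        scores[feature] = 0.0 if sd == 0 else (today[feature] - mu) / sd
    return scores


def flag_for_follow_up(history: list[dict], today: dict, threshold: float = 2.0) -> bool:
    """True if any feature deviates from baseline by more than `threshold` SDs."""
    return any(abs(z) > threshold for z in zscores(history, today).values())


baseline = [
    {"outgoing_calls": 5, "steps": 8000, "sleep_hours": 7.0},
    {"outgoing_calls": 6, "steps": 7500, "sleep_hours": 7.5},
    {"outgoing_calls": 4, "steps": 8200, "sleep_hours": 6.5},
    {"outgoing_calls": 5, "steps": 7800, "sleep_hours": 7.0},
]
typical_day = {"outgoing_calls": 5, "steps": 7900, "sleep_hours": 7.0}
withdrawn_day = {"outgoing_calls": 0, "steps": 2500, "sleep_hours": 11.0}

print(flag_for_follow_up(baseline, typical_day))    # False
print(flag_for_follow_up(baseline, withdrawn_day))  # True
```

Using each person as their own reference avoids assuming that one population-wide norm fits everyone, though it requires enough baseline days before any flag is meaningful.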

2.3. Using AI to Assist Clinicians in Targeted Treatment Planning

Recent advances in AI make it possible to predict which mental health treatments are likely to work best for an individual patient, based on an analysis of factors such as the patient's symptoms, medical history, genetic profile, and other relevant data. By examining complex combinations of clinical biomarkers and health records, AI systems can help clinicians tailor treatment programs to each patient's needs and likelihood of response. A growing body of research suggests that AI-guided treatment recommendations may improve outcomes for people with mental health problems such as depression and anxiety, although these tools must be integrated into practice carefully and with attention to their ethical implications. For example, machine learning algorithms applied to genetic markers and historical treatment records have predicted antidepressant response with an accuracy above 80% [9]. Given sufficient longitudinal data, reinforcement learning systems may also be able to infer suitable medication dosages. Their potential value lies in processing and evaluating large volumes of records to support informed decisions, and in revealing patterns that manual review might miss, which could lead to more personalized therapies and better patient outcomes.
Using reinforcement learning to optimize pharmacological dosing could improve therapeutic efficacy and safety while reducing costs and improving patient satisfaction [10]. The ability to predict treatment response thus allows AI to support physicians in developing targeted treatment strategies. For instance, a psychiatrist could enter a patient's symptoms, genetic information, and medical history into a prognostic model to obtain personalized recommendations for medications and psychotherapies. By providing more comprehensive patient profiles, AI could substantially enhance clinical decision-making.
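The prognostic-model workflow described above can be sketched as a set of per-treatment models that each output a probability of response, which are then ranked for the clinician to review. The Python example below assumes simple logistic models with two hypothetical features (`severity`, `prior_response`) and hand-picked coefficients; it is not the deep learning model of [9], and in practice the coefficients would be learned from validated clinical data.

```python
import math

# Hypothetical per-treatment logistic models. Feature names and coefficients are
# invented for illustration; real models would be fitted to validated clinical data.
MODELS = {
    "treatment_A": {"intercept": -0.5, "weights": {"severity": 0.10, "prior_response": 1.2}},
    "treatment_B": {"intercept": 0.2, "weights": {"severity": -0.05, "prior_response": 0.3}},
}


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def response_probability(model: dict, patient: dict) -> float:
    """Logistic model: P(response) = sigmoid(intercept + sum of weight * feature)."""
    z = model["intercept"] + sum(w * patient[f] for f, w in model["weights"].items())
    return sigmoid(z)


def rank_treatments(patient: dict) -> list[tuple[str, float]]:
    """Return (treatment, probability) pairs, most promising first, for clinician review."""
    scored = [(name, response_probability(m, patient)) for name, m in MODELS.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


for name, p in rank_treatments({"severity": 10, "prior_response": 1}):
    print(f"{name}: {p:.2f}")
```

Returning a ranked list with probabilities, rather than a single answer, keeps the final decision with the clinician, consistent with the view that AI should support rather than replace clinical judgment.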

Nevertheless, integrating AI-assisted treatment tools smoothly into practice presents challenges. Physicians may hesitate to trust data-driven suggestions that contradict their own clinical judgment, and safeguards are needed against excessive reliance on flawed algorithms. A lack of diversity in training data can introduce biases that put patients at risk. AI should enhance clinicians' expertise rather than replace human oversight and the provision of care, and the ethical obligation to prioritize patient benefit over profit calls for transparent design [11].

2.4. Understanding and Influencing Psychological Demographics

Machine learning and data mining methods offer new ways to examine diverse psychological demographics and to deliver customized information based on the resulting insights. Applied to large datasets, these methods can identify archetypal clusters within a population and characterize their preferences. Companies and organizations can use this knowledge to target different segments with messages and experiences tailored to their underlying preferences [12].

Such psychological profiling, however, demands careful ethical deliberation. At the core of the approach is the use of machine learning to discern patterns and structures in data that reveal psychological profiles. As people interact online, they generate digital footprints, including browsing history, social media activity, online purchases, and related activities. Machine learning algorithms can analyze these large behavioral datasets to detect clusters of individuals who show similar behaviors and interests.

For example, machine learning can analyze social media behavior to sort users into distinct groups, such as people who regularly share photos of their daily lives, people who actively search for holiday destinations, and people with a particular interest in fashion. Segmenting users in this way can give organizations valuable insight into the interests of their target customers.
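The segmentation step can be illustrated with k-means clustering, one common choice among many clustering methods. The Python sketch below implements Lloyd's algorithm from scratch and applies it to invented weekly counts of three hypothetical behaviors (photos posted, travel searches, fashion pages viewed). Seeding the centroids with the first k points is a simplification chosen for determinism; practical implementations typically use randomized schemes such as k-means++ with several restarts.

```python
import math


def kmeans(points, k, iterations=20):
    """Lloyd's k-means; the first k points seed the centroids (a simplification for determinism)."""
    centroids = [list(p) for p in points[:k]]
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its members.
        for i, members in enumerate(clusters):
            if members:  # keep the previous centroid if a cluster ends up empty
                centroids[i] = [sum(values) / len(members) for values in zip(*members)]
    labels = [min(range(k), key=lambda i: math.dist(p, centroids[i])) for p in points]
    return centroids, labels


# Invented weekly counts: (photos posted, travel searches, fashion pages viewed).
users = [(12, 0, 1), (1, 9, 0), (2, 0, 14), (10, 1, 0), (0, 11, 1), (1, 1, 12)]
centroids, labels = kmeans(users, k=3)
print(labels)     # each user's segment index
print(centroids)  # average behavior profile of each segment
```

Each centroid summarizes a segment's typical behavior, which is the kind of profile an organization would then use to tailor content.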

Once machine learning has identified key audience segments, content and messaging can be tailored to each group's preferences. Tailoring content to psychological profiles in this way is known as "persuasive computing." It rests on the premise that aligning content with people's existing beliefs and motives increases engagement and influence. Machine learning makes this possible at scale through algorithms that map user profiles and automatically customize experiences, offering an efficient way to reach target audiences and influence their decisions [13]. However, using persuasive computing and psychological targeting to shape consumer behavior raises ethical questions. Critics argue that these practices exploit vulnerable emotions and psychological weaknesses for profit, which could make them manipulative and unfair. Their implications, and whether they can be ethically justified, deserve careful consideration [2].

2.5. Leveraging AI Sentiment Analysis to Understand and Predict Patient Behavior

AI approaches such as NLP and sentiment analysis help healthcare practitioners understand and predict patient behavior by evaluating patients' emotions and attitudes. Extracting sentiment from patient communications can reveal psychological states that affect outcomes, although the reliability and ethical consequences of using AI for such sensitive tasks must be assessed. Sentiment analysis uses AI to identify emotional tone in text: machine learning models are trained on large labeled datasets to recognize linguistic patterns that signal emotions such as anger, fear, joy, and sadness [14]. These models can then automatically classify patient messages as positive or negative. For instance, an AI system could analyze therapy session transcripts and social media posts to classify a patient's mood, which may help therapists and other mental health professionals understand their patients' emotions; the same techniques also help social media companies understand their users and tailor content. Several studies have reported a strong link between patient sentiment and health behaviors. Positive patient sentiment is linked to better health outcomes and treatment adherence, whereas negative emotions are associated with non-adherence and poor health outcomes. These findings suggest that patient sentiment matters in medical care [15]. By applying sentiment analysis to patient conversations, AI can help identify psychological risk factors that affect outcomes, enabling targeted responses to anticipated needs.
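As a minimal illustration of training a classifier on labeled text, the Python sketch below implements multinomial Naive Bayes with add-one smoothing, a classic baseline for sentiment classification. The four training sentences and their labels are invented; a real clinical application would need a large, validated, and demographically diverse dataset, and [14] surveys considerably stronger approaches.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayesSentiment:
    """Multinomial Naive Bayes text classifier with add-one (Laplace) smoothing."""

    def fit(self, texts: list[str], labels: list[str]) -> "NaiveBayesSentiment":
        self.classes = sorted(set(labels))
        self.doc_counts = Counter(labels)
        self.word_counts = {c: Counter() for c in self.classes}
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        return self

    def predict(self, text: str) -> str:
        n_docs = sum(self.doc_counts.values())
        best_label, best_score = None, -math.inf
        for c in self.classes:
            n_words = sum(self.word_counts[c].values())
            score = math.log(self.doc_counts[c] / n_docs)  # log prior
            for w in tokenize(text):
                if w in self.vocab:  # words never seen in training are skipped
                    score += math.log((self.word_counts[c][w] + 1) / (n_words + len(self.vocab)))
            if score > best_score:
                best_label, best_score = c, score
        return best_label


# Invented training examples; real use would need a large, validated, diverse dataset.
train_texts = [
    "i feel hopeful and the sessions really help",
    "feeling much better and calm today",
    "i feel hopeless and nothing helps",
    "anxious and exhausted and I cannot sleep",
]
train_labels = ["positive", "positive", "negative", "negative"]

clf = NaiveBayesSentiment().fit(train_texts, train_labels)
print(clf.predict("i feel calm and hopeful"))
print(clf.predict("i feel hopeless and anxious"))
```

Working in log space avoids numerical underflow when multiplying many small word probabilities, and smoothing keeps a single unseen word from zeroing out a class.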

Nevertheless, several concerns about using AI sentiment analysis to predict behavioral health remain unresolved. The accuracy of these models is inevitably limited, especially across diverse demographic groups, and differences in performance between groups may stem from disparities in resource availability and opportunity among them. Performance must therefore be evaluated with attention to context so that the conclusions drawn are valid and fair, and data should be collected from a wide range of demographic groups to clarify how accurate the models are for different populations. The ethics of relying on algorithmic judgments of mental state instead of professional evaluations also warrant careful consideration, because such decisions can have significant and far-reaching consequences. Professional evaluations are typically conducted by people with the training, skills, and experience needed to make well-informed assessments. Algorithmic assessments, by contrast, generally rely on predefined criteria, are susceptible to bias and other errors, and may miss the intricacies of an individual's mental condition, leading to conclusions that do not serve that person's best interests. For these reasons, the ethical implications of relying exclusively on algorithmic evaluations of mental state must be given due consideration.
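Checking accuracy separately for each demographic group is a straightforward way to surface the performance disparities discussed above. The Python sketch below computes per-group accuracy and the largest gap between groups; the labels, predictions, and group identifiers are hypothetical placeholders rather than results from any real system.

```python
from collections import defaultdict


def accuracy_by_group(y_true: list[str], y_pred: list[str], groups: list[str]) -> dict[str, float]:
    """Accuracy computed separately for each group, so performance gaps are visible."""
    if not (len(y_true) == len(y_pred) == len(groups)):
        raise ValueError("y_true, y_pred and groups must have the same length")
    correct: dict[str, int] = defaultdict(int)
    total: dict[str, int] = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {g: correct[g] / total[g] for g in total}


def largest_gap(per_group: dict[str, float]) -> float:
    """Difference between the best- and worst-served groups."""
    return max(per_group.values()) - min(per_group.values())


# Hypothetical labels, predictions, and group memberships for illustration only.
y_true = ["pos", "neg", "neg", "pos", "neg", "pos"]
y_pred = ["pos", "neg", "neg", "neg", "pos", "pos"]
groups = ["group_1", "group_1", "group_1", "group_2", "group_2", "group_2"]

per_group = accuracy_by_group(y_true, y_pred, groups)
print(per_group, largest_gap(per_group))
```

An overall accuracy of 4/6 would hide the fact that, in this toy data, one group is served perfectly and the other poorly; with real data, small groups also need confidence intervals before gaps are interpreted.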

2.6. Leveraging AI to Sway Consumer Psychology

One of the key ways AI is shaping consumer psychology is by enabling more sophisticated analysis of consumer data. Sentiment analysis gives corporations new ways to analyze consumer psychology and influence purchasing decisions: by extracting emotions and perceptions from consumer data, companies can tailor marketing content and shopping experiences to resonate with individuals. The ethical application of such persuasive computing, however, warrants careful deliberation. Sentiment analysis uses machine learning to categorize text along positive, negative, and other emotional dimensions, allowing AI to scan consumer reviews, social media, surveys, and other data for attitudes and feelings about products, brands, and features. Meanwhile, semantic analysis examines linguistic patterns to uncover consumer needs, values, pain points, and motivations. Together, these techniques provide rich psychological insight to guide marketing, and companies armed with emotional and semantic intelligence about consumers can strategically target content and offers. For example, neutral product reviews could trigger promotional messaging, while negative reviews could prompt outreach from customer service. This helps ensure that customers have a positive experience and that their feedback is taken into account. By automating the psychological analysis of large volumes of consumer data, AI enables affective strategies at mass scale.
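The review-handling example above amounts to routing each review by its sentiment label. The Python sketch below assumes the label has already been produced by an upstream classifier and maps neutral reviews to promotional messaging and negative reviews to customer-service outreach, as in the text. The `Review` type and the action names are hypothetical, and treating positive reviews as needing no action is an assumption the text does not specify.

```python
from dataclasses import dataclass


@dataclass
class Review:
    customer_id: str
    text: str
    sentiment: str  # assumed output of an upstream classifier: "positive", "neutral" or "negative"


def route_review(review: Review) -> str:
    """Choose a follow-up action from the review's sentiment label."""
    if review.sentiment == "negative":
        return "customer_service_outreach"  # reach out to resolve the complaint
    if review.sentiment == "neutral":
        return "promotional_message"  # the text's example: follow up with a promotion
    return "no_action"  # assumption: positive reviews need no automated follow-up


reviews = [
    Review("c1", "It arrived. It works.", "neutral"),
    Review("c2", "Broke after two days.", "negative"),
    Review("c3", "Love it!", "positive"),
]
for r in reviews:
    print(r.customer_id, route_review(r))
```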

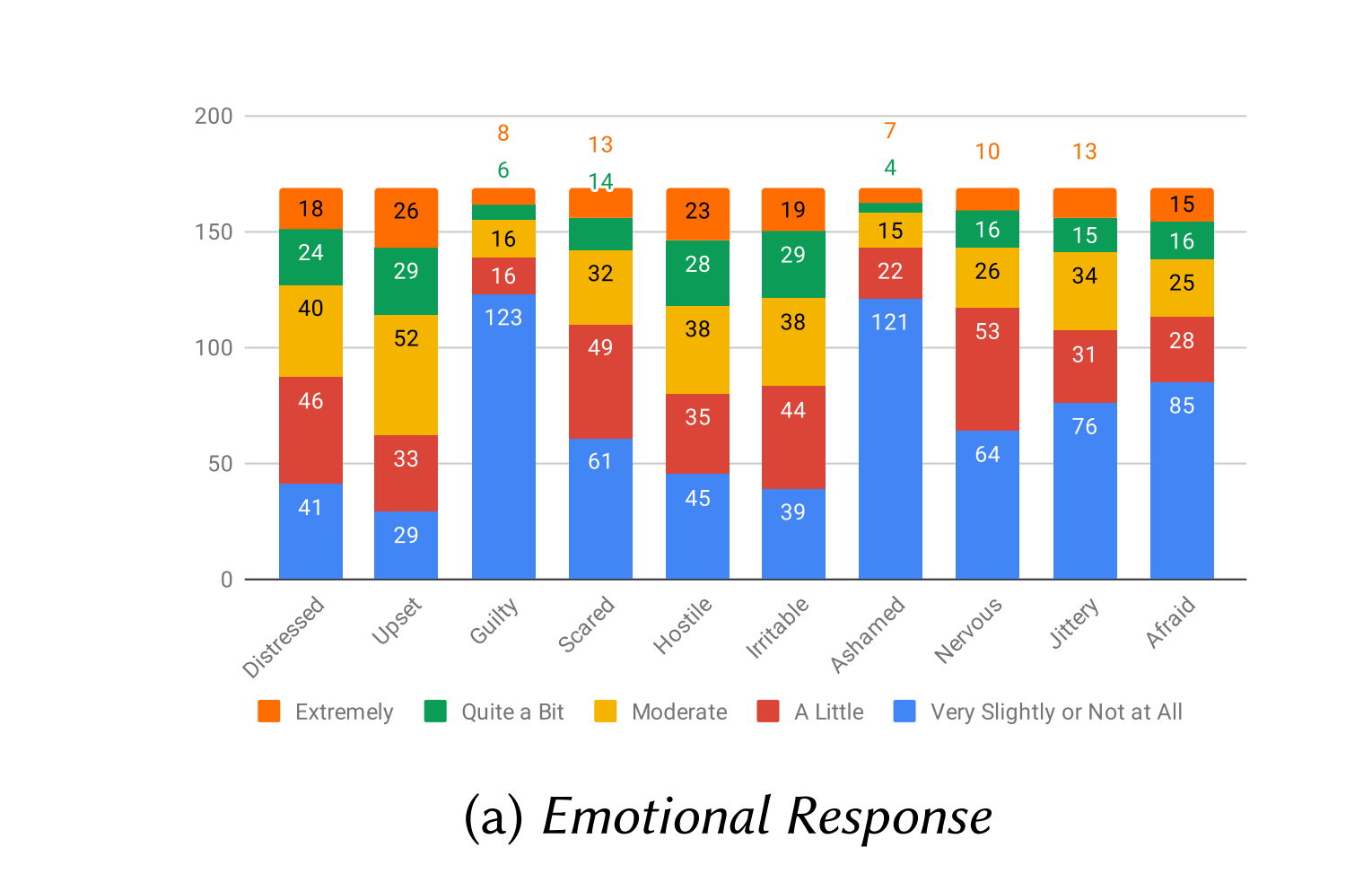

Nevertheless, using AI to infer and influence consumer psychology raises ethical concerns. There is growing concern about "dark patterns" in online design: strategies that steer user behavior by exploiting emotional responses such as the fear of missing out. These manipulative strategies have drawn significant ethical scrutiny because they can exploit people who are unaware of their deceptive nature. Furthermore, as Figure 3 shows, dark patterns can degrade the user experience by making users feel that their autonomy in decision-making is being undermined. Site designers and developers therefore need to be aware of dark patterns and ensure that their designs avoid them entirely [16].

Figure 3. Emotional response [16]

3. Conclusion

This study examined six key domains in which AI is applied in psychology, ranging from automated delivery of therapy to prediction of patient behavior. Each application shows AI's considerable potential to augment healthcare services and broaden their availability by combining capabilities such as NLP, machine learning, and sentiment analysis. The analysis also highlights significant ethical challenges in using these technologies appropriately, including preserving anonymity and mitigating bias. As the technology advances, the role of AI in psychology is expected to grow. Several trends deserve attention: the increasing incorporation of AI assistants into therapy workflows, the widespread availability of wearable sensors and apps for personalized digital phenotyping, and the growing use of predictive analytics to tailor therapeutic interventions. As AI capabilities progress and gain autonomy, comprehensive governance and ethics frameworks that prioritize patient well-being will be essential, grounded in the principles of transparency, accountability, and clinical validation. AI holds promise for improving the accessibility, quality, and outcomes of applied psychology worldwide, provided that technological advances remain aligned with ethical principles that uphold human values.

References

[1]. Fulmer R, Joerin A, Gentile B, et al. Using psychological artificial intelligence (Tess) to relieve symptoms of depression and anxiety: randomized controlled trial[J]. JMIR mental health, 2018, 5(4): e9782.

[2]. Sheng E, Chang K W, Natarajan P, et al. The woman worked as a babysitter: On biases in language generation[J]. arXiv preprint arXiv:1909.01326, 2019.

[3]. Srividya M, Mohanavalli S, Bhalaji N. Behavioral modeling for mental health using machine learning algorithms[J]. Journal of medical systems, 2018, 42: 1-12.

[4]. Woodward K, Kanjo E, Brown D J, et al. Beyond mobile apps: a survey of technologies for mental well-being[J]. IEEE Transactions on Affective Computing, 2020, 13(3): 1216-1235.

[5]. Aladağ A E, Muderrisoglu S, Akbas N B, et al. Detecting suicidal ideation on forums: proof-of-concept study[J]. Journal of medical Internet research, 2018, 20(6): e9840.

[6]. Barnett I, Torous J, Staples P, et al. Relapse prediction in schizophrenia through digital phenotyping: a pilot study[J]. Neuropsychopharmacology, 2018, 43(8): 1660-1666.

[7]. Jacobson N C, Lekkas D, Huang R, et al. Deep learning paired with wearable passive sensing data predicts deterioration in anxiety disorder symptoms across 17–18 years[J]. Journal of affective disorders, 2021, 282: 104-111.

[8]. Marsch L A. Opportunities and needs in digital phenotyping[J]. Neuropsychopharmacology, 2018, 43(8): 1637-1638.

[9]. Lin E, Kuo P H, Liu Y L, et al. A deep learning approach for predicting antidepressant response in major depression using clinical and genetic biomarkers[J]. Frontiers in psychiatry, 2018, 9: 290.

[10]. Jacob P D. Management of patient healthcare information: Healthcare-related information flow, access, and availability[M]//Fundamentals of telemedicine and telehealth. Academic Press, 2020: 35-57.

[11]. Taddeo M, Floridi L. How AI can be a force for good[J]. Science, 2018, 361(6404): 751-752.

[12]. Dhelim S, Aung N, Bouras M A, et al. A survey on personality-aware recommendation systems[J]. Artificial Intelligence Review, 2022: 1-46.

[13]. Dehnert M, Mongeau P A. Persuasion in the age of artificial intelligence (AI): Theories and complications of AI-based persuasion[J]. Human Communication Research, 2022, 48(3): 386-403.

[14]. Subramanian R R, Akshith N, Murthy G N, et al. A survey on sentiment analysis[C]//2021 11th International Conference on Cloud Computing, Data Science & Engineering (Confluence). IEEE, 2021: 70-75.

[15]. Sala M, Rochefort C, Lui P P, et al. Trait mindfulness and health behaviours: a meta-analysis[J]. Health Psychology Review, 2020, 14(3): 345-393.

[16]. Gray C M, Chen J, Chivukula S S, et al. End user accounts of dark patterns as felt manipulation[J]. Proceedings of the ACM on Human-Computer Interaction, 2021, 5(CSCW2): 1-25.

Cite this article

Wang, B. (2024). Psychological AI: A critical analysis of capabilities, limitations, and ramifications. Applied and Computational Engineering, 53, 205-212.

Data availability

The datasets used and/or analyzed during the current study will be available from the authors upon reasonable request.

Disclaimer/Publisher's Note

The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of EWA Publishing and/or the editor(s). EWA Publishing and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

About volume

Volume title: Proceedings of the 4th International Conference on Signal Processing and Machine Learning

© 2024 by the author(s). Licensee EWA Publishing, Oxford, UK. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license. Authors who publish this series agree to the following terms:

1. Authors retain copyright and grant the series right of first publication with the work simultaneously licensed under a Creative Commons Attribution License that allows others to share the work with an acknowledgment of the work's authorship and initial publication in this series.

2. Authors are able to enter into separate, additional contractual arrangements for the non-exclusive distribution of the series's published version of the work (e.g., post it to an institutional repository or publish it in a book), with an acknowledgment of its initial publication in this series.

3. Authors are permitted and encouraged to post their work online (e.g., in institutional repositories or on their website) prior to and during the submission process, as it can lead to productive exchanges, as well as earlier and greater citation of published work (See Open access policy for details).
