1. Introduction

The computational analysis of RNA sequences is a crucial step in the field of RNA biology. In May 2023, Briefings in Bioinformatics published a review article highlighting recent trends in predicting RNA secondary structure, RNA aptamers, and RNA drug discovery using machine learning, deep learning, and related techniques, and discussing potential future pathways in the field of RNA informatics. Currently, popular RNA tertiary structure prediction algorithms include knowledge-based RNA tertiary structure prediction algorithm and physics-based RNA tertiary structure prediction algorithm. Each of these two types of prediction algorithms has its advantages and disadvantages, but neither of them can achieve high-precision and high-integrity RNA tertiary structure modeling. Therefore, the research direction of this paper is to further optimize and improve the relevant prediction algorithm. Deep learning has matured and has achieved great success in many fields such as computer vision [1] and natural language processing [2]. However, deep learning methods that have been successful in many fields face challenges in predicting RNA secondary structure. At present, there are no high precision, low data dependence and high convenience RNA secondary structure prediction models. Despite the emergence of efficient and low-cost calculation methods, the accuracy of this calculation method is not satisfactory. Many machine learning methods have also been applied to this area, however, the accuracy rate has not improved significantly. Therefore, more innovative and effective methods are still needed to improve the accuracy and efficiency of RNA secondary structure prediction.

2. Related work

2.1. RNA structure prediction

RNA consists of long chains of molecules, typically four bases connected by phosphodiester bonds. [3]Hydrogen bonds can also form between bases, creating a pair of bases. These pairs can be classified as either normative or non-standard. "Canonical pairing" refers to A base pair in an RNA or DNA molecule where a stable hydrogen bond is formed between adenine (A) and uracil (U) and between guanine (G) and cytosine (C). These are common forms of pairing in biology. The term "irregular pairing" refers to any base pairing other than AU, GC, and GU. These ways of pairing may be less common, but still play a role in RNA or DNA molecules in some cases. RNA molecules exhibit a quaternary structure, comprising a single strand with base pairs forming the primary structure. The secondary structure consists of various elements such as hairpin loops, stems, internal loops, and pseudoknots, which emerge as a result of the folding process. [4]These secondary structure motifs play crucial roles in RNA function and regulation. Additionally, the tertiary structure of RNA involves further spatial bending of the helix based on the secondary structure, contributing to the molecule's overall three-dimensional conformation. Furthermore, the quaternary structure of RNA involves complex interactions between RNA molecules and proteins, forming intricate complexes essential for various cellular processes.

2.2. Deep learning and RNA secondary structure prediction

Recent advancements in deep learning have revolutionized the prediction of RNA and protein structures. These models, like the optimised differentiable model, bridge local and global protein structures by optimizing global geometry while adhering to local covalent chemistry principles, enabling accurate prediction of protein folding structures without prior co-evolution data. In RNA structure prediction, algorithms like [5]FARFAR2 and scoring systems like ARES, based on geometric deep learning, have demonstrated success in blind tests like RNA-Puzzles experiment. Inspired by AlphaFold2's success in protein structure prediction, new approaches like [6]DeepFoldRNA, RoseTTAFoldNA, and RhoFold have emerged. AlphaFold's neural network-based algorithm predicts base pair distances, optimized through gradient descent, enabling the generation of protein structures without complex sampling procedures. In comparison to fully connected networks, convolutional neural networks are capable of performing operations such as spatial translation and rotation. This not only preserves the internal correlation of the data but also reduces the relevant parameters in the network model. The convolutional structure effectively reduces the probability of overfitting the model.

The ResNet residual unit can be expressed as:

\( {y_{l}}=h({x_{l}})+F({x_{l}},{W_{l}}) {x_{l+1}}=f({y_{1}}) h({x_{1}})={x_{l}} \) (10

Where l represents the L-th residual unit, and xl and xl+1 represent its input and output, respectively. F() represents the residual function, f( ) represents the Relu activation function, and there are many types of Relu functions.

The main focus of algorithm analysis is to assess its correctness and complexity. [7]Correctness is the fundamental criterion for evaluating an algorithm, which is achieved when the algorithm produces the correct output after a series of limited and clear instructions when given an example problem. Complexity analysis is another crucial factor in evaluating algorithm performance. Algorithm complexity analysis typically involves evaluating the space-time complexity of algorithms. Time complexity refers to the total number of times the algorithm's basic operation is executed during its execution, while space complexity refers to the amount of memory required during implementation.

2.3. Transformer automatically predicts RNA structure

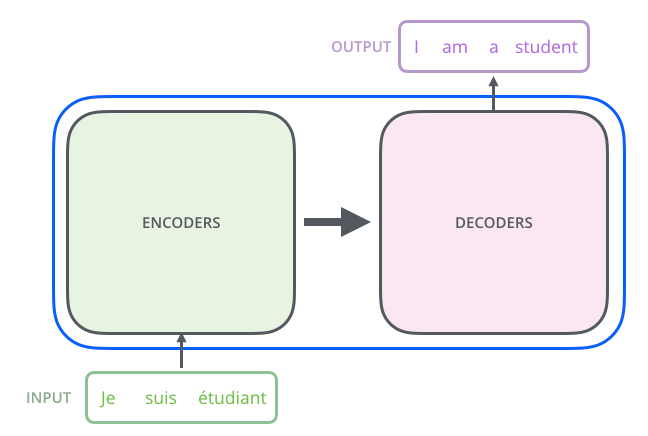

The emergence of the Transformer neural network, driven by an attention mechanism, has revolutionized structural biology. In 2020, DeepMind's AlphaFold2 marked a significant breakthrough by accurately predicting the three-dimensional structure of proteins from their amino acid sequences. This framework, powered by a Transformer neural network, excels in capturing long-range dependencies within input sequences, going beyond their sequential neighborhood. Subsequent frameworks such as [8]RoseTTAFold and OmegaFold have further advanced protein structure prediction, building upon the success of AlphaFold2. The Transformer model employs probabilistic autoencoders and [9]ELBO optimization to maximize marginal likelihood. Initially, the input sequence is encoded to generate vectors representing it. These vectors undergo processing using the Probabilistic Transformer model to produce a probability distribution representing the likelihood of each target tag given the input. During inference, the prediction model can yield varying results depending on the sample taken. In practice, the Transformer operates as an Encoder-Decoder architecture, with the middle portion divided into encoding and decoding components. The Transformer architecture revolutionized natural language processing (NLP) tasks by introducing a self-attention mechanism, enabling it to capture long-range dependencies in sequences efficiently. In practice, the Transformer architecture can indeed be viewed as an Encoder-Decoder architecture, consisting of two main components: the encoder and the decoder. By dividing the Transformer architecture into these two components, the model can effectively handle various sequence-to-sequence tasks, such as machine translation, text summarization, and question answering. The encoder learns to encode the input sequence into a fixed-length representation, capturing its semantic meaning, while the decoder uses this representation to generate the output sequence. This modular design has proven to be highly effective and flexible for a wide range of NLP tasks.

Figure 1. Transformer model (Encoder-Decoder architecture pattern)

The encoding component of the Transformer architecture consists of a multi-layer Encoder, which in this paper employs six layers. Each encoder layer contains two sub-layers(figure 1): a Self-Attention layer and a Position-wise Feed Forward Network (FFN)[10]. In the Self-Attention layer, the encoder can leverage information from other words in the input sentence to encode a specific word, allowing it to focus not only on the current word but also on contextual information from surrounding words. The output from the Self-Attention layer is then passed to the feedforward network for further processing. Similarly, the decoding component of the Transformer architecture also consists of decoders with six layers, each containing Self-Attention and FFN sub-layers. Additionally, there is an Attention layer (encoder-decoder Attention) between these sub-layers, enabling the decoder to focus on relevant parts of the input sentence, akin to the attention mechanism in seq2seq models.

The Transformer architecture's suitability for addressing challenges in predicting RNA structures stems from two key features. Firstly, it can accurately model long-term dependencies in sequence data by incorporating positional encoding into the input sequence. This allows the model to capture remote dependencies between input features without interference from intervening features. Secondly, the Transformer architecture is adept at modeling unordered sets of entities and their interactions, which is challenging for many other deep learning architectures. This is achieved by conducting most operations in a positional manner, enabling the model to handle unordered sets of features effectively. These advantages make the Transformer architecture an attractive choice for quantitative modeling of histone codes, as it enables researchers to simultaneously consider multiple remote regulatory regions near the transcription start site (TSS) in the wider genomic window.

3. Experiment and Methodology

trRosettaRNA, a tool for predicting RNA structure, has two main steps. First, it uses a technique called transformer networks to predict the one - and two-dimensional geometries of RNA. It then minimizes the energy to convert these geometries into the three-dimensional structure of RNA. By benchmarking its performance, trRosettaRNA was found to work better than traditional automated methods. In some blind tests, trRosettaRNA's predictions matched the top predictions of human experts. As a result, trRosettaRNA also outperforms other deep learning-based methods in many structural prediction competitions.

3.1. Experimental data set

This paper proposes the use of a Transformer network as an automatic sequence prediction model to predict RNA nucleotide sequences. To systematically generate different nucleic acid secondary structures, traditional neural network rMSA and SPOT-RNA program are employed. The generated secondary structure is then converted into a model, and the MSA representation and pair representation are modified. The resulting initial transformer network, called RNAformer, is used to predict 1D and 2D geometry. At the core of these steps is the ability to translate the geometry of the generated structural model into constraints to guide the final step in the folding of the gene structure based on energy minimization.

3.2. Data processing procedure

The complete process of transformer networks in RNA secondary structure prediction involves three main steps. The first is the input data preparation phase. Second, the final MSA is selected by running the Infernal program against the smaller RNAcentral database and based on the quality of the predicted distance graph. At the same time, SPOT-RNA was used to predict the secondary structure of RNA. The next step is to predict one - and two-dimensional geometries. In this step, a transformer network called RNAformer is used, similar to the Evoformer network in AlphaFold2. The RNAformer network first converts the input MSA and secondary structure into two representations: the MSA representation and the pair representation. Each RNAformer block then updates these two representations through four steps: (1) MSA to MSA, (2) MSA pairing, (3) pairs of two, and (4) pairing to MSA. In single-channel RNAformer, 48 blocks are looped 4 times in a complete inference, and finally the two-dimensional geometric prediction probability distribution is obtained by linear layer and softmax operation. The final step is to generate the all-atomic structure model. This process may involve parsing, optimizing, and validating the predicted results to obtain a final high-quality structural model.

Similar to trRosetta, trRosettaRNA uses deep learning potentials and physics-based energy terms in Rosetta to generate a complete model of atomic structure by minimizing the energy defined below:

\( E={ω_{1}}{E_{dist}}+{ω_{2}}{E_{ori}}+{ω_{3}}{E_{cont}}+{ω_{4}}{E_{ros}} \) (1)

\( {E_{ori}}={E_{ori,2D}}+\frac{L}{2}{E_{ori,1D}} \) (2)

The folding process in pyRosetta involves generating 20 all-atomic starting structures for each RNA using RNA_HelixAssembler, followed by refinement through quasi-Newtonian optimization L-BFGS to minimize total energy. The total energy comprises constraints based on distance (Edist), direction (Eori), contact (Econt), and Rosetta's internal energy term. Constraints in 2D and 1D directions are represented by Eori,2D and Eori,1D, respectively. The weights (w1 = 1.03, w2 = 1.0, w3 = 1.05, w4 = 0.05) are determined to minimize the average RMSD, based on hundreds of randomly selected RNAs from the training set. After refinement, 20 finely refined all-atomic structure models are obtained for each RNA, from which the model with the lowest total energy (Eq.1) is chosen as the final prediction.

3.3. Experimental results explain

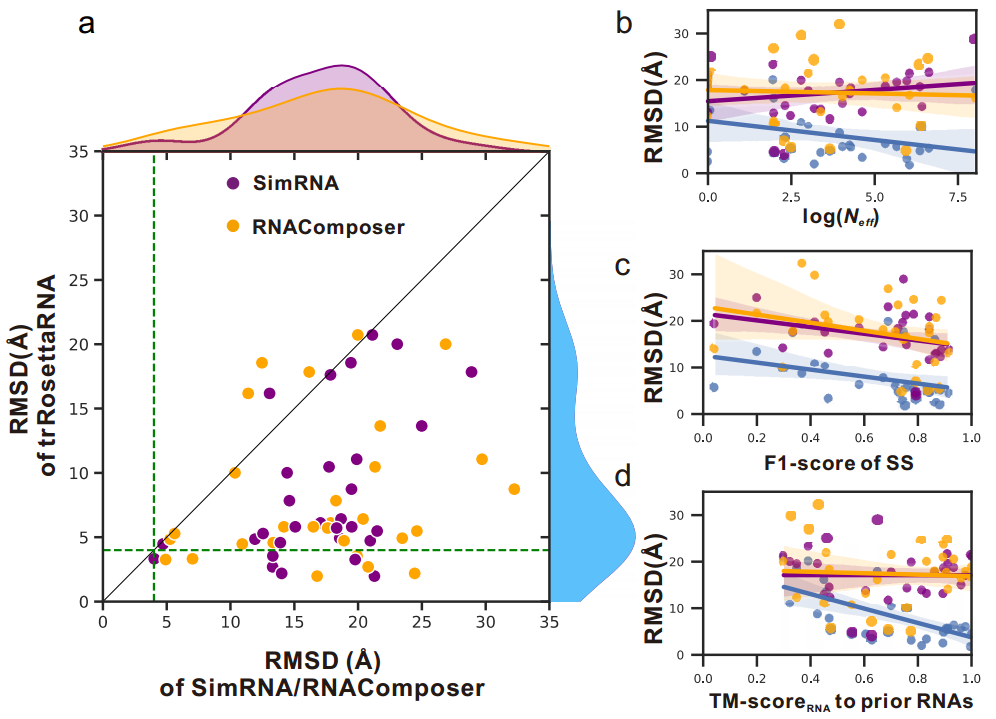

1.trRosettaRNA's performance on 30 individual RNAs

trRosettaRNA was tested against two other methods, RNAComposer and SimRNA, using 30 RNA structures. The average deviation in structure prediction (RMSD) was 8.5 angstroms for trRosettaRNA, compared to 17.4 angstroms for RNAComposer and 17.1 angstroms for SimRNA. This significant difference highlights trRosettaRNA's superior performance in RNA structure prediction.

Figure 2. Results of RNA2D structure prediction model

The results from the RNA2D structure prediction model, as depicted in Figure 2, highlight the superior performance of trRosettaRNA compared to traditional methods such as RNAComposer and SimRNA. In a dataset comprising 30 instances, trRosettaRNA outperformed RNAComposer 86.7% of the time and SimRNA 96.7% of the time. Notably, 20% of the models generated by trRosettaRNA exhibited an RMSD (Root Mean Square Deviation) of less than 4 A, a level of accuracy that neither RNAComposer nor SimRNA could achieve. [11]These findings underscore the efficacy of trRosettaRNA in RNA structure prediction, surpassing the capabilities of conventional methods.

The Das group was identified as the most accurate method, submitting models for 17 targets. It's worth noting that while some participating groups may leverage human expertise or literature data for guidance, trRosettaRNA's predictions are entirely automated and demonstrate comparable accuracy to the top-performing human prediction group. Overall, these results highlight the effectiveness of trRosettaRNA in addressing the challenges of RNA structure prediction and its potential to rival expert human predictions.

2. Blind test of CASP15

In the blind testing of CASP15, the researchers participated as part of the Yang-Server team, utilizing the trRosettaRNA model as an automation server. They achieved a significant 9th position among 42 RNA structure prediction teams, including both human and server teams. Notably, within the server teams, Yang-Server ranked second, following UltraFold_Server. Furthermore, Yang-Server's performance was enhanced when considering the cumulative Z-score (> 0.0) of RMSD, placing 5th among all groups and 1st among server groups. Remarkably, Yang-Server surpassed other deep learning-based groups in terms of Z-scores for RMSD. Particularly accurate predictions were observed for two protein-binding targets, R1189 and R1190, highlighting the method's potential in predicting protein-binding RNAs, despite the absence of binding partner information, though further accuracy improvements are possible.

To address these challenges and improve future RNA structure predictions, one potential approach is to integrate the method with traditional techniques and optimize algorithms for underrepresented RNA structures. For instance, utilizing neural networks, such as physics-based neural networks, to learn force fields or identify/assemble local patterns instead of directly predicting global[12] 3D structures could mitigate biases against known RNA folding and enhance prediction accuracy.

4. Conclusion

Machine learning techniques, particularly deep learning approaches, have shown promising results in predicting RNA secondary structures. Compared to thermodynamic model-based methods, deep learning approaches make fewer assumptions, allowing for the consideration of false knots, third-order interactions, non-standard base pairing, and other previously unidentified constraints. Experimental findings indicate that the prediction accuracy of the multi-objective evolutionary strategy algorithm surpasses that of the evolutionary strategy algorithm, especially for long RNA sequences. However, the prediction performance for some complex RNA secondary structures remains suboptimal. This may be attributed to the inaccuracy of the selected external interface for computing free energy, which affects the accurate calculation of free energy for complex RNA secondary structures. Additionally, in some cases, the real structure of complex RNA secondary structures may be predominantly determined by corresponding points in the target space, resulting in predicted structures that are extremely close to reality but not accurately predicted.

Future improvements in the prediction performance of the algorithm can be achieved by refining the mutation operator and fitness evaluation function based on the multi-objective evolutionary strategy algorithm. Furthermore, exploring alternative free energy computing interfaces and refining the false knot free energy computation method could enhance the prediction accuracy of the algorithm. These advancements are crucial for addressing the challenges posed by complex RNA secondary structures and improving the overall efficacy of machine learning-based RNA structure prediction methods.

References

[1]. Seetin, Matthew G., and David H. Mathews. "RNA structure prediction: an overview of methods." Bacterial regulatory RNA: methods and protocols (2012): 99-122.

[2]. Reuter, Jessica S., and David H. Mathews. "RNAstructure: software for RNA secondary structure prediction and analysis." BMC bioinformatics 11 (2010): 1-9.

[3]. Wang, Yong, et al. "Construction and application of artificial intelligence crowdsourcing map based on multi-track GPS data." arXiv preprint arXiv:2402.15796 (2024).

[4]. Zhou, Y., Tan, K., Shen, X., & He, Z. (2024). A Protein Structure Prediction Approach Leveraging Transformer and CNN Integration. arXiv preprint arXiv:2402.19095.

[5]. Ni, Chunhe, et al. "Enhancing Cloud-Based Large Language Model Processing with Elasticsearch and Transformer Models." arXiv preprint arXiv:2403.00807 (2024).

[6]. Shapiro, Bruce A., et al. "Bridging the gap in RNA structure prediction." Current opinion in structural biology 17.2 (2007): 157-165.

[7]. Gardner, Paul P., and Robert Giegerich. "A comprehensive comparison of comparative RNA structure prediction approaches." BMC bioinformatics 5 (2004): 1-18.

[8]. Miao, Zhichao, and Eric Westhof. "RNA structure: advances and assessment of 3D structure prediction." Annual review of biophysics 46 (2017): 483-503.

[9]. Zheng, Jiajian, et al. "The Random Forest Model for Analyzing and Forecasting the US Stock Market in the Context of Smart Finance." arXiv preprint arXiv:2402.17194 (2024).

[10]. Yang, Le, et al. "AI-Driven Anonymization: Protecting Personal Data Privacy While Leveraging Machine Learning." arXiv preprint arXiv:2402.17191 (2024).

[11]. Cheng, Qishuo, et al. "Optimizing Portfolio Management and Risk Assessment in Digital Assets Using Deep Learning for Predictive Analysis." arXiv preprint arXiv:2402.15994 (2024).

[12]. Wu, Jiang, et al. "Data Pipeline Training: Integrating AutoML to Optimize the Data Flow of Machine Learning Models." arXiv preprint arXiv:2402.12916 (2024).

Cite this article

Zhou,Y.;Zhan,T.;Wu,Y.;Song,B.;Shi,C. (2024). RNA secondary structure prediction using transformer-based deep learning models. Applied and Computational Engineering,64,87-93.

Data availability

The datasets used and/or analyzed during the current study will be available from the authors upon reasonable request.

Disclaimer/Publisher's Note

The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of EWA Publishing and/or the editor(s). EWA Publishing and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

About volume

Volume title: Proceedings of the 6th International Conference on Computing and Data Science

© 2024 by the author(s). Licensee EWA Publishing, Oxford, UK. This article is an open access article distributed under the terms and

conditions of the Creative Commons Attribution (CC BY) license. Authors who

publish this series agree to the following terms:

1. Authors retain copyright and grant the series right of first publication with the work simultaneously licensed under a Creative Commons

Attribution License that allows others to share the work with an acknowledgment of the work's authorship and initial publication in this

series.

2. Authors are able to enter into separate, additional contractual arrangements for the non-exclusive distribution of the series's published

version of the work (e.g., post it to an institutional repository or publish it in a book), with an acknowledgment of its initial

publication in this series.

3. Authors are permitted and encouraged to post their work online (e.g., in institutional repositories or on their website) prior to and

during the submission process, as it can lead to productive exchanges, as well as earlier and greater citation of published work (See

Open access policy for details).

References

[1]. Seetin, Matthew G., and David H. Mathews. "RNA structure prediction: an overview of methods." Bacterial regulatory RNA: methods and protocols (2012): 99-122.

[2]. Reuter, Jessica S., and David H. Mathews. "RNAstructure: software for RNA secondary structure prediction and analysis." BMC bioinformatics 11 (2010): 1-9.

[3]. Wang, Yong, et al. "Construction and application of artificial intelligence crowdsourcing map based on multi-track GPS data." arXiv preprint arXiv:2402.15796 (2024).

[4]. Zhou, Y., Tan, K., Shen, X., & He, Z. (2024). A Protein Structure Prediction Approach Leveraging Transformer and CNN Integration. arXiv preprint arXiv:2402.19095.

[5]. Ni, Chunhe, et al. "Enhancing Cloud-Based Large Language Model Processing with Elasticsearch and Transformer Models." arXiv preprint arXiv:2403.00807 (2024).

[6]. Shapiro, Bruce A., et al. "Bridging the gap in RNA structure prediction." Current opinion in structural biology 17.2 (2007): 157-165.

[7]. Gardner, Paul P., and Robert Giegerich. "A comprehensive comparison of comparative RNA structure prediction approaches." BMC bioinformatics 5 (2004): 1-18.

[8]. Miao, Zhichao, and Eric Westhof. "RNA structure: advances and assessment of 3D structure prediction." Annual review of biophysics 46 (2017): 483-503.

[9]. Zheng, Jiajian, et al. "The Random Forest Model for Analyzing and Forecasting the US Stock Market in the Context of Smart Finance." arXiv preprint arXiv:2402.17194 (2024).

[10]. Yang, Le, et al. "AI-Driven Anonymization: Protecting Personal Data Privacy While Leveraging Machine Learning." arXiv preprint arXiv:2402.17191 (2024).

[11]. Cheng, Qishuo, et al. "Optimizing Portfolio Management and Risk Assessment in Digital Assets Using Deep Learning for Predictive Analysis." arXiv preprint arXiv:2402.15994 (2024).

[12]. Wu, Jiang, et al. "Data Pipeline Training: Integrating AutoML to Optimize the Data Flow of Machine Learning Models." arXiv preprint arXiv:2402.12916 (2024).