1. Introduction

In small-scale plant cultivation and care, plant survival strongly influences the overall productivity and cost of production. Unlike lathes and raw materials on a mechanical production line, which can be kept in steady, predictable operation through regular inspection and timely replacement, plants require more nuanced care. Because plant species are so diverse, the environmental conditions and other external factors that suit them vary significantly. Beyond the challenges posed by their delicate growth processes, plants are also highly susceptible to infection by viruses, bacteria, and pests. A careless action or minor mistake can directly or indirectly cause a substantial decline in productivity or even mass die-offs, resulting in significant economic losses. Continuous monitoring of plant health, so that diseases are identified promptly and treated effectively, is therefore essential to keeping plants healthy and stable [1].

Currently, there are three commonly used methods for plant health monitoring in the market: chemical detection methods, physical detection methods, and image recognition detection methods [2-3].

Chemical detection methods analyze a small sample of plant tissue, or the chemical composition of the soil in which the plants grow, to assess plant health. The approach assumes that findings from individual samples generalize, inferring the condition of the remaining plants from a detailed analysis of a few specific cases. Its advantage is that it yields detailed and clear analytical results. In real-world production, however, individual plants may differ substantially from most of the population, so the problems found in a sample may not generalize. In addition, the testing process is complex and costly in both time and money, which makes it impractical in most situations, from hobbyist plant care to large-scale agricultural production. Finally, collecting plant organs or tissues as samples can itself harm the plants, raising concerns about the authenticity of the results and the generalizability of the detected problems. These uncertainties complicate subsequent targeted adjustments and make chemical detection methods less reliable and accurate.

Physical detection methods assess the physical properties of plants, such as leaf reflectance and the density, dry weight, and color of plant tissues, and compare the measurements against a reference standard. Because the results are easy to quantify and compare, this method is considerably more objective than manual assessment. However, in large-scale production, testing tens of thousands of plants one by one is time-consuming and labor-intensive, and the testing instruments are expensive and easily damaged, which makes the method ill-suited to large-scale monitoring. Ordinary users, for their part, have neither the means to perform such complex tests nor the budget for costly equipment. Furthermore, the method depends on an extensive body of reference data on plant diseases. This high entry barrier puts it out of reach of small and medium-sized enterprises new to the industry and of individuals who lack access to the necessary standards.

Image recognition detection, by contrast, can monitor large numbers of plants in a short time while requiring only simple, compact, and easy-to-maintain equipment [4]. The expert knowledge and reference data it relies on can be stored digitally and packaged into user-friendly apps that businesses and individuals can operate with minimal training, and a single program can hold enough data to cover potential diseases across a wide variety of plant species. As plant cultivation across agricultural fields becomes increasingly modernized, image recognition technology is being applied ever more widely in practice. The development of image recognition products therefore holds significant industrial prospects, and further exploration of the technology's applications in both corporate and personal contexts is economically valuable [5].

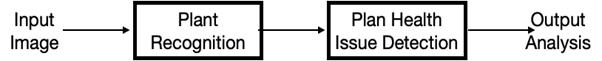

Because they are well suited to automation, image recognition methods are attracting increasing attention in smart logistics and smart agriculture. This paper focuses on image recognition methods, analyzes the shortcomings and limitations of existing approaches, and proposes a new intelligent plant health monitoring framework. Since the same disease-causing factor can manifest differently in different plants, the proposed framework first identifies the plant species and then passes this information to the health monitoring stage to improve its efficiency.

The rest of this paper is organized as follows: Section 2 analyzes existing methods and their limitations; Section 3 introduces the new framework, which combines plant identification with plant health monitoring; and Section 4 concludes the paper.

2. Background

To find ways to improve app guidance in the plant care field, we analyzed how existing plant care apps operate. Such an app first recognizes the image captured by the camera, selects the target plant, and compares it against a database of plant photos. By comparing the shape and color of the roots, stems, and leaves and the morphology of any flowers or fruits, it lists candidate plant species and lets the user choose the correct one. It then compares the image with images of healthy plants of the selected species to determine the type of disease and offers suitable care suggestions. An additional memo function reminds users to care for their plants regularly, improving recovery and survival rates, which in turn enhances the app's credibility and users' trust in it. Improving identification accuracy requires extensive testing and the accumulation of large amounts of data and images. Methods that rely on extensive model building and data comparison to assess the health of plants or fruits have already been applied in practice, at least in preliminary form, in plant cultivation apps such as “Xingse Yanghua” and “Ai Huacao.”
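The candidate-listing step can be pictured as a nearest-neighbour ranking over a reference database. The sketch below is an assumption about how such an app might work, not a description of any specific app: it represents each image by a hypothetical feature vector (for example, one produced by a CNN) and ranks reference species by cosine similarity, returning the top k candidates for the user to confirm. The species names and 3-D vectors are toy values.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two feature vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k_species(
    query: Sequence[float], reference: Dict[str, Sequence[float]], k: int = 3
) -> List[Tuple[str, float]]:
    """Rank reference species by similarity to the query features and keep the k best."""
    scored = [(species, cosine_similarity(query, feats)) for species, feats in reference.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Toy 3-D "features"; a real system would use learned embeddings with many more dimensions.
reference_db = {
    "rose": [0.9, 0.1, 0.0],
    "tulip": [0.7, 0.6, 0.1],
    "poplar": [0.0, 0.2, 0.9],
}
candidates = top_k_species([0.8, 0.3, 0.0], reference_db, k=2)
print([name for name, _ in candidates])  # ['rose', 'tulip']
```

Returning several ranked candidates rather than a single label matches the workflow described above, in which the user makes the final species choice.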

As Table 1 shows, a brief survey of practical apps and mini-programs reveals that they generally achieve accurate plant species identification by combining reference databases with basic image recognition technology. They also set reminders for watering and other care tasks based on user-provided information. This use of image recognition and flexible handling of the relevant data has made daily plant care considerably easier, helping users who lack specialized knowledge to identify plant species accurately. Most apps also integrate memo and reminder functions well, meeting users' diverse needs. However, most apps still need further development in professionalism and breadth of application, particularly in disease identification. They need to provide reliable, professional, and personalized care plans and to offer analysis and treatment suggestions for different plant diseases. Their current shortcomings include low disease identification accuracy and inadequate response strategies, and further breakthroughs are needed in the operability and success rate of plant disease analysis.

Table 1. Summary of Existing Software.

| App | Version | Software Size (MB) | Reliability | Average Processing Time (s) |
|---|---|---|---|---|
| Flower Companion APP | v3.2.12 | 68.3 | High | 9 |
| Shape and Color APP | v3.14.21 | 9 | Upper | 8 |
| Baidu Image Recognition APP | V13.34.0.11 | 129.9 | Upper | 12 |
| Flower Recognition Master APP | v1.2.8 | 16.2 | Normal | 15 |
| Shihuajun APP | v1.1.1 | 22.2 | Upper | 13 |
| Plant Recognition APP | v2.3 | 12.33 | Normal | 14 |

3. The New Proposed Framework

In the current field of plant disease identification, most methods diagnose diseases directly from plant photos. However, the same disease-causing factor may present differently in different plants. For example, canker manifests as holes in the trunk with exudation in poplar trees, whereas in tulips it appears as yellow spots on the leaves and flower stalks. Conversely, similar-looking symptoms in different plant species may be entirely different diseases: apple blotch and mango anthracnose look very similar, yet they require different treatments. Identifying the plant species before analyzing the disease can therefore improve both identification accuracy and treatment efficiency.

Figure 1. Similar Appearance of Different Diseases: (a) Mango Anthracnose. (b) Apple Blotch.

Figure 2. Different Manifestations of Canker Disease: (a) Canker Disease on Poplar Tree. (b) Canker Disease on Tulip.

Because the same pathological change may manifest differently in different plants, and different causes of disease may produce similar symptoms in different species, we divide the process into two segments, plant identification and plant health monitoring, to improve the accuracy of image recognition on plant photos while meeting a range of practical needs.

Figure 3. Framework of New Plant Health Issue Detection.
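The two-segment process can be sketched as a pipeline in which the second stage is conditioned on the species found by the first. In the sketch below, `identify_species` and `detect_symptom` are hypothetical placeholders for trained models (for instance, a YOLO-based species detector and a CNN symptom classifier), and the symptom labels are illustrative only. The lookup table simply encodes the mango/apple and poplar/tulip examples given earlier, so that the same visual symptom yields different diagnoses depending on the species.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple

# Illustrative knowledge base keyed by (species, symptom label).
# The symptom labels are hypothetical; the pairs mirror the examples in the text.
DIAGNOSES: Dict[Tuple[str, str], str] = {
    ("mango", "similar_fruit_lesion"): "mango anthracnose",
    ("apple", "similar_fruit_lesion"): "apple blotch",
    ("poplar", "trunk_holes_with_exudation"): "canker",
    ("tulip", "yellow_spots_on_leaves_and_stalks"): "canker",
}


@dataclass
class Diagnosis:
    species: str
    symptom: str
    disease: str


def diagnose(
    image: object,
    identify_species: Callable[[object], str],
    detect_symptom: Callable[[object, str], str],
) -> Diagnosis:
    """Stage 1 identifies the species; stage 2 interprets the symptom in that context."""
    species = identify_species(image)
    symptom = detect_symptom(image, species)
    disease = DIAGNOSES.get((species, symptom), "unknown: refer to an expert")
    return Diagnosis(species, symptom, disease)


def same_lesion(image: object, species: str) -> str:
    """Stub symptom model: both photos show the same-looking lesion."""
    return "similar_fruit_lesion"


print(diagnose("photo_1.jpg", lambda image: "mango", same_lesion).disease)  # mango anthracnose
print(diagnose("photo_2.jpg", lambda image: "apple", same_lesion).disease)  # apple blotch
```

Passing the species into the symptom model as well as the lookup leaves room for species-specific symptom detectors, which is the motivation for running identification first.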

3.1. Plant Identification Segment

Plant identification technology derives from object detection technology. With the rapid development of computer vision in recent years, object detection, as a foundational technology, has advanced significantly. Classic object detection methods include R-CNN (Regions with CNN features), Fast R-CNN, Faster R-CNN, Cascade R-CNN, the Single Shot MultiBox Detector (SSD), the Single-Shot Refinement Neural Network for Object Detection (RefineDet), RetinaNet, Deformable Convolutional Networks, and the You Only Look Once (YOLO) network [6-14]. Building on these classic methods, researchers have continually released improved versions that address the limitations of each.

Plant identification is a branch of object detection: once trained on specialized plant classification datasets, existing object detection networks can recognize plant species directly. In this paper, we primarily use the YOLO network.
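Training a YOLO-family detector on a plant dataset typically requires annotations in the YOLO text-label format used by Darknet and Ultralytics tooling, where each line holds a class index followed by the box centre, width, and height, all normalized by the image size. The helper below is a minimal sketch of converting a pixel-space bounding box into such a line; the function name, class index, and image size are hypothetical examples.

```python
from typing import Tuple


def to_yolo_label(class_id: int, box: Tuple[float, float, float, float], img_w: int, img_h: int) -> str:
    """Convert a pixel box (x_min, y_min, x_max, y_max) to a YOLO label line.

    YOLO labels store: class x_center y_center width height, with all four
    coordinates normalized to [0, 1] by the image width and height.
    """
    x_min, y_min, x_max, y_max = box
    if not (0 <= x_min < x_max <= img_w and 0 <= y_min < y_max <= img_h):
        raise ValueError("box must lie inside the image and have positive size")
    x_c = (x_min + x_max) / 2 / img_w
    y_c = (y_min + y_max) / 2 / img_h
    w = (x_max - x_min) / img_w
    h = (y_max - y_min) / img_h
    return f"{class_id} {x_c:.6f} {y_c:.6f} {w:.6f} {h:.6f}"


# Hypothetical example: class 3 (say, "tulip") occupying the central half of a 640x480 photo.
print(to_yolo_label(3, (160, 120, 480, 360), 640, 480))  # 3 0.500000 0.500000 0.500000 0.500000
```

Normalizing by image size lets the same label file serve images that are resized during training.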

YOLO (You Only Look Once) is an object detection algorithm proposed by Joseph Redmon, Santosh Divvala, Ross Girshick, and Ali Farhadi in 2016. Because it treats detection as a single regression problem, YOLO runs much faster than region-proposal-based detectors while maintaining competitive accuracy, which has earned it widespread attention in computer vision. Since its introduction, YOLO has gone through multiple iterations, from YOLOv1 to YOLOv4 and beyond, with continual improvements in performance.

The YOLO model can be described in terms of three aspects: its input, its output, and its model parameters.

(1) Input: YOLO takes an image as input and divides it into an S×S grid. Each grid cell is responsible for detecting objects whose center falls within that cell. Each cell predicts B bounding boxes, each described by its center coordinates (x, y), width and height (w, h), and a confidence score; in the original YOLOv1, each cell additionally predicts C class probabilities shared by its B boxes.

(2) Output: The network outputs S×S×(B×5 + C) values. Here, S×S is the number of grid cells, B is the number of bounding boxes predicted per cell, 5 is the number of values per box (x, y, w, h, and confidence), and C is the number of classes. For example, with S = 7, B = 2, and C = 20, as in the original YOLOv1 on PASCAL VOC, the output is a 7×7×30 tensor.

(3) Model Parameters: The main architectural settings include the number of convolutional layers, the number of pooling layers, and the number of nodes in the fully connected layers, all of which can be adjusted to suit the task.
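The output layout described above can be checked with a little arithmetic. The sketch below assumes the original YOLOv1 parameterization: the box centre (x, y) is an offset within its grid cell, (w, h) are fractions of the whole image, and the confidence target is the intersection over union (IoU) between the predicted and ground-truth boxes. Later YOLO versions parameterize boxes differently, so treat this as illustrative only; the function names are our own.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max), normalized to [0, 1]


def yolo_v1_output_size(S: int, B: int, C: int) -> int:
    """Values in a YOLOv1 output: S*S cells, each with B boxes * 5 values plus C class scores."""
    return S * S * (B * 5 + C)


def decode_box(row: int, col: int, x: float, y: float, w: float, h: float, S: int) -> Box:
    """Turn one cell's (x, y, w, h) into image-normalized corner coordinates.

    x, y are offsets of the box centre within cell (row, col); w, h are fractions
    of the image. (YOLOv1 actually regresses sqrt(w) and sqrt(h); square them first.)
    """
    cx = (col + x) / S
    cy = (row + y) / S
    return (cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two corner-format boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# PASCAL VOC setting from the YOLOv1 paper.
print(yolo_v1_output_size(7, 2, 20))  # 1470

# A box centred in the middle cell of a 7x7 grid, compared with a shifted ground truth.
pred = decode_box(3, 3, 0.5, 0.5, 0.2, 0.2, S=7)
truth = (0.45, 0.45, 0.65, 0.65)
print(round(iou(pred, truth), 3))  # 0.391
```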

YOLO's main advantages are speed, accuracy, and adaptability. It processes the whole image in a single forward pass that predicts boxes for all grid cells at once, avoiding a separate region-proposal stage and thereby greatly reducing computation and runtime. Because each grid cell directly regresses the position and size of the objects centered in it, localization is handled within that same pass. YOLO has also proven adaptable: later versions extend the framework beyond object detection to tasks such as instance segmentation and keypoint detection.

3.2. Plant Health Monitoring

In the plant health monitoring segment, deep learning algorithms and real-time plant video surveillance can combine the results of species identification with the plant health knowledge stored in the system to evaluate each plant's health status. Existing research focuses primarily on plant diseases. A plant disease is an abnormal condition in a plant caused by a pathogen, an organism that interferes with the normal processes of the plant's cells or tissues. Symptoms include spots, dead or dying tissue, fuzzy spores, bumps and bulges, and irregular coloration on fruits. The disease triangle describes the three conditions required for disease: a susceptible plant, a pathogen, and environmental conditions that favor infection. Plant pathogens such as bacteria, fungi, nematodes, viruses, and phytoplasmas can spread by contact, wind, water, and insects. Identifying the specific pathogen responsible is therefore crucial to implementing effective management strategies.

The plant health monitoring proposed in this paper goes beyond disease detection to monitor the plant's overall condition, for example by detecting nutrient deficiencies and assessing moisture and light levels. In this way, the system can determine the plant's health status, including pest infestations, nutrient deficiencies, and inadequate water or light. Neural networks are the primary technology used to achieve this. The classic convolutional neural network (CNN) is a type of artificial neural network designed to process pixel input and is widely used in image recognition [15].
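To make "designed to process pixel input" concrete, the sketch below implements in plain Python the three operations at the core of a classic CNN layer: a 2D convolution (implemented, as in most deep learning libraries, as cross-correlation), a ReLU activation, and max pooling. It is a teaching sketch with a hand-picked edge kernel and a toy image patch; a real health monitoring model would learn many kernels from labeled plant images using a deep learning framework.

```python
from typing import List

Matrix = List[List[float]]


def conv2d(image: Matrix, kernel: Matrix) -> Matrix:
    """'Valid' 2D cross-correlation: slide the kernel over the image without padding."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj] for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(fmap: Matrix) -> Matrix:
    """Element-wise ReLU: keep positive responses, zero out the rest."""
    return [[max(0.0, v) for v in row] for row in fmap]


def max_pool(fmap: Matrix, size: int = 2) -> Matrix:
    """Non-overlapping max pooling with a size x size window (leftover rows/cols dropped)."""
    return [
        [
            max(fmap[i + di][j + dj] for di in range(size) for dj in range(size))
            for j in range(0, len(fmap[0]) - size + 1, size)
        ]
        for i in range(0, len(fmap) - size + 1, size)
    ]


# A toy 4x4 grayscale patch with a bright vertical edge, and a vertical-edge kernel.
patch = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]
edge_kernel = [[-1, 1], [-1, 1]]
features = max_pool(relu(conv2d(patch, edge_kernel)))
print(features)  # [[18]]
```

The kernel responds only where brightness changes from left to right, which is the kind of local pattern (a lesion boundary, a discolored spot) that stacked learned kernels can pick up in leaf images.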

4. Conclusion

After surveying the plant health detection apps currently on the market, we found that the apps we examined identify the cause of a plant's disease directly, and most also offer additional features, such as reminders, to help users with treatment. Integrating these functions greatly increases convenience for users. However, most apps suffer from low efficiency due to a complex analysis process and from low identification accuracy, which often results in misidentified plant species or misdiagnosed diseases. To improve the efficiency and accuracy of plant health detection, we propose a two-stage identification strategy: 1) identifying the plant species, and 2) conducting health checks to provide diagnoses and treatment methods. During the health check, the system can diagnose issues such as pest infestations, nutrient deficiencies, and suboptimal environmental conditions. Implemented through automated monitoring, this strategy could substantially benefit the large-scale development of agriculture.

References

[1]. Liew O W, Chong P C J, Li B, et al. Signature optical cues: emerging technologies for monitoring plant health[J]. Sensors, 2008, 8(5): 3205-3239.

[2]. Siddagangaiah S. A novel approach to IoT based plant health monitoring system[J]. International Research Journal of Engineering and Technology, 2016, 3(11): 880-886.

[3]. Coatsworth P, et al. Continuous monitoring of chemical signals in plants under stress[J]. Nature Reviews Chemistry, 2023, 7(1): 7-25.

[4]. Chaerle L, Van Der Straeten D. Seeing is believing: imaging techniques to monitor plant health[J]. Biochimica et Biophysica Acta (BBA)-Gene Structure and Expression, 2001, 1519(3): 153-166.

[5]. Lo Presti D, et al. Current understanding, challenges and perspective on portable systems applied to plant monitoring and precision agriculture[J]. Biosensors and Bioelectronics, 2023, 222: 115005.

[6]. Khalid M, et al. Real-time plant health detection using deep convolutional neural networks[J]. Agriculture, 2023, 13(2): 510.

[7]. He K, Gkioxari G, Dollár P, et al. Mask R-CNN[C]//Proceedings of the IEEE International Conference on Computer Vision. 2017: 2961-2969.

[8]. Girshick R. Fast R-CNN[C]//Proceedings of the IEEE International Conference on Computer Vision. 2015: 1440-1448.

[9]. Ren S, He K, Girshick R, et al. Faster R-CNN: Towards real-time object detection with region proposal networks[J]. Advances in Neural Information Processing Systems, 2015, 28.

[10]. Cai Z, Vasconcelos N. Cascade R-CNN: Delving into high quality object detection[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2018: 6154-6162.

[11]. Liu W, Anguelov D, Erhan D, et al. SSD: Single shot multibox detector[C]//Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, October 11–14, 2016, Proceedings, Part I. Springer International Publishing, 2016: 21-37.

[12]. Zhang S, Wen L, Bian X, et al. Single-shot refinement neural network for object detection[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2018: 4203-4212.

[13]. Dai J, Qi H, Xiong Y, et al. Deformable convolutional networks[C]//Proceedings of the IEEE International Conference on Computer Vision. 2017: 764-773.

[14]. Lan W, Dang J, Wang Y, et al. Pedestrian detection based on YOLO network model[C]//2018 IEEE International Conference on Mechatronics and Automation (ICMA). 2018, 2152-7431.

[15]. Taye M M. Theoretical understanding of convolutional neural network: concepts, architectures, applications, future directions[J]. Computation, 2023, 11(3): 52.

Cite this article

Chen, M. (2024). New plant health monitoring framework. Applied and Computational Engineering, 95, 210-215.

Data availability

The datasets used and/or analyzed during the current study will be available from the authors upon reasonable request.

Disclaimer/Publisher's Note

The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of EWA Publishing and/or the editor(s). EWA Publishing and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

About volume

Volume title: Proceedings of the 6th International Conference on Computing and Data Science

© 2024 by the author(s). Licensee EWA Publishing, Oxford, UK. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license. Authors who publish this series agree to the following terms:

1. Authors retain copyright and grant the series right of first publication with the work simultaneously licensed under a Creative Commons Attribution License that allows others to share the work with an acknowledgment of the work's authorship and initial publication in this series.

2. Authors are able to enter into separate, additional contractual arrangements for the non-exclusive distribution of the series's published version of the work (e.g., post it to an institutional repository or publish it in a book), with an acknowledgment of its initial publication in this series.

3. Authors are permitted and encouraged to post their work online (e.g., in institutional repositories or on their website) prior to and during the submission process, as it can lead to productive exchanges, as well as earlier and greater citation of published work (See Open access policy for details).
