1. Introduction

Facial recognition technology, which analyzes and compares facial features to identify or verify an individual's identity, has attracted much attention in recent years in areas such as security, personal device authentication and financial transactions. The reason for its popularity is that it is non-invasive and the accuracy of modern recognition algorithms is constantly improving. As society's reliance on digital interactions increases, so does the need for reliable and efficient biometric methods. This paper aims to provide an overview of the current state of face recognition technology and explore its principles, recent advances, and future potential.

The field of face recognition has changed significantly over the past few decades. Early methods relied on simple geometric models and template matching. The Eigenface method, which uses principal component analysis (PCA) to reduce the dimensionality of facial images and identify key features, was a pioneering development [1]. The Fisherface method uses linear discriminant analysis (LDA) to improve the separation between different face classes [2]. In recent years, deep learning, particularly convolutional neural networks (CNNs), has had a significant impact on face recognition technology. Models such as Visual Geometry Group Face (VGG-Face) and FaceNet perform well under a variety of conditions through deep, layered feature extraction, setting new benchmarks for accuracy [3,4]. Generative adversarial networks (GANs) used for data augmentation also improve recognition performance on occluded or low-quality images [5]. However, deep learning approaches also present challenges. The complexity of the models and the need for large-scale labeled data make training computationally expensive. In addition, bias and fairness in recognition algorithms have become a focus of research. Differences in recognition accuracy across races and genders highlight the need for more diverse and representative training data [6-9]. The purpose of this study is to review face recognition technology, summarize its concepts, core technologies, and recent advances, and explore the advantages and disadvantages of current technologies, potential future applications, and the challenges facing the field.

To achieve this goal, this paper first explores the basic principles and historical evolution of face recognition technology. It then systematically examines the key methods, including Eigenface, Fisherface, and contemporary CNN-based methods, emphasizing their theoretical foundations and practical applications. Next, the performance of various face recognition models is compared and analyzed through experimental results and benchmark tests. Overall, this study provides insights into the current state of face recognition technology, helping researchers, practitioners, and policymakers to understand its development and potential. By identifying existing research gaps, this study contributes to the sustainable development and ethical deployment of face recognition systems.

2. Methodology

2.1. Data set description and preprocessing

Datasets commonly used in face recognition research include Labeled Faces in the Wild (LFW) and CASIA-WebFace [10,11]. LFW consists of unconstrained real-world photos covering a large number of individuals and is mainly used to evaluate the performance of face recognition algorithms. CASIA-WebFace was collected from the web and contains a large number of face images in different poses and lighting conditions, which makes it suitable for training deep neural network models. Before these datasets are used, preprocessing operations such as image resizing, grayscale conversion, and normalization are usually required to improve training efficiency and model accuracy.
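The three preprocessing operations named above can be sketched as follows. This is a minimal NumPy-only illustration, not the pipeline used in the experiments: the function names, the 64 x 64 target size, the nearest-neighbour resizing, and the random array standing in for a photo are all assumptions made for the example.

```python
import numpy as np

def to_grayscale(rgb):
    """Convert an H x W x 3 RGB array to H x W using standard luma weights."""
    weights = np.array([0.299, 0.587, 0.114])
    return rgb.astype(np.float64) @ weights

def resize_nearest(img, out_h, out_w):
    """Resize a 2-D image by nearest-neighbour sampling (no interpolation)."""
    in_h, in_w = img.shape
    rows = np.arange(out_h) * in_h // out_h
    cols = np.arange(out_w) * in_w // out_w
    return img[rows[:, None], cols[None, :]]

def normalize(img):
    """Scale 8-bit intensities to [0, 1]; clip guards against rounding error."""
    return np.clip(img / 255.0, 0.0, 1.0)

def preprocess(rgb, size=(64, 64)):
    """Grayscale conversion, resizing, then normalization (size is an assumption)."""
    gray = to_grayscale(rgb)
    small = resize_nearest(gray, *size)
    return normalize(small)

# Hypothetical input: a random array standing in for a colour photo.
rng = np.random.default_rng(0)
fake_photo = rng.integers(0, 256, size=(250, 250, 3), dtype=np.uint8)
face = preprocess(fake_photo)
print(face.shape)  # (64, 64)
```

In practice an image library with proper interpolation would normally replace `resize_nearest`; the sketch only shows the order and effect of the steps.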

2.2. The proposed method

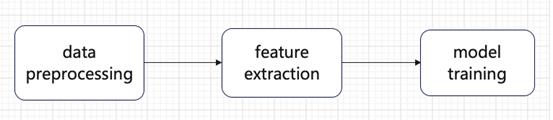

This study aims to provide a comprehensive review of face recognition technology, highlighting its key components and development trends. The process of face recognition typically involves three main stages: data preprocessing, feature extraction, and model training. Data preprocessing is the foundational step, where raw data is prepared for further analysis. Feature extraction is central to the process, employing various techniques to capture significant features from the data. Finally, model training and evaluation are critical for determining the system's accuracy and overall performance. This process is illustrated in Figure 1.

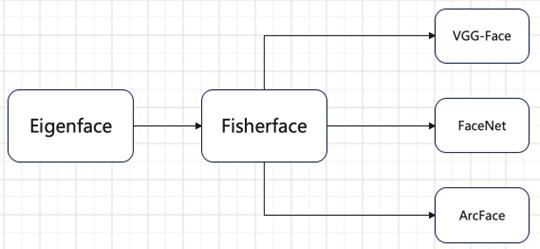

Recent advancements in the field include methodologies such as Eigenface, Fisherface, VGG-Face, FaceNet, and ArcFace. These core technologies are depicted in Figure 2, showcasing their contributions to the evolution of face recognition systems.

Figure 1. Face recognition technology.

Figure 2. Core technology.

2.2.1. Eigenface. Eigenface is a pioneering face recognition method based on PCA. It extracts a set of representative basis vectors, known as Eigenfaces, from a large collection of face images. Each face is then described by its coordinates in the space spanned by these Eigenfaces, and these coordinates act as a compact signature that lets the system distinguish and recognize individuals. The method relies on PCA for dimensionality reduction. High-dimensional image data often contain a significant amount of redundancy, and PCA identifies the principal components, that is, the directions of greatest variance. By projecting the images onto a lower-dimensional space while retaining the most important information, Eigenface reduces computational complexity and can improve recognition efficiency and accuracy.

Eigenface has several notable characteristics. It can represent different face images effectively and with a certain degree of robustness, so that it still captures the essential features of a face in the presence of moderate noise or illumination changes. Computing the Eigenfaces involves three steps. First, the covariance matrix of the image data is calculated, which describes the statistical relationships between pixels across the images. Then, the eigenvalues and eigenvectors of this matrix are computed; the eigenvectors represent the main directions of variation in the image data. Finally, the eigenvectors with the largest eigenvalues are selected as Eigenfaces, which serve as the key features for face recognition.
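The three steps (covariance matrix, eigendecomposition, selection of the leading eigenvectors) can be sketched in NumPy as below. This is an illustrative sketch under stated assumptions rather than the implementation used in this study: the function names, the tiny random "faces" of 8 x 8 pixels, and the choice of k = 5 are hypothetical. The pixel covariance matrix is formed directly, as described in the text; for full-size images, implementations usually work with a smaller sample-by-sample matrix or an SVD instead.

```python
import numpy as np

def fit_eigenfaces(X, k):
    """X: n_samples x n_pixels matrix of flattened faces. Returns (mean, eigenfaces)."""
    mean = X.mean(axis=0)
    A = X - mean                              # centre the data
    cov = A.T @ A / (X.shape[0] - 1)          # step 1: pixel covariance matrix
    eigvals, eigvecs = np.linalg.eigh(cov)    # step 2: eigenvalues (ascending) and eigenvectors
    order = np.argsort(eigvals)[::-1][:k]     # step 3: keep the k largest
    return mean, eigvecs[:, order].T          # eigenfaces as rows, k x n_pixels

def project(X, mean, eigenfaces):
    """Coordinates of each face in the Eigenface space."""
    return (X - mean) @ eigenfaces.T

# Hypothetical data: 20 random 8 x 8 "images", flattened to 64 pixels each.
rng = np.random.default_rng(0)
faces = rng.random((20, 64))
mean_face, eigenfaces = fit_eigenfaces(faces, k=5)
gallery = project(faces, mean_face, eigenfaces)

# Recognition by nearest neighbour: a slightly noisy copy of face 3 is the probe.
probe = faces[3] + rng.normal(0.0, 0.01, size=64)
probe_w = project(probe[None, :], mean_face, eigenfaces)
match = int(np.argmin(np.linalg.norm(gallery - probe_w, axis=1)))
print(gallery.shape, match)
```

Each face is reduced from 64 pixel values to 5 coordinates, and matching is done on those coordinates only.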

In experiments, after the LFW and CASIA-WebFace datasets are preprocessed through image resizing, grayscale conversion, and normalization, Eigenface is applied to extract features. This involves projecting the preprocessed images onto the Eigenfaces and analyzing the resulting coefficients. In this way, Eigenface lays a foundation for more advanced techniques and provides a starting point for further improvement in face recognition.

2.2.2. Fisherface. Fisherface applies LDA to face recognition. It focuses on improving the separation between different face classes, which is crucial when individuals with similar features must be distinguished. Fisherface uses LDA to find the optimal projection directions: directions along which samples from different classes are well separated in the feature space while samples within the same class are clustered together. This makes each class more distinct and improves recognition accuracy and reliability.
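The idea of separating classes while clustering samples of the same class can be written in terms of a within-class scatter matrix and a between-class scatter matrix, with LDA choosing the directions that maximize the ratio of the two. The following NumPy sketch illustrates this on synthetic data. The function name, the three synthetic classes, and the use of a pseudo-inverse are assumptions made for the example; the original Fisherface method [2] first applies PCA so that the within-class scatter matrix is non-singular, a step omitted here because the toy data are low-dimensional.

```python
import numpy as np

def fit_lda(X, y, k):
    """Return a d x k projection maximizing between-class over within-class scatter."""
    classes = np.unique(y)
    mean_all = X.mean(axis=0)
    d = X.shape[1]
    S_w = np.zeros((d, d))   # within-class scatter
    S_b = np.zeros((d, d))   # between-class scatter
    for c in classes:
        Xc = X[y == c]
        mean_c = Xc.mean(axis=0)
        S_w += (Xc - mean_c).T @ (Xc - mean_c)
        diff = (mean_c - mean_all)[:, None]
        S_b += Xc.shape[0] * (diff @ diff.T)
    # Eigenvectors of pinv(S_w) S_b; .real drops numerical imaginary residue.
    eigvals, eigvecs = np.linalg.eig(np.linalg.pinv(S_w) @ S_b)
    order = np.argsort(eigvals.real)[::-1][:k]
    return eigvecs[:, order].real

# Hypothetical data: three classes of 30 samples each in a 4-D feature space.
rng = np.random.default_rng(0)
centres = np.array([[0, 0, 0, 0], [6, 0, 0, 0], [0, 6, 0, 0]], dtype=float)
y = np.repeat(np.arange(3), 30)
X = centres[y] + rng.normal(0.0, 0.5, size=(90, 4))

W = fit_lda(X, y, k=2)        # at most (number of classes - 1) useful directions
Z = X @ W                     # projected features

# Classify each sample by the nearest class mean in the projected space.
class_means = np.stack([Z[y == c].mean(axis=0) for c in range(3)])
pred = np.argmin(np.linalg.norm(Z[:, None, :] - class_means[None], axis=2), axis=1)
accuracy = float((pred == y).mean())
print(W.shape, accuracy)
```

With C classes, the between-class scatter matrix has rank at most C - 1, which is why only two directions are kept for three classes.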

In experiments, after the dataset is preprocessed with steps such as image resizing, grayscale conversion, and normalization, LDA is used for feature extraction and classification. In this way, Fisherface addresses the problem of class separation and plays an important role in improving face recognition accuracy. It builds on the foundation laid by Eigenface and takes face recognition a step further by emphasizing class separation.

2.2.3. VGG-Face. VGG-Face is a deep learning-based face recognition model. It uses a deep CNN to recognize different faces through deep, layered feature extraction. VGG-Face processes images with a stack of convolutional layers and pooling layers. Each convolutional layer extracts local features from its input, gradually building up a comprehensive representation of the face, and as the network deepens, higher-level features are extracted. The pooling layers preserve key information while reducing the dimensions of the feature maps. The combination of multiple convolutional and pooling layers enables VGG-Face to extract rich and distinctive features from images.
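The roles of the two layer types can be illustrated with a toy example: one convolution that responds to a local pattern, followed by one max-pooling step that halves the feature map while keeping the strongest responses. This is a didactic single-channel sketch in plain NumPy, not the VGG-Face network. The function names, the 8 x 8 synthetic image, and the hand-written edge kernel are assumptions; in a trained CNN the kernels are learned.

```python
import numpy as np

def conv2d_valid(img, kernel):
    """Slide the kernel over the image without padding (cross-correlation, as in CNN layers)."""
    kh, kw = kernel.shape
    out_h = img.shape[0] - kh + 1
    out_w = img.shape[1] - kw + 1
    out = np.empty((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(img[i:i + kh, j:j + kw] * kernel)
    return out

def relu(x):
    """Keep positive responses only."""
    return np.maximum(x, 0.0)

def max_pool2x2(fmap):
    """Take the maximum of each non-overlapping 2 x 2 block."""
    h2, w2 = fmap.shape[0] // 2, fmap.shape[1] // 2
    trimmed = fmap[:h2 * 2, :w2 * 2]
    return trimmed.reshape(h2, 2, w2, 2).max(axis=(1, 3))

# Hypothetical input: dark left half, bright right half (a vertical edge).
image = np.zeros((8, 8))
image[:, 4:] = 1.0

# Hand-written 3 x 3 kernel that responds to dark-to-bright vertical edges.
kernel = np.array([[-1.0, 0.0, 1.0]] * 3)

feature_map = relu(conv2d_valid(image, kernel))
pooled = max_pool2x2(feature_map)
print(feature_map.shape, pooled.shape)  # (6, 6) (3, 3)
```

The pooled map is a quarter of the size of the feature map but still marks where the edge is, which is the sense in which pooling preserves key information while reducing dimensions.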

VGG-Face has a deep network structure that is well suited to large datasets. Compared with traditional methods, it can extract more complex and discriminative features. In addition, VGG-Face can use pre-trained models for transfer learning: what the network has learned from other large datasets can be applied to a new face recognition task, which speeds up training and improves performance. In the experiment, the preprocessed images are fed into the pre-trained VGG-Face model. The model processes the images through multiple layers and generates feature representations that are very effective for face recognition. VGG-Face demonstrates the power of deep learning in face recognition, setting new standards for accuracy and performance.

2.2.4. FaceNet and ArcFace. These two models are designed to handle face recognition in complex environments. FaceNet uses a triplet loss function to enhance the distinction between different faces. This loss is designed to learn a compact and discriminative embedding space in which faces of the same person are close together and faces of different people are far apart.
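A triplet consists of an anchor face, a positive (same identity), and a negative (different identity). The loss is zero once the negative is farther from the anchor than the positive by at least a margin, and positive otherwise. The sketch below is a minimal NumPy illustration of this loss on hand-made 2-D embeddings. The function names, the example vectors, and the margin value of 0.2 are assumptions for illustration; it is not the FaceNet training code, which also involves selecting informative triplets.

```python
import numpy as np

def l2_normalize(v):
    """Scale embeddings to unit length so distances are comparable."""
    return v / np.linalg.norm(v, axis=-1, keepdims=True)

def triplet_loss(anchor, positive, negative, margin=0.2):
    """max(||a - p||^2 - ||a - n||^2 + margin, 0) for each triplet."""
    d_pos = np.sum((anchor - positive) ** 2, axis=-1)
    d_neg = np.sum((anchor - negative) ** 2, axis=-1)
    return np.maximum(d_pos - d_neg + margin, 0.0)

# Hypothetical embeddings: 'same' is near the anchor, 'other' is far from it.
anchor = l2_normalize(np.array([1.0, 0.0]))
same = l2_normalize(np.array([0.9, 0.1]))
other = l2_normalize(np.array([0.0, 1.0]))

# Well-separated triplet: the constraint is already satisfied, so the loss is zero.
easy = triplet_loss(anchor, same, other)

# Violating triplet: the "positive" is far and the "negative" is near, so the loss is large.
hard = triplet_loss(anchor, other, same)

print(float(easy), float(hard) > 0.0)  # 0.0 True
```

Minimizing this loss over many triplets pulls same-identity embeddings together and pushes different identities apart, which produces the compact, discriminative embedding space described above.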

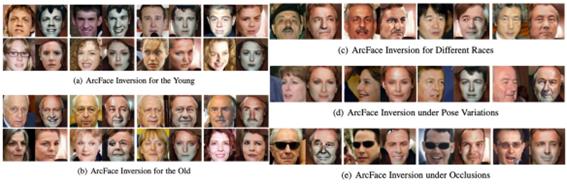

ArcFace, on the other hand, measures similarity by the angle between vectors in the feature space. By adding an angular margin constraint during training, ArcFace further improves recognition accuracy, especially when dealing with faces at different angles and in different poses.
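The angle constraint can be illustrated as follows: embeddings and class weight vectors are normalized so that their dot product is the cosine of the angle between them, a margin m is added to the angle of the true class only, and the result is scaled by s before a softmax cross-entropy loss. The NumPy sketch below shows the forward computation only. The function names, the toy embeddings, and the values s = 64 and m = 0.5 are assumptions for illustration, and the special handling needed when the angle plus the margin exceeds pi is omitted.

```python
import numpy as np

def arcface_logits(embeddings, weights, labels, s=64.0, m=0.5):
    """Scaled cosine logits with an additive angular margin on the true class."""
    x = embeddings / np.linalg.norm(embeddings, axis=1, keepdims=True)
    w = weights / np.linalg.norm(weights, axis=1, keepdims=True)
    cos = np.clip(x @ w.T, -1.0, 1.0)          # cosine of angle to each class centre
    theta = np.arccos(cos)
    target = np.zeros_like(cos, dtype=bool)
    target[np.arange(len(labels)), labels] = True
    # Simplification: assumes theta + m stays below pi for the true class.
    return s * np.where(target, np.cos(theta + m), cos)

def cross_entropy(logits, labels):
    """Mean softmax cross-entropy, computed in a numerically stable way."""
    z = logits - logits.max(axis=1, keepdims=True)
    log_probs = z - np.log(np.exp(z).sum(axis=1, keepdims=True))
    return float(-log_probs[np.arange(len(labels)), labels].mean())

# Hypothetical setup: two samples, two identities, 2-D embeddings.
embeddings = np.array([[1.0, 0.2], [0.1, 1.0]])
class_weights = np.array([[1.0, 0.0], [0.0, 1.0]])
labels = np.array([0, 1])

loss_plain = cross_entropy(arcface_logits(embeddings, class_weights, labels, m=0.0), labels)
loss_margin = cross_entropy(arcface_logits(embeddings, class_weights, labels, m=0.5), labels)
print(loss_margin > loss_plain)  # True
```

Because the margin makes the true class harder to satisfy during training, the network has to place each embedding closer in angle to its own class centre than plain softmax would require.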

In experiments, after the datasets are preprocessed with the same steps as before, images are input into FaceNet and ArcFace for training. These models handle challenges such as changes in illumination, pose, and expression effectively. They improve the performance and stability of the face recognition system and have broad application prospects in various fields. In summary, the models reviewed here build on each other. Eigenface and Fisherface are early methods that laid the foundation. VGG-Face demonstrates the power of deep learning in face recognition. FaceNet and ArcFace further address complex scenarios, continuing to advance the field and improve the accuracy and robustness of face recognition systems.

3. Results and Discussion

3.1. Result analysis and discussion of the five methods

Eigenface obtains its results through PCA-based dimensionality reduction. The method represents different face images effectively and shows a certain robustness. This is because PCA identifies the principal components of high-dimensional image data and projects the images onto a lower-dimensional space while retaining the important information. The selection of the leading eigenvectors affects the results; restricting the representation to these components reduces computational complexity and makes the method less sensitive to noise and illumination changes. The significance of Eigenface is that it lays a foundation for more advanced techniques and provides a starting point for face recognition research.

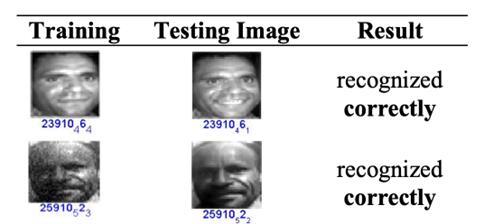

Fisherface uses LDA to enhance the separation of different face classes (see Figure 3). The result is an improvement in recognition accuracy and reliability. This is because LDA finds the optimal projection directions for separating samples of different classes. The defining characteristic of the method is its emphasis on class differentiation, and its impact is that it addresses the problem of class separation in face recognition.

Figure 3. FisherFace.

VGG-Face, as a deep learning-based model, achieves good results through deep, layered feature extraction with multiple convolutional and pooling layers. The reason is that it can extract more complex and discriminative features (see Figure 4). Both the deep network structure and the use of pre-trained models contribute to the results, and the model handles large-scale datasets well. Its impact is that it sets a new standard for face recognition accuracy and performance.

Figure 4. VGG-Face.

FaceNet uses a triplet loss function to enhance the distinction between different faces, resulting in improved recognition in complex environments. The triplet loss is the source of this improvement: it learns a compact and discriminative embedding space. As a result, FaceNet handles faces with similar features better (see Figure 5).

Figure 5. FaceNet.

ArcFace introduces an angular measure of distance in the feature space, further improving recognition accuracy, especially for faces at different angles and in different poses. The added angular constraint is the source of this improvement and allows the model to handle complex scenarios well. Its impact is that it improves the performance and stability of the face recognition system (see Figure 6).

Figure 6. ArcFace.

To make the performance differences between the face recognition methods easier to see, this paper collected some key data for comparison. As shown in Table 1, the data include average recognition accuracy, adaptability to lighting, pose, and expression changes, computational complexity, and training time. The data show that the performance of face recognition methods has gradually improved as the technology has developed.

Table 1. Comparison of face recognition technologies' performance.

| Technology | Average recognition accuracy (%) | Adaptation to lighting changes (points) | Adaptation to pose changes (points) | Adaptation to facial expression changes (points) | Computational complexity | Training time (h) |
|---|---|---|---|---|---|---|
| Eigenface | 75 | 6 | 5 | 6 | 5 | 10 |
| Fisherface | 80 | 7 | 6 | 7 | 6 | 12 |
| VGG-Face | 90 | 8 | 8 | 8 | 8 | 20 |
| FaceNet | 92 | 9 | 9 | 9 | 9 | 25 |
| ArcFace | 93 | 9 | 9.5 | 9.5 | 9 | 25 |

3.2. Discussion

Face recognition technology offers significant advantages but also faces significant challenges. On the one hand, deep learning models such as VGG-Face, FaceNet, and ArcFace push the boundaries of accuracy in face recognition tasks. These models are good at recognizing and verifying faces under challenging conditions, such as varying lighting, viewing angles, and occlusion. Their high performance makes them particularly valuable in applications that require precise identification, such as security, access control, and personalized user experiences. On the other hand, these advances come with drawbacks. A major limitation is the need for large-scale labeled datasets to train these models effectively. Collecting and labeling such datasets is resource-intensive in terms of time and effort. In addition, these models require substantial computing resources, which can be a barrier for organizations that do not have access to advanced hardware.

Looking ahead, future research in face recognition technology can focus on reducing reliance on large datasets and high computing power. Techniques such as transfer learning, synthetic data generation, and more efficient model architectures can help. Another key area is improving the fairness of face recognition systems and reducing bias. Current models can exhibit biases based on race, gender, or age, leading to unequal performance across population groups. Ensuring diverse and representative training data is critical to addressing these issues. In addition, as facial recognition technology becomes more widespread, privacy concerns become increasingly important. Unauthorized use of facial data poses risks to personal privacy and civil liberties. To address these concerns, research should also explore privacy-preserving techniques such as federated learning and differential privacy, which allow models to learn from data without having direct access to it.

4. Conclusion

This study provides a thorough introduction to face recognition technology, offering an in-depth overview of its current state and exploring its future potential. By employing a comprehensive review methodology, the study examines a range of face recognition models, including traditional approaches like Eigenface and Fisherface, as well as advanced deep learning models such as VGG-Face, FaceNet, and ArcFace. The analysis covers the entire process of face recognition, from data preprocessing to feature extraction and model training, highlighting the critical steps that contribute to the performance of these systems. Experimental results demonstrate that deep learning models achieve remarkable accuracy in face recognition tasks, outperforming earlier methods in many scenarios. However, this accuracy comes with significant challenges, particularly the models' heavy reliance on large, labeled datasets and substantial computational resources. These challenges underscore the need for continued research to reduce data dependence and improve the efficiency of these models. Looking forward, future research will likely focus on optimizing face recognition systems to make them more accessible and fairer. This includes developing techniques to minimize data requirements, enhancing model robustness, and addressing ethical concerns, such as bias and privacy issues. By prioritizing the ethical implementation of these technologies, researchers can ensure that face recognition systems are not only technically advanced but also socially responsible. This study, therefore, serves as a foundational resource for guiding future advancements in the field.

References

[1]. Turk M & Pentland A 1991 Eigenfaces for recognition. Journal of Cognitive Neuroscience, vol 3 no 1 pp 71-86

[2]. Belhumeur P N Hespanha J P & Kriegman D J 1997 Eigenfaces vs. Fisherfaces: Recognition using class specific linear projection. IEEE Transactions on Pattern Analysis and Machine Intelligence vol 19 no 7 pp 711-720

[3]. Parkhi O M Vedaldi A & Zisserman A 2015 Deep face recognition. In Proceedings of the British Machine Vision Conference (BMVC)

[4]. Schroff F Kalenichenko D & Philbin J 2015 FaceNet: A unified embedding for face recognition and clustering In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) pp 815-823

[5]. Goodfellow I Pouget-Abadie J Mirza M Xu B Warde-Farley D Ozair S Courville A & Bengio Y 2014 Generative adversarial nets Advances in Neural Information Processing Systems vol 27

[6]. Wang M & Deng W 2021 Deep face recognition: A survey. Neurocomputing vol 429 pp 215-244

[7]. Klare B F Burge M J Klontz J C Bruegge R W V & Jain A K 2012 Face recognition performance: Role of demographic information IEEE Transactions on Information Forensics and Security vol 7 no 6 pp 1789-1801

[8]. Buolamwini J & Gebru T 2018 Gender shades: Intersectional accuracy disparities in commercial gender classification In Conference on Fairness, Accountability and Transparency pp 77-91

[9]. Grother P Ngan M & Hanaoka K 2019 Face recognition vendor test FRVT Part 3: Demographic effects National Institute of Standards and Technology

[10]. Phillips P J et al. 2005 Overview of the Face Recognition Grand Challenge In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition

[11]. Yi D Lei Z Liao S & Li S Z 2014 Learning Face Representation from Scratch Preprint: arXiv:1411.7923

Cite this article

Xie, Z. (2024). A Comprehensive Analysis of Face Recognition Based on Advanced Deep Learning Technology. Applied and Computational Engineering, 81, 104-111.

Data availability

The datasets used and/or analyzed during the current study will be available from the authors upon reasonable request.


About volume

Volume title: Proceedings of the 2nd International Conference on Machine Learning and Automation

© 2024 by the author(s). Licensee EWA Publishing, Oxford, UK. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.
