1. Introduction

As autonomous driving technology advances, object detection remains a pivotal component impacting the safety and reliability of these systems. Despite significant improvements in the ability of vehicles to perceive and respond to their surroundings, particularly in complex driving conditions, significant challenges remain, with a primary one being the adaptation of object detection algorithms to varying lighting conditions, particularly in low-light environments. Traditional object detection algorithms primarily rely on RGB images, which are often enhanced through preprocessing steps. However, these enhancements can inadvertently result in the loss of crucial information, particularly under low-light conditions where RGB images perform inadequately. In contrast, RAW images can retain more of the original data, including detailed information on light intensity, potentially providing richer features for object detection. Despite this, their applications have not been thoroughly explored. Recent research demonstrates that RAW images can significantly enhance performance in low-light conditionss [1-3]. However, there is a distinct lack of research dedicated to the use of RAW images for object detection in autonomous driving scenarios, leaving the potential advantages of RAW data still under-explored. The paper explores the efficacy of RAW data for object detection in low-light environments, which addresses existing gaps in the field. The LOD dataset, including RAW and RGB images captured under both normal and low-light conditions, is utilized [4]. Specifically, RAW-normal and RAW-dark images are combined to form the RAW dataset, while RGB-normal and RGB-dark images constitute the RGB dataset. The models in both the control and experimental groups are trained by using three leading object detection frameworks: YOLOv8, Faster R-CNN, and EfficientDet [5-7]. The control group models are trained with an RGB dataset, while the experimental group models are trained with a RAW dataset. The results indicate that models trained with RAW data greatly outperformed those trained with RGB data in terms of detection accuracy and performance under low-light conditions. The improvement is attributed to RAW images preserving more of the original information, which enables more detailed and accurate detection and thereby enhances overall robustness.

2. Related Work

2.1. Object Detection in Computer Vision

Object detection represents a core challenge in computer vision, concerned with both identifying and precisely localizing objects within images or video sequences. Traditionally, object detection methods have been grounded in feature engineering coupled with machine learning algorithms, such as Support Vector Machines (SVM) and Adaboost [8]. Despite the significant successes of these early approaches, their dependence on manually engineered features frequently fell short in accurately capturing the complex and varied patterns encountered in real-world scenarios. The advent of deep learning, and in particular Convolutional Neural Networks (CNNs), has revolutionized object detection, leading to significant advancements in this field. Cutting-edge methods, including the R-CNN series, YOLO series, and SSD, have exhibited exceptional performance on benchmark datasets such as PASCAL VOC and COCO [9][10]. Nevertheless, these advanced frameworks are not without their challenges, particularly in adapting to dynamic environmental conditions, such as variable lighting.

2.2. Existing Research on RGB Image-based Object Detection

RGB images are the most commonly used format in computer vision and are extensively applied in various object detection tasks. Despite the ability of advanced processing techniques to enhance deep learning models with rich feature information, this approach is not without its limitations. For instance, Faster R-CNN, using Region Proposal Networks (RPN) in combination with subsequent classification and regression stages, often experiences performance degradation under low-light conditions [11]. Similarly, the YOLO series, although benefiting from its streamlined single-stage detection framework optimized for real-time processing, struggles with issues such as noise and detail loss in inadequate lighting environments. Even EfficientDet, which combines an advanced network architecture with a compound scaling strategy to improve both detection efficiency and accuracy, remains susceptible to performance issues under poor lighting conditions. These challenges highlight the inherent constraints faced by RGB-based models, particularly in low-illumination scenarios, where maintaining high levels of accuracy remains a significant challenge.

2.3. Application of RAW Data in Computer Vision

RAW images capture unprocessed data from camera sensors without processing by the Image Signal Processor (ISP), preserving detailed and comprehensive information. Unlike RGB images, which are typically 8-bit, RAW images use 12-bit or 14-bit depth, providing enhanced color accuracy, dynamic range, and detail retention. While the ISP performs tasks such as noise reduction, color correction, and contrast adjustment to improve visual quality, these processes can sometimes result in the loss of fine details. Consequently, the unprocessed data in RAW images remains valuable for specific applications. Recent studies are increasingly exploring advanced deep learning techniques to enhance RAW image processing for improved performance in various computer vision tasks. For example, CNNs have been employed to enhance feature extraction from RAW images, improving detail retention and recognition accuracy. Xu et al. optimized ISP techniques to better preserve the details and dynamic range of RAW images under low-light conditions, significantly enhancing detection accuracy [12]. Integrating RAW data into existing models presents substantial challenges, particularly regarding the large data volume and high computational complexity associated with processing RAW images. Large-scale RAW image databases, such as PASCALRAW and RAW-NOD, provide essential resources and experimental foundations for exploring the use of RAW images in object detection [13][14]. These datasets offer rich annotations and diverse scenes, fostering research into RAW image applications. Future research should focus on developing more efficient algorithms and processing architectures to manage the substantial data volumes and computational demands of RAW images. Additionally, einvestigating the integration of RAW data with other sensor modalities, such as LiDAR, could further enhance object detection performance. Although the full potential of RAW data in complex real-world applications has yet to be fully explored, its advantages in low-light scenarios and potential improvements through deep learning techniques indicate promising avenues for future research.

3. Methodology

3.1. Dataset Description

This study utilizes the LOD dataset, a collection rich in diverse scenes and varying lighting conditions, making it particularly effective for evaluating object detection performance in low-light environments. The dataset emulates a spectrum of lighting conditions for identical objects from consistent viewpoints through variations in camera exposure settings. Each image is precisely annotated with comprehensive information regarding object categories and spatial locations. And the dataset includes both RAW and RGB formats, capturing the same scene in different lighting conditions. The perspectives and object types included in the dataset closely resemble real-world scenarios encountered in autonomous driving, covering common traffic elements such as bicycles, cars, motorcycles, and buses. These characteristics make the LOD dataset highly relevant to autonomous driving research, as it can effectively simulate complex scenes and lighting variations encountered in actual driving environments, thereby providing realistic testing conditions for training and evaluating object detection systems in autonomous vehicles. Moreover, the dataset contains a total of 4,460 images, systematically divided into training, validation, and testing subsets in a 7:2:1 ratio. The training subset is used for model development, the validation subset for parameter tuning, and the testing subset for the final performance evaluation of the model. This structured division ensures the reliability and reproducibility of experimental results, enabling a comprehensive assessment of the model’s detection capabilities in simulated driving environments. In the pre-processing stage, RAW images, which contain the sensor’s raw output data, are standardized for noise reduction and white balance adjustments to ensure both consistency and quality. Specifically, noise reduction is achieved by using median filtering to remove noise artifacts, and white balance is optimized by using gray card white balance algorithms. Concurrently, RGB images are standardized to achieve a more uniform color distribution. All images are resized to meet the input requirements of various models, optimizing both efficiency and consistency during model training and inference.

3.2. Model Architecture

This study employes a combination of YOLOv8, Faster R-CNN, and EfficientDet to leverage their unique strengths and achieve robust object detection performance.

YOLOv8 is the latest iteration in the YOLO series, utilizing a single-stage detection framework that performs bounding box prediction and class labeling in a single pass. It features an improved network structure with enhanced feature extraction and a refined loss function to optimize object localization and classification. And this model is particularly suited for real-time applications, such as autonomous driving, where speed and accuracy are crucial. It utilizes an advanced network architecture to efficiently process RAW images for complex scenes with varying lighting conditions.

Faster R-CNN employs a two-stage detection approach. In the first stage, a RPN generates candidate object regions from convolutional feature maps. These proposed regions are subsequently refined in the second stage through classification and bounding box regression. The model excels in precision and is adept at producing detailed and accurate detections, even in challenging environments like low light or complex backgrounds. Faster R-CNN utilizes shared convolutional feature maps to enhance feature extraction and object localization. The model processes RAW images through a robust feature extraction pipeline, capturing fine details and maintaining accuracy in diverse conditions.

EfficientDet integrates the advanced feature extraction capabilities of EfficientNet with the BiFPN (Bidirectional Feature Pyramid Network), thus achieving an optimal balance between performance and efficiency. A notable innovation of EfficientDet is its compound scaling strategy, which concurrently optimizes network depth, width, and input resolution. This scaling approach allows the model to adapt flexibly across different computational budgets and operational scales, maintaining efficiency while scaling up or down. The model excels in scenarios with constrained computational resources, offering a high-performance, lightweight solution for diverse object detection tasks. Its efficient design ensures robust performance and high precision even under stringent resource limitations.

The selection of YOLOv8, Faster R-CNN, and EfficientDet ensures a comprehensive evaluation of object detection performance, as they cover a spectrum of application scenarios. YOLOv8 offers rapid detection for real-time applications, Faster R-CNN ensures high precision for detailed analysis, and EfficientDet delivers adaptability and efficiency. This strategic combination ensures that the study captures a comprehensive range of detection capabilities, from real-time processing to high-precision and resource-efficient tasks, providing a well-rounded assessment of object detection performance in diverse conditions.

3.3. Experimental Design

Images captured under normal lighting conditions in the dataset are categorized as RAW-normal and RGB-normal, whereas those taken under low-light conditions are labeled as RAW-dark and RGB-dark. For the comparative experiments, RAW datasets are created by merging RAW-normal and RAW-dark images, while RGB datasets are constructed by combining RGB-normal and RGB-dark images. These combined datasets are then utilized to train and evaluate the models, enabling comprehensive analysis of the performance discrepancy between RAW and RGB data in object detection in diverse lighting conditions. The models are trained using the Adam optimizer, selected for its adaptive learning rate capabilities and effectiveness in handling complex datasets. The initial learning rate is set to 0.001 and is dynamically adjusted using a step decay strategy, where the learning rate is reduced by a factor of 0.1 every 10 epochs. This adjustment helps in fine-tuning the model as it approaches convergence, improving training efficiency and model performance. YOLOv8, a single-stage object detection model, employs a loss function combining localization loss (for bounding box regression) and classification loss (for object classification). This integration simplifies the training process by addressing both tasks in a unified framework. Conversely, Faster R-CNN uses a multi-task loss function that separates Region Proposal Network (RPN) loss (for generating region proposals) from detection loss (for object classification and bounding box regression). This approach enhances precision by distinctly managing proposal generation and object detection. Both models are trained with a batch size of 32, balancing computational efficiency and model convergence. Besides, data augmentation techniques are applied, including random cropping, rotation, and color adjustments. Random cropping and rotation improve the model's ability to handle variations in object positioning and orientation, while color adjustments enhance its robustness to different lighting conditions. These enhancements help the model generalize better across different scenes. Evaluation metrics such as average precision (AP) and mean average precision (mAP) are adopted consistently across all models and datasets to ensure comparability and provide a robust assessment of detection performance.

3.4. Evaluation Metrics

Standard metrics such as mean Precision, Recall and Average Precision (mAP), were employed to evaluate the models' performance. mAP assesses detection accuracy across different object classes, Precision measures the accuracy of the model's predictions, and Recall reflects the completeness of the model's detections. Additionally, particular attention was given to the models’ performance under low-light conditions, with accuracy differences between models trained on RAW and RGB data being compared to analyze the advantages of RAW data.

\( Precision= \frac{TP}{TP+FP} \) (1)

\( Recall= \frac{TP}{TP+FN} \) (2)

\( AP=\int _{0}^{1}p(r)dr \) (3)

\( mAP=\frac{1}{N}\sum _{i=1}^{N}A{P_{i}} \) (4)

In the above Equations, TP denotes the number of true-positive samples, and FP denotes the number of false-positive samples. AP represents the area under the Precision-Recall (PR) curve, while mAP is the average of the AP values across all categories.

4. Results

4.1. Overall Detection Accuracy Comparison

In the experiments, models are trained using both RAW and RGB datasets, and their overall detection performance is evaluated. Table 1 provides the Precision, Recall, and mean Average Precision (mAP) scores for the three models, YOLOv8, Faster R-CNN, and EfficientDet, on the test set. The results demonstrate that models trained on RAW data consistently achieve superior detection accuracy compared to those trained on RGB data. This improvement is attributed to RAW data's ability to retain more detailed and original information from the images, which enhances the models' capacity to detect and classify objects more accurately.

Table 1. Performance of Various Models on RGB and RAW Datasets

Model | Dataset | Precision | Recall | mAP50 | mAP95 |

YOLOv8n | RGB | 0.821 | 0.644 | 0.724 | 0.521 |

RAW | 0.839 | 0.686 | 0.773 | 0.577 | |

Faster R-CNN | RGB | 0.574 | 0.768 | 0.756 | 0.532 |

RAW | 0.540 | 0.802 | 0.786 | 0.554 | |

EfficientDetd0 | RGB | 0.860 | 0.653 | 0.748 | 0.542 |

RAW | 0.875 | 0.673 | 0.766 | 0.559 |

The specific data include the mAP values, precision, and recall for each model, with separate statistics for performance under normal and low-light conditions. Overall, the introduction of RAW data significantly improved detection performance, with the advantages of RAW models being particularly evident in low-light scenarios. For the YOLOv8 model, the mAP50 increased by 4.9 percentage points, precision improved by 1.8 percentage points, and recall also saw an increase of 4.2 percentage points.

4.2. Detection Accuracy Under Low-Light Conditions

To further validate the advantage of RAW data in low-light conditions, the models' detection accuracy was specifically assessed on low-light images. Table 2 presents the Precision, Recall, and mAP scores for the three models under these conditions. The results indicate that models trained with RAW data achieve significantly higher detection accuracy in low-light environments compared to those trained with RGB data, which can be attributed to RAW data’s superior ability to preserve light intensity information, thereby facilitating more effective detail capture even in challenging lighting scenarios.

Table 2. Performance of Various Models on Low-Light Images in RGB and RAW Datasets

Model | Dataset | Precision | Recall | mAP50 | mAP95 |

YOLOv8n | RGB | 0.830 | 0.678 | 0.758 | 0.563 |

RAW | 0.838 | 0.724 | 0.805 | 0.607 | |

Faster R-CNN | RGB | 0.574 | 0.768 | 0.756 | 0.532 |

RAW | 0.586 | 0.819 | 0.804 | 0.586 | |

EfficientDet d0 | RGB | 0.860 | 0.653 | 0.748 | 0.542 |

RAW | 0.872 | 0.693 | 0.795 | 0.583 |

Detailed performance data under low-light conditions underscore the advantages of using RAW data. For instance, the mAP50 score for the YOLOv8 model increased by 4.2 percentage points with RAW data, accompanied by notable improvements in Precision and Recall. Similarly, Faster R-CNN and EfficientDet also demonstrated significant performance gains when trained with RAW data.

In experiments utilizing the YOLOv8 model for object detection, notable improvements were observed in the accuracy of certain object categories that previously exhibited low recognition rates with RGB images, as detailed in Table 3. For example, the pedestrian category, which historically showed poor recognition accuracy under low-light conditions, achieved substantial improvement with RAW data. This enhancement is attributed to RAW data’s superior capacity to capture and preserve details in low-light scenarios, allowing the YOLO model to extract features more effectively and accurately identify complex targets. Furthermore, RAW data’s retention of original light intensity information allows the model to more effectively handle intricate lighting conditions such as shadows and reflections, thereby reducing both false positives and missed detections. Consequently, the YOLO model trained on RAW data achieves significant performance improvements across multiple challenging object categories, demonstrating the advanced capabilities of RAW data in enhancing object detection accuracy.

Table 3. Performance Comparison of YOLOv8n Model on Specific Categories in Low-Light Images for RGB and RAW Datasets

Category | Dataset | Precision | Recall | mAP50 | mAP95 |

All | RGB | 0.830 | 0.678 | 0.758 | 0.563 |

RAW | 0.838 | 0.724 | 0.805 | 0.607 | |

Bicycle | RGB | 0.914 | 0.663 | 0.756 | 0.516 |

RAW | 0.893 | 0.726 | 0.835 | 0.597 | |

Car | RGB | 0.916 | 0.732 | 0.852 | 0.647 |

RAW | 0.925 | 0.827 | 0.917 | 0.779 | |

Motorbike | RGB | 0.757 | 0.619 | 0.691 | 0.486 |

RAW | 0.803 | 0.712 | 0.789 | 0.572 | |

Bus | RGB | 0.933 | 0.904 | 0.951 | 0.753 |

RAW | 0.880 | 0.858 | 0.882 | 0.701 | |

Bottle | RGB | 0.734 | 0.585 | 0.636 | 0.462 |

RAW | 0.871 | 0.703 | 0.820 | 0.517 | |

Chair | RGB | 0.796 | 0.587 | 0.684 | 0.468 |

RAW | 0.859 | 0.595 | 0.689 | 0.489 | |

Diningtable | RGB | 0.719 | 0.560 | 0.625 | 0.437 |

RAW | 0.629 | 0.527 | 0.579 | 0.402 | |

Tvmonitor | RGB | 0.868 | 0.771 | 0.868 | 0.735 |

RAW | 0.845 | 0.840 | 0.932 | 0.802 |

4.3. Performance Comparison of Detection Models with RAW and RGB Data

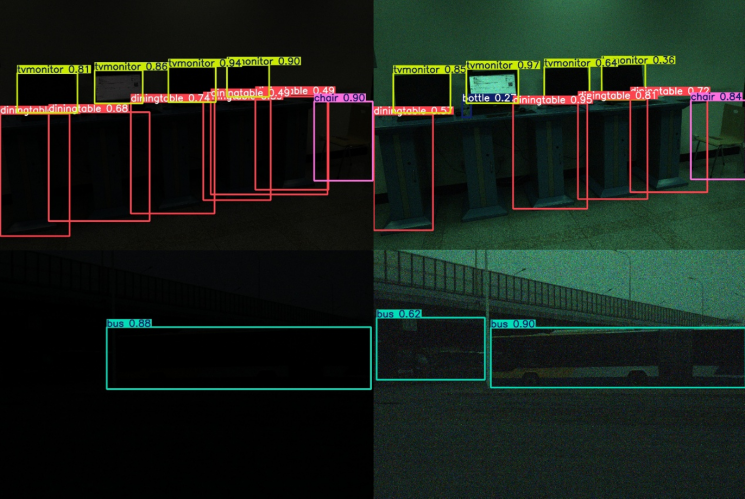

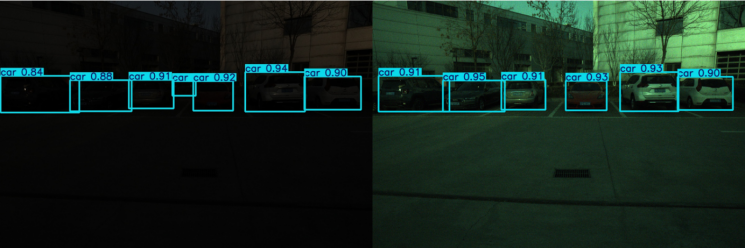

In this experiment, YOLOv8, Faster R-CNN, and EfficientDet each displayed unique strengths. YOLOv8, as a single-stage detection model, excelled in real-time detection tasks due to its speed and efficiency. With RAW data, YOLOv8 maintained its detection speed while improving accuracy, especially when handling low-light images. Faster R-CNN, a two-stage detection model, demonstrated significant advantages in accuracy. The experimental results indicate that, with RAW data, Faster R-CNN maintained a balanced high Precision and Recall, particularly in complex background and multi-object detection scenarios, making it ideal for tasks requiring precise localization. EfficientDet is known for its lightweight and high-performance capabilities. The experiments showed that EfficientDet, with RAW data, further enhanced its performance in resource-constrained environments. Its compound scaling strategy effectively utilized image information at different resolutions, particularly excelling under low-light conditions. The superior performance of RAW data in low-light environments is primarily due to its retention of the original information captured by the sensor, unprocessed by the ISP. While the ISP typically improves visual quality through noise reduction, white balance, and gamma correction, these processes may also lead to the loss of critical detail information. In contrast, RAW data preserves the full range of light and color information, providing a higher dynamic range and finer details. As shown in Figure 1, especially in low-light settings, aiding the detection models in more accurately identifying objects. As shown in Figure 1, this is particularly evident in low-light settings, where RAW data aids detection models in more accurately identifying objects, detecting smaller objects with greater precision, and identifying darker objects that might otherwise be missed.

Figure 1. Objects undetected on RGB images were detected on RAW images.

Furthermore, as shown in Figure 2, object detection on RAW images reduces the false alarm rate compared to detection on RGB images, which highlights the higher accuracy provided by RAW data.

Figure 2. RAW images demonstrated a reduction in false positives.

Compared to models trained with RGB datasets, those trained with RAW data showed significant improvements in both detection accuracy and recall. The experimental results demonstrate that, across all models (YOLOv8, Faster R-CNN, EfficientDet), RAW data particularly excels under low-light conditions. This advantage becomes more pronounced as RGB data suffer from noise and detail loss. The high fidelity and rich information in RAW data offer a broader range of feature extraction options, thereby enhancing detection outcomes.

4.4. Impact of RAW Pre-training vs. RGB Pre-training on Model Performance

The next phase of the research aims to investigate whether models pre-trained on RAW data and subsequently fine-tuned on conventional RGB images exhibit superior performance compared to models trained exclusively on RGB data. YOLOv8 is chosen for this investigation. In the experiment, the experimental group undergoes 50 epochs of pre-training on RAW data with YOLOv8, while the control group receives 50 epochs of pre-training on RGB data. Both groups then continue with 50 epochs of fine-tuning on RGB data, employing identical training protocols to ensure consistency and fairness in the comparison. As presented in Table 4, the results demonstrate that models pre-trained on RAW data show enhanced performance during the subsequent RGB fine-tuning phase. This finding supports the advantage of RAW data in the pre-training stage, particularly in complex scenarios and low-light conditions. The high fidelity and detailed information preserved in RAW data during pre-training establish a robust foundation for subsequent RGB training, resulting in significant enhancements in detection accuracy and recall.

Table 4. Performance Comparison of RAW Pre-Training vs. RGB Pre-Training on Low-Light Images in RGB and RAW Datasets

Category | Dataset | Precision | Recall | mAP50 | mAP95 |

All | RGB-pre | 0.853 | 0.637 | 0.734 | 0.504 |

RAW-pre | 0.887 | 0.622 | 0.742 | 0.518 | |

Bicycle | RGB-pre | 0.838 | 0.591 | 0.711 | 0.480 |

RAW-pre | 0.923 | 0.606 | 0.730 | 0.506 | |

Car | RGB-pre | 0.882 | 0.710 | 0.800 | 0.608 |

RAW-pre | 0.965 | 0.660 | 0.808 | 0.625 | |

Motorbike | RGB-pre | 0.900 | 0.714 | 0.811 | 0.552 |

RAW-pre | 0.894 | 0.653 | 0.791 | 0.529 | |

Bus | RGB-pre | 0.874 | 0.732 | 0.809 | 0.584 |

RAW-pre | 0.917 | 0.732 | 0.839 | 0.621 | |

Bottle | RGB-pre | 0.864 | 0.600 | 0.774 | 0.379 |

RAW-pre | 0.912 | 0.667 | 0.817 | 0.421 | |

Chair | RGB-pre | 0.783 | 0.537 | 0.625 | 0.453 |

RAW-pre | 0.848 | 0.543 | 0.635 | 0.455 | |

Diningtable | RGB-pre | 0.811 | 0.642 | 0.713 | 0.512 |

RAW-pre | 0.834 | 0.581 | 0.715 | 0.513 | |

Tvmonitor | RGB-pre | 0.872 | 0.571 | 0.630 | 0.467 |

RAW-pre | 0.804 | 0.536 | 0.603 | 0.476 |

5. Conclusion

The results reveal the substantial advantages of employing RAW data for object detection, particularly in advanced applications such as autonomous driving. In low-light environments, RAW data markedly enhances object recognition capabilities, thereby providing more reliable performance for self-driving cars at night and in other challenging lighting conditions. This improvement not only augments system safety and dependability, but paves the way for new technological advancements in ITS. In addition, it is found that combining RAW data with state-of-the-art object detection models can greatly improve performance, suggesting a fruitful avenue for future deep learning research and applications. However, several notable challenges remain. The high processing and storage demands of RAW data necessitate the development of more efficient compression and processing techniques to manage computational resources effectively. In addition, the diversity in RAW data formats from different imaging devices emphasizes the need for standardized processing protocols to ensure consistency and interoperability. Integrating RAW data with complementary sensor modalities, such as infrared and depth sensors, is a promising approach to advancing multimodal fusion detection models, which can enhance the ability of systems to interpret complex environments more comprehensively. Furthermore, investigating the application of RAW data in a range of unfavorable conditions, including fog, rain, and snow, is crucial for improving the robustness and adaptability of autonomous driving systems. Such investigations will contribute to the development of more resilient and practical solutions for real-world scenarios.

References

[1]. Ljungbergh, W., Johnander, J., et al. (2013) Raw or Cooked? Object Detection on Raw Images. Scandinavian Conference on Image Analysis, Springer Nature Switzerland, 374-385.

[2]. Omid-Zohoor, A., Ta, D., Murmann, B. (2014) PASCALRAW: Raw Image Database for Object Detection. Stanford Digital Repository.

[3]. Li, Z.H., Lu, M., et al. (2024) Efficient Visual Computing with Camera Raw Snapshots. IEEE Transactions on Pattern Analysis and Machine Intelligence, 46(7): 4684-4701.

[4]. Hong, Y., Wei, K., Chen, L. and Fu, Y. (2021) Crafting Object Detection in Very Low Light. in BMVC. https://github.com/Linwei-Chen/LIS.git.

[5]. Jocher, G., Chaurasia, A. and Qiu, J. (2023) YOLO by Ultralytics. https://docs.ultralytics.com/

[6]. Girshick, R. (2015) Fast R-CNN. IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 1440-1448.

[7]. Tan, M., et al. (2020) EfficientDet: Scalable and Efficient Object Detection. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 10781-10790.

[8]. Osuna, E., et al. (1997) Training Support Vector Machines: An Application to Face Detection. IEEE Computer Society Conference on Computer Vision and Pattern Recognition, 130-136.

[9]. [9] Girshick, R., et al. (2014) Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. IEEE Conference on Computer Vision and Pattern Recognition, 580-587.

[10]. Redmon, J., et al. (2016) You Only Look Once: Unified, Real-Time Object Detection. 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 779-788.

[11]. Jeon, M., Seo, J., Min, J. DA-RAW: Domain Adaptive Object Detection for Real-World Adverse Weather Conditions. arXiv preprint arXiv:2309.08152, 2023.

[12]. Xu, R., et al. (2023) Toward Raw Object Detection: A New Benchmark and a New Model. 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). 13384-13393.

[13]. Omid-Zohoor, A., Ta, D. and Murmann, B. (2015) PASCALRAW: Raw Image Database for Object Detection. Stanford Digital Repository. http://purl.stanford.edu/hq050zr7488

[14]. Morawski, I., et al. (2022) GenISP: Neural ISP for Low-Light Machine Cognition. IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), 629-638.

Cite this article

Xie,Z. (2024). Impact of RAW vs. RGB images on low-light object detection in autonomous driving. Applied and Computational Engineering,81,148-157.

Data availability

The datasets used and/or analyzed during the current study will be available from the authors upon reasonable request.

Disclaimer/Publisher's Note

The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of EWA Publishing and/or the editor(s). EWA Publishing and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

About volume

Volume title: Proceedings of the 2nd International Conference on Machine Learning and Automation

© 2024 by the author(s). Licensee EWA Publishing, Oxford, UK. This article is an open access article distributed under the terms and

conditions of the Creative Commons Attribution (CC BY) license. Authors who

publish this series agree to the following terms:

1. Authors retain copyright and grant the series right of first publication with the work simultaneously licensed under a Creative Commons

Attribution License that allows others to share the work with an acknowledgment of the work's authorship and initial publication in this

series.

2. Authors are able to enter into separate, additional contractual arrangements for the non-exclusive distribution of the series's published

version of the work (e.g., post it to an institutional repository or publish it in a book), with an acknowledgment of its initial

publication in this series.

3. Authors are permitted and encouraged to post their work online (e.g., in institutional repositories or on their website) prior to and

during the submission process, as it can lead to productive exchanges, as well as earlier and greater citation of published work (See

Open access policy for details).

References

[1]. Ljungbergh, W., Johnander, J., et al. (2013) Raw or Cooked? Object Detection on Raw Images. Scandinavian Conference on Image Analysis, Springer Nature Switzerland, 374-385.

[2]. Omid-Zohoor, A., Ta, D., Murmann, B. (2014) PASCALRAW: Raw Image Database for Object Detection. Stanford Digital Repository.

[3]. Li, Z.H., Lu, M., et al. (2024) Efficient Visual Computing with Camera Raw Snapshots. IEEE Transactions on Pattern Analysis and Machine Intelligence, 46(7): 4684-4701.

[4]. Hong, Y., Wei, K., Chen, L. and Fu, Y. (2021) Crafting Object Detection in Very Low Light. in BMVC. https://github.com/Linwei-Chen/LIS.git.

[5]. Jocher, G., Chaurasia, A. and Qiu, J. (2023) YOLO by Ultralytics. https://docs.ultralytics.com/

[6]. Girshick, R. (2015) Fast R-CNN. IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 1440-1448.

[7]. Tan, M., et al. (2020) EfficientDet: Scalable and Efficient Object Detection. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 10781-10790.

[8]. Osuna, E., et al. (1997) Training Support Vector Machines: An Application to Face Detection. IEEE Computer Society Conference on Computer Vision and Pattern Recognition, 130-136.

[9]. [9] Girshick, R., et al. (2014) Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. IEEE Conference on Computer Vision and Pattern Recognition, 580-587.

[10]. Redmon, J., et al. (2016) You Only Look Once: Unified, Real-Time Object Detection. 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 779-788.

[11]. Jeon, M., Seo, J., Min, J. DA-RAW: Domain Adaptive Object Detection for Real-World Adverse Weather Conditions. arXiv preprint arXiv:2309.08152, 2023.

[12]. Xu, R., et al. (2023) Toward Raw Object Detection: A New Benchmark and a New Model. 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). 13384-13393.

[13]. Omid-Zohoor, A., Ta, D. and Murmann, B. (2015) PASCALRAW: Raw Image Database for Object Detection. Stanford Digital Repository. http://purl.stanford.edu/hq050zr7488

[14]. Morawski, I., et al. (2022) GenISP: Neural ISP for Low-Light Machine Cognition. IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), 629-638.