1. Introduction

Artificial intelligence has transformed quantitative trading by enabling more sophisticated models that discover patterns and execute trades faster. However, the opaque nature of most machine learning models, deep learning in particular, has raised concerns about their trustworthiness, interpretability, and regulatory compliance. These concerns are especially acute where financial decisions carry high risk. Investors, auditors and regulators demand transparency so that AI predictions follow best practice and rest on auditable reasoning. Explainable AI (XAI) emerged in response to these concerns, aiming to explain models without compromising performance. Attention mechanisms, one well-known XAI method, dynamically rank input variables by their relevance, which makes them both flexible and interpretable [1]. For example, in times of economic volatility, attention can shift to macroeconomic measures such as bond yields and unemployment, while in stable markets, technical signals such as moving averages take centre stage. This flexibility can improve predictive accuracy and also gives stakeholders visibility into the decision-making process. In this article, we explore how attention mechanisms can foster transparency and efficiency in quantitative trading. We examine their potential to counter bias, improve regulatory compliance, and promote stakeholder trust. We also address the practical issues of applying attention mechanisms, namely computational cost and integration with existing systems. By showing how attention mechanisms can reconcile performance and interpretability, this article contributes to the growing literature on sustainable and ethical use of AI in finance.

2. Explainable AI in Quantitative Trading

2.1. The Need for Transparent Trading Models

Transparency is fundamental to quantitative trading because it builds trust and accountability in investment decisions. The opacity of machine learning models, especially those built on sophisticated algorithms, can be a red flag for investors, auditors and regulators. That opacity also raises questions about the accuracy of predictions and hinders wider adoption of AI-powered trading systems. Transparent models address this issue by explaining their predictions in a straightforward way that lowers the model-complexity barrier and helps stakeholders understand them. These models provide actionable information about decision-making, which helps build trust, supports regulatory compliance and enables more efficient model debugging and development. Transparent models also contribute to risk mitigation by helping to spot and correct errors in trading models. For example, they can reveal problems such as overfitting or excessive reliance on spurious correlations that carry no predictive meaning [2]. By exposing such vulnerabilities, transparent models help ensure that trading strategies are robust, scalable and resilient to market dynamics. Furthermore, this visibility supports long-term financial goals through safe, trustworthy decision-making in trading systems. Transparency not only instils trust in AI but also helps it become a tool for sustainable, responsible financial practice in an increasingly complex market.

2.2. Challenges in Factor Relevance and Interpretation

Financial markets are highly complex and dynamic, which makes factor selection and interpretation difficult in quantitative trading. Factors such as macroeconomic indicators, price movements, trading volume, and market sentiment interact in non-linear ways, and their importance may change rapidly as market conditions evolve. Classic feature selection methods, though successful in static environments, tend to lose track of these dynamic interactions, which results in models that either overfit particular situations or underfit wider market dynamics. Furthermore, the abundance of data poses an additional challenge because it becomes harder to separate truly informative signals from irrelevant noise [3]. Complicating matters, machine learning models, and deep learning models in particular, tend to be regarded as black boxes. This opacity can make it hard for stakeholders to trust a model's outputs when a decision carries a high level of financial risk. Addressing these issues requires approaches that both prioritise relevant factors and give intuitive explanations of their importance. Such techniques enable analysts to understand and validate the model's decision-making process in response to market trends, which in turn enhances performance and reliability [4].

2.3. The Role of Attention Mechanisms

Attention mechanisms are a prominent approach to the twin problems of factor selection and model interpretability in quantitative trading. By dynamically assigning importance weights to the input features, they allow models to focus on the factors that are most relevant for a prediction. Specifically, attention mechanisms compute a weight \( {α_{i}} \) for each input feature \( {x_{i}} \) using a compatibility function, often expressed as:

\( {α_{i}}=\frac{\exp({e_{i}})}{\sum_{j=1}^{n}\exp({e_{j}})}, \quad \text{where } {e_{i}}=f({x_{i}},h) \) (1)

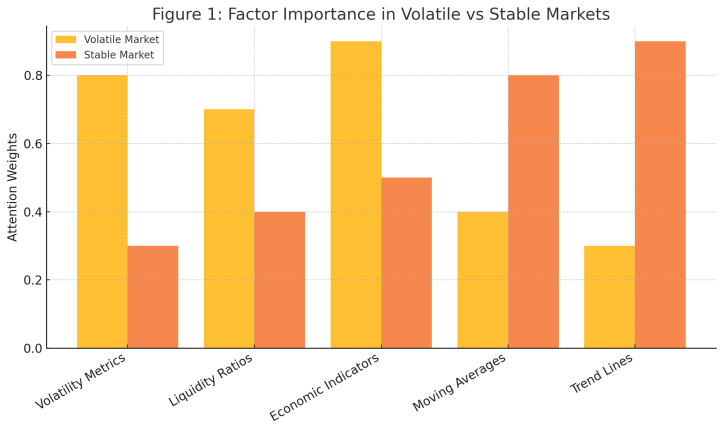

Here, \( {e_{i}} \) represents the compatibility score of the input \( {x_{i}} \), determined by a function \( f({x_{i}},h) \) that evaluates the relevance of \( {x_{i}} \) in the context of the hidden state \( h \). The weights \( {α_{i}} \) are non-negative and sum to one, and they are used to calculate a weighted sum of the input features, allowing the model to emphasize the most critical factors for prediction. For example, during market volatility, attention mechanisms might assign higher weights to features such as volatility metrics, liquidity ratios, and other economic indicators to reflect their greater importance. In stable markets, by contrast, weights could shift toward technical analysis signals such as moving averages or trend lines. This contextual flexibility keeps the model responsive to evolving market conditions, which can improve its predictive ability. In addition to their performance benefits, attention mechanisms provide a transparent framework for understanding model behavior. By visualizing the attention weights \( {α_{i}} \), stakeholders can gain insight into the factors that drive predictions, offering valuable interpretability in regulated environments where decision accountability is critical [5].
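To make Equation (1) concrete, the following minimal Python sketch computes the attention weights and the resulting weighted sum. It is illustrative only: the article does not specify the compatibility function, so a plain dot product is assumed for \( f({x_{i}},h) \), and the factor embeddings and hidden state are hypothetical values.

```python
import math

def attention_weights(features, hidden):
    """Equation (1): softmax over compatibility scores e_i = f(x_i, h)."""
    # Assumption: f is a plain dot product between x_i and h.
    scores = [sum(x * h for x, h in zip(x_i, hidden)) for x_i in features]
    peak = max(scores)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(e - peak) for e in scores]
    total = sum(exps)
    return [v / total for v in exps]

def weighted_sum(features, weights):
    """Combine the feature vectors into one context vector using alpha_i."""
    dim = len(features[0])
    return [sum(w * x_i[d] for w, x_i in zip(weights, features))
            for d in range(dim)]

# Hypothetical 2-dimensional embeddings for three factors.
features = [
    [1.0, 0.0],  # volatility metric
    [0.5, 0.5],  # liquidity ratio
    [0.0, 1.0],  # moving average
]
hidden = [2.0, 0.0]  # hypothetical hidden state stressing the first dimension

alpha = attention_weights(features, hidden)
context = weighted_sum(features, alpha)
print([round(a, 3) for a in alpha])
```

With these assumed inputs the scores are 2, 1 and 0, so the volatility metric receives the largest weight and the moving average the smallest. Changing `hidden` changes the ranking, which is the context dependence described above.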

3. Factor Selection with Attention Mechanisms

3.1. Identifying Relevant Factors in Dynamic Markets

Markets are constantly in flux, and the role of interest rates, trading volumes and macroeconomic indicators shifts with global and regional developments. Attention mechanisms are well suited to this changing environment: they recognise patterns across time and across markets and allocate weights to the factors that matter most. For instance, in periods of economic risk, bond yields, unemployment rates and inflation figures tend to be the most influential drivers of decisions because they measure macroeconomic conditions. Conversely, in bull markets, attention models can pivot toward momentum, sector growth and investor sentiment to capture rising markets. Because of this adaptability, models are not limited by fixed factor weights, which quickly become outdated in volatile contexts. Instead, attention mechanisms allow rapid adjustment so that predictions match the current market environment. This capability reduces the risk of overfitting or of relying on irrelevant factors, which makes trading strategies more robust and practical. In addition, when trained on temporal dependencies, attention models can pick up interrelations that traditional approaches miss. For example, after a central bank policy change, interdependencies between interest rates, currencies and equity markets play a key role, and attention mechanisms can dynamically prioritize the relevant factors [6]. This flexibility not only increases the accuracy of predictive models but also offers a sound basis for constructing data-driven, context-aware trading strategies.
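The contrast with fixed factor weights can be sketched in a few lines of Python. Everything in this example is an assumption made for illustration: the factor names, the two-dimensional loadings, and the two context vectors standing in for a stressed market and a bull market are hypothetical and are not taken from the article's data.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that are positive and sum to one."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [v / total for v in exps]

# Hypothetical loadings: (sensitivity to stress signal, sensitivity to bull signal).
LOADINGS = {
    "bond_yield":   (1.5, 0.2),
    "unemployment": (1.2, 0.1),
    "inflation":    (1.3, 0.3),
    "momentum":     (0.2, 1.6),
    "sentiment":    (0.3, 1.4),
}

def factor_weights(context):
    """Score each factor against the current context, then normalise."""
    names = list(LOADINGS)
    scores = [sum(l * c for l, c in zip(LOADINGS[n], context)) for n in names]
    return dict(zip(names, softmax(scores)))

# Hypothetical context vectors for two market regimes.
stress_weights = factor_weights((1.0, 0.0))
bull_weights = factor_weights((0.0, 1.0))

for label, weights in (("stress", stress_weights), ("bull", bull_weights)):
    top = max(weights, key=weights.get)
    print(label, "-> top factor:", top)
```

The same factors are re-ranked purely because the context vector changes. A model with fixed weights would return one ranking for both regimes.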

3.2. Visualizing Factor Importance for Stakeholders

The visualization of factor importance is one of the most powerful attributes of attention mechanisms and provides concrete insight into model behaviour. Attention scores (the relative importance assigned to each input variable, which together sum to one) can be plotted to give a simple visual explanation of how the model arrives at its predictions. For example, in a model of stock prices, attention scores may show that technical parameters such as moving averages and RSI are more important in times of stability, while macro variables such as GDP growth and inflation are more important in volatile times [7]. This transparency helps stakeholders verify the model's decision-making and make sure it aligns with their strategy. Figure 1 gives an example: a bar chart of attention scores for different factors under various market conditions. The chart shows that in times of economic uncertainty, volatility, liquidity ratios, and economic indicators receive higher attention weights (note the taller bars for these features). In stable periods, however, attention turns to technical indicators and sector-level metrics, which are more informative in those conditions. This chart not only shows the contextual flexibility of attention mechanisms, but also gives stakeholders insight into what they need to monitor closely when making decisions.

Figure 1: Factor Importance in Volatile vs Stable Markets

By providing such visual feedback, attention mechanisms can bridge the gap between sophisticated ML models and the practical needs of financial analysts, portfolio managers and regulators. They open up otherwise black-box models, show which inputs drive each prediction, and allow analysts to refine models and adjust trading rules based on data. This transparency builds stakeholder trust and makes AI-powered systems understandable and actionable.
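A comparison of the kind shown in Figure 1 can be mocked up without any plotting library. The sketch below renders attention scores as horizontal text bars. The scores are hypothetical illustrative values chosen to mirror the qualitative pattern described above; they are not the values behind Figure 1.

```python
def bar_chart(scores, width=40):
    """Render attention scores as horizontal text bars, largest first."""
    lines = []
    ranked = sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
    for name, score in ranked:
        bar = "#" * round(score * width)
        lines.append(f"{name:<20} {bar} {score:.2f}")
    return "\n".join(lines)

# Hypothetical attention scores; each regime sums to 1.
volatile = {
    "volatility": 0.35,
    "liquidity_ratio": 0.25,
    "economic_indicators": 0.20,
    "moving_average": 0.10,
    "sector_metrics": 0.10,
}
stable = {
    "volatility": 0.10,
    "liquidity_ratio": 0.10,
    "economic_indicators": 0.15,
    "moving_average": 0.35,
    "sector_metrics": 0.30,
}

print("Volatile market")
print(bar_chart(volatile))
print()
print("Stable market")
print(bar_chart(stable))
```

In practice the dictionaries would be filled with attention weights averaged over the predictions made in each regime, and the same data could be passed to a charting library for presentation to stakeholders.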

3.3. Integrating Attention Mechanisms in Trading Systems

Attention mechanisms combine machine learning with financial domain knowledge in a trading system, allowing models to select relevant factors without sacrificing theoretical validity. For example, during monetary policy adjustments, attention can focus on inflation and interest rates, while for industry-level analysis it can focus on sector growth and firm performance. This approach harnesses the power of machine learning without sacrificing interpretability or domain expertise. Attention also facilitates scalability by automating factor selection and reducing human intervention, and it supports compliance by making model choices explicit. With improved predictive sensitivity and accuracy, attention mechanisms enable banks to craft adaptive, transparent strategies in response to changing market conditions.

4. Enhancing Transparency in AI Models

4.1. Understanding Decision-Making Processes

Transparency in decision-making is key to the adoption of AI in trading, where trust and accountability play a critical role. Attention mechanisms are well suited to providing this transparency because they show how a model arrives at its predictions, giving a direct view into the decision process. By weighting and displaying input parameters, they enable analysts to identify the variables with the highest impact on model outputs. For example, when estimating market conditions, attention-based models can indicate the importance of economic indicators such as GDP growth or consumer sentiment, so that one can clearly see how these influence forecasts. This ability to explain the reasoning behind predictions allows stakeholders to rely on the output of AI models. It provides an interpretive capability that can be tied to established financial principles, so that decisions are grounded in data and not only in theory. This transparency also supports compliance with regulatory rules, which often require an auditable, justified decision-making process. By breaking down the barrier between dense model logic and stakeholder understanding, attention mechanisms reduce skepticism and foster trust among investors, auditors and regulators. The transparency offered by attention mechanisms also drives the further development of AI-powered trading initiatives. When stakeholders know why some inputs are prioritized, they are more likely to accept and promote these systems [8]. This trust helps to overcome resistance to change in fields such as finance, where decisions have long been based on human judgment. Being able to visualize and justify choices not only adds to trust but also allows AI to be integrated into existing workflows, enabling new, transparent trading strategies that support both the goals of the organisation and the requirements of the regulator.

4.2. Reducing Bias and Improving Accountability

AI model bias is problematic for quantitative trading and can lead to poor trades, regulatory issues, and reputational damage. Attention mechanisms help to detect and correct such biases by exposing which factors drive predictions. For instance, if a model favors a particular industry or region, attention scores can reveal the tendency, enabling analysts to investigate the source of the bias. This increased visibility supports transparency and more equitable decision-making, which are essential for regulatory compliance and stakeholder confidence [9]. Table 1 summarizes the typical sources of bias in quantitative trading models, an example of each, and how attention mechanisms mitigate them. For example, feature selection bias can lead to over-reliance on historical trends, and data imbalance bias skews predictions towards overrepresented sectors. Attention mechanisms counter these biases by dynamically adjusting feature importance according to market conditions, which supports balanced and context-sensitive decision-making.

Table 1: Common Biases in Quantitative Trading Models and How Attention Mechanisms Address Them

| Type of Bias | Example | How Attention Mechanisms Mitigate |
| --- | --- | --- |
| Feature Selection Bias | Over-reliance on historical patterns that no longer hold relevance. | Dynamically adjust feature importance to reflect current conditions. |
| Data Imbalance Bias | Skewed predictions due to overrepresentation of certain sectors. | Equalize focus across sectors by redistributing attention weights. |
| Geographic Bias | Favoring specific regions in global trading strategies. | Highlight relevant regions dynamically based on contextual factors. |
| Temporal Bias | Ignoring time-sensitive changes in factor relevance. | Adapt weights in real time to reflect evolving market conditions. |
| Confirmation Bias | Reinforcing existing assumptions in strategy design. | Introduce diverse perspectives by dynamically reweighting overlooked factors. |

Additionally, because factor importance is tracked continuously, attention mechanisms allow proactive adjustments to trading strategies without introducing systematic errors or unpredictable effects on model behavior. For instance, when market conditions change, attention mechanisms can shift weight away from over-weighted segments toward factors that are more relevant, keeping predictions in line with current conditions [10]. Such dynamic flexibility, paired with the transparency of attention mechanisms, promotes accountability and trust in AI-powered trading strategies.
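One simple way to use attention scores for the bias checks discussed in this section is to aggregate them by sector and flag sectors that attract a disproportionate share. The Python sketch below is a hedged illustration: the function names, the feature-to-sector mapping, the sample attention rows and the `tolerance` threshold are all hypothetical choices, and comparison against an equal split is only one possible baseline.

```python
from collections import defaultdict

def attention_share_by_group(attention_rows, feature_group):
    """Average attention mass per group (e.g. sector) across predictions."""
    totals = defaultdict(float)
    for row in attention_rows:
        for feature, weight in row.items():
            totals[feature_group[feature]] += weight
    n = len(attention_rows)
    return {group: total / n for group, total in totals.items()}

def flag_concentration(shares, tolerance=1.5):
    """Return groups whose share exceeds `tolerance` times an equal split."""
    equal_share = 1.0 / len(shares)
    return sorted(g for g, s in shares.items() if s > tolerance * equal_share)

# Hypothetical mapping from model features to sectors.
feature_group = {
    "tech_momentum": "tech",
    "tech_earnings": "tech",
    "energy_prices": "energy",
    "bank_spreads": "financials",
}

# Hypothetical attention weights from two predictions (each row sums to 1).
attention_rows = [
    {"tech_momentum": 0.45, "tech_earnings": 0.30,
     "energy_prices": 0.15, "bank_spreads": 0.10},
    {"tech_momentum": 0.40, "tech_earnings": 0.30,
     "energy_prices": 0.10, "bank_spreads": 0.20},
]

shares = attention_share_by_group(attention_rows, feature_group)
flagged = flag_concentration(shares)
print(shares)
print("Sectors to review:", flagged)
```

A flag from a check like this is a prompt for investigation, not proof of bias: a sector may legitimately dominate attention in some periods, so the analyst still has to examine why the weights are concentrated.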

5. Conclusion

Attention mechanisms represent a significant development in explainable AI for quantitative trading, addressing the problems of transparency, trust and flexibility. By dynamically ranking relevant factors and delivering actionable information about how the model reaches its predictions, they help bridge the gap between advanced machine learning algorithms and the business needs of the financial sector. These mechanisms reduce risk by uncovering biases and vulnerabilities, improve scalability by automating factor selection, and support compliance with strict regulatory standards. They also adapt readily to changing market environments, which makes them well suited to intelligent, adaptive trading strategies. As financial markets grow ever more fluid and data-intensive, incorporating attention into trading systems offers a sustainable long-term approach. Attention mechanisms allow institutions to create transparent, responsible and effective AI-driven strategies that satisfy organizational and regulatory mandates. Future research should focus on optimizing these mechanisms for large-scale financial applications, making them accessible and efficient in a variety of trading contexts. With their combination of performance and interpretability, attention mechanisms have the potential to reshape quantitative trading and to promote innovation and confidence in AI-based financial decision-making.

References

[1]. Kalra, Ashima, and Ruchi Mittal. "Explainable AI for Improved Financial Decision Support in Trading." 2024 11th International Conference on Reliability, Infocom Technologies and Optimization (Trends and Future Directions)(ICRITO). IEEE, 2024.

[2]. Yasin, Alhassan S., and Prabdeep S. Gill. "Reinforcement Learning Framework for Quantitative Trading." arXiv preprint arXiv:2411.07585 (2024).

[3]. Han, Henry, et al. "Explainable machine learning for high frequency trading dynamics discovery." Information Sciences 684 (2024): 121286.

[4]. Khattak, Bilal Hassan Ahmed, et al. "A systematic survey of AI models in financial market forecasting for profitability analysis." IEEE Access (2023).

[5]. Akinrinola, Olatunji, et al. "Navigating and reviewing ethical dilemmas in AI development: Strategies for transparency, fairness, and accountability." GSC Advanced Research and Reviews 18.3 (2024): 050-058.

[6]. Amendola, Daniela, et al. "From Voxels to Insights: Exploring the Effectiveness and Transparency of Graph Neural Networks in Brain Tumor Segmentation." 2024 International Joint Conference on Neural Networks (IJCNN). IEEE, 2024.

[7]. Rane, Nitin, Saurabh Choudhary, and Jayesh Rane. "Explainable Artificial Intelligence (XAI) approaches for transparency and accountability in financial decision-making." Available at SSRN 4640316 (2023).

[8]. Luong, Raymond, and Jessica Kay Flake. "Measurement invariance testing using confirmatory factor analysis and alignment optimization: A tutorial for transparent analysis planning and reporting." Psychological Methods 28.4 (2023): 905.

[9]. Singh, Atul Kumar, et al. "Revealing the barriers of blockchain technology for supply chain transparency and sustainability in the construction industry: an application of pythagorean FAHP methods." Sustainability 15.13 (2023): 10681.

[10]. Ospital, Pantxika, et al. "Toward product transparency: Communicating traceability information to consumers." International Journal of Fashion Design, Technology and Education 16.2 (2023): 186-197.

Cite this article

Leng, R. (2025). Explainable AI in Quantitative Trading: Factor Selection and Model Transparency with Attention Mechanisms. Applied and Computational Engineering, 120, 146-151.

Data availability

The datasets used and/or analyzed during the current study will be available from the authors upon reasonable request.

About volume

Volume title: Proceedings of the 5th International Conference on Signal Processing and Machine Learning

© 2024 by the author(s). Licensee EWA Publishing, Oxford, UK. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license. Authors who publish this series agree to the following terms:

1. Authors retain copyright and grant the series right of first publication with the work simultaneously licensed under a Creative Commons Attribution License that allows others to share the work with an acknowledgment of the work's authorship and initial publication in this series.

2. Authors are able to enter into separate, additional contractual arrangements for the non-exclusive distribution of the series's published version of the work (e.g., post it to an institutional repository or publish it in a book), with an acknowledgment of its initial publication in this series.

3. Authors are permitted and encouraged to post their work online (e.g., in institutional repositories or on their website) prior to and during the submission process, as it can lead to productive exchanges, as well as earlier and greater citation of published work (See Open access policy for details).
