1. Introduction

The adoption of Artificial Intelligence (AI) systems within media technology platforms has changed how content is created, consumed, and moderated. AI now supports content creation across diverse media formats, including news articles, music tracks, videos, and interactive experiences. Using natural language processing (NLP) and generative adversarial networks (GANs), AI technologies have automated creative processes that traditionally required significant human effort, raising operational efficiency and productivity. AI platforms can autonomously produce written content, music, and visual media, which opens new creative possibilities for the media industry.

These capabilities raise critical ethical questions about the authenticity and originality of AI-generated content. Because AI can spread bias and false information, there are concerns about whether it can uphold journalistic ethics and maintain public confidence. The problem is compounded when automated content creation systems reproduce biases in their underlying data, which intensifies existing challenges of fairness and diversity in media representation. Combining AI with virtual reality (VR) and augmented reality (AR) storytelling tools raises further ethical concerns. Immersive experiences require extensive personal data collection to customize storylines for individual users, which raises concerns about privacy, user consent, and data security [1]. As the distinction between virtual experiences and real-life interactions fades, users may suffer psychological harm and find it harder to distinguish genuine from synthetic interactions.

Digital platforms now frequently deploy AI-powered automated moderation systems to identify harmful content such as hate speech and misinformation. Although these systems deliver operational efficiency and scalability, they struggle with context interpretation and fairness, which can result in excessive censorship and biased removal of content. This research evaluates the ethical dimensions of AI technologies in media across three applications: content creation, automated content moderation, and immersive storytelling. It employs surveys, focus group discussions, and experimental simulations to understand user perceptions of AI in media and to identify ethical issues stemming from its extensive use.

2. Literature Review

2.1. AI in Content Generation

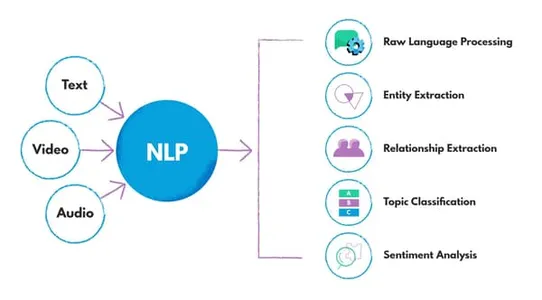

Media content creation increasingly relies on AI, driven by advances in natural language processing (NLP) and generative adversarial networks (GANs) that allow creative tasks in journalism, video production, and music creation to be automated. These technologies improve productivity by making labor-intensive tasks far more efficient: AI-driven platforms can autonomously produce news articles, music, and video content [2]. Figure 1 illustrates machine learning applications on social media platforms, which automate content creation, identify trends, and personalize user experiences, demonstrating AI's expanding influence in media. While these technologies boost operational efficiency, they raise questions about the originality and authenticity of AI-generated content. There is growing concern that AI-generated content could amplify biases and spread false information, undermining public confidence in media outlets. Dependence on AI tools also raises concerns about inherent bias and the risk of misuse when developers neglect proper oversight and ethical standards in content creation [3].

Figure 1: How Machine Learning is Used on Social Media Platforms (Source: analyticsvidhya.com)

2.2. AI in Game and VR Content Creation

Combining AI with virtual reality (VR) and augmented reality (AR) has created new opportunities for deeply immersive storytelling. These technologies let users participate in interactive narratives that change in response to their real-time decisions and input. AI-generated dynamic virtual characters and environments are key to creating emotionally and intellectually engaging experiences. However, these immersive experiences also introduce ethical complications. A primary concern is AI's ability to influence user emotions and actions through carefully designed storylines that subtly shape decision-making [4]. Building immersive environments also requires gathering extensive personal data, which raises privacy concerns about how user consent and data security are handled. Finally, the convergence of real experience with AI-generated stories casts doubt on the authenticity of those experiences and may affect users psychologically when they struggle to differentiate real from virtual interactions.

2.3. AI in Automated Moderation Systems

Digital platforms increasingly rely on AI-driven automated content moderation systems to detect harmful content, including hate speech, graphic violence, and misinformation. These systems apply machine learning techniques to identify and flag inappropriate content, offering an efficient way to manage the massive volume of user-generated content on social media platforms and online forums. Although they increase moderation efficiency, they face substantial operational difficulties. AI systems struggle to interpret language context accurately and to detect linguistic subtleties, which hinders their performance in identifying hate speech and misinformation [4]. There is also concern that insufficient transparency about how these systems operate could lead to excessive censorship and biased moderation decisions. Because AI moderation depends on pre-established rules and datasets, it may unintentionally favor specific political and social perspectives, raising ethical issues about freedom of speech and expression.
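The context problem described above can be illustrated with a deliberately naive rule-based filter. The sketch below is a toy example, not a description of any platform's actual system: the blocklist `BLOCKED_TERMS`, the function `flag_by_keywords`, and the sample posts are all hypothetical and chosen only to show how a fixed rule can over-flag benign content.

```python
import re

# Hypothetical blocklist for illustration only; real moderation systems
# combine far richer rules with learned models.
BLOCKED_TERMS = {"attack", "kill"}


def flag_by_keywords(text: str) -> bool:
    """Return True if any blocked term appears as a whole word in the text."""
    words = set(re.findall(r"[a-z']+", text.lower()))
    return bool(words & BLOCKED_TERMS)


# Hypothetical sample posts: one plausibly harmful, two benign.
posts = [
    "We will attack them at dawn.",                  # plausibly harmful
    "This update will kill the battery drain bug.",  # benign technical idiom
    "Heart attack symptoms everyone should know.",   # benign health information
]

for post in posts:
    print(flag_by_keywords(post), "-", post)
```

Because the rule looks only at individual words, it flags all three posts, including the two benign ones. This is the kind of over-removal that the text attributes to systems that cannot interpret context.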

3. Experimental Methodology

3.1. Research Design

The study utilizes a mixed-methods approach which integrates qualitative and quantitative research methods to investigate the ethical implications of AI within the media field. Expert interviews and focus group discussions make up the qualitative research methods whereas user surveys and experimental simulations serve as quantitative methods to study media consumer perceptions and behaviors towards AI technologies [5].

3.2. Data Collection

The research collected data from multiple sources. Online surveys were distributed to media consumers, including journalists, content creators, and general users, to understand their experiences with AI-generated content, immersive storytelling, and automated moderation systems. The research team conducted focus group discussions with media professionals and ethicists to explore the ethical challenges that AI technologies present. The study also included experimental simulations that measured participant reactions to AI-driven media experiences, including AI-generated news articles and immersive VR storylines.

3.3. Data Analysis

Quantitative survey data were analyzed with statistical software to detect patterns and relationships in participant attitudes toward AI media technologies. Qualitative data from interviews and focus groups were coded using thematic analysis to identify shared ethical issues and viewpoints [6]. The mixed-methods approach provides a fuller understanding of users by capturing both their emotional reactions to and their reasoning about AI technologies in media.

4. Experimental Process

4.1. Survey Implementation

The survey was distributed to media consumers from different backgrounds, including journalism and media production professionals and everyday users who engage with AI-created content. Participants were asked for their views on AI as a content creator, their trust in automated moderation systems, and their personal experiences with immersive AI storytelling. The survey also sought to measure respondents' ethical concerns about bias in AI algorithms and privacy risks from immersive technology use [7]. The results presented in Table 1 summarize participant perspectives on AI's role in content creation, their trust in moderation systems, and their ethical concerns about bias and privacy. In particular, 60% of participants strongly agreed that AI contributes significantly to content creation, while 55% strongly agreed that bias in AI-generated content is a concern.

Table 1: Survey Results: AI In Media

| Category | Strongly Agree (%) | Agree (%) | Neutral (%) | Disagree (%) | Strongly Disagree (%) |
|---|---|---|---|---|---|
| AI Role in Content Creation | 60 | 30 | 10 | 0 | 0 |
| Trust in Moderation Systems | 45 | 40 | 15 | 0 | 0 |
| Ethical Concerns (Bias) | 55 | 35 | 5 | 5 | 0 |
| Ethical Concerns (Privacy) | 40 | 45 | 10 | 5 | 0 |
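One common way to summarize Likert distributions like those in Table 1 is a combined agreement share ("Strongly Agree" plus "Agree") and a weighted mean score. The sketch below applies both to the Table 1 percentages. The 5-to-1 numeric coding and the helper names `top_two_box` and `mean_score` are assumptions made for illustration; the paper does not state how responses were scored.

```python
# Percentages copied from Table 1, ordered from Strongly Agree to Strongly Disagree.
table_1 = {
    "AI Role in Content Creation": [60, 30, 10, 0, 0],
    "Trust in Moderation Systems": [45, 40, 15, 0, 0],
    "Ethical Concerns (Bias)": [55, 35, 5, 5, 0],
    "Ethical Concerns (Privacy)": [40, 45, 10, 5, 0],
}

# Assumed numeric coding (not given in the paper): Strongly Agree = 5 ... Strongly Disagree = 1.
SCORES = [5, 4, 3, 2, 1]


def top_two_box(row: list[int]) -> int:
    """Share of respondents who chose Strongly Agree or Agree."""
    return row[0] + row[1]


def mean_score(row: list[int]) -> float:
    """Percentage-weighted mean of the assumed 5-to-1 coding."""
    return sum(s * p for s, p in zip(SCORES, row)) / sum(row)


for category, row in table_1.items():
    print(f"{category}: agree = {top_two_box(row)}%, mean = {mean_score(row):.2f}")
```

Under this coding, combined agreement is 85% or higher in every category. The table shows widespread agreement rather than divided opinion.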

4.2. Focus Group Discussions

Media professionals, ethicists, and technology experts participated in focus group discussions to examine AI's ethical impacts on the media industry. Participants shared their thoughts on ethical issues such as data privacy in immersive storytelling, the trustworthiness of AI moderation systems, and the risk of AI content spreading bias or misinformation. The discussions gave the experts an opportunity to explore perspectives on AI integration in media and the ethical considerations it requires [8]. Focus group responses, summarized in Table 2, show 80% agreement on the reliability of AI moderation systems, while 70% of participants raised data privacy concerns in immersive storytelling.

Table 2: Focus Group Ethical Challenges

| Ethical Challenge | Agree (%) | Disagree (%) | Neutral (%) |
|---|---|---|---|
| Data Privacy in Immersive Storytelling | 70 | 20 | 10 |
| Reliability of AI Moderation Systems | 80 | 10 | 10 |
| Bias in AI-Generated Content | 75 | 15 | 10 |
| Misinformation in AI Content | 65 | 25 | 10 |

4.3. Experimental Simulations

In the experimental simulations, participants were exposed to a sequence of AI-powered media technologies and their reactions were recorded. Participants read AI-generated news articles, evaluated AI-powered virtual reality systems, and observed automated content moderation systems in operation. The aim was to determine how AI technologies affect user engagement and trust, and how ethical concerns such as transparency and bias shape user responses to AI-generated media content [9]. Participants' reactions to AI content depended on their ethical concerns about transparency, which suggests that ethical design principles are essential for building user trust and engagement.

5. Experimental Results

5.1. Survey Findings

Users recognize AI-driven media technologies as beneficial yet remain concerned about their ethical implications. Most survey participants were uneasy about bias in the AI algorithms used to generate content: Table 3 shows that 78% expressed concern about AI algorithm bias, while 22% did not. Privacy in immersive storytelling was also a widespread concern, with 72% of participants calling for increased transparency and control over their personal information. Finally, 65% of participants questioned whether automated moderation systems can correctly identify harmful content without overreaching.

Table 3: Survey Findings On AI Ethics

| Concern Area | Percentage Concerned (%) | Percentage Not Concerned (%) |
|---|---|---|
| Bias in AI Algorithms | 78 | 22 |
| Privacy Risks in Immersive Storytelling | 72 | 28 |
| Reliability of Moderation Systems | 65 | 35 |
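How much weight percentages like those in Table 3 can bear depends on the number of respondents, which is not reported here. As a hedged illustration, the sketch below computes a 95% Wilson score interval for the 78% bias-concern figure under several hypothetical sample sizes. The function name `wilson_interval` and the values of `n` are assumptions for illustration, not figures from the study.

```python
import math


def wilson_interval(p_hat: float, n: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a proportion p_hat observed in n responses.

    z = 1.96 corresponds to an approximate 95% confidence level.
    """
    denom = 1 + z * z / n
    centre = (p_hat + z * z / (2 * n)) / denom
    half_width = z * math.sqrt(p_hat * (1 - p_hat) / n + z * z / (4 * n * n)) / denom
    return centre - half_width, centre + half_width


# 0.78 is the share concerned about AI algorithm bias in Table 3.
# The sample sizes are hypothetical, since the paper does not report n.
for n in (50, 200, 1000):
    low, high = wilson_interval(0.78, n)
    print(f"n = {n}: {low:.3f} to {high:.3f}")
```

The interval narrows as `n` grows, so the same 78% figure supports a much stronger claim with a large sample than with a small one.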

5.2. Focus Group Insights

Focus group participants identified a crucial need for AI algorithms to operate with greater transparency during content creation and moderation. Table 4 shows that 82% of participants believe AI systems need operational transparency to earn trust. Participants also demanded accountability for AI systems (88% agreement in Table 4), with established procedures for handling ethical breaches. A majority agreed that users need more control over their data in immersive storytelling environments, with 75% stating that such control is essential. Experts stressed that informed consent and clear communication about data usage are fundamental ethical requirements.

Table 4: Focus Group Insights On AI Transparency And Data Control

| Ethical Concern | Percentage of Agreement (%) |
|---|---|
| Transparency in AI Algorithms | 82 |
| User Control over Data | 75 |
| Accountability for AI Systems | 88 |

5.3. Simulation Outcomes

Experimental simulations demonstrated that ethical considerations could greatly increase user trust in AI media technologies. Users exposed to AI systems that prioritize transparency and ethical design demonstrated greater levels of interaction with AI-created content and storytelling experiences. Limited transparency and exposure to biased content from AI technologies led to decreased user trust and diminished engagement. This research demonstrates that ethical design principles play a crucial role in building user trust and facilitating positive experiences with AI media applications.

6. Conclusion

This study shows how Artificial Intelligence (AI) has become a transformative force within the media industry through its applications in content generation, immersive storytelling, and automated content moderation. AI technologies deliver substantial operational improvements and creative opportunities, but they also present multiple ethical issues that require careful consideration. The surveys, focus group discussions, and simulations show that users recognize the ethical risks associated with AI in media, chiefly bias, privacy, and the need for transparency. Responsible implementation requires ethical design that prioritizes transparency, accountability, and user control of personal data. Media industry stakeholders need to work together to create specific frameworks and guidelines that safeguard users' rights and interests as AI technology advances. The long-term social implications of AI-powered media technologies require further investigation, particularly their effects on trust, the spread of misinformation, and the psychological outcomes of immersive narrative experiences. Resolving these ethical challenges will allow AI to serve both media organizations and society while supporting a media environment that is transparent, fair, and engaging for audiences.

References

[1]. de-Lima-Santos, Mathias-Felipe, and Wilson Ceron. "Artificial intelligence in news media: current perceptions and future outlook." Journalism and Media 3.1 (2021): 13-26.

[2]. Natale, Simone. Deceitful media: Artificial intelligence and social life after the Turing test. Oxford University Press, 2021.

[3]. Pavlik, John V. "Collaborating with ChatGPT: Considering the implications of generative artificial intelligence for journalism and media education." Journalism & Mass Communication Educator 78.1 (2023): 84-93.

[4]. Limna, Pongsakorn, et al. "A review of artificial intelligence (AI) in education during the digital era." Advance Knowledge for Executives 1.1 (2022): 1-9.

[5]. Anantrasirichai, Nantheera, and David Bull. "Artificial intelligence in the creative industries: a review." Artificial Intelligence Review 55.1 (2022): 589-656.

[6]. Zhang, Caiming, and Yang Lu. "Study on artificial intelligence: The state of the art and future prospects." Journal of Industrial Information Integration 23 (2021): 100224.

[7]. Noain Sánchez, Amaya. "Addressing the Impact of Artificial Intelligence on Journalism: The perception of experts, journalists and academics." (2022).

[8]. Fitria, Tira Nur. "Artificial intelligence (AI) technology in OpenAI ChatGPT application: A review of ChatGPT in writing English essay." ELT Forum: Journal of English Language Teaching. Vol. 12. No. 1. 2023.

[9]. Brem, Alexander, Ferran Giones, and Marcel Werle. "The AI digital revolution in innovation: A conceptual framework of artificial intelligence technologies for the management of innovation." IEEE Transactions on Engineering Management 70.2 (2021): 770-776.

Cite this article

Mao, W. (2025). Technological Realization of AI in Media: Ethical Implications for Content Generation, Immersive Storytelling, and Automated Moderation Systems. Applied and Computational Engineering, 138, 199-204.

Data availability

The datasets used and/or analyzed during the current study will be available from the authors upon reasonable request.

About volume

Volume title: Proceedings of the 3rd International Conference on Software Engineering and Machine Learning

© 2024 by the author(s). Licensee EWA Publishing, Oxford, UK. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.
