31730908127@qq.com

1. Introduction

As science, technology and the artificial intelligence industry continue to develop and iterate, machines account for a growing share of the production process. Replacing manual production with machine production has become a trend that improves industrial output efficiency. Because robots can take over production work and meet growing demand for labour, they drive industrial upgrading and technological change, and industrial production has become an important application field for intelligent robots. Manufacturers in many countries represent a growing market for robots, which in turn drives the optimisation and upgrading of robot technology. Autonomous movement and navigation is one of the most challenging technologies in robotics [1, 2], for the following reasons. First, the robot needs to sense its surroundings and its own location, and then use algorithms to avoid obstacles along its route to reach a predetermined location. Achieving real-time performance and accuracy at the same time is difficult. Although existing LiDAR sensors can report obstacles in the path in real time, they are susceptible to interference such as external light, which reduces the accuracy of the obstacle data and may cause misjudgment. In addition, such LiDAR sensors are usually large and may not fit smaller robots. Second, camera-based obstacle avoidance relies on image recognition: a series of image-processing algorithms can compensate for external interference such as lighting, but the computation takes time, so it is difficult for an autonomous mobile robot to obtain obstacle data and feedback in real time.
Therefore, giving autonomous mobile navigation robots both real-time performance and accuracy may be one direction for the future development of autonomous mobile robots.

The non-contact automatic COVID-19 nucleic acid detection robot [3, 4] is designed to carry out COVID-19 nucleic acid testing without human contact. This work focuses on the autonomous movement function of existing automatic nucleic acid detection robots: using its camera and radar, the robot navigates to a workstation or to the location of designated personnel. The robot navigates according to the position of the person to be tested, avoids obstacles autonomously using radar and camera data, and plans the shortest route to that person's location. It carries a camera and a laser radar (LiDAR) and uses SLAM technology [5] for obstacle avoidance. Through experiments and continuous optimisation by the team, the algorithm achieves the desired universality, compatibility, real-time performance and precision. Autonomous mobile robots of this kind are also an important part of applications in industrial production.
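The text does not specify which route planner the robot uses. As a hedged illustration of what "planning the shortest route" involves, the sketch below runs A* search on a 4-connected occupancy grid. The grid encoding (0 = free, 1 = obstacle), the unit step cost and the function name `astar` are assumptions made for this example, not the authors' implementation.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]  # (row, column) in a hypothetical occupancy grid


def astar(grid: List[List[int]], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Shortest 4-connected path on a grid (0 = free, 1 = obstacle), or None.

    Illustrative sketch: unit cost per step, Manhattan-distance heuristic.
    """
    rows, cols = len(grid), len(grid[0])

    def h(c: Cell) -> int:
        # Manhattan distance never overestimates the cost of 4-connected unit moves
        return abs(c[0] - goal[0]) + abs(c[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries are (f = g + h, g, cell)
    g_cost: Dict[Cell, int] = {start: 0}
    parent: Dict[Cell, Optional[Cell]] = {start: None}
    while open_heap:
        _, g, cur = heapq.heappop(open_heap)
        if cur == goal:
            # Walk the parent links back to the start to recover the route
            path = []
            node: Optional[Cell] = cur
            while node is not None:
                path.append(node)
                node = parent[node]
            return path[::-1]
        if g > g_cost[cur]:
            continue  # stale heap entry: a cheaper route to cur was already found
        r, c = cur
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                ng = g + 1
                if ng < g_cost.get(nxt, float("inf")):
                    g_cost[nxt] = ng
                    parent[nxt] = cur
                    heapq.heappush(open_heap, (ng + h(nxt), ng, nxt))
    return None  # goal unreachable
```

A real navigation stack plans on a costmap that inflates obstacles by the robot's footprint; the plain grid here keeps the search logic visible.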

Simultaneous Localization and Mapping (SLAM) is an effective method [6] for estimating a robot's current position and orientation (pose) in an unknown environment while incrementally building a consistent map of that environment. SLAM originated at an IEEE conference in 1986, when researchers began applying probabilistic methods to the problem; work progressed from formulating the problem to developing algorithms, which many researchers then refined through experiments. SLAM systems mainly use lasers and cameras for navigation and positioning, and visual SLAM and laser SLAM are currently its two main branches. SLAM is widely used in autonomous driving and industrial manufacturing and is key to making autonomous mobile robots operate accurately in real time.
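SLAM estimates the pose and the map jointly. The sketch below isolates only the mapping half, assuming the robot's grid cell is already known: one laser beam marks the cells it passes through as more likely free and the cell where it ends as more likely occupied, using a log-odds occupancy grid. The sensor-model probabilities (0.7 and 0.3) and all function names are hypothetical values chosen for illustration.

```python
import math
from typing import Dict, List, Tuple

Cell = Tuple[int, int]

# Hypothetical inverse sensor model, expressed as log-odds increments
L_OCC = math.log(0.7 / 0.3)   # added to the cell where the beam ended
L_FREE = math.log(0.3 / 0.7)  # added to each cell the beam passed through


def bresenham(x0: int, y0: int, x1: int, y1: int) -> List[Cell]:
    """Grid cells on the line from (x0, y0) to (x1, y1), endpoints included."""
    cells = []
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy
    while True:
        cells.append((x0, y0))
        if x0 == x1 and y0 == y1:
            return cells
        e2 = 2 * err
        if e2 >= dy:
            err += dy
            x0 += sx
        if e2 <= dx:
            err += dx
            y0 += sy


def integrate_beam(log_odds: Dict[Cell, float], robot: Cell, hit: Cell) -> None:
    """Update the map in place with one beam from a known robot cell to a hit cell."""
    ray = bresenham(robot[0], robot[1], hit[0], hit[1])
    for cell in ray[:-1]:
        log_odds[cell] = log_odds.get(cell, 0.0) + L_FREE
    log_odds[hit] = log_odds.get(hit, 0.0) + L_OCC


def probability(log_odds: Dict[Cell, float], cell: Cell) -> float:
    """Occupancy probability of a cell; unobserved cells stay at 0.5."""
    return 1.0 - 1.0 / (1.0 + math.exp(log_odds.get(cell, 0.0)))
```

Log-odds are used because repeated observations then combine by simple addition instead of repeated multiplication of probabilities.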

2. Positioning and navigation based on known maps

The Navigation Stack takes information from odometry and sensor streams and outputs velocity commands. Conceptually this is simple, but using the navigation stack on an arbitrary robot is considerably more complex. First, the robot must run the Robot Operating System (ROS) [7] and publish its sensor data using the correct ROS message types, with coordinate frames related through the TF transform tree. The stack must also be configured for the shape and dynamics of the robot to perform well. In addition, it supports only differential-drive and holonomic wheeled robots: the base is controlled by sending velocity commands in the form of x velocity, y velocity and theta (angular) velocity. A planar laser mounted on the mobile base is used for map building and localization. The navigation stack was developed mainly on square robots; although it can run on robots of different shapes and sizes, it performs best on robots that are nearly square or circular. A large rectangular robot may have difficulty operating in narrow or confined spaces, which remains an important area for future development.
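For a differential-drive base, the (x, y, theta) velocity command reduces to a forward speed v and a turn rate ω, because the robot cannot move sideways. In ROS such commands are usually carried by a `geometry_msgs/Twist` message (`linear.x`, `linear.y`, `angular.z`). The sketch below shows the standard kinematics in both directions: converting a command into left and right wheel speeds, and dead-reckoning odometry from measured wheel speeds. The wheel-base value, class and function names are hypothetical; this is not code from the navigation stack itself.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Pose2D:
    x: float = 0.0      # metres, odometry frame
    y: float = 0.0      # metres, odometry frame
    theta: float = 0.0  # heading in radians


def wheel_speeds(v: float, omega: float, wheel_base: float) -> Tuple[float, float]:
    """Left/right wheel linear speeds (m/s) that realise (v, omega) on a differential drive.

    Any lateral (y) velocity in the command is ignored: a differential drive cannot strafe.
    """
    left = v - omega * wheel_base / 2.0
    right = v + omega * wheel_base / 2.0
    return left, right


def integrate_odometry(pose: Pose2D, v_left: float, v_right: float,
                       wheel_base: float, dt: float) -> Pose2D:
    """Dead-reckon the next pose from measured wheel speeds held for dt seconds."""
    v = (v_left + v_right) / 2.0
    omega = (v_right - v_left) / wheel_base
    # Using the mid-interval heading is more accurate than the starting heading
    mid = pose.theta + omega * dt / 2.0
    new_theta = pose.theta + omega * dt
    return Pose2D(
        x=pose.x + v * dt * math.cos(mid),
        y=pose.y + v * dt * math.sin(mid),
        theta=math.atan2(math.sin(new_theta), math.cos(new_theta)),  # wrap to (-pi, pi]
    )
```

For example, `wheel_speeds(0.5, 1.0, 0.4)` asks the left wheel for about 0.3 m/s and the right wheel for about 0.7 m/s, turning the robot to the left while it moves forward.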

3. Map building and navigation with RTAB-Map

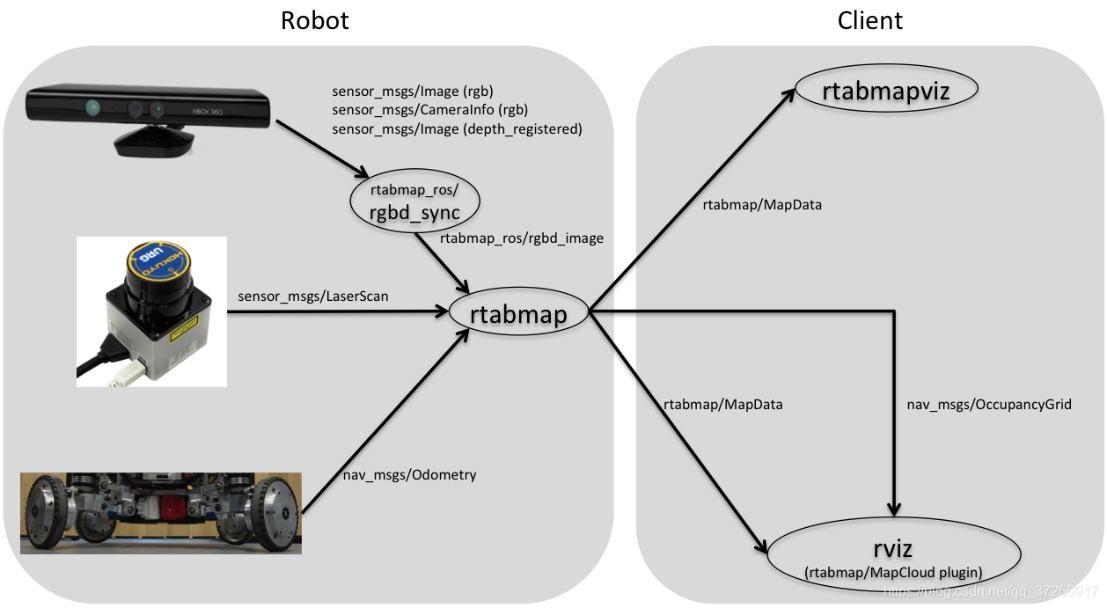

RTAB-Map (Real-Time Appearance-Based Mapping) [8] is a graph-based SLAM approach designed to operate under real-time constraints; its system structure [9] is shown in Figure 1. It supports SLAM based on stereo camera and lidar data, and uses a loop-closure detector to estimate how likely it is that a new image comes from a previously visited location rather than a new one. When a loop-closure hypothesis is accepted, a new constraint is added to the map's graph, and graph optimisation minimises the errors in the map. A memory management approach limits the number of locations used for loop-closure detection and graph optimisation, which keeps the whole system real-time even in large environments.

Figure 1. Block diagram of the RTAB-Map system.
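The loop-closure and memory-management ideas in the RTAB-Map description can be sketched in a simplified form. Each image is reduced to a histogram of visual-word IDs; the newest frames sit in a short-term memory that is excluded from matching (they trivially resemble the current image), older frames move to a working memory that is searched, and, when working memory exceeds a size limit, the oldest location is moved to long-term memory so the search cost stays bounded. This is a hypothetical toy: RTAB-Map itself uses a Bayesian filter over bag-of-words likelihoods and a weight- and time-based transfer policy, whereas this sketch uses a fixed similarity threshold and "oldest first" eviction.

```python
from collections import Counter, deque
from typing import Deque, Dict, List, Optional, Tuple


def similarity(a: Counter, b: Counter) -> float:
    """Normalised overlap of two visual-word histograms (1.0 means identical)."""
    shared = sum((a & b).values())
    total = max(sum(a.values()), sum(b.values()))
    return shared / total if total else 0.0


class LoopClosureDetector:
    """Toy appearance-based loop-closure detector with bounded memory (illustrative only)."""

    def __init__(self, threshold: float = 0.6, stm_size: int = 2, wm_size: int = 100):
        self.threshold = threshold  # minimum similarity to accept a loop closure
        self.stm_size = stm_size    # recent frames excluded from matching
        self.wm_size = wm_size      # maximum number of searchable locations
        self.stm: Deque[Tuple[int, Counter]] = deque()
        self.wm: Dict[int, Counter] = {}
        self.ltm: Dict[int, Counter] = {}

    def process(self, node_id: int, words: List[int]) -> Optional[int]:
        """Add a location (ids assumed increasing); return an accepted loop-closure id or None."""
        hist = Counter(words)
        best_id, best_score = None, 0.0
        for old_id, old_hist in self.wm.items():  # search working memory only
            score = similarity(hist, old_hist)
            if score > best_score:
                best_id, best_score = old_id, score
        self.stm.append((node_id, hist))
        if len(self.stm) > self.stm_size:
            old_id, old_hist = self.stm.popleft()
            self.wm[old_id] = old_hist
        if len(self.wm) > self.wm_size:
            oldest = min(self.wm)  # smallest id = oldest location
            self.ltm[oldest] = self.wm.pop(oldest)
        return best_id if best_score >= self.threshold else None
```

Locations in long-term memory are not deleted; in RTAB-Map they can be retrieved back into working memory when a neighbouring location is revisited, a step omitted here for brevity.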

GraphSLAM is an algorithm for solving the full SLAM problem: it recovers the robot's entire trajectory rather than only its current pose, which allows it to account for dependencies between the current and previous poses. An important application of GraphSLAM [10] is underground mining. Large drilling machines operate every day and the drilling environment changes constantly, so it is essential to keep an accurate map of the workspace. One way to map the space is to drive a LiDAR-equipped vehicle through the environment and collect data about it. The algorithm can then solve the problem offline over the whole series of collected features.
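The core idea of GraphSLAM, collecting all constraints into an information matrix and solving for the whole trajectory at once, can be shown on a deliberately tiny problem. The sketch assumes one-dimensional poses and linear relative measurements (real GraphSLAM [10] works with 2D/3D poses and nonlinear constraints, solved iteratively). Odometry says the robot moved +1.0 three times, but a loop-closure measurement says the last pose is only 2.7 from the first; least squares spreads that 0.3 discrepancy over the whole path instead of blaming the last step. All names and values are hypothetical.

```python
from typing import List, Tuple

Edge = Tuple[int, int, float, float]  # (i, j, measured x_j - x_i, information weight)


def solve_linear(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def optimize_pose_graph(n: int, edges: List[Edge], prior_weight: float = 1.0) -> List[float]:
    """Least-squares 1-D poses from relative constraints, built in information form."""
    h = [[0.0] * n for _ in range(n)]  # information matrix
    b = [0.0] * n                      # information vector
    h[0][0] += prior_weight            # prior x0 = 0 removes the free global offset
    for i, j, z, w in edges:
        # Each edge contributes w * (x_j - x_i - z)^2 to the total cost
        h[i][i] += w
        h[j][j] += w
        h[i][j] -= w
        h[j][i] -= w
        b[i] -= w * z
        b[j] += w * z
    return solve_linear(h, b)


edges = [(0, 1, 1.0, 1.0), (1, 2, 1.0, 1.0), (2, 3, 1.0, 1.0),  # odometry
         (0, 3, 2.7, 1.0)]                                       # loop closure
poses = optimize_pose_graph(4, edges)
```

With equal weights, each odometry step shrinks by 0.075, giving poses of approximately 0, 0.925, 1.85 and 2.775, so the loop-closure residual drops from 0.3 to 0.075.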

4. Experimental process and experimental data

|

|---|

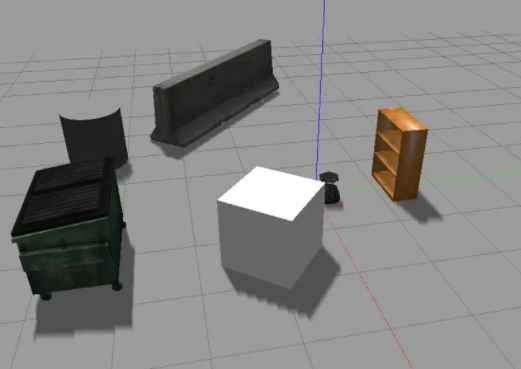

| Figure 2. Simulation scenario. |

The following two experiments test the universality and accuracy of the autonomous mobile navigation system. The first experiment places the experimental vehicle in an unconventional scenario to test whether it still works normally, which reflects the universality of the system. In the second experiment, the vehicle is given a fixed target, and the distance between the position it actually reaches after avoiding obstacles and the predetermined position is measured, which reflects the accuracy of the system.
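The accuracy experiment compares each position the vehicle reached with its target. A minimal way to compute that error and summarise it across trials is sketched below; the function names are hypothetical, and the default 0.10 m tolerance simply mirrors the 10 cm figure reported for the simulation. No experimental data is included here.

```python
import math
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float]  # (x, y) in metres


def arrival_errors(targets: Sequence[Point], reached: Sequence[Point]) -> List[float]:
    """Euclidean distance (m) between each target and the position actually reached."""
    if len(targets) != len(reached):
        raise ValueError("targets and reached must have the same length")
    return [math.hypot(rx - tx, ry - ty) for (tx, ty), (rx, ry) in zip(targets, reached)]


def summarize(errors: Sequence[float], tolerance: float = 0.10) -> Dict[str, float]:
    """Mean and maximum error, plus the fraction of trials within `tolerance` metres."""
    if not errors:
        raise ValueError("need at least one trial")
    n = len(errors)
    return {
        "mean": sum(errors) / n,
        "max": max(errors),
        "within_tolerance": sum(e <= tolerance for e in errors) / n,
    }
```

Reporting the maximum alongside the mean matters here: a claim such as "within 10 cm" is a bound on every trial, not an average.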

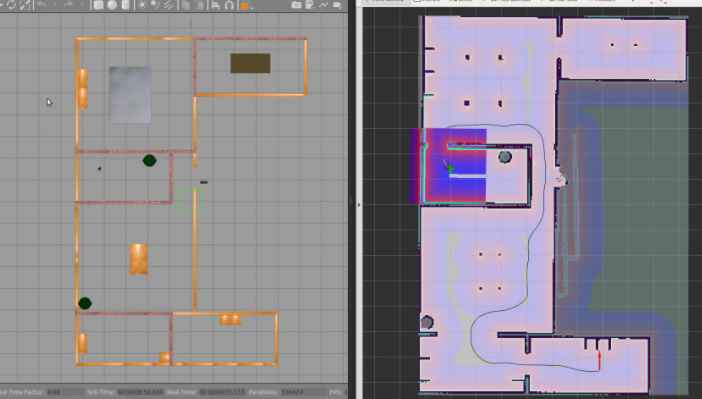

The path-planning workspace is built in physics simulation environments such as Gazebo [11], as shown in Figure 2, and the robot model is displayed in the visualization tool RViz. The results show that the algorithm can plan a local path from the pose sequence updated in real time; each arrow represents a path point and its orientation, which helps keep the robot's turns accurate and stable. To test navigation accuracy, multiple target points were published in succession, and the error between the position actually reached and each target point in Gazebo was measured. The results show that navigation accuracy is within 10 cm on a 100 m × 100 m map, as shown in Figure 3, which is sufficient for practical application scenarios.

Figure 3. Path planning in the simulation scenario.
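In the path-planning results, each arrow is a path point together with an orientation. One simple way to attach such orientations to a list of planned points is to point each one towards its successor; since ROS pose messages such as `geometry_msgs/Pose` store orientation as a quaternion, the planar heading is also converted to (x, y, z, w) form. This is a hedged sketch with hypothetical function names; the planner used in the experiments may derive orientations differently.

```python
import math
from typing import List, Tuple


def path_with_orientations(points: List[Tuple[float, float]]) -> List[Tuple[float, float, float]]:
    """Attach a heading (yaw, radians) to each path point, pointing at the next point.

    The final point reuses the heading of the last segment.
    """
    if len(points) < 2:
        raise ValueError("need at least two points to define a heading")
    poses = []
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        poses.append((x0, y0, math.atan2(y1 - y0, x1 - x0)))
    last_x, last_y = points[-1]
    poses.append((last_x, last_y, poses[-1][2]))
    return poses


def yaw_to_quaternion(yaw: float) -> Tuple[float, float, float, float]:
    """Rotation by `yaw` about the z axis as an (x, y, z, w) quaternion."""
    return (0.0, 0.0, math.sin(yaw / 2.0), math.cos(yaw / 2.0))
```

For a path that goes one metre along x and then turns left, the first point faces 0 rad and the corner and final points face π/2 rad.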

5. Conclusion

To control the spread of the COVID-19 epidemic effectively, many densely populated enclosed spaces with a high risk of cross-infection must be disinfected on schedule and point by point, which is laborious and risky work. Traditional manual disinfection suffers from high labour intensity and low efficiency, and disinfecting crowded enclosed spaces increases the risk of infection and poses potential safety hazards. More and more non-contact mobile disinfection robots are appearing in the medical field and have contributed greatly to the fight against the epidemic. This paper designs a robot inspection system based on multi-sensor fusion. Gazebo simulation experiments show that it can complete patrol inspection tasks well, and it has good application prospects. It can play an important role in managing and controlling the movement of personnel, reducing the risk of infection among staff, and supporting logistics services.

References

[1]. Rus D, Donald B, Jennings J. Moving furniture with teams of autonomous robots[C]//Proceedings 1995 IEEE/RSJ International Conference on Intelligent Robots and Systems. Human Robot Interaction and Cooperative Robots. IEEE, 1995, 1: 235-242.

[2]. Wu Y, Ma Y, Dai H N, et al. Deep learning for privacy preservation in autonomous moving platforms enhanced 5G heterogeneous networks[J]. Computer Networks, 2021, 185: 107743.

[3]. Yan B, Fan P, Lei X, et al. A real-time apple targets detection method for picking robot based on improved YOLOv5[J]. Remote Sensing, 2021, 13(9): 1619.

[4]. Song Q, Li S, Bai Q, et al. Object Detection Method for Grasping Robot Based on Improved YOLOv5[J]. Micromachines, 2021, 12(11): 1273.

[5]. Chen W, Shang G, Ji A, et al. An Overview on Visual SLAM: From Tradition to Semantic[J]. Remote Sensing, 2022, 14(13): 3010.

[6]. Gupta A, Fernando X. Simultaneous Localization and Mapping (SLAM) and Data Fusion in Unmanned Aerial Vehicles: Recent Advances and Challenges[J]. Drones, 2022, 6(4): 85.

[7]. Macenski S, Foote T, Gerkey B, et al. Robot Operating System 2: Design, architecture, and uses in the wild[J]. Science Robotics, 2022, 7(66): eabm6074.

[8]. Labbé M, Michaud F. RTAB‐Map as an open‐source lidar and visual simultaneous localization and mapping library for large‐scale and long‐term online operation[J]. Journal of Field Robotics, 2019, 36(2): 416-446.

[9]. Ragot N, Khemmar R, Pokala A, et al. Benchmark of visual slam algorithms: Orb-slam2 vs rtab-map[C]//2019 Eighth International Conference on Emerging Security Technologies (EST). IEEE, 2019: 1-6.

[10]. Thrun S, Montemerlo M. The graph SLAM algorithm with applications to large-scale mapping of urban structures[J]. The International Journal of Robotics Research, 2006, 25(5-6): 403-429.

[11]. Koenig N, Howard A. Design and use paradigms for gazebo, an open-source multi-robot simulator[C]//2004 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)(IEEE Cat. No. 04CH37566). IEEE, 2004, 3: 2149-2154.

Cite this article

Wu, F.; Zhao, Y. (2023). Non-contact automatic COVID-19 nucleic acid detection robot. Applied and Computational Engineering, 5, 848-852.

Data availability

The datasets used and/or analyzed during the current study will be available from the authors upon reasonable request.

Disclaimer/Publisher's Note

The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of EWA Publishing and/or the editor(s). EWA Publishing and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

About volume

Volume title: Proceedings of the 3rd International Conference on Signal Processing and Machine Learning

© 2024 by the author(s). Licensee EWA Publishing, Oxford, UK. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license. Authors who publish this series agree to the following terms:

1. Authors retain copyright and grant the series right of first publication with the work simultaneously licensed under a Creative Commons Attribution License that allows others to share the work with an acknowledgment of the work's authorship and initial publication in this series.

2. Authors are able to enter into separate, additional contractual arrangements for the non-exclusive distribution of the series's published version of the work (e.g., post it to an institutional repository or publish it in a book), with an acknowledgment of its initial publication in this series.

3. Authors are permitted and encouraged to post their work online (e.g., in institutional repositories or on their website) prior to and during the submission process, as it can lead to productive exchanges, as well as earlier and greater citation of published work (See Open access policy for details).
