1. Introduction

Face recognition based on feature extraction is becoming increasingly popular, bringing convenience and intelligence to fields such as education, security, and medicine. For example, face recognition technology can be used in security monitoring and crime investigation [1]. The police can quickly establish a suspect's identity by comparing faces against a photo of the suspect, and can deploy face recognition systems in public places to detect and track suspicious persons. In business, merchants can identify and record customers through face recognition to understand their shopping preferences and so provide better services and marketing strategies [2-4]. In the construction of smart cities, face recognition can be applied to traffic-light monitoring and the detection of illegal driving. In the medical and health field, hospitals can match doctor and patient information through face recognition to provide safer and more efficient medical services; it can also be used to verify a doctor's qualifications and confirm that the doctor's identity is genuine [5].

In short, as a biometric technology, face recognition has been widely used in many fields and continues to create new value and application scenarios. As the technology matures, face recognition is expected to bring more convenience and security to daily life and work [1,6]. With continued optimization of convolutional neural network (CNN) models, CNN-based face recognition models with faster recognition speed and higher accuracy will also appear. However, recognition accuracy is still limited by many factors, especially occlusion in different scenarios, which can greatly reduce both the efficiency and the accuracy of face recognition [7,8].

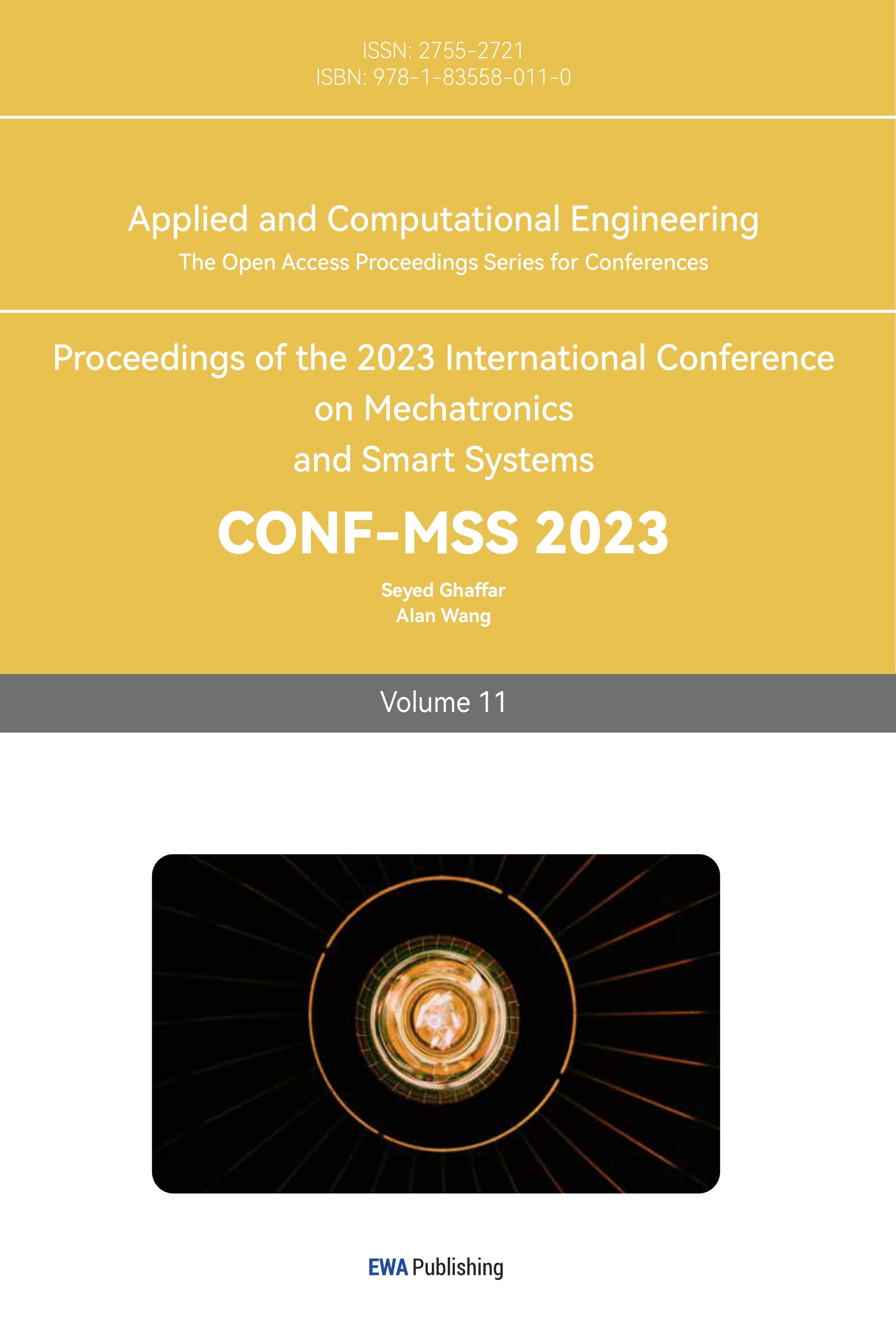

In the study of occluded face recognition, there is still no good solution to the increased training difficulty caused by the relative lack of face datasets and by complex, changeable network structures. Nevertheless, deep learning is expected to remain a key development trend in artificial intelligence and related fields. Current research on occluded face recognition falls into two main directions: completing and repairing the occluded area, and extracting local features from the non-occluded area. This article analyzes these two directions, as outlined in Figure 1.

Figure 1. Classification framework of occluded face recognition methods.

2. Face recognition methods under occlusion

2.1. Extract local features in non-occluded areas

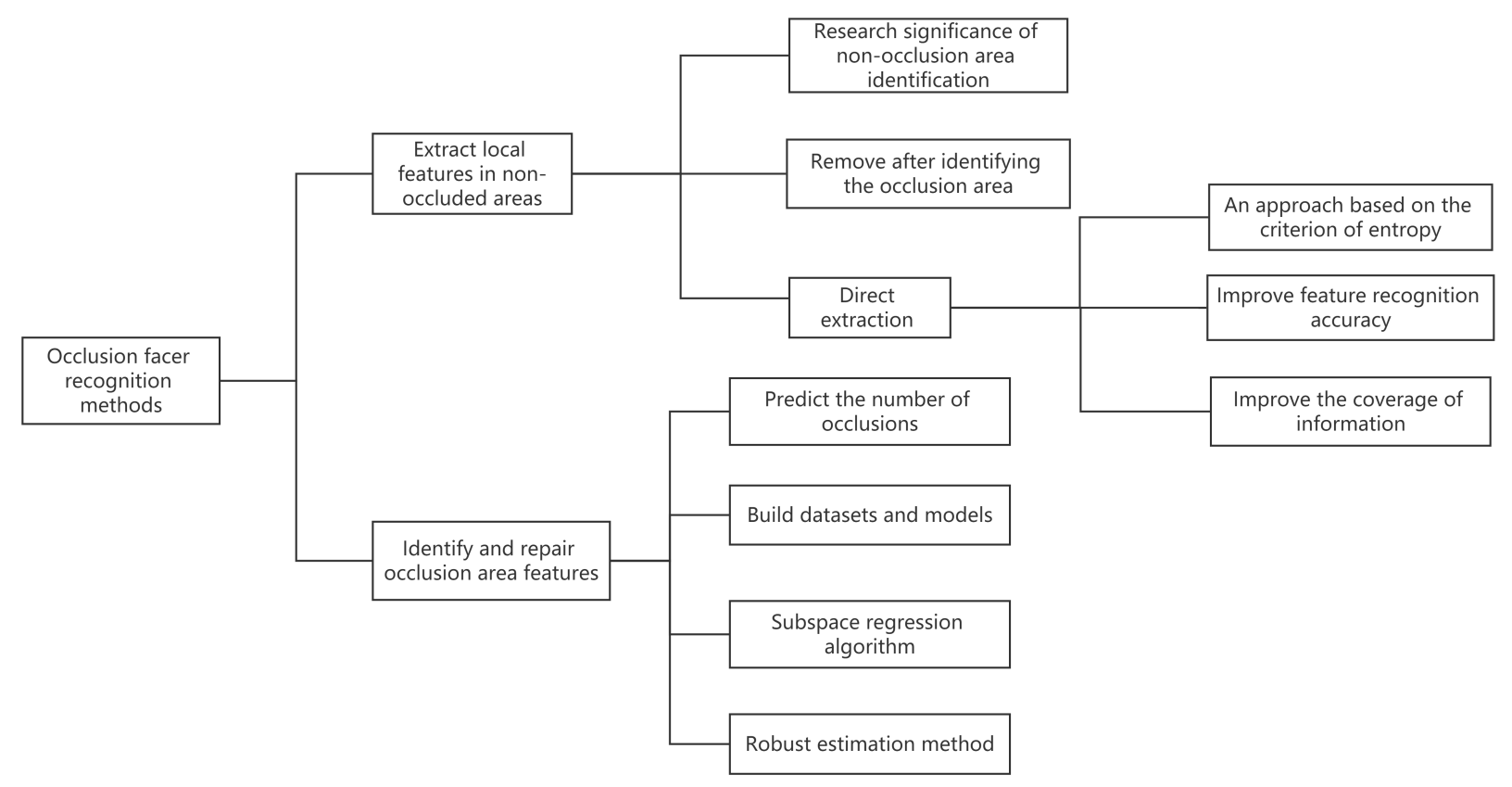

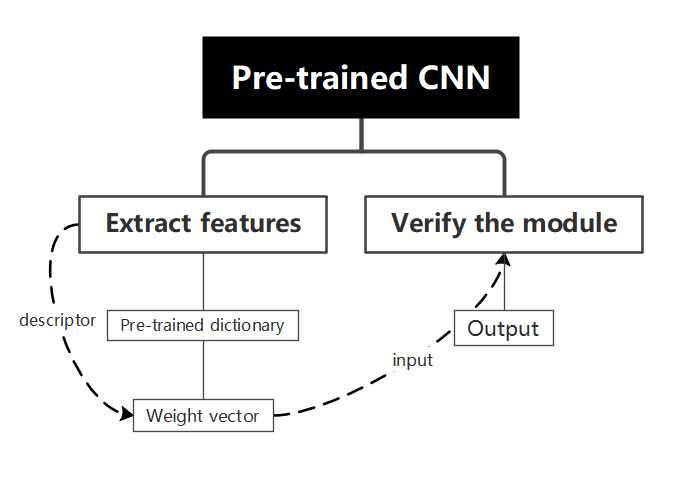

Features are extracted from non-occluded areas either directly or indirectly. The main steps are to decompose the image into sub-images, use a suitable model to assign a weight to each sub-image, and then extract the specific image features (Figure 2).

Decomposing the image can improve the efficiency of feature extraction, and choosing an appropriate algorithm can improve recognition accuracy. However, this approach adapts poorly to the characteristics of multiple areas, which may lead to loss of information.

Figure 2. Face recognition process.
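The decompose, weight, and extract steps described above can be sketched as follows. This is a minimal illustration, not any cited author's implementation: the 4 x 4 grid, the intensity-histogram descriptor, and the function names `split_into_patches`, `patch_histogram`, and `weighted_descriptor` are all assumptions made for the example, and the weights are set by hand rather than learned by a model.

```python
import numpy as np


def split_into_patches(image, grid=(4, 4)):
    """Split a 2-D grayscale image into grid[0] x grid[1] equal sub-images (row-major)."""
    rows, cols = grid
    height, width = image.shape
    ph, pw = height // rows, width // cols  # leftover border pixels are dropped
    return [image[r * ph:(r + 1) * ph, c * pw:(c + 1) * pw]
            for r in range(rows) for c in range(cols)]


def patch_histogram(patch, bins=8):
    """Illustrative per-patch descriptor: normalized histogram of intensities in [0, 1]."""
    hist, _ = np.histogram(patch, bins=bins, range=(0.0, 1.0))
    return hist / max(hist.sum(), 1)


def weighted_descriptor(image, weights, grid=(4, 4), bins=8):
    """Concatenate per-patch descriptors, each scaled by its patch weight."""
    patches = split_into_patches(image, grid)
    weights = np.asarray(weights, dtype=float)
    if weights.size != len(patches):
        raise ValueError("one weight per sub-image is required")
    return np.concatenate([w * patch_histogram(p, bins)
                           for w, p in zip(weights, patches)])


# Example: treat the lower half of the face (for instance a mask) as occluded
# and give those sub-images zero weight. The image here is synthetic.
rng = np.random.default_rng(0)
face = rng.random((32, 32))
weights = np.ones(16)
weights[8:] = 0.0
descriptor = weighted_descriptor(face, weights)
```

Setting a weight to zero removes that sub-image from any later distance computation, which is the simplest way to ignore an occluded region; intermediate weights would down-weight partially reliable regions instead.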

2.1.1. Research significance of non-occlusion area identification. Abate et al. argue that neural networks are of great significance for the development of face recognition, owing to the nonlinear methods they incorporate [9]. The authors compare research trends for two-dimensional images and three-dimensional models in face recognition and support their view with multiple sets of parameters, thereby illustrating the importance of non-occlusion recognition in the field.

Zhou et al. summarized the influencing factors, advantages, and disadvantages of each category of method and discussed the value of face databases [10]. In discussing single-modal face recognition, the authors argue that feature extraction can be used to identify faces. Pose, gaze direction, and facial expression all affect face recognition to some degree, but features drawn from non-occluded areas allow biometric information to be identified and extracted more reliably.

2.1.2. Indirect extraction. Indirect extraction obtains the features of non-occluded areas through an intermediate transformation: features are first computed on the decomposed image and then projected into another space. For occlusion detection, the face image is divided into multiple face components according to the occlusion content, Gabor wavelet features are computed from each component, and a PCA projection of the Gabor wavelet features reduces the dimensionality of the feature vector.

Regarding the difficulty of adapting to multiple occluded areas, experiments show that successful recognition is closely tied to successful occlusion detection, and that the choice of pixel set affects how efficiently occlusion is handled [2].
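As a rough illustration of the Gabor-plus-PCA pipeline in this subsection, the sketch below filters each face component with a small bank of Gabor kernels and then projects the resulting feature vectors with PCA. The kernel parameters, the choice of summary statistics, the number of orientations, and the function names are assumptions for the example; the components are synthetic, and the cited work may compute its features differently.

```python
import numpy as np


def gabor_kernel(size=15, wavelength=6.0, theta=0.0, sigma=3.0, gamma=0.5):
    """Real part of a Gabor filter: a Gaussian envelope times an oriented cosine wave."""
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(float)
    x_rot = x * np.cos(theta) + y * np.sin(theta)
    y_rot = -x * np.sin(theta) + y * np.cos(theta)
    envelope = np.exp(-(x_rot ** 2 + (gamma * y_rot) ** 2) / (2.0 * sigma ** 2))
    return envelope * np.cos(2.0 * np.pi * x_rot / wavelength)


def gabor_features(component, n_orientations=4):
    """Mean magnitude and standard deviation of the response at each orientation."""
    feats = []
    for k in range(n_orientations):
        kernel = gabor_kernel(theta=k * np.pi / n_orientations)
        # Circular convolution through the FFT. The kernel is zero-padded to the
        # component size, so components are assumed to be at least 15 x 15.
        spectrum = np.fft.fft2(component) * np.fft.fft2(kernel, s=component.shape)
        response = np.real(np.fft.ifft2(spectrum))
        feats.extend([np.abs(response).mean(), response.std()])
    return np.array(feats)


def pca_fit(X, n_components):
    """Return the feature mean and the top principal directions (rows)."""
    mean = X.mean(axis=0)
    _, _, vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, vt[:n_components]


def pca_project(X, mean, components):
    """Project centered features onto the principal directions."""
    return (X - mean) @ components.T


# Example with ten synthetic 16 x 16 face components.
rng = np.random.default_rng(0)
components = rng.random((10, 16, 16))
X = np.stack([gabor_features(c) for c in components])  # shape (10, 8)
mean, basis = pca_fit(X, n_components=3)
Z = pca_project(X, mean, basis)                         # shape (10, 3)
```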

2.1.3. Direct extraction. Direct extraction refers to methods that extract features directly, for example by using a model to divide the image into sub-images and assign each a different weight, or by extracting features according to a given recognition criterion. With a suitable selection method, recognition accuracy can be effectively improved.

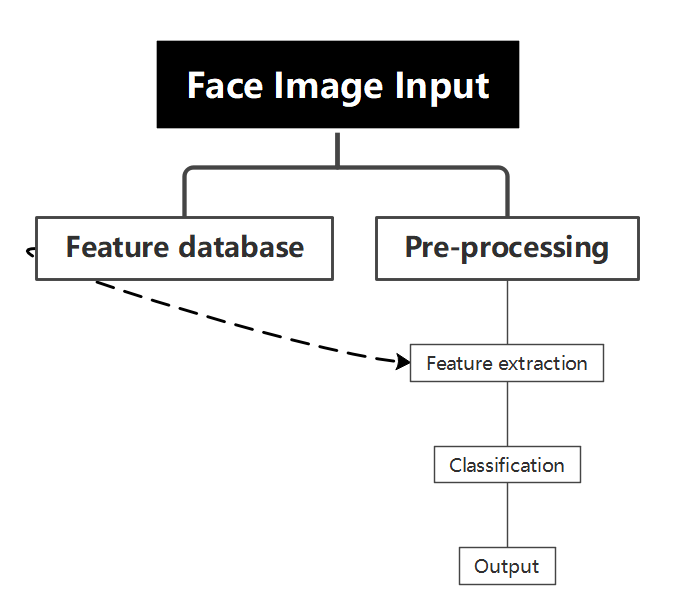

Sharma et al. use feature decomposition to remove occluded areas [3]. Each picture is subdivided into multiple sub-images, with lighting and reflection treated as controllable errors, and the sub-image results are combined mainly through weighted averaging and voting schemes. The authors then propose a new RGEF (Gabor-feature surface)-based system, which performs well when handling Gabor filter responses and different feature points. After feature decomposition based on sub-images, the efficiency of image feature extraction is greatly improved. Song et al. were the first to propose a pairwise differential siamese network (PDSN) to analyze the correspondence between occluded facial areas and damaged feature elements, and to use a feature discarding mask (FDM) to represent the relationship between the two (Figure 3) [4]. Overlaying the FDM on the initial feature discards the damaged feature elements.

Figure 3. Overlay calculations to discard parts of damaged features.
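The overlay step can be pictured as an element-wise product between a feature vector and a binary mask. The sketch below assumes that form, assumes the mask is already known (the network that would learn it is not modeled), and applies the same mask to both the probe and the gallery feature before comparing them; these choices and the names `apply_feature_mask` and `cosine_similarity` are illustrative assumptions.

```python
import numpy as np


def apply_feature_mask(features, mask):
    """Zero out feature elements flagged as damaged (mask value 0)."""
    features = np.asarray(features, dtype=float)
    mask = np.asarray(mask, dtype=float)
    if features.shape != mask.shape:
        raise ValueError("mask must have the same shape as the features")
    return features * mask


def cosine_similarity(a, b, eps=1e-12):
    """Cosine similarity between two flattened feature arrays."""
    a, b = np.ravel(a), np.ravel(b)
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b) + eps))


# Toy example: the last two feature elements of the probe are corrupted by occlusion.
gallery = np.array([1.0, 2.0, 3.0, 4.0])
probe = np.array([1.0, 2.0, -9.0, 9.0])
mask = np.array([1.0, 1.0, 0.0, 0.0])  # hypothetical mask for this occlusion pattern

raw_sim = cosine_similarity(probe, gallery)
masked_sim = cosine_similarity(apply_feature_mask(probe, mask),
                               apply_feature_mask(gallery, mask))
```

In this toy case the masked similarity is higher than the raw one, because the corrupted elements no longer contribute to the comparison.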

Hou Xiankun et al. used the Hough line method to extract the features of the non-occluded area, combined the predictions of multiple models by assigning each sample a corresponding weight, and selected HOG features during feature extraction, which improved face recognition accuracy while using resources more efficiently [5]. Chunfei Ma et al. took a representative face database as the experimental object and compared MLBP with traditional LBP features, concluding that MLBP carries more structural and discriminative information and better represents the macro- and microstructure of the face [6]. Fattah et al. proposed an entropy-based region selection criterion for face recognition, which extracts local spatial variations from high-information regions of the face rather than from the entire image [7]. Wang Xi et al. proposed a convolutional neural network model, MFNet, built around a multi-dimensional feature extraction module that combines parallel and serial structures to extract features along both the spatial and channel dimensions, which effectively improves recognition accuracy [8]. The effectiveness of MFNet is verified by side-by-side comparison experiments.
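To make the idea of an entropy-based region selection criterion concrete, the following sketch scores non-overlapping blocks by the Shannon entropy of their intensity histogram and keeps the highest-scoring ones. It is only an illustration of the general idea: the block size, histogram bins, and function names are assumptions, and the criterion in the cited work may be defined differently.

```python
import numpy as np


def block_entropy(block, bins=16):
    """Shannon entropy (in bits) of the intensity histogram of one block."""
    hist, _ = np.histogram(block, bins=bins, range=(0.0, 1.0))
    p = hist[hist > 0] / hist.sum()
    return float(-(p * np.log2(p)).sum())


def select_high_entropy_blocks(image, block=8, top_k=4):
    """Return the top-left corners of the top_k non-overlapping blocks by entropy."""
    height, width = image.shape
    scored = []
    for r in range(0, height - block + 1, block):
        for c in range(0, width - block + 1, block):
            scored.append((block_entropy(image[r:r + block, c:c + block]), (r, c)))
    scored.sort(key=lambda item: item[0], reverse=True)
    return [corner for _, corner in scored[:top_k]]


# Example: a flat image with one textured block in the lower-left corner.
rng = np.random.default_rng(0)
image = np.full((16, 16), 0.5)
image[8:16, 0:8] = rng.random((8, 8))
best = select_high_entropy_blocks(image, block=8, top_k=1)
```

Features would then be extracted only from the selected blocks, so flat or uninformative regions do not dilute the descriptor.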

2.2. Identify and repair occlusion area features

The most important factor in identifying occluded areas is the choice of algorithm. For example, the SRC algorithm can be used for image classification, but an oversized dictionary leads to excessive computation and sensitivity to noise. For image repair methods, datasets that are too small may lead to incomplete repair. This type of method targets the occluded object more directly and can classify images by analyzing the reconstruction error and the sparse matrix. However, when a suitable face dataset is missing, training becomes harder and the algorithm is also harder to implement.

Wright et al. demonstrated experimentally that sparsity is crucial for high-performance classification of high-dimensional images, and that the sparse representation framework also offers insight into feature extraction [11]. In their work, the theory of sparse representation is used to predict how much occlusion the recognition algorithm can handle and to guide the choice of training images so as to improve robustness to occlusion. They propose the SRC (sparse representation classification) algorithm, which classifies an image by seeking the minimum reconstruction error under a suitable sparse matrix. The authors' theory shows the significance of sparse representation for handling features of the occluded area: unlike methods for the non-occluded area, identifying features of the occluded area targets the occluded object more directly, but the processing is more difficult.
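A compact way to see how SRC decides on a class is sketched below: the test sample is coded sparsely over all training samples, and the class whose own coefficients give the smallest reconstruction error wins. The sparse code here comes from a plain iterative soft-thresholding (ISTA) solver for an l1-regularized least-squares problem, which is a stand-in chosen for brevity and not necessarily the solver used in the cited work; `ista`, `src_classify`, `lam`, and the toy data are assumptions.

```python
import numpy as np


def ista(A, y, lam=0.01, n_iter=500):
    """Minimize 0.5 * ||A x - y||_2^2 + lam * ||x||_1 by iterative soft-thresholding."""
    step = 1.0 / (np.linalg.norm(A, 2) ** 2)  # 1 / Lipschitz constant of the gradient
    x = np.zeros(A.shape[1])
    for _ in range(n_iter):
        z = x - step * (A.T @ (A @ x - y))
        x = np.sign(z) * np.maximum(np.abs(z) - lam * step, 0.0)
    return x


def src_classify(A, labels, y, lam=0.01):
    """Return the label whose training columns reconstruct y with the smallest residual."""
    labels = np.asarray(labels)
    A = A / np.linalg.norm(A, axis=0, keepdims=True)  # unit-norm training columns
    x = ista(A, y, lam)
    residuals = {}
    for c in np.unique(labels):
        x_c = np.where(labels == c, x, 0.0)  # keep only the coefficients of class c
        residuals[c] = float(np.linalg.norm(y - A @ x_c))
    return min(residuals, key=residuals.get), residuals


# Toy example: two classes with two training samples each (columns of A).
A = np.eye(4)
labels = [0, 0, 1, 1]
y = np.array([0.9, 0.3, 0.05, 0.0])  # lies mostly in the span of class 0
predicted, residuals = src_classify(A, labels, y)
```

The per-class residuals returned alongside the prediction are what the text refers to as the reconstruction error; a large gap between the best and second-best class indicates a confident decision.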

Ou Weihua proposes a variety of machine learning algorithms based on sparse representation and non-negative matrix factorization theory [12]. The problem is expressed as several types of sparse representation models. In the first, the sparsest solution is sought subject to a bound ε on the reconstruction error:

\( (P_{0}^{\varepsilon}): \min_{\alpha} \|\alpha\|_{0} \quad \text{s.t.} \quad \|A\alpha - y\|_{2}^{2} \le \varepsilon \) (1)

Alternatively, the reconstruction error can be minimized subject to a bound T on the degree of sparsity:

\( (P_{0}^{T}): \min_{\alpha} \|A\alpha - y\|_{2}^{2} \quad \text{s.t.} \quad \|\alpha\|_{0} < T \) (2)
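Problems of the form (2) are combinatorial, so in practice they are usually approximated. One common greedy approximation is orthogonal matching pursuit (OMP), sketched below under the assumption that A holds the training samples as columns and y is the test sample; it is not claimed to be the solver used in the cited work. The parameter `max_nonzeros` is an assumed name and corresponds to T - 1 under the strict inequality in (2).

```python
import numpy as np


def omp(A, y, max_nonzeros):
    """Greedy approximation to min ||A a - y||_2^2 subject to ||a||_0 <= max_nonzeros."""
    y = np.asarray(y, dtype=float)
    residual = y.copy()
    support = []
    coef = np.zeros(0)
    for _ in range(max_nonzeros):
        if np.linalg.norm(residual) < 1e-12:
            break  # y is already reconstructed exactly
        correlations = np.abs(A.T @ residual)
        if support:
            correlations[support] = 0.0  # never pick the same column twice
        support.append(int(np.argmax(correlations)))
        # Re-fit all selected coefficients by least squares, then update the residual.
        coef, *_ = np.linalg.lstsq(A[:, support], y, rcond=None)
        residual = y - A[:, support] @ coef
    alpha = np.zeros(A.shape[1])
    alpha[np.array(support, dtype=int)] = coef
    return alpha


# Toy example with an orthonormal dictionary, where OMP recovers the sparse code exactly.
A = np.eye(6)
y = np.array([0.0, 3.0, 0.0, 0.0, -2.0, 0.0])
alpha = omp(A, y, max_nonzeros=2)
```

For problem (1), the same loop can be stopped as soon as the squared residual norm drops below ε instead of after a fixed number of selections.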

The author divides sparse-representation-based methods into three main categories. The first is based on local features, such as SRC, and analyzes the reconstruction error, including how it is measured, how it is calculated by multiple methods, and how its distribution is estimated. The second relies on prior information about the occlusion, such as the MRF (Markov random field) algorithm for identifying occluded faces; Li et al. propose a structured sparse error coding recognition algorithm based on the occluded surface information [13]. The third is based on occlusion dictionary learning, such as ESRC (Extended Sparse Representation Classification), which incorporates the idea of image repair into face recognition: the general procedure is to repair first and then recognize.
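For orientation, the occlusion models behind the first and third categories are commonly written as below. This is a sketch in the notation of equations (1) and (2); the symbols e (sparse occlusion error), I (identity matrix), D (an additional occlusion or variation dictionary), β (its coefficients), and z (residual noise) are introduced here as assumptions, and the l1 norm is the usual convex surrogate for the l0 norm.

```latex
% Occlusion as a sparse additive error, absorbed by appending an identity block:
y = A\alpha + e = \begin{bmatrix} A & I \end{bmatrix}
                  \begin{bmatrix} \alpha \\ e \end{bmatrix}

% Extended-dictionary form: a separate dictionary D represents the occlusion:
y = A\alpha + D\beta + z, \qquad
\min_{\alpha,\beta} \left\| \begin{bmatrix} \alpha \\ \beta \end{bmatrix} \right\|_{1}
\quad \text{s.t.} \quad \| y - A\alpha - D\beta \|_{2}^{2} \le \varepsilon
```

In both forms, classification then compares per-class reconstruction errors after the occlusion term has been subtracted, which matches the repair-then-recognize procedure described above.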

Ge et al. propose a large masked-face dataset called MAFA, together with LLE-CNNs for detecting occluded faces under various noise conditions (Figure 4) [14]. Large face datasets are still relatively scarce. Across the analysis of a variety of errors, LLE-CNNs show an advantage in detecting occlusion; because the approach is based on repairing facial features, it can largely resist the influence of noise.

Figure 4. LLE-CNN model.
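The "LLE" in the model name refers to locally linear embedding, whose core step is to re-express a feature vector as a weighted combination of its nearest neighbors. The sketch below shows only that generic step with a hypothetical three-entry dictionary; it is not the LLE-CNN architecture itself, and the function names, the regularization constant, and the data are assumptions.

```python
import numpy as np


def lle_weights(x, neighbors, reg=1e-3):
    """Weights w (summing to 1) that minimize ||x - sum_j w_j * neighbors[j]||_2^2."""
    diffs = neighbors - x                      # shape (k, d)
    gram = diffs @ diffs.T                     # local Gram matrix, shape (k, k)
    trace = np.trace(gram)
    # Small ridge term keeps the system solvable when neighbors are collinear.
    gram = gram + reg * (trace if trace > 0 else 1.0) * np.eye(len(neighbors))
    w = np.linalg.solve(gram, np.ones(len(neighbors)))
    return w / w.sum()


def embed_with_dictionary(x, dictionary, k=2):
    """Re-express x through its k nearest dictionary entries and their LLE weights."""
    distances = np.linalg.norm(dictionary - x, axis=1)
    nearest = np.argsort(distances)[:k]
    w = lle_weights(x, dictionary[nearest])
    return nearest, w, w @ dictionary[nearest]


# Toy example: x lies midway between the first two dictionary entries.
dictionary = np.array([[0.0, 0.0], [2.0, 0.0], [10.0, 10.0]])
x = np.array([1.0, 0.0])
nearest, w, reconstruction = embed_with_dictionary(x, dictionary, k=2)
```

Replacing a noisy or partly occluded descriptor by such a reconstruction from clean reference entries is one way to read the "repairing facial features" idea mentioned above.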

3. The prospect of face recognition

Face recognition based on feature extraction is becoming increasingly popular, bringing convenience and intelligence to fields such as education, security, and medicine. With continued optimization of convolutional neural network models, CNN-based face recognition models with faster recognition speed and higher accuracy will also appear. Although face recognition technology has made real progress, practical applications still face problems and challenges. Face datasets remain incomplete, and complex, changeable network structures increase both the amount of computation and the difficulty of training. A more visible issue is the 'black box problem': the working principles and algorithms of face recognition systems are not transparent enough, and it is difficult to judge the correctness of their results in practice. This creates security and privacy problems and contributes to negative public perception.

Future development can start from improving the accuracy and reliability of algorithms: optimizing facial feature extraction to raise recognition accuracy, and increasing research on how lighting, angle, and occlusion affect recognition. Multimodal recognition can add features such as speech and the retina, and an appropriate combination can improve accuracy. In terms of privacy, more standardized data use and sharing policies are needed to improve the management and protection of facial data. In terms of public opinion, better publicity, social education, and public outreach can promote the gradual standardization of face recognition technology.

Research on occluded face recognition is a foundation of face recognition technology and a branch of biometric research with broad prospects and application value. Its development still requires solving the problems of existing technologies and models, and deep learning is expected to play a key role in the future of artificial intelligence and related fields.

4. Conclusion

Improving face recognition performance under occlusion is an important topic in the field of face recognition. In this paper, occluded face recognition methods are divided into two categories: methods based on local features of the non-occluded area, and methods based on identifying and repairing features of the occluded area. The basic processes of both types of methods are summarized, specific cases of each are analyzed, and their advantages and disadvantages are discussed.

Masks and other occlusions reduce the efficiency of face recognition, which makes deep learning based on neural networks an important approach. Looking ahead, face recognition is mainly applied in three fields: education, security, and medicine. Current research still faces problems, including the lack of datasets and the training difficulty caused by the large amount of complex computation, so the accuracy and reliability of the algorithms have considerable room for improvement. At the same time, the 'black box' problem reflects the limited interpretability of CNN-based artificial intelligence. In terms of policy, governments should establish usage norms and binding rules and pay attention to the privacy and security of users.

References

[1]. R. Min, A. Hadid, J.L. Dugelay. Improving the recognition of faces occluded by facial accessories. 2011, Conference on Automatic Face Gesture Recognition and Workshops, 442-447.

[2]. Andrés A M, Padovani S, Tepper M, et al. Face recognition on partially occluded images using compressed sensing. 2014, Pattern Recognition Letters, 36: 235-242.

[3]. M. Sharma, S. Prakash, P. Gupta. An efficient partial occluded face recognition system. 2013, Neurocomputing, 116: 231-241.

[4]. L. Song, D. Gong, Z. Li, et al. Occlusion Robust Face Recognition Based on Mask Learning with Pairwise Differential Siamese Network. 2019, International Conference on Computer Vision, 773-782.

[5]. Hou Xiankun, Zhou Wei. Research on improving the accuracy of mask masking face recognition. 2017, Neurocomputing, 12: 137-149.

[6]. Chunfei Ma, June-Young Jung, Seung-Wook Kim, Sung-Jea Ko. Random projection-based partial feature extraction for robust face recognition. 2015, Neurocomputing, 149: 1232-1244.

[7]. Shaikh Anowarul Fattah, Hafiz Imtiaz. A Spectral Domain Local Feature Extraction Algorithm for Face Recognition. 2011, International Journal of Security, 5(2): 169-172.

[8]. Wang Xi, Zhang Wei. Anti-occlusion face recognition algorithm based on a deep convolutional neural network. 2021, Computers & Electrical Engineering, 96: 107461.

[9]. Abate A F, Nappi M, Riccio D, et al. 2D and 3D face recognition: A survey. 2007, Pattern Recognition Letters, 28(14): 1885-1906.

[10]. Zhou H, Mian A, Wei L, et al. Recent advances on singlemodal and multimodal face recognition: a survey. 2014, IEEE Transactions on Human-Machine Systems, 44(6): 701-716.

[11]. Wright J, Yang A Y, Ganesh A, et al. Robust face recognition via sparse representation. 2009, IEEE Computer Society, 2, 1-11.

[12]. Ou Weihua. Research on partially occluded face recognition based on sparse representation and non-negative matrix decomposition. 2011, IEEE Transactions on Systems, 211-223.

[13]. Li X, Dai D, Zhang X, et al. Structured Sparse Error Coding for Face Recognition with Occlusion. 2013, IEEE Transactions on Image Processing, 22(5): 1889-1900.

[14]. S. Ge, J. Li, Q. Ye, Z. Luo. Detecting Masked Faces in the Wild with LLE-CNNs. 2017, IEEE Conference on Computer Vision and Pattern Recognition, 426-434.

Cite this article

Fu, W. (2023). Research on occlusion face recognition based on deep networks. Applied and Computational Engineering, 11, 1-7.

Data availability

The datasets used and/or analyzed during the current study will be available from the authors upon reasonable request.

About volume

Volume title: Proceedings of the 2023 International Conference on Mechatronics and Smart Systems

© 2024 by the author(s). Licensee EWA Publishing, Oxford, UK. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.
