Introduction

Information theory, a foundational technique for computer music, was applied to the field as early as 1959. It makes it possible to instruct a computer to create music, and it sheds light on the structure of human musical composition [1-3]. Loy and Abbott surveyed programming languages for computer music synthesis, performance, and composition in 1985 [4]. They argued that the complexity and variety of these requirements make the programming language paradigm a natural one for the use of computers in music. This paradigm offers composers a fresh perspective on their works. Moore's computer music software, written in C, followed in 1990 [5]. Puckette introduced Pure Data, another integrated computer music environment, in 1997 [6]. Its design aims to address some of the shortcomings of the Max program while preserving its strengths. The biggest drawback of Max is the difficulty of maintaining complex data structures of the kind that arise during sound analysis and resynthesis or when recording and editing sequences of events. Nyquist, the language employed in this study, was released in the same year [7-9]. As its creator argues, a music formalism implemented as a computer program must be completely unambiguous, and implementing ideas on a computer often leads to greater understanding and new insights into the underlying domain.

At present, computer music systems can already work with the human brain to meet the special needs of different people. A proof-of-concept brain-computer music interfacing (BCMI) system has been tested with a patient with locked-in syndrome at the Royal Hospital for Neuro-disability in London [10]. The patient understood the concept quickly and controlled the system successfully with minimal practice. She was able to vary the intensity of her gaze and thereby change the resulting musical parameters [11]. Nyquist has also been used by Lyu to develop a method for transferring any piece of music into a Chinese style by changing its mode to a pentatonic scale [12]. That method uses note-to-note modification, which achieved the purpose well and suits a range of situations.

Previous studies have shown that Chinese instruments, compared with Western instruments, have a relatively low rate of confusion for both strings and percussion. This suggests that in most cases Chinese instruments are more distinctive and easier to recognize. The same study also reported a significant difference in tone between Chinese and Western instruments [13].

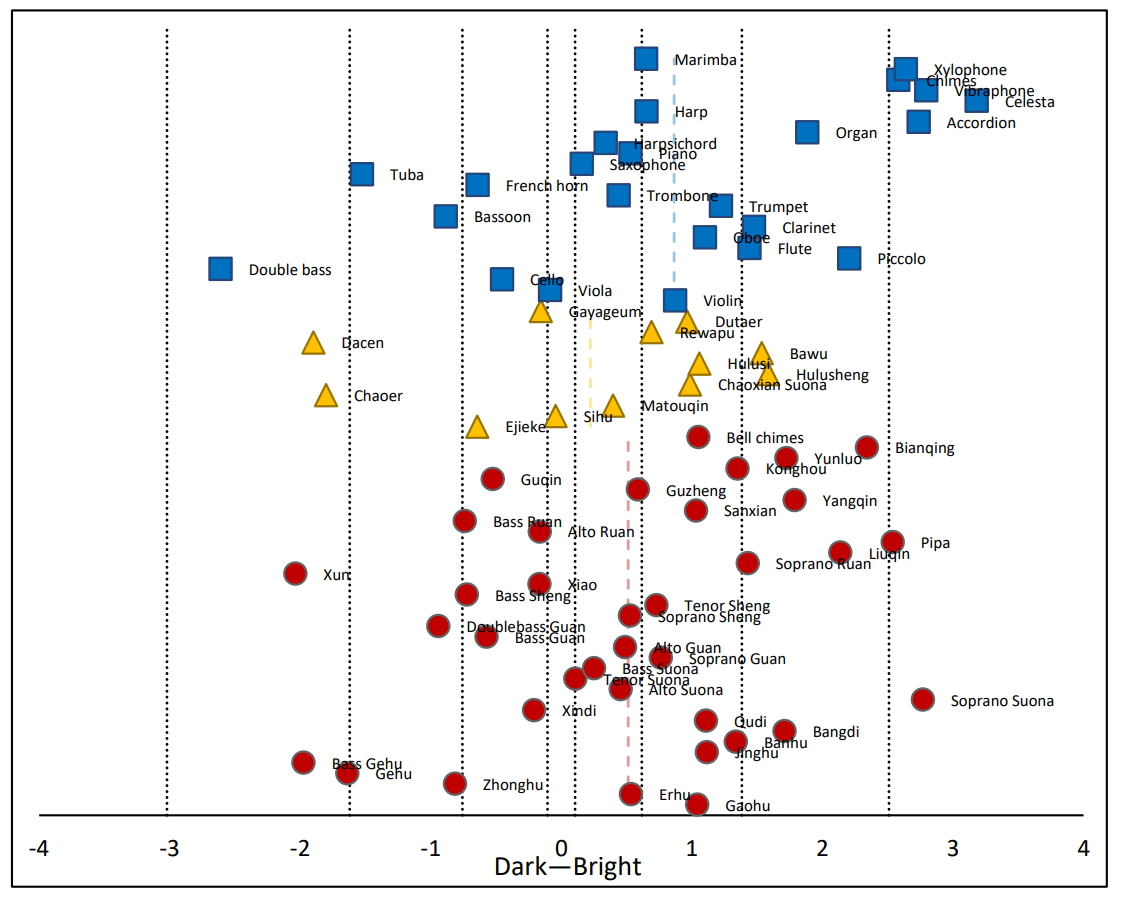

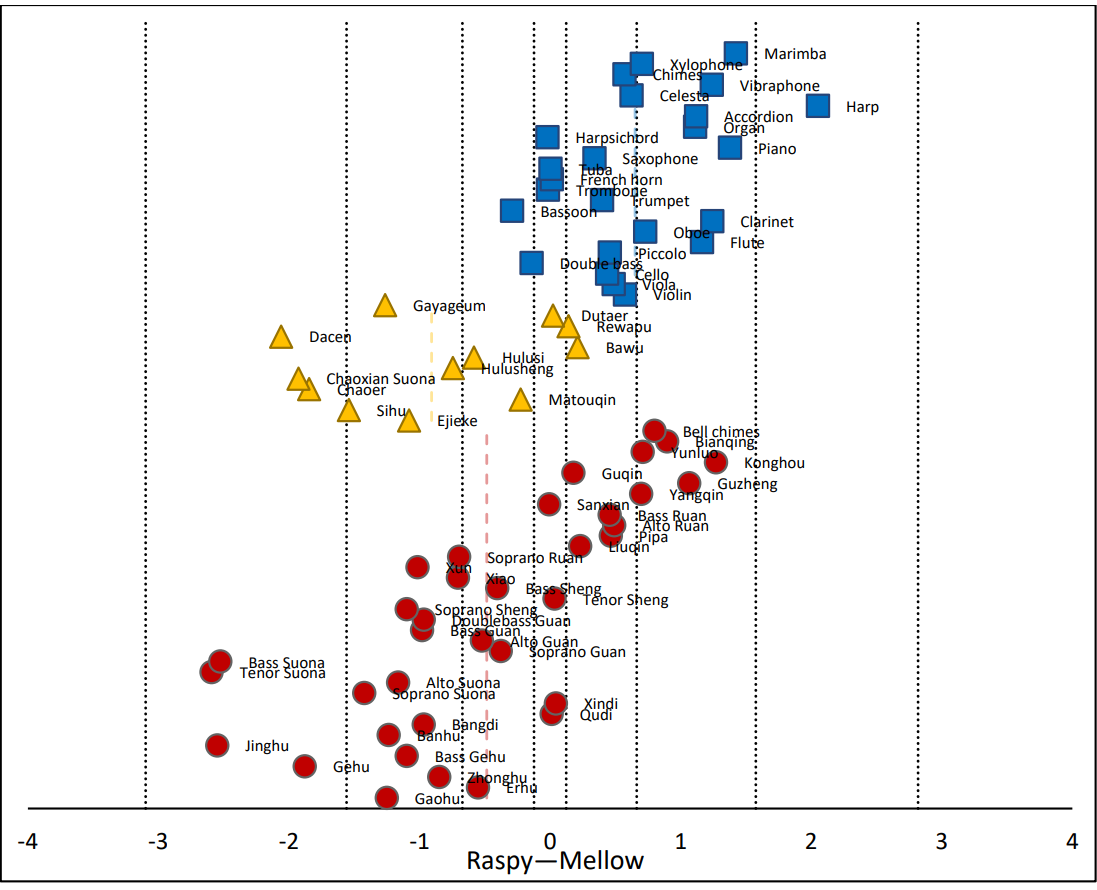

Previous studies have also compared the timbre characteristics of traditional Chinese and Western instruments along dimensions such as bright/dark, raspy/mellow, vigorous/sharp, coarse/pure, and hoarse/consonant [14]. Fig. 1 and Fig. 2 give a brief reference to the results for the dark-to-bright and raspy-to-mellow dimensions.

Figure 1. Reference comparison of different timbres from dark to bright [14].

Figure 2. Reference comparison of different timbres from raspy to mellow [14].

The goal of this research is to design a Guzheng/Zhudi sampler. The program provides three functions: audio import, sampler application, and audio export. Users can import their own audio files and run the program to obtain the sound rendered with the Guzheng/Zhudi timbre. The program is written in the Nyquist language. It has five major achievements: 1. converting Musical Instrument Digital Interface (MIDI) files to scores in the Nyquist programming system; 2. simulating the timbre of the Guzheng/Zhudi by editing functions inside Nyquist; 3. using conditional statements to separate the high and low pitches in the score; 4. using the score-voice function to convert the original audio to the timbre of the Guzheng/Zhudi; and 5. visualizing the converted audio.

Methodology

This study uses the Nyquist software to implement the sampler. Nyquist is founded on functional programming: users combine expressions to create sounds. Expressions are written in Lisp syntax (Touretzky 1984), in which parentheses denote the application of a function to a list of parameters. For instance, the expression (osc c4) applies the function osc to the parameter c4. Parameters are evaluated first. In this example, the global variable c4 has the value 60.0 and is assumed to be constant. The osc function receives the number 60.0 and generates a sinusoid with a duration of 1 s and a frequency equal to middle C. For microtones and unequal temperaments, Nyquist uses fractional pitch values; for example, 60.01 is one cent sharper than 60.0. Expressions can be combined. The expression (lfo 6) computes a 6-Hz sinusoid. It can be scaled to a different amplitude with (scale 20 (lfo 6)) and then used to provide frequency modulation to a Frequency Modulation (FM) oscillator: (fmosc c4 (scale 20 (lfo 6))). The play command is used to play this sound: (play (fmosc c4 (scale 20 (lfo 6)))). The play command is special in that it also saves the sound to a file and invokes a system command that plays the sound file.
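The pitch arithmetic and the FM example in this paragraph can be checked numerically outside Nyquist. The following Python sketch is illustrative only and is not Nyquist code: it assumes the equal-tempered mapping in which pitch number 69 is A4 at 440 Hz, and the names step_to_hz, cents_between, and fm_tone are hypothetical helpers introduced here.

```python
import math


def step_to_hz(step: float) -> float:
    """Convert a (possibly fractional) pitch number to Hz.

    Assumes equal temperament with step 69 = A4 = 440 Hz,
    so step 60 is middle C.
    """
    return 440.0 * 2.0 ** ((step - 69.0) / 12.0)


def cents_between(step_a: float, step_b: float) -> float:
    """One pitch step is a semitone, i.e. 100 cents."""
    return 100.0 * (step_b - step_a)


def fm_tone(step, mod_hz=6.0, deviation_hz=20.0, dur=1.0, sample_rate=44100):
    """Sine carrier whose frequency deviates by +/- deviation_hz at mod_hz.

    This mirrors the idea of a 6-Hz sinusoid scaled by 20 driving an
    FM oscillator at middle C; it is a sketch, not Nyquist's fmosc.
    """
    carrier_hz = step_to_hz(step)
    phase = 0.0
    samples = []
    for n in range(int(dur * sample_rate)):
        t = n / sample_rate
        inst_hz = carrier_hz + deviation_hz * math.sin(2 * math.pi * mod_hz * t)
        phase += 2 * math.pi * inst_hz / sample_rate  # accumulate phase
        samples.append(math.sin(phase))
    return samples


middle_c_hz = step_to_hz(60.0)           # about 261.63 Hz
one_cent = cents_between(60.0, 60.01)    # about 1 cent
vibrato = fm_tone(60.0, dur=0.5, sample_rate=8000)
```

Accumulating phase sample by sample, rather than evaluating sin(2πft) with a time-varying f, keeps the waveform continuous while the frequency changes.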

Nyquist is an interactive language for music composition and sound synthesis developed by R. B. Dannenberg. It draws no boundary between the "score" and the "orchestra," and it provides a fully interactive environment based on Lisp. It also offers a time- and memory-efficient implementation, support for behavioral abstraction, and the ability to work with both actual and perceptual start and stop times [9]. In Nyquist, MIDI files can be read in as “scores” and then modified further.

Results & Discussion

Tone construction of Guzheng: The Nyquist function used as the basis for this sound is “pluck”, which produces the sound of an acoustic guitar. That sound is drier than the sound of the Guzheng. Therefore, the “stkchorus” function is used to add a reverb-like effect that simulates the feeling of a “melody lingering in the room”. Another problem with “pluck” is that the sound decays quickly and evenly, whereas on the Guzheng the string keeps vibrating and the sound diminishes slowly after a slight rise in volume. A “pwlv” envelope is therefore added to shape this behavior. The last problem is that “pluck” generates a bright guitar sound, while the sound of the Guzheng is considerably more damped and darker. Because the “lp” (low-pass) filter attenuates content above a cutoff frequency, it gives the sound a more damped effect. The resulting sound generated for the Guzheng is lp(stkchorus((pluck(pitch, 2) ~~ 5) * pwlv(1.0, 0.95, 1.0, 1.0, 0.0), 0.2, 0.1, 0.1) ~ 2, 400).
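To make the role of the envelope concrete, the Python sketch below evaluates a piecewise-linear envelope from the same argument list as the pwlv call above. It assumes the breakpoint convention of an initial level at time 0 followed by (time, level) pairs; the helper reuses the name pwlv only for readability and is not Nyquist's implementation. Time stretching (the "~ 2" in the expression) is not modeled.

```python
from bisect import bisect_right


def pwlv(first_level, *time_level_pairs):
    """Return a function t -> level for a piecewise-linear envelope.

    Assumed convention: first_level applies at t = 0, then the
    remaining arguments are (time, level) breakpoints in order.
    """
    if len(time_level_pairs) % 2 != 0:
        raise ValueError("expected (time, level) pairs after the first level")
    times = [0.0] + [float(t) for t in time_level_pairs[0::2]]
    levels = [float(first_level)] + [float(v) for v in time_level_pairs[1::2]]

    def envelope(t):
        if t <= times[0]:
            return levels[0]
        if t >= times[-1]:
            return levels[-1]
        i = bisect_right(times, t) - 1        # segment containing t
        t0, t1 = times[i], times[i + 1]
        v0, v1 = levels[i], levels[i + 1]
        if t1 == t0:                          # vertical jump in the envelope
            return v1
        return v0 + (v1 - v0) * (t - t0) / (t1 - t0)

    return envelope


# Breakpoints taken from the Guzheng expression: hold at 1.0 until
# t = 0.95, then ramp down to 0.0 at t = 1.0.
guzheng_env = pwlv(1.0, 0.95, 1.0, 1.0, 0.0)
held_level = guzheng_env(0.5)
release_midpoint = guzheng_env(0.975)
```

With these breakpoints the envelope leaves most of the note untouched and only shapes the final release, so the slow decay itself comes from the stretched pluck sound rather than from the envelope.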

Tone construction for Zhudi: The Nyquist function used as the basis is “flute”, which takes two parameters: step and breath-env. The breath-env parameter is produced by another function, “stk-breath-env”, which takes three parameters: duration, note-on, and note-off. With the “flute” function it is possible to generate a sound close to that of the Zhudi. To better imitate the feeling of air being blown into the instrument, an envelope was added to make the sound more dynamic and realistic. One problem with the “flute” function is that it sounds fuzzier and darker than the Zhudi. Thus, a “high-pass” filter is combined with a “low-pass” filter to remove the fuzziness by cutting off low and high frequencies. The resulting sound generated for the Zhudi is lp(hp(flute(pitch, bnev) * pwlv(0, 0, 1, 0.2, 1.5, 0.4, 1.2, 0.6, 1.5, 1, 0) ~ 2, 1000), 700), where bnev is the breath envelope.
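The effect of chaining a high-pass and a low-pass stage can be illustrated with very simple one-pole filters. The Python sketch below is an assumption-laden stand-in, not the filters Nyquist uses: one_pole_lowpass and one_pole_highpass are hypothetical helpers, and the cutoff values are borrowed from the expression above only as example inputs.

```python
import math


def one_pole_lowpass(samples, cutoff_hz, sample_rate=44100):
    """Smooth the signal: y[n] = (1 - a) * x[n] + a * y[n - 1]."""
    a = math.exp(-2.0 * math.pi * cutoff_hz / sample_rate)
    y = 0.0
    out = []
    for x in samples:
        y = (1.0 - a) * x + a * y
        out.append(y)
    return out


def one_pole_highpass(samples, cutoff_hz, sample_rate=44100):
    """Keep what the low-pass removes: input minus its low-passed copy."""
    low = one_pole_lowpass(samples, cutoff_hz, sample_rate)
    return [x - l for x, l in zip(samples, low)]


# A constant (0 Hz) signal passes the low-pass and is removed by the high-pass.
dc = [1.0] * 5000
dc_after_lp = one_pole_lowpass(dc, 700)
dc_after_hp = one_pole_highpass(dc, 1000)

# A signal alternating every sample (the highest representable frequency)
# is strongly attenuated by the low-pass.
fast = [1.0 if n % 2 == 0 else -1.0 for n in range(5000)]
fast_after_lp = one_pole_lowpass(fast, 700)
```

First-order stages like these roll off gently, so the two filters overlap and shape the overall brightness instead of carving out a sharp band.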

This research converts MIDI files to scores and then applies sounds to play the score. MIDI import and conversion are achieved with two statements: “set my-midi = "./..."” and “set midi-score = score-read-smf(my-midi)”. The function score-read-smf converts a MIDI file into a score inside Nyquist. Each event in the converted score includes the start time and duration of the note, followed by its channel, pitch, and velocity (e.g., {0 1.295 {NOTE :CHAN 0 :PITCH 94 :VEL 0}}).
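The structure of a score event can be mirrored in a small data type. The Python sketch below is only an illustration of the example event shown above; it assumes the second field is a duration, as in Nyquist's documented score format, and NoteEvent and from_score_event are hypothetical names that do not exist in Nyquist.

```python
from typing import NamedTuple


class NoteEvent(NamedTuple):
    """One note of a score: when it starts, how long it lasts, and how it sounds."""
    start: float
    dur: float
    chan: int
    pitch: int
    vel: int

    @property
    def end(self) -> float:
        # End time is derived, not stored, under the duration assumption.
        return self.start + self.dur


def from_score_event(event):
    """Convert (start, dur, (name, attributes)) into a NoteEvent."""
    start, dur, (_name, attrs) = event
    return NoteEvent(start, dur, attrs["chan"], attrs["pitch"], attrs["vel"])


# The example event from the text, rewritten as nested Python data.
example = from_score_event((0, 1.295, ("note", {"chan": 0, "pitch": 94, "vel": 0})))
```

Keeping pitch as a separate field is what makes the later pitch-based choice of timbre straightforward.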

Combination of Guzheng and Zhudi: One way to assign different instruments within a piece of music is to separate the high and low parts according to pitch and match a timbre to each part. A conditional statement such as "if pitch > 72 then" selects one of the previously defined timbres according to the pitch. In this way, high pitches (pitch > 72) are played with the timbre of the Zhudi, and low pitches (pitch <= 72) are played with the timbre of the Guzheng. The code is shown in Table 1.

| define function combine(pitch: 60, vel: 80) |
|---|
| begin |
| if pitch > 72 then |
| return lp(hp(flute(pitch, stk-breath-env(1.5, 0.3, 0.75)) * pwlv(0, 0, 1, 0.2, 1.5, 0.4, 1.2, 0.6, 1.5, 1, 0) ~ 2, 1000), 700) |
| else |
| return lp(stkchorus((pluck(pitch, 2) ~~ 5) * pwlv(1.0, 0.95, 1.0, 1.0, 0.0), 0.2, 0.1, 0.1) ~ 2, 400) |
| end |
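The routing rule itself is independent of the synthesis details. As a minimal sketch in Python (not the Nyquist code of this study), the split at pitch 72 can be expressed as follows; choose_timbre and route_notes are hypothetical helper names, and the timbre labels are plain strings standing in for the two sound-generating expressions.

```python
SPLIT_PITCH = 72  # threshold taken from the condition "pitch > 72"


def choose_timbre(pitch, split=SPLIT_PITCH):
    """Pitches strictly above the split go to the Zhudi, the rest to the Guzheng."""
    return "zhudi" if pitch > split else "guzheng"


def route_notes(pitches, split=SPLIT_PITCH):
    """Group a sequence of pitches by the timbre that would play them."""
    routed = {"guzheng": [], "zhudi": []}
    for pitch in pitches:
        routed[choose_timbre(pitch, split)].append(pitch)
    return routed


routed = route_notes([60, 72, 73, 94])
```

Note that the boundary pitch 72 itself falls on the Guzheng side, which matches the strict comparison in the condition.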

Although the main goal of the project was to use Nyquist for algorithmic music creation, combining Nyquist with other software was also considered in the practical application. The VSDC video editor was used to create music visualizations by extracting the loudness, pitch, and other values of the audio. Combining music produced in Nyquist with visualization further reflects the diversity of electronic synthetic music. As illustrated in Fig. 3, the combination of visual and auditory appeal provides a more direct experience.

Figure 3. Visualization results.

Limitations & Prospects

This research completes its main task of building a sampler and applying it to different MIDI files. Users can import their own audio and convert it into score form through the system. By selecting the sampler they have set up for custom replacement, users can apply the sound of traditional Chinese musical instruments (Guzheng, Zhudi) to their music. Two samplers of traditional Chinese instruments are built, and a combination of visual and auditory output is realized, which may help bring a better viewing experience to electronic synthetic music in the future. Nevertheless, this study has some shortcomings. Its main limitations are the small number of samplers constructed and the absence of the special playing techniques of each instrument: techniques such as arpeggio and glide are not included. In addition, because different woods are used to build each instrument, the sound varies slightly between instruments, and this research constructs only one typical timbre for each.

Conclusion

In summary, this study has built two samplers for traditional Chinese musical instruments based on the original “Western-style” functions inside Nyquist, which can be applied to any imported music. Through visualization, this study has combined Nyquist with other software to extend Nyquist's use in digital media technology. This may also play a greater role in improving users’ experience of electronic synthetic music in the future. The research process offers new inspiration and a vision for the future of electronic music.

References

[1]. Hiller L A 1959 Computer music Scientific American vol 201(6) pp 109-121.

[2]. Cohen J E 1962 Information theory and music Behavioral Science vol 7(2) pp 137-163.

[3]. Roads C 1996 The computer music tutorial (MIT press).

[4]. Loy G and Abbott C 1985 Programming languages for computer music synthesis, performance, and composition ACM Computing Surveys (CSUR) vol 17(2) pp 235-265.

[5]. Moore F R 1990 Elements of computer music (Prentice-Hall, Inc.).

[6]. Puckette M 1997 Pure data: Recent progress Proceedings of the Third Intercollege Computer Music Festival pp 1-4.

[7]. Simoni M and Dannenberg R B 2013 Algorithmic Composition: A Guide to Composing Music with Nyquist (University of Michigan Press).

[8]. Dannenberg R B 1997 Machine tongues XIX: Nyquist, a language for composition and sound synthesis Computer Music Journal vol 21(3) pp 50-60.

[9]. Miranda E 2001 Composing music with computers (CRC Press).

[10]. Miranda E R, Durrant S and Anders T 2008 Towards brain-computer music interfaces: Progress and challenges First International Symposium on Applied Sciences on Biomedical and Communication Technologies pp 1-5.

[11]. Miranda E R, Magee W L, Wilson J J, et al. 2011 Brain-computer music interfacing (BCMI): from basic research to the real world of special needs. Music & Medicine vol 3(3) pp 134-140.

[12]. Lyu X 2023 Transforming Music into Chinese Musical Style based on Nyquist Highlights in Science, Engineering and Technology vol 39 pp 292-298.

[13]. Liu J and Xie L 2010 Comparison of performance in automatic classification between Chinese and Western musical instruments. 2010 WASE International Conference on Information Engineering vol 1 pp 3-6.

[14]. Jiang W, Liu J, Zhang X, et al. 2020 Analysis and modeling of timbre perception features in musical sounds Applied Sciences vol 10(3) p 789.

Cite this article

Li, Z. (2023). Creating Zhudi and Guzheng sampler based on Nyquist. Applied and Computational Engineering, 21, 200-205.

Data availability

The datasets used and/or analyzed during the current study will be available from the authors upon reasonable request.

About volume

Volume title: Proceedings of the 5th International Conference on Computing and Data Science

© 2024 by the author(s). Licensee EWA Publishing, Oxford, UK. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.
