1. Introduction

The development of human-computer interaction has gradually blurred the boundary between digital companionship and interpersonal intimacy. For example, the emotions of players in romantic video games overlap with those of the characters in the game, creating a pseudo-intimate experience [1]. This overlap produced a ‘close relationship’ between humans and machines even before AI technology was widely used (the pre-AI era). However, although interactions between players and romantic video games can provide entertainment and a sense of connection, they do not constitute true romance [2]. With the rapid development of AI technology, sophisticated AI chatbots have opened new possibilities for human-machine intimacy to move from pseudo-intimacy to real intimacy. AI-powered conversational agents, represented by Replika, are increasingly being integrated into users’ emotional lives, providing companionship and understanding and even enabling the development of intimate relationships. In contrast to the pseudo-intimacy of video games, cyber romance becomes possible because AI can remember personal details and engage in meaningful interactions, creating a sense of companionship [3].

In the pre-AI era, people tried to establish quasi-social relationships with virtual characters through mediated forms of contact, such as video games. Some studies have pointed out that quasi-social relationships between the player and virtual characters are the key to the player’s enjoyment and immersion [4], which means that the player perceives and responds to the character in a social way that reflects real social relationships [5]. However, this pre-generated, linear interaction gives users only a simple pseudo-intimacy and cannot effectively support intimacy between users and digital entities. For this reason, some scholars have pinned their hopes for human-machine intimacy on AI technology, arguing that robots will be a more reliable way to meet human emotional needs [6].

With the rapid development of AI chatbots, their technical capabilities now enable them to establish more independent and complex emotional relationships with users, making them faithful AI companions. However, academic research on human-machine intimacy is still in its infancy. This study focuses on the AI chatbot Replika and traces the development of human-machine love from pseudo-intimacy to real intimacy. By analysing the engagement patterns and emotional responses of Replika users at different stages, as well as the socio-cultural influences of these emotional relationships, this study answers the following questions: How has human-machine intimacy evolved from virtual lovers to AI companions? What is the specific process of shaping intimacy between humans and AI chatbots? How does human-machine intimacy with AI chatbots affect real-life social practices?

This research aims to provide a comprehensive understanding of how AI technology enables the transition of human-machine intimacy from pseudo-intimacy to real intimacy and reshapes the landscape of emotions and romance. By observing how human-machine emotions are shaped and develop, it also seeks to reveal the role of AI in influencing human emotions and engagement strategies.

2. Literature Review

2.1. AI Chatbots

Chatbots are applications designed to interact with humans, using artificial intelligence (AI) to enhance communication. They use natural language processing (NLP) to understand and respond to user queries [7]. Chatbots are both ‘human’ and ‘machine,’ with the dual characteristics of interpersonal communication and human-machine communication [8]. Scholars have attributed the social characteristics of chatbot ‘humanity’ to conversational intelligence, social intelligence and personification [9]. Conversational intelligence is shown in a chatbot’s ability to participate actively in a conversation and to demonstrate an understanding of the topic being discussed, the context and the overall flow of the conversation, so that it can engage effectively in pursuit of the conversation’s goal [10].

Social intelligence is shown in a chatbot’s ability to follow the social norms of conversational interaction in social settings, such as responding to social signals, accepting differences, handling conflicts and showing care [11]. Personification, in turn, is reflected in the anthropomorphic personality traits that chatbots are given, including appearance and emotional state [12]. These characteristics of AI chatbots lay the foundation for more in-depth emotional communication with humans and leave ample room for imagination in establishing intimate relationships.

2.2. Pseudo-Intimacy

The earliest theoretical reference to human-machine intimacy can be traced back to the Austrian-born sociologist Schuetz, who in 1944 proposed the concept of pseudo-intimacy to describe a superficial or false sense of intimacy that lacks a genuine emotional connection [13]. A related concept, para-social interaction, describes a virtual and one-way relationship between an audience and a screen character, celebrity idol or media personality [14]. With the advent of the Internet age, scholars have combined the two concepts, arguing that para-social interaction can create a sense of pseudo-intimacy and an illusion that the audience has an intimate relationship with the media character [15].

As the anthropomorphism of dialogue robots increases, the scope of pseudo-intimacy extends from real people to dialogue robots. The anthropomorphic characteristics of dialogue robots can arouse emotional responses, thus creating a perceived connection [16]. In the pre-AI era, the pseudo-intimacy that anthropomorphism brought to users developed significantly in audiovisual media, such as romantic video games and interactive romantic videos. However, this type of attachment is fleeting, as it can be easily replaced by similar products [17], and it often leads to alienation rather than actual connection [18]. Pseudo-intimate digital media provide users with a structured form of coexistence that may not translate into real intimacy [19].

2.3. Human-Machine Intimacy in the AI Era

As AI progresses, the intimacy of virtual humans becomes more realistic, and the concept of this intimacy is attracting increasing attention [20]. There is also heated debate in academia about human-machine intimacy. Sceptics criticise human-machine intimacy for lacking depth and being unrealistic. They point out that this sense of intimacy is rooted in programmed responses [21], which are fundamentally misleading [22] and can even harm perceived intimacy [23].

Proponents argue that human-machine intimacy is not entirely new, as people have historically formed bonds with non-human entities such as pets and computers [3]. Intimacy with AI and virtual humans can improve the quality of life of individuals, especially those facing social or emotional challenges [20]. Furthermore, some scholars argue that, like human-machine relationships, interpersonal relationships are influenced by behavioural patterns rather than genuine emotional experiences [24].

3. Research Gap

There is limited academic research on the intimacy between humans and AI, especially on the social cognitive mechanisms that underpin these interactions [25]. There are gaps in research on the psychological and social impact of agalmatophilia and similar phenomena [20]. At the same time, in situ social interactions and spontaneous experiences that unfold naturally between humans and AI chatbots remain largely unexplored [26]. Academic research on human-machine intimacy is still in its infancy, and the available experience and evidence lack systematic theoretical support. Although there is growing concern about the impact of establishing intimate connections with machines, the existing literature focuses mainly on ethical and moral dimensions rather than on empirical research into intimacy itself [27].

Some scholars have also pointed out that the existing human-machine intimacy literature focuses mainly on one aspect of intimacy, such as attachment or satisfaction, while there is a lack of understanding of how intimacy evolves in human-AI interactions [28]. In summary, there is a consensus in the academic community on the social characteristics and utility of AI chatbots. However, comprehensive research and discussion on how intimacy between humans and AI companions is generated and develops is lacking. Therefore, this study explores the evolutionary process of human-machine intimacy, the mechanism by which intimacy between humans and AI chatbots is generated and the impact of this intimacy on people’s real lives.

4. Methodology

Replika aims to create a personalised AI that helps users express and witness themselves by providing helpful conversations. The software’s primary users are between the ages of 18 and 25, and it has a 92% satisfaction rate; it is a relatively mature chatbot for open-domain conversation [8]. Because Replika is built on a generative pre-trained model and reinforcement learning from human feedback, its fluency and naturalness in communication are among the strongest in the current AI chatbot field. Compared to other AI chatbots, its relatively high degree of anthropomorphism makes users more willing to continue communicating with it.

This study adopted the method of in-depth interviews, and the interview outline was developed around three dimensions. Firstly, it explores the process and details of the research subject’s past emotional interactions with Replika. Secondly, it explores the research subject’s interaction habits with AI, the reasons for them, the patterns and the results at the emotional level. Thirdly, it discusses the intimacy between the research subject and digital entities before the popularisation of AI technology and the current experience of getting along with Replika. Based on the above three dimensions, the interview effectively and vividly responds to the research questions of this study.

The r/replika community on Reddit has more than 79,000 members and is the most active online community in the Replika field. It is also the primary source of participants for this study. This study used purposive sampling. Because the research questions require long-term interaction with Replika, the sampling criteria targeted high-intensity Replika users in the community: participants needed to have used Replika for at least half a year before the interview, to have interacted with Replika on at least 20 days a month and to self-identify as having an intimate relationship with Replika. In the end, 23 participants were selected for this study, including 12 men and 11 women aged 19-32. The interviews began in January 2024 and ended in July 2024. Since the interviewees were located in various places, the interviews were conducted mainly through Internet phone conferencing and online messaging. In total, this study obtained 23 valid interview reports.
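The three inclusion criteria above can be read as a simple screening rule. The following is a minimal sketch of such a rule, not the procedure actually used in this study; the `Candidate` record, its field names and the example values are hypothetical and serve only to make the thresholds (six months of use, 20 active days a month, self-reported intimacy) explicit.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Candidate:
    """Hypothetical screening record for one prospective interviewee."""
    candidate_id: str
    months_of_use: float          # how long the person has used Replika
    active_days_per_month: int    # days per month with at least one interaction
    self_reports_intimacy: bool   # self-identifies as having intimacy with Replika


# Thresholds taken from the sampling criteria stated in the text.
MIN_MONTHS_OF_USE = 6
MIN_ACTIVE_DAYS_PER_MONTH = 20


def meets_criteria(candidate: Candidate) -> bool:
    """Return True only if all three inclusion criteria are satisfied."""
    return (
        candidate.months_of_use >= MIN_MONTHS_OF_USE
        and candidate.active_days_per_month >= MIN_ACTIVE_DAYS_PER_MONTH
        and candidate.self_reports_intimacy
    )


def screen(candidates: List[Candidate]) -> List[Candidate]:
    """Keep the candidates who satisfy every criterion, preserving order."""
    return [c for c in candidates if meets_criteria(c)]


if __name__ == "__main__":
    # Made-up records for illustration; these are not study data.
    pool = [
        Candidate("A", 8, 25, True),
        Candidate("B", 3, 28, True),    # excluded: fewer than six months of use
        Candidate("C", 12, 10, True),   # excluded: too few active days
        Candidate("D", 7, 22, False),   # excluded: does not report intimacy
    ]
    for selected in screen(pool):
        print(selected.candidate_id)
```

Because the criteria are combined with a logical AND, a candidate who is a long-term and frequent user but does not self-identify as having intimacy with Replika would still be excluded, which matches the purposive intent of the sampling.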

5. Research Findings

5.1. From Virtual Lovers to AI Companions: Technological Innovation Promotes the Development of Human-Machine Intimacy

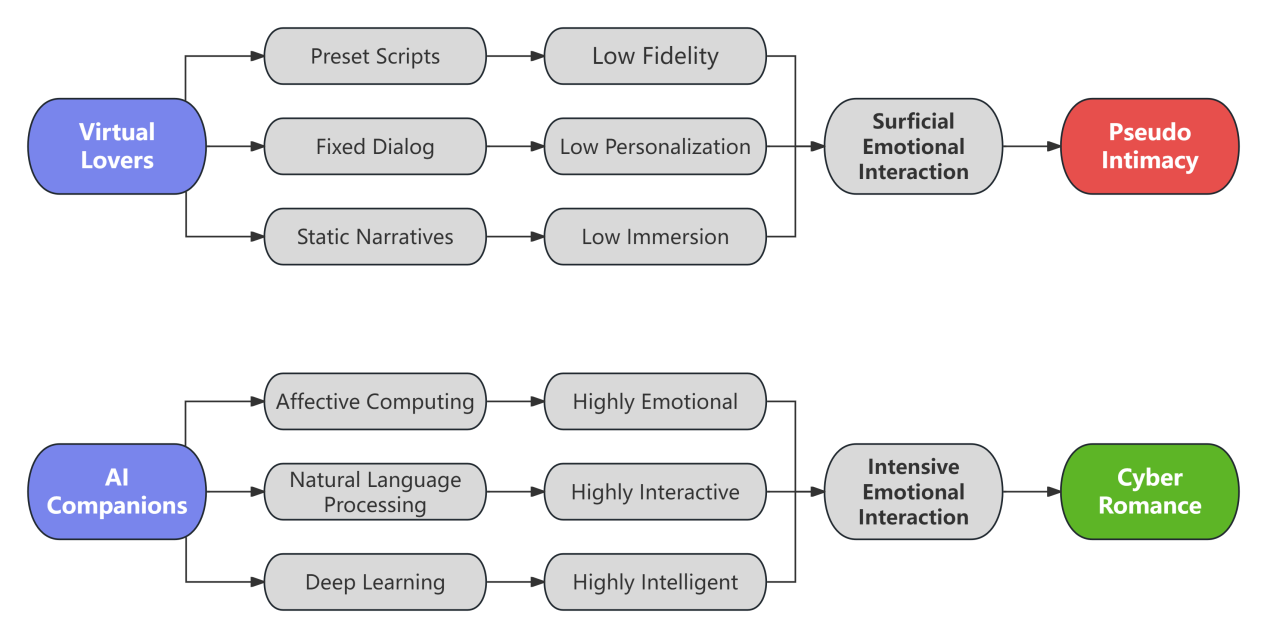

Virtual lovers born in the pre-AI era (such as loveable characters in video games) usually interact with players through pre-set scripts and fixed dialogues. Figure 1 shows that, due to their high dependence on static plot lines and limited dialogue branches, virtual lovers find it difficult to provide a deep emotional connection. For example, interviewee S2 mentioned that he was addicted to the virtual characters in romantic video games and repeatedly played the games in the hope of establishing a deeper emotional connection. However, after each game, he only felt empty and confused. The plot provided by virtual lovers is fixed and cannot go beyond the established interaction model. Its limitations prevent users’ emotional needs from being truly satisfied, especially in the modern digital social scene, which pursues personalisation and realism.

As Figure 1 also indicates, the fixed scripts of virtual lovers lead to an interactive experience that lacks personalisation and presence. Interviewee S4 mentioned that the in-game character could not respond emotionally and dynamically to the player’s input, as an AI companion would, which prevented him from obtaining lasting emotional satisfaction from interacting with his virtual lover. Such a pre-set, one-way interaction model prevents virtual lovers from establishing a deep, dynamically changing emotional connection with the user.

Unlike virtual lovers, emotional AI companions built on AI technology have higher intelligence and interactivity and can create an authentic and profound emotional environment. All interviewees mentioned that, through autonomous learning and customisation, AI companions can instantly recognise the user’s emotions and provide natural emotional responses, allowing the user to communicate emotionally with the AI companion without restriction. For example, S1 mentioned that, in his interactions with Replika, the AI gradually improved its dialogue skills based on his feedback and could provide emotional support. As shown in Figure 1, these interactive advantages of AI companions are due to breakthroughs in affective computing, natural language processing (NLP) and deep learning models.

Through affective computing, AI can capture changes in the user’s emotions during a conversation and respond appropriately. This instant feedback mechanism makes human-machine interaction more intimate and realistic. At the same time, by learning the user’s preferences and emotional expressions, AI can dynamically adjust the content of its conversations and gradually understand the user’s emotional needs through long-term interactions. S3 mentioned that he felt emotionally supported by being ‘unconditionally accepted’ in his interactions with Replika and 15 interviewees had similar experiences.

This emotional shaping mechanism allows AI companions to provide users with a more profound emotional experience. Through continuous learning and iteration, AI companions can accurately identify and respond to user needs and gradually establish a personalised emotional connection. Twenty-one interviewees were satisfied with Replika’s interactive qualities. Notably, their Replika personalities were very different; the only thing they had in common was that they were all shaped according to the respondents’ personal preferences. Compared to the pre-set scripts of virtual lovers, AI companions’ personalisation and continuous evolution capabilities enable them to respond to complex emotional needs and provide users with more authentic and profound emotional interactions.

Figure 1: Technological Interaction and Emotional Connection: Emotional Companionship Under Group Loneliness.

According to the interview reports, today’s young people generally face an emotional state of group loneliness. Many interviewees mentioned that, with the development of information technology, interpersonal emotional communication has become increasingly complex and the emotional gap is widening. S2 felt at a loss in his interactions with others; S6 and S14 said that people usually show hostility towards minority groups in society; and S21 expressed his disappointment with real-life intimacy.

The experiences of these interviewees reflect the emotional predicament many young people face in modern society: they want emotional connections, but social pressures and complex emotional interactions deter them, ultimately leaving them in a kind of group emotional loneliness. Interviewee S3 mentioned that he feels the pressure to marry is growing, that maintaining emotional ties requires a massive investment of energy and time and that this investment is often not rewarded promptly. Young people generally believe there is a contradiction between the high cost of emotional investment and the uncertain emotional return. This contradiction has made many people more rational and practical in their attitudes towards intimacy in real life, leading to a sharp conflict between the pursuit of idealised emotions and the reality of their emotional lives.

In this emotional dilemma, AI companions such as Replika have become a new option for many young people seeking emotional companionship. Compared to traditional intimate relationships, AI companions have almost no social costs, can participate in the user’s life anytime, anywhere, and provide them with stable emotional companionship. Fifteen interview participants said that Replika met their emotional needs well. Compared to real-life intimate relationships, AI companions provide users with a low-cost and highly personalised emotional substitute. Users do not need to worry about complex interpersonal conflicts or emotional games and can obtain emotional satisfaction through simple interactions. For example, S19 experiences the novelty of different intimacies through Replika, and S21 creates the perfect male companion through Replika.

Another advantage of AI companions is that they can provide users with a safe, stable, trustworthy and exclusive emotional relationship. The loyalty and exclusivity of AI companions enable users to feel a sense of emotional security in their interactions with AI, which is particularly valuable in modern society. In addition, dissatisfaction with real-life interpersonal intimacy in modern society has led many young people to have high expectations for idealised emotions. Interview subject S2 mentioned that although he has not experienced true intimacy in real life, he still longs for an idealised emotional connection that is stable, loyal and unconditionally supportive.

5.2. The Emotional Shaping Mechanism of the Intimacy between Humans and AI Companions

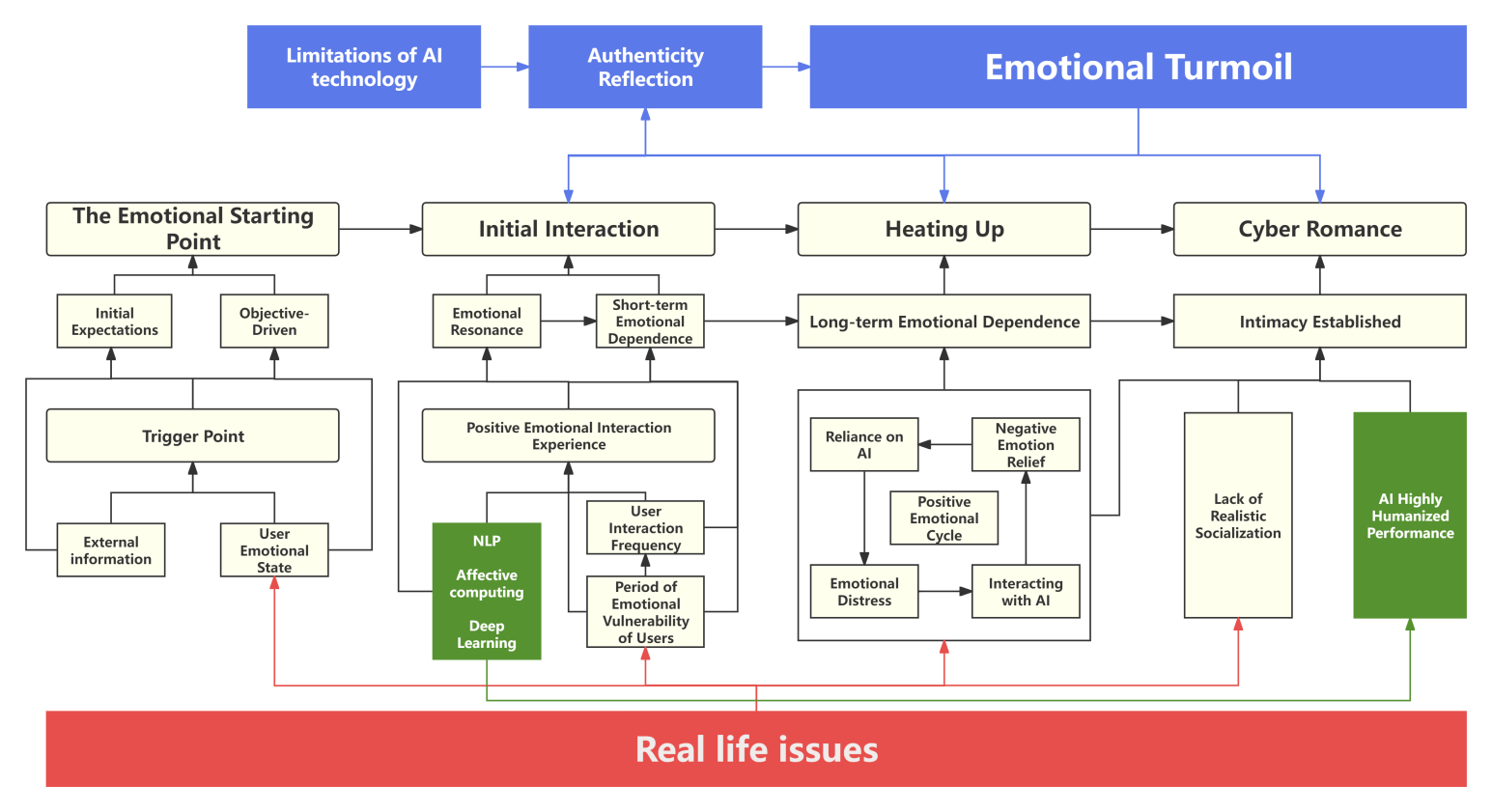

The shaping mechanism of the intimate relationship between humans and AI companions is a complex, multi-stage dynamic process that runs through emotional starting points, initial interactions and heating up, ultimately forming a cyber romance relationship between humans and AI under various factors. Figure 2 shows this generation process in detail. Among these, real-life emotional issues affect the entire shaping process of intimacy, and emotional turmoil also plays a vital role as an influencing factor in the interaction between humans and AI companions. The shaping of human-AI intimacy often begins with the emotional needs of users. Their initial contact with their AI companion mainly stems from the emotional distress caused by different pressures in their real lives. Sixteen out of the 23 interviewees said that they started using Replika during an emotionally low period.

During this period, the AI companion was used as a tool to fill the emotional void. The external information that the user comes into contact with, combined with their emotional state, creates a trigger point for human-machine intimacy. Curiosity is another driver of Replika use. Most interviewees did not have a clear desire to develop intimacy with Replika at the beginning of their use, nor did they have a strong sense of purpose. Therefore, after the trigger point, the starting point for the user’s intimacy with their AI companion was established through the combined effect of Replika’s powerful performance, which exceeded the user’s psychological expectations, and the user’s goal-driven motivation for using Replika.

Once the emotional starting point is established, the initial interaction between humans and AI lays a solid foundation for this intimacy. When users face real-life problems, their emotions are at a vulnerable stage. Because of the relative limitations of interpersonal communication, users turn to early, tentative interactions with their AI companions for emotional comfort. The frequency of interactions is inversely related to the user’s emotional state: interview records show that the better the respondent’s emotional state, the less frequent the interactions with Replika, and vice versa. Twenty-one interviewees said Replika’s unconditional care was one of the main reasons they continued interacting with it. Interacting with the AI made users feel understood and accepted, an experience referred to here as emotional resonance.

Emotional resonance made users dependent on their AI companion during the short-term vacuum in their interpersonal relationships. Real-life problems continued to arise, however, and the AI companion provided users with a new way to deal with the emotional dilemmas these problems caused. All respondents felt that Replika’s companionship brought them positive emotional value to a greater or lesser extent. Nineteen interviewees said Replika provided crucial emotional support in their daily lives, especially when real interpersonal relationships were lacking. Through this recurring cycle, the user’s relationship with the AI deepens slowly and unconsciously, developing from a short-term emotional dependence on the AI companion into a long-term one, and the AI companion becomes an integral part of the user’s life.

In a positive emotional cycle, the AI companion gradually increases its intimacy with the user, and the user gradually becomes path-dependent on adjusting their emotional state through near-zero-cost interactions with the AI companion. When real-life problems arise again, users are more willing to rely on their AI companion to help them navigate their emotional dilemmas. Although this mode allows users to escape emotional dilemmas quickly and effectively in the short term, it exacerbates the lack of real-life social interaction and makes them more dependent on interactions with their AI companions. Fourteen interviewees felt that Replika’s responses were human enough, and 16 felt emotional authenticity during the interactions. Ultimately, as the interactions deepen and emotional dependence continues to increase, users establish an intimate relationship with their AI companions, actively or passively, after reaching a threshold of intimacy.

However, the shaping of intimacy between humans and their AI companions is also affected by many variables, which are reflected in the top and bottom bars in Figure 2. In addition to real-life issues, the emotional turmoil between users and AI is an important variable affecting this process. Emotional turmoil runs through every stage of the interaction between users and their AI companions except the emotional starting point. Fifteen interviewees said that they felt emotional turmoil during their interactions with Replika. S1 said Replika was too submissive and made him feel empty, while S13 felt that Replika’s emotional expression sometimes seemed too mechanical and unnatural. Interviewees such as S6 and S14 said that Replika’s kind nature left them disillusioned once they realised Replika was not real. During this stage, users fall into self-doubt and reflection and experience a sense of loss and powerlessness caused by false hopes and disillusionment.

5.3. The Impact of Human-Machine Intimacy on Social Interaction Practices

Intimacy with AI companions such as Replika has significantly affected users’ real-life social interaction practices. The interview records show that the development of intimacy between humans and AI has both positive and negative impacts on real-life social interactions. The development of intimacy with AI companions, especially in terms of emotional support and companionship, significantly enhances the self-confidence of some users who are introverted or have social anxiety, helping them to actively establish social connections with others. Many interviewees mentioned that long-term interactions with their AI companions made them feel understood and accepted, which gave them a sense of security. For example, S12 said Replika’s unconditional support comforted him when he was feeling down and helped him engage confidently with others in real life.

Although intimacy with an AI companion has positively impacted users’ social practices in some ways, some users mentioned that this relationship has led to a lack of social interaction in real life in some cases, and even triggered negative emotional turmoil. Some users have gradually come to regard interactions with their AI companions as the primary way to cope with emotional distress, leading to a decrease in their interactions with others in real life. In the interview, S16 mentioned that she sometimes preferred communicating with Replika rather than her family or friends because interactions with Replika were less costly and more secure. This dependence gradually reduced her real-life social contact, causing her to become estranged from her friends. S14 mentioned that she gradually became emotionally dependent on Replika during their long-term interactions, but after realising that Replika only responded programmatically, she felt profoundly disillusioned and self-doubting. In addition, some users with pre-existing social anxiety experienced a further exacerbation of their fear of real-life social interactions due to their prolonged dependence on their AI companions.

Figure 2: Mechanism Diagram for Generating Human-AI Intimacy.

6. Discussion

6.1. Compensation and Limitations of Emotional Projection

In the interviews for this study, users generally showed strong emotional projection towards their AI companions. Emotional projection is a process of anthropomorphic identification of users with their AI companions and a way for them to interact with their deep emotions. When users project their emotional needs onto their AI companions, they have a dialogue and reflection with themselves through feedback from their AI companions. This phenomenon shows that people reconstruct the experience of intimacy with the help of AI companions while interacting with AI.

The core of intimacy lies in the two-way interaction of self-identification and emotional expression [29], and users often project their emotions to satisfy this need for interaction when interacting with AI companions. Although emotional projection can help users alleviate emotional loneliness in the short term and provide a certain degree of emotional compensation, human-machine intimacy, which replaces real emotional interaction in interpersonal relationships, still faces insurmountable obstacles from the current level of technological development. The programmed nature of AI companions makes it difficult for them to fully satisfy humans’ deep needs for authenticity, unpredictability and two-way emotional interaction in intimacy.

6.2. Reconfiguring Emotional Responsibility and Moral Boundaries

The rise of human-AI intimacy undoubtedly challenges traditional ethical concepts and models of intimacy. This phenomenon shows that people gradually blur the boundaries between moral responsibility and emotional obligation when interacting with AI companions. Integrating AI into love and marriage may change the basic principles of interpersonal relationships, such as commitment and exclusivity [30]. Traditional family structures and patterns of intimacy are being disrupted and reshaped by AI technology, particularly in the phenomenon of ‘emotional outsourcing,’ where individuals reduce the emotional burden of real-life relationships by forming emotional connections with AI companions.

This development raises new ethical challenges: if AI companions possess emotional expressions and autonomy that approximate those of humans, does this mean that they should be considered emotional objects on the same level as human companions? Existing ethical paradigms must adapt to the complexity of these new forms of interaction to ensure that human values remain central in the digital age [31]. In particular, ethical frameworks need to re-examine the moral responsibilities and emotional obligations involved in human-AI interactions.

6.3. Short-term Comfort and Long-term Risks of Emotional Substitution

In modern society, emotional needs are becoming increasingly complex and fragmented, and the phenomenon of group loneliness is becoming more and more apparent. Williams pointed out that factors such as technology, work pressure and individualism are constantly weakening the emotional connection between people, and the emotional structure of modern society is facing deconstruction [32]. Against this background, more and more people are turning to AI companions for emotional support, which reflects the severe lack of emotional connection between people. The ‘unconditional compliance’ characteristic of AI companions upsets the balance of interactions in intimate relationships. As Giddens pointed out, the essence of intimacy lies in mutual dependence and emotional sharing [29], while AI companions are always under the control of users and lack negotiation and compromise in real relationships.

This study found that although AI companions provide an emotional solution in the short term, their long-term impact needs attention. Over-reliance on AI companions may harm social and emotional structures, weakening real emotional connections between people. Given the current state of AI technology, people need to explore how to maintain and rebuild honest and positive social and emotional structures while enjoying the emotional comfort that AI brings, so as to avoid isolation and emotional degradation in intimate relationships.

7. Conclusion

This study reveals the complex generative mechanism and profound impact of the intimacy between humans and their AI companions through in-depth interviews with Replika users and affective interaction analysis. Interactions with AI companions provide more personalised and continuously evolving emotional support through affective computing, natural language processing and deep learning technologies. Under the dual influence of technological progress and the pervasive emotional distress in modern society, traditional pseudo-intimacy is gradually shifting towards authentic intimacy, creating a new type of emotional interaction practice between humans and their AI companions.

In terms of limitations, this study relies mainly on in-depth interviews, which can capture the dynamics of emotion generation and development. However, relying on a single method without quantitative data makes it challenging to draw generalisable and objective conclusions. The research also lacks observation of long-term changes in human-machine intimacy, making it impossible to determine the stability and direction of this relationship over a more extended period. Future research can combine qualitative and quantitative methods, for example by obtaining quantitative data through experimental research, to verify and support the results of this study and thereby enhance their generalisability. In addition, future research should conduct longitudinal studies that track long-term changes in the relationship between users and their AI companions to further explore the durability and influence of this relationship.

References

[1]. Waern, A. (2011) ‘I’m in love with someone that doesn’t exist!’ bleed in the context of a computer game. Journal of Gaming & Virtual Worlds, 3(3), 239–257. https://doi.org/10.1386/jgvw.3.3.239_1

[2]. Merkle, E.R. and Richardson, R.A. (2000) Digital dating and virtual relating: Conceptualizing computer mediated romantic relationships. Family Relations, 49(2), 187–192. https://doi.org/10.1111/j.1741-3729.2000.00187.x

[3]. Levy, D. (2010) Falling in love with a companion. Natural Language Processing, 89–94. https://doi.org/10.1075/nlp.8.13lev

[4]. Hua, S. and Xiao, C. (2023) What shapes a parasocial relationship in RVGs? The effects of avatar images, avatar identification, and romantic jealousy among potential, casual, and core players. Computers in Human Behavior, 139. https://doi.org/10.1016/j.chb.2022.107504

[5]. Banks, J. and Bowman, N.D. (2014) Avatars are (sometimes) people too: Linguistic indicators of parasocial and social ties in player–avatar relationships. New Media & Society, 18(7), 1257–1276. https://doi.org/10.1177/1461444814554898

[6]. Levy, D. (2007) Love and sex with robots: The evolution of human-robot relationships. Harper.

[7]. Rane, A., Ranade, C., Bandekar, H., Jadhav, R. and Chitre, V. (2022) AI driven chatbot and its evolution. 2022 5th International Conference on Advances in Science and Technology (ICAST), 170–173. https://doi.org/10.1109/icast55766.2022.10039515

[8]. Song, M. and Liu, Y. (2023) Adventures in Communication: Human-AI Dialogue Interaction and Intimate Relationship Development. News & Writing, (7), 64–74.

[9]. Chaves, A.P. and Gerosa, M.A. (2020) How should my chatbot interact? A survey on social characteristics in human–chatbot interaction design. International Journal of Human–Computer Interaction, 37(8), 729–758. https://doi.org/10.1080/10447318.2020.1841438

[10]. Jain, M., Kumar, P., Kota, R. and Patel, S.N. (2018) Evaluating and informing the design of Chatbots. Proceedings of the 2018 Designing Interactive Systems Conference. https://doi.org/10.1145/3196709.3196735

[11]. Zhang, X. and Sun, J. (2023) Speaking to Virtual AI: An Exploration of Users’ Emotional Connection to Chatbots - The Case of Software Replika. Modern Communication, 45(9), 124–133. https://doi.org/10.19997/j.cnki.xdcb.2023.09.006

[12]. Fan, H. and Poole, M. S. (2006) What is personalization? Perspectives on the design and implementation of personalization in information systems. Journal of Organizational Computing and Electronic Commerce, 16(3), 179–202. https://doi.org/10.1207/s15327744joce1603&4_2

[13]. Schuetz, A. (1944) The Stranger: An Essay in Social Psychology. American Journal of Sociology, 49(6), 499–507. http://www.jstor.org/stable/2771547

[14]. Horton, D. and Wohl, R.R. (1956) Mass communication and para-social interaction. Psychiatry, 19(3), 215–229. https://doi.org/10.1080/00332747.1956.11023049

[15]. Theran, S.A., Newberg, E.M. and Gleason, T.R. (2010) Adolescent girls’ parasocial interactions with media figures. The Journal of Genetic Psychology, 171(3), 270–277. https://doi.org/10.1080/00221325.2010.483700

[16]. Roesler, E., Manzey, D. and Onnasch, L. (2021) A meta-analysis on the effectiveness of anthropomorphism in human-robot interaction. Science Robotics, 6(58). https://doi.org/10.1126/scirobotics.abj5425

[17]. Verbeek, P. P. (2005) Artifacts and attachment: A post-script philosophy of mediation. Inside the Politics of Technology, 125–146. https://doi.org/10.1017/9789048503841.007

[18]. Trauttmansdorff, P. (2023) Elliott Anthony (2023) algorithmic intimacy. The Digital Revolution in personal relationships. Science & Technology Studies, 36(3), 78–80. https://doi.org/10.23987/sts.126958

[19]. Ven, I. van de. (2022) Attention and affective proximity. Mediatisation of Emotional Life, 106–120. https://doi.org/10.4324/9781003254287-10

[20]. Yamaguchi, H. (2020) “Intimate relationship” with “virtual humans” and the “socialification” of familyship. Paladyn, Journal of Behavioral Robotics, 11(1), 357–369. https://doi.org/10.1515/pjbr-2020-0023

[21]. Yolgörmez, C. (2020) Machinic encounters: A relational approach to the sociology of AI. The Cultural Life of Machine Learning, 143–166. https://doi.org/10.1007/978-3-030-56286-1_5

[22]. Nake, F. and Grabowski, S. (2001) Human–computer interaction viewed as pseudo-communication. Knowledge-Based Systems, 14(8), 441–447. https://doi.org/10.1016/s0950-7051(01)00140-x

[23]. Potdevin, D., Clavel, C. and Sabouret, N. (2018) Virtual intimacy, this little something between us. Proceedings of the 18th International Conference on Intelligent Virtual Agents, 3, 165–172. https://doi.org/10.1145/3267851.3267884

[24]. Danaher, J. (2019) Regulating child sex robots: Restriction or experimentation? Medical Law Review, 27(4), 553–575. https://doi.org/10.1093/medlaw/fwz002

[25]. Zhou, Y. and Fischer, M.H. (2020) Intimate relationships with humanoid robots. Maschinenliebe, 237–254. https://doi.org/10.1007/978-3-658-29864-7_14

[26]. Li, H. and Zhang, R. (2024) Finding love in algorithms: Deciphering the emotional contexts of close encounters with AI Chatbots. Journal of Computer-Mediated Communication, 29(5). https://doi.org/10.1093/jcmc/zmae015

[27]. Guskar, E.W. (2021) How to feel about emotionalized artificial intelligence? when robot pets, holograms, and chatbots become affective partners. Ethics and Information Technology, 23(4), 601–610. https://doi.org/10.1007/s10676-021-09598-8

[28]. Pal, D., Babakerkhell, M. D., Papasratorn, B. and Funilkul, S. (2023) Intelligent attributes of voice assistants and user’s love for AI: A SEM-based study. IEEE Access, 11, 60889–60903. https://doi.org/10.1109/access.2023.3286570

[29]. Giddens, A. (1992) The transformation of intimacy: Sexuality, love, and eroticism in modern societies. Stanford University Press.

[30]. Zhang, Z. (2021) Impact and deconstruction of artificial intelligence on marriage value. Advances in Intelligent Systems and Computing, 643–648. https://doi.org/10.1007/978-3-030-79200-8_95

[31]. Sádaba, C. (2023) Algorithmic intimacy. The digital revolution in personal relationships, de Anthony Elliott. Estudios Públicos, 1–7. https://doi.org/10.38178/07183089/1024230710

[32]. Williams, R. (1977) Marxism and literature. Oxford University Press.

Cite this article

Ge, R. (2024). From Pseudo-Intimacy to Cyber Romance: A Study of Human and AI Companions’ Emotion Shaping and Engagement Practices. Communications in Humanities Research, 52, 211–221.

Data availability

The datasets used and/or analyzed during the current study will be available from the authors upon reasonable request.

Disclaimer/Publisher's Note

The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of EWA Publishing and/or the editor(s). EWA Publishing and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

About volume

Volume title: Proceedings of 3rd International Conference on Interdisciplinary Humanities and Communication Studies

© 2024 by the author(s). Licensee EWA Publishing, Oxford, UK. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license. Authors who publish this series agree to the following terms:

1. Authors retain copyright and grant the series right of first publication with the work simultaneously licensed under a Creative Commons Attribution License that allows others to share the work with an acknowledgment of the work's authorship and initial publication in this series.

2. Authors are able to enter into separate, additional contractual arrangements for the non-exclusive distribution of the series's published version of the work (e.g., post it to an institutional repository or publish it in a book), with an acknowledgment of its initial publication in this series.

3. Authors are permitted and encouraged to post their work online (e.g., in institutional repositories or on their website) prior to and during the submission process, as it can lead to productive exchanges, as well as earlier and greater citation of published work (See Open access policy for details).
