1. Introduction

Eye-tracking technology tracks eye movements in order to collect and analyze data about them. In recent years it has played a variety of roles in human-computer interaction control, supporting applications such as user research [1] and software development [2]. With eye-tracking technology, a device equipped with an eye tracker can measure eye movement and gaze position and capture the images the user sees, producing analytical data [3] that can then be applied in many industries.

In the field of human-computer interaction control, eye-tracking technology shows strong potential, but its use in changing environments and its cost remain challenges. Two criteria therefore deserve particular attention when evaluating the technology: applicability and cost, both of which are closely bound up with its practical use. Applicability determines the technology's development prospects, and only if its cost decreases will eye tracking attract a larger market. Studies over the past years have produced valuable results toward increasing applicability and lowering cost.

In its early days, the practical use of eye-tracking technology was generally confined to laboratories [4], but as cheaper devices appeared [5], applications began to flourish in other fields, including computing. Some researchers designed experiments to assess whether users accepted operations performed with eye-tracking-driven virtual devices, such as a virtual mouse and keyboard, and collected user feedback through questionnaires to improve the usability of the eye-tracking system [4]. Eye tracking has also been brought into practical work: Obaidellah et al. [6] surveyed the use of eye tracking in computer programming research, seeking ways to help novice programmers, and compiled detailed statistics on how eye tracking has been applied to the programming process. Beyond academic research, other areas also use eye tracking to improve interactivity; for example, Almeida et al. [7] proposed combining eye-tracking technology with the design of video game scenery. In short, eye-tracking technology has attracted attention from experts in numerous fields, and the enthusiasm shown by the academic community reflects the technology's promising prospects.

Nevertheless, research gaps remain. For instance, the cost of training new personnel has not received sufficient attention: there is still a lack of systematic, professional tutorials that teach the daily use and maintenance of eye-tracking equipment.

This paper aims to evaluate eye-tracking technology for human-computer interaction (HCI) control based on the criteria of applicability and cost-effectiveness. The remainder of the article is structured as follows: Section 2 elucidates the fundamental mechanisms of eye-tracking technology in HCI systems. Section 3 conducts a critical assessment of the technology’s applicability and total cost of ownership. Section 4 discusses prevailing challenges and optimization strategies, including technical limitations and ethical considerations. Finally, Section 5 synthesizes the findings and suggests future directions.

2. Operating principles

2.1. General principles

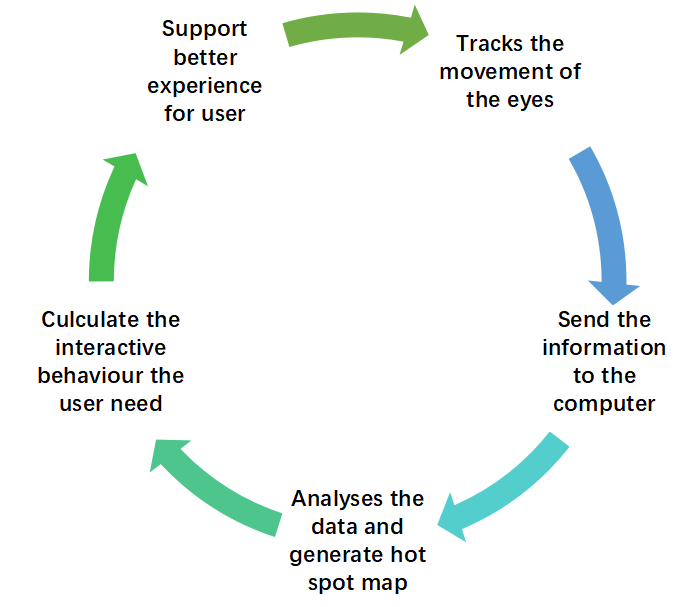

The general operating principle of eye-tracking technology for human-computer interaction control is to use an eye-monitoring device to capture the point the user is gazing at, analyze the resulting eye-movement data, and return feedback that improves the interaction experience [3].
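To make the first step of this principle concrete, the following Python sketch maps one gaze point, given in screen pixels, to the interface element beneath it; the identified element is what later analysis and feedback act on. The `Element` class, the element names and the rectangle coordinates are hypothetical illustrations; real eye-tracking SDKs report gaze samples in their own formats and coordinate systems.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Element:
    """Hypothetical rectangular UI element; coordinates in screen pixels."""
    name: str
    left: float
    top: float
    width: float
    height: float

    def contains(self, gx: float, gy: float) -> bool:
        # Half-open bounds, so a point on a shared edge belongs to only one element.
        return (self.left <= gx < self.left + self.width
                and self.top <= gy < self.top + self.height)


def element_under_gaze(gx: float, gy: float,
                       elements: Sequence[Element]) -> Optional[Element]:
    """Return the first element that contains the gaze point, or None."""
    for element in elements:
        if element.contains(gx, gy):
            return element
    return None


# Usage with a made-up layout: a side menu and a confirmation button.
layout = [Element("menu", 0, 0, 200, 800), Element("ok_button", 900, 700, 120, 40)]
hit = element_under_gaze(950, 715, layout)
print(hit.name if hit else "no element")  # prints "ok_button"
```

Because measured gaze points are noisy, practical systems often enlarge targets or smooth the signal before this lookup; the sketch omits both.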

2.2. Specific implementation

Firstly, as illustrated in Figure 1, the process begins with the user wearing a head-mounted eye-tracking device. The device captures eye movements using infrared sensors and corneal-reflection algorithms, generating pupil-center coordinates in real time [3,4]. Next (Step 2 in Fig. 1), these gaze coordinates, together with application usage metadata (e.g., the active UI elements), are transmitted to a computer for processing. In the computational phase (Step 3, Fig. 1), the raw gaze points are aggregated into a hot-spot map, or heat map (Figure 2), which visualizes how long and how often the user's gaze dwells on each region of the application's interface [3]. Using this spatial attention data (Step 4, Fig. 1), the system applies predefined interaction rules (e.g., dwell-time selection) to determine and trigger responses, such as activating UI controls or scrolling content, that fulfill the user's intent. Finally (Step 5, Fig. 1), the updated interface feedback closes the interaction loop, enabling continuous user engagement.
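Steps 3 and 4 can be sketched as follows, under stated assumptions. `dwell_heat_map` builds a coarse heat map by crediting each gaze sample with the time until the next sample and summing those durations per grid cell; `dwell_select` implements one simple dwell-time rule that fires once when the gaze stays on the same element for a threshold duration. The sample format, grid cell size and threshold are illustrative choices, not parameters taken from the cited systems, and real heat maps are often also smoothed (for example with a Gaussian kernel).

```python
from typing import List, Optional, Tuple

# Hypothetical gaze sample: (timestamp in seconds, x in pixels, y in pixels).
Sample = Tuple[float, float, float]


def dwell_heat_map(samples: List[Sample], width: int, height: int,
                   cell: int) -> List[List[float]]:
    """Sum dwell time (seconds) per grid cell of size `cell` x `cell` pixels.

    Each sample is credited with the time until the next sample, so the
    final sample contributes nothing. Off-screen samples are ignored.
    """
    cols = -(-width // cell)   # ceiling division
    rows = -(-height // cell)
    grid = [[0.0] * cols for _ in range(rows)]
    for (t0, x, y), (t1, _, _) in zip(samples, samples[1:]):
        if 0 <= x < width and 0 <= y < height:
            grid[int(y) // cell][int(x) // cell] += t1 - t0
    return grid


def dwell_select(labelled: List[Tuple[float, Optional[str]]],
                 threshold: float) -> List[Tuple[float, str]]:
    """Return (time, element) events when gaze stays on one element >= threshold s.

    `labelled` holds (timestamp, element name or None) pairs, for example from
    hit-testing each gaze sample. Each uninterrupted dwell fires at most once.
    """
    events: List[Tuple[float, str]] = []
    current: Optional[str] = None
    start, fired = 0.0, False
    for t, name in labelled:
        if name != current:
            # Gaze moved to a different element (or away): restart the timer.
            current, start, fired = name, t, False
        elif name is not None and not fired and t - start >= threshold:
            events.append((t, name))
            fired = True
    return events


# Usage with made-up data: 1.5 s in the left cell, 0.5 s in the right cell.
samples = [(0.0, 10, 10), (0.5, 10, 10), (1.5, 150, 10), (2.0, 150, 10)]
print(dwell_heat_map(samples, width=200, height=100, cell=100))  # [[1.5, 0.5]]

gaze = [(0.0, "ok"), (0.3, "ok"), (0.6, "ok"), (0.9, "ok"), (1.0, None), (1.1, "ok")]
print(dwell_select(gaze, threshold=0.8))  # [(0.9, 'ok')]
```

Firing once per uninterrupted dwell avoids repeatedly triggering a control while the user keeps looking at it, a problem often called the "Midas touch" in gaze interaction.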

3. Critical evaluation

3.1. Evaluation for applicability

The first criterion is high applicability. Eye-tracking technology has shown the potential to support human-computer interaction in various industries. For instance, in interface design, eye-tracking devices can assess the usability and efficiency of a user interface by recording and analyzing gaze points and heat maps [1]. By understanding users’ visual attention and behavior patterns, designers can improve layouts and interaction flows to enhance the user experience.

In software development, as noted by Sharif and Shaffer [2], eye-tracking helps identify parts of the source code that developers frequently inspect, which aids debugging and code comprehension. Similarly, in education, eye-tracking serves as a bridge between learners’ cognitive processes and learning outcomes. As demonstrated in educational research [8], gaze data analysis can quantify attention distribution (e.g., 60% fixation on key content) and engagement levels (e.g., blink rate correlates with cognitive load), providing actionable insights for adaptive learning systems.
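One way to obtain a figure such as "60% fixation on key content" is as a dwell-time share: the fraction of total fixation time that falls inside an area of interest (AOI). The sketch below computes that share, assuming fixations have already been detected from raw samples (for example by a dispersion- or velocity-threshold filter) and that AOIs are axis-aligned rectangles; the AOI name and coordinates in the usage example are hypothetical.

```python
from typing import Dict, Iterable, Tuple

Rect = Tuple[float, float, float, float]  # (left, top, right, bottom) in pixels
Fixation = Tuple[float, float, float]     # (x, y, duration in milliseconds)


def aoi_dwell_share(fixations: Iterable[Fixation],
                    aois: Dict[str, Rect]) -> Dict[str, float]:
    """Fraction of total fixation time spent inside each area of interest.

    Fixations outside every AOI still count towards the total, so with
    non-overlapping AOIs the shares sum to at most 1.
    """
    inside = {name: 0.0 for name in aois}
    total = 0.0
    for x, y, duration in fixations:
        total += duration
        for name, (left, top, right, bottom) in aois.items():
            if left <= x < right and top <= y < bottom:
                inside[name] += duration
    if total == 0:
        return inside  # no fixations: every share is 0
    return {name: t / total for name, t in inside.items()}


# Usage with made-up fixations: 600 of 1000 ms fall inside "key_content".
fixations = [(50, 50, 300), (60, 40, 300), (500, 500, 400)]
print(aoi_dwell_share(fixations, {"key_content": (0, 0, 100, 100)}))
# {'key_content': 0.6}
```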

While eye-tracking technology demonstrates broad applicability, several technical limitations warrant consideration. The system's performance is primarily constrained by two key metrics: accuracy and precision.

Tracking accuracy (average angular deviation) typically ranges from 0.5° to 1.5° in commercial systems, with research-grade devices achieving ≤0.6° under optimal conditions [9]. Precision (RMS deviation) generally falls within 0.1°–0.3°, but degrades with head movement or poor lighting [9].
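Both metrics can be computed from samples recorded while a participant fixates a known target. The sketch below follows common definitions, which are assumptions here because [9] may compute them differently: accuracy as the mean angular distance between gaze samples and the target, and precision as the root mean square of sample-to-sample distances (RMS-S2S). Coordinates are assumed to be in degrees of visual angle and are treated as planar, a small-angle approximation. `visual_angle_deg` converts an on-screen extent to degrees; for scale, 0.5° at a 600 mm viewing distance spans roughly 5 mm on the screen. `math.dist` requires Python 3.8 or later.

```python
import math
from typing import Sequence, Tuple

# Gaze position in degrees of visual angle (horizontal, vertical).
Point = Tuple[float, float]


def visual_angle_deg(size_mm: float, distance_mm: float) -> float:
    """Visual angle (degrees) subtended by an on-screen extent at a viewing distance."""
    return math.degrees(2 * math.atan(size_mm / (2 * distance_mm)))


def accuracy_deg(samples: Sequence[Point], target: Point) -> float:
    """Accuracy: mean angular offset between gaze samples and the true target.

    Uses planar distance on degree coordinates (small-angle approximation).
    """
    if not samples:
        raise ValueError("need at least one sample")
    return sum(math.dist(s, target) for s in samples) / len(samples)


def precision_rms_s2s_deg(samples: Sequence[Point]) -> float:
    """Precision: root mean square of successive sample-to-sample distances."""
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    steps = [math.dist(a, b) for a, b in zip(samples, samples[1:])]
    return math.sqrt(sum(d * d for d in steps) / len(steps))


# Usage with made-up samples scattered around a target at (0, 0).
samples = [(1.0, 0.0), (0.0, 1.0), (-1.0, 0.0), (0.0, -1.0)]
print(accuracy_deg(samples, (0.0, 0.0)))         # 1.0
print(round(precision_rms_s2s_deg(samples), 4))  # 1.4142
print(round(visual_angle_deg(5.24, 600.0), 2))   # 0.5
```

A tracker can be precise but inaccurate (tightly clustered samples offset from the target) or the reverse, which is why the two metrics are reported separately.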

Another barrier is latency and real-time performance. Traditional systems exhibit latency exceeding 100 ms due to data transmission delays [4], while advanced methods like EyeTrAES reduce this to ≈12 ms [10].
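End-to-end latency can be estimated by timestamping when a gaze sample is captured and when the interface reacts to it. The sketch below summarizes such paired timestamps, assumed to come from one shared clock, and also expresses the median in display frames. At an assumed 60 Hz refresh rate, 100 ms corresponds to 6 frames of lag, whereas 12 ms is less than one frame (0.72). The function name and the example timestamps are hypothetical.

```python
import statistics
from typing import Dict, Sequence


def latency_summary(capture_ms: Sequence[float], response_ms: Sequence[float],
                    refresh_hz: float = 60.0) -> Dict[str, float]:
    """Summarize end-to-end latency from paired timestamps in milliseconds.

    capture_ms[i] is when gaze sample i was captured; response_ms[i] is when
    the interface reacted to it. Both must come from the same clock.
    """
    if len(capture_ms) != len(response_ms) or not capture_ms:
        raise ValueError("need equally long, non-empty timestamp sequences")
    delays = [r - c for c, r in zip(capture_ms, response_ms)]
    median = statistics.median(delays)
    return {
        "median_ms": median,
        "max_ms": max(delays),
        # Number of display refreshes by which the response lags the eye.
        "median_frames": median * refresh_hz / 1000.0,
    }


# Usage with made-up timestamps from a slow, conventional pipeline.
summary = latency_summary([0.0, 10.0, 20.0], [100.0, 112.0, 125.0])
print(summary)  # {'median_ms': 102.0, 'max_ms': 105.0, 'median_frames': 6.12}
```

The median is reported alongside the maximum because occasional transmission stalls can make the worst case much larger than the typical delay.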

A third challenge is robustness across users. Individual differences (e.g., glasses, eye shape, or cultural gaze patterns) introduce tracking errors, with studies reporting accuracy variations of up to 20% across demographic groups [11]; such variations limit universal adoption.
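Since the metric behind the 20% figure is not specified here, the sketch below uses one plausible definition, which is an assumption: the relative gap between the worst and the best group's mean angular error. The group labels and error values in the usage example are hypothetical.

```python
from statistics import mean
from typing import Dict, Sequence


def group_accuracy_spread(errors_by_group: Dict[str, Sequence[float]]) -> float:
    """Relative gap between the worst and best group's mean angular error.

    Returns (worst - best) / best; 0.2 means the worst group's mean error is
    20% larger than the best group's. This definition is an assumption.
    """
    if len(errors_by_group) < 2:
        raise ValueError("need at least two groups to compare")
    group_means = [mean(errors) for errors in errors_by_group.values()]
    best, worst = min(group_means), max(group_means)
    if best <= 0:
        raise ValueError("mean errors must be positive")
    return (worst - best) / best


# Usage with hypothetical per-participant errors in degrees.
spread = group_accuracy_spread({"no_glasses": [0.5, 0.5], "glasses": [0.6, 0.6]})
print(round(spread, 2))  # 0.2
```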

Despite these concerns, eye-tracking technology demonstrates broad applicability and is poised to expand with advancements in adaptive calibration [9] and edge computing [10].

3.2. Evaluation of costs

The second criterion is affordability, which plays a crucial role in the widespread adoption of eye-tracking technology.

In terms of hardware acquisition, traditional eye trackers were prohibitively expensive, but recent advances have introduced budget-friendly alternatives. Early low-cost models such as the GP3 ($495) and the EyeTribe tracker ($99) [5] expanded access to educational and small-scale research contexts. This price reduction signals a positive trend toward democratizing the technology.

However, the true financial burden extends far beyond upfront hardware expenses. Maintenance and training costs pose significant challenges, as eye-tracking systems demand specialized personnel for routine calibration, software updates, and data interpretation. The complexity of gaze data analytics often necessitates hiring experts in human-computer interaction or data science, creating ongoing staffing costs that many organizations underestimate [11]. Compounding this issue is the scarcity of comprehensive training resources for non-specialists, leaving many users dependent on professional support.

Less apparent but equally impactful are the operational constraints that inflate long-term expenditures. High-frequency sampling and continuous data processing impose substantial energy demands, limiting battery life in mobile applications [4,11]. Many systems also require ideal operating conditions (stable ambient lighting, high-bandwidth USB 3.0+ connections, and desktop-grade power supplies), which restricts deployment in field settings and adds hidden infrastructure costs.

While the declining price of hardware suggests progress toward affordability, the cumulative expenses of maintenance, specialized labor, and energy/equipment requirements reveal a more nuanced reality. These persistent cost barriers ultimately prevent current eye-tracking systems from achieving true cost-effectiveness for most potential adopters.
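The cost structure described in this section can be expressed as a simple, undiscounted total-cost-of-ownership (TCO) calculation. The sketch below is a hypothetical model: its cost categories mirror this section (hardware, maintenance, specialist staff, training, energy), but apart from the $99 hardware price quoted above, every input value in the usage example is invented for illustration and does not come from the cited studies.

```python
from dataclasses import dataclass


@dataclass
class CostInputs:
    """Hypothetical TCO inputs; all monetary values in one currency."""
    hardware: float            # one-off purchase price per tracker
    units: int                 # number of trackers deployed
    annual_maintenance: float  # calibration and software upkeep, per unit per year
    annual_staff: float        # specialist personnel, whole deployment per year
    training: float            # one-off training cost for the deployment
    power_watts: float         # average power draw per unit while operating
    hours_per_year: float      # operating hours per unit per year
    price_per_kwh: float       # electricity price
    years: int                 # planning horizon


def total_cost_of_ownership(c: CostInputs) -> float:
    """Undiscounted total cost over `years` (no inflation or interest)."""
    energy_kwh_per_year = c.power_watts * c.hours_per_year / 1000.0 * c.units
    recurring = (c.annual_maintenance * c.units + c.annual_staff
                 + energy_kwh_per_year * c.price_per_kwh)
    return c.hardware * c.units + c.training + recurring * c.years


# Usage with purely illustrative inputs for a ten-unit, three-year deployment.
inputs = CostInputs(hardware=99.0, units=10, annual_maintenance=50.0,
                    annual_staff=20000.0, training=2000.0, power_watts=5.0,
                    hours_per_year=1000.0, price_per_kwh=0.2, years=3)
total = total_cost_of_ownership(inputs)
print(round(total))                                       # 64520
print(round(inputs.hardware * inputs.units / total, 3))   # 0.015
```

With these illustrative inputs the hardware accounts for under 2% of the three-year total, showing how recurring staff and maintenance costs can dominate; real figures vary widely between organizations.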

4. Discussion

4.1. Technical challenges and optimization directions

To improve the robustness of eye-tracking systems, algorithm-level enhancements are essential. Adaptive calibration and deep-learning models can help reduce the impact of individual differences such as eye shape, glasses, or skin tone [11]. Latency can be further minimized by optimizing hardware–software interactions, particularly through event-based gaze sampling and low-latency prediction techniques [10]. Additionally, automating the calibration process based on natural gaze behavior can reduce long-term maintenance costs and lower the technical barrier for end-users [12].
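Calibration commonly fits a mapping from the tracker's raw gaze estimates to known screen positions. The sketch below fits a least-squares affine map in pure Python by solving the normal equations. To illustrate calibration based on natural behavior, it assumes, as a heuristic, that the user is looking at the point they click, so click positions can serve as calibration targets without a dedicated calibration routine. The function names and the affine model are illustrative choices; practical systems may use higher-order polynomial mappings or geometric eye models.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def _solve3(m: List[List[float]], v: List[float]) -> List[float]:
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    a = [row[:] + [rhs] for row, rhs in zip(m, v)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(a[r][col]))
        if abs(a[pivot][col]) < 1e-12:
            raise ValueError("degenerate points: need three non-collinear samples")
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(col + 1, 3):
            factor = a[r][col] / a[col][col]
            for k in range(col, 4):
                a[r][k] -= factor * a[col][k]
    x = [0.0, 0.0, 0.0]
    for r in (2, 1, 0):  # back substitution
        x[r] = (a[r][3] - sum(a[r][k] * x[k] for k in range(r + 1, 3))) / a[r][r]
    return x


def fit_affine(raw: Sequence[Point], targets: Sequence[Point]) -> List[List[float]]:
    """Least-squares affine map from raw gaze (x, y) to screen targets (X, Y).

    Returns [[a, b, c], [d, e, f]] with X = a*x + b*y + c and Y = d*x + e*y + f,
    found by solving the normal equations (A^T A) p = A^T t for each axis.
    """
    if len(raw) != len(targets) or len(raw) < 3:
        raise ValueError("need at least three paired points")
    rows = [(x, y, 1.0) for x, y in raw]
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    coeffs = []
    for axis in (0, 1):
        atb = [sum(r[i] * t[axis] for r, t in zip(rows, targets)) for i in range(3)]
        coeffs.append(_solve3(ata, atb))
    return coeffs


def apply_affine(coeffs: List[List[float]], x: float, y: float) -> Point:
    """Map one raw gaze estimate to calibrated screen coordinates."""
    (a, b, c), (d, e, f) = coeffs
    return (a * x + b * y + c, d * x + e * y + f)


# Hypothetical implicit calibration: raw gaze estimates recorded at click time,
# paired with the click positions, assuming the user looked where they clicked.
raw_at_clicks = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
click_positions = [(1.0, -2.0), (3.0, -2.0), (1.0, 1.0), (3.0, 1.0)]
mapping = fit_affine(raw_at_clicks, click_positions)
print(apply_affine(mapping, 0.5, 0.5))  # approximately (2.0, -0.5)
```

Refitting periodically from newly collected click pairs would let the mapping drift with the user without interrupting their work, at the cost of errors whenever the heuristic fails (for example, clicks made without looking).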

4.2. Considerations for privacy, security, and regulatory compliance

The increasing sensitivity of eye-tracking data, including inferred attention, emotion, and identity, raises concerns around data protection and compliance with regulations like GDPR, necessitating anonymization and ethical design frameworks [11].
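As one concrete anonymization step, the sketch below pseudonymizes a gaze record: the participant identifier is replaced by an HMAC-SHA256 keyed hash, and fields assumed to be sensitive are dropped (pupil diameter can reflect arousal or cognitive load, and iris images can identify a person). The field names are hypothetical. Keyed pseudonymization is weaker than full anonymization: under the GDPR, pseudonymized data that can be re-linked using additional information still count as personal data, so the key must be stored separately and protected.

```python
import hashlib
import hmac
from typing import Any, Dict

# Hypothetical field names assumed to carry sensitive signals.
SENSITIVE_FIELDS = {"participant_name", "pupil_diameter_mm", "iris_image"}


def pseudonymize_record(record: Dict[str, Any], secret_key: bytes) -> Dict[str, Any]:
    """Copy a gaze record, replacing the participant ID with a keyed hash
    and dropping fields listed in SENSITIVE_FIELDS.

    The same ID and key always give the same pseudonym, so records can still
    be grouped per participant; without the key, the pseudonym cannot be
    recomputed from a guessed ID.
    """
    cleaned = {k: v for k, v in record.items()
               if k not in SENSITIVE_FIELDS and k != "participant_id"}
    digest = hmac.new(secret_key, str(record["participant_id"]).encode("utf-8"),
                      hashlib.sha256).hexdigest()
    cleaned["pseudonym"] = digest[:16]  # truncated to 64 bits for readability
    return cleaned


record = {"participant_id": "P01", "x": 512.0, "y": 384.0, "pupil_diameter_mm": 3.4}
print(pseudonymize_record(record, secret_key=b"store-this-key-separately"))
```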

5. Conclusion

This report has elaborated on the operating principles of eye-tracking technology for human-computer interaction control and evaluated its applicability and costs. The assessment shows that eye-tracking technology has enormous potential across diverse domains such as interface design, software development, and educational analytics. Notably, studies confirm its capacity to quantify the distribution of user attention (e.g., 60% fixation on critical interface elements [1]) and to improve code comprehension [2], enabling more intuitive interaction paradigms. Regarding costs, relevant devices have become increasingly affordable in recent years, with several brands offering budget-friendly options such as the $99 EyeTribe tracker [5].

However, implementation faces significant challenges. Technically, limitations persist in tracking accuracy (typically 0.5°–1.5° angular deviation [9]), response latency (over 100 ms in conventional systems [4]), and robustness across demographics (e.g., reduced efficacy for users with corrective lenses [11]). Economically, although hardware costs are decreasing, substantial hidden expenditures arise from ongoing calibration, specialized personnel, and operational constraints such as high energy consumption and lighting dependencies [11], which collectively inflate the total cost of ownership.

This study has limitations. Primarily, all data were drawn from secondary sources, and no original research, such as user questionnaires, was conducted. Additionally, due to scope constraints, important criteria such as longitudinal usability decay and ethical compliance were not fully evaluated.

Nevertheless, eye-tracking technology retains tremendous potential to transform human-computer interaction control, particularly in accessibility contexts and specialized applications. Looking forward, addressing technical barriers through adaptive calibration protocols [12] and edge computing solutions [10], while developing standardized training to reduce expertise dependencies, will be critical next steps. As these challenges are progressively overcome, the technology may ultimately create more natural interactive experiences, reshaping digital interaction paradigms globally.

References

[1]. S. Djamasbi, M. Siegel, and T. Tullis. (2010) Generation Y, web design, and eye tracking, International Journal of Human-Computer Studies, vol. 68, no. 5, pp. 307–323. doi: https://doi.org/10.1016/j.ijhcs.2009.12.006.

[2]. B. Sharif and T. Shaffer. (2015) The Use of Eye Tracking in Software Development, in Foundations of Augmented Cognition, D. D. Schmorrow and C. M. Fidopiastis, Eds., Cham: Springer International Publishing, pp. 807–816. doi: https://doi.org/10.1007/978-3-319-20816-9_77.

[3]. S. Djamasbi. (2014) Eye Tracking and Web Experience, AIS Transactions on Human-Computer Interaction, vol. 6, no. 2, pp. 37–54.

[4]. X. Zhang, X. Liu, S. Yuan, and S. Lin. (2017) Eye Tracking Based Control System for Natural Human-Computer Interaction, Computational Intelligence and Neuroscience, vol. 2017. doi: https://doi.org/10.1155/2017/5739301.

[5]. V. Janthanasub and P. Meesad. (2015) Evaluation of a low-cost Eye Tracking System for Computer Input, Applied Science and Engineering Progress, vol. 8, Art. no. 3. doi: http://dx.doi.org/10.14416/j.ijast.2015.07.001.

[6]. U. Obaidellah, A. Haek, and P. C. Cheng. (2018) A Survey on the Usage of Eye-Tracking in Computer Programming, ACM Computing Surveys (CSUR), vol. 51, no. 1, pp. 1–58. doi: https://doi.org/10.1145/3145904.

[7]. S. Almeida, Ó. Mealha, and A. Veloso. (2016) Video game scenery analysis with eye tracking, Entertainment Computing, vol. 14, pp. 1–13. doi: https://doi.org/10.1016/j.entcom.2015.12.001.

[8]. M. Lai et al. (2013) A review of using eye-tracking technology in exploring learning from 2000 to 2012, Educational Research Review, vol. 10, pp. 90–115. doi: https://doi.org/10.1016/j.edurev.2013.10.001.

[9]. K. A. Dalrymple, M. D. Manner, K. A. Harmelink, E. P. Teska, and J. T. Elison. (2018) An examination of recording accuracy and precision from eye tracking data from toddlerhood to adulthood, Frontiers in Psychology, vol. 9, Art. no. 803. doi: https://doi.org/10.3389/fpsyg.2018.00803.

[10]. A. Sen, N. S. Bandara, I. Gokarn, T. Kandappu, and A. Misra. (2024) EyeTrAES: Fine-grained, low-latency eye tracking via adaptive event slicing, Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies, vol. 8, no. 4, pp. 1–32. doi: https://doi.org/10.1145/3699745.

[11]. M. Burch, R. Haymoz, and S. Lindau. (2022) The Benefits and Drawbacks of Eye Tracking for Improving Educational Systems, Seattle, WA, USA: Association for Computing Machinery. doi: https://doi.org/10.1145/3517031.3529242.

[12]. I. Schuetz and K. Fiehler. (2022) Eye tracking in virtual reality: Vive Pro Eye spatial accuracy, precision, and calibration reliability, Journal of Eye Movement Research, vol. 15, no. 3, September. doi: https://doi.org/10.16910/jemr.15.3.3.

Cite this article

Chen, J. (2025). The Evaluation of Eye-tracking Technology for Human-Computer Interaction Control. Communications in Humanities Research, 74, 31–36.

Data availability

The datasets used and/or analyzed during the current study will be available from the authors upon reasonable request.

Disclaimer/Publisher's Note

The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of EWA Publishing and/or the editor(s). EWA Publishing and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

About volume

Volume title: Proceedings of ICADSS 2025 Symposium: Art, Identity, and Society: Interdisciplinary Dialogues

© 2024 by the author(s). Licensee EWA Publishing, Oxford, UK. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license. Authors who publish this series agree to the following terms:

1. Authors retain copyright and grant the series right of first publication with the work simultaneously licensed under a Creative Commons Attribution License that allows others to share the work with an acknowledgment of the work's authorship and initial publication in this series.

2. Authors are able to enter into separate, additional contractual arrangements for the non-exclusive distribution of the series's published version of the work (e.g., post it to an institutional repository or publish it in a book), with an acknowledgment of its initial publication in this series.

3. Authors are permitted and encouraged to post their work online (e.g., in institutional repositories or on their website) prior to and during the submission process, as it can lead to productive exchanges, as well as earlier and greater citation of published work (See Open access policy for details).
