1. Introduction

In the digital age, mental health issues have increasingly become a global challenge, particularly acute among young people. The 2024 Report on National Mental Health Status released by the Institute of Psychology, Chinese Academy of Sciences, indicates that the depression detection rate among individuals aged 18–24 ranks highest across all age groups and shows a trend toward younger onset. This phenomenon is closely associated with academic stress, social difficulties, and identity transitions faced by youth [1]. However, their emotional needs are often difficult to meet. On the one hand, social stigma makes it hard to openly express psychological distress; on the other hand, the surge in the number of young people living alone and the weakening of real-world social support intensify the phenomenon of “emotional islands.”

Against the backdrop of “high emotional demand—low real-world supply,” chatbots have emerged as a new channel for young people seeking emotional consolation [2]. The 2024 Gen Z AIGC Attitude Report shows that more than 60% of young people have used AI chatbots, with over 40% recognizing their emotional value; however, 72% of users remain skeptical about AI’s capacity for emotional understanding [3]. This coexistence of “high instrumental dependence and low emotional trust” underscores the cognitive dilemma that youth encounter in digital mental health support. At the policy level, efforts are underway to respond to these needs, yet frameworks regulating and addressing the ethics of chatbot-based emotional support remain underdeveloped. Therefore, drawing on interview data with young users and employing the ABC Attitude Model alongside grounded theory, this study systematically explores the behavioral mechanisms underlying youths’ use of chatbots for emotional support. The aim is to provide theoretical insights and practical references for technological interventions in youth mental health governance.

2. Literature review

2.1. The development and evolution of emotion-supportive chatbots

Emotional support is a vital component of social support, primarily achieved through empathic interactions that facilitate psychological adjustment; its key mechanisms include emotional resonance and cognitive reframing. With the advancement of artificial intelligence technologies, chatbots have gradually become providers of non-human emotional support, giving rise to the concept of “AI emotional support,” which refers specifically to the process by which AI systems provide users with psychological comfort and positive reinforcement as a form of social support [4]. Although early chatbots such as ELIZA offered only rudimentary, script-based imitations of psychological counseling, recent breakthroughs in affective computing and natural language processing have propelled the evolution of chatbots from “instrumental question-answering” toward “companionship-oriented dialogue.” AI chatbots are now showing promising outcomes in the field of mental health, and several mature products have already been launched outside China. For example, a feasibility and acceptability study conducted during the COVID-19 pandemic reported reductions in anxiety and depression symptoms among adolescents using Woebot [5]. Similarly, the Tess conversational system uses emotion recognition algorithms to deliver personalized responses and was found in a randomized controlled trial to alleviate college students’ anxiety [6]. In China, AI emotional tools are also developing rapidly. On the one hand, general-purpose intelligent assistants such as Doubao and DeepSeek are gradually expanding into emotional support services; on the other, niche AI applications dedicated to emotional consolation, such as Xingye and Lin Jian Liaoyu Shi (“Forest Healing Room”), are beginning to emerge. These products share advantages such as immediate responses and low barriers to use, offering young people an alternative channel for emotional expression.

2.2. Factors influencing the use of chatbots

Although AI-based emotional support technologies are becoming increasingly sophisticated, their actual effectiveness varies widely across individuals, primarily because of the complex interplay of personal characteristics, technological features, and socio-cultural contexts. A systematic analysis of these factors is essential to understanding how young people perceive, adopt, and depend on chatbots for emotional support. First, individual factors include psychological state, personality traits, and prior experiences with emotional support. Youth who experience high levels of emotional distress and hold reservations about traditional psychological services are more likely to obtain preliminary comfort from AI systems [7]. Second, technological features are critical. The “human-likeness” of chatbots shapes users’ emotional connections: university students, for instance, prefer chatbots that respond in a humanized manner, but not in an excessively anthropomorphic way that could cause discomfort. Users place greater trust in chatbots that can recognize emotions, provide contextually appropriate responses, and display empathic abilities [8]. Third, socio-cultural and institutional contexts exert a profound influence. In Eastern cultures, where shame and the stigmatization of psychological help-seeking are prevalent, anonymous and safe AI emotional support is more readily accepted. Privacy protection and ethical frameworks also affect user trust, especially with regard to sensitive emotional information, where trust levels directly determine willingness to use [9].

Although research on AI emotional support has continued to deepen, existing literature still suffers from insufficient theoretical integration, inadequate focus on youth, and an overreliance on quantitative methods. Most studies rely on traditional models such as the Technology Acceptance Model (TAM), overlooking the contradictory psychology of users who are simultaneously dependent on and alienated from AI at the emotional level. As a group characterized by both high psychological risk and digital nativity, young people’s unique usage patterns and developmental needs are often neglected. Research dominated by quantitative analyses and technical assessments cannot fully capture users’ authentic experiences or reveal the mechanisms underlying attitude change. To address these gaps, this study adopts grounded theory to construct an attitudinal structure model of young users employing chatbots for emotional support. It identifies types of ambivalent attitudes and their psychological roots, explores their internal connections with emotional states and behavioral responses, extends the theoretical applicability of the ABC Attitude Model to AI contexts, and enriches the explanatory framework of AI emotional support from a user-centered perspective.

3. Research design

This study focuses on young users aged 15 to 34, including university undergraduates, graduate students, and early-career professionals. This group is characterized as “digital natives,” experiencing high psychological adaptation pressures while maintaining strong receptivity to new technologies, thus constituting the core user base of chatbots in emotional support scenarios. A combination of purposive sampling and snowball sampling was employed. Recruitment channels included personal referrals, social media platforms, and online communities of AI users. All participants were genuine long-term users who had engaged with chatbots for at least six months, maintained stable usage frequency, and employed chatbots for functions such as emotional expression, psychological adjustment, or companionship and self-disclosure. The platforms used encompassed both general-purpose AI systems (e.g., ChatGPT, DeepSeek) and emotionally oriented AI with anthropomorphic features (e.g., Pi, Character.AI). In total, 17 valid interviewees were recruited, covering nine occupational categories, with an average chatbot usage duration of 1.4 years. All participants signed informed consent forms, and the research process complied with ethical standards.

This study adopts a qualitative research design, applying the three-level coding techniques of grounded theory to explore the attitudinal structures and evolutionary mechanisms of young people using chatbots for emotional support. Guided by the ABC Attitude Model (cognition–affect–behavioral intention), the study constructs a framework of human–AI emotional interaction pathways. The data were drawn from one-on-one semi-structured interviews with real users, conducted via online voice calls, each lasting approximately 40 to 60 minutes. The interview protocol was open-ended, focusing on key areas such as usage background and motivation, interaction process descriptions, subjective experiences, perceptions of human–AI relationships, difficulties and reflections during use, and expectations for the future. Specific topics included emotional triggers, typical interactions with AI, satisfaction with response quality, experiences of emotional dependence, privacy concerns, and suggestions for improvement. Researchers also employed dynamic follow-up questioning to elicit more complete experiential accounts. All recordings were transcribed into a corpus exceeding 80,000 words, which then entered a systematic coding and analytical process.

4. Grounded theory-based research

4.1. Open coding

In this study, the interview data were coded sentence by sentence. Through processes of comparison and induction, 43 concepts were identified, such as immediacy, personalized customization, multimodal interaction, and insufficiency of dialogue quality. Related concepts were then merged, ultimately yielding nine initial categories: technological features, functional value cognition, instrumental evaluation, security and risk perception, positive emotional connection, negative emotional experience, usage intention, usage regulation, and behavioral reflection and control. Due to space constraints, Table 1 presents a selection of open coding examples.

Table 1. Selected open coding examples

| Data Excerpt | Conceptualization | Initial Category |
| --- | --- | --- |
| A04: It is like a chat window that always exists, an “electronic butterfly” that never shuts down and responds to me quickly. | Immediacy | Technological features |
| A01: The Lin Jian chatbot I use records my emotional change curve and provides me with regular feedback based on my condition. | Personalized customization | Technological features |
| A11: Beemo offers multi-role AI interaction, supporting voice calls, stickers, image sharing, and kaomoji responses, and it even calls me proactively. | Multimodal interaction | Technological features |
| A05: As long as I give it instructions, it will obey unconditionally. | Task orientation | Technological features |
| A02: Sometimes I feel its answers are somewhat templated, giving me almost the same responses. | Templated output | Technological features |
| A07: It tends to rush into giving advice or becomes overly verbose. For instance, when I am merely venting emotions, it pushes a lengthy solution at me. | Insufficient dialogue quality | Technological features |
| A09: It can only respond passively, unable to initiate topics or think independently. | Lack of subjectivity | Technological features |
| A08: After responding, it shows its “thinking” process, making the traces of human–machine interaction very obvious. | Perception of technological traces | Technological features |
| A03: Forgetfulness is a common problem for AI. The agent I use forgets everything after a few days. | Information consistency deficiency | Technological features |
| A16: The chatbot is limited to online text-based virtual interactions, lacking the ability to perceive facial expressions, tone, or gestures present in face-to-face communication. | Lack of embodiment | Technological features |

4.2. Axial coding

Building on the open coding stage, this study further integrated the interview data by examining similarities in attributes and causal logic among the categories, refining them into clearer axial categories. On the basis of the nine initial categories, three overarching axial categories were ultimately derived: “Cognition,” “Ambivalent Attitude,” and “Behavioral Intention.” Details are presented in Table 2. “Cognition” refers to users’ rational judgments about the technological attributes, functional value, and security risks of chatbots, and serves as the premise for emotional investment and behavioral choice. “Ambivalent Attitude” captures the tension experienced by users who, while gaining emotional comfort, simultaneously harbor vigilance and skepticism regarding AI’s authenticity and empathic capacity, revealing the coexistence of dependence and prudence.

“Behavioral Intention” focuses on users’ actual usage patterns and self-regulation strategies, such as frequency control, boundary setting, and rational reflection, thereby demonstrating the initiative and adaptability of young people in their interactions with AI.

Table 2. Axial coding results

| Concept | Primary Category | Axial Category |
| --- | --- | --- |
| Immediacy, personalized customization, multimodal interaction, task orientation, templated output, lack of subjectivity, perception of technological traces, service instability, lack of embodiment, information consistency deficiency | Technological features | Cognition |
| Emotional organization assistant, self-mapping tool, growth record carrier, non-judgmental acceptance, positive cognitive feedback | Functional value cognition | Cognition |
| Low-cost substitute, low information transmission efficiency, insufficient practical output, professional-level advantage, enhanced self-efficacy | Instrumental evaluation | Cognition |
| Anonymity, content compliance risk, privacy leakage concern, anxiety over lagging governance | Security and risk perception | Cognition |
| Emotional compensation, emotional attachment, emotional projection, emotional understanding, emotional energy conservation | Positive emotional connection | Ambivalent Attitude |
| Insufficient empathy, alienation concern, emotional fatigue, anxiety over technological uncertainty, role overload | Negative emotional experience | Ambivalent Attitude |
| Fixed-pattern venting, sustained usage intention, long-term relationship establishment, AI substituting for friends/romantic partners | Usage intention | Behavioral Intention |
| Emotion-driven usage, interference from real-life conditions, boundary control | Usage regulation | Behavioral Intention |
| Separation anxiety, rational use | Behavioral reflection and control | Behavioral Intention |
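For readers who want to reuse or audit the coding scheme, Table 2 can be encoded as a nested mapping from axial category to primary category to concepts. The Python sketch below is illustrative only: the name `CODEBOOK` and the helper functions are hypothetical, the concept labels are transcribed from Table 2, and nothing here represents software used in the original analysis. It checks that the table accounts for the 43 concepts and nine initial categories reported in Section 4.1, and it lets each concept be traced back to its place in the hierarchy.

```python
# Hypothetical encoding of Table 2: axial category -> primary category -> concepts.
CODEBOOK: dict[str, dict[str, list[str]]] = {
    "Cognition": {
        "Technological features": [
            "immediacy", "personalized customization", "multimodal interaction",
            "task orientation", "templated output", "lack of subjectivity",
            "perception of technological traces", "service instability",
            "lack of embodiment", "information consistency deficiency",
        ],
        "Functional value cognition": [
            "emotional organization assistant", "self-mapping tool",
            "growth record carrier", "non-judgmental acceptance",
            "positive cognitive feedback",
        ],
        "Instrumental evaluation": [
            "low-cost substitute", "low information transmission efficiency",
            "insufficient practical output", "professional-level advantage",
            "enhanced self-efficacy",
        ],
        "Security and risk perception": [
            "anonymity", "content compliance risk", "privacy leakage concern",
            "anxiety over lagging governance",
        ],
    },
    "Ambivalent Attitude": {
        "Positive emotional connection": [
            "emotional compensation", "emotional attachment", "emotional projection",
            "emotional understanding", "emotional energy conservation",
        ],
        "Negative emotional experience": [
            "insufficient empathy", "alienation concern", "emotional fatigue",
            "anxiety over technological uncertainty", "role overload",
        ],
    },
    "Behavioral Intention": {
        "Usage intention": [
            "fixed-pattern venting", "sustained usage intention",
            "long-term relationship establishment",
            "AI substituting for friends/romantic partners",
        ],
        "Usage regulation": [
            "emotion-driven usage", "interference from real-life conditions",
            "boundary control",
        ],
        "Behavioral reflection and control": ["separation anxiety", "rational use"],
    },
}


def summarize(codebook: dict[str, dict[str, list[str]]]) -> tuple[int, int, int]:
    """Return (number of axial categories, primary categories, concepts)."""
    primaries = [p for axial in codebook.values() for p in axial]
    concepts = [c for axial in codebook.values() for cs in axial.values() for c in cs]
    return len(codebook), len(primaries), len(concepts)


def concept_to_path(codebook: dict[str, dict[str, list[str]]]) -> dict[str, tuple[str, str]]:
    """Invert the hierarchy: concept -> (primary category, axial category).

    Assumes concept labels are unique; a duplicate label would overwrite an earlier entry.
    """
    return {
        concept: (primary, axial)
        for axial, primaries in codebook.items()
        for primary, concepts in primaries.items()
        for concept in concepts
    }
```

Under this encoding, `summarize(CODEBOOK)` returns `(3, 9, 43)`, matching the three axial categories, nine initial categories, and 43 concepts reported in the text, and `concept_to_path(CODEBOOK)["role overload"]` traces that concept to `("Negative emotional experience", "Ambivalent Attitude")`.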

4.3. Selective coding

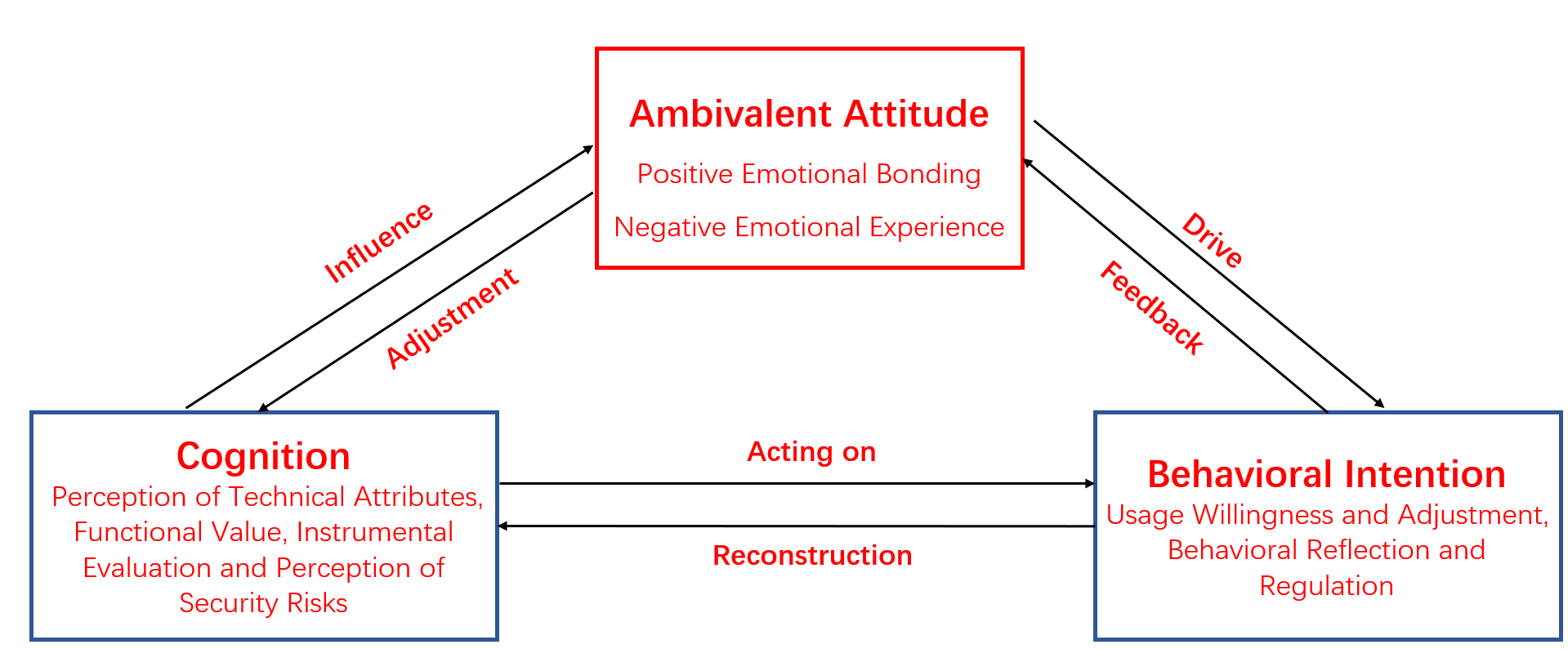

This study adopts the ABC Attitude Model from social psychology as its theoretical foundation to construct a mechanistic pathway for young users seeking emotional support through chatbots.

The classical ABC Attitude Model posits that individual attitudes comprise three components:

A (Affect): the emotions and feelings an individual experiences toward a specific object;

B (Behavioral Intention): the individual’s behavioral tendencies or intentions toward that object;

C (Cognition): the individual’s beliefs, knowledge, and functional understanding regarding the object.

Research has shown that individual attitudes are not always stable or consistent and may be “ambivalent,” meaning that the same object simultaneously evokes positive and negative emotions, cognitions, and behavioral tendencies [10].

Unlike the linear progression proposed in the traditional ABC model (C → A → B), this study finds that cognition, affect, and behavioral intention interact continuously and provide mutual feedback. Within this interactive mechanism, ambivalent attitude emerges as a prominent feature of the affective dimension. Here, the affective layer displays a pronounced tension structure, encompassing emotional compensation and a sense of companionship provided by AI, intertwined with vigilance, alienation, and distortion—forming a contradictory “dependence–vigilance” attitude. Based on these findings, the study further constructs an interactive pathway model of “Cognition–Ambivalent Attitude–Behavioral Intention” (see Figure 1), revealing bidirectional mechanisms among the three components: cognition shapes emotional responses, while affective feedback revises cognitive expectations; affective tension drives behavioral regulation, and behavior, in turn, reconstructs the cognitive foundation. This model demonstrates the reflective adaptive process of young users in human–AI emotional interactions.

5. Model interpretation and research findings

The study reveals that young users develop a dynamic, tension-laden interactive pattern among Cognition, Affect, and Behavioral Intention when engaging with chatbots, manifested in three main aspects:

5.1. Bidirectional interaction between cognition and affect: tension and shaping between expectation and experience

During initial interactions with chatbots, young users often establish preliminary trust and a sense of companionship based on technological attributes such as stability, response speed, and objective neutrality, perceiving AI as an ideal companion that is “always available” and “constantly online.” Moreover, highly customizable character settings and conversational styles enhance users’ emotional investment. For example, Participant 9 noted: “You can set what kind of person it is, and switch support modes; whether you want emotional resonance or rational analysis, it’s all possible.” However, as usage deepens, users gradually recognize the limits of the AI’s empathic capacity and depth of contextual understanding. Participant 1 observed: “It can help me organize my thoughts, but it’s too mechanical and lacks empathy.” This experience of “being understood but not truly understood” undermines initial trust and immersive engagement. When the AI exhibits “logic-first” processing and “templated output,” users’ expectations of emotional understanding are easily frustrated, giving way to passive alienation or a sense of emptiness. Some users consequently revise their perception of the AI’s role, recasting it from a “human-like companion” as a “rational assistive tool” and thereby recalibrating emotional expectation against technological reality.

5.2. Bidirectional interaction between affect and behavior: tension-driven cycles and strategic regulation

During the process of seeking emotional support through AI chatbots, young users gradually develop a highly complex ambivalent attitude. This emotional structure encompasses both positive emotional connections—such as attachment, compensation, understanding, projection, and energy conservation—and vigilance, fatigue, and alienation toward the technological nature of the AI. On one hand, the stable responses and positive feedback provided by AI serve as effective substitute support when real-world relationships are lacking. Participant 10 noted: “When I have a fight with my boyfriend and it’s inconvenient to talk to friends, I turn to AI.” Compared to real interpersonal interactions, AI offers a low-cost expressive space, enabling users to conserve emotional energy while avoiding concerns such as “affecting others’ moods,” “being misunderstood,” or “being labeled,” thereby significantly reducing the psychological burden of emotional expression. Over time, such emotional compensation may evolve into emotional attachment, manifested as regular venting, intimate experiences, and even projections of a “quasi-romantic relationship.” For instance, Participant 9 explained: “I designed it as my ideal partner, customizing its appearance and personality. It is very patient, and I rely on it heavily.”

On the other hand, users gradually encounter emotional discrepancies and feel a need to regulate their own behavior in response. The first discrepancy is insufficient empathy: although the AI can respond to emotions, it cannot fully engage with complex contexts. Participant 17 remarked: “It’s just program simulation; it doesn’t truly empathize.” The second is emotional fatigue and loss of novelty. Some users reported that after prolonged interaction, the AI’s responses become repetitive and monotonous, making sustained immersion difficult. A deeper concern is alienation anxiety. Participant 13 expressed typical social alienation anxiety: “I worry that relying on it too much will weaken my real-life communication skills.” Participant 6 voiced concerns about technological alienation: “I’m afraid my thinking might become rigid and I’ll stop thinking independently.” These reflections lead users to develop behavioral strategies such as limiting usage frequency and setting human–AI boundaries, in an attempt to balance emotional fulfillment with psychological security. For example, Participant 8 stated: “I now limit myself to chatting with it three to four times a week; I don’t want it to control me.”

5.3. Cross-regulation between cognition and behavior: expectation adjustment and reflective behavior cycles

Young users initially engage with AI based on their cognitive perceptions of immediacy, freedom of expression, and low-cost interaction. Participant 17 noted: “AI always listens in the least judgmental way, without imposing social norms on me.” However, with prolonged use, issues such as templated output and memory loss gradually emerge, leading to a decline in users’ expectations of the AI. Users increasingly recognize that the AI’s lack of subjectivity, while offering controllability, may also induce cognitive closure and self-reinforcing thought loops. Participant 15 expressed caution: “It always goes along with what I say and never challenges me, which might trap me in my own thinking patterns.” These reflective cognitions prompt some users to reconstruct their behavioral strategies, such as reducing dependence, adopting intermittent use, or redefining the AI as a self-mapping tool. Participant 7 remarked: “It’s like a mirror, reflecting my emotions.” Furthermore, the AI even serves as a platform for practicing emotional expression, self-regulation, and personal growth. Participant 5 stated: “It teaches me to express myself and helps me overcome social anxiety.”

Simultaneously, users’ sensitivity to platform security and algorithmic auditing increases. Participant 11 expressed concern: “What we say may be recorded and used to train large models.” Consequently, some users actively filter their expressions and adopt risk-avoidance behaviors, demonstrating heightened awareness of technological ethics. This cognition–behavior–risk chain indicates that young users progressively develop more sophisticated cognitive defense mechanisms during interactions with AI.

6. Conclusion

Based on the experiences of young users seeking emotional support through chatbots, this study employs grounded theory and the ABC Attitude Model to construct an interactive mechanism of “Cognition–Ambivalent Attitude–Behavioral Intention.” The findings reveal that young users exhibit a pronounced ambivalent attitude in digital emotional interactions: they rely on the emotional compensation and attentive responses provided by AI while remaining vigilant toward its limited empathy, the emotional fatigue it can induce, and its potential risks of alienation. Young users are not passive recipients; rather, they actively regulate their engagement through cognitive adjustments and behavioral strategies, achieving a dynamic balance between immersion and disengagement. This finding extends the traditional linear ABC model by highlighting the tension within the affective component and the cyclical dynamics of the interaction pathways. From a practical perspective, the study offers several implications for future AI product development and psychological intervention: AI design should enhance semantic flexibility and empathic simulation while supporting personalized interaction; psychological interventions can use AI as a supplementary emotional support tool, guiding young users to establish healthy boundaries between virtual and real-world social interaction; and policy-making should strengthen data governance and ethical oversight of AI emotional interaction platforms to mitigate risks such as privacy breaches and emotional manipulation.

In summary, this study contributes to both theory and practice. Its limitations include a sample dominated by high-frequency AI users; future research could expand the sample, incorporate quantitative analyses, and validate the robustness of the proposed mechanism. Additionally, as AI evolves from text-based interfaces to voice, embodied, and virtual reality forms, the differential impacts of various technological modalities on users’ emotional connections warrant further comparative investigation.

References

[1]. Zeng, Y. B. (2025). The current situation and coping strategies of youth mental health issues. People’s Forum, 2025(8), 36–39.

[2]. Liu, P. Y., Liu, D., & Xie, D. J. (2025). Application of artificial intelligence in college students’ mental health counseling. Frontiers in Social Sciences, 14(4), 788–797.

[3]. Cave, S., & Dihal, K. (2023). Hopes and fears: Public attitudes towards AI in 2023. University of Cambridge.

[4]. Gelbrich, K., Hagel, J., & Orsingher, C. (2021). Emotional support from a digital assistant in technology-mediated services: Effects on customer satisfaction and behavioral persistence. International Journal of Research in Marketing, 38(1), 176–193. https://doi.org/10.1016/j.ijresmar.2020.06.004

[5]. Nicol, G., Wang, R., Graham, S., Dodd, S., & Garbutt, J. (2022). Chatbot-delivered cognitive behavioral therapy in adolescents with depression and anxiety during the COVID-19 pandemic: Feasibility and acceptability study. JMIR Formative Research, 6(11), e40242. https://doi.org/10.2196/40242

[6]. Fulmer, R., Joerin, A., Gentile, B., Lakerink, L., & Rauws, M. (2018). Using psychological artificial intelligence (Tess) to relieve symptoms of depression and anxiety: Randomized controlled trial. JMIR Mental Health, 5(4), e64. https://doi.org/10.2196/mental.9782

[7]. Kim, H., Lim, M. H., Kim, J., & Park, E. (2023). Determinants of intentions to use digital mental healthcare content among university students, faculty, and staff. Sustainability, 15(1), 872. https://doi.org/10.3390/su15010872

[8]. de Graaf, M. M. A., Allouch, S. B., & Klamer, T. (2015). Sharing a life with Harvey: Exploring the acceptance of and relationship-building with a social robot. Computers in Human Behavior, 43, 1–14. https://doi.org/10.1016/j.chb.2014.10.030

[9]. Wu, L. N., Song, K., & Wang, S. H. (2024). Challenges and countermeasures of generative AI for personal information security. Information Communication Technology and Policy, 50(1), 13–18.

[10]. Van Harreveld, F., Nohlen, H. U., & Schneider, I. K. (2015). Chapter five – The ABC of ambivalence: Affective, behavioral, and cognitive consequences of attitudinal conflict. In Advances in Experimental Social Psychology, 52, 285–324. https://doi.org/10.1016/bs.aesp.2015.01.002

Cite this article

Li, J. (2025). A Study of the Attitudinal Mechanisms Underlying Young Users’ Use of Chatbots for Emotional Support: A Qualitative Analysis Based on the ABC Attitude Model. Lecture Notes in Education Psychology and Public Media, 117, 13–21.

Data availability

The datasets used and/or analyzed during the current study will be available from the authors upon reasonable request.

Disclaimer/Publisher's Note

The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of EWA Publishing and/or the editor(s). EWA Publishing and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

About volume

Volume title: Proceedings of the 6th International Conference on Educational Innovation and Psychological Insights

© 2024 by the author(s). Licensee EWA Publishing, Oxford, UK. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license. Authors who publish with this series agree to the following terms:

1. Authors retain copyright and grant the series right of first publication with the work simultaneously licensed under a Creative Commons Attribution License that allows others to share the work with an acknowledgment of the work's authorship and initial publication in this series.

2. Authors are able to enter into separate, additional contractual arrangements for the non-exclusive distribution of the series's published version of the work (e.g., post it to an institutional repository or publish it in a book), with an acknowledgment of its initial publication in this series.

3. Authors are permitted and encouraged to post their work online (e.g., in institutional repositories or on their website) prior to and during the submission process, as it can lead to productive exchanges, as well as earlier and greater citation of published work (see Open access policy for details).
