1. Introduction

Object detection identifies and locates objects of interest in images, determining both their categories and their positions. It is one of the core problems in computer vision, centered on answering "what" and "where" [1]. Variations in object appearance, shape, and pose, together with interference from lighting and occlusion, keep the task highly challenging. Its key components include feature extraction, classifier design, region proposal, and non-maximum suppression. The feature extraction capability of convolutional neural networks (CNNs) makes it possible to process complex visual information, which has made deep-learning-based detection a current research hotspot.

Trends in object detection include lightweight models, multimodal fusion, weakly supervised or unsupervised learning, and the growing application of 3D object detection. Lightweight models reduce computational and memory demands for mobile and embedded devices. Multimodal fusion combines image, text, and audio data to improve accuracy and robustness. Weakly supervised learning reduces annotation costs, while unsupervised learning explores learning without labels. 3D object detection extends the task to spatial positioning and pose estimation. Open challenges include detecting objects in complex backgrounds, oriented objects, and small objects [2].
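Non-maximum suppression, named above as a key component of detection, can be illustrated with a minimal greedy sketch. The corner-format boxes, the `iou` and `nms` function names, and the 0.5 threshold are assumptions made for this example and are not taken from any specific detector.

```python
from typing import List, Tuple

# Assumed box format for this sketch: (x1, y1, x2, y2) corner coordinates.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: List[Box], scores: List[float], iou_threshold: float = 0.5) -> List[int]:
    """Greedy NMS: visit boxes by descending score and keep a box only if it
    does not overlap an already kept box by more than iou_threshold."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep
```

Given two heavily overlapping boxes and one distant box, this sketch keeps the higher-scoring box of the overlapping pair together with the distant box. Production detectors usually apply the same idea per class and in vectorized form.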

Data processing involves cleaning, transforming, fusing, and analyzing raw data collected by vehicle sensors to support environmental perception, route planning, and vehicle control. It underpins the safety and reliability of autonomous driving systems in diverse environments. Classic datasets such as KITTI provide sensor configurations and classifications that facilitate dataset construction. Trends include the dominance of deep learning, BEV perception, end-to-end autonomous driving, multimodal fusion (e.g., lidar-camera fusion), self-supervised learning to reduce annotation dependency, and vehicle-to-everything (V2X) data integration. Positioning systems combine satellite, differential, inertial, and map-assisted methods. Simulation platforms are used for issue identification and scenario replication. Motion sensors include GNSS, IMU, and speed sensors. To promote the development of autonomous driving technology, this paper systematically reviews the technologies and applications of data processing in the field and, drawing on existing work, points out current problems and future development directions [3]. Lidar point cloud technology, a critical component of perception, has seen significant breakthroughs [4].

Navigation technology in autonomous driving enables vehicles to reach destinations safely and efficiently in dynamic environments through environmental perception, high-precision maps, path planning, and control decision-making. Satellite navigation provides high-precision positioning, navigation, and timing services [5]. Core tasks include real-time positioning, global and local path planning, and motion control to ensure safety, comfort, and efficiency. Trajectory prediction analyzes dynamic interactions between traffic participants and their environment to inform driving decisions and avoid conflicts [6]. Environmental modeling abstracts physical spaces for algorithmic processing, while path search and smoothing ensure feasible routes. Future challenges include data collection and annotation, algorithm optimization, legal and ethical considerations, and safety and reliability.
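The path search step mentioned above can be sketched with A* on an occupancy grid, one common choice for global planning. The grid encoding (0 for free, 1 for obstacle), 4-connected unit-cost moves, and the `astar` function name are assumptions of this illustration, not a method described in the cited work.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]  # (row, col)


def astar(grid: List[List[int]], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Shortest 4-connected path on a grid where 0 = free and 1 = obstacle.
    Returns the list of cells from start to goal, or None if unreachable."""
    rows, cols = len(grid), len(grid[0])

    def h(c: Cell) -> int:
        # Manhattan distance: admissible for 4-connected unit-cost moves.
        return abs(c[0] - goal[0]) + abs(c[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries are (f = g + h, g, cell)
    came_from: Dict[Cell, Cell] = {}
    best_cost: Dict[Cell, int] = {start: 0}
    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cell == goal:
            # Walk the parent links back to the start.
            path = [cell]
            while cell in came_from:
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if cost > best_cost.get(cell, float("inf")):
            continue  # stale heap entry superseded by a cheaper one
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nxt = (cell[0] + dr, cell[1] + dc)
            if not (0 <= nxt[0] < rows and 0 <= nxt[1] < cols):
                continue
            if grid[nxt[0]][nxt[1]] == 1:
                continue
            new_cost = cost + 1
            if new_cost < best_cost.get(nxt, float("inf")):
                best_cost[nxt] = new_cost
                came_from[nxt] = cell
                heapq.heappush(open_heap, (new_cost + h(nxt), new_cost, nxt))
    return None
```

The returned cell sequence is a piecewise-straight route; a smoothing stage, as the text notes, would then turn it into a trajectory the vehicle can follow.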

Autonomous driving refers to a vehicle's ability to operate without human intervention; a robotic vacuum cleaner is a simple everyday example of such autonomous operation. Breakthroughs in intelligent connected vehicles rely on AI models with superior perception, cognition, and decision-making capabilities, supported by advances in assisted driving, smart cabins, and high-performance chips [7]. Multisensor fusion integrates multimodal data (e.g., images and depth sensors) for enhanced performance [8]. Approaches to autonomous driving include single-vehicle intelligence, vehicle-road collaboration, and cloud control. High-precision maps provide lane-level prior information, improving localization and scene understanding. Predictive modules forecast obstacle trajectories, enhancing safety and intelligence.
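As a rough illustration of what a predictive module produces, the sketch below extrapolates an obstacle's position under a constant-velocity assumption. This is the simplest possible baseline and not the interaction-aware prediction discussed in the literature; the function name and parameters are hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) in meters


def predict_constant_velocity(
    position: Point, velocity: Point, horizon_s: float, dt: float
) -> List[Point]:
    """Future positions at dt, 2*dt, ... up to horizon_s, assuming the
    obstacle keeps its current velocity (m/s) unchanged."""
    steps = int(round(horizon_s / dt))
    return [
        (position[0] + velocity[0] * dt * k, position[1] + velocity[1] * dt * k)
        for k in range(1, steps + 1)
    ]
```

A planner could check such predicted points against the ego vehicle's planned path to flag potential conflicts, with learned predictors replacing the constant-velocity assumption where interactions matter.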

2. Applications of AI in autonomous driving

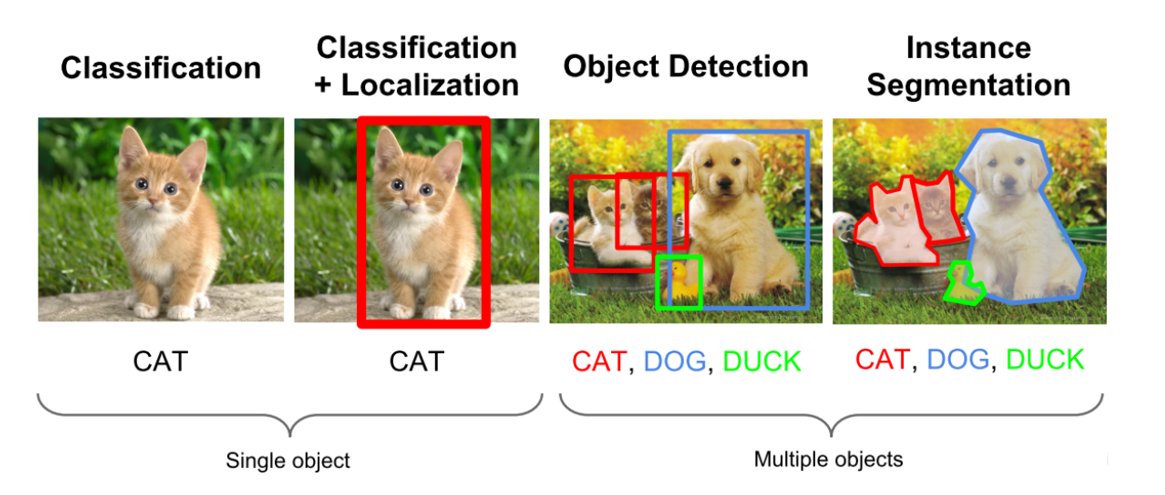

As shown in Figure 1, object detection identifies and locates objects in images, addressing "what" and "where." Challenges arise from object diversity and imaging conditions. Computer vision tasks include classification (identifying objects), localization (determining positions), detection (combining both), and segmentation (pixel-level labeling).

| Detection Method | Speed | Accuracy | Generalization | Anti-Interference Ability |
| --- | --- | --- | --- | --- |
| Object Detection | Data-dependent | High (robust) | Adaptive | Strong |
| Human Eye | Fast | Low (affected by noise) | Poor | Weak |

Table 1 compares object detection methods with human eye recognition. Modern object detection is significantly better than the human eye in accuracy, generalization, and anti-interference ability, but its speed is constrained by the complexity of the data. Human eye recognition is fast, but it is susceptible to external interference and adapts poorly.

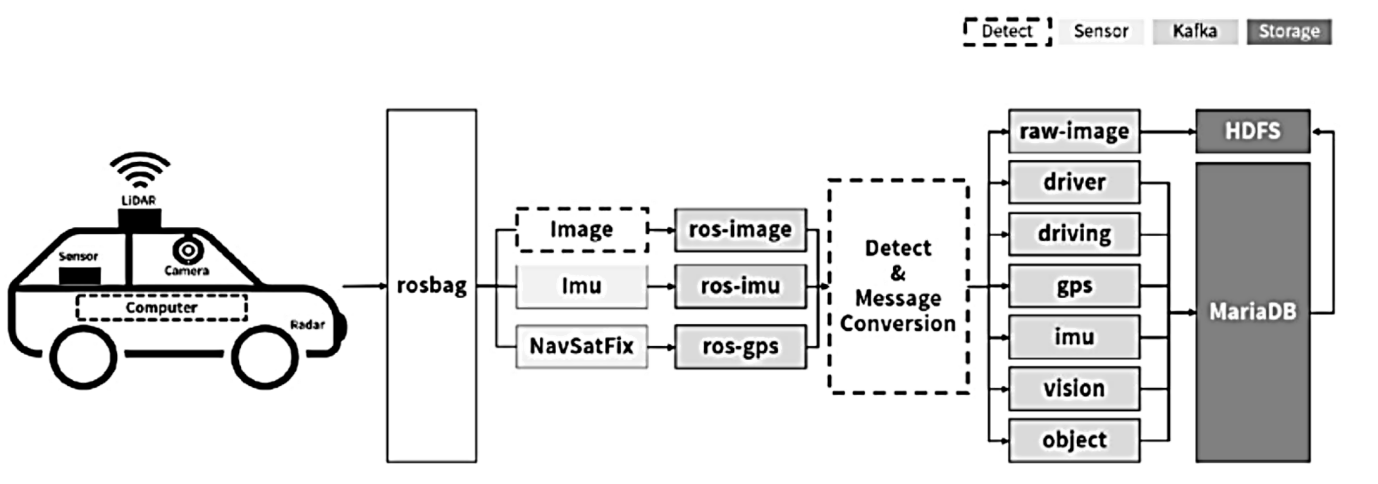

Autonomous driving integrates data from multiple sources (e.g., lidar, cameras, INS, GPS) into a unified warehouse for analysis and modeling. As shown in Figure 2, traditional vehicle data processing relies on single sensors and structured data for fixed scenarios, while autonomous driving requires multimodal sensors for unstructured data (images, point clouds) in dynamic environments.

As Table 2 shows, traditional in-vehicle data processing is built on a single sensor and structured data (numerical values or status codes), which is relatively easy to implement for fixed scenarios. Data processing for autonomous driving, by contrast, must combine multimodal sensors and handle unstructured data (images, point clouds) in order to cope with dynamic and complex environments such as urban roads and harsh conditions.

| Processing System | Data Source | Data Type | Dynamic Updates | Applicable Scenarios |
| --- | --- | --- | --- | --- |
| Traditional GPS | Meter-level | No real-time perception | Offline maps | Structured roads |
| Autonomous Driving | Centimeter-level | Multisensor fusion | Real-time crowd updates | Complex, dynamic environments |
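Before multimodal data such as camera images and lidar point clouds can be fused, the streams usually have to be aligned in time. The sketch below pairs each camera frame with the nearest lidar sweep by timestamp. The function name, the sorted-timestamp assumption, and the 50 ms tolerance are illustrative choices rather than values from the source.

```python
from bisect import bisect_left
from typing import List, Optional, Tuple


def match_nearest(
    camera_ts: List[float], lidar_ts: List[float], max_gap: float = 0.05
) -> List[Tuple[int, Optional[int]]]:
    """Pair each camera frame index with the index of the closest lidar sweep.

    Both lists are assumed sorted and expressed in seconds. A camera frame
    with no lidar sweep within max_gap seconds is paired with None.
    """
    pairs: List[Tuple[int, Optional[int]]] = []
    for i, t in enumerate(camera_ts):
        pos = bisect_left(lidar_ts, t)
        # Only the neighbors on either side of the insertion point can be nearest.
        candidates = [j for j in (pos - 1, pos) if 0 <= j < len(lidar_ts)]
        best = min(candidates, key=lambda j: abs(lidar_ts[j] - t), default=None)
        if best is not None and abs(lidar_ts[best] - t) > max_gap:
            best = None
        pairs.append((i, best))
    return pairs
```

Frames left unpaired can be dropped or interpolated, depending on how much latency the downstream fusion step tolerates.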

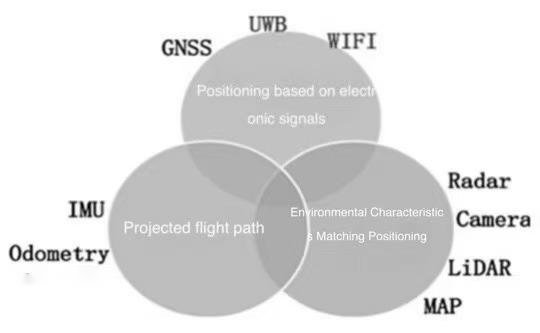

Navigation, as one of the core technologies in autonomous driving, primarily functions to accurately determine a vehicle's position and orientation within a specific coordinate system. Key factors in evaluating navigation performance include precision, robustness, and adaptability to diverse scenarios. For autonomous vehicles to operate reliably under all driving conditions, precise pose information is essential to ensure safety. Currently, mainstream positioning technologies can be categorized into three types (see Figure 3):

1. Radio Signal-Based Positioning: Technologies such as GNSS (Global Navigation Satellite System), UWB (Ultra-Wideband), Wi-Fi, and cellular network positioning (e.g., cell phone).

2. Dead Reckoning-Based Techniques: Including IMU (Inertial Measurement Unit), odometry, wheel speed sensors, and similar methods.

3. Environmental Feature Matching: Techniques such as visual positioning, lidar-based positioning, and multi-sensor fusion positioning.

Each of these technologies has its own strengths, providing reliable pose data to support autonomous vehicles in various navigation scenarios.
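Of the three categories, dead reckoning is the easiest to show in a few lines. The sketch below integrates speed and yaw rate into a planar pose with a simple Euler step. The planar model, the function names, and the fixed time step are assumptions made for illustration.

```python
import math
from typing import Iterable, Tuple

Pose = Tuple[float, float, float]  # (x [m], y [m], heading [rad])


def dead_reckon(pose: Pose, speed: float, yaw_rate: float, dt: float) -> Pose:
    """Advance a planar pose by one time step using speed (m/s) and yaw rate (rad/s)."""
    x, y, theta = pose
    x += speed * math.cos(theta) * dt
    y += speed * math.sin(theta) * dt
    theta += yaw_rate * dt
    return (x, y, theta)


def integrate(pose: Pose, samples: Iterable[Tuple[float, float]], dt: float) -> Pose:
    """Apply dead_reckon over a sequence of (speed, yaw_rate) samples."""
    for speed, yaw_rate in samples:
        pose = dead_reckon(pose, speed, yaw_rate, dt)
    return pose
```

Because every step adds a little sensor error to the previous pose, the estimate drifts over time, which is one reason dead reckoning is typically combined with radio-based or feature-matching positioning.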

Table 3 compares traditional GPS navigation with autonomous driving navigation. Traditional GPS navigation offers meter-level positioning accuracy, has no real-time perception, and relies on offline maps, so it cannot handle road conditions beyond structured roads. Autonomous driving navigation can reach centimeter-level positioning accuracy, perceives the surrounding environment in real time through on-board multisensor fusion, and keeps its maps current through real-time crowdsourced updates, which lets it adapt to complex and changing environments.

| Navigation System | Positioning Accuracy | Environmental Perception | Dynamic Updates | Applicable Scenarios |
| --- | --- | --- | --- | --- |
| Traditional GPS | Meter-level | No real-time perception | Offline maps | Structured roads |
| Autonomous Driving | Centimeter-level | Multisensor fusion | Real-time crowd updates | Complex, dynamic environments |

Artificial intelligence plays a crucial decision-making role in autonomous driving. Vehicles may encounter various challenging conditions during operation, including bumps, vibrations, dust, and even high temperatures - environments where conventional computer systems cannot operate reliably for extended periods.

As shown in Table 4, the autonomous driving software system consists of four key modules:

1. Support Module: Provides fundamental services to the upper-layer software modules (see Table 5), including:

• Virtual Communication Module: Facilitates inter-module communication

• Log Management Module: Handles log recording, retrieval, and playback

• Process Monitoring Module: Oversees system operation status, alerts operators, and takes automatic corrective actions when abnormalities occur

• Interactive Debugging Module: Enables developer interaction with the autonomous system

2. Perception Module: Uses sensors to acquire external environmental data

3. Cognition Module: Employs machine learning techniques to interpret sensor information

4. Behavior Module: Ultimately initiates and controls the movement of the autonomous vehicle's chassis

This modular architecture supports reliable operation of autonomous vehicles in diverse and challenging driving conditions while maintaining system stability and decision-making capabilities.

Unmanned Driving System

| Perception Module | Cognition Module | Behavior Module |
| --- | --- | --- |
| Obstacle Recognition Module | Driving Environment Modeling Module | Lateral Control Module |
| Traffic Marking Recognition Module | Driving Behavior Planning Module | Longitudinal Control Module |
| Traffic Signal Light Recognition Module | Driving Path Planning Module | Body Electronic Control Module |
| Pose Perception Module | Driving Map Module | |
| Body Information Perception Module | Human-Computer Interaction Module | |

Support Module

| Virtual Exchange Module | Log Management Module | Process Monitoring Module | Interactive Debugging Module |
| --- | --- | --- | --- |
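The layering of the four modules can be sketched as a single perception, cognition, behavior cycle that reports to a support layer. All class names, the single range reading, the 20 m rule, and the acceleration values below are hypothetical placeholders chosen for illustration; a real system would use learned models and many sensors.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class SupportModule:
    """Stand-in for the support layer; only log recording is sketched."""
    log: List[str] = field(default_factory=list)

    def record(self, source: str, message: str) -> None:
        self.log.append(f"{source}: {message}")


@dataclass
class Percept:
    obstacle_distance_m: float  # hypothetical single-value perception output


class PerceptionModule:
    def sense(self, raw_range_m: float) -> Percept:
        # A real module would fuse several sensors; here one reading is passed through.
        return Percept(obstacle_distance_m=raw_range_m)


class CognitionModule:
    def __init__(self, safe_distance_m: float = 20.0) -> None:
        self.safe_distance_m = safe_distance_m  # assumed threshold

    def plan(self, percept: Percept) -> str:
        # A hand-written rule stands in for the machine-learning interpretation step.
        return "brake" if percept.obstacle_distance_m < self.safe_distance_m else "cruise"


class BehaviorModule:
    def act(self, decision: str) -> float:
        # Longitudinal acceleration command in m/s^2 (illustrative values).
        return -3.0 if decision == "brake" else 0.0


def step(raw_range_m: float, support: SupportModule) -> float:
    """Run one perception -> cognition -> behavior cycle and log each stage."""
    percept = PerceptionModule().sense(raw_range_m)
    support.record("perception", f"obstacle at {percept.obstacle_distance_m} m")
    decision = CognitionModule().plan(percept)
    support.record("cognition", decision)
    command = BehaviorModule().act(decision)
    support.record("behavior", f"acceleration {command} m/s^2")
    return command
```

Keeping each stage behind its own small interface is what allows the support layer to log, monitor, and replay the stages independently.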

Intelligent driving and manual driving differ, as shown in Table 6. The reaction time of manual driving is 1-2 seconds, whereas autonomous driving reacts within milliseconds. Manual driving is limited by human cognition and psychology, and factors such as fatigue and mood affect driving performance. Intelligent driving monitors all directions (360 degrees) without fatigue, which can reduce the occurrence of accidents and improve safety.

| Driving Mode | Reaction Speed | Perception Ability | Safety |
| --- | --- | --- | --- |
| Intelligent Driving | Millisecond level | Multi-sensor fusion, 360-degree monitoring with no blind spots, not affected by fatigue | Low theoretical accident rate (no drunk or fatigued driving) |
| Manual Driving | 1-2 seconds | Limited vision and hearing | 90% of driving accidents are caused by humans (e.g., distraction, speeding) |
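The practical weight of the reaction-time gap becomes clearer as distance travelled before any braking begins. The helper below is a simple worked calculation; the function name is hypothetical, and the 72 km/h speed and 10 ms machine reaction time used in the note that follows are assumed example values, not figures from the source.

```python
def reaction_distance_m(speed_kmh: float, reaction_time_s: float) -> float:
    """Distance in meters covered at constant speed during the reaction time."""
    speed_ms = speed_kmh / 3.6  # convert km/h to m/s
    return speed_ms * reaction_time_s
```

At 72 km/h (20 m/s), a 1-2 second human reaction corresponds to roughly 20-40 m travelled before braking starts, while an assumed 10 ms machine reaction corresponds to about 0.2 m.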

3. Conclusion

Modern object detection surpasses human vision in accuracy, generalization, and anti-interference ability, but its speed is constrained by data complexity. Traditional vehicle systems suit fixed scenarios, while autonomous driving uses multimodal sensors to handle unstructured data in complex environments. Traditional GPS offers meter-level accuracy without real-time perception, whereas autonomous navigation achieves centimeter-level precision with dynamic updates. Human drivers react slowly (1–2 seconds) and are prone to errors, while autonomous vehicles react within milliseconds, monitor all 360° around the vehicle, and do not tire. Despite this progress, challenges remain in applying AI to autonomous driving, and continued technological development is essential.

References

[1]. Li C, Zhang Z, Liang Z, Yao C, Zhang J, Yan R & Wu P. (2024). A Review of Object Detection Models. Computer Research and Development, 62, 1-35.

[2]. Wang Y. (2025). Review of Object Detection Algorithms Based on Deep Learning. Science and Technology Information, 23(02), 64-66. doi: 10.16661/j.cnki.1672-3791.2407-5042-8334.

[3]. Cai Z. (2025). Research Progress and Development Direction of Bridge Health Monitoring Data Processing and Analysis Technology. The Bridge in the World, 53(3), 102-110. doi: 10.20052/j.issn.1671-7767.2025.03.015.

[4]. Wang Y, Bian L, Yang T, Zhang Z, Du E, Xu Q & Zhang L. (2025). A Review of the Development of Satellite Navigation Technology. Space Electronics Technology, 22(S1), 20-34.

[5]. Wang X. (2025). Research on Group Financial Decision Support System Based on Big Data Analysis. Metallurgical Finance and Accounting, 44(02), 41-43.

[6]. Liu X, Chen H, Yu D, Chen Y & Zhou Y. (2025). A Review of Trajectory Prediction for Long and Short Duration Characteristics of Autonomous Vehicles. Journal of Frontiers of Computer Science and Technology, 1-23.

[7]. Liu M. (2025). Research on Path Planning Algorithm for Autonomous Vehicles Based on Deep Learning. Automobile Knowledge, 25(06), 152-154.

[8]. Liu Z, Zhang H & Li Y. (2025). A Review of Integrated Perception and Decision-making for Autonomous Driving. Journal of Intelligent Science and Technology, 7(01), 4-20.

Cite this article

Zeng, Z. (2025). Application of artificial intelligence in the field of autonomous driving. Advances in Engineering Innovation, 16(8), 23-27.

Data availability

The datasets used and/or analyzed during the current study will be available from the authors upon reasonable request.

About volume

Journal: Advances in Engineering Innovation

© 2024 by the author(s). Licensee EWA Publishing, Oxford, UK. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.
