1. Introduction

In recent years, the spread of new energy vehicles has helped bring autonomous driving into public life. Field programmable gate arrays (FPGAs) are widely used to implement autonomous driving functions because of their distinctive technical advantages. Introduced in the mid-1980s, FPGAs were initially adopted for their reprogrammability and parallel processing capabilities. As autonomous driving grew and began to demand high-speed data processing and real-time responses, FPGA adoption surged [1]. FPGAs now play an important role in real-time data analytics, deep learning inference, and secure communications, all of which are central to advancing autonomous driving. FPGAs are therefore a cornerstone technology that closely matches the needs of the autonomous driving ecosystem and helps advance its potential.

Liu et al. [2] use many cases and techniques to explain the real-time processing capabilities, parallel computing properties, and high customization potential of FPGAs in autonomous driving. These features substantially improve the robustness and efficiency of intelligent driving systems. Parallel computing on an FPGA speeds up many processing tasks, which is a prerequisite for vehicles that carry a large number of sensors.

By studying FPGA technology in depth and clarifying its salient features, this review aims to deepen understanding of the important role of FPGAs in intelligent driving and to provide a knowledge base for automotive industry staff, journalists, and enthusiasts. It analyzes cutting-edge developments in FPGA-based autonomous driving systems to explore innovative solutions for efficient, safe, and sophisticated autonomous driving in the future [3].

2. The role of FPGA in autonomous driving

2.1. FPGA technology and characteristics

2.1.1. Theoretical basis definition

Autonomous driving lies at the intersection of many advanced technologies, from deep learning to real-time sensor fusion. Field-programmable gate arrays (FPGAs) have emerged as a pivotal technology in this landscape, driving progress through their real-time processing, parallel computing, and customization features [4]. This section outlines the key characteristics and roles of FPGAs in autonomous driving. These characteristics are shown in Figure 1.

Figure 1. FPGA automatic driving characteristics [4].

The introduction of autonomous vehicles requires a complete shift in the computing paradigm, with computing demands skyrocketing due to the need to process and analyze data streams from multiple sensors in real time. FPGAs, with their tailor-made architectural flexibility and computational capabilities, have proven to be indispensable for meeting these computing needs. Understanding the role and characteristics of FPGAs is the cornerstone of mastering the complexities of autonomous driving technology.

2.1.2. Real-time Processing

In the realm of autonomous vehicles, decisions must be made in fractions of a second to ensure safety and efficacy. FPGAs facilitate real-time processing through their configurable logic blocks and interconnected arrays, allowing for the swift and simultaneous handling of multiple data streams. This attribute is crucial in autonomous driving where sensor data, encompassing LiDAR, radar, and camera inputs, must be processed concurrently to make instantaneous driving decisions [4].

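Moreover, the low latency of FPGAs strengthens real-time processing. Because sensor data flows through dedicated hardware datapaths rather than waiting on instruction scheduling in a general-purpose processor, each input can be processed with a short and predictable delay, which is vital for autonomous vehicles that rely on near-instantaneous decisions [5].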
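To put the latency argument in concrete terms, the short sketch below computes how far a vehicle travels while a single result is still being computed. The 100 km/h speed and the latency values are illustrative assumptions chosen for the arithmetic, not measurements from the cited works.

```python
def distance_during_latency(speed_kmh: float, latency_ms: float) -> float:
    """Metres travelled by the vehicle while one processing result is pending."""
    speed_mps = speed_kmh / 3.6              # convert km/h to m/s
    return speed_mps * latency_ms / 1000.0   # convert ms to s


if __name__ == "__main__":
    # Hypothetical end-to-end latencies, for comparison only.
    for latency in (10.0, 50.0, 100.0):
        d = distance_during_latency(100.0, latency)
        print(f"100 km/h with {latency:.0f} ms latency: {d:.2f} m travelled")
```

At 100 km/h (about 27.8 m/s), every 10 ms of latency corresponds to roughly 0.28 m of travel before the system can react, which is why short, predictable latency matters as much as raw throughput.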

2.1.3. Parallel Computing

Central to the FPGA's usefulness in autonomous driving is its parallel computing capability. Whereas a conventional processor core executes instructions largely in sequence, an FPGA can implement many independent hardware datapaths that process multiple data streams at the same time, significantly reducing the time required for complex computations.

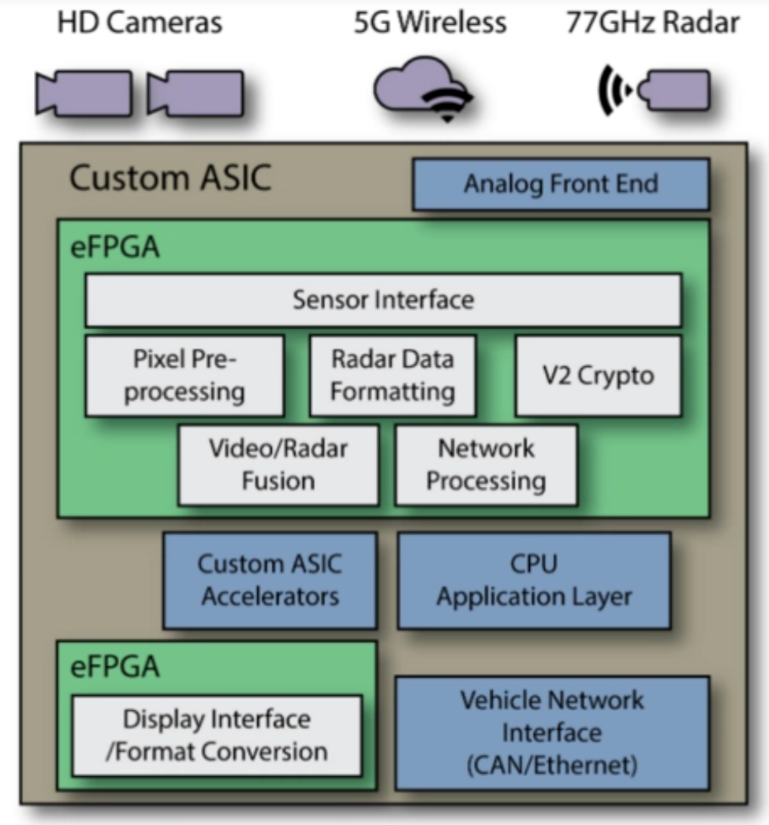

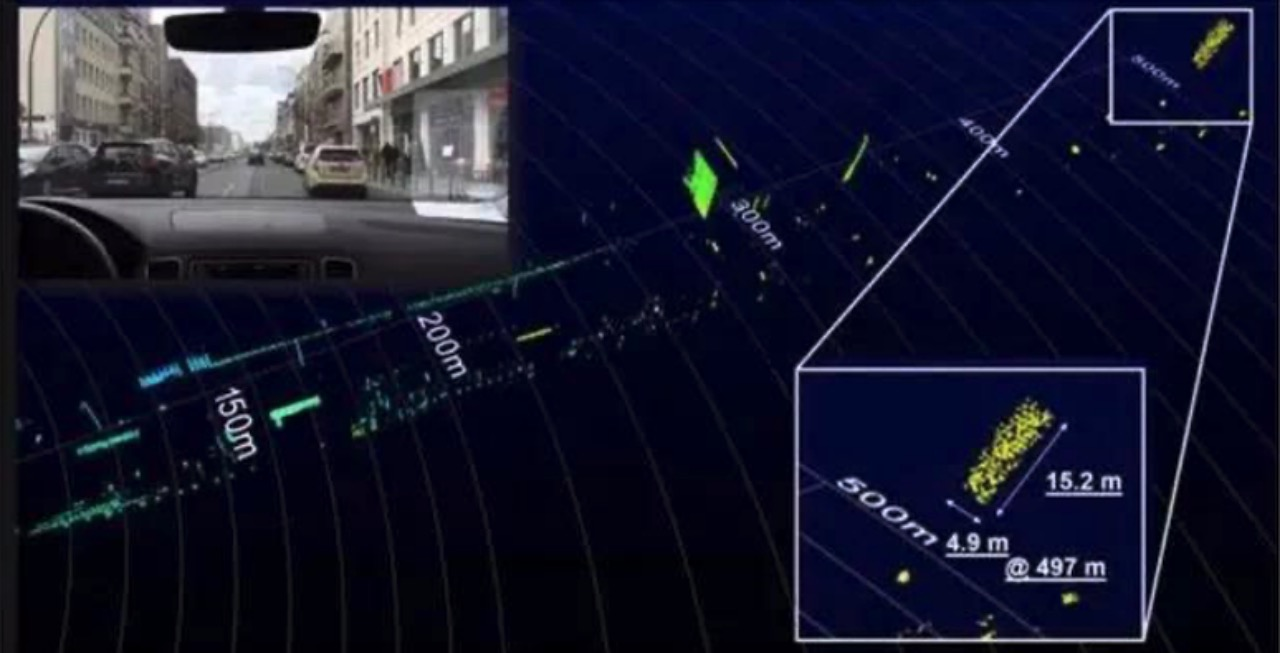

In autonomous vehicles, this translates into faster and more efficient data processing: subsystems such as sensor input handling, control algorithms, and communication protocols can run simultaneously, supporting a seamless driving experience [6]. Real-time processing in an FPGA-based autonomous driving system is shown in Figure 2.

Figure 2. FPGA autonomous driving real-time processing [6].
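The benefit of hardware parallelism described above can be illustrated with a simple timing model of a pipelined datapath. The sketch assumes a hypothetical three-stage frame pipeline (for example capture, feature extraction, decision) with made-up per-stage latencies, and compares running every stage of every frame on one unit against giving each stage its own hardware so that different frames are processed at the same time.

```python
from typing import Sequence


def sequential_time(stage_ms: Sequence[float], n_frames: int) -> float:
    """One processing unit runs every stage of every frame back to back."""
    return n_frames * sum(stage_ms)


def pipelined_time(stage_ms: Sequence[float], n_frames: int) -> float:
    """Each stage has dedicated hardware, decoupled from its neighbours by buffers.

    The first frame pays the full pipeline latency (sum of stages); after that,
    one frame completes every max(stage_ms), i.e. at the rate of the slowest stage.
    """
    if n_frames == 0:
        return 0.0
    return sum(stage_ms) + (n_frames - 1) * max(stage_ms)


if __name__ == "__main__":
    stages = [2.0, 5.0, 3.0]  # hypothetical milliseconds per stage
    frames = 100
    print("sequential:", sequential_time(stages, frames), "ms")
    print("pipelined: ", pipelined_time(stages, frames), "ms")
```

The model ignores clocking overhead and buffer limits; it only shows why pipeline throughput is set by the slowest stage rather than by the sum of all stages.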

2.1.4. Applications in Autonomous Driving

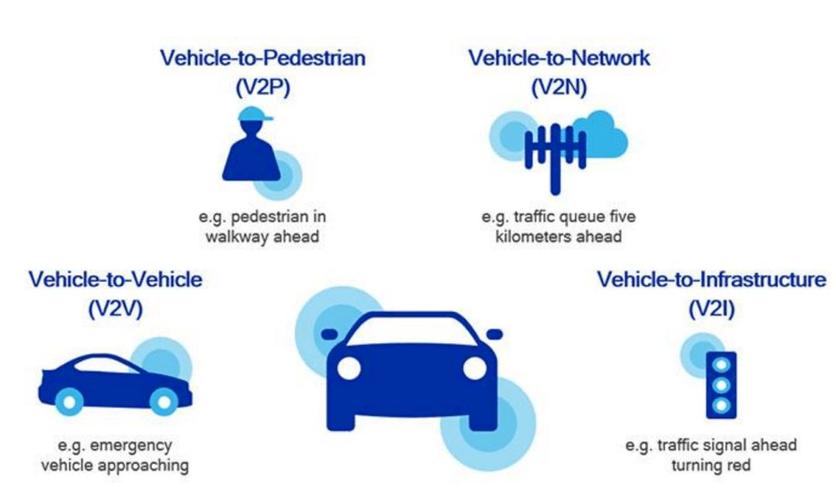

FPGAs are widely used in autonomous driving, mainly to improve the efficiency and safety of the driving system. Their advantages are most visible in sensor fusion within the vehicle's autonomous driving module: an FPGA can receive and coordinate data from multiple sensors to build the vehicle's “vision” of its surroundings, the core process behind safe and efficient autonomous driving [7]. FPGAs also accelerate the deep learning inference used to recognize objects and to plan vehicle paths that avoid obstacles while navigating smoothly. In addition, FPGAs are an important part of vehicle-to-everything (V2X) communication, as shown in Figure 3.

Figure 3. FPGA autonomous driving V2X [7].

The V2X system enables safe and fast communication between a vehicle and external elements such as other vehicles or infrastructure, and it plays a decisive role in the safety and navigation capabilities of autonomous vehicles [8]. FPGAs allow vehicles to communicate not only with one another but also with the wider transportation ecosystem, supporting a seamless and safe driving experience.
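“Safe” V2X communication means, at minimum, that a receiver can reject forged, altered, or replayed messages. Deployed V2X security (IEEE 1609.2) uses certificate-based ECDSA signatures; to stay within Python's standard library, the sketch below substitutes a shared-key HMAC plus a timestamp freshness check. The message fields, key, and 100 ms window are hypothetical and do not follow the format of any real V2X standard.

```python
import hashlib
import hmac
import json

# Hypothetical pre-shared key for illustration only; real V2X uses
# certificate-based public-key signatures rather than a shared secret.
SHARED_KEY = b"demo-key-not-for-production"
MAX_AGE_MS = 100  # hypothetical freshness window in milliseconds


def _canonical(payload: dict) -> bytes:
    """Deterministic JSON encoding so sender and receiver hash identical bytes."""
    return json.dumps(payload, sort_keys=True, separators=(",", ":")).encode()


def sign_message(payload: dict, key: bytes = SHARED_KEY) -> dict:
    """Attach an HMAC-SHA256 tag computed over the canonical payload."""
    tag = hmac.new(key, _canonical(payload), hashlib.sha256).hexdigest()
    return {"payload": payload, "tag": tag}


def verify_message(msg: dict, now_ms: int, key: bytes = SHARED_KEY) -> bool:
    """Accept only messages with a valid tag and a recent timestamp."""
    expected = hmac.new(key, _canonical(msg["payload"]), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, msg["tag"]):
        return False  # tampered or forged
    age = now_ms - msg["payload"]["timestamp_ms"]
    return 0 <= age <= MAX_AGE_MS  # reject stale (replayed) or future-dated messages


if __name__ == "__main__":
    msg = sign_message({"vehicle_id": "car-42", "speed_mps": 13.9, "timestamp_ms": 1000})
    print(verify_message(msg, now_ms=1050))
```

`hmac.compare_digest` is used instead of `==` so that the comparison time does not leak how many characters of a forged tag were correct.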

2.2. Composition of FPGA-based autonomous driving system

2.2.1. Sensor

In FPGA-based autonomous driving systems, sensors are the primary means of data acquisition, perceiving the environment and enabling real-time decision-making. These systems use many sensors, including LiDAR (Light Detection and Ranging), RADAR (Radio Detection and Ranging), ultrasonic sensors, and cameras, to build a comprehensive picture of the vehicle's surroundings. A robust sensor framework ensures detailed environmental data acquisition, and sensor fusion reconciles inconsistencies between sensors to create a unified environment model [9]. Using the parallel processing capabilities of FPGAs, these data streams can be processed simultaneously, improving the real-time responsiveness of autonomous vehicles. The sensors of an FPGA-based autonomous driving system are shown in Figure 4.

Figure 4. FPGA automatic driving sensor [9].

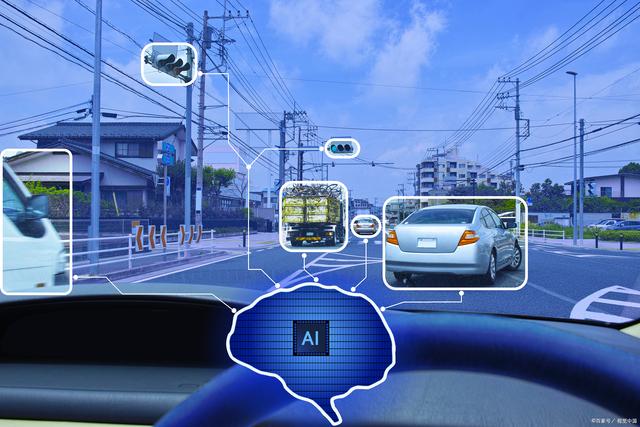
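Sensor fusion can take many forms, from Kalman filters to learned fusion networks. As a minimal illustration of reconciling inconsistent readings, the sketch below combines independent estimates of the same quantity (for instance the range to one object reported by LiDAR, radar, and a camera) by inverse-variance weighting, which is the static special case of a Kalman measurement update. The sensor values and variances are hypothetical.

```python
from typing import List, Tuple


def fuse_estimates(measurements: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Fuse independent (value, variance) estimates by inverse-variance weighting.

    More precise sensors (smaller variance) get proportionally more weight.
    Returns (fused_value, fused_variance).
    """
    if not measurements:
        raise ValueError("need at least one measurement")
    if any(var <= 0.0 for _, var in measurements):
        raise ValueError("variances must be positive")
    weights = [1.0 / var for _, var in measurements]
    total = sum(weights)
    fused = sum(w * val for w, (val, _) in zip(weights, measurements)) / total
    return fused, 1.0 / total


if __name__ == "__main__":
    # Hypothetical range readings (metres) and variances for one object.
    readings = [(20.0, 0.01),   # LiDAR: precise range
                (20.5, 0.25),   # radar
                (19.0, 1.0)]    # monocular camera: coarse range
    print(fuse_estimates(readings))
```

The fused variance is always smaller than that of the best single sensor, which is one formal sense in which fusion yields a more reliable environment model.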

2.2.2. Image recognition algorithm

Image recognition is a linchpin of autonomous driving systems, providing the ability to detect and classify objects around the vehicle. In FPGA-based systems, image recognition algorithms run efficiently because of the inherent parallel computing capabilities of FPGAs. These algorithms, often based on deep neural networks, analyze image data from onboard cameras and LiDAR systems [10]. Using techniques such as convolutional neural networks (CNNs), the system can identify pedestrians, other vehicles, and road signs, and thereby construct a dynamic map of its surroundings. Because FPGAs are reconfigurable, image recognition algorithms can be continuously optimized and adapted to emerging technologies and standards. An FPGA-based image recognition algorithm for autonomous driving is shown in Figure 5.

Figure 5. FPGA automatic driving image recognition algorithm [10].
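Much of the parallelism that FPGAs exploit in CNN-based image recognition comes from the convolution layers. The plain-Python sketch below computes a single-channel 2D convolution (no padding, stride 1) as a reference model; it is not an FPGA implementation, and the input and kernel values are arbitrary.

```python
from typing import List

Matrix = List[List[float]]


def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """Single-channel 'valid' 2D convolution as used in CNN layers.

    Like most deep learning frameworks, this is strictly a cross-correlation
    (the kernel is not flipped).
    """
    kh, kw = len(kernel), len(kernel[0])
    oh, ow = len(image) - kh + 1, len(image[0]) - kw + 1
    out = [[0.0] * ow for _ in range(oh)]
    for y in range(oh):
        for x in range(ow):
            # Each output pixel is an independent multiply-accumulate (MAC) over a
            # kh x kw window. On an FPGA these MACs can be laid out as parallel
            # hardware units instead of being executed one loop iteration at a time.
            acc = 0.0
            for i in range(kh):
                for j in range(kw):
                    acc += image[y + i][x + j] * kernel[i][j]
            out[y][x] = acc
    return out


if __name__ == "__main__":
    img = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
    diag_edge = [[1, 0], [0, -1]]  # arbitrary 2x2 difference kernel
    print(conv2d_valid(img, diag_edge))
```

Because no output pixel depends on any other, the number of MAC units an FPGA design instantiates directly trades chip area against per-frame latency.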

2.2.3. Control algorithm

Central to the autonomous driving system is the control algorithm, which steers the vehicle based on input from the sensor fusion and image recognition systems. Control algorithms are responsible for all autonomous actions executed by the vehicle, including speed regulation, lane keeping, and collision avoidance [11]. Because FPGAs are customizable and reprogrammable, control algorithms can be continuously updated and optimized to improve safety and efficiency. Furthermore, the low-latency processing of FPGAs means control signals are issued promptly, enabling timely responses to dynamic road conditions.
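A common textbook building block for speed regulation is a PID controller. The sketch below shows a discrete PID loop driving a toy vehicle model in which the commanded acceleration directly changes speed. The gains, control period, and plant model are illustrative assumptions; production control stacks add output limits, filtering, and integrator anti-windup.

```python
from dataclasses import dataclass


@dataclass
class PID:
    """Discrete PID controller; the gains used below are hypothetical, not tuned values."""
    kp: float
    ki: float
    kd: float
    dt: float                 # control period in seconds
    integral: float = 0.0
    prev_error: float = 0.0   # starts at 0, so kd > 0 gives a "derivative kick" on step 1

    def step(self, setpoint: float, measured: float) -> float:
        error = setpoint - measured
        self.integral += error * self.dt
        derivative = (error - self.prev_error) / self.dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


if __name__ == "__main__":
    pid = PID(kp=0.8, ki=0.1, kd=0.0, dt=0.1)
    speed = 20.0  # m/s; toy plant: speed changes by (command * dt) each period
    for _ in range(500):
        speed += pid.step(25.0, speed) * pid.dt
    print(f"speed after 50 s: {speed:.3f} m/s")
```

Each control step is a handful of multiply-adds, which is why such loops fit comfortably in FPGA logic at high, fixed update rates.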

2.3. Outstanding performance of FPGAs in autonomous driving

In autonomous driving, the demands that FPGAs are well placed to meet can be seen in advanced systems such as Tesla's autonomous driving solution, which must process high-throughput data from a complex sensor network in real time. (Tesla's own platform relies mainly on cameras and a custom in-house chip, whereas many other autonomous driving platforms combine LiDAR, radar, and cameras.) In such systems, FPGAs can serve as critical components that provide real-time, high-throughput processing of sensor data.

Furthermore, Tesla continuously optimizes its autonomous driving algorithms based on real-world data. FPGAs suit this kind of continuous optimization because, unlike fixed-function chips, their hardware configuration can be updated and adapted, allowing performance to improve over time.

By integrating FPGA technology, automakers can not only improve the safety and efficiency of autonomous vehicles but also open a path to innovations that could redefine mobility and transportation in the coming years [12].

3. Specific cases of FPGA in autonomous driving

3.1. YOLO technical analysis

In intelligent driving, understanding the role and technical details of YOLO (You Only Look Once) is essential. YOLO fundamentally changed object detection by using a single neural network that processes an image in one pass, rather than repeatedly evaluating candidate regions as traditional two-stage frameworks do, thereby significantly speeding up detection [13].
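Because YOLO predicts many candidate boxes in its single pass, versions through YOLOv8 typically prune overlapping detections with non-maximum suppression (NMS) after the network runs. The sketch below is a generic greedy NMS based on intersection over union (IoU); it is not taken from any particular YOLO codebase, and the 0.5 threshold is an illustrative default.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), corner format


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: List[Box], scores: List[float], iou_thresh: float = 0.5) -> List[int]:
    """Greedy NMS: return indices of kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # Keep a box only if it does not overlap too much with any higher-scoring kept box.
        if all(iou(boxes[i], boxes[k]) <= iou_thresh for k in keep):
            keep.append(i)
    return keep


if __name__ == "__main__":
    dets = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
    print(nms(dets, [0.9, 0.8, 0.7]))
```

The two overlapping boxes have an IoU of about 0.68, so only the higher-scoring one survives alongside the separate third detection.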

3.1.1. Evolution and Version Differentiation

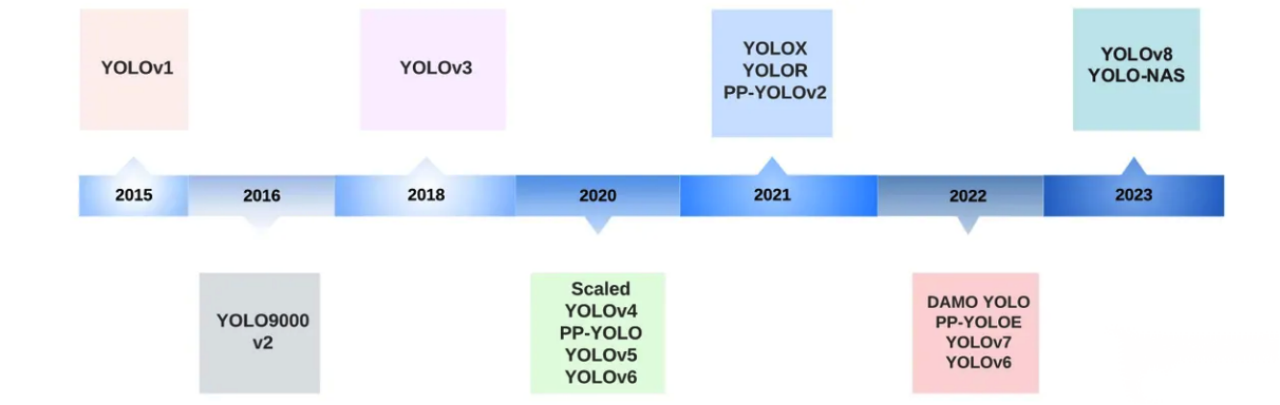

Figure 6 below shows the evolution of YOLO from the first version to the latest iteration, highlighting the growth in network depth, improved detection accuracy, and computational optimizations that enable real-time object detection, a key requirement for intelligent driving.

From YOLOv1, a simple but groundbreaking approach, to YOLOv4, which uses CSPDarknet53 as its backbone, to YOLOv8, which reported state-of-the-art (SOTA) results at release, significant progress has been made, including support for instance segmentation, which helps provide a comprehensive understanding of traffic scenes [14].

The YOLO framework stands out for its speed and accuracy: it can identify objects in images quickly and reliably, and it has been adopted by many autonomous driving companies. Since its inception, the YOLO series has gone through multiple versions, each addressing limitations of its predecessors and improving performance. The evolution of YOLO is shown in Figure 6. This section reviews the development of the YOLO framework from the initial YOLOv1 to YOLOv8, covering the main innovations, differences, and improvements between versions [14].

Figure 6. YOLO evolution history (Photo/Picture credit: Original).

The original YOLO model established the basic concepts and architecture that laid the foundation for subsequent development, and each version from YOLOv2 to YOLOv8 introduced further improvements and enhancements [14]. These changes span network design, loss function modifications, anchor box adaptation, and input resolution scaling. Studying these developments shows how the YOLO framework has been progressively refined and how it has shaped object detection.
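To make “anchor box adaptation” concrete, the sketch below decodes one raw prediction into a box using the YOLOv2/YOLOv3-style parameterization, in which the network predicts offsets relative to a grid cell and a prior (anchor) box. It is an illustrative model of that one formula, assuming anchors given in input-image pixels; later versions such as YOLOv8 moved to anchor-free heads, and the example values are hypothetical.

```python
import math
from typing import Tuple


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def decode_box(t: Tuple[float, float, float, float], cell: Tuple[int, int],
               anchor: Tuple[float, float], stride: float) -> Tuple[float, float, float, float]:
    """Decode raw outputs (tx, ty, tw, th) into (center_x, center_y, width, height) in pixels.

    YOLOv2/YOLOv3-style parameterization:
      bx = (sigmoid(tx) + cx) * stride,   by = (sigmoid(ty) + cy) * stride
      bw = pw * exp(tw),                  bh = ph * exp(th)
    (cx, cy) is the grid-cell index; (pw, ph) is the anchor size in pixels;
    stride is the number of input pixels per grid cell.
    """
    tx, ty, tw, th = t
    cx, cy = cell
    pw, ph = anchor
    bx = (sigmoid(tx) + cx) * stride   # sigmoid keeps the center inside its cell
    by = (sigmoid(ty) + cy) * stride
    bw = pw * math.exp(tw)             # exp scales the anchor up or down
    bh = ph * math.exp(th)
    return bx, by, bw, bh


if __name__ == "__main__":
    # Zero offsets give the cell center and the unmodified anchor size.
    print(decode_box((0.0, 0.0, 0.0, 0.0), cell=(3, 4), anchor=(50.0, 80.0), stride=32.0))
```

Constraining the center with a sigmoid and the size with a multiplicative factor on a prior box is what made training more stable than regressing raw coordinates.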

3.1.2. YOLO Integration with FPGA

An essential aspect is the combination of YOLO with FPGA technology: the FPGA's parallel processing capabilities accelerate the high-speed computations of the YOLO algorithm, which are essential for real-time object detection. Hardware-software co-design is pivotal here, allowing the YOLO algorithm to be tailored to the FPGA's parallel resources and paving the way for more efficient and robust intelligent driving systems [13].
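One common element of such hardware-software co-design is running inference in low-precision integer arithmetic, which maps onto FPGA DSP blocks more cheaply than floating point. The sketch below assumes simple symmetric per-tensor int8 quantization, one of several schemes in use; it is a software model of the arithmetic, not a hardware design or the API of any FPGA toolchain.

```python
from typing import List, Tuple


def quantize_int8(values: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8 quantization: q = round(x / scale), clipped to [-127, 127]."""
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    return [qi * scale for qi in q]


def int8_dot(qa: List[int], sa: float, qb: List[int], sb: float) -> float:
    """Integer multiply-accumulate, rescaled once at the end as fixed-point hardware would."""
    acc = sum(x * y for x, y in zip(qa, qb))  # exact integer accumulation
    return acc * sa * sb


if __name__ == "__main__":
    a = [0.5, -1.0, 0.25]     # hypothetical activations
    b = [1.0, 0.5, -0.75]     # hypothetical weights
    qa, sa = quantize_int8(a)
    qb, sb = quantize_int8(b)
    print("float dot:", sum(x * y for x, y in zip(a, b)))
    print("int8 dot: ", int8_dot(qa, sa, qb, sb))
```

The inner loop uses only small integer multiplies and an integer accumulator; the single floating-point rescale at the end is what lets the bulk of a convolution run on cheap integer hardware.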

3.2. FPGA in Customization of Algorithms and Hardware Acceleration

3.2.1. Adaptive Cruise Control

Analyzing the role of FPGAs in adaptive cruise control shows how they support smooth traffic flow and safety. FPGA technology enables rapid processing of sensor inputs, allowing adaptive cruise control systems to respond in real time to changing traffic conditions and making driving safer and more efficient.
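A common way to formulate adaptive cruise control, shown here as an assumption rather than the specific method of any cited work, is a constant time-gap policy: the desired distance to the lead vehicle grows linearly with ego speed, and the controller commands an acceleration proportional to the spacing error and the relative speed. All gains and limits below are hypothetical.

```python
def acc_command(gap_m: float, ego_speed: float, lead_speed: float,
                standstill_gap: float = 5.0, time_gap_s: float = 1.8,
                k_gap: float = 0.2, k_speed: float = 0.6,
                a_min: float = -3.5, a_max: float = 2.0) -> float:
    """Acceleration command (m/s^2) from a constant time-gap spacing policy.

    gap_m: measured distance to the lead vehicle (m)
    ego_speed, lead_speed: speeds in m/s
    """
    desired_gap = standstill_gap + time_gap_s * ego_speed
    accel = k_gap * (gap_m - desired_gap) + k_speed * (lead_speed - ego_speed)
    return max(a_min, min(a_max, accel))  # respect comfort and actuator limits


if __name__ == "__main__":
    print(acc_command(gap_m=10.0, ego_speed=20.0, lead_speed=15.0))   # closing fast: brake
    print(acc_command(gap_m=100.0, ego_speed=20.0, lead_speed=20.0))  # large gap: speed up
```

The sensor-processing latency discussed earlier effectively adds to the time gap the controller must budget for, which is where fast FPGA processing helps.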

3.2.2. Obstacle Recognition

In obstacle recognition, the parallel processing capabilities of FPGAs can be used to process inputs from multiple sensors and cameras quickly, so that obstacles are recognized and responded to in real time [4]. FPGA-accelerated obstacle recognition is one of the technologically advanced safety features it brings to modern vehicles.
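As a simple illustration of one step in obstacle recognition, the sketch below scans range points (for example, LiDAR returns already transformed into the vehicle frame) and reports the nearest point inside a rectangular corridor ahead of the car. Real systems cluster, classify, and track points over time; the corridor width, maximum range, and sample points are hypothetical.

```python
from typing import Iterable, Optional, Tuple

Point = Tuple[float, float]  # (x forward, y left) in metres, vehicle frame


def nearest_obstacle_ahead(points: Iterable[Point], half_width: float = 1.2,
                           max_range: float = 60.0) -> Optional[float]:
    """Forward distance to the closest point inside the driving corridor, or None."""
    # Each point is tested independently, so this filter maps naturally onto
    # parallel FPGA comparators followed by a min-reduction tree.
    hits = [x for x, y in points if 0.0 < x <= max_range and abs(y) <= half_width]
    return min(hits) if hits else None


if __name__ == "__main__":
    scan = [(30.0, 0.5), (12.0, 3.0), (18.5, -0.8), (-5.0, 0.0)]
    print(nearest_obstacle_ahead(scan))
```

Here the point at 12 m lies outside the corridor and the point at -5 m is behind the vehicle, so the nearest in-path obstacle is the one at 18.5 m.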

3.2.3. Lane Keeping

Lane keeping demonstrates the role of FPGAs in real-time processing. Combining FPGA acceleration with YOLO's real-time object detection not only keeps the vehicle accurately within its lane but also adapts to changing road conditions, a critical step toward fully autonomous driving [4][13].
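Once perception has located the lane, a steering law is still needed to keep the vehicle in it. Pure pursuit is one classic geometric choice, used here as an assumption rather than the method of the cited works: it steers the front wheels so that the rear axle follows a circular arc through a look-ahead point on the lane center. The wheelbase and look-ahead point below are hypothetical.

```python
import math


def pure_pursuit_steering(target_x: float, target_y: float, wheelbase: float) -> float:
    """Front-wheel steering angle (radians) toward a look-ahead point on the lane center.

    target_x, target_y: look-ahead point in the vehicle frame (x forward, y left),
    in metres, measured from the rear axle. Implements the pure pursuit law
        delta = atan(2 * L * sin(alpha) / ld)
    where alpha is the bearing to the target and ld is its distance.
    Positive angles steer left.
    """
    ld = math.hypot(target_x, target_y)
    if ld == 0.0:
        return 0.0
    alpha = math.atan2(target_y, target_x)
    return math.atan2(2.0 * wheelbase * math.sin(alpha), ld)


if __name__ == "__main__":
    # Lane center detected 10 m ahead and 1 m to the left; hypothetical 2.7 m wheelbase.
    print(math.degrees(pure_pursuit_steering(10.0, 1.0, 2.7)))
```

Since sin(alpha) = y / ld, the law reduces to a curvature of 2y / ld², so the per-frame computation is small enough to run at the full rate of the perception pipeline.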

3.3. Specific Cases of FPGA Technology in Intelligent Assisted Driving

3.3.1. Autonomous emergency braking

The first example is an autonomous emergency braking system that leverages the fast parallel processing of FPGAs to instantly analyze data from vehicle sensors to facilitate immediate braking responses to prevent collisions. This case illustrates the practical applicability of FPGA technology in preventing accidents and protecting lives.
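A common building block of autonomous emergency braking logic is time-to-collision (TTC): the gap to the vehicle ahead divided by the closing speed. The sketch below computes TTC under a constant-speed assumption and maps it to warn or brake actions. The thresholds are hypothetical, and production systems also account for braking dynamics, sensor confidence, and driver reaction.

```python
from typing import Optional


def time_to_collision(gap_m: float, closing_speed_mps: float) -> Optional[float]:
    """Seconds until contact at constant closing speed; None if the gap is not closing."""
    if closing_speed_mps <= 0.0:
        return None
    return gap_m / closing_speed_mps


def aeb_decision(gap_m: float, ego_speed: float, lead_speed: float,
                 warn_ttc: float = 2.5, brake_ttc: float = 1.2) -> str:
    """Map TTC to an action using hypothetical warning and braking thresholds."""
    ttc = time_to_collision(gap_m, ego_speed - lead_speed)
    if ttc is None or ttc > warn_ttc:
        return "none"
    if ttc > brake_ttc:
        return "warn"
    return "brake"


if __name__ == "__main__":
    print(aeb_decision(gap_m=30.0, ego_speed=25.0, lead_speed=10.0))  # TTC = 2.0 s
    print(aeb_decision(gap_m=15.0, ego_speed=25.0, lead_speed=10.0))  # TTC = 1.0 s
```

Every millisecond of sensing and processing delay is subtracted from the available TTC, which is why fast FPGA processing directly widens the margin for a braking decision.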

3.3.2. Intelligent parking assistance

The second case is intelligent parking assistance, in which FPGAs rapidly process data from cameras and sensors to help drivers park in tight spaces. This scenario illustrates the important role of FPGAs in improving the utility and safety of modern parking solutions.
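Parking assistance typically relies on ultrasonic sensors that time the echo of a short pulse. The basic range calculation is sketched below; the temperature-dependent speed-of-sound formula is a standard linear approximation for dry air, and the sample echo time is hypothetical.

```python
def echo_distance_m(round_trip_s: float, temp_c: float = 20.0) -> float:
    """Distance to an obstacle from an ultrasonic echo's round-trip time.

    Uses the common approximation c = 331.3 + 0.606 * T (m/s) for the speed of
    sound in dry air at T degrees Celsius. The pulse travels out and back,
    hence the division by 2.
    """
    speed_of_sound = 331.3 + 0.606 * temp_c
    return speed_of_sound * round_trip_s / 2.0


if __name__ == "__main__":
    # A hypothetical 5 ms echo at 20 degrees C corresponds to roughly 0.86 m.
    print(f"{echo_distance_m(0.005):.3f} m")
```

The temperature term matters at parking distances: ignoring it would shift readings by a few percent between a cold and a hot day.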

4. Analysis of FPGA in the Field of Autonomous Driving

4.1. Applicability Analysis

Autonomous driving stands as a beacon for the future of transportation, combining sophisticated technologies to make roads safer and more efficient. At the heart of this advancement are FPGAs, which combine flexibility with computational efficiency. This section examines the applicability of FPGAs in autonomous driving [15].

4.1.1. Security

Security in autonomous driving encompasses both the physical safety of passengers and the cybersecurity of the vehicle's control systems. FPGAs can offer a degree of resilience to cyber threats: their function is defined by a hardware configuration rather than by general-purpose software, and when the configuration bitstream is encrypted and authenticated, it becomes considerably harder for unauthorized parties to interfere with system operation [16].

Furthermore, by facilitating real-time processing and parallel computing, FPGAs enhance physical safety. They foster quicker response times, enabling autonomous vehicles to swiftly adapt to dynamic road conditions and potential hazards, thereby reducing the likelihood of accidents and collisions.

4.1.2. Power Consumption

As the push for greener technologies accelerates, the power consumption of computing units in autonomous vehicles has come into focus. FPGAs are promising on this front, as they can offer lower power consumption than traditional CPU and GPU setups for suitable workloads [1]. They allow customized hardware solutions in which developers build optimized datapaths that substantially reduce power draw while maintaining high performance, supporting energy-efficient autonomous driving.

4.1.3. Road Traffic Operation

At a societal level, FPGAs have the potential to transform road traffic operations. Their use in vehicle-to-everything (V2X) communication systems promises to improve traffic flow, reducing congestion and making traffic run more smoothly.

Moreover, FPGA’s facilitation of deep learning algorithms in autonomous vehicles can potentially harmonize traffic operations, leveraging predictive analytics to foresee and mitigate traffic congestion points, and effectively optimizing road use, which translates to a more organized and efficient road traffic operation, reducing travel time and environmental footprint [17].

4.2. Prospects of FPGA in the Field of Autonomous Driving

Looking ahead, FPGAs are likely to remain an important part of autonomous driving. Their inherent parallel processing and low latency provide a good foundation for building highly responsive and efficient autonomous driving systems.

The flexibility of FPGAs facilitates continued advancement and optimization, allowing hardware to be reconfigured to adapt to evolving technologies and standards, thus future-proofing autonomous driving systems. This adaptability is particularly important to ensure that the algorithm logic of FPGA in autonomous driving does not become outdated.

The role of FPGAs in vehicle-to-infrastructure communication, which improves safety and traffic management through real-time data exchange between vehicles and transportation infrastructure, is expected to grow substantially [18]. This evolution would allow systems to anticipate and respond to challenges dynamically, making roads safer and more efficient.

Furthermore, the role of FPGAs in facilitating machine learning and artificial intelligence has not yet reached its peak. Future advancements may see more sophisticated deep learning algorithms being integrated into autonomous driving systems, providing improved object recognition, predictive analytics, and ultimately a safer, more intuitive autonomous driving experience.

5. Conclusion

This article has examined the integral role of FPGAs in autonomous driving technology, focusing on their capabilities in parallel computing, real-time processing, and customization. Detailed analysis and case studies demonstrate the usefulness of FPGAs in enhancing subsystems such as sensor fusion, deep learning, and vehicle-to-everything (V2X) communications, thereby contributing to a safer and smoother driving experience. The continued improvement of FPGA-accelerated real-time object detection based on YOLO further shows the importance of this technology in autonomous driving.

A comprehensive exploration of FPGAs highlights their adaptability and their ability to keep pace with the evolving autonomous driving landscape. As deep learning algorithms mature and more sensor technologies are integrated, FPGAs are becoming increasingly important in autonomous driving. Their high customizability allows efficiency and security to be maintained and improved without extensive hardware modifications.

FPGAs are becoming an important part of the V2X intelligent transportation ecosystem. Communication between vehicles, and between vehicles and transportation infrastructure, is expected to become central to autonomous driving, helping to avoid congestion, reduce traffic accidents, and achieve efficient, safe, and sustainable transportation. Integrated with smart city infrastructure, these intelligent systems can guide the development of safer, more efficient, and more sustainable cities. The continuing advancement of FPGA technology is ushering in a new era of intelligent interaction in autonomous driving.

References

[1]. Hao C, Sarwari A, Jin Z, et al. A hybrid GPU+ FPGA system design for autonomous driving cars. 2019 IEEE International Workshop on Signal Processing Systems (SiPS). IEEE, 2019: 121-126.

[2]. Liu S, Liu L, Tang J, et al. Edge computing for autonomous driving: Opportunities and challenges. Proceedings of the IEEE, 2019, 107(8): 1697-1716.

[3]. Padmaja B, Moorthy C H V, Venkateswarulu N, et al. Exploration of issues, challenges and latest developments in autonomous cars. Journal of Big Data, 2023, 10(1): 61.

[4]. Tatar G, Bayar S. Real-Time Multi-Task ADAS Implementation on Reconfigurable Heterogeneous MPSoC Architecture. IEEE Access, 2023.

[5]. Whig P, Kouser S, Velu A, et al. Fog-IoT-Assisted-Based Smart Agriculture Application. Demystifying Federated Learning for Blockchain and Industrial Internet of Things. IGI Global, 2022: 74-93.

[6]. Liu L, Lu S, Zhong R, et al. Computing systems for autonomous driving: State of the art and challenges. IEEE Internet of Things Journal, 2020, 8(8): 6469-6486.

[7]. Grigorescu S, Trasnea B, Cocias T, et al. A survey of deep learning techniques for autonomous driving. Journal of Field Robotics, 2020, 37(3): 362-386.

[8]. Jing H, Gao Y, Shahbeigi S, et al. Integrity monitoring of GNSS/INS based positioning systems for autonomous vehicles: State-of-the-art and open challenges. IEEE Transactions on Intelligent Transportation Systems, 2022, 23(9): 14166-14187.

[9]. Fayyad J, Jaradat M A, Gruyer D, et al. Deep learning sensor fusion for autonomous vehicle perception and localization: A review. Sensors, 2020, 20(15): 422.

[10]. Carver B, Esposito T, Lyke J. Cloud-based computation, and networking for space. Open Architecture/Open Business Model Net-Centric Systems and Defense Transformation 2019. SPIE, 2019, 11015: 221-236.

[11]. Gupta A, Anpalagan A, Guan L, et al. Deep learning for object detection and scene perception in self-driving cars: Survey, challenges, and open issues. Array, 2021, 10: 100057.

[12]. Butt F A, Chattha J N, Ahmad J, et al. On the integration of enabling wireless technologies and sensor fusion for next-generation connected and autonomous vehicles. IEEE Access, 2022, 10: 14643-14668.

[13]. Silva J, Coelho P, Saraiva L, et al. Validating the Use of Mixed Reality in Industrial Quality Control: A Case Study. 2023.

[14]. Sun Y, Li Y, Li S, et al. PBA-YOLOv7: An Object Detection Method Based on an Improved YOLOv7 Network. Applied Sciences, 2023, 13(18): 10436.

[15]. López C. Artificial intelligence and advanced materials. Advanced Materials, 2023, 35(23): 2208683.

[16]. Tcholtchev N. Scalable and efficient distributed self-healing with self-optimization features in fixed IP networks. Technische Universitaet Berlin (Germany), 2019.

[17]. Athavale J, Baldovin A, Mo S, et al. Chip-level considerations to enable dependability for eVTOL and Urban Air Mobility systems. 2020 AIAA/IEEE 39th Digital Avionics Systems Conference (DASC). IEEE, 2020: 1-6.

[18]. Trubia S, Severino A, Curto S, et al. Smart roads: An overview of what future mobility will look like. Infrastructures, 2020, 5(12): 107.

Cite this article

Yue,P. (2024). Analysis and prospects of automobile intelligent assisted driving characteristics based on FPGA technology. Applied and Computational Engineering,50,52-60.

Data availability

The datasets used and/or analyzed during the current study will be available from the authors upon reasonable request.

Disclaimer/Publisher's Note

The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of EWA Publishing and/or the editor(s). EWA Publishing and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

About volume

Volume title: Proceedings of the 4th International Conference on Signal Processing and Machine Learning

© 2024 by the author(s). Licensee EWA Publishing, Oxford, UK. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license. Authors who publish this series agree to the following terms:

1. Authors retain copyright and grant the series right of first publication with the work simultaneously licensed under a Creative Commons Attribution License that allows others to share the work with an acknowledgment of the work's authorship and initial publication in this series.

2. Authors are able to enter into separate, additional contractual arrangements for the non-exclusive distribution of the series's published version of the work (e.g., post it to an institutional repository or publish it in a book), with an acknowledgment of its initial publication in this series.

3. Authors are permitted and encouraged to post their work online (e.g., in institutional repositories or on their website) prior to and during the submission process, as it can lead to productive exchanges, as well as earlier and greater citation of published work (see the Open access policy for details).
