1. Introduction

According to the World Health Organization, the global death toll from traffic accidents continues to rise every year [1,2]: about 1.35 million people die in road traffic accidents annually, roughly 3,700 deaths per day, and the mortality rate per thousand vehicles reaches 6.4 [3]. This grim situation forces countries to seek effective solutions to the deteriorating state of traffic safety.

In this context, autonomous driving technology has attracted much attention as a potential solution. By utilizing advanced sensors, computer vision, and artificial intelligence, autonomous driving technology enables vehicles to drive without human intervention, which can improve traffic safety and the ease of travel. It can not only reduce the incidence of traffic accidents [4], casualties, and property losses, but also provide people with a safer and more convenient travel experience [5].

The end-to-end approach plays a key role in autonomous driving technology, especially when dealing with complex traffic scenarios. Liu Guoqing et al. optimized the automatic emergency braking (AEB) system by improving the Honda model, which improved both safety and driving comfort [6]. Cui Shihai et al. studied the impact of the AEB system on head injuries of children in buses and found that the system could significantly reduce the injury risk to children during emergency braking [7]. The lane change model proposed by Zhen et al. achieves more comfortable and safer lane changes by considering human driving behavior [8]. The dynamic coordinated control strategy proposed by Abi et al. can effectively reduce vehicle trajectory errors when road conditions are inconsistent [9]. In addition, multi-sensor fusion technology has been enhanced with the support of the end-to-end approach, although challenges remain. End-to-end methods based on deep learning, such as the deep deterministic policy gradient (DDPG) algorithm, have been successfully applied to automatic lane change tasks, enabling vehicles to complete lane changes safely and efficiently in complex traffic scenarios [10,11]. These studies show that the end-to-end approach is widely used in trajectory planning and decision control of autonomous vehicles and offers significant advantages in complex scenarios. However, although the end-to-end method has great potential in theory, it still faces many challenges in practical application.

This review aims to provide a comprehensive analysis of the current advancements, challenges, and future directions in vehicle trajectory planning and decision control, focusing on both end-to-end and traditional rule-based approaches. First, through literature research, this paper collects recent research papers that use end-to-end and rule-based methods in the field of vehicle trajectory planning and decision control, focusing on deep learning, reinforcement learning, and related technologies. Second, the methods are classified according to their application scenarios and algorithm architectures, and their performance in different complex scenarios is compared. Third, by selecting typical application cases, this paper analyzes in depth how the end-to-end approach and the traditional rule-based approach differ in solving specific problems. The paper then evaluates the advantages and disadvantages of the two approaches in complex scenarios and proposes possible directions for future research. Finally, a systematic review and analysis of vehicle trajectory planning and decision control technology based on the end-to-end approach is carried out to provide guidance for future research and for the exploration of safer and more efficient transportation modes.

2. Modular approach

The modular approach divides the autonomous driving system into different modules that solve different tasks, such as perception, pedestrian detection, and lane following [12]. Traditional autonomous driving technology may rely heavily on high-precision maps, and this dependence may limit its flexibility and adaptability in practical applications. In contrast, end-to-end technology is more flexible and can directly map perceptual information to steering commands for autonomous driving [13]. However, despite the great potential of this field, many challenges and open questions remain, including multimodality, interpretability, causal confusion, robustness, and world models [14]. The following subsections examine the application of the modular approach to specific complex scenarios, including urban traffic and highway scenarios.

2.1. Urban traffic scenes

Modular systems can perform well in some complex scenarios, such as roads with multiple traffic lights, pedestrian crossings, and roundabouts. Kunz et al. developed a modular, robust, and sensor-independent environment perception system [15]. The article describes a modified Mercedes E-Class experimental car equipped with a variety of sensors, including lidar, monochrome cameras, and radar, which together cover a wide area in front of and behind the vehicle. The system uses the classical occupancy grid mapping method, which divides the environment into a fine grid and estimates the occupancy state of each cell. For localization, it uses maximally stable extremal region (MSER) features and achieves high-precision vehicle self-localization through particle filters. The paper also details the design of the behavior layer, which generates the vehicle's target state and driving strategy, such as maintaining the target speed, following the vehicle ahead, or stopping at a specific location. The trajectory planning module, which is based on optimal control theory, generates a smooth and comfortable driving trajectory and adjusts it when it encounters obstacles or kinematic constraints.
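To make the occupancy grid idea concrete, the following is a minimal sketch of the standard log-odds update for grid cells along a single sensor ray. It is not the implementation used in [15]; the sensor-model probabilities `p_hit` and `p_miss`, the five-cell strip, and the measurements are all illustrative assumptions.

```python
import math

def logit(p):
    """Log-odds of a probability."""
    return math.log(p / (1.0 - p))

def update_cell(log_odds, hit, p_hit=0.7, p_miss=0.4):
    """Bayesian log-odds update of one cell for one measurement.

    p_hit and p_miss are assumed inverse-sensor-model values.
    """
    return log_odds + logit(p_hit if hit else p_miss)

def occupancy(log_odds):
    """Convert log-odds back to an occupancy probability."""
    return 1.0 - 1.0 / (1.0 + math.exp(log_odds))

# A 1-D strip of cells along one ray; prior 0.5 corresponds to log-odds 0.
grid = [0.0] * 5

# Hypothetical measurements: each value is the index of the cell that
# returned the echo; every cell in front of it is observed as free.
for hit_index in (3, 3, 3):
    for i in range(hit_index):
        grid[i] = update_cell(grid[i], hit=False)
    grid[hit_index] = update_cell(grid[hit_index], hit=True)

probs = [occupancy(value) for value in grid]
```

After three consistent measurements the cell that returned the echo has a high occupancy probability, the cells in front of it are likely free, and the unobserved cell behind it stays at the prior. Working in log-odds turns the repeated Bayesian update into a simple addition per cell.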

Extensive testing of the system on public roads near Ulm University, including traffic lights, pedestrian crossings and roundabouts, demonstrated the robustness and reliability of the system in complex traffic environments.

Under adverse environmental conditions such as sunset, darkness, or rain, the system performs well in individual scenes, mainly owing to its robust multi-sensor fusion design, but it remains weak when diverse complex scenes interact.

2.2. Highway scenes

The modular system remains safe and reliable at high vehicle speeds. Ardelt et al. present a probabilistic framework for highly automated driving on highways and explore its application in real traffic in detail [16]. The system features fully automatic lane changes (LC) that do not require driver approval. The paper proposes a fully integrated probabilistic model that optimizes driving strategies and decision-making by considering measurement uncertainties throughout the driving process.

The main goal of the research is to achieve 100% autonomous driving on the A9 motorway (Munich-Ingolstadt) without the need for driver intervention. The system fuses data from multiple sensors, such as lidar, radar, and cameras, with high-precision digital maps to provide an all-round perception of the vehicle's environment. The paper emphasizes that the modular system architecture makes development and expansion more flexible. The driving strategy module makes decisions through a multi-level decision tree structure to determine the best driving behavior.

Figure 1. Automatic lane change operation on the highway

In the experimental part, the system was evaluated on public roads and closed test sites. The tests show that the system can drive automatically in a safe and reliable manner at high speed and in complex traffic conditions, especially when performing automatic lane changes (shown in Fig. 1).
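The multi-level decision structure described above can be illustrated with a small sketch. This is a hypothetical two-level tree, not the driving strategy from [16]: the `Scene` fields, the maneuver names, and the 0.95 confidence threshold are assumptions chosen only to show how uncertain estimates can gate a lane change.

```python
from dataclasses import dataclass

@dataclass
class Scene:
    lead_slower: bool         # is the vehicle ahead slower than our target speed?
    p_left_gap_safe: float    # estimated probability that the left-lane gap is safe
    p_right_lane_free: float  # estimated probability that the right lane is free

def choose_maneuver(scene, confidence=0.95):
    """Walk a two-level decision tree and return a maneuver label."""
    # Level 1: is there a reason to leave the current lane?
    if scene.lead_slower:
        # Level 2: overtake only if the gap is safe with high confidence.
        if scene.p_left_gap_safe >= confidence:
            return "change_left"
        return "follow_lead"
    # No slower lead vehicle: move right only if that lane is clearly free.
    if scene.p_right_lane_free >= confidence:
        return "change_right"
    return "keep_lane"

decision = choose_maneuver(Scene(lead_slower=True,
                                 p_left_gap_safe=0.80,
                                 p_right_lane_free=0.99))
```

Here the lane change is rejected because the gap estimate does not reach the required confidence, so the vehicle keeps following the lead vehicle. Expressing the conditions as probabilities rather than booleans is one simple way to let measurement uncertainty influence the decision.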

2.3. Advantages and disadvantages of modular autonomous driving method

Advantages:

(i) Task decomposition and team collaboration: Complex tasks are decomposed into multiple independent subtasks, which enables the engineering team to focus on the optimization of each subtask.

(ii) System predictability: With clear input and output definitions and deterministic rules between modules, the modular system behaves relatively predictably within its known capabilities, enhancing the stability and reliability of the system.

(iii) Interpretability: The explanation of system behavior becomes easier. When an error or unexpected behavior occurs, intermediate results between modules can be traced to locate the root cause of the problem and improve the debugging efficiency of the system.

Disadvantages:

(i) Lack of global optimality: Since the input and output of each module are predefined, in some cases these fixed inputs and outputs may not be optimal. For example, different road and traffic conditions may require different information to be extracted from the environment, and modular systems often struggle to respond flexibly to these changes.

(ii) Information loss and uncertainty processing: Certain details or uncertainties may be lost when information is passed between modules.

(iii) Difficulties in covering scenes: Detailed systems are needed to deal with complex scenarios. In addition, certain long-tail cases are difficult to cover comprehensively, so the system may perform poorly in rare situations.

(iv) Waste of resources: The resolution of some sub-problems can become unnecessarily complex and resource-intensive. For example, the perception module may attempt to detect all objects even though some of them have little impact on the decision, which wastes resources and extends computation time.

The modular autonomous driving approach improves the predictability and interpretability of the system through task decomposition and module independence, but also brings challenges such as lack of global optimality, loss of information, and difficulty in covering complex scenarios.

3. End-to-end approach

End-to-end autonomous driving is a deep learning-based approach for training autonomous driving systems. Traditional autonomous driving systems typically consist of multiple modules, such as perception, prediction, planning, and control. These modules need to be designed and optimized separately and then put together sequentially to complete the autonomous driving task. The end-to-end approach is different in that it attempts to establish a single end-to-end mapping directly from input (e.g., sensor data) to output (e.g., vehicle control instructions), that is, mapping the original input to the final output without explicitly defining or designing intermediate steps [17].

3.1. End-to-end application in urban road conditions

As research in the field of autonomous driving has deepened, it has moved from single-constraint to multi-constraint conditions. Urban road conditions are complicated: autonomous driving cannot consider only simple constraints such as the route and traffic signals, but must also take into account much more information on the road, which is both complex and dynamic.

Zhihui Guo et al. proposed an urban driving algorithm based on hierarchical conditional imitation learning (HCIL). In an end-to-end method, it is only necessary to feed information such as roadside buildings, pedestrians, signal lights, and navigation into the input. However, as the complexity of the task input and the number of parameters increase, deep learning suffers from problems such as degraded decision-making and higher computing cost. To solve these problems, the work adopts the idea of breaking a complex task into simpler ones. HCIL uses an end-to-end approach to reduce manual processing and post-processing, thereby giving the system more room for automatic control. The vehicle sensors obtain environment and vehicle information, and after processing the system controls the vehicle longitudinally and laterally [18]. A ResNet image feature extraction network, a speed feature mapping network, and a network for the navigation instructions from global planning extract image features, vehicle speed features, and navigation command features, respectively. Through the decision of the upper-layer network and a speed-assisted task, information is passed to the lower-layer sub-tasks to decide which distance task the vehicle will perform.

To address problems such as difficult feature extraction and unstable output of traditional end-to-end methods in dynamic interaction scenarios, Lü et al. [19] proposed an end-to-end lane change method for autonomous driving based on a graph convolutional network (GCN) and conditional imitation learning (CIL). Specifically, the dynamic information in the driving scene is represented as a graph, and the GCN efficiently aggregates this information to generate the driving instruction that the self-driving car should follow. These instructions are then used as high-level commands for the CIL, combined with other perceptual data, and ultimately mapped to specific vehicle control actions to enable collision-free autonomous lane changes in complex environments. The GCN represents each vehicle in the dynamic driving scene as a node in the graph, aggregates the interaction information between vehicles by connecting the self-driving node to the surrounding vehicle nodes, and generates the driving behavior instructions of the self-driving vehicle. The CIL uses a branch structure in which each branch learns a different driving task (such as going straight, changing lanes to the left, and changing lanes to the right) to reduce cumulative error and reflect the differences between driving actions more accurately. The method was verified on the CARLA simulation platform, and the results show that it is significantly superior to the traditional end-to-end method in terms of success rate, collision rate, and the accuracy of lane change selection, especially in complex dynamic scenes. In addition, the authors compare traditional IL, IL using GCN, and traditional CIL, which further demonstrates the effectiveness of the proposed method in dynamic interactive environments.
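The two ingredients described above, graph aggregation over vehicles and command-conditioned branches, can be sketched in a few lines. This is an illustrative toy under stated assumptions, not the network from [19]: the node features, the untrained weight matrix, and the constant branch outputs are all hypothetical placeholders, and the layer uses the common symmetric normalization with self-loops.

```python
import math

def gcn_layer(adj, feats, weight):
    """One graph-convolution step: ReLU(D^-1/2 (A + I) D^-1/2 X W)."""
    n = len(adj)
    # Add self-loops so each node keeps its own features.
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)]
             for i in range(n)]
    deg = [sum(row) for row in a_hat]
    norm = [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)]
            for i in range(n)]
    n_in, n_out = len(weight), len(weight[0])
    # Aggregate neighbor features, then apply the linear map and ReLU.
    agg = [[sum(norm[i][k] * feats[k][f] for k in range(n))
            for f in range(n_in)] for i in range(n)]
    return [[max(0.0, sum(agg[i][f] * weight[f][o] for f in range(n_in)))
             for o in range(n_out)] for i in range(n)]

# Node 0 is the ego vehicle; nodes 1 and 2 are surrounding vehicles.
adj = [[0, 1, 1],
       [1, 0, 0],
       [1, 0, 0]]
# Hypothetical features per vehicle: [longitudinal gap (m), relative speed (m/s)].
feats = [[0.0, 0.0], [20.0, -3.0], [-15.0, 2.0]]
weight = [[0.1, 0.0], [0.0, 0.5]]  # untrained placeholder weights
ego_embedding = gcn_layer(adj, feats, weight)[0]

# CIL-style branching: the high-level command selects one control head.
# In a trained network each head would be a learned sub-network.
BRANCHES = {
    "straight":     lambda emb: {"steer": 0.0,  "throttle": 0.5},
    "change_left":  lambda emb: {"steer": -0.1, "throttle": 0.4},
    "change_right": lambda emb: {"steer": 0.1,  "throttle": 0.4},
}

def cil_control(command, embedding):
    """Route the embedding through the branch chosen by the command."""
    return BRANCHES[command](embedding)

action = cil_control("change_left", ego_embedding)
```

Because only the selected branch produces the output, each head can specialize in one maneuver, which is the property the branch structure is meant to provide.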

3.2. End-to-end application in blind areas of visual field

The end-to-end approach can also be used to deal with dangerous driving environments. For example, drivers and vehicles often encounter blind areas in their field of view while driving. With the continuous progress of technology in recent years, several methods have been proposed to address these problems.

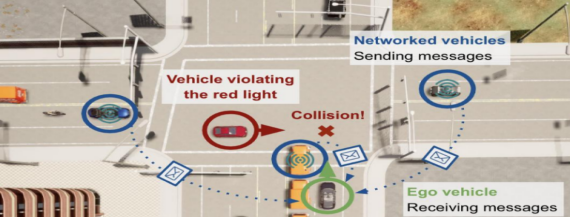

Cui Jiaxun et al. proposed using blind-spot information obtained through inter-vehicle communication to avoid traffic accidents. Their end-to-end learning framework improves decision making by using LiDAR data shared between vehicles to enhance autonomous driving in hazardous or emergency situations [20]. As shown in Fig. 2, the end-to-end learning model, called “COOPERNAUT”, uses cross-vehicle perception for vision-based cooperative driving. Experiments were conducted on the AUTOCASTSIM platform, using time-to-completion (SCT) and SCT ratios as measures of success. The COOPERNAUT model was compared with both non-V2V-communicating and V2V-communicating driving baselines, and the various V2V approaches were evaluated fairly by using the same neighbor selection process. The experimental results show that the COOPERNAUT model achieves a 40% higher average success rate in handling complex traffic situations and reduces the required bandwidth by a factor of five compared to the traditional self-driving model. Across multiple repeated runs, COOPERNAUT shows a lower collision rate and a higher average success rate and achieves a communication throughput of 5.10 Mbps without data compression, so it copes with these constraints better than the traditional single-vehicle sensing system.

Y. Ma et al. point out that vision-centered bird's-eye-view (BEV) perception provides an intuitive way to represent the world and helps to reduce blind areas, because the BEV perspective can provide a broader and unobstructed view of the environment [21]. The vision-based method is not limited to data from a single sensor; it can also be integrated effectively with data from other sensors such as radar and lidar to further improve the ability to capture and process information in blind areas. The paper details the rapid progress that deep learning has made in advancing BEV perception in recent years, including several new approaches to the BEV perception challenge. These methods train neural networks to extract features from image data and then build a more complete environment model, effectively reducing blind areas.

Figure 2. Using communication to obtain blind-spot information

Although the current results have certain limitations, further technological development is expected to substantially mitigate the problem of visual blind areas.

3.3. End-to-end application in extreme weather

In extreme weather, the vehicle's original sensing system may fail. In this case, some special methods can be used to perceive the vehicle's surroundings.

Rivera Velázquez et al. explored the performance of thermal imaging sensors in extreme haze conditions, especially for autonomous driving applications [22]. The aim was to explore the operational limits of thermal imaging technology in harsh conditions and to determine whether thermal imaging cameras can provide reliable target detection under haze. Two types of test scenario, static and dynamic, were used. In the static test, thermal targets of different temperatures were used to evaluate changes in the contrast and visibility of the thermal imaging camera under different haze concentrations. In the dynamic test, a real-world scenario was simulated with moving electric cars and pedestrians to test detection capability under extreme haze. The operational limits of the thermal imaging camera were then investigated by analyzing the camera's angle of view (AOV), the target temperature, the distance, and the haze intensity, expressed as meteorological optical range (MOR). The results show that under extreme haze conditions, thermal imaging cameras with 18° and 30° angles of view can reliably detect pedestrians at an MOR of 13 meters with a detection rate of 90%. The camera's angle of view and the intensity of the haze have a significant effect on detection capability, and a narrower angle of view can maintain a higher detection rate at a lower MOR.
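To give a sense of how severe an MOR of 13 meters is, the sketch below uses the standard definition of MOR as the path length over which a light beam is attenuated to 5% of its original flux, combined with exponential (Beer-Lambert) attenuation. This is a visible-light illustration only; attenuation in the thermal infrared band used by the cameras in [22] differs, and the function names are chosen for this example.

```python
import math

def extinction_from_mor(mor_m):
    """Extinction coefficient (1/m) implied by an MOR in meters.

    MOR is the distance at which transmittance drops to 0.05.
    """
    return math.log(1.0 / 0.05) / mor_m

def transmittance(distance_m, mor_m):
    """Fraction of visible light that survives a path of the given length."""
    return math.exp(-extinction_from_mor(mor_m) * distance_m)

# Share of visible light reaching a camera from a pedestrian 26 m away
# when the MOR is 13 m (two MOR lengths, so 0.05 squared).
t_26m = transmittance(26.0, 13.0)
```

At two MOR lengths only a quarter of a percent of the visible light remains, which illustrates why conventional cameras struggle in such haze and why other sensing bands are being investigated.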

In addition to thermal imaging technology, the application of deep learning-driven radar processing technology to actual autonomous driving systems can also effectively deal with perception problems in extreme weather.

As pointed out by Z. Chen and X. Huang, radar performs particularly well in bad weather, such as rain, fog, and sandstorms, because it can penetrate these obstacles and provide accurate information about the surrounding environment [23]. Radar measures not only the distance of an object but also its radial velocity at the same time, which is crucial for obstacle avoidance and path planning in autonomous driving. The authors emphasize the importance of different radar signal representations, such as point clouds and spectrograms, in deep learning models, since these representations help models understand and use radar data better. They also discuss a variety of deep learning models for autonomous driving tasks such as detection and classification, which can be trained to recognize and understand key information in radar signals. The technology mainly relies on multi-sensor fusion models that combine radar signals and camera images, which can provide more comprehensive and robust environment perception. In extreme weather in particular, when the camera may be severely affected, radar data can be an important supplement. Radar and cameras each have advantages and disadvantages: radar performs better in bad weather, while cameras provide rich visual information when the light is good.
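The radial velocity measurement mentioned above follows from the Doppler effect: for a monostatic radar, a target approaching at radial speed v shifts the echo frequency by 2·v·f/c, where f is the carrier frequency. The sketch below applies this relation. The 77 GHz default is an assumption that reflects a commonly used automotive radar band and is not taken from [23].

```python
C = 299_792_458.0  # speed of light in m/s

def doppler_shift_hz(radial_velocity_mps, carrier_hz=77e9):
    """Doppler shift of the echo for a target with the given radial speed."""
    return 2.0 * radial_velocity_mps * carrier_hz / C

def radial_velocity_mps(doppler_hz, carrier_hz=77e9):
    """Invert the relation: radial speed from a measured Doppler shift."""
    return doppler_hz * C / (2.0 * carrier_hz)

# A vehicle closing at 10 m/s produces a shift of roughly 5.1 kHz at 77 GHz.
shift = doppler_shift_hz(10.0)
recovered = radial_velocity_mps(shift)
```

Because the shift is read directly from the received signal, radar obtains velocity in a single measurement, whereas a camera or lidar has to infer it from changes between frames.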

In conclusion, these two special sensing technologies are not yet widely used in extreme environmental conditions, but they can be expected to improve and to find broader application in the future.

3.4. Advantages and disadvantages of end-to-end autonomous driving

Advantages:

(i) Simple structure: By mapping raw sensor data directly to the control signal, the system architecture is simplified, reducing the complex feature engineering and sensor fusion required in a modular approach. This makes the development and integration process more intuitive and efficient.

(ii) Self-optimization: The model is trained on large amounts of data, optimizing itself to achieve maximum overall performance. There is no need to manually define rules or intermediate representations, and the model can be automatically adjusted according to different driving scenarios, which improves the robustness of the system.

(iii) Handling complex interactions: The end-to-end approach deals more effectively with complex interactions between the vehicle and the environment by learning global information in the environment directly, without having to process the information layer by layer through predefined modules.

(iv) Data-driven improvements: As the system handles more driving scenarios, the end-to-end model has the potential to continuously improve its performance by being data-driven, reducing the reliance on human intervention.

Disadvantages:

(i) Complexity of sensor fusion: Since end-to-end systems rely on data from multiple sensors to make decisions, it is a challenge to effectively fuse sensor data with different characteristics.

(ii) Visual abstraction and representation design challenges: The end-to-end approach relies on visual abstraction, but how to design an effective intermediate representation remains a challenge.

(iii) Complexity of the world model: In a complex and dynamic driving environment, it is difficult to accurately predict key details in the raw image space.

(iv) Difficulties with multi-task learning: End-to-end systems rely on multi-task learning (MTL) to share knowledge and improve generalization. However, choosing the best task combination and adjusting the weights of the loss function is a complex problem, and sparse supervisory signals make it even harder to extract useful information.

(v) Lack of interpretability: End-to-end models are often seen as "black boxes", which makes it difficult to explain the decisions they make or to trace the sources of errors. This lack of interpretability challenges both the debugging and the public acceptance of the system. Although attention mechanisms and saliency maps can provide some clues, their validity and fidelity remain to be verified [24].
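The loss-weighting difficulty noted in item (iv) can be seen in a small numerical sketch. The task names, loss values, and weights below are invented for illustration. The point is only that with a fixed weighted sum, a task whose loss is numerically small contributes almost nothing to the training signal unless its weight is tuned.

```python
def weighted_multitask_loss(losses, weights):
    """Combine per-task losses into one scalar using fixed weights."""
    return sum(weights[name] * value for name, value in losses.items())

def loss_shares(losses, weights):
    """Fraction of the combined loss contributed by each task."""
    total = weighted_multitask_loss(losses, weights)
    return {name: weights[name] * value / total
            for name, value in losses.items()}

# Hypothetical per-task losses on very different numerical scales.
losses = {"steering": 0.02, "speed": 1.5, "segmentation": 0.4}

equal = {"steering": 1.0, "speed": 1.0, "segmentation": 1.0}
rebalanced = {"steering": 50.0, "speed": 1.0, "segmentation": 1.0}

share_equal = loss_shares(losses, equal)["steering"]
share_rebalanced = loss_shares(losses, rebalanced)["steering"]
```

With equal weights the steering task accounts for about one percent of the total loss, while the rebalanced weights raise it to roughly a third. Choosing such weights by hand for every task combination is the tuning burden the item describes.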

Overall, the end-to-end approach to autonomous driving improves system performance and flexibility through a simplified architecture and self-optimization, but it also faces significant challenges in sensor fusion, representation design, interpretability, and more.

4. Discussion

In the development of autonomous driving technology, modular traditional autonomous driving and end-to-end autonomous driving represent two distinct methodologies. Both of them have their advantages and disadvantages in system architecture, computing requirements, performance optimization and interpretability, which are worthy of further discussion.

4.1. Comparison between end-to-end and modular features

(i) Structure comparison: The end-to-end approach simplifies the system architecture by mapping raw sensor data directly to the control signal, reducing the complex feature engineering and sensor fusion required in the modular approach.

(ii) System predictability comparison: Because of clear input and output definitions and deterministic rules between modules, modular systems behave relatively predictably within their known capabilities. In contrast, the performance of end-to-end models based on reinforcement learning is limited in highly dynamic environments, where it is difficult to accurately predict key details in the raw image space.

(iii) Adaptability comparison: Different road and traffic conditions may require different information to be extracted from the environment, and modular systems often struggle to respond flexibly to these changes. In the end-to-end approach, the model is trained on a large amount of data without manually defined rules or intermediate representations, and it can be adjusted automatically to different driving scenarios.

(iv) Interpretability comparison: When errors or unexpected behaviors occur in a modular design, intermediate results between modules can be traced to locate the root cause of the problem, which improves the debugging efficiency of the system. End-to-end models are often seen as "black boxes", which makes it difficult to explain the decisions they make or to trace the sources of errors.

4.2. Analysis and comparison of end-to-end and modular advantages and disadvantages

The traditional modular autonomous driving system builds a highly structured architecture by separating functional modules. Its advantages lie in the independence and replaceability of modules, which facilitates debugging, optimization, and troubleshooting. However, this modular design also brings limitations: reduced information transfer efficiency, limited system performance, and decisions that are only locally optimal.

In contrast, end-to-end autonomous driving takes a more holistic approach, enabling direct mapping from sensor input to control output through techniques such as deep learning. The main advantages of this approach are its potential for global optimization and its faster response times, which help it deal with complex driving environments. Although end-to-end autonomous driving also faces the challenges of poor interpretability, large training data requirements, and difficulty in dealing with extremely rare scenarios, its global optimization and rapid response capabilities give it a clear advantage in handling variable scenarios and making optimal driving decisions.

4.3. Comprehensive evaluation and future development direction

Modular and end-to-end autonomous driving each have their own unique advantages and limitations. From the current development situation, the modular approach is still dominant in many commercial autonomous driving systems because of its better controllability and interpretability. Especially in scenarios where gradual transition and validation is required, a modular approach provides a more robust path.

However, as deep learning technology continues to advance and data-driven approaches continue to improve, end-to-end autonomous driving shows great potential, especially in its ability to handle complex, dynamic scenarios. Therefore, the future direction of development may not be for one approach to completely replace the other, but rather to integrate the two, combining the controllability of the modular approach with the global optimization capabilities of the end-to-end approach to build safer and more efficient autonomous driving systems.

This integration can include adopting a modular design on the overall framework, but introducing an end-to-end learning approach in individual key modules such as perception or decision making, or leveraging data generated by the end-to-end system to enhance the performance of the modular system. Either way, the goal is to improve the system's decision-making ability and response speed in complex environments while ensuring its safety and interpretability.
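One way to picture such an integration is a modular pipeline in which the planning module is learned while a rule-based module retains the final say. The sketch below is purely illustrative: the `Perception` fields, the constant stand-in for a trained planner, and the time-to-collision threshold and braking value are all hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Perception:
    gap_m: float              # distance to the vehicle ahead
    closing_speed_mps: float  # positive when the gap is shrinking

def learned_planner(p):
    """Stand-in for a trained network; returns a proposed acceleration (m/s^2)."""
    return 1.0  # placeholder: always proposes gentle acceleration

def safety_check(p, proposed_accel, min_ttc_s=2.0, max_brake=-6.0):
    """Rule-based module: brake hard if time-to-collision is below the threshold."""
    if p.closing_speed_mps > 0 and p.gap_m / p.closing_speed_mps < min_ttc_s:
        return max_brake, "overridden: TTC below threshold"
    return proposed_accel, "accepted"

def plan(p):
    """Modular pipeline: learned proposal followed by a rule-based check."""
    return safety_check(p, learned_planner(p))

safe_case = plan(Perception(gap_m=50.0, closing_speed_mps=5.0))
critical_case = plan(Perception(gap_m=8.0, closing_speed_mps=5.0))
```

The returned reason string keeps the decision traceable, so the learned component can be replaced or retrained without losing the interpretability of the surrounding framework.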

5. Conclusion

This paper aims to compare and evaluate the overall performance of modular and end-to-end autonomous driving systems. The modular approach, which subdivides the autonomous driving system into independent modules such as perception, positioning, planning, and control, provides a highly structured solution with the advantages of controllability and interpretability, but it suffers from inefficient information transfer between modules and from local optimization. In contrast, end-to-end autonomous driving systems use deep learning to map perception directly to control, demonstrating global optimization capabilities and the potential to handle complex scenarios, but their black-box characteristics and high requirements for training data pose challenges to the safety and interpretability of the system.

In addition, the traditional modular autonomous driving system has good maintainability and interpretability, which makes it suitable for gradual development and verification. Its main limitation is that the coupling between modules may restrict system performance, so it may not achieve global optimization when dealing with complex scenarios. The end-to-end autonomous driving system, by mapping directly from sensor data to control commands, shows strong global optimization capabilities and adaptability to complex scenarios, but its dependence on large amounts of labeled data challenges the safety and reliability of the system. Future research should focus on integrating the modular and end-to-end approaches and explore the possibility of combining modular design with end-to-end learning methods to improve the decision-making ability of the system in complex environments. At the same time, research should continue on improving the interpretability of end-to-end systems to ensure their safety in practical applications, and on improving their training methods to reduce the dependence on large amounts of labeled data and improve performance in special scenarios.

Authors' Contribution

All the authors contributed equally and their names were listed in alphabetical order.

References

[1]. Bin Qiu, Fang Wang. The development trend of China's automobile industry in 2023[J]. Automotive Industry Research, 2023, (01):29.

[2]. Ahmed S K, Mohammed M G, Abdulqadir S O, et al. Road traffic accidental injuries and deaths: A neglected global health issue[J]. Health science reports, 2023, 6(5): e1240.

[3]. Kewei Wang. An Empirical Analysis of the Factors Affecting Traffic Accident Casualties[D]. Shanghai University of Finance and Economics, 2023.

[4]. Park J, Kim D, Huh K. Emergency collision avoidance by steering in critical situations[J]. International journal of automotive technology, 2021, 22(1): 173-184.

[5]. Petrović Đ, Mijailović R, Pešić D. Traffic accidents with autonomous vehicles: type of collisions, manoeuvres and errors of conventional vehicles’ drivers[J]. Transportation research procedia, 2020, 45: 161-168.

[6]. Guoqing Liu, Zhendong Zhao, Jinguo Wu. AEB Simulation Test Based on Comfort[J]. Automobile Applied Technology, 2022, 47(17):2024. DOI:10.16638/j.cnki.16717988.2022.017.004.

[7]. Shihai Cui, Wei Gao, Haiyan Li, Lijuan He, Shijie Ruan, Wenle Lv. Effects of Autonomous Emergency Braking on Brain Injury of Pediatric Occupants in Buses[J]. Journal of Medical Biomechanics, 2023, 38(06):12411247. DOI:10.16156/j.10047220.2023.06.028.

[8]. Zhao Y, Ito D, Mizuno K. AEB effectiveness evaluation based on car-to-cyclist accident reconstructions using video of drive recorder[J]. Traffic injury prevention, 2019, 20(1): 100-106.

[9]. Abi L, Jin D, Zheng S, et al. Dynamic coordinated control strategy of autonomous vehicles during emergency braking under split friction conditions[J]. IET Intelligent Transport Systems, 2021, 15(10): 1215-1227.

[10]. Hu H, Lu Z, Wang Q, et al. End-to-End automated lane-change maneuvering considering driving style using a deep deterministic policy gradient algorithm[J]. Sensors, 2020, 20(18): 5443.

[11]. Tang J, Li L, Ai Y, et al. Improvement of end-to-end automatic driving algorithm based on reinforcement learning[C]//2019 Chinese Automation Congress (CAC). IEEE, 2019: 5086-5091.

[12]. Tampuu A, Matiisen T, Semikin M, et al. A survey of end-to-end driving: Architectures and training methods[J]. IEEE Transactions on Neural Networks and Learning Systems, 2020, 33(4): 1364-1384.

[13]. Grigorescu S, Trasnea B, Cocias T, et al. A survey of deep learning techniques for autonomous driving[J]. Journal of field robotics, 2020, 37(3): 362-386.

[14]. Chen L, Wu P, Chitta K, et al. End-to-end autonomous driving: Challenges and frontiers[J]. arXiv preprint arXiv:2306.16927, 2023.

[15]. Kunz F, Nuss D, Wiest J, et al. Autonomous driving at Ulm University: A modular, robust, and sensor-independent fusion approach[C]//2015 IEEE intelligent vehicles symposium (IV). IEEE, 2015: 666-673.

[16]. Ardelt M, Coester C, Kaempchen N. Highly automated driving on freeways in real traffic using a probabilistic framework[J]. IEEE Transactions on Intelligent Transportation Systems, 2012, 13(4): 1576-1585.

[17]. Teng S, Hu X, Deng P, et al. Motion planning for autonomous driving: The state of the art and future perspectives[J]. IEEE Transactions on Intelligent Vehicles, 2023, 8(6): 3692-3711.

[18]. Zhihui Guo. Research on Hierarchical Conditional Imitation Learning Algorithm for Multi-Scenario Urban Driving[D]. Beijing Jiaotong University, 2022.

[19]. Lü Yanzhi, Chao Wei, Yuanhao He. An End-to-End Lane Change Method for Autonomous Driving Based on GCN and CIL[J]. Automotive Engineering, 2023, 45(12): 2310-2317.

[20]. Cui J, Qiu H, Chen D, et al. Coopernaut: End-to-end driving with cooperative perception for networked vehicles[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2022: 17252-17262.

[21]. Ma Y, Wang T, Bai X, et al. Vision-centric bev perception: A survey[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2024.

[22]. Velázquez J M R, Khoudour L, Saint Pierre G, et al. Analysis of thermal imaging performance under extreme foggy conditions: Applications to autonomous driving[J]. Journal of Imaging, 2022, 8(11).

[23]. Chen Z, Huang X. End-to-end learning for lane keeping of self-driving cars[C]//2017 IEEE intelligent vehicles symposium (IV). IEEE, 2017: 1856-1860.

[24]. Chen L, Wu P, Chitta K, et al. End-to-end autonomous driving: Challenges and frontiers[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2024.

Cite this article

Liu, Z.; Ma, L.; Yu, X.; Zhang, Z. (2024). Traditional Modular and End-to-End Methods in Vehicle Trajectory Planning and Decision Control in Complex Scenario: Review. Applied and Computational Engineering, 111, 35-44.

Data availability

The datasets used and/or analyzed during the current study will be available from the authors upon reasonable request.

Disclaimer/Publisher's Note

The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of EWA Publishing and/or the editor(s). EWA Publishing and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

About volume

Volume title: Proceedings of CONF-MLA 2024 Workshop: Mastering the Art of GANs: Unleashing Creativity with Generative Adversarial Networks

© 2024 by the author(s). Licensee EWA Publishing, Oxford, UK. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license. Authors who publish this series agree to the following terms:

1. Authors retain copyright and grant the series right of first publication with the work simultaneously licensed under a Creative Commons Attribution License that allows others to share the work with an acknowledgment of the work's authorship and initial publication in this series.

2. Authors are able to enter into separate, additional contractual arrangements for the non-exclusive distribution of the series's published version of the work (e.g., post it to an institutional repository or publish it in a book), with an acknowledgment of its initial publication in this series.

3. Authors are permitted and encouraged to post their work online (e.g., in institutional repositories or on their website) prior to and during the submission process, as it can lead to productive exchanges, as well as earlier and greater citation of published work (See Open access policy for details).
