1. Introduction

Environment perception systems for mobile robots play a crucial role in modern automation and intelligent systems. As the technology has advanced, mobile robots have been widely adopted in industrial production and have shown considerable potential in services, healthcare, and agriculture [1]. However, a single sensor has limited perception ability in complex environments and struggles to meet requirements for high precision and reliability. Multimodal sensor fusion can improve the comprehensiveness and accuracy of environmental perception by integrating data from multiple sensors. For example, LiDAR provides high-precision distance information for accurate depth perception, while cameras capture rich visual details such as color and texture. Combining these complementary data types yields a more complete understanding of the environment and supports more accurate environmental modeling and target recognition.

Research on multimodal sensor fusion improves both the autonomous navigation capability and the adaptability of mobile robots in complex environments. In autonomous driving, for example, fusion helps a vehicle cope with changes in lighting, weather, and road conditions, thereby improving driving safety. The technology has also shown promise in agricultural robots: by integrating data from multiple sensors, robots can more accurately identify crop status, soil conditions, and pests and diseases, which supports precision agricultural management. Research on multimodal sensor fusion for mobile robot environment perception therefore has both theoretical and practical significance. Theoretically, it contributes to a deeper understanding of sensor fusion algorithms and data processing techniques and promotes progress in related fields. Practically, it can improve the performance of mobile robots, promote their adoption across industries, and bring economic and social benefits.

Multimodal sensor technology is gradually becoming the foundation of mobile robot environment perception systems. With the development of artificial intelligence and machine learning, its application prospects continue to broaden [2]. Recent research has shown that effectively integrating multiple sensors, such as vision sensors, LiDAR, ultrasonic sensors, and inertial measurement units (IMUs), can significantly enhance the perception ability of robots in complex environments [3]. In dynamic obstacle recognition, for example, multimodal fusion can identify obstacles and predict their motion trajectories more accurately, thereby improving decision-making.

2. Mobile robot environment perception system

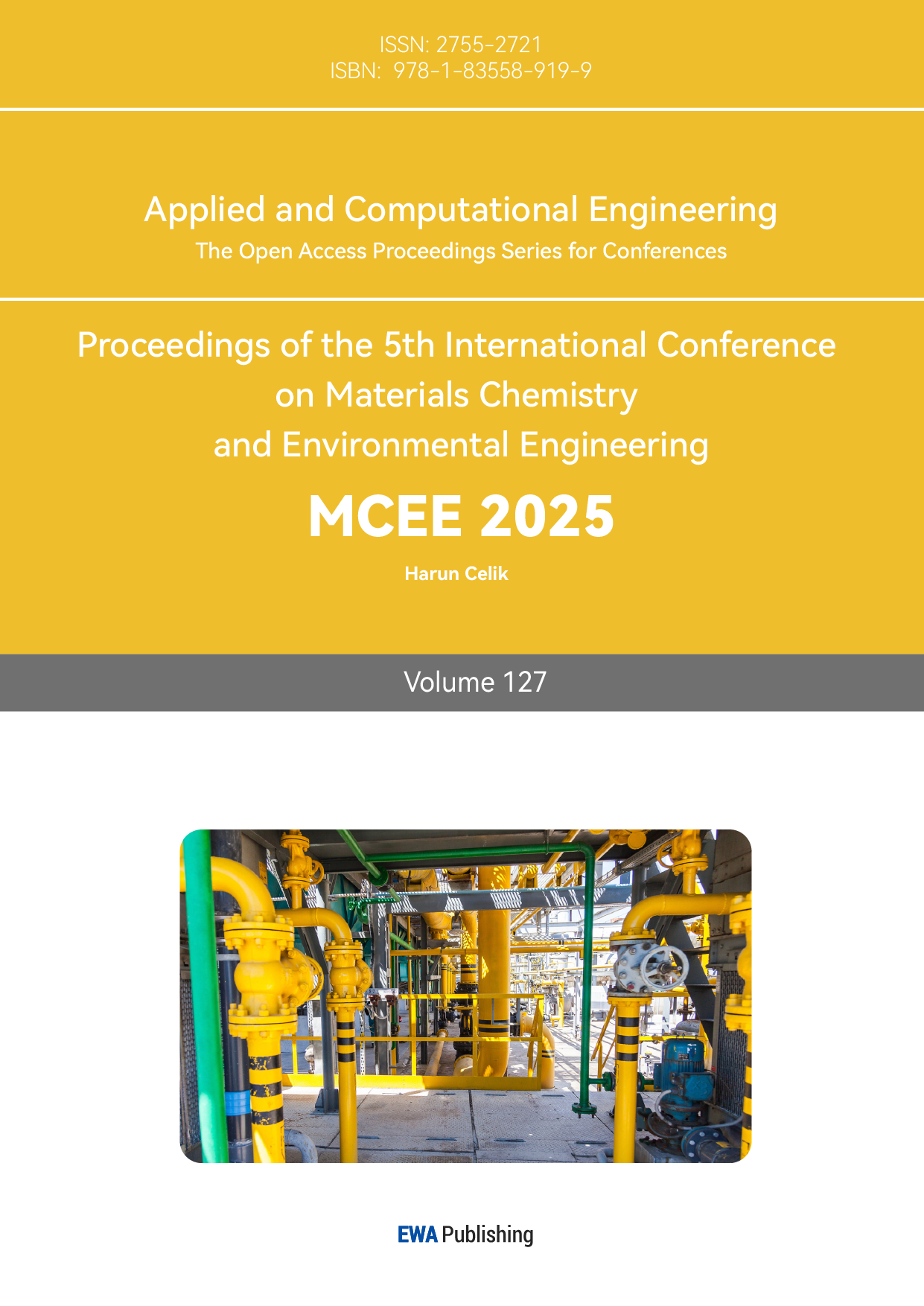

Multimodal sensors are intelligent sensors that can simultaneously acquire multiple forms of data; they mainly include optical, acoustic, mechanical, and chemical sensors [4]. Figure 1 shows the framework of a vehicle-detection algorithm. Each type of sensor has its own functions and application scenarios. For example, optical sensors perform well in sensing environmental lighting, detecting obstacles, and recognizing images, which improves the navigation capability of mobile robots in complex environments; acoustic sensors use the propagation characteristics of sound waves to support target localization and path planning under noisy conditions.

Figure 1: Framework of vehicle-detection algorithm [4]

2.1. Sensor Fusion Technology

Sensor fusion plays a crucial role in the environment perception system of a mobile robot. Its core idea is to integrate data from multiple sensors to obtain more accurate and reliable environmental information, exploiting the complementarity and redundancy of that information to enhance overall system performance [5]. The process mainly comprises data preprocessing, feature extraction, information fusion, and decision making. In current applications, the Extended Kalman Filter (EKF) and the Particle Filter (PF) are two widely used fusion algorithms. The EKF achieves real-time state estimation with few parameters by linearizing a nonlinear system around the current estimate; the PF handles strongly nonlinear systems with non-Gaussian noise and is especially suitable for dynamically changing environments.
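To make the linearization idea behind the EKF concrete, the following is a minimal scalar sketch, not the implementation of any system cited here. It assumes a hypothetical robot moving along a line whose sensor measures the range to a beacon offset sideways by `h`; the function name `ekf_step`, the motion model, and all noise values are illustrative assumptions.

```python
import math


def ekf_step(x, p, u, z, q, r, h=1.0):
    """One predict/update cycle of a scalar Extended Kalman Filter.

    x, p : prior position estimate and its variance
    u    : commanded displacement for this step (assumed linear motion model)
    z    : measured range to a beacon at lateral offset h (nonlinear in x)
    q, r : process and measurement noise variances (assumed known)
    """
    # Predict: propagate the estimate and inflate its uncertainty.
    x_pred = x + u
    p_pred = p + q

    # Linearize the measurement model g(x) = sqrt(x^2 + h^2) at x_pred.
    z_pred = math.hypot(x_pred, h)
    jac = x_pred / z_pred  # dg/dx evaluated at the predicted state

    # Update: blend prediction and measurement using the Kalman gain.
    s = jac * p_pred * jac + r          # innovation variance
    k = p_pred * jac / s                # Kalman gain
    x_new = x_pred + k * (z - z_pred)
    p_new = (1.0 - k * jac) * p_pred
    return x_new, p_new


def run_demo(steps=10):
    """Track a robot that starts at 2.0 m while the filter believes 1.0 m."""
    truth, x, p = 2.0, 1.0, 1.0
    for _ in range(steps):
        truth += 0.5
        z = math.hypot(truth, 1.0)      # noise-free range, for clarity only
        x, p = ekf_step(x, p, 0.5, z, q=0.01, r=0.01)
    return truth, x, p
```

The only step that differs from a linear Kalman filter is the Jacobian `jac`, which replaces the constant measurement matrix; a real system would use vector states and matrix Jacobians.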

2.2. Basic Principles of Mobile Robot Environment Perception

Multimodal sensor fusion has gained significant attention in mobile robot environment perception research because of its role in synthesizing information and analyzing environments [6]. As environments grow more complex, the limitations of a single sensor become increasingly prominent, especially in dynamic and uncertain conditions, and fusion methods based on multimodal sensors have shown significant advantages [7]. Such methods integrate data from different types of sensors to achieve comprehensive perception and understanding of the environment.

The multimodal sensors used in mobile robot applications typically include infrared (IR) sensors, LiDAR, cameras, and ultrasonic sensors, each of which provides distinct information for environmental awareness. For example, LiDAR performs well in obstacle recognition in complex environments thanks to its high-precision distance measurement, while cameras support scene understanding and object recognition by extracting rich visual information through image processing. Each sensor also has limitations, such as a restricted field of view, sensitivity to lighting, and measurement inaccuracy. Multimodal sensor fusion is therefore particularly important: the sensors compensate for one another's deficiencies and together provide more accurate and stable environmental data.

At the core of multimodal sensor information integration lies data fusion, which encompasses methods such as Kalman filters, particle filters, and deep learning [8]. The Kalman filter estimates state variables in real time by optimally combining prior information with observations. Particle filters, in contrast, suit nonlinear and non-Gaussian settings and represent the probability distribution of the state with a set of samples. More recently, deep learning has made it possible to use large datasets for deep feature extraction and pattern recognition on sensor data, giving mobile robots more effective environmental understanding.
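The idea of representing the state by a set of samples can be sketched as a one-dimensional particle filter. This is an illustrative sketch under stated assumptions, not a method taken from the cited works: the function names `particle_filter_step` and `estimate`, the additive motion model, and the Gaussian measurement likelihood are all hypothetical choices.

```python
import math
import random


def particle_filter_step(particles, u, z, motion_std, meas_std, rng):
    """One predict/weight/resample cycle for a 1D position estimate.

    particles  : list of candidate positions (the sample distribution)
    u          : commanded displacement
    z          : position measurement
    motion_std : std. dev. of the assumed motion noise
    meas_std   : std. dev. of the assumed Gaussian measurement noise
    rng        : a random.Random instance, passed in for reproducibility
    """
    # Predict: move every particle and perturb it with motion noise.
    moved = [p + u + rng.gauss(0.0, motion_std) for p in particles]

    # Weight: unnormalized Gaussian likelihood of z for each particle.
    weights = [math.exp(-0.5 * ((z - p) / meas_std) ** 2) for p in moved]
    if sum(weights) == 0.0:
        # Every particle is implausible; fall back to uniform weights.
        weights = [1.0] * len(moved)

    # Resample: draw a new population in proportion to the weights.
    return rng.choices(moved, weights=weights, k=len(moved))


def estimate(particles):
    """Point estimate: the mean of the particle population."""
    return sum(particles) / len(particles)
```

A typical use starts from particles spread over the whole plausible range, for example `[rng.uniform(0.0, 10.0) for _ in range(500)]`, and calls `particle_filter_step` once per measurement. Because no Gaussian shape is imposed on the population, the same loop works for multimodal or skewed distributions, which is the property the text attributes to particle filters.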

Figure 2: Service-oriented robots [9]

In the design of a mobile robot environment perception system, data processing algorithms are combined to achieve rapid information fusion and efficient decision-making [9]. Figure 2 shows examples of service-oriented robots. Data filtering and noise reduction address contaminated and noisy data and improve the reliability of sensor data. For real-time processing and decision-making, fast algorithms keep processing delays within an acceptable range so that the robot can respond promptly in dynamic environments. In multi-robot collaborative tasks, for example, real-time and accurate perception of the environment directly affects collaboration efficiency and the quality of task completion. Optimizing algorithms and accelerating the processing flow has therefore become an important research direction.

Multimodal sensor fusion not only exploits the characteristics of each sensor to improve the accuracy and real-time performance of environmental perception, but also enhances the autonomous decision-making ability of mobile robots in constantly changing environments. These advances have laid a solid foundation for applications including intelligent navigation, autonomous driving, and complex service tasks.

2.3. System architecture and composition

Defining system functions and partitioning modules are key steps in designing an effective environment perception system based on multimodal sensor fusion [10]. The system is divided into modules, each responsible for a specific function, which together achieve comprehensive perception of the environment. These typically include a data acquisition module, a data processing module, and a decision-making module. The data acquisition module collects data from the multimodal sensors (e.g., LiDAR, cameras, and sound sensors) and transmits it to the data processing module. The data processing module fuses information from the different sensors into a more accurate environment model, which supports the decision-making module. The decision-making module uses the processed data for path planning and obstacle avoidance so that the robot can operate safely and efficiently in dynamic environments.
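The three-module partition described above can be sketched as three small functions with narrow interfaces. Everything in this sketch is a hypothetical placeholder: the `Reading` type, the canned sensor values, the plain-mean fusion rule, and the distance thresholds are assumptions chosen only to show how data flows from acquisition to decision.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Reading:
    sensor: str        # hypothetical sensor label, e.g. "lidar"
    distance_m: float  # estimated distance to the nearest obstacle


def acquire() -> List[Reading]:
    """Data acquisition module: a stand-in that returns canned readings."""
    return [
        Reading("lidar", 1.9),
        Reading("camera", 2.3),
        Reading("ultrasonic", 2.0),
    ]


def process(readings: List[Reading]) -> float:
    """Data processing module: fuse readings into one distance estimate.

    A plain mean is used as a placeholder for the real fusion rule.
    """
    if not readings:
        raise ValueError("no sensor readings to fuse")
    return sum(r.distance_m for r in readings) / len(readings)


def decide(distance_m: float, stop_below_m: float = 0.5,
           slow_below_m: float = 2.5) -> str:
    """Decision-making module: map the fused distance to a motion command."""
    if distance_m < stop_below_m:
        return "stop"
    if distance_m < slow_below_m:
        return "slow"
    return "go"


def perception_cycle() -> str:
    """Run one acquisition -> processing -> decision pass."""
    return decide(process(acquire()))
```

Keeping each module behind a single function makes it possible to replace the placeholder fusion rule or the decision thresholds without touching the other two stages.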

In multimodal sensor fusion, a commonly used mathematical model is the weighted average, in which the weights express the relative importance of each sensor's data. Assuming there are \( n \) sensors, where sensor \( i \) has output \( x_{i} \) and weight \( w_{i} \), the fused output can be written as:

\( X=\frac{\sum _{i=1}^{n}{w_{i}}{x_{i}}}{\sum _{i=1}^{n}{w_{i}}} \) (1)

Here, \( X \) is the fused output, and \( w_{i} \) should be adjusted according to the confidence in sensor \( i \) to ensure the overall accuracy and reliability of the system. With reasonable module division and functional design, the system can perceive the environment efficiently and provide the necessary support for the mobile robot.
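Equation (1) translates directly into a few lines of code. The sketch below computes the normalized weighted average; the helper `inverse_variance_weights` illustrates one common way to derive confidence weights (the reciprocal of each sensor's noise variance), which is an assumption of this example rather than a rule stated in the text. The sample values are hypothetical.

```python
from typing import List, Sequence


def fuse_weighted(values: Sequence[float], weights: Sequence[float]) -> float:
    """Fused output X = sum(w_i * x_i) / sum(w_i), as in Equation (1)."""
    if len(values) != len(weights) or not values:
        raise ValueError("values and weights must be non-empty and equal length")
    if any(w < 0 for w in weights):
        raise ValueError("weights must be non-negative")
    total = sum(weights)
    if total == 0:
        raise ValueError("at least one weight must be positive")
    return sum(w * x for w, x in zip(weights, values)) / total


def inverse_variance_weights(variances: Sequence[float]) -> List[float]:
    """Assumed confidence model: lower noise variance means higher weight."""
    if any(v <= 0 for v in variances):
        raise ValueError("variances must be positive")
    return [1.0 / v for v in variances]


# Hypothetical example: LiDAR reads 2.00 m (variance 0.01 m^2),
# a camera depth estimate reads 2.30 m (variance 0.04 m^2).
weights = inverse_variance_weights([0.01, 0.04])
fused = fuse_weighted([2.00, 2.30], weights)
```

With these numbers the LiDAR weight is four times the camera weight, so the fused value lies much closer to the LiDAR reading than to the camera reading.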

Hardware design and selection are crucial steps in developing an environment perception system based on multimodal sensor fusion [11]. The choice of hardware affects not only the performance and stability of the system but also the accuracy and real-time performance of environmental perception. Sensor type, performance, cost, and system scalability must all be considered.

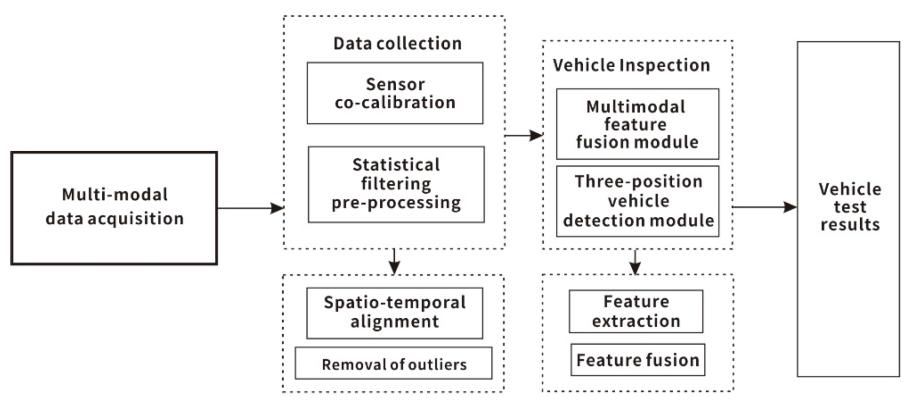

In modern mobile robotics, multimodal sensor fusion has become one of the key research directions [12]. It enhances the perception ability of robots in complex environments and helps ensure accurate and reliable decision-making. Fusion involves the selection and application of various sensors as well as analysis at multiple levels, including data processing, information reconstruction, and feedback mechanisms. On this basis, this article explores the basic principles of multimodal sensor fusion and its importance in mobile robot environment perception [13]. Figure 3 shows image restoration based on generative adversarial networks.

Figure 3: Image restoration based on generative adversarial networks [13]

The design of a mobile robot environment perception system relies on the integration and cooperation of multimodal sensors [14]. For example, integrating data from LiDAR, cameras, and an inertial measurement unit (IMU) can significantly improve a robot's spatial cognition and event discrimination. However, the heterogeneity and redundancy of the data complicate fusion and can reduce the effectiveness of perception. To overcome this problem, algorithms such as the Kalman filter and the particle filter are needed to process and optimize the raw sensor signals, with adaptive methods used to tune the fusion.

Many scholars have proposed theoretical frameworks and application models for the key technologies of multimodal sensor fusion [15]. In combining vision with depth perception, pairing computer vision with visual SLAM (Simultaneous Localization and Mapping) methods has improved the accuracy and real-time performance of environmental modeling. For example, models based on deep learning can extract more complex features and thereby track and understand dynamic environments effectively. Applying machine learning to pattern recognition on sensor data can also improve how quickly and capably a perception system responds to abnormal situations. As an illustration of machine-learning-based classification in a different domain, researchers have proposed using PFAS fingerprints from fish tissue in surface water to classify multiple sources of PFAS, with classification accuracy ranging from 85% to 94% [16].

However, the effectiveness of multimodal sensor fusion in practice does not depend on the algorithm alone. Sensor quality, sensor layout, and the working environment also play crucial roles, so systematic theoretical analysis and experimental verification are essential. In many specific cases, researchers compare different sensor combinations and their fusion results to evaluate the advantages and disadvantages of various methods. For example, targeted experiments on perception tasks under different environmental conditions have shown that selecting sensors sensibly and fusing them with effective algorithms can significantly improve the robustness of environment perception systems. Mobile robot environment perception based on multimodal sensor fusion opens up new avenues for robotics, particularly in autonomous navigation and adaptive decision-making [17]. With further theoretical research and continued accumulation of technology, future environment perception systems will be more intelligent and efficient and will serve a wider range of application scenarios.

2.4. Data Fusion and Real-time Processing Algorithms

Data filtering and noise reduction are crucial in a multimodal sensor fusion-based environment perception system [18]. They aim to improve data quality, ensure information accuracy, and provide a reliable foundation for subsequent environmental understanding and decision-making. Data filtering removes noise from sensor data and makes the useful signal easier to recognize. Common methods include Kalman filtering and median filtering. These techniques weight or select among the raw samples to reduce the impact of random and systematic errors on the final result.
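Median filtering, one of the methods named above, is simple enough to sketch in full. The version below slides an odd-length window over a list of range samples and replaces each sample with the median of its neighbourhood; shrinking the window at the two ends is an implementation choice assumed for this example, and the sample data are hypothetical.

```python
from statistics import median
from typing import List, Sequence


def median_filter(samples: Sequence[float], window: int = 3) -> List[float]:
    """Replace each sample with the median of a centred sliding window.

    The window is truncated at the ends of the sequence, so the output
    has the same length as the input.
    """
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    half = window // 2
    filtered = []
    for i in range(len(samples)):
        lo = max(0, i - half)
        hi = min(len(samples), i + half + 1)
        filtered.append(median(samples[lo:hi]))
    return filtered


# Hypothetical ultrasonic ranges in metres with one spurious spike (9.0).
raw = [1.0, 1.1, 9.0, 1.2, 1.0]
smoothed = median_filter(raw, window=3)
```

Unlike a moving average, the median discards an isolated outlier instead of spreading it across neighbouring samples, which is why it is often preferred for impulsive sensor noise.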

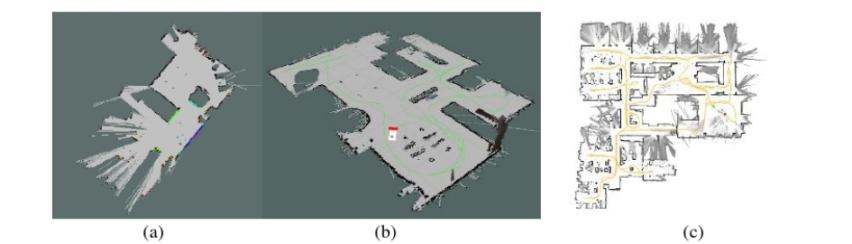

Real-time processing and decision-making mechanisms are core, indispensable components of multimodal sensor fusion-based environment perception systems [19]. A real-time processing framework is the cornerstone of efficient environmental perception, data integration, and rapid decision-making. With a stream processing model, perception data is analyzed as it arrives, enabling robots to respond quickly to changes in dynamic environments [20]. Such a framework lets robots perform high-frequency data acquisition and fusion with multimodal sensors such as LiDAR, infrared sensors, and cameras [21]. Figure 4 shows common laser SLAM methods.
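One practical step in stream-based fusion is pairing samples from sensors that run at different rates. The text does not specify how this is done, so the following is only a sketch of one common approach, nearest-timestamp matching within a tolerance. The function name `pair_by_timestamp`, the tuple layout, and the 20 ms tolerance are assumptions, and sorted lists stand in for live streams.

```python
from typing import Iterator, List, Tuple

Sample = Tuple[float, str]  # (timestamp in seconds, payload)


def pair_by_timestamp(lidar: List[Sample], camera: List[Sample],
                      tolerance_s: float = 0.02
                      ) -> Iterator[Tuple[float, str, str]]:
    """Yield (lidar_time, lidar_payload, camera_payload) for close samples.

    Both inputs must be sorted by timestamp. For each LiDAR sample the
    nearest camera sample is chosen; the pair is emitted only if the two
    timestamps differ by at most tolerance_s, otherwise it is dropped.
    """
    j = 0
    for t_lidar, payload in lidar:
        # Advance while the next camera sample is at least as close in time.
        while (j + 1 < len(camera)
               and abs(camera[j + 1][0] - t_lidar)
               <= abs(camera[j][0] - t_lidar)):
            j += 1
        if camera and abs(camera[j][0] - t_lidar) <= tolerance_s:
            yield (t_lidar, payload, camera[j][1])


# Hypothetical streams: LiDAR at 10 Hz, camera at an irregular rate.
lidar_stream = [(0.00, "L0"), (0.10, "L1"), (0.20, "L2"), (0.30, "L3")]
camera_stream = [(0.01, "C0"), (0.09, "C1"), (0.15, "C2"), (0.21, "C3")]
pairs = list(pair_by_timestamp(lidar_stream, camera_stream))
```

Because the camera index only moves forward, each sample is visited once, which keeps the per-sample cost constant and suits high-frequency data. The last LiDAR sample has no camera frame within tolerance and is therefore not paired.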

Figure 4: Common laser SLAM. (a) Gmapping; (b) Hector; (c) Cartographer [20]

In modern mobile robot environment perception systems, optimizing data fusion and real-time processing algorithms is crucial for overall system performance. Multimodal sensors such as LiDAR, cameras, and IMUs generate large amounts of heterogeneous data, so the complexity and real-time performance of the processing algorithms directly affect robot decision-making and behavior. An inefficient algorithm delays data processing, which degrades the robot's real-time response and its adaptability to the environment.

In the rapidly developing field of intelligent mobile robots, the effective application of real-time data processing and fusion algorithms is the core of environment perception systems. To better understand the practical implications of real-time processing in mobile robot systems, this paper examines a specific case study and explores the success factors, challenges, and research insights derived from a real-time processing implementation.

3. Conclusion

Amid rapid technological development, multimodal sensor fusion has become increasingly important and plays a crucial role in the environment perception systems of mobile robots. By integrating data from different types of sensors, mobile robots can build more comprehensive and accurate environmental models in complex and dynamic environments, improving both perception and decision-making. This paper discusses the necessity of multimodal sensor fusion and its applications in robot navigation, obstacle recognition, and path planning, and emphasizes the complementarity and synergy between different sensors. Existing technologies face the challenges of high integration complexity and strict real-time requirements. To address them, solutions built on algorithms such as deep learning and Kalman filtering have been proposed to improve the real-time performance, reliability, and effectiveness of data processing. The SWOT (Strengths, Weaknesses, Opportunities, and Threats) analysis framework has been employed to systematically evaluate the merits, limitations, and future prospects of multimodal sensor fusion, providing strategic insights for future research directions. This study also analyzes hardware selection and design for mobile robot environment perception systems, emphasizing how diverse application scenarios affect sensor performance and how thoughtful hardware design improves overall system performance. Finally, this article considers the optimization of real-time data processing and decision-making mechanisms and how adaptive filtering and dynamic adjustment strategies can enhance a system's adaptability and response speed in complex environments.
The case analysis further supports the theoretical framework and verifies, through practical applications, the effectiveness of multimodal sensor fusion in improving navigation accuracy and obstacle avoidance, providing practical experience and lessons for future research. Continued exploration and optimization of multimodal sensor fusion will not only enable intelligent environmental perception in mobile robots but also promote technological progress and wider adoption in related fields such as intelligent transportation and service robots. In-depth research on multimodal sensor fusion is therefore both a necessary response to current technological challenges and an important direction for the sustainable development of mobile robotics, laying the foundation for raising the intelligence level of robot operations. It is hoped that the field will achieve more intelligent and efficient practical applications in the future, such as advanced autonomous navigation in urban environments or precise object manipulation in complex industrial settings.

References

[1]. Quan, Z., Lai, Y., Yu, H., Zhang, R., Jing, X., & Luo, L. (2023). Multi-modal fusion for millimeter-wave communication systems: A spatio-temporal enabled approach. Neurocomputing. https://doi.org/10.48550/arXiv.2212.05265

[2]. Reily, B., Reardon, C., & Zhang, H. (2021). Multi-Modal Sensor Fusion and Selection for Enhanced Situational Awareness. In Proceedings of Virtual, Augmented, and Mixed Reality Technology for Multi-domain Operations II (Vol. 11759). https://doi.org/10.1117/12.2587985

[3]. Zhang, K., Cui, H., & Yan, X. (2024). Simultaneous localization and mapping of mobile robots with multi-sensor fusion. Applied Mathematics and Nonlinear Sciences, 9(1). https://doi.org/10.2478/amns.2023.2.00488

[4]. Wang, Y., Liu, H., & Chen, N. (2022). Vehicle Detection for Unmanned Systems Based on Multimodal Feature Fusion. Applied Sciences, 12(12), 6198. https://doi.org/10.3390/app12126198

[5]. Tu, D., Wang, E., & Yu, Y. (2024). Design and Implementation of Intelligent Fire Monitoring System Based on Multi-Sensor Data Fusion. Applied Mathematics and Nonlinear Sciences, 9(1). https://doi.org/10.2478/amns.2023.2.01715

[6]. Li, J., Zhang, Q., Chen, H., Li, J., Wang, L., & Pei, E. (2023). Continuous emotion recognition based on perceptual resampling and multimodal fusion. Computer Application Research, 40(12), 3816-3820. https://doi.org/10.19734/j.issn.1001-3695.2023.04.0217

[7]. Luo, Y. (2023). Research on Water Environment Quality Monitoring Based on Multimodal Information Fusion. Environment and Development, 35(5), 67-72. https://doi.org/10.16647/j.cnki.cn15-1369/X.2023.05.010

[8]. Kalamkar, S., & Mary, A. G. (2023). Multimodal image fusion: A systematic review. Decision Analytics Journal, 9. https://doi.org/10.1016/J.DAJOUR.2023.100327

[9]. Wang, L. (2023). Design and Implementation of Autonomous Navigation System for Mobile Robots Based on Multi Sensor Fusion [Doctoral dissertation, Shanghai Institute of Technology]. https://doi.org/10.27801/d.cnki.gshyy.2023.000465

[10]. Wu, S., & Ma, J. (2023). Multi task and Multi modal Emotion Analysis Model Based on Perception Fusion. Data Analysis and Knowledge Discovery, 7(10), 74-84.

[11]. Long, Y., Ding, M., Lin, G., Liu, H., & Zeng, B. (2021). Multimodal Emotion Recognition Based on Audiovisual Perception System. Computer System Application, 30(12), 218-225. https://doi.org/10.15888/j.cnki.csa.008235

[12]. Bai, R., Ju, Z., Zhang, Y., Zhang, Y., & Feng, M. (2023). Research on Multi source and Multi modal Data Fusion Method for Intelligence Perception. Intelligence Journal, 42(10), 124-131.

[13]. Han, X. (2021). Accurate 3D reconstruction of natural environment based on multimodal perception fusion [Doctoral dissertation, Nankai University]. https://doi.org/10.27254/d.cnki.gnkau.2021.000173

[14]. Qi, H. (2023). Research on 3D Environment Perception Algorithm Based on Multimodal Fusion [Doctoral dissertation, Anhui University of Engineering]. https://doi.org/10.27763/d.cnki.gahgc.2023.000308

[15]. Xu, Z. (2022). Research on Multimodal Sensor Fusion Technology [Doctoral dissertation, University of Electronic Science and Technology of China]. https://doi.org/10.27005/d.cnki.gdzku.2022.003803

[16]. Liu, S., Zhang, B., & Li, X. (2024). Research progress on the application of machine learning in environmental analysis and detection. Journal of Analytical Testing, 43(8), 1105-1116.

[17]. Dai, M. (2023). Research on Multi Sensor Fusion SLAM System for Mobile Robots [Doctoral dissertation, Beijing University of Posts and Telecommunications]. https://doi.org/10.26969/d.cnki.gbydu.2023.002056

[18]. Yu, Z., Song, G., Jiang, Z., Li, W., & Mao, Y. (2023). Analysis of wear and tear of titanium alloy machining tools based on multimodal perception fusion. Manufacturing Technology and Machine Tools, 5, 57-63. https://doi.org/10.19287/j.mtmt.1005-2402.2023.05.007

[19]. Li, J. (2022). Research on Autonomous Navigation System for Mobile Robots Based on Multi Sensor Fusion [Doctoral dissertation, Beijing University of Posts and Telecommunications]. https://doi.org/10.26969/d.cnki.gbydu.2022.002565

[20]. Li, X., & Fang, X. (2021). Multistream Sensor Fusion-Based Prognostics Model for Systems Under Multiple Operational Conditions. https://doi.org/10.1115/MSEC2021-62348

[21]. Yang, W. (2023). Research on SLAM Algorithm for Mobile Robots Based on Multi Sensor Fusion [Doctoral dissertation, University of Electronic Science and Technology of China]. https://doi.org/10.27005/d.cnki.gdzku.2023.002868

Cite this article

Wang, X. (2025). Mobile Robot Environment Perception System Based on Multimodal Sensor Fusion. Applied and Computational Engineering, 127, 42-49.

Data availability

The datasets used and/or analyzed during the current study will be available from the authors upon reasonable request.

About volume

Volume title: Proceedings of the 5th International Conference on Materials Chemistry and Environmental Engineering

© 2024 by the author(s). Licensee EWA Publishing, Oxford, UK. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.
