1. Introduction

Robot vision is an important branch of artificial intelligence. It enables robots to simulate the visual function of the human eye. Through robot vision, robots obtain visual information from observed objects and digitize it for detection and control. A robot vision system consists mainly of optical lighting, an imaging system, and visual information processing, and draws on image processing, artificial intelligence, pattern recognition, and other disciplines.

Robot vision has a wide range of applications. In industry, it can be used for quality inspection, object sorting, and assembly line automation. In agriculture, it can be used for crop monitoring, automatic harvesting, and disease and pest detection, which can significantly improve the efficiency and precision of agricultural production.

In transportation, robot vision can be used for vehicle detection, traffic flow analysis, and pedestrian detection, which can improve road safety and traffic efficiency. In the medical industry, its applications include medical image analysis, surgical navigation, and rehabilitation aids, which can assist doctors in making more accurate diagnoses and providing more effective treatments. In the service industry, robot vision can be used for navigation, obstacle avoidance, and object recognition to improve the autonomy and interaction ability of service robots.

2. Adaptive imaging technology of robot vision sensor

The Oxford English Dictionary defines a robot as a machine capable of automatically performing a complex series of actions, so the core of a robot is action. A robot that acts must be able to perceive, and robot vision is its main source of perception. RGBD cameras have revolutionized robot vision by providing direct geometric perception. They can be viewed in two ways. Externally, they extend the classical RGB image, from which geometry must be inferred, with a directly measured depth profile of the scene. Internally, they are vision sensors that combine a traditional camera with structured light.
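To make "direct geometric perception" concrete, the following minimal sketch back-projects a depth image into 3D points with the standard pinhole camera model. The function name, the intrinsics (`fx`, `fy`, `cx`, `cy`), and the tiny depth image are illustrative assumptions, not values from any particular sensor.

```python
from typing import List, Tuple

Point3D = Tuple[float, float, float]


def backproject(depth: List[List[float]], fx: float, fy: float,
                cx: float, cy: float) -> List[Point3D]:
    """Convert a depth image (metres) into camera-frame 3D points.

    Pixels with depth <= 0 are treated as invalid and skipped.
    """
    points: List[Point3D] = []
    for v, row in enumerate(depth):      # v: pixel row
        for u, z in enumerate(row):      # u: pixel column
            if z <= 0.0:
                continue
            x = (u - cx) * z / fx        # pinhole model, horizontal axis
            y = (v - cy) * z / fy        # pinhole model, vertical axis
            points.append((x, y, z))
    return points


# Hypothetical 2x2 depth image and intrinsics, for illustration only.
depth_image = [[1.0, 0.0],
               [2.0, 2.0]]
cloud = backproject(depth_image, fx=1.0, fy=1.0, cx=0.5, cy=0.5)
```

With a plain RGB image the depth `z` would have to be estimated first; an RGBD camera supplies it per pixel, so the geometry follows from a few multiplications.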

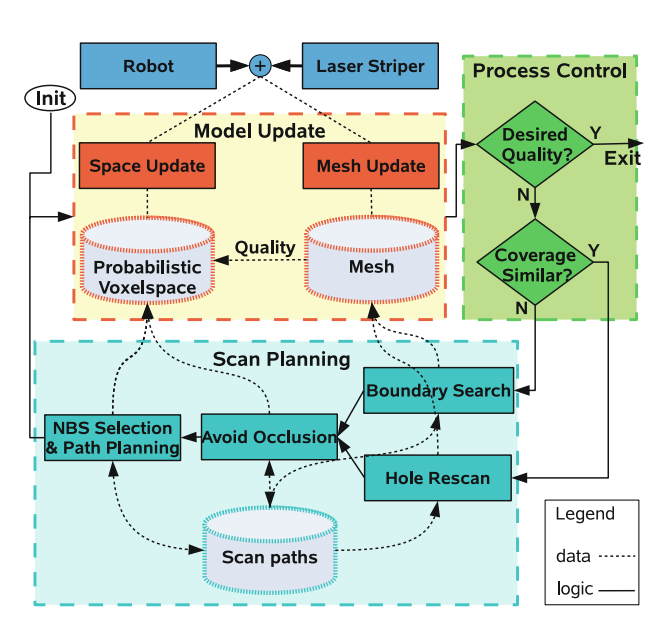

Stückler et al. build a 3D model with a moving RGBD camera and label its parts with object classes such as ground and people. They propose a method for learning semantic maps from moving RGBD cameras in real time. The method models the geometry, appearance, and semantic labels of surfaces, recovers camera poses using simultaneous localization and mapping (SLAM), and recognizes object classes in segmented images. This approach is particularly useful because robots performing complex tasks in unstructured environments need to classify surfaces semantically. Their semantic mapping system uses depth to achieve scale invariance and to improve the overall segmentation quality of the maps. They also evaluate the runtime and recognition performance of the object segmentation method; the evaluation demonstrates the benefit of integrating recognition from multiple views in 3D maps [1]. Kriegel et al. focus on objects in the robot's environment, using a robot and a 3D sensor for autonomous surface reconstruction of small-scale objects. Their approach obtains high-quality 3D surface models quickly by generating next-best-scan (NBS) candidates, applying selection criteria, minimizing the error between scan patches, and applying termination criteria; the selected scan maximizes a utility function that combines an exploration component and a mesh-quality component [2] (Figure 1).

Figure 1: Overview of autonomous 3D modeling process [1]
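The scan-selection step described above can be sketched as choosing the candidate with the highest utility. The weighted sum, the default weight, and the candidate scores below are illustrative assumptions; the exact utility function used in [2] may differ.

```python
from typing import List, NamedTuple


class ScanCandidate(NamedTuple):
    name: str
    exploration: float   # hypothetical score in [0, 1]: new surface expected
    mesh_quality: float  # hypothetical score in [0, 1]: expected quality gain


def utility(c: ScanCandidate, weight: float = 0.5) -> float:
    """Assumed utility: weighted sum of exploration and mesh-quality terms."""
    return weight * c.exploration + (1.0 - weight) * c.mesh_quality


def next_best_scan(candidates: List[ScanCandidate],
                   weight: float = 0.5) -> ScanCandidate:
    """Return the candidate that maximizes the assumed utility."""
    if not candidates:
        raise ValueError("no scan candidates available")
    return max(candidates, key=lambda c: utility(c, weight))


# Hypothetical candidates, for illustration only.
candidates = [
    ScanCandidate("left", exploration=0.75, mesh_quality=0.25),
    ScanCandidate("top", exploration=0.5, mesh_quality=1.0),
    ScanCandidate("right", exploration=0.25, mesh_quality=0.5),
]
best = next_best_scan(candidates)
```

Raising `weight` favours exploring unseen surface, while lowering it favours refining regions that are already scanned.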

The paper "Delayed fusion for real-time vision-aided inertial navigation" discusses the delay caused by real-time image processing and feature tracking in a vision-augmented inertial navigation system. The authors simulate the system with three different delayed-fusion methods and show that, by the time the computer has processed an image, the moving robot has already travelled beyond the position that image reflects. Accounting for this delay helps address the localization problem in real-time navigation systems [3].
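The effect of processing delay can be illustrated with simple arithmetic. The sketch below is not one of the three delayed-fusion methods compared in [3]; it assumes one-dimensional motion at constant speed, and all names and numbers are hypothetical.

```python
def delay_induced_offset(speed_m_s: float, latency_s: float) -> float:
    """Distance travelled while the image was being processed (constant speed)."""
    return speed_m_s * latency_s


def compensate(delayed_position_m: float, speed_m_s: float,
               latency_s: float) -> float:
    """Propagate a delayed 1D position fix forward to the current time."""
    return delayed_position_m + delay_induced_offset(speed_m_s, latency_s)


# A robot moving at 2 m/s with 0.25 s of image-processing latency.
offset = delay_induced_offset(2.0, 0.25)
current_position = compensate(10.0, 2.0, 0.25)
```

Here the vision fix lags the robot by half a metre, which is why a delayed measurement that is fused as if it were current degrades the estimate.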

The following two studies address how robots perceive and identify the objects they need to interact with. Orts-Escolano et al. propose hardware-accelerated (GPGPU-based) 3D feature extraction and object recognition to speed up the entire RGBD computer vision pipeline [4]. Wang et al. discuss textured and textureless object recognition and pose estimation using RGB-D images. They propose a new global object descriptor, the Viewpoint oriented Color-Shape Histogram (VCSH), which combines the color and shape features of 3D objects. The descriptor is used efficiently in a real-time textured/textureless object recognition and 6D pose estimation system, and is also applied to object localization in semantic maps [5].

2.1. Adaptive imaging technology of robot vision sensor

The adaptive imaging technology of robot vision sensors dynamically adjusts imaging parameters based on environmental changes, resulting in clearer and more accurate images.
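As a minimal sketch of such dynamic adjustment, the loop below rescales the exposure time so that the mean image brightness approaches a target value. It assumes an 8-bit grayscale image and brightness roughly proportional to exposure time; the function names, the target, and the limits are illustrative, not part of any specific camera API.

```python
from typing import List


def mean_brightness(image: List[List[int]]) -> float:
    """Average pixel value of an 8-bit grayscale image."""
    pixels = [p for row in image for p in row]
    return sum(pixels) / len(pixels)


def adjust_exposure(exposure_ms: float, image: List[List[int]],
                    target: float = 128.0,
                    min_ms: float = 0.1, max_ms: float = 100.0) -> float:
    """Scale exposure toward the target brightness and clamp to limits.

    Assumes brightness is roughly proportional to exposure time.
    """
    measured = max(mean_brightness(image), 1.0)  # avoid division by zero
    proposed = exposure_ms * target / measured
    return min(max(proposed, min_ms), max_ms)


# A dark frame captured at 10 ms: the controller asks for a longer exposure.
dark_frame = [[32, 32], [32, 32]]
new_exposure = adjust_exposure(10.0, dark_frame)
```

In practice the update would run once per frame, and gain or aperture could be adjusted in the same way.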

This technology has applications in several fields, including industrial testing, medical diagnostics, autonomous driving, and more.

The key components of adaptive imaging technology are optical illumination, the imaging system, and visual information processing. The optical lighting system provides a stable light source and reduces interference from ambient light; the imaging system captures images; and visual information processing analyzes and interprets the images to extract useful information.

Among adaptive imaging techniques, one of the most important is adaptive optics (AO). Adaptive optics corrects dynamic optical wavefront errors: it improves image quality by adjusting deformable mirrors or other optical elements in the optical system to compensate for wavefront distortion caused by atmospheric disturbance or other factors.
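The correction principle can be sketched as a closed-loop integrator, a common simple control scheme for AO. The example assumes a static aberration, one mirror actuator per wavefront sample, and noise-free measurements; the function name and parameters are hypothetical.

```python
from typing import List


def ao_closed_loop(aberration: List[float], gain: float = 0.5,
                   iterations: int = 20) -> List[float]:
    """Run a simple integrator control loop and return the residual wavefront.

    Each list entry is the wavefront error at one actuator position; the
    mirror shape is added to the incoming aberration.
    """
    mirror = [0.0] * len(aberration)
    for _ in range(iterations):
        # The wavefront sensor measures what is left after correction.
        residual = [a + m for a, m in zip(aberration, mirror)]
        # Integrator: move each actuator against the measured residual.
        mirror = [m - gain * r for m, r in zip(mirror, residual)]
    return [a + m for a, m in zip(aberration, mirror)]


# Hypothetical three-sample wavefront error, for illustration only.
residual = ao_closed_loop([1.0, -0.5, 0.25])
```

Under these assumptions each iteration shrinks the residual by a factor of `1 - gain`, so the mirror shape converges to the negative of the aberration.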

Salter and Martin's article "Adaptive optics in laser processing" discusses the application of adaptive optics in laser processing. Adaptive optics is a valuable tool that provides enhanced functionality and flexibility for laser processing in a variety of systems. With a single adaptive element, it is possible to correct aberrations introduced when focusing inside the workpiece. The same element can also tailor the focal intensity distribution to specific manufacturing tasks and provide parallelization to reduce processing time.

This is particularly promising for applications that use ultrafast lasers for 3D manufacturing. The article also reviews the latest developments in adaptive laser processing, including methods and applications, and discusses future prospects.

The paper "Vision science and adaptive optics, the state of the field" [6] discusses the application and development of AO in vision science. It shows that AO gives researchers more precise control of visual stimuli, higher-resolution retinal imaging, and the ability to directly measure retinal chemical and physiological responses, and that AO is expected to become an important tool for scientific research.

2.2. The future of adaptive imaging technology of robot vision sensor

The paper "Real-Time and Resource-Efficient Multi-Scale Adaptive Robotics Vision for Underwater Object Detection and Domain Generalization" describes how to give underwater vehicles accuracy and adaptability in the face of complex environments and varying lighting conditions [7]. It presents MARS, an underwater target detection method whose detection architecture aims to improve detection accuracy and adaptability across different underwater domains. ROV vision is critical for marine safety and environmental protection: it helps identify underwater hazards, facilitates the repair of underwater infrastructure, detects debris and pollutants, and aids in protecting marine life. The study used the MARS model architecture to augment training data, addressing the performance degradation caused by underwater domain shift. While this approach improves the effectiveness of target detection models in real-world scenarios, ensuring adequate and representative target data remains a persistent challenge.
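Training-data augmentation for changing underwater conditions can be illustrated with a generic color and brightness perturbation. This is not the MARS procedure from [7]; it is a hypothetical sketch that relies only on the fact that water absorbs red light most strongly, and all names and parameter ranges are assumptions.

```python
import random
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), 8-bit
Image = List[List[Pixel]]


def underwater_tint(image: Image, red_scale: float,
                    brightness: float) -> Image:
    """Attenuate red and scale overall brightness, clamping to 0..255."""
    def clamp(value: float) -> int:
        return max(0, min(255, int(round(value))))

    return [[(clamp(r * red_scale * brightness),
              clamp(g * brightness),
              clamp(b * brightness)) for (r, g, b) in row]
            for row in image]


def augment(image: Image, rng: random.Random, copies: int = 3) -> List[Image]:
    """Generate randomly tinted variants of one training image."""
    return [underwater_tint(image,
                            red_scale=rng.uniform(0.3, 0.9),
                            brightness=rng.uniform(0.6, 1.2))
            for _ in range(copies)]


# Hypothetical one-pixel image, for illustration only.
sample = [[(200, 100, 50)]]
variants = augment(sample, random.Random(0))
```

Each variant simulates a different water color and lighting level, so a detector trained on them sees a wider range of conditions than the original data covers.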

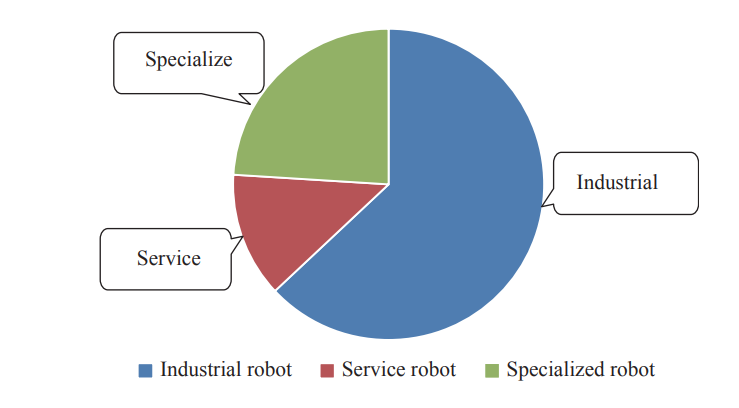

Wang et al. review current research and future trends in intelligent robots [8]. They highlight the explosive growth of the global robot market, driven by industrial reform, changing social needs, and technological advances. Intelligent robots play a crucial role in industrial transformation, both within traditional manufacturing and in accelerating innovation in the process. The global robot market size is shown in Figure 2.

Figure 2: Global robot market size in 2017 [2]

According to the review, the new generation of intelligent robots will feature connectivity, virtual-physical integration, software definition, and human-machine integration. Specifically, such robots collect data through multiple sensors and upload it to the cloud for initial processing, which enables information sharing. Virtual signals and physical devices are deeply integrated, forming a closed loop of data collection, processing, analysis, feedback, and execution, and realizing a "real-virtual-real" transformation. Intelligent algorithms for large-scale data analysis rely on capable software applications, which pushes the new generation of intelligent robots toward a software-oriented, content-based, platform- and API-focused design. Robots can cooperate with humans on the basis of images and videos, and the review suggests that they may even read human mental activity and communicate emotions through deep learning. The review also notes that China is the world's largest robot market. With the promotion of the national strategy and the development of the industrial chain, more and more organizations and individuals are participating in building the robot industry, laying a good foundation for its healthy development.

Adaptive imaging technology enhances robot vision system performance by providing high-quality images in complex environments through dynamic parameter adjustment. With the continuous progress of technology, adaptive imaging technology is expected to be applied in more fields in the future.

3. Conclusion

The work of Stückler et al. verifies the advantages of multi-view recognition in 3D maps, and delayed fusion improves navigation accuracy. The RGBD mapping approach of Stückler et al. is particularly useful because robots performing complex tasks in unstructured environments need to classify surfaces semantically, while the approach of Kriegel et al. selects scans that maximize a utility function combining exploration and mesh-quality components, which yields high-quality models. The research of Stückler et al. indicates that the ability of robots to perceive and recognize objects has matured. Meanwhile, adaptive imaging technology allows robots to dynamically adjust imaging parameters according to environmental changes and so obtain clearer and more accurate images. Its applications are broad: it can support human exploration of unknown areas such as the seabed and can also contribute to medical treatment. Adaptive imaging for robots is likely to remain a focus of future research, because it can help solve problems in areas that were previously unknown or out of reach. From this point of view, its future is very bright.

References

[1]. Stückler, J., Waldvogel, B., Schulz, H., & Behnke, S. (2015). Dense real-time mapping of object-class semantics from RGB-D video. Journal of Real-Time Image Processing, 10(4), 599-609. https://doi.org/10.1007/s11554-013-0379-5

[2]. Kriegel, S., Rink, C., Bodenmüller, T., & Suppa, M. (2015). Efficient next-best-scan planning for autonomous 3D surface reconstruction of unknown objects. Journal of Real-Time Image Processing, 10(4), 611-631. https://doi.org/10.1007/s11554-013-0386-6

[3]. Asadi, E., & Bottasso, C. L. (2015). Delayed fusion for real-time vision-aided inertial navigation. Journal of Real-Time Image Processing, 10(4), 633-646. https://doi.org/10.1007/s11554-013-0376-8

[4]. Orts-Escolano, S., Morell, V., Garcia-Rodriguez, J., & Cazorla, M. (2015). Real-time 3D semi-local surface patch extraction using GPGPU. Journal of Real-Time Image Processing, 10(4), 647-666. https://doi.org/10.1007/s11554-013-0385-7

[5]. Wang, W., Chen, L., Liu, Z., Kühnlenz, K., & Burschka, D. (2015). Textured/textureless object recognition and pose estimation using RGB-D image. Journal of Real-Time Image Processing, 10(4), 667-682. https://doi.org/10.1007/s11554-013-0380-z

[6]. Marcos, S., Werner, J. S., Burns, S. A., Merigan, W. H., Artal, P., Atchison, D. A., & Zhang, Y. (2017). Vision science and adaptive optics, the state of the field. Vision Research, 132, 3-33. https://doi.org/10.1016/j.visres.2017.01.006

[7]. Saoud, L. S., Niu, Z., Seneviratne, L., & Hussain, I. (2024). Real-Time and Resource-Efficient Multi-Scale Adaptive Robotics Vision for Underwater Object Detection and Domain Generalization. In Proceedings of the IEEE International Conference on Image Processing (pp. 3917-3923).

[8]. Wang, T. M., Tao, Y., & Liu, H. (2018). Current Researches and Future Development Trend of Intelligent Robot: A Review. Machine Intelligence Research, 15(5), 525-546. https://doi.org/10.1007/s11633-018-1115-1

Cite this article

Zhou, F. (2025). Adaptive Imaging Technology of Robot Vision Sensor. Applied and Computational Engineering, 127, 129-133.

Data availability

The datasets used and/or analyzed during the current study will be available from the authors upon reasonable request.


About volume

Volume title: Proceedings of the 5th International Conference on Materials Chemistry and Environmental Engineering

© 2024 by the author(s). Licensee EWA Publishing, Oxford, UK. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.
