1. Introduction

In recent decades, autonomous navigation robots have been a hot research topic, and a growing number of researchers study mapping and self-navigation of robots in unknown environments [1]; such robots can be widely used in unmanned transportation and logistics. Led by Tesla's research and development, most car manufacturers are rushing to develop autonomous vehicles, in which Simultaneous Localization and Mapping (SLAM) technology is widely used. In this work, SLAM runs on the Robot Operating System (ROS), a highly flexible software framework for creating robot software [2]. SLAM can also be applied in many other areas, such as construction sites, large warehouses, transportation, and urban environments. In large-scale settings, 2D SLAM and localization algorithms have made reliable and computationally efficient localization possible [3]. However, basic 2D SLAM, such as 2D lidar-based SLAM [4], can only solve fundamental problems. The next wave of robotics applications will only be possible with reliable, established methods that rely on reasonably inexpensive RGB-D sensors and cameras [5]. This report uses a virtual machine to simulate autonomous navigation in various environments with the RTAB-Map algorithm and evaluates the performance of this method in intelligent inspection tasks.

2. The construction of the robot

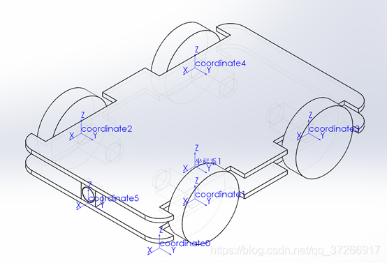

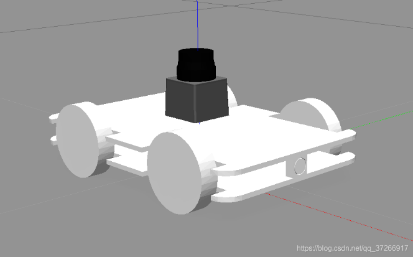

A virtual four-wheeled robot is simulated (Figures 1 and 2). The chassis defines an x-y plane coordinate system whose origin is at the centre of the chassis, with the x-axis pointing toward the front of the robot. Each of the four wheels has its own coordinate system, oriented the same way as the chassis frame, with its origin on the wheel's axle. The robot carries a lidar and an RGB-D camera mounted in the middle of the chassis.

Figure 1. Robot model.

Figure 2. Robot model in the simulation environment.
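The chassis and wheel frames described above are related by a planar rigid-body transform. The sketch below shows how a point given in the chassis frame (for example, a wheel origin) can be expressed in the world frame once the robot pose (x, y, θ) is known. It assumes a ROS-style convention (x forward, y to the left, θ counter-clockwise) and uses made-up wheel offsets, since the robot's dimensions are not given; `chassis_to_world` and `WHEEL_OFFSETS` are hypothetical names.

```python
import math

def chassis_to_world(pose, p_chassis):
    """Express a point given in the chassis frame in the world frame.

    pose: (x, y, theta) of the chassis origin in the world frame, theta in rad.
    p_chassis: (px, py) in the chassis frame (assumed: x forward, y to the left).
    """
    x, y, theta = pose
    px, py = p_chassis
    c, s = math.cos(theta), math.sin(theta)
    # 2D rotation by theta followed by translation to the chassis origin
    return (x + c * px - s * py, y + s * px + c * py)

# Hypothetical wheel-axle origins in the chassis frame (metres);
# the paper does not give the robot's dimensions.
WHEEL_OFFSETS = {
    "front_left": (0.15, 0.12),
    "front_right": (0.15, -0.12),
    "rear_left": (-0.15, 0.12),
    "rear_right": (-0.15, -0.12),
}

pose = (1.0, 2.0, math.pi / 2)  # chassis at (1, 2), facing the world +y direction
wheels_world = {name: chassis_to_world(pose, off) for name, off in WHEEL_OFFSETS.items()}
for name, (wx, wy) in wheels_world.items():
    print(f"{name}: ({wx:.3f}, {wy:.3f})")
```

Because every wheel frame shares the chassis orientation, only the translation offsets differ between wheels; a full simulation model would also add each wheel's spin about its axle.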

3. Method

3.1. RTAB-MAP

This report uses Real-Time Appearance-Based Mapping (RTAB-Map) [6]. First released in 2013, RTAB-Map is still actively maintained and supported [7]. It supports a broad range of input sensors, including stereo, RGB-D, and fisheye cameras, as well as odometry and 2D/3D lidar data. RTAB-Map has long been available in the Robot Operating System (ROS) as an alternative to 2D SLAM [3]. This report uses its RGB-D graph-based SLAM approach, which relies on appearance-based loop closure detection that runs within bounded time, allowing the robot to operate online in long-term, large-scale environments [6].

The RTAB-Map approach has three components: sensor measurement, the front end, and the back end. In the front end, once the sensor data has been processed, geometric features are extracted from successive image frames and used to estimate odometry. The back end addresses the drift that accumulates in this odometry estimate, correcting it with loop closures. Finally, RTAB-Map optimizes the pose graph with the g2o framework and builds 3D maps [8]. The RGB-D SLAM formulation below builds on the keyframe-based real-time localization and reconstruction framework of [9].
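To illustrate how the back end can correct odometry drift with a loop closure, here is a minimal sketch of a one-dimensional pose graph solved by linear least squares. It is a toy model under stated assumptions, not RTAB-Map's or g2o's implementation: poses are scalars, each odometry edge states x_j - x_i ~= delta, the odometry readings are made up with a constant bias, and a single loop-closure edge states that the robot has returned to its start. `optimize_1d_pose_graph` is a hypothetical helper name.

```python
import numpy as np

def optimize_1d_pose_graph(num_poses, edges, prior_weight=100.0):
    """Least-squares solution of a 1D pose graph.

    edges: list of (i, j, delta, weight) constraints meaning x_j - x_i ~= delta.
    A strong prior anchors x_0 at 0 to remove the free translation (gauge).
    """
    rows, rhs = [], []
    anchor = np.zeros(num_poses)
    anchor[0] = prior_weight
    rows.append(anchor)
    rhs.append(0.0)
    for i, j, delta, weight in edges:
        # One weighted linear residual per edge: weight * (x_j - x_i - delta)
        row = np.zeros(num_poses)
        row[j], row[i] = weight, -weight
        rows.append(row)
        rhs.append(weight * delta)
    x, *_ = np.linalg.lstsq(np.vstack(rows), np.array(rhs), rcond=None)
    return x

# Hypothetical odometry: the robot drives +1, +1, -1, -1 m (back to its start),
# but every reading is biased by +0.1 m.
odometry = [1.1, 1.1, -0.9, -0.9]
dead_reckoning = np.concatenate([[0.0], np.cumsum(odometry)])  # final x is 0.4 m
odom_edges = [(k, k + 1, d, 1.0) for k, d in enumerate(odometry)]
loop_closure = [(0, 4, 0.0, 1.0)]  # place recognized: pose 4 coincides with pose 0
optimized = optimize_1d_pose_graph(5, odom_edges + loop_closure)
print(dead_reckoning[-1], optimized[-1])
```

With equal weights, least squares spreads the 0.4 m misclosure evenly over the five edges of the loop, so the final pose moves from 0.4 m to 0.08 m. Real back ends such as g2o solve the nonlinear SE(2)/SE(3) version of this problem iteratively.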

3.2. RGB-D bundle adjustment (BA) algorithm

Bundle adjustment (BA) refines the camera poses and the 3D points they observe by minimizing the 2D reprojection error in the keyframes. The local BA cost function is given by [10]:

\( \varepsilon_{visual}\left( \lbrace P_{j} \rbrace_{j=0}^{N_{c}}, \lbrace Q_{i} \rbrace_{i=0}^{N_{p}} \right) = \sum_{i=0}^{N_{p}} \sum_{j \in A_{i}} \left\| q_{i,j} - \pi\left( K P_{j} Q_{i} \right) \right\|^{2} \quad (1) \)

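\( \varepsilon_{visual} \): visual (2D reprojection) error

\( N_{c} \): number of latest camera poses (keyframes) optimized

\( N_{p} \): number of 3D points observed

\( P_{j} \): pose of frame \( j \)

\( Q_{i} \): the \( i \)-th 3D point

\( q_{i,j} \): observation of 3D point \( Q_{i} \) in frame \( j \)

\( A_{i} \): set of indices of the keyframes that observe \( Q_{i} \)

\( \pi \): perspective projection function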

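The depth term uses the depth measured by the RGB-D sensor. For every pair of keyframes \( j, k \in A_{i} \) with \( k \neq j \), the observation \( q_{i,k} \) is back-projected using its measured depth \( d_{i,k} \), transferred into frame \( j \), and reprojected; the 2D error is measured against \( q_{i,j} \), the observation of the reconstructed 3D point \( Q_{i} \) in frame \( j \) [10]. The resulting cost function is: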

\( \varepsilon_{depth}\left( \lbrace P_{j} \rbrace_{j=0}^{N_{c}} \right) = \sum_{i=0}^{N_{p}} \sum_{j \in A_{i}} \sum_{k \in A_{i},\, k \neq j} \left\| q_{i,j} - \pi\left( K P_{j} P_{k}^{-1} \pi^{-1}\left( q_{i,k}, d_{i,k} \right) \right) \right\|^{2} \quad (2) \)

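\( K \): camera calibration matrix

\( q_{i,k} \): observation of 3D point \( Q_{i} \) in frame \( k \)

\( d_{i,k} \): measured depth of \( q_{i,k} \) in frame \( k \)

\( \pi^{-1} \): back-projection of a pixel with known depth to a 3D point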

The total error minimized in RGBD-BA SLAM is therefore the sum of the two terms [10]:

\( \varepsilon_{visual,depth} = \varepsilon_{visual} + \varepsilon_{depth} \quad (3) \)
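To make Eqs. (1) to (3) concrete, the following NumPy sketch evaluates the three cost terms for given poses and observations; it does not perform the minimization itself. It assumes that each \( P_{j} \) is a 4x4 world-to-camera transform, that \( \pi^{-1} \) back-projects a pixel with its z-depth through \( K^{-1} \), and that observations and depths are stored in dictionaries keyed by (i, j). These representations, the function names, and the synthetic scene are illustrative choices, not the implementation of [10] or RTAB-Map.

```python
import numpy as np

def pi(x_h):
    """Perspective division: homogeneous image point (3,) -> pixel (2,)."""
    return x_h[:2] / x_h[2]

def project(K, P, Q):
    """pi(K P Q): P is a 4x4 world-to-camera pose, Q a 3D world point."""
    q_cam = (P @ np.append(Q, 1.0))[:3]
    return pi(K @ q_cam)

def back_project(K, q, d):
    """pi^{-1}(q, d): pixel q with z-depth d -> homogeneous point in the camera frame."""
    ray = np.linalg.solve(K, np.append(q, 1.0))  # viewing ray with z = 1
    return np.append(d * ray, 1.0)

def visual_error(K, poses, points, obs):
    """Eq. (1): sum of squared reprojection errors; obs[(i, j)] = q_{i,j}."""
    return sum(float(np.sum((q - project(K, poses[j], points[i])) ** 2))
               for (i, j), q in obs.items())

def depth_error(K, poses, obs, depths):
    """Eq. (2): transfer q_{i,k} (with depth d_{i,k}) into frame j and reproject."""
    total = 0.0
    for (i, j), q_ij in obs.items():
        for (i2, k), q_ik in obs.items():
            if i2 != i or k == j:
                continue  # only distinct frames that observe the same point
            X_j = poses[j] @ np.linalg.inv(poses[k]) @ back_project(K, q_ik, depths[(i, k)])
            total += float(np.sum((q_ij - pi(K @ X_j[:3])) ** 2))
    return total

def total_error(K, poses, points, obs, depths):
    """Eq. (3): unweighted sum of the two terms, as written in the text."""
    return visual_error(K, poses, points, obs) + depth_error(K, poses, obs, depths)

# Hypothetical synthetic scene: two frames, the second camera 0.5 m along +x.
K = np.array([[500.0, 0.0, 320.0], [0.0, 500.0, 240.0], [0.0, 0.0, 1.0]])
P1 = np.eye(4)
P2 = np.eye(4)
P2[0, 3] = -0.5  # world-to-camera translation for a camera centred at x = +0.5 m
poses = [P1, P2]
points = [np.array([0.0, 0.0, 4.0]), np.array([1.0, 0.5, 5.0]), np.array([-0.5, -0.2, 3.0])]
obs, depths = {}, {}
for i, Q in enumerate(points):
    for j, P in enumerate(poses):
        obs[(i, j)] = project(K, P, Q)               # noise-free observation q_{i,j}
        depths[(i, j)] = (P @ np.append(Q, 1.0))[2]  # noise-free z-depth d_{i,j}

print(total_error(K, poses, points, obs, depths))  # numerically zero for perfect data
```

With noise-free data both terms vanish. Perturbing a 3D point raises only \( \varepsilon_{visual} \), because \( \varepsilon_{depth} \) depends on the poses and the measured depths rather than on \( Q_{i} \); perturbing a pose raises both terms, which is how the depth term constrains the poses directly.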

4. Environment for Experiments

To evaluate the navigation system more comprehensively, simulations reproducing realistic experimental scenarios were carried out. Several types of warehouse environments were built on a ROS simulation platform. The simulation uses a TurtleBot robot equipped with a single-line (2D) lidar. The computer has an Intel(R) Core(TM) i5-10210U CPU @ 1.60 GHz and runs Ubuntu 18.04 with ROS Melodic.

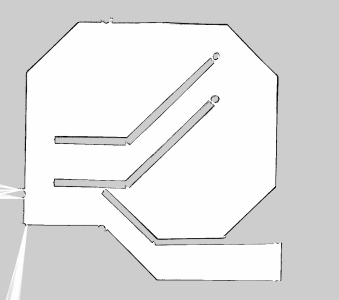

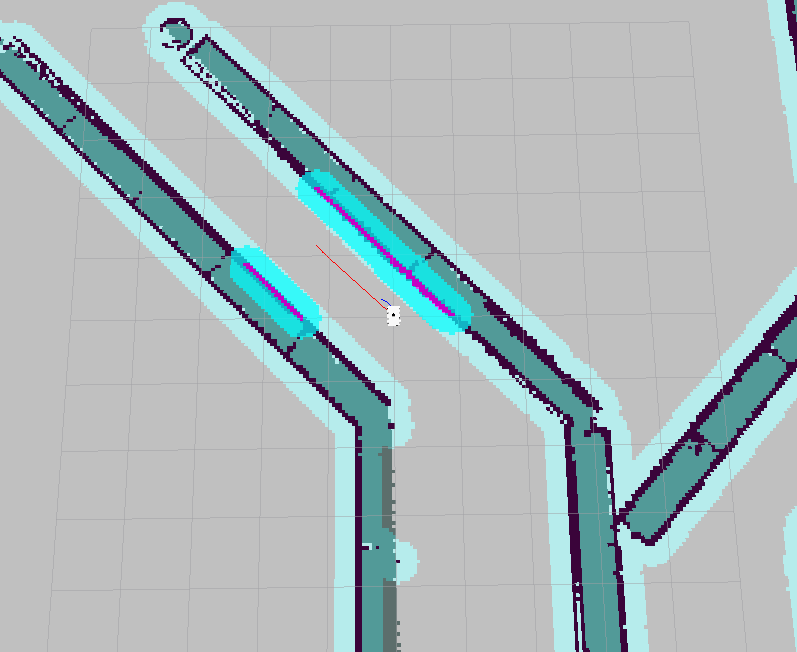

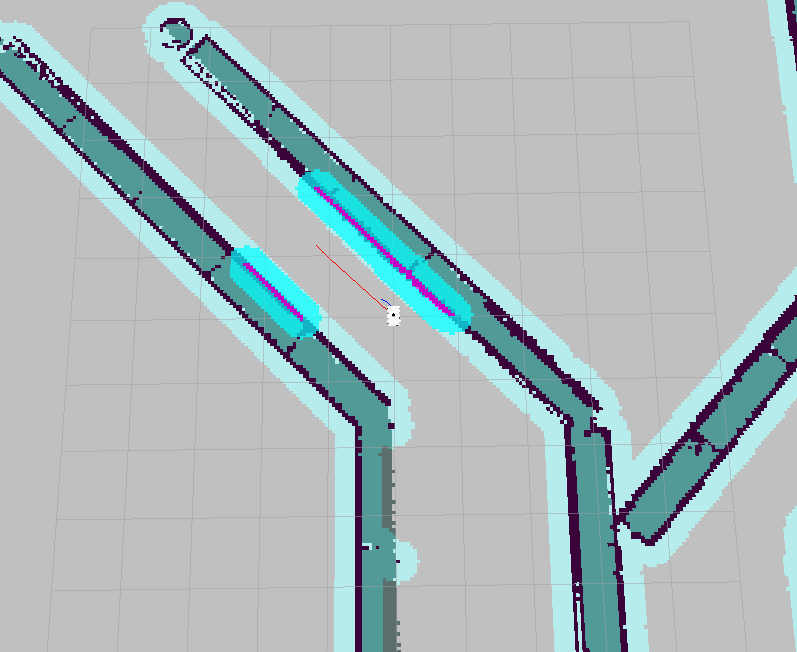

The simulation environment is shown in Figure 2. The map built in the simulated scenario is shown in Figure 3(a); in Figures 3(b) and 3(c), the global plan is drawn as a red line and the local plan as a blue line. The system performs both global and local planning well.

Two-point repeated accuracy test: in the real scene, the robot's linear speed is 1 m/s and its angular speed is 1.5 rad/s. The robot's initial pose is set to (0, 0, 0), and the coordinates of several end points are listed in Table 1. A total of 50 trials were run. The average errors in x and y are both below 2 cm, and the heading error is below 0.1 rad, indicating high repeatability.

Multi-point patrol test: to evaluate navigation accuracy under realistic operating conditions, a multi-point repeated accuracy test was conducted, i.e., continuous navigation through multiple points in a loop. Four routes were selected in turn, and each route was navigated through six waypoints. In this test, the distance error is below 3 cm and the heading (θ) error is below 0.1 rad, which meets the navigation requirements of a logistics robot in a warehouse environment.

Figure 3. Path planning diagram: (a) scene map; (b) navigation map; (c) enlarged navigation map.
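The error statistics used in the two accuracy tests described above can be computed as follows. This is a minimal sketch: the goal pose and reached poses are made-up sample values for illustration (not the paper's data), `repeat_accuracy` and `meets_spec` are hypothetical helper names, and the default thresholds mirror the 2 cm and 0.1 rad figures quoted in the text. Heading errors are wrapped to [-π, π) so that a final heading of -3.10 rad counts as close to a goal heading of π.

```python
import math
from statistics import mean

def wrap_angle(a):
    """Wrap an angle in radians to the interval [-pi, pi)."""
    return (a + math.pi) % (2 * math.pi) - math.pi

def repeat_accuracy(goal, reached):
    """Mean absolute x, y, Euclidean-distance, and heading errors.

    goal: (x, y, theta) target pose; reached: list of (x, y, theta) final poses.
    """
    gx, gy, gth = goal
    ex = mean(abs(x - gx) for x, _, _ in reached)
    ey = mean(abs(y - gy) for _, y, _ in reached)
    ed = mean(math.hypot(x - gx, y - gy) for x, y, _ in reached)
    eth = mean(abs(wrap_angle(th - gth)) for _, _, th in reached)
    return ex, ey, ed, eth

def meets_spec(ex, ey, eth, pos_tol=0.02, ang_tol=0.1):
    """Two-point test criterion: x and y errors below 2 cm, heading below 0.1 rad."""
    return ex < pos_tol and ey < pos_tol and eth < ang_tol

# Hypothetical sample data (metres, radians), for illustration only.
GOAL = (2.0, 1.0, math.pi)
REACHED = [(2.01, 0.99, 3.12), (1.99, 1.01, -3.10), (2.015, 1.005, 3.13)]

ex, ey, ed, eth = repeat_accuracy(GOAL, REACHED)
print(f"x: {ex:.4f} m, y: {ey:.4f} m, dist: {ed:.4f} m, heading: {eth:.4f} rad")
print("within two-point spec:", meets_spec(ex, ey, eth))
```

The distance error `ed` corresponds to the criterion quoted for the multi-point test (below 3 cm), while `meets_spec` checks the per-axis criterion of the two-point test.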

5. Conclusion

Intelligent inspection robots can improve the efficiency of production and everyday operations. In this paper, a high-precision navigation system that fuses multi-sensor information is designed for complex real-world scenes. To evaluate the navigation performance more thoroughly, both simulation and real-scene experiments were carried out. The results show that the system can complete navigation and localization in complex scenes, which offers a new approach to robot navigation and has significant practical value. However, the experiments also show that feature-matching performance degrades significantly as the robot's speed increases. Achieving accurate navigation and localization at high speed will therefore be the main focus and challenge of future research.

References

[1]. K. Ismail, R. Liu, Z. Qin, A. Athukorala, B. P. L. Lau, M. Shalihan, C. Yuen and U. Tan, "Efficient WiFi LiDAR SLAM for Autonomous Robots in Large Environments," 2022 IEEE 18th International Conference on Automation Science and Engineering (CASE), 2022, doi: 10.48550/arXiv.2206.08733.

[2]. J. Cheng, L. Zhu, X. Cai and H. Wu, "Mapping and Path Planning Simulation of Mobile Robot Slam Based on Ros," 2022 International Seminar on Computer Science and Engineering Technology (SCSET), 2022, pp. 10-14, doi: 10.1109/SCSET55041.2022.00012.

[3]. A. Merzlyakov and S. Macenski, "A Comparison of Modern General-Purpose Visual SLAM Approaches," 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2021, pp. 9190-9197, doi: 10.1109/IROS51168.2021.9636615.

[4]. S. Zhao and S. -H. Hwang, "Path planning of ROS autonomous robot based on 2D lidar-based SLAM," 2021 International Conference on Information and Communication Technology Convergence (ICTC), 2021, pp. 1870-1872, doi: 10.1109/ICTC52510.2021.9620783.

[5]. M. Bertoni, S. Michieletto and G. Michieletto, "Towards a Low-Cost Robot Navigation Approach based on a RGB-D Sensor Network," 2022 IEEE 17th International Conference on Advanced Motion Control (AMC), 2022, pp. 458-463, doi: 10.1109/AMC51637.2022.9729292.

[6]. M. Labbé and F. Michaud, "Appearance-based loop closure detection for online large-scale and long-term operation," IEEE Transactions on Robotics, vol. 29, no. 3, pp. 734-745, Jun. 2013.

[7]. M. Labbé and F. Michaud, "RTAB-Map as an open-source lidar and visual simultaneous localization and mapping library for large-scale and long-term online operation," Journal of Field Robotics, vol. 36, no. 2, pp. 416-446, 2019. Available: https://github.com/introlab/rtabmap

[8]. M. Kulkarni, P. Junare, M. Deshmukh and P. P. Rege, "Visual SLAM Combined with Object Detection for Autonomous Indoor Navigation Using Kinect V2 and ROS," 2021 IEEE 6th International Conference on Computing, Communication and Automation (ICCCA), 2021, pp. 478-482, doi: 10.1109/ICCCA52192.2021.9666426.

[9]. E. Mouragnon, M. Lhuillier, M. Dhome, F. Dekeyser and P. Sayd, "Real Time Localization and 3D Reconstruction," 2006 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR'06), 2006, pp. 363-370, doi: 10.1109/CVPR.2006.236.

[10]. S. Naudet-Collette, K. Melbouci, V. Gay-Bellile, O. Ait-Aider and M. Dhome, "Constrained RGBD-SLAM," Robotica, vol. 39, no. 2, pp. 277-290, 2021.

Cite this article

Wen, Z. (2023). SLAM based vision self-navigation robot with RTAB-MAP algorithm. Applied and Computational Engineering, 6, 1-5.

Data availability

The datasets used and/or analyzed during the current study will be available from the authors upon reasonable request.

Disclaimer/Publisher's Note

The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of EWA Publishing and/or the editor(s). EWA Publishing and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

About volume

Volume title: Proceedings of the 3rd International Conference on Signal Processing and Machine Learning

© 2024 by the author(s). Licensee EWA Publishing, Oxford, UK. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license. Authors who publish this series agree to the following terms:

1. Authors retain copyright and grant the series right of first publication with the work simultaneously licensed under a Creative Commons Attribution License that allows others to share the work with an acknowledgment of the work's authorship and initial publication in this series.

2. Authors are able to enter into separate, additional contractual arrangements for the non-exclusive distribution of the series's published version of the work (e.g., post it to an institutional repository or publish it in a book), with an acknowledgment of its initial publication in this series.

3. Authors are permitted and encouraged to post their work online (e.g., in institutional repositories or on their website) prior to and during the submission process, as it can lead to productive exchanges, as well as earlier and greater citation of published work (see Open access policy for details).
