1. Introduction

Deep learning is a subset of machine learning that focuses on using artificial neural networks to model and understand complex patterns in data.

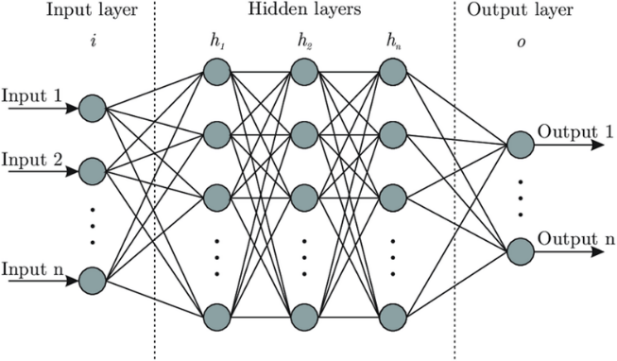

Neural networks are computational models inspired by the human brain. They consist of layers of nodes, each layer transforming the input data through mathematical functions. The first layer receives the raw input data, while hidden layers extract higher-level features. The final layer produces the output, such as classifications or predictions.

Figure 1: A typical neural network structure.

Neural networks have numerous applications in science and engineering due to their ability to model complex relationships and make predictions based on data. Image recognition, natural language processing, and predictive modeling are among the most popular use cases.

However, this traditional neural network structure relies solely on the data it is given and neglects the vast amount of available prior knowledge, such as the laws of physics or empirically validated rules. Traditional neural networks are therefore highly dependent on the quantity and quality of their training data. When analyzing complex physical, biological, or engineering systems, the cost of data acquisition is often prohibitive, and we are inevitably faced with the challenge of drawing conclusions and making decisions under partial information. In this small data regime, most state-of-the-art machine learning techniques (e.g., deep/convolutional/recurrent neural networks) lack robustness and fail to provide any guarantees of convergence [1].

To address this problem, M. Raissi, P. Perdikaris, and G.E. Karniadakis proposed the concept of Physics-Informed Neural Networks (PINNs) [1]. PINNs use artificial neural networks to solve problems governed by partial differential equations (PDEs) or other physical laws.

PINNs extend traditional neural networks by computing quantities such as the partial derivatives of the output variables with respect to the input variables. These derivatives are used to build additional loss terms, called physics losses, that measure how well the network output satisfies the governing PDEs or other physical laws. The physics losses and data losses are combined to form the total loss, so the trained network tries to satisfy both the data and the physical constraints.
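One common way to write this combination is sketched below. The notation is illustrative and not taken from the original formulation: \( u_\theta \) is the network, \( N_d \) is the number of labeled data points \( (x_i, u_i) \), \( N_r \) is the number of collocation points at which the PDE residual \( \mathcal{N}[u_\theta] \) is evaluated, and \( \lambda \) is an assumed weighting factor.

\( \mathcal{L}_{total} = \mathcal{L}_{data} + \lambda\,\mathcal{L}_{physics} \)

\( \mathcal{L}_{data} = \frac{1}{N_d}\sum_{i=1}^{N_d} \left| u_\theta(x_i) - u_i \right|^{2}, \quad \mathcal{L}_{physics} = \frac{1}{N_r}\sum_{j=1}^{N_r} \left| \mathcal{N}[u_\theta](x_j) \right|^{2} \)

For a PDE written as \( \mathcal{N}[u] = 0 \), the physics loss vanishes exactly when the network satisfies the equation at every collocation point.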

By incorporating the laws of physics, PINNs have several advantages. The first is higher data efficiency. Because the governing equations can be evaluated at any point in the domain, the physics loss supplies additional training information beyond the original data set, so the trained model can be more accurate while using less training data. The second is interpretability: the constraints come from established laws of physics, which are less abstract and easier to explain than the behavior of a purely data-driven neural network.

PINNs can be used in many fields where established laws are expressed mathematically, such as physics, geophysics, and biomedicine.

2. Applications of PINN in Various Fields

2.1. Biomedical Engineering

MRI imaging plays an important role in the medical field, especially in the diagnosis and treatment of cardiovascular diseases. However, traditional MRI image processing methods have limitations, mainly low resolution and noise interference. The resolution of MRI images is usually low, which makes it difficult to clearly show the fine structure of blood vessels and complex blood flow patterns and restricts the accurate reconstruction of vascular structure and hemodynamics. MRI images are also susceptible to various types of noise, such as equipment noise, motion artifacts, and physiological noise. This noise masks the real vascular structure and blood flow information, thus affecting the accuracy of diagnosis. The emergence of physics-informed neural networks provides a new way to address these problems.

PINNs can incorporate the Navier-Stokes equations (partial differential equations describing fluid motion) to construct constrained deep neural networks (DNNs). Through training, such networks can efficiently remove noise from MRI data and perform physically consistent reconstructions of vascular structures and hemodynamics, ensuring mass and momentum conservation at arbitrarily high spatial and temporal resolutions [2]. Trained PINN models can also automatically segment arterial wall geometry and infer important biomarkers such as blood pressure and wall shear stress. This information is critical for assessing vascular function and predicting heart disease risk, providing physicians with a more accurate basis for diagnosis and treatment. In the field of tissue engineering, PINNs can be used to predict cell growth and tissue development, providing guidance for developing more effective methods, such as constructing artificial blood vessels and tissue organs. PINN technology brings new opportunities for MRI image processing and may substantially improve the diagnosis and treatment of cardiovascular diseases in the future.

2.2. Geophysics

PINNs are used to solve a wide range of complex geophysical inversion problems. PINNs provide high-resolution information on subsurface structures by tightly integrating with full-waveform inversion, an advanced seismic data processing method. At the same time, PINNs are integrated with simulations of subsurface flow processes and rock physics models that describe how the physical properties of rocks affect seismic wave propagation. Through this integrated application, PINNs can extract key subsurface properties such as rock permeability and porosity from seismic data, which are critical for oil exploration, groundwater management, and other subsurface resource development[3].

The application of PINNs in seismology has also been extended, especially by combining deep neural networks (DNNs) with numerical partial differential equation (PDE) solvers. This combination allows PINNs to solve a wide range of seismic inversion problems. For example, in velocity estimation, PINNs can provide an accurate velocity structure of the Earth's interior, which is critical for seismic wave propagation simulations and seismic risk analysis. In fault rupture imaging, PINNs help to reveal fault networks and potential rupture zones in the subsurface, which is important for understanding earthquake mechanisms and assessing seismic hazard. Earthquake location is another application area that benefits from PINNs. By accurately determining where a seismic event originated, PINNs help to monitor seismic activity and support earthquake early warning systems. PINNs can also extract detailed information about how an earthquake source evolves over time from seismic records, which is valuable for studying the dynamics of the earthquake process and the characteristics of the source.

2.3. Revealing Edge Plasma Dynamics through Deep Learning of Partial Observations

Predicting turbulent transport at the edges of magnetic confinement fusion devices has been a goal for several decades, and there are currently significant uncertainties in the particle and energy confinement of fusion power plants. From only partial observations of a synthetic plasma, PINNs can accurately learn turbulent field dynamics consistent with two-fluid theory, supporting plasma diagnostics and model validation in challenging thermonuclear environments [2].

2.4. Materials Science

Embedding the laws of physics into the training process of neural networks offers a way around the limitations of traditional research methods. In fluid dynamics, for example, acquiring experimental data is often costly and in some cases infeasible due to technological constraints. In such cases, PINNs enable accurate predictions of fluid behavior based on limited experimental data and known physical laws. Embedding physical knowledge enhances the generalization ability of the model, allowing it to maintain high predictive performance on new and unseen data, and it is particularly suited to data-scarce environments, where it can improve predictive accuracy and reliability with limited samples [4]. In stress analysis, PINNs provide engineers and researchers with a tool for simulating the stress distribution of a material subjected to external forces. This approach not only predicts the response of materials under complex loading conditions but also supports design optimization and reduces reliance on physical experimentation, resulting in cost savings and shorter R&D cycles. In addition, PINNs help predict how materials fracture under cyclic loading or extreme conditions, which is critical to understanding their fatigue life and reliability [5]. By modeling crack initiation, propagation, and eventual fracture, PINNs can provide a scientific basis for material design, leading to improved material performance and durability.

2.5. Energy

PINNs combine the learning capabilities of neural networks with precise descriptions of the laws of physics, and this combination is being applied in the field of renewable energy. In the development and utilization of renewable energy, PINNs can predict the geographical distribution of energy sources and provide a scientific basis for the siting and layout of power generation systems such as wind and solar, thereby optimizing the performance of these facilities. PINNs can also simulate and predict energy demand in different regions and periods, which is important for planning energy production, storage, and distribution and for using green energy efficiently.

In the field of petroleum engineering, PINNs can help engineers simulate complex fluid flows in reservoirs, which is crucial for understanding reservoir behavior, predicting the transport paths of hydrocarbons, and optimizing extraction strategies [6]. Such simulations allow for more effective planning of well locations and selection of extraction methods, thereby improving the efficiency and economics of oil extraction.

In environmental science, PINNs can be used to simulate and predict climate change trends, including changes in key climate factors such as temperature, precipitation, and wind speed, providing a scientific basis for formulating environmental protection policies and responding to climate change. With these simulation results, policymakers can better understand the impact of human activities on the environment and take measures to reduce pollution and protect natural resources.

3. Current Challenges in PINN Application

3.1. Difficulty in learning high-frequency components

PINNs have difficulty learning high-frequency functions because of the frequency bias (often called spectral bias) that arises during neural network training, in which low-frequency components are fitted first. High-frequency features also produce very steep gradients, making it difficult for PINNs to accurately penalize the PDE constraint term. To address this problem, techniques such as domain decomposition, Fourier features, and multi-scale DNNs can be used to help the network learn the high-frequency components.
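As a rough illustration of the Fourier-feature idea, the sketch below maps a scalar coordinate to sine and cosine features before it would be passed to a network. The function name `fourier_features` and the chosen frequencies are assumptions made for this example only. Practical implementations often sample the frequencies randomly and apply the mapping to every input coordinate.

```python
import math


def fourier_features(x, frequencies):
    """Map a scalar x to [sin(2*pi*f*x), cos(2*pi*f*x)] for each frequency f."""
    features = []
    for f in frequencies:
        angle = 2.0 * math.pi * f * x
        features.append(math.sin(angle))
        features.append(math.cos(angle))
    return features


# Hypothetical frequencies; in practice they are tuned or sampled at random.
encoded = fourier_features(0.25, [1.0, 2.0, 4.0])
print(len(encoded))  # 6 features replace the single raw coordinate
```

Because the high-frequency oscillation is already present in the inputs, the network itself only needs to learn a smoother function of these features.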

3.2. Computational overhead for multi-physics field problems

Learning multiple physical fields at the same time may consume substantial computational resources [2]. To address this problem, each physical field can be learned individually and then coupled together, or the physical fields can be learned in smaller domains using fine-scale simulation data.

3.3. Non-convexity of the loss function

PINNs often involve training large neural networks whose loss function consists of multiple terms, which makes training a highly non-convex optimization problem. During training, the terms in the loss function may compete, resulting in an unstable training process with no guarantee of convergence to the global minimum. To address this problem, more robust neural network architectures and training algorithms need to be developed, for example by adaptively modifying the activation function or by adaptively sampling data points and residual evaluation points during training. In addition, loss functions for PINNs are mainly defined in a point-wise manner, which may be effective in some high-dimensional problems but may fail in some special low-dimensional cases, such as the diffusion equation with non-smooth conductivity/permeability.

3.4. Lack of rigorous mathematical analysis

There is a lack of rigorous mathematical analysis to understand the capabilities and limitations of physically constrained neural networks such as PINNs, e.g., their approximation capabilities. New theories need to be developed to better understand the training dynamics of PINNs and the stability and convergence of the training process, as well as to analyze the well-posedness of PDE problems involving random parameters or uncertain initial/boundary conditions.

In the physical sciences and chemistry, full-field data are often required for data-driven modeling, but such data are difficult to obtain experimentally and expensive to generate computationally. New methods of data generation and benchmarking are needed to assess the accuracy and speed of physics-informed learning and to establish meaningful metrics for comparing different methods [7].

3.5. Challenges of new mathematics

New mathematical theories need to be developed to analyze physics-informed learning models, such as analyzing the convergence of PINNs for solving PDE problems and analyzing the well-posedness of PDE problems involving random parameters or uncertain initial/boundary conditions.

4. Possible Solutions

In response to the above challenges, researchers have proposed various improvement strategies for PINNs to enhance the model's performance and stability. Causality constraints adjust the model's loss function to respect causality in time-dependent problems, thereby improving the model's stability and predictive accuracy [8]. Hard constraint handling of boundary conditions enables PINNs to satisfy boundary conditions more accurately, thereby enhancing the model's adaptability and convergence [9]. Meta-learning methods can also be applied, allowing the model to transfer better across different physical systems [10]. Finally, deep spectral feature aggregation is an improved method for aggregating spectral features that addresses the issues of PINNs in multiphase fluid modeling and enhances predictive accuracy in fluid dynamics problems [11].
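The causality idea can be sketched as follows, assuming the form used in [8], where the time domain is split into slices and the residual loss of each slice is weighted by the exponential of the negative accumulated loss on all earlier slices. The function names and the value of `epsilon` are illustrative assumptions, not taken from any specific code base.

```python
import math


def causal_weights(residual_losses, epsilon=1.0):
    """Weight for slice i is exp(-epsilon * sum of losses on slices before i)."""
    weights = []
    cumulative = 0.0
    for loss in residual_losses:
        weights.append(math.exp(-epsilon * cumulative))
        cumulative += loss
    return weights


def causal_residual_loss(residual_losses, epsilon=1.0):
    """Average of the per-slice losses, each scaled by its causal weight.

    In an actual training loop the weights would be treated as constants
    (no gradient flows through them).
    """
    weights = causal_weights(residual_losses, epsilon)
    weighted = [w * l for w, l in zip(weights, residual_losses)]
    return sum(weighted) / len(weighted)


# Hypothetical per-slice PDE residual losses, ordered from early to late time.
slice_losses = [1.0, 2.0, 0.5]
print(causal_weights(slice_losses))
```

The first slice always receives weight 1, and later slices are only weighted strongly once the residuals at earlier times have become small.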

5. Numerical Experiment on PINN

To demonstrate the application of Physics-Informed Neural Networks (PINNs) to a practical problem, we use them to solve a typical partial differential equation, the one-dimensional heat conduction equation.

The one-dimensional heat conduction equation can be expressed as

\( \frac{\partial u}{\partial t} = \alpha \frac{\partial^{2} u}{\partial x^{2}} \)

Here, u(x, t) represents the temperature distribution, x is the spatial coordinate, t is time, and α is the thermal diffusivity.

To simplify, we set the initial conditions and boundary conditions as follows:

Initial conditions:

\( u(x, 0) = \sin(\pi x) \)

Boundary conditions:

\( u(0, t)=u(1, t)=0 \)
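For these particular conditions the heat equation has a closed-form solution, which is useful as a reference when judging the PINN predictions. It is stated here as a standard result of separation of variables and is not part of the original experiment:

\( u(x, t) = e^{-\alpha \pi^{2} t} \sin(\pi x) \)

It can be checked term by term. Differentiating gives

\( \frac{\partial u}{\partial t} = -\alpha \pi^{2} u, \quad \frac{\partial^{2} u}{\partial x^{2}} = -\pi^{2} u \)

so that \( \frac{\partial u}{\partial t} = \alpha \frac{\partial^{2} u}{\partial x^{2}} \). Setting t = 0 recovers \( \sin(\pi x) \), and \( \sin(0) = \sin(\pi) = 0 \) gives the zero boundary values.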

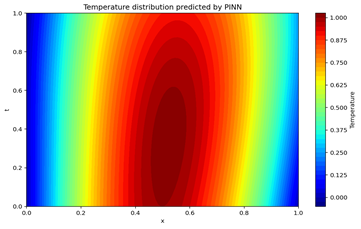

The PINN class uses three hidden layers, each with 20 neurons and the tanh activation function. The pinn_loss calculates the error term of the partial differential equation; initial_condition_loss and boundary_loss compute the losses for the initial and boundary conditions, respectively, to ensure that the model predictions satisfy the physical boundary conditions of the problem. The Adam optimizer is used to minimize the loss function, which combines the losses from the equation, initial conditions, and boundary conditions. After training is complete, predicted values of the temperature distribution are generated, and contour plots of the temperature distribution are created using matplotlib.
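The sketch below shows how the three loss terms described above could be evaluated. It is not the code used in this experiment, and several choices are assumptions made so that it runs with the Python standard library only: the value of `ALPHA`, the helper names `init_mlp` and `forward`, the random initialization, and the use of central finite differences in place of the automatic differentiation that a deep learning framework would provide. Training with the Adam optimizer is omitted.

```python
import math
import random

ALPHA = 0.1  # thermal diffusivity; hypothetical value, not given in the text


def init_mlp(layer_sizes, seed=0):
    """Randomly initialize (weights, biases) for each fully connected layer."""
    rng = random.Random(seed)
    params = []
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        bound = 1.0 / math.sqrt(n_in)
        weights = [[rng.uniform(-bound, bound) for _ in range(n_in)]
                   for _ in range(n_out)]
        params.append((weights, [0.0] * n_out))
    return params


def forward(params, x, t):
    """Forward pass: tanh on hidden layers, linear output, returns scalar u."""
    h = [x, t]
    last = len(params) - 1
    for i, (weights, biases) in enumerate(params):
        z = [sum(w * v for w, v in zip(row, h)) + b
             for row, b in zip(weights, biases)]
        h = z if i == last else [math.tanh(v) for v in z]
    return h[0]


def pinn_loss(u, points, alpha=ALPHA, h=1e-3):
    """Mean squared PDE residual u_t - alpha * u_xx (finite differences)."""
    total = 0.0
    for x, t in points:
        u_t = (u(x, t + h) - u(x, t - h)) / (2.0 * h)
        u_xx = (u(x + h, t) - 2.0 * u(x, t) + u(x - h, t)) / (h * h)
        total += (u_t - alpha * u_xx) ** 2
    return total / len(points)


def initial_condition_loss(u, xs):
    """Mean squared mismatch with u(x, 0) = sin(pi * x)."""
    return sum((u(x, 0.0) - math.sin(math.pi * x)) ** 2 for x in xs) / len(xs)


def boundary_loss(u, ts):
    """Mean squared violation of u(0, t) = u(1, t) = 0."""
    return sum(u(0.0, t) ** 2 + u(1.0, t) ** 2 for t in ts) / len(ts)


def exact_solution(x, t, alpha=ALPHA):
    """Closed-form reference solution for these conditions."""
    return math.exp(-alpha * math.pi ** 2 * t) * math.sin(math.pi * x)


# Three hidden layers of 20 tanh neurons, inputs (x, t), one output u.
params = init_mlp([2, 20, 20, 20, 1])
net = lambda x, t: forward(params, x, t)

rng = random.Random(1)
collocation = [(rng.random(), rng.random()) for _ in range(200)]
grid = [i / 20 for i in range(21)]

total_loss = (pinn_loss(net, collocation)
              + initial_condition_loss(net, grid)
              + boundary_loss(net, grid))
print("total loss of the untrained network:", total_loss)
print("PDE loss of the exact solution:", pinn_loss(exact_solution, collocation))
```

Passing the closed-form solution through the same loss functions is a quick sanity check: all three terms should be close to zero, whereas the untrained network gives a clearly positive total loss.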

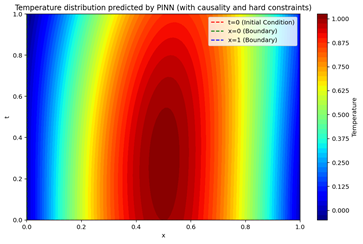

In this example, we optimize the PINN for the one-dimensional heat conduction equation through causality constraints and hard boundary condition handling. The compute_loss function introduces a causality_weight, an exponentially decaying function of the time point t that down-weights the loss at later times, so the model prioritizes learning from earlier time points. In the boundary_loss function, the zero-temperature requirement at the boundaries is enforced at a selected time point (e.g., t = 0).

With causality constraints and hard boundary conditions, the model's loss function includes both the physical constraints of the partial differential equation and the constraints of the boundary and initial conditions. The final plot shows the temperature distribution u(x, t) as a function of time t and space x, which is the numerical solution of the heat conduction equation.
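The two ingredients can be illustrated with a minimal sketch. The exponential time weight follows the description above, with `decay_rate` as an assumed parameter. For the hard boundary condition, the sketch uses a common output transformation, multiplying the raw network output by x(1 - x), which makes the boundary values vanish by construction. This is one standard way to impose hard constraints and may differ from the boundary_loss approach used in this experiment. The stand-in function `raw_net` is hypothetical.

```python
import math


def causality_weight(t, decay_rate=1.0):
    """Exponentially decaying weight that favors earlier time points."""
    return math.exp(-decay_rate * t)


def hard_constrained(net):
    """Wrap a raw network so that u(0, t) = u(1, t) = 0 holds exactly."""
    def u(x, t):
        return x * (1.0 - x) * net(x, t)
    return u


# Stand-in for a neural network output; any function of (x, t) works here.
raw_net = lambda x, t: math.tanh(3.0 * x - t) + 0.5

u = hard_constrained(raw_net)
print(u(0.0, 0.3), u(1.0, 0.7))  # both boundary values are exactly zero
print(causality_weight(0.0), causality_weight(1.0))  # weight shrinks with t
```

With such a transformation the boundary term can be dropped from the loss altogether, since no choice of network parameters can violate the boundary condition.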

Figure 2: Temperature distribution predicted by PINN without causality and hard constraints.

Figure 3: Temperature distribution predicted by PINN with causality and hard constraints.

By incorporating causality constraints and hard boundary condition handling, the model can solve the heat conduction equation more stably. The model converges faster at earlier time points and more accurately satisfies physical conditions at boundary points. This improvement allows the model to better handle boundary conditions and time series data, providing beneficial enhancements for the application of physics-informed neural networks in more complex problems.

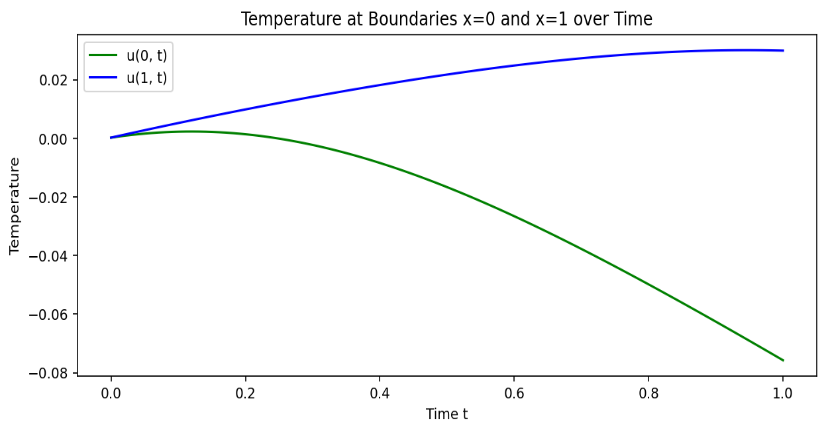

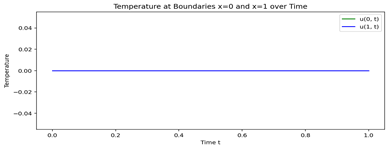

Figure 4: Boundary temperature curve before optimization.

Figure 5: Boundary temperature curve after optimization.

The model before optimization did not adequately adhere to the boundary conditions, exhibiting certain flaws that prevented it from accurately representing the actual situation. In contrast, after optimization, the use of hard boundary condition handling ensured that the model adhered to the boundary conditions, resulting in more accurate predictions.

6. Conclusion

Physics-Informed Neural Networks (PINNs) represent a groundbreaking advancement in bridging the gap between traditional machine learning models and the governing laws of physics. By embedding physical constraints into neural network architectures, PINNs offer unparalleled capabilities in solving partial differential equations and modeling complex physical systems with limited data. This paper has highlighted their diverse applications across various fields, including biomedical engineering, geophysics, material science, and renewable energy. From improving MRI image reconstruction and seismic data interpretation to enhancing stress analysis and energy optimization, PINNs demonstrate their potential to revolutionize scientific and engineering practices.

PINNs thus offer a promising approach to integrating physics and machine learning, particularly in data-scarce environments. Future efforts should focus on overcoming the current challenges, developing more efficient architectures, and expanding the applicability of PINNs to increasingly complex multi-physics problems. With continued refinement, PINNs have the potential to open new frontiers in scientific discovery and industrial applications.

References

[1]. M. Raissi, P. Perdikaris, G.E. Karniadakis, “Physics-informed neural networks: A deep learning framework for solving forward and inverse problems involving nonlinear partial differential equations,” Journal of Computational Physics, Volume 378, 2019, Pages 686-707, ISSN 0021-9991. https://doi.org/10.1016/j.jcp.2018.10.045

[2]. Karniadakis, G.E., Kevrekidis, I.G., Lu, L. et al. Physics-informed machine learning. Nat Rev Phys 3, 422–440 (2021). https://doi.org/10.1038/s42254-021-00314-5

[3]. Xin Zhang, Yun-Hu Lu, Yan Jin, Mian Chen, Bo Zhou. An adaptive physics-informed deep learning method for pore pressure prediction using seismic data. Petroleum Science, Volume 21, Issue 2, 2024, Pages 885-902, ISSN 1995-8226, https://doi.org/10.1016/j.petsci.2023.11.006

[4]. Jaemin Seo. (2024). Past rewinding of fluid dynamics from noisy observation via physics-informed neural computing. Physical Review E, Volume 110, Issue 2, 2024, Pages 025302, https://doi.org/10.1103/PhysRevE.110.025302

[5]. Myeong-Seok Go, Hong-Kyun Noh, Jae Hyuk Lim, Real-time full-field inference of displacement and stress from sparse local measurements using physics-informed neural networks, Mechanical Systems and Signal Processing, Volume 224, 2025, 112009, ISSN 0888-3270, https://doi.org/10.1016/j.ymssp.2024.112009

[6]. Jiang-Xia Han, Liang Xue, Yun-Sheng Wei, Ya-Dong Qi, Jun-Lei Wang, Yue-Tian Liu, Yu-Qi Zhang, Physics-informed neural network-based petroleum reservoir simulation with sparse data using domain decomposition, Petroleum Science, Volume 20, Issue 6, 2023, Pages 3450-3460, ISSN 1995-8226, https://doi.org/10.1016/j.petsci.2023.10.019

[7]. Sifan Wang, Xinling Yu, Paris Perdikaris, When and why PINNs fail to train: A neural tangent kernel perspective, Journal of Computational Physics, Volume 449, 2022, 110768, ISSN 0021-9991, https://doi.org/10.1016/j.jcp.2021.110768

[8]. Wang, S., Sankaran, S., & Perdikaris, P., “Respecting causality for training physics-informed neural networks,” Computer Methods in Applied Mechanics and Engineering, vol. 421, p. 116813, 2024. [Online]. Available: https://www.researchgate.net/publication/370074660_Respecting_causality_for_training_physics-informed_neural_networks

[9]. Ihunde, T. A., & Olorode, O., “The application of physics informed neural networks to compositional modeling,” Master's Thesis, Louisiana State University, Baton Rouge, LA, USA, 2022. [Online]. Available: https://www.sciencedirect.com/science/article/abs/pii/S0920410522000675

[10]. Rao, D., Zhang, J., & Karniadakis, G.E., “A meta-learning approach for physics-informed neural networks (PINNs): Application to parameterized PDEs,” Journal of Computational Physics, vol. 448, p. 110692, 2021. [Online]. Available: https://www.researchgate.net/publication/350749128_A_Metalearning_Approach_for_Physics-Informed_Neural_Networks_PINNs_Application_to_Parameterized_PDEs

[11]. Rafiq, M., Rafiq, G., & Choi, G. S., “DSFA-PINN: Deep spectral feature aggregation physics informed neural network,” IEEE Access, vol. 10, pp. 22247-22259, 2022. [Online]. Available: https://doi.org/10.1109/ACCESS.2022.3146893

Cite this article

Li, Z. (2025). A Review of Physics-Informed Neural Networks. Applied and Computational Engineering, 133, 164-172.

Data availability

The datasets used and/or analyzed during the current study will be available from the authors upon reasonable request.


About volume

Volume title: Proceedings of the 5th International Conference on Signal Processing and Machine Learning

© 2024 by the author(s). Licensee EWA Publishing, Oxford, UK. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.
