1. Introduction

Technology has changed the way people learn languages, and education more broadly. The advent of computer-assisted language learning (CALL) systems has given students access to more resources and tools during learning. Among these, multimodal technology combines audio, video and text to create rich, interactive experiences. Rather than relying on a single sensory channel, multimodal systems engage several, which can make information easier to take in and can accommodate different learning styles.

Multimodal technology is of particular interest in CALL because it bears on two key aspects of language learning: cognitive load and attention allocation. Cognitive load is the mental effort required to process information; when it exceeds what the learner can handle, learning is impaired. Multimodal environments can reduce cognitive load by distributing information across modalities so that learners absorb it in smaller chunks. Audio, for instance, can support images, and text can scaffold complex language forms. Attention allocation, by contrast, refers to learners’ capacity to attend to the relevant parts of a task. In multimodal learning, attention is distributed across modalities, which may promote deeper processing of the material.

However, multimodal technology in CALL is not without problems. Poorly designed multimodal systems can overload learners with information and cause cognitive overload. Multimodal design therefore involves a careful trade-off between offering diverse sensory stimuli and ensuring that those stimuli do not conflict with one another [1]. This study examines the effects of multimodal technologies on cognitive load and attention allocation in CALL environments. Drawing on learners’ perspectives and on quantitative and qualitative analyses, it seeks to provide practical insights into how multimodal CALL systems can be optimised.

2. Literature Review

2.1. Multimodal Technology in CALL

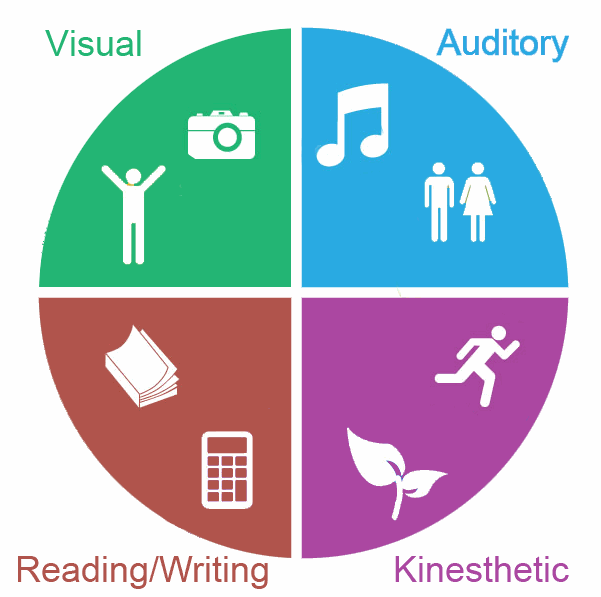

Multimodal language learning combines video, audio and text to offer a fuller, more immersive experience. In CALL, these elements can be mixed to suit a variety of learning styles and to help students apply content across different contexts. Audio and video, for instance, can provide contextual cues that aid comprehension, while text anchors linguistic forms. As shown in Figure 1, multimodal technology can deliver varied input simultaneously while supporting visual, auditory, reading/writing and kinesthetic learners. The figure illustrates how different sensory modalities can be harnessed to match learners’ preferences and make learning more inclusive. In this way, language-learning environments can become interactive spaces in which learners receive information through several channels at once. Presenting visual and auditory information together can enhance retention and understanding by allowing students to build mental representations of language from combined sight and sound. However, as the learning-styles overview in Figure 1 implies, successful multimodal learning must strike a balance between richness of input and cognitive overload [2]: inundating learners with too much information can derail the learning process. Effective multimodal learning therefore requires integrating the different inputs so that the system makes full use of learners’ capabilities without exhausting their cognitive capacity.

Figure 1: Learning Styles and Sensory Modalities in Multimodal Language Learning (Source: prodigygame.com)

2.2. Cognitive Load Theory and Language Learning

According to cognitive load theory (CLT), working memory has a limited capacity for processing new information. When cognitive demands exceed this capacity, learning suffers because the available cognitive resources are exhausted. In language learning, cognitive load can arise from complex grammar, unfamiliar vocabulary, or the need to process input from multiple modalities. Studies of cognitive load in CALL have mainly examined how various design variables affect the cognitive effort students must expend. Some studies, for example, have shown that overly complex or poorly designed multimodal systems impose additional cognitive work on learners, making language harder to process. Conversely, well-designed multimodal systems that provide scaffolding, such as contextually appropriate visual cues or subtitles that match the audio, reduce cognitive load by letting students learn in smaller chunks [3]. Understanding cognitive load in the context of CALL is therefore key to designing effective learning environments, since excessive load can leave students frustrated or disengaged and undermine language learning. It is thus important to examine how different multimodal tools affect cognitive load in order to identify those with the greatest potential to support learning.
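CLT is often summarised with a simplified additive model: the intrinsic load of the material, the extraneous load imposed by how it is presented, and the germane load devoted to building schemas must together fit within working-memory capacity. The notation below is an illustrative sketch; the symbols $L$ and $C_{\text{WM}}$ are introduced here, the study itself does not decompose load into these components, and some later formulations of CLT fold the germane component into intrinsic load.

```latex
L_{\text{total}} = L_{\text{intrinsic}} + L_{\text{extraneous}} + L_{\text{germane}},
\qquad \text{overload} \iff L_{\text{total}} > C_{\text{WM}}
```

Under this framing, the scaffolding described above (aligned visual cues, matching subtitles) targets the extraneous term, leaving the intrinsic difficulty of the language content unchanged.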

2.3. Attention Sharing and Learning Efficiency

Prioritising attention appropriately is a key aspect of learning efficiency, especially in multimodal environments. In language-learning exercises, students must decide what to focus on: speech, images or written text. Studies have shown that students often struggle to concentrate when faced with multiple stimuli, which results in fragmented or unfocused attention [4]. Attention sharing involves dividing attention between modalities to maximise learning. Ideally, students would attend to what they need in each modality without being overwhelmed or distracted by irrelevant stimuli. Multimodal learning environments can help distribute attention by offering learners multiple ways to engage with the material. If the environment is not tailored to learners’ cognitive capacities, however, attention becomes fragmented and the learning experience less effective. Research on attention in multimodal learning also suggests that certain combinations of modes can promote optimal attention. For instance, audio-visual coupling has been shown to increase learners’ focus on key aspects of language input, such as pronunciation or sentence structure [5]. Whether attention sharing works, however, depends on how the modalities interact and how they are applied in the learning task.

3. Experimental Methodology

3.1. Participants

The study sample consisted of 60 undergraduate students (aged 18 to 25) taking a second-language class. They were chosen because they represent a typical population of language learners with varying degrees of prior experience with language-learning technologies. All participants were native English speakers learning Spanish as a second or third language. We selected this age range because learners in it are generally familiar with technology and have at least some background in digital learning resources, making them well suited to testing the efficacy of multimodal CALL systems.

3.2. Instruments and Tools

We used several tools to test the effects of multimodal technology on cognitive load and attention allocation. Multimedia websites combined text, video and sound to offer an integrated language-learning experience, and virtual reality (VR) simulations recreated real-world language-use scenarios to give participants hands-on practice. Eye-tracking technology recorded where learners directed their visual attention, allowing us to quantify how attention was divided across modalities. Cognitive load was measured using both self-report questionnaires and physiological sensors (such as heart-rate monitors), which allowed mental effort to be tracked in real time.
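The text does not state which heart-rate-variability (HRV) index was derived from the heart-rate monitors. As a minimal sketch, assuming the sensors export RR intervals (milliseconds between successive heartbeats), RMSSD, a widely used time-domain HRV index, could be computed as follows. The function name `rmssd` and the input format are hypothetical.

```python
import math
from typing import Sequence


def rmssd(rr_intervals_ms: Sequence[float]) -> float:
    """Root mean square of successive differences between RR intervals (ms).

    RMSSD is one common time-domain HRV index.
    Assumption: the study does not name the HRV index it actually used.
    """
    if len(rr_intervals_ms) < 2:
        raise ValueError("need at least two RR intervals")
    # Differences between consecutive inter-beat intervals
    diffs = [b - a for a, b in zip(rr_intervals_ms, rr_intervals_ms[1:])]
    # Square each difference, average them, then take the square root
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


# Hypothetical RR intervals for one short recording window
window = [800.0, 810.0, 790.0, 800.0]
print(f"RMSSD: {rmssd(window):.2f} ms")  # prints "RMSSD: 14.14 ms"
```

Computing RMSSD per task window is one way physiological data could be aligned with self-reported load. Lower RMSSD is often interpreted as higher mental workload, but the index is also sensitive to breathing, posture and movement, so it is best read alongside the self-reports.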

3.3. Procedure

Throughout the experiment, participants completed daily language-training tasks on the multimodal platform. Each lesson consisted of a sequence of exercises in which students took in linguistic information in several ways, from video lectures to audio recordings and written texts. Participants performed a series of language comprehension and production tasks using the multimodal aids, and cognitive load and attention allocation were recorded continuously during each task. The eye-tracking system recorded in real time where participants were looking during each task, while the physiological sensors provided an objective measure of cognitive workload [6]. At the end of each session, participants also rated their perceived cognitive load on a standardised self-report scale. Combining behavioural, physiological and self-report data in this way made it possible to map the effect of multimodal technology on cognitive workload and attention.
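The procedure does not describe how raw gaze data were reduced to attention shares. A common approach, assumed here, is to label each fixation with the on-screen area of interest (AOI) it falls in and compute each AOI's share of total fixation time. The `Fixation` record and `dwell_percentages` function are hypothetical; the sketch covers on-screen AOIs only and needs Python 3.9+ for the built-in generic annotations.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Fixation:
    aoi: str            # hypothetical area-of-interest label, e.g. "video" or "text"
    duration_ms: float  # fixation duration in milliseconds


def dwell_percentages(fixations: list[Fixation]) -> dict[str, float]:
    """Share of total fixation time spent in each AOI, in percent."""
    totals = defaultdict(float)
    for fixation in fixations:
        totals[fixation.aoi] += fixation.duration_ms
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {}
    return {aoi: 100.0 * t / grand_total for aoi, t in totals.items()}


# Hypothetical fixations recorded during one task
task = [Fixation("video", 300.0), Fixation("text", 100.0), Fixation("video", 100.0)]
print(dwell_percentages(task))  # prints {'video': 80.0, 'text': 20.0}
```

Summing durations rather than counting fixations weights long, steady looks more heavily than brief glances, which is usually closer to what "time spent" on a modality means.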

3.4. Data Collection and Analysis

We collected data on two variables: cognitive load and attention. Cognitive load data came from self-reports and physiological measurements (e.g., heart rate variability). Eye-tracking analysis of attention distribution showed in detail where participants directed their attention throughout the learning tasks. The data were analysed using a mixed-methods approach. Quantitative data were analysed statistically (e.g., with regression analysis) to identify trends in cognitive load and attention allocation, and qualitative data from the self-reports were analysed thematically to explore learners’ experiences of multimodal learning environments in more depth. This mixed-methods strategy allowed us to capture the overall effect of multimodal technology on cognitive load and attention allocation [7].
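The text names regression analysis without specifying the model. A minimal sketch, assuming a single predictor (for instance, the number of modalities presented) and a per-session cognitive-load score as the outcome, both hypothetical choices, is an ordinary least squares fit. The helper name `simple_ols` is illustrative.

```python
from statistics import mean


def simple_ols(x: list[float], y: list[float]) -> tuple[float, float]:
    """Fit y = intercept + slope * x by ordinary least squares.

    Returns (intercept, slope).
    """
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mx, my = mean(x), mean(y)
    # Sum of squared deviations of the predictor
    sxx = sum((xi - mx) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("predictor has zero variance")
    # Sum of cross-products of deviations
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return my - slope * mx, slope
```

On Python 3.10+, `statistics.linear_regression(x, y)` returns the same slope and intercept. Because each participant contributed several sessions, a mixed-effects model with a per-participant random effect would usually be more appropriate than this single-level fit, which ignores that clustering.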

4. Results

4.1. Cognitive Load and Multimodal Technologies

The results suggest that multimodal technology in CALL has a substantial effect on cognitive load during language-learning activities. Participants presented with learning conditions combining audio, video and text reported significantly lower cognitive load than in unimodal conditions (audio or text alone). For example, activities involving subtitled video with audio commentary reduced cognitive load by 25% compared with tasks using only text instructions [8]. Table 1 shows the average cognitive load scores for each learning mode, rated on a 1-10 scale on which lower scores indicate less mental strain. As Table 1 shows, the combined audio, video and text condition produced the lowest cognitive load, underlining the potential of multimodal design to optimize learning. Overall, participants reported that when multiple sensory signals were presented together, they could learn and retain information better without feeling overwhelmed. Physiological data, including heart rate variability, corroborated these results [9]: the multimodal learning group showed fewer stress markers, implying that the design not only reduced cognitive load but also made learning more comfortable. These findings suggest that multimodal technology can make language acquisition more effective by reducing the risk of cognitive overload.

Table 1: Average Cognitive Load Scores by Learning Mode

| Learning Mode | Average Cognitive Load Score (1-10; lower = less load) |
|---|---|
| Text-only | 7.8 |
| Audio-only | 6.5 |
| Audio + Video | 4.9 |
| Audio + Video + Text | 4.2 |
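As a quick arithmetic check on Table 1, the relative reduction of each mode's mean score against the text-only baseline can be computed directly. The dictionary restates the table; `relative_reduction` is a hypothetical helper name.

```python
# Mean cognitive-load scores (1-10 scale, lower = less load), restated from Table 1
TABLE_1 = {
    "Text-only": 7.8,
    "Audio-only": 6.5,
    "Audio + Video": 4.9,
    "Audio + Video + Text": 4.2,
}


def relative_reduction(baseline: float, value: float) -> float:
    """Percentage by which value is lower than baseline."""
    return 100.0 * (baseline - value) / baseline


for mode, score in TABLE_1.items():
    if mode != "Text-only":
        pct = relative_reduction(TABLE_1["Text-only"], score)
        print(f"{mode}: {pct:.1f}% lower than text-only")
```

This gives about 16.7% (Audio-only), 37.2% (Audio + Video) and 46.2% (Audio + Video + Text). Because the 1-10 rating is an ordinal self-report scale, such percentages are descriptive only. They also differ from the 25% figure quoted in the text, which compares subtitled-video activities with text-instruction tasks rather than the Table 1 means.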

4.2. Attention Deployment and Multimodal Learning

We also examined how participants distributed their attention across modalities during the language-learning tasks. Eye-tracking evidence suggested that students in multimodal settings allocated their attention more effectively than those in unimodal (including traditional text-only) conditions. For instance, when audio, video and text were presented in synchrony, learners divided their attention fairly evenly, spending roughly 40 per cent of their time watching the video, 35 per cent reading and 25 per cent listening. By contrast, in the text-only condition participants’ attention was, by design, confined entirely to the text. Table 2 shows the average distribution of attention across modalities during the tasks [10]. As Table 2 shows, participants in the "Audio + Video + Text" condition displayed a more balanced allocation of attention, suggesting that multimodal design allows learners to process information from multiple sources without overtaxing any single modality. This balance appears not only to improve understanding but also to foster deeper engagement with the learning content. Participants in the multimodal conditions also reported greater focus and clarity during tasks. They described the audio as reinforcing the visuals, and the text as a reference for more complex information. This interplay of modalities supported a more integrated grasp of the language material. Overall, the findings indicate that multimodal learning environments not only reduce cognitive load but also direct attention more effectively, which makes them superior to unimodal and traditional learning environments in this study [11]. By engaging several sensory channels at once, these systems can improve the effectiveness and efficiency of language learning.

Table 2: Attention Distribution Across Modalities

| Learning Mode | Video (%) | Audio (%) | Text (%) |
|---|---|---|---|
| Text-only | - | - | 100 |
| Audio-only | - | 100 | - |
| Audio + Video | 55 | 45 | - |
| Audio + Video + Text | 40 | 25 | 35 |
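The text calls the three-modality allocation "more balanced" without defining balance. One way to make that claim quantitative, offered here as an assumption rather than the study's method, is the normalised Shannon entropy of each row of Table 2. The `TABLE_2` dictionary restates the table (with "-" entries as 0), and `balance_index` is a hypothetical name.

```python
import math

# Attention shares (%) per modality, restated from Table 2 ("-" entries as 0)
TABLE_2 = {
    "Text-only": {"video": 0, "audio": 0, "text": 100},
    "Audio-only": {"video": 0, "audio": 100, "text": 0},
    "Audio + Video": {"video": 55, "audio": 45, "text": 0},
    "Audio + Video + Text": {"video": 40, "audio": 25, "text": 35},
}


def balance_index(shares: dict[str, float]) -> float:
    """Normalised Shannon entropy of an attention distribution.

    0 means all attention on one modality; 1 means an even split across
    every modality listed in `shares` (hypothetical metric, not from the study).
    """
    if len(shares) < 2:
        raise ValueError("need at least two modalities")
    total = sum(shares.values())
    if total <= 0:
        raise ValueError("shares must sum to a positive value")
    probs = [v / total for v in shares.values() if v > 0]
    # H = sum of p * ln(1/p); zero shares contribute nothing (0 * ln 0 -> 0)
    entropy = sum(p * math.log(1.0 / p) for p in probs)
    return entropy / math.log(len(shares))


for mode, shares in TABLE_2.items():
    print(f"{mode}: {balance_index(shares):.2f}")
```

This yields 0.00 for both unimodal conditions, about 0.63 for Audio + Video and about 0.98 for Audio + Video + Text. Normalising by ln 3 (all three modalities) rather than by the number a condition actually used means unimodal conditions score 0 by construction and the two-modality condition cannot exceed ln 2 / ln 3, roughly 0.63.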

5. Conclusion

This study demonstrates the value of applying multimodal technology to CALL. By reducing cognitive load and focusing attention more effectively, multimodal learning environments produced more effective and productive learning experiences than unimodal approaches. The findings suggest that combining audio, video and text not only enhances students’ understanding and retention but also promotes a more balanced distribution of attention across modalities. Participants reported feeling more focused and clear-headed in multimodal activities, and physiological measures indicated lower cognitive stress. At the same time, the research highlights the need to design multimodal systems that avoid overloading learners: for the best learning outcomes, modalities must be integrated so that they complement one another. Future research should examine how individual learning styles and proficiency levels affect the performance of multimodal CALL systems. Virtual reality and artificial intelligence could also complement multimodal learning environments by enabling personalised, adaptive language learning. In conclusion, multimodal technology has transformative potential in language learning, offering new ways to manage cognitive load and attention allocation [12]. As learning technology grows more sophisticated, multimodal design will be critical to making CALL systems successful and meaningful for language learners.

References

[1]. Chen, Jieli, et al. "Situation awareness in AI-based technologies and multimodal systems: Architectures, challenges and applications." IEEE Access (2024).

[2]. Rahmanu, I. Wayan Eka Dian, and Gyöngyvér Molnár. "Multimodal immersion in English language learning in higher education: A systematic review." Heliyon (2024).

[3]. Dadkhah, Arash, and Shuliang Jiao. "Integrating photoacoustic microscopy with other imaging technologies for multimodal imaging." Experimental Biology and Medicine 246.7 (2021): 771-777.

[4]. Qushem, Umar Bin, et al. "Multimodal technologies in precision education: Providing new opportunities or adding more challenges?" Education Sciences 11.7 (2021): 338.

[5]. Yan, Lixiang, et al. "Scalability, sustainability, and ethicality of multimodal learning analytics." LAK22: 12th International Learning Analytics and Knowledge Conference. 2022.

[6]. Alwahaby, Haifa, et al. "The evidence of impact and ethical considerations of Multimodal Learning Analytics: A Systematic Literature Review." The Multimodal Learning Analytics Handbook (2022): 289-325.

[7]. Lee, Gyeong-Geon, et al. "Multimodality of AI for education: Towards artificial general intelligence." arXiv preprint arXiv:2312.06037 (2023).

[8]. Pérez-Paredes, Pascual. "A systematic review of the uses and spread of corpora and data-driven learning in CALL research during 2011–2015." Computer Assisted Language Learning 35.1-2 (2022): 36-61.

[9]. Engwall, Olov, and José Lopes. "Interaction and collaboration in robot-assisted language learning for adults." Computer Assisted Language Learning 35.5-6 (2022): 1273-1309.

[10]. Schmidt, Torben, and Thomas Strasser. "Artificial intelligence in foreign language learning and teaching: a CALL for intelligent practice." Anglistik: International Journal of English Studies 33.1 (2022): 165-184.

[11]. Soyoof, Ali, et al. "Informal digital learning of English (IDLE): A scoping review of what has been done and a look towards what is to come." Computer Assisted Language Learning 36.4 (2023): 608-640.

[12]. Hiver, Phil, et al. "Engagement in language learning: A systematic review of 20 years of research methods and definitions." Language Teaching Research 28.1 (2024): 201-230.

Cite this article

Chao, Y. (2025). Applications of Multimodal Technology in Computer-Assisted Language Learning: Impacts on Cognitive Load and Attention Distribution. Applied and Computational Engineering, 118, 107-112.

Data availability

The datasets used and/or analyzed during the current study will be available from the authors upon reasonable request.


About volume

Volume title: Proceedings of the 3rd International Conference on Software Engineering and Machine Learning

© 2024 by the author(s). Licensee EWA Publishing, Oxford, UK. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.
