1. Introduction

Stock price prediction is a complex and challenging field whose origins can be traced back to the early development of financial markets. As the economy has grown and financial markets have matured, investors have paid increasing attention to changes in stock prices. Traditional prediction methods rely on fundamental analysis or technical analysis. The former makes predictions from basic data such as company financial statements and industry conditions, while the latter relies mainly on technical indicators derived from historical prices and trading volumes. However, these methods often fall short in highly dynamic and complex market environments.

In recent years, the rapid development of financial technology has brought new opportunities for stock price prediction; in particular, the rise of big data and machine learning has transformed the field. With their strong data processing and self-learning capabilities, machine learning algorithms have become important tools for analyzing market trends and predicting stock prices. They can process large amounts of historical data, allowing predictive models to capture the complexity of the market through multi-dimensional feature extraction and pattern recognition.

Machine learning algorithms are applied to stock price prediction in many ways. First, regression analysis is one of the most common methods for predicting future price levels: linear regression, decision tree regression, support vector regression, and similar techniques build predictive models by learning the relationship between historical prices and other related features. Second, classification algorithms play an important role in predicting whether prices will rise or fall. For example, models such as logistic regression, random forests, and gradient boosted trees can classify future price movements as rising, falling, or stable, giving investors a basis for decision-making.
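To make the two framings concrete, the sketch below derives both kinds of target from one series of closing prices: the next day's close as a regression target, and a rise/fall/stable label as a classification target. The function name `make_targets` and the 0.5% threshold separating "stable" from a real move are hypothetical choices for illustration, not values from this article.

```python
from typing import List, Tuple


def make_targets(closes: List[float], threshold: float = 0.005) -> Tuple[List[float], List[str]]:
    """Build next-day targets from a list of closing prices.

    Regression target: the next day's closing price.
    Classification target: 'rise', 'fall' or 'stable', depending on whether the
    next day's relative return is above +threshold, below -threshold, or in between.
    The default threshold (0.5%) is a hypothetical choice.
    """
    reg_targets: List[float] = []
    cls_labels: List[str] = []
    for today, tomorrow in zip(closes[:-1], closes[1:]):
        reg_targets.append(tomorrow)             # regression: predict the price level
        ret = (tomorrow - today) / today         # relative one-day return
        if ret > threshold:
            cls_labels.append("rise")
        elif ret < -threshold:
            cls_labels.append("fall")
        else:
            cls_labels.append("stable")
    return reg_targets, cls_labels
```

For example, the prices `[100.0, 101.0, 100.9, 99.0]` give regression targets `[101.0, 100.9, 99.0]` and labels `["rise", "stable", "fall"]`; the last price has no successor and therefore produces no target.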

In addition, deep learning, an important branch of machine learning, has been widely applied to stock price prediction in recent years. Neural networks, especially recurrent neural networks (RNNs) and long short-term memory networks (LSTMs), can capture nonlinear features and temporal dependencies in time series data. Through end-to-end learning, these models extract features automatically, which reduces the burden of manual feature selection and can improve prediction accuracy. This article proposes a new approach that uses the triangle topology aggregation optimizer to optimize a long short-term memory network for stock price prediction.

2. Data set source

GOOGL, Google's stock, is favored by many investors because of the company's outstanding financial performance and dominant position in the technology industry. Despite market volatility, the stock is widely regarded as a robust long-term investment given the company's financial condition and industry position. The Google stock price dataset contains historical price data from June 14, 2016 to September 21, 2024. A sample of the dataset is shown in Table 1.

Table 1: Sample rows from the dataset.

| Date | Open | High | Low | Close |
|---|---|---|---|---|
| 2016-06-14 00:00:00-04:00 | 35.736238 | 36.035004 | 35.568647 | |
| 2016-06-15 00:00:00-04:00 | 35.861929 | 36.060439 | 35.777636 | |
| 2016-06-16 00:00:00-04:00 | 35.657932 | 35.744721 | 35.076858 | |
| 2016-06-17 00:00:00-04:00 | 35.345697 | 35.354179 | 34.338272 | |
| 2016-06-20 00:00:00-04:00 | 34.852907 | 35.037954 | 34.585566 | |
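Before a series like this can be fed to an LSTM, it has to be cut into fixed-length input windows with a target value, and split chronologically into training, validation, and test periods. The article does not describe its preprocessing, so the following is only a minimal sketch under stated assumptions: a single price column, a hypothetical window length of 20, hypothetical 70/15/15 split fractions, and min-max scaling fitted on the training period only. The helper names `make_windows` and `prepare` are illustrative.

```python
import numpy as np


def make_windows(series: np.ndarray, window: int):
    """Each X row holds `window` consecutive values; y is the value that follows them."""
    X = np.stack([series[i:i + window] for i in range(len(series) - window)])
    y = series[window:]
    return X, y


def prepare(series: np.ndarray, window: int = 20, train_frac: float = 0.7, val_frac: float = 0.15):
    """Scale, window and split a 1-D price series without shuffling (hypothetical settings)."""
    n = len(series)
    n_train = int(n * train_frac)
    n_val = int(n * val_frac)
    # Fit min-max scaling on the training period only, so no future prices leak in.
    lo, hi = float(series[:n_train].min()), float(series[:n_train].max())
    scaled = (series - lo) / (hi - lo)
    X, y = make_windows(scaled, window)
    # Assign each sample by the index of its target, keeping chronological order.
    target_idx = np.arange(window, n)
    train = target_idx < n_train
    val = (target_idx >= n_train) & (target_idx < n_train + n_val)
    test = target_idx >= n_train + n_val
    return (X[train], y[train]), (X[val], y[val]), (X[test], y[test]), (lo, hi)
```

Assigning samples by the position of their target keeps every validation and test target strictly later than every training target, which is what a forecasting evaluation requires; shuffling before splitting would break that ordering.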

3. Method

3.1. Triangle topology aggregation optimizer

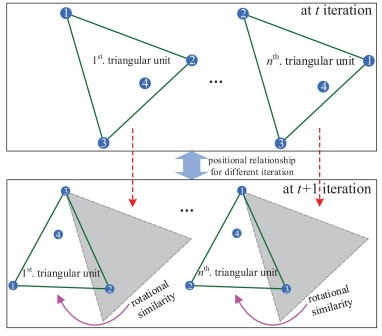

The triangle topology aggregation optimizer is a recently proposed optimization algorithm aimed at the challenges of large-scale optimization problems, such as high dimensionality, nonlinearity, and complex constraints. Its core idea is to explore the search space by constructing triangles and exploiting their geometric properties, thereby improving convergence speed and solution quality [6]. The structure of the triangle topology aggregation optimizer model is shown in Figure 1.

Figure 1: The structure of the triangle topology aggregation optimizer model.

Firstly, the triangle topology aggregation optimizer relies on a hierarchical understanding of the problem space and uses triangles as basic elements to describe the local characteristics of the function. By decomposing the optimization problem into multiple interdependent triangular structures, the algorithm can better capture the local variations of the objective function. This hierarchical representation enables the algorithm to perform independent optimization at each level while maintaining the coherence of the overall structure. By adjusting the vertex positions of the triangle, the algorithm can gradually approach the optimal solution.

Secondly, the optimizer combines local and global search strategies. In the local search stage, the algorithm analyzes the information inside each triangle and refines the current local minimum, searching more deeply near solutions already found. In the global search stage, it uses the geometric properties of triangles to explore a wider solution space across different local extremum regions. This dual strategy lets the triangle topology aggregation optimizer search efficiently while avoiding getting stuck in local optima [7].
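The ideas in the two paragraphs above (groups of three individuals forming triangles, a local step toward a point inside each triangle, a global pull toward the best solution found so far, and greedy fitness comparison) can be illustrated with a small population search. This is a heavily simplified, hypothetical sketch written for illustration; it is not the published TTAO update rule, whose equations differ. The shrinking Gaussian exploration noise, the test function `sphere`, and all parameter values are assumptions.

```python
import numpy as np


def sphere(x: np.ndarray) -> float:
    """Toy objective with its minimum 0 at the origin."""
    return float(np.sum(x ** 2))


def triangle_search(f, dim, pop_size=30, iters=200, lb=-5.0, ub=5.0, seed=0):
    """Hypothetical triangle-based population search (NOT the published TTAO rules)."""
    rng = np.random.default_rng(seed)
    pop = rng.uniform(lb, ub, size=(pop_size, dim))
    fit = np.array([f(x) for x in pop])
    n_tri = pop_size // 3
    for t in range(iters):
        best = pop[np.argmin(fit)].copy()
        # Exploration noise that shrinks linearly over time (an assumption).
        sigma = 0.1 * (ub - lb) * (1.0 - t / iters)
        # Randomly group individuals into triangles of three vertices each.
        triangles = rng.permutation(pop_size)[: 3 * n_tri].reshape(n_tri, 3)
        for tri in triangles:
            # Local information: a random convex combination of the three vertices,
            # i.e. a random point inside the triangle.
            inner = rng.dirichlet(np.ones(3)) @ pop[tri]
            for i in tri:
                r1, r2 = rng.random(2)
                # Move toward the in-triangle point (local) and the best-so-far (global).
                cand = pop[i] + r1 * (inner - pop[i]) + r2 * (best - pop[i])
                cand = np.clip(cand + sigma * rng.normal(size=dim), lb, ub)
                # Greedy fitness comparison: keep the candidate only if it is better.
                fc = f(cand)
                if fc < fit[i]:
                    pop[i], fit[i] = cand, fc
    i_best = int(np.argmin(fit))
    return pop[i_best].copy(), float(fit[i_best])
```

Calling `triangle_search(sphere, dim=5)` returns the best point found and its objective value. Because replacements are greedy, no individual's fitness ever gets worse, so the population's best value is non-increasing over iterations.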

Another innovation of the algorithm is its adaptability. The triangle topology aggregation optimizer adjusts its parameters dynamically based on historical search experience, changing the search direction and step size in real time to suit different optimization environments and problem characteristics. This broadens its applicability across application scenarios.
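The text does not specify how TTAO adapts its step size. As an illustration of the general idea of adjusting the step size from search history, the sketch below uses a different, classic mechanism: Rechenberg's 1/5 success rule inside a (1+1) evolution strategy. After every window of iterations, the step size grows if more than one fifth of the mutations improved the solution and shrinks if fewer did. The factor 0.817, the window of 20 iterations, and the function names are assumptions for this example.

```python
import random
from typing import Callable, List, Tuple


def sphere(x: List[float]) -> float:
    """Toy objective with its minimum 0 at the origin."""
    return sum(v * v for v in x)


def one_plus_one_es(
    f: Callable[[List[float]], float],
    x0: List[float],
    sigma: float = 1.0,
    iters: int = 500,
    window: int = 20,
    seed: int = 0,
) -> Tuple[List[float], float, float]:
    """(1+1)-ES with the 1/5 success rule (a stand-in, not TTAO's own mechanism)."""
    rng = random.Random(seed)
    x, fx = list(x0), f(x0)
    successes = 0
    for k in range(1, iters + 1):
        # Mutate every coordinate with Gaussian noise of the current step size.
        cand = [xi + sigma * rng.gauss(0.0, 1.0) for xi in x]
        fc = f(cand)
        if fc < fx:
            x, fx = cand, fc
            successes += 1
        if k % window == 0:
            rate = successes / window
            if rate > 0.2:
                sigma /= 0.817   # searching too cautiously: enlarge the step
            elif rate < 0.2:
                sigma *= 0.817   # too many failures: shrink the step
            successes = 0
    return x, fx, sigma
```

The step size thus tracks the local geometry of the objective: far from the optimum, large steps succeed often and the step grows; close to it, most large steps fail and the step contracts.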

Finally, the triangle topology aggregation optimizer performs well in practical applications and is widely used in engineering design, machine learning parameter tuning, and other fields that require efficient optimization. On problems that reflect real-world complexity, it shows good convergence and stable solutions. Because it is built on geometric operations rather than gradients, the triangle topology aggregation optimizer can handle not only standard optimization problems but also complex constraints and discontinuous objective functions.

3.2. Long Short Term Memory Network

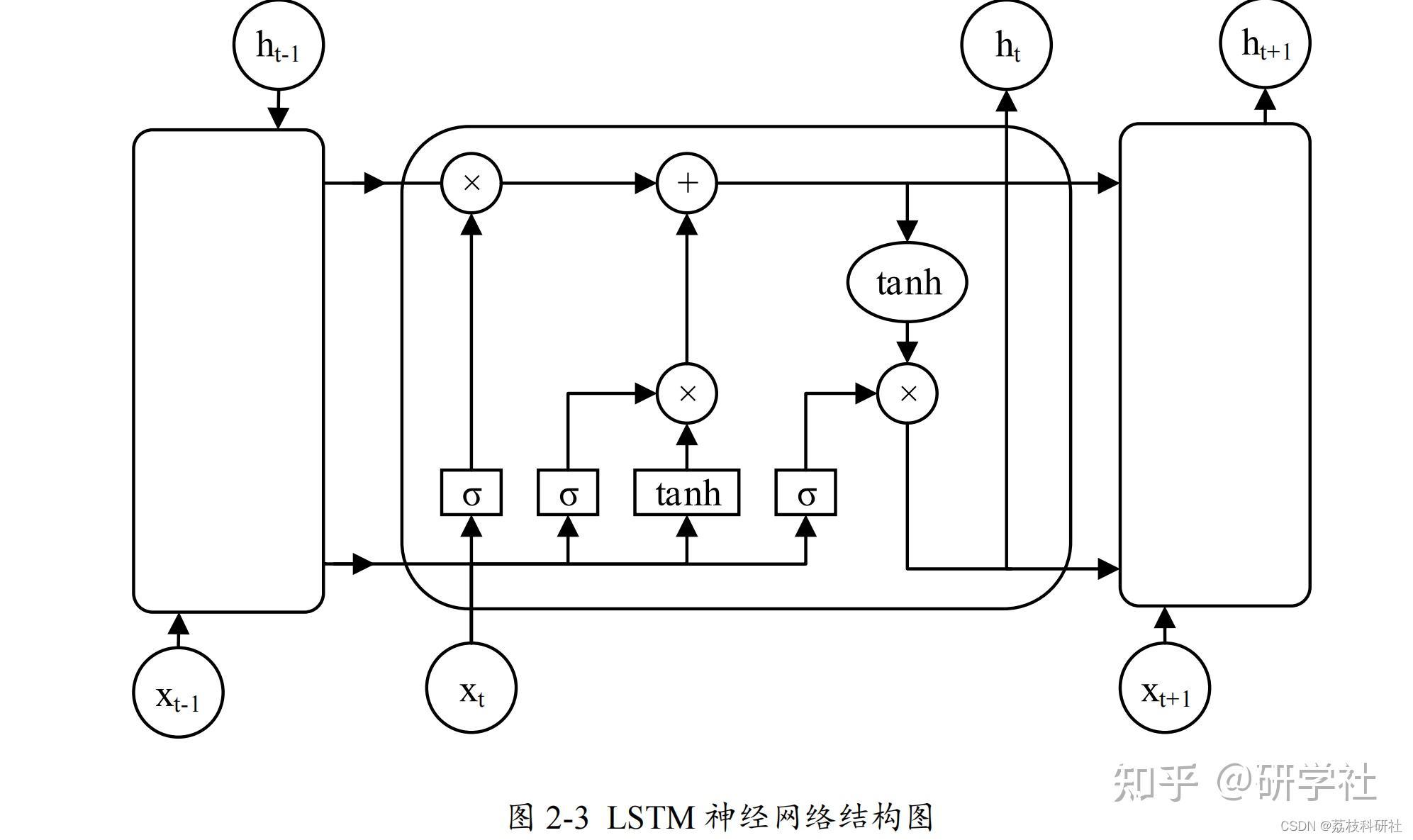

Long Short-Term Memory (LSTM) is a special type of recurrent neural network (RNN) designed to mitigate the vanishing and exploding gradient problems that traditional RNNs face on long sequences. The core of LSTM is its gating mechanism, which enables the network to learn dependencies across long time intervals in the data.

An LSTM unit has three gates: the forget gate, the input gate, and the output gate. The forget gate decides which information is no longer important and should be removed from the cell state. The input gate decides which new information should be stored in the cell state. Finally, the output gate decides which parts of the cell state are exposed in the next hidden state; like the other gates, it is computed from the current input and the previous hidden state.
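The article does not write out the gate equations. For reference, the standard LSTM cell (the common formulation without peephole connections) computes, from the input $x_t$, the previous hidden state $h_{t-1}$, and the previous cell state $c_{t-1}$:

$$
\begin{aligned}
f_t &= \sigma\left(W_f x_t + U_f h_{t-1} + b_f\right) && \text{(forget gate)}\\
i_t &= \sigma\left(W_i x_t + U_i h_{t-1} + b_i\right) && \text{(input gate)}\\
\tilde{c}_t &= \tanh\left(W_c x_t + U_c h_{t-1} + b_c\right) && \text{(candidate values)}\\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t && \text{(cell state update)}\\
o_t &= \sigma\left(W_o x_t + U_o h_{t-1} + b_o\right) && \text{(output gate)}\\
h_t &= o_t \odot \tanh\left(c_t\right) && \text{(hidden state)}
\end{aligned}
$$

Here $\sigma$ is the logistic sigmoid and $\odot$ is element-wise multiplication. Because $c_t$ depends on $c_{t-1}$ through the multiplicative factor $f_t$ rather than through a squashing nonlinearity, gradients can flow across many time steps when $f_t$ stays close to 1.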

At each time step, the LSTM unit updates its cell state and hidden state through these gates. The cell state is carried from one time step to the next and holds information about earlier steps, while the hidden state is passed both to the next time step and to the next layer of the network. This design lets LSTM capture long-term dependencies, because it can selectively retain or forget information instead of overwriting its whole state at every step as a simple RNN does.
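A single time step of the update described above can be written in a few lines of NumPy. This is a minimal sketch for illustration; the stacked weight layout (gate order input, forget, candidate, output) and the function names are assumptions, and deep learning frameworks implement the same computation far more efficiently.

```python
import numpy as np


def sigmoid(z: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-z))


def lstm_step(x_t, h_prev, c_prev, W, U, b):
    """One LSTM time step.

    W: (4H, D) input weights, U: (4H, H) recurrent weights, b: (4H,) biases,
    stacked in the gate order [input, forget, candidate, output] (an assumed layout).
    """
    H = h_prev.shape[0]
    z = W @ x_t + U @ h_prev + b
    i = sigmoid(z[0:H])            # input gate: how much new information to write
    f = sigmoid(z[H:2 * H])        # forget gate: how much old cell state to keep
    g = np.tanh(z[2 * H:3 * H])    # candidate values to write
    o = sigmoid(z[3 * H:4 * H])    # output gate: how much of the cell state to expose
    c = f * c_prev + i * g         # new cell state, carried to the next time step
    h = o * np.tanh(c)             # new hidden state, sent to the next step and layer
    return h, c
```

Processing a sequence means calling `lstm_step` in a loop, feeding each step's `h` and `c` into the next call. Setting the forget-gate bias to a large positive value makes `f` close to 1, so the cell state is copied almost unchanged; this is the mechanism that lets information survive over many steps.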

LSTMs are usually trained with backpropagation through time; thanks to the gating mechanism, they handle long sequences better and are less affected by the vanishing gradient problem. As a result, LSTMs perform well in natural language processing, speech recognition, and time series prediction, especially in tasks involving large amounts of sequential data and long-range dependencies [9]. The principle structure of LSTM is shown in Figure 2.

Figure 2: The principle structure of LSTM.

3.3. LSTM optimized by triangle topology aggregation optimizer

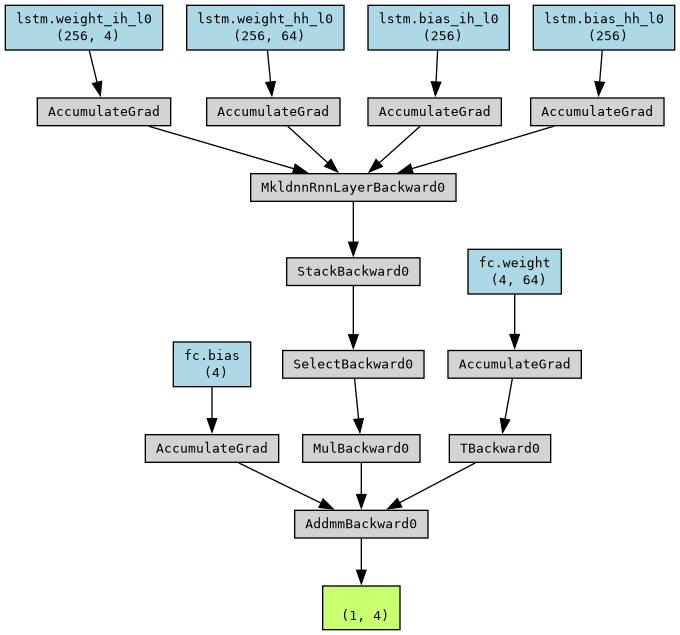

The Triangle Topology Aggregation Optimizer (TTAO) is an optimization algorithm based on a triangular topology; here it optimizes the parameters of the LSTM network by simulating the interactions between triangle vertices. During optimization, TTAO combines global and local aggregation strategies with the information of individuals in each triangular unit to balance global and local search, thereby improving the convergence speed and performance of the LSTM model [10]. The structure of the model is shown in Figure 3.

Figure 3: The structure of the model

TTAO updates the LSTM weights dynamically through linear combinations and fitness comparisons between individuals within triangular topological units. This maintains diversity in the weight updates, helps avoid local optima, and enhances the model's generalization ability.
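One way to picture "linear combinations and fitness comparisons" on LSTM weights is to flatten all weights of a network into a single vector per individual, score each vector by its prediction error, and update vertices of a triangle greedily. The minimal PyTorch sketch below does exactly that. It assumes fitness is the mean squared error on a batch; `TinyLSTM`, `fitness`, `triangle_step`, and the network sizes are hypothetical, and the update rule is a simplified stand-in, not the published TTAO equations. `parameters_to_vector` and `vector_to_parameters` are standard `torch.nn.utils` helpers.

```python
import torch
import torch.nn as nn
from torch.nn.utils import parameters_to_vector, vector_to_parameters


class TinyLSTM(nn.Module):
    """Small LSTM regressor used only to illustrate the idea (hypothetical sizes)."""

    def __init__(self, input_size: int = 1, hidden_size: int = 8):
        super().__init__()
        self.lstm = nn.LSTM(input_size, hidden_size, batch_first=True)
        self.head = nn.Linear(hidden_size, 1)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        out, _ = self.lstm(x)           # (batch, seq_len, hidden_size)
        return self.head(out[:, -1])    # predict from the last time step


@torch.no_grad()
def fitness(model: nn.Module, weights: torch.Tensor, X: torch.Tensor, y: torch.Tensor) -> float:
    """Load a flat weight vector into the model and return its MSE (lower is better)."""
    vector_to_parameters(weights, model.parameters())
    return nn.functional.mse_loss(model(X), y).item()


@torch.no_grad()
def triangle_step(model, pop, fit, tri, X, y, gen):
    """One hypothetical update inside a triangle of three flat weight vectors."""
    w = torch.rand(3, generator=gen)
    w = w / w.sum()                                        # convex combination weights
    combo = sum(w[k] * pop[j] for k, j in enumerate(tri))  # a point inside the triangle
    for j in tri:
        r = torch.rand(1, generator=gen).item()
        cand = pop[j] + r * (combo - pop[j])               # move vertex j toward that point
        fc = fitness(model, cand, X, y)
        if fc < fit[j]:                                    # greedy fitness comparison
            pop[j], fit[j] = cand, fc
```

A typical call builds three perturbed copies of a model's weights with `parameters_to_vector(model.parameters())`, scores them with `fitness`, and then applies `triangle_step(model, pop, fit, (0, 1, 2), X, y, gen)`. Because updates are greedy, the best fitness in the triangle never gets worse.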

4. Result

When the Triangular Topology Aggregation Optimizer (TTAO) is used to optimize the LSTM for regression prediction, the LSTM layer is configured as follows: the input feature dimension (input_size) is 10, the number of hidden-state features (hidden_size) is 128, the number of stacked layers (num_layers) is 2, bias terms are enabled (bias=True), inputs and outputs use batch-first dimension order (batch_first=True), the dropout probability is 0.2, the LSTM is bidirectional (bidirectional=True), and the projection size (proj_size) is 0, meaning no projection is applied.
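These settings map directly onto the arguments of PyTorch's `torch.nn.LSTM`. The sketch below shows that mapping. It assumes the 0.2 dropout value is passed to the LSTM's own `dropout` argument (which applies dropout between stacked layers), and the linear output head that turns the LSTM output into one predicted price is an assumption, since the text does not describe it. `StockLSTM` is a hypothetical name.

```python
import torch
import torch.nn as nn


class StockLSTM(nn.Module):
    """LSTM regressor with the settings listed above; the head is an assumption."""

    def __init__(self):
        super().__init__()
        self.lstm = nn.LSTM(
            input_size=10,       # input feature dimension
            hidden_size=128,     # hidden-state features per direction
            num_layers=2,        # two stacked LSTM layers
            bias=True,           # use bias terms
            batch_first=True,    # tensors shaped (batch, seq_len, features)
            dropout=0.2,         # dropout on the outputs of every layer except the last
            bidirectional=True,  # forward and backward passes over the sequence
            proj_size=0,         # no projection of the hidden state
        )
        # A bidirectional LSTM concatenates both directions: 2 * 128 = 256 features.
        self.head = nn.Linear(2 * 128, 1)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        out, _ = self.lstm(x)                # (batch, seq_len, 256)
        return self.head(out[:, -1, :])      # one prediction per sequence
```

For a batch of 4 sequences of length 30, `StockLSTM()(torch.randn(4, 30, 10))` returns a tensor of shape `(4, 1)`. Reading only the last time step is a common simplification; in a bidirectional LSTM the backward direction has seen only the final input at that position, so using the final hidden states `h_n` of both directions or pooling over time are alternatives.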

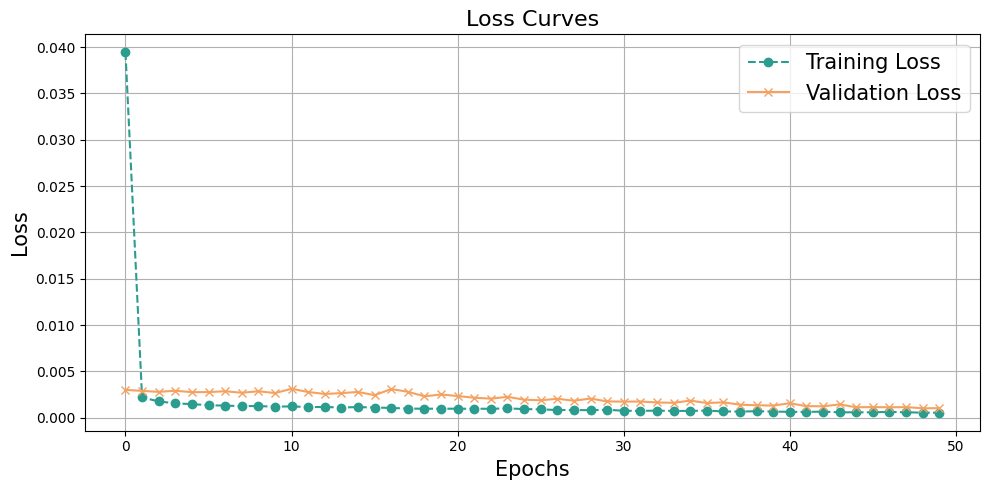

After configuring the model, we train it on the dataset to predict stock prices. Training runs for 50 epochs, and the loss curves for the training and validation sets are shown in Figure 4.

Figure 4: The numerical change curves of loss for the training and validation sets.

As the loss curves show, the training loss decreases from 0.04 to below 0.005 and converges, while the validation loss remains below 0.005. This indicates that the model fits stock prices well on both the training and validation sets.

We evaluated the trained model on a test set and compared the actual stock prices with the predicted ones. The results are shown in Figure 5.

Figure 5: Actual versus predicted stock prices.

On the test set, the model's predicted stock prices are very close to the actual values, tracking both the price levels and the overall trend accurately.

The model evaluation metrics are shown in Table 2.

Table 2: Model evaluation metrics.

| Set | MSE | RMSE | MAE | MAPE | R² |
|---|---|---|---|---|---|
| Training set | 1.938 | 1.392 | 1.353 | 103.974 | -133.917 |
| Test set | 1.944 | 1.394 | 1.36 | 104.208 | -145.967 |

According to the evaluation metrics, the MSE is 1.938 on the training set and 1.944 on the test set, and the RMSE is 1.392 on the training set and 1.394 on the test set. The small differences between the training and test metrics suggest that the model generalizes well and can be expected to perform similarly on new data.
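For reference, the five metrics in Table 2 follow standard definitions, sketched below in plain Python. Two points are assumptions, because the article does not state them: MAPE is expressed in percent, and all metrics are computed on the same scale (scaled or original prices) for both sets. The values depend strongly on that choice. By definition, R² equals 0 for a model that always predicts the mean of the actual values and becomes negative when the model's squared error exceeds that baseline. The function name `regression_metrics` is hypothetical.

```python
import math
from typing import Dict, Sequence


def regression_metrics(y_true: Sequence[float], y_pred: Sequence[float]) -> Dict[str, float]:
    """MSE, RMSE, MAE, MAPE (in percent) and R² for paired actual/predicted values."""
    n = len(y_true)
    errors = [p - t for t, p in zip(y_true, y_pred)]
    mse = sum(e * e for e in errors) / n
    mae = sum(abs(e) for e in errors) / n
    # MAPE is undefined when any actual value is 0.
    mape = 100.0 * sum(abs(e / t) for e, t in zip(errors, y_true)) / n
    mean_t = sum(y_true) / n
    ss_res = sum(e * e for e in errors)                  # residual sum of squares
    ss_tot = sum((t - mean_t) ** 2 for t in y_true)      # spread around the mean
    r2 = 1.0 - ss_res / ss_tot
    return {"MSE": mse, "RMSE": math.sqrt(mse), "MAE": mae, "MAPE": mape, "R2": r2}
```

For actual values `[1, 2, 3, 4]` and predictions `[1, 2, 3, 5]`, this gives MSE 0.25, RMSE 0.5, MAE 0.25, MAPE 6.25 and R² 0.8, while the reversed predictions `[4, 3, 2, 1]` give R² of -3.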

5. Conclusion

Stock price prediction is a complex and challenging research field, and with the development of deep learning in recent years, more and more algorithms have been applied to it. This article proposes a new method that uses a triangular topology aggregation optimizer to optimize a long short-term memory network (LSTM) for stock price prediction, offering new perspectives and possibilities for research in this field.

To build and evaluate the model, we used a historical stock price dataset divided into a training set and a validation set. The training loss decreased from an initial 0.04 to below 0.005 and gradually converged, showing that the model learned effectively during training. The validation loss remained below 0.005, which further supports the model's effectiveness and indicates that it also performs well on unseen data.

In the testing phase, we evaluated the predictions on a test set separate from the training set. The predicted values are very close to the actual values, and the model captures changes in stock prices both in level and in trend. This suggests that the triangular topology aggregation optimizer effectively improves the performance of LSTM in time series prediction.

In terms of evaluation metrics, the mean squared error (MSE) is 1.938 on the training set and 1.944 on the test set, so the two sets give very similar results. The root mean squared error (RMSE) is 1.392 on the training set and 1.394 on the test set, again indicating that the model performs well on the training data and generalizes well. We therefore conclude that the model can maintain good predictive performance on new data.

In summary, this article combines the triangle topology aggregation optimizer with LSTM to provide a novel and effective solution for stock price prediction. Training and evaluation show strong accuracy and generalization ability, indicating broad potential for applications in financial markets. Future research can explore other combinations of optimizers and model architectures to further improve predictive performance. Losses are an ever-present risk for investors; effective predictive tools can reduce this risk to some extent and improve investment returns.

References

[1]. Jiyang C, Sunil T, Djebbouri K, et al. Forecasting Bitcoin prices using artificial intelligence: Combination of ML, SARIMA, and Facebook Prophet models[J]. Technological Forecasting and Social Change, 2024, 198: 122938.

[2]. Harish K, Sudhir S, P. N, et al. A two level ensemble classification approach to forecast bitcoin prices[J]. Kybernetes, 2023, 52(11): 5041-5067.

[3]. Ruchi G, E. J N. Metaheuristic Assisted Hybrid Classifier for Bitcoin Price Prediction[J]. Cybernetics and Systems, 2023, 54(7): 1037-1061.

[4]. Tyson M. Use TensorFlow to predict Bitcoin prices[J]. InfoWorld.com, 2023.

[5]. Sina F. Designing a forecasting assistant of the Bitcoin price based on deep learning using market sentiment analysis and multiple feature extraction[J]. Soft Computing, 2023, 27(24): 18803-18827.

[6]. Moinak M, B. D V, Michael F. Quantifying the asymmetric information flow between Bitcoin prices and electricity consumption[J]. Finance Research Letters, 2023, 57.

[7]. Xiangling W, Shusheng D. The impact of the Bitcoin price on carbon neutrality: Evidence from futures markets[J]. Finance Research Letters, 2023, 56.

[8]. W. J G, Sami J B, Foued S, et al. Explainable artificial intelligence modeling to forecast bitcoin prices[J]. International Review of Financial Analysis, 2023, 88.

[9]. Brahim G, Sahbi M N, Jean-Michel S, et al. Interactions between investors’ fear and greed sentiment and Bitcoin prices[J]. North American Journal of Economics and Finance, 2023, 67.

[10]. Zaman S, Yaqub U, Saleem T. Analysis of Bitcoin’s price spike in context of Elon Musk’s Twitter activity[J]. Global Knowledge Memory and Communication, 2023, 72(4/5): 341-355.

Cite this article

Jin, Y. (2025). Optimizing Stock Price Prediction Based on Triangular Topology Aggregation Optimizer Using Long Short-term Memory Network. Applied and Computational Engineering, 136, 27-34.

Data availability

The datasets used and/or analyzed during the current study will be available from the authors upon reasonable request.

Disclaimer/Publisher's Note

The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of EWA Publishing and/or the editor(s). EWA Publishing and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

About volume

Volume title: Proceedings of the 5th International Conference on Materials Chemistry and Environmental Engineering

© 2024 by the author(s). Licensee EWA Publishing, Oxford, UK. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license. Authors who publish this series agree to the following terms:

1. Authors retain copyright and grant the series right of first publication with the work simultaneously licensed under a Creative Commons Attribution License that allows others to share the work with an acknowledgment of the work's authorship and initial publication in this series.

2. Authors are able to enter into separate, additional contractual arrangements for the non-exclusive distribution of the series's published version of the work (e.g., post it to an institutional repository or publish it in a book), with an acknowledgment of its initial publication in this series.

3. Authors are permitted and encouraged to post their work online (e.g., in institutional repositories or on their website) prior to and during the submission process, as it can lead to productive exchanges, as well as earlier and greater citation of published work (See Open access policy for details).
