Volume 150

Published on April 2025

Volume title: Proceedings of the 3rd International Conference on Software Engineering and Machine Learning

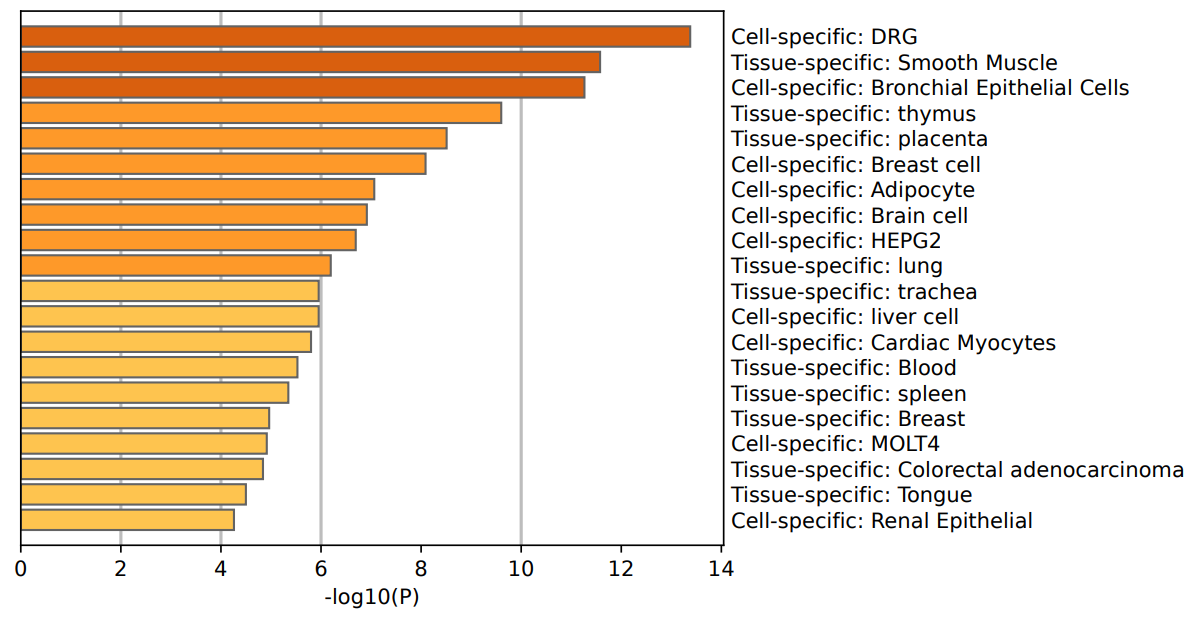

Breast cancer is one of the most common malignant tumors among women globally, posing significant threats to women's health and lives. Identifying specific molecular markers is crucial for early diagnosis, precision treatment, and accurate prognostic assessment of breast cancer. In this study, spatial transcriptomics technology combined with machine learning methods successfully identified molecular markers capable of effectively distinguishing invasive ductal carcinoma (IDC) from ductal carcinoma in situ (DCIS) and lobular carcinoma in situ (LCIS). By analyzing the gene expression profiles of 36,601 genes across 3,798 cells, significant differentially expressed genes (DEGs) were screened using the DEsingle method. Functional enrichment analysis indicated that these genes are significantly associated with breast cancer-related pathways, breast cell-specific expression, and the regulation of core transcription factors, such as TP53, SP1, and NFKB1. Further classification analysis employing machine learning models including random forest, decision tree, support vector machine, and logistic regression revealed that the random forest model demonstrated the highest performance, achieving an accuracy rate of 95.78%. Ultimately, ten key molecular markers were identified: MGP, ALB, S100G, KRT37, SERPINA3, AC087379.2, ZNF350-AS1, IGHG3, IGHG4, and IGKC. These markers exhibited robust discrimination between IDC and DCIS/LCIS, suggesting their potential roles in tumor invasion and metastasis. This study provides novel molecular evidence for early diagnosis, individualized treatment, and prognostic evaluation of breast cancer, contributing new research insights and theoretical support for precision medicine approaches in breast cancer.


The rapid advancement of artificial intelligence (AI) has revolutionized urban flash flood risk assessment, offering transformative solutions from real-time warning systems to long-term resilience planning. Coastal and low-lying urban areas, housing over 40% of the global population, face escalating flood risks due to climate change, sea-level rise, and intensified extreme weather. Traditional flood modeling, reliant on physical parameters, struggles with computational inefficiency and data scarcity. AI-driven approaches, particularly deep learning (DL) and neural networks, address these gaps by leveraging multi-source data fusion, dynamic prediction, and reinforcement learning (RL) to enhance accuracy and efficiency. Techniques such as convolutional neural networks (CNNs) and U-Net architectures enable automated flood mapping using satellite and sensor data, while hybrid models integrating hydrodynamic simulations with machine learning (ML) improve inundation forecasting. Despite this progress, challenges persist, including data quality in developing regions, model generalizability, and ethical concerns in AI deployment. This review highlights AI's potential to bridge technical gaps, optimize emergency responses, and inform resilient urban planning, while underscoring the need for robust datasets, interdisciplinary collaboration, and ethical frameworks to ensure equitable and sustainable flood risk management.


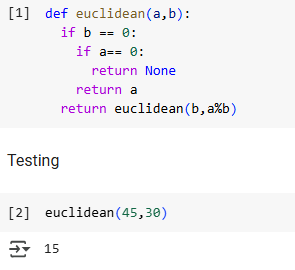

The Fibonacci sequence, introduced by the Italian mathematician Leonardo of Pisa (c. 1170–1250), also known as Fibonacci, was a pivotal concept in medieval mathematics. His influential book, Liber Abaci (The Book of Calculation, published in 1202), not only disseminated this sequence but also introduced the Hindu-Arabic numeral system to Europe. Beyond mathematics, the sequence has applications in computer science, the natural sciences, finance, economics, art, and architecture, bridging theoretical and practical domains. Given this widespread significance, understanding Fibonacci's contributions is valuable across many disciplines. This study introduces the Fibonacci sequence and explores its applications in solving a diverse array of problems. Through algorithmic experiments and coded implementations, it evaluates the computational efficiency of methods for computing the sequence and examines its wider mathematical significance.
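
The abstract does not say which algorithms the study compares, so the following is only an illustrative sketch of how the cost of computing Fibonacci numbers can vary with the method. The function names and the convention F(0) = 0, F(1) = 1 are assumptions made for this example, not details taken from the paper.

```python
def fib_naive(n: int) -> int:
    """Direct recursion from the definition; the number of calls grows exponentially."""
    if n < 2:
        return n
    return fib_naive(n - 1) + fib_naive(n - 2)


def fib_iterative(n: int) -> int:
    """Bottom-up loop: n additions and constant extra memory."""
    a, b = 0, 1
    for _ in range(n):
        a, b = b, a + b
    return a


def fib_fast_doubling(n: int) -> int:
    """O(log n) arithmetic steps using the doubling identities:
    F(2m) = F(m) * (2*F(m+1) - F(m)) and F(2m+1) = F(m)^2 + F(m+1)^2.
    """
    def pair(k):
        # Returns the tuple (F(k), F(k+1)).
        if k == 0:
            return 0, 1
        a, b = pair(k // 2)
        c = a * (2 * b - a)   # F(2m) with m = k // 2
        d = a * a + b * b     # F(2m + 1)
        if k % 2 == 0:
            return c, d
        return d, c + d
    return pair(n)[0]


if __name__ == "__main__":
    # All three methods agree on small inputs; only their cost differs.
    for n in (0, 1, 2, 10, 20):
        assert fib_naive(n) == fib_iterative(n) == fib_fast_doubling(n)
    print(fib_fast_doubling(100))
```

The naive version is practical only for small `n`, whereas the iterative and fast-doubling versions handle large indices because Python integers have arbitrary precision.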


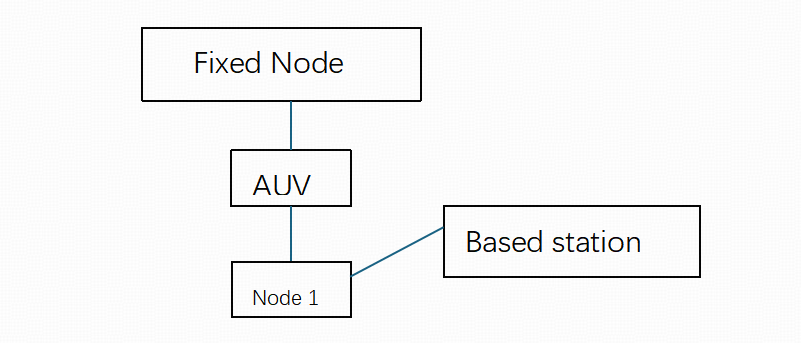

Underwater wireless sensor networks are essential for ocean exploration and military use. Acoustic communication is the predominant method due to the unique underwater conditions, though challenges like low bandwidth and multipath effects hinder its effectiveness. Terrestrial communication protocols are not directly applicable underwater, making network security and stable data transmission critical research areas. This paper reviews underwater sensor network communication technologies, architectures, protocol stacks, and security challenges, assessing the suitability of various communication methods and the components of these networks. It also analyzes the main topological structures and the security risks across the physical, data link, network, transport, and application layers. Additionally, the paper investigates positioning and navigation technologies in underwater communications and their future applications. Advancements in underwater optical communication and the development of intelligent underwater networks and the underwater Internet of Things (IoUT) are identified as key future directions.


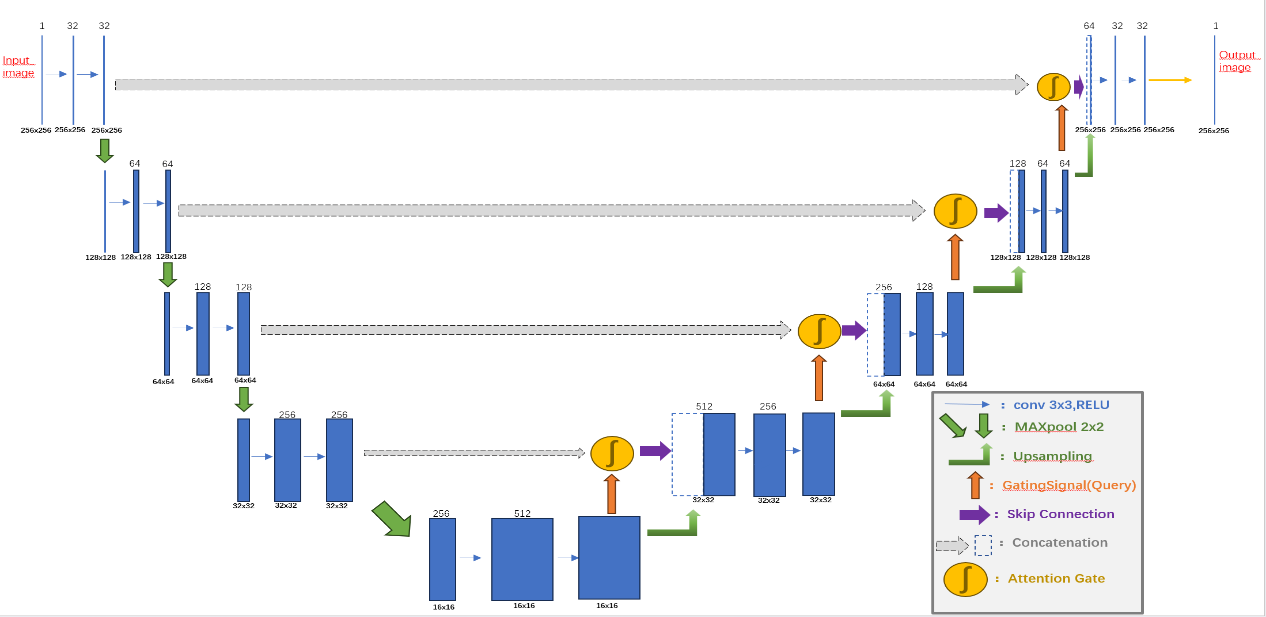

Breast cancer is the most common and one of the most lethal malignant tumors among women worldwide. Early and accurate diagnosis plays a crucial role in improving patient survival rates. As one of the primary imaging modalities, breast ultrasound imaging has been widely employed in clinical screening due to its low cost and lack of radiation exposure. However, limited by its imaging mechanism, ultrasound images often suffer from severe speckle noise interference, blurred boundaries, and complex tissue structures, which significantly hinder the performance of automatic lesion segmentation. To address this challenge, this paper proposes an improved Attention U-Net model. By introducing Attention Gate modules into the conventional U-Net architecture, the model is guided to focus on salient regions associated with lesions while suppressing background interference. Moreover, the network depth is increased to enhance feature representation capabilities. As a result, the proposed model achieves improved segmentation accuracy and boundary fitting performance in complex scenarios.
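
For orientation, the additive attention gate commonly used in Attention U-Net architectures can be written as below. The abstract does not give the formulation used in the improved model, so this is the widely cited form and should be read as an assumption. Here $x_i$ is a skip-connection feature at position $i$, $g_i$ is the gating signal from the coarser decoder level, $W_x$, $W_g$, $\psi$, $b_g$, and $b_\psi$ are learned parameters, and $\sigma$ is the sigmoid function.

$$
\begin{aligned}
q_i &= \psi^{\top}\,\mathrm{ReLU}\!\left(W_x^{\top} x_i + W_g^{\top} g_i + b_g\right) + b_\psi,\\
\alpha_i &= \sigma(q_i), \qquad \hat{x}_i = \alpha_i\, x_i .
\end{aligned}
$$

Because $\alpha_i \in (0, 1)$, features in regions the gate judges irrelevant are scaled toward zero before they are concatenated in the decoder, which is the mechanism behind suppressing background interference.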


The world is undergoing rapid technological innovation, and the integration of artificial intelligence into various fields is bringing about profound changes. In education, major countries have raced to develop and deploy artificial intelligence in preparation for the digital transformation of education. This paper analyzes the impact of artificial intelligence on education from three perspectives: existing achievements, the changes it has brought, and future prospects. China and the United States serve as examples. In the United States, the implementation of artificial intelligence tools in K-12 education has been vigorously promoted through financial support, policy promulgation, and improvement of educators' artificial intelligence capabilities. In China, the government has carried out large-scale teacher training programs on teaching methods related to artificial intelligence, constructed an integrated artificial intelligence education platform that provides rich digital learning resources, and developed artificial intelligence-based courses to cultivate students' digital literacy and innovative thinking. Nevertheless, countries around the world face many challenges, such as data privacy and security, ethical issues in the application of artificial intelligence, and the digital divide in access to education. The author firmly believes that artificial intelligence will be further integrated into education in the future. Countries should focus on cultivating and attracting technical talent, constructing agile and efficient governance frameworks, and adhering to a people-centered concept of education in which artificial intelligence plays a supplementary role.


Against the backdrop of intensified competition in the financial market and increased demand for risk management and control, the wave of digital transformation is profoundly reshaping the landscape of the financial industry. The accumulation of massive amounts of data has brought new opportunities and challenges for financial institutions to innovate business models and enhance risk management capabilities. This paper focuses on the application of data mining algorithms in bank credit risk assessment. Through literature review and case analysis, it examines the current state of application, its effects, and the challenges faced, and looks ahead to future development trends. The findings demonstrate that data mining algorithms can significantly improve the accuracy of credit risk assessment and the efficiency of decision-making, but issues such as data quality and algorithm interpretability remain. Future development is expected to center on algorithm integration, enhanced interpretability, and combination with emerging technologies. Banks should actively respond by improving their data management and technology application strategies.


The study introduces a novel evaluation system designed to measure the metacognitive abilities of embodied agents. The system incorporates multiple metrics, including task success rate, self-monitoring accuracy (measured by AUC), error detection speed, and confidence calibration error, to provide a comprehensive assessment of an agent’s internal monitoring and self-regulatory processes. Experiments were conducted in simulated environments (using Meta-World, etc.) and on a real robotic platform performing target grasping tasks. Two types of agents were compared: baseline agents relying solely on external feedback and agents enhanced with integrated metacognitive modules. The results demonstrate that agents with metacognitive capabilities consistently achieve higher performance, exhibit more precise self-monitoring, and respond more swiftly to unexpected events. This evaluation system serves as a robust tool for assessing metacognitive functions and offers promising implications for the development of more adaptable and reliable autonomous systems in dynamic environments, which could in turn improve overall system performance.
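
Two of the metrics named above, AUC and confidence calibration error, can be sketched in a few lines. The abstract does not define them precisely, so this example assumes that each episode yields a binary success label and a self-reported confidence in [0, 1], and it uses the common binned expected calibration error (ECE) as one possible reading of "confidence calibration error". The function names and the bin count are hypothetical choices for illustration.

```python
def roc_auc(labels, scores):
    """AUC as the probability that a successful episode receives a higher
    confidence than a failed one (ties count as one half)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one success and one failure")
    wins = 0.0
    for p in pos:
        for q in neg:
            if p > q:
                wins += 1.0
            elif p == q:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def expected_calibration_error(labels, confidences, n_bins=10):
    """Weighted average, over equal-width confidence bins, of the gap between
    mean confidence and empirical success rate."""
    bins = [[] for _ in range(n_bins)]
    for y, c in zip(labels, confidences):
        idx = min(int(c * n_bins), n_bins - 1)  # confidence 1.0 goes in the last bin
        bins[idx].append((y, c))
    total = len(labels)
    ece = 0.0
    for members in bins:
        if not members:
            continue
        success_rate = sum(y for y, _ in members) / len(members)
        mean_conf = sum(c for _, c in members) / len(members)
        ece += (len(members) / total) * abs(success_rate - mean_conf)
    return ece


if __name__ == "__main__":
    # Toy data: 1 = grasp succeeded, 0 = grasp failed (values are made up).
    outcomes = [1, 1, 0, 1, 0, 0]
    confidence = [0.9, 0.8, 0.4, 0.7, 0.6, 0.2]
    print(roc_auc(outcomes, confidence))
    print(expected_calibration_error(outcomes, confidence, n_bins=5))
```

A higher AUC indicates that the agent's confidence separates successes from failures more cleanly, while a lower ECE indicates that stated confidence tracks the actual success rate more closely.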


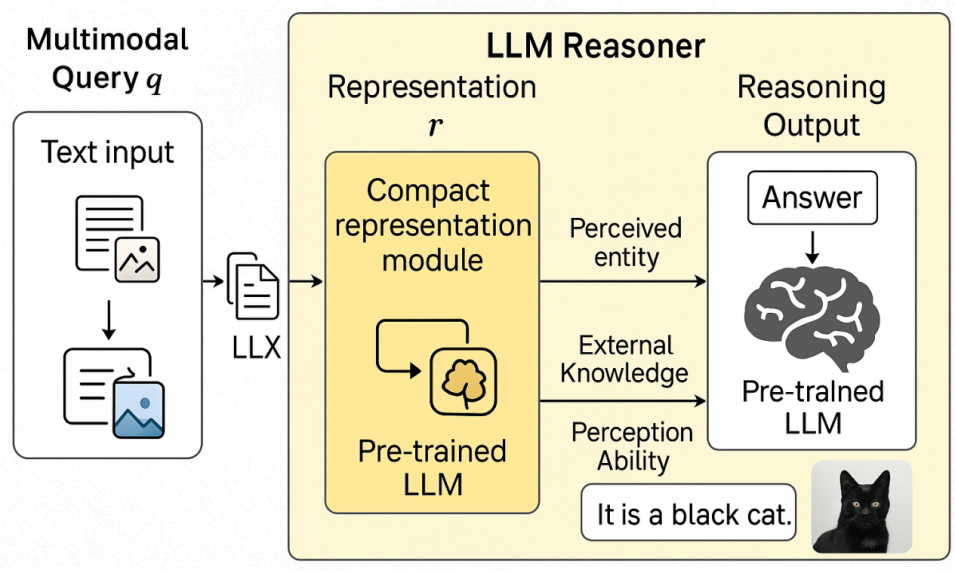

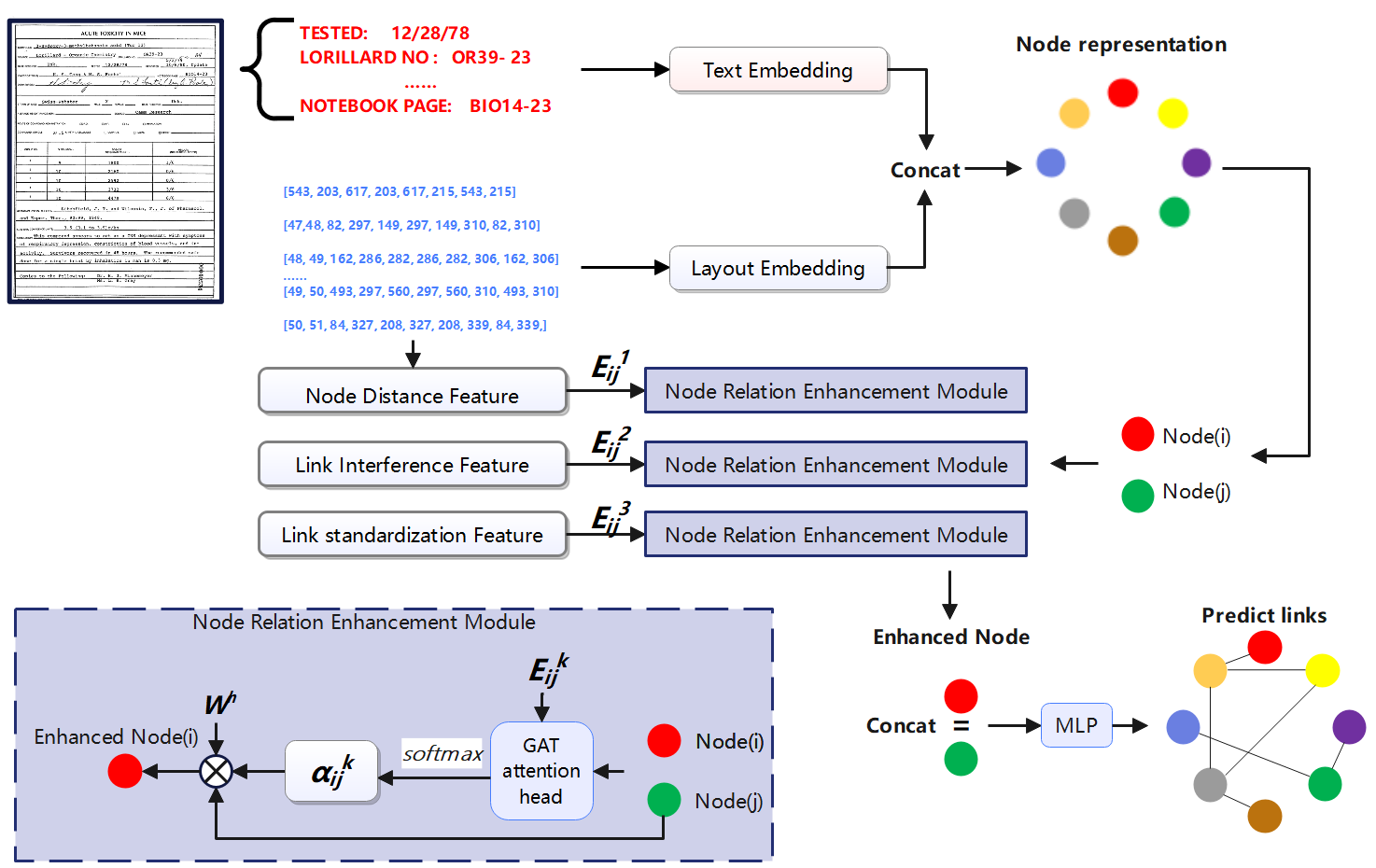

Entity linking in visually rich documents (VRDs) is critical for industrial automation but faces challenges from complex layouts and computational inefficiency in existing models. Traditional approaches relying on pre-trained transformers or graph networks struggle with noisy OCR outputs, a high number of parameters, and invalid edge predictions in industrial VRDs. To advance Vision Information Extraction (VIE) for industrial VRDs, we introduce a lightweight multi-rule graph construction network for entity linking that integrates text and layout embeddings as graph nodes. A multi-rule filtering method reduces invalid edges using node distance, link interference, and standardization rules inspired by document production logic and reading habits. A node relation enhancement module based on Graph Attention Networks (GAT) enhances nodes through multi-rule edges and attention scores, enabling robust reasoning over noisy and complex layouts. Evaluated on the FUNSD and SIBR datasets, our model achieves F1 scores of 58.96% and 65.08%, respectively, with only 18M parameters, outperforming non-pretrained baselines while remaining deployable in resource-constrained environments.


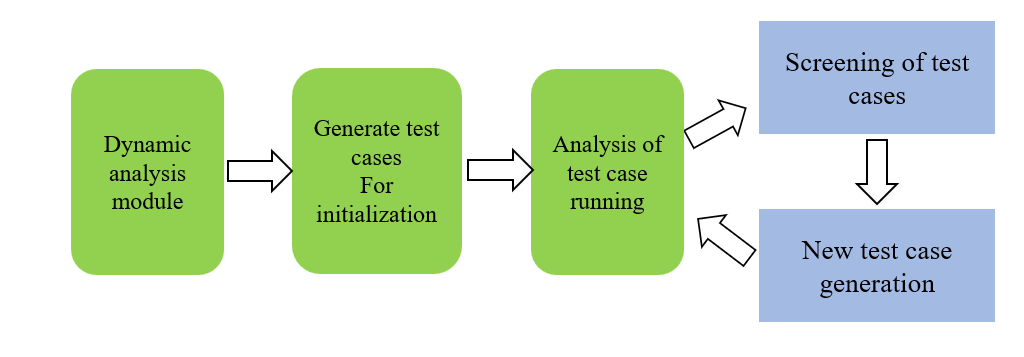

With the continuous development of blockchain technology, thousands of smart contracts have been deployed on the blockchain, and the number of smart contract vulnerabilities has increased significantly. Fuzz testing is commonly used for smart contract vulnerability detection, but existing AFL-based methods are inefficient at generating test cases that satisfy complex path constraints. This study addresses the limitations of traditional fuzz testing techniques in detecting vulnerabilities related to strictly constrained conditional branches in Ethereum smart contracts. To overcome this challenge, we propose a hybrid framework that combines static semantic analysis with adaptive dynamic fuzz testing and incorporates a lightweight heuristic seed selection mechanism to prioritize path-sensitive mutations. Our method adopts semantic-aware operators to guide targeted exploration of protected execution paths while dynamically optimizing energy allocation among test cases. Experimental evaluation on benchmark contracts shows that, compared with baseline methods, the proposed framework achieves significantly improved branch coverage and faster vulnerability detection, especially for critical security vulnerabilities such as reentrancy and arithmetic exceptions, without sacrificing detection accuracy. The results verify the effectiveness of our method in balancing exploration efficiency and analysis rigor for blockchain-oriented security verification.
