1. Introduction

A convolutional neural network (CNN) is a neural network model inspired by the human nervous system. It is mainly used to process image data and has become one of the most representative architectures in deep learning. Because of its strength in image tasks, CNN-based deep learning allows researchers to tackle problems that arise in the design of brain-computer interfaces (BCIs), in which the human brain helps control computation, particularly in the BCI's signal acquisition and signal processing modules.

In the signal acquisition module, the first problem is the format required of the input data. For example, AlexNet [1] requires input images of a fixed pixel size, which constrains how CNNs can be applied to particular kinds of images. In the signal processing module, research on CNN architectures focuses on feature extraction and classification. Current work mainly applies new models or algorithms either to modify the CNN architecture or to solve specific problems (discussed later in this review) while maintaining high accuracy and performance. Some researchers improve accuracy by changing the feature extraction method; for example, Yao Lu et al. [2] use the short-time Fourier transform (STFT) for feature extraction. Others integrate additional models into the data processing pipeline; for example, Y. M. Saidutta et al. [3] added a hidden Markov model (HMM) to a CNN. Still other studies focus on the classifier, for instance extracting features with Common Spatial Patterns (CSP) [4] and passing them to a classifier such as a support vector machine (SVM), which can improve accuracy.

The purpose of this review is to introduce recent applications of CNNs in BCI modules and the problems they raise, to analyze the solutions researchers have proposed to increase the accuracy and performance of BCI systems, and to suggest possible future directions and improvements for CNN architectures.

2. Overviews of BCI and CNN

2.1. Overview of Brain-Computer Interface (BCI)

A brain-computer interface (BCI) is an emerging technology that allows the human brain to help control computational processes, with a major aim of helping people with physical disabilities. Through its signal acquisition, signal processing, and application modules, a BCI uses the brain's electrical signals to let people with physical impairments operate mechanical devices through neural activity. According to Z. Li et al. [5], BCI implementations range from non-invasive techniques, such as electroencephalography (EEG) and magnetoencephalography (MEG), to invasive techniques. Invasive BCIs require electrodes to be surgically implanted beneath the skull to pick up electrical signals from the nervous system. Because the body's immune response to the implant can significantly degrade signal reception and processing, the author considers this kind of implementation unsuitable for the image-processing capabilities that CNNs bring to BCI, as explained in the overview of CNN. Non-invasive BCIs [6] instead use neuroimaging of brain activity, recorded from outside the body, as the interface. In general, a CNN architecture can carry out the electrical signal processing functions needed to build BCIs with higher accuracy and performance.

2.2. Overview of BCI principles

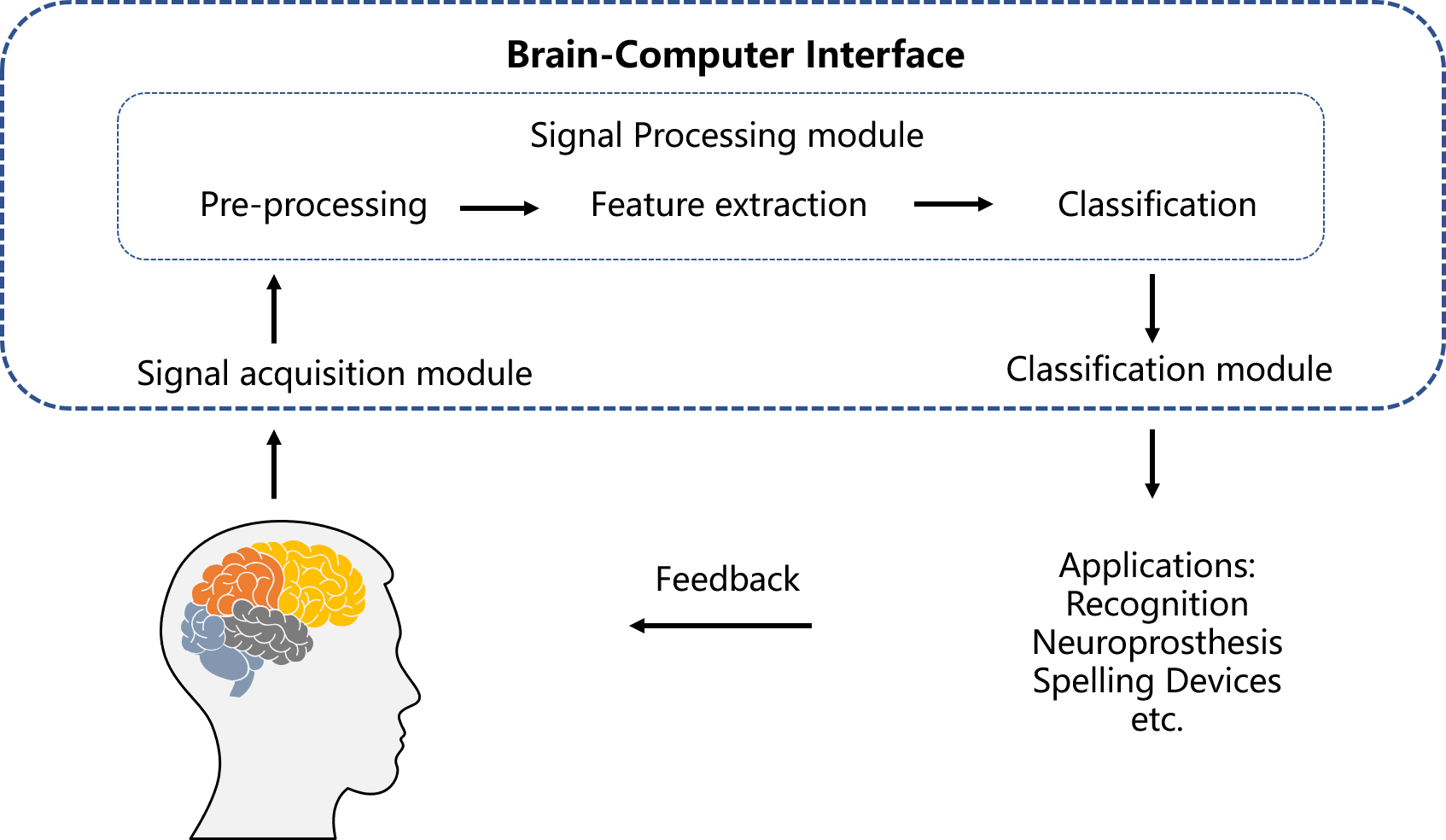

According to S. Aggarwal and N. Chugh [6], a BCI system is usually composed of three modules: the signal acquisition module, the signal processing module, and the application module (Figure 1). As the module that first comes into contact with the electrical signals, the signal acquisition module records brain activity from the scalp or from the brain itself and passes it on to the rest of the BCI.

Figure 1. Basic components of a BCI system.

The signal processing module is the most important of the three, and much research focuses on it in order to improve the accuracy and performance of the system.

The signal processing module processes the data received from the acquisition module in three stages: preprocessing, feature extraction, and classification. Preprocessing can, for example, remove artifacts from EEG signals [7] so that cleaner, higher-quality signals are passed on for further processing. Feature extraction extracts time-domain and frequency-domain features of the information or commands and encodes them for the subsequent stages; a common method is the short-time Fourier transform (STFT), which several of the studies reviewed here use. Finally, classification uses an algorithm to map the feature vector produced by the feature extractor to a category of brain activity pattern.
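The three stages can be illustrated with a deliberately simple NumPy sketch. Everything here is an assumption chosen for brevity rather than a method from the cited studies: the FFT-masking band-pass filter, the 8-30 Hz band, the log band-power features, and the nearest-centroid classifier (`NearestCentroid` is a hypothetical helper, not a library class). The synthetic "EEG" simply places a 10 Hz rhythm on a different channel for each class.

```python
import numpy as np

# Hypothetical three-stage pipeline: preprocessing -> feature extraction -> classification.

def bandpass_fft(x, fs, lo, hi):
    """Crude band-pass filter: zero all FFT bins outside [lo, hi] Hz (illustration only)."""
    spectrum = np.fft.rfft(x, axis=-1)
    freqs = np.fft.rfftfreq(x.shape[-1], d=1.0 / fs)
    spectrum[..., (freqs < lo) | (freqs > hi)] = 0.0
    return np.fft.irfft(spectrum, n=x.shape[-1], axis=-1)

def preprocess(trial, fs):
    """Remove each channel's DC offset, then keep an assumed 8-30 Hz band."""
    trial = trial - trial.mean(axis=-1, keepdims=True)
    return bandpass_fft(trial, fs, 8.0, 30.0)

def extract_features(trial):
    """Log band power of each channel (one feature per channel)."""
    return np.log(np.mean(trial ** 2, axis=-1))

class NearestCentroid:
    """Assign each feature vector to the class whose mean training vector is closest."""
    def fit(self, X, y):
        self.classes_ = np.unique(y)
        self.centroids_ = np.stack([X[y == c].mean(axis=0) for c in self.classes_])
        return self

    def predict(self, X):
        dists = np.linalg.norm(X[:, None, :] - self.centroids_[None, :, :], axis=-1)
        return self.classes_[np.argmin(dists, axis=1)]

# Synthetic two-channel "EEG": class k carries a 10 Hz rhythm on channel k.
rng = np.random.default_rng(0)
fs, n_samples = 250, 500
t = np.arange(n_samples) / fs

def make_trial(label):
    x = rng.normal(size=(2, n_samples))
    x[label] += 3.0 * np.sin(2 * np.pi * 10 * t)
    return x

labels = np.array([0, 1] * 20)
X = np.stack([extract_features(preprocess(make_trial(k), fs)) for k in labels])
clf = NearestCentroid().fit(X[:30], labels[:30])
accuracy = np.mean(clf.predict(X[30:]) == labels[30:])
```

In practice each stage would be replaced by something stronger (a proper IIR/FIR filter, CSP or learned CNN features, an SVM or a CNN classifier), but the division of labour stays the same.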

In the application module, the processed output drives the target device, typically a computer, which also provides feedback to the user. Improving the preceding signal processing stages, especially feature extraction and classification, therefore directly improves the accuracy and performance of the whole system.

2.3. Introduction of Convolutional Neural Network (CNN)

As described by K. O’Shea and R. Nash [8], convolutional neural networks (CNNs) are a category of artificial neural network (ANN), a computational processing system whose design is inspired by the nervous systems of living creatures, especially humans. Like neurons in the human brain, an ANN consists of a large number of interconnected computing nodes [3], which are connected in a distributed pattern and can learn from input data to produce the relevant outputs.

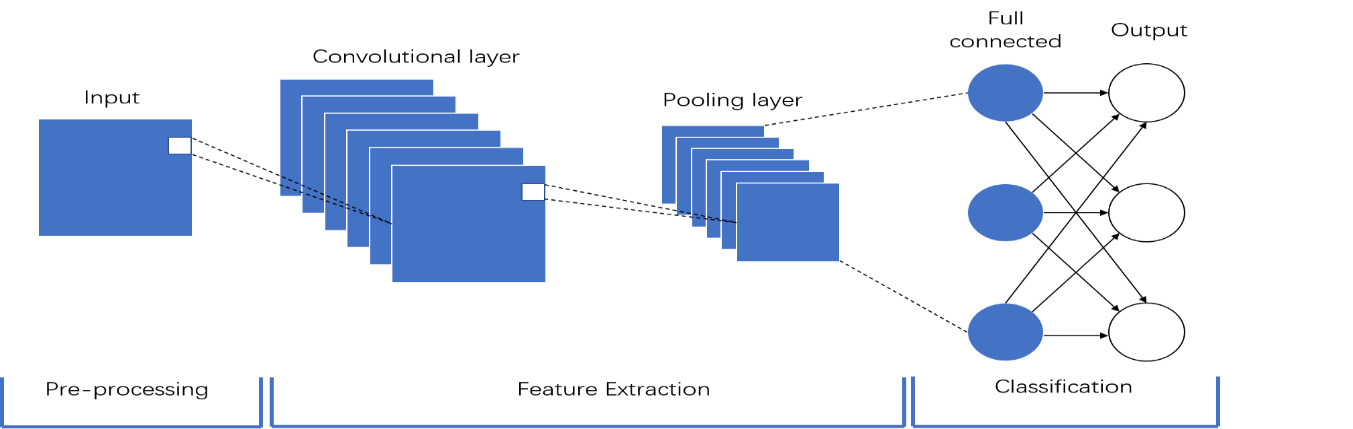

Generally speaking, a CNN consists of convolutional layers, pooling layers, and fully connected layers; stacking these layers forms a CNN architecture. The input layer holds the pixel values of the image. The convolutional layer determines the output of each neuron by computing the scalar product between the neuron's weights and the local region of the input it is connected to. The pooling layer then downsamples its input along the spatial dimensions, further reducing the number of parameters and computations. As Fig. 2 illustrates, pooling is a non-linear form of downsampling, of which max pooling is the most common: it divides the input into rectangular sub-regions and outputs the maximum value of each. Finally, the fully connected layers, as in a standard ANN, compute class scores from the activations for classification.
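The three layer types can be made concrete with a small NumPy forward pass. This is a teaching sketch under our own assumptions (a single 6×6 input channel, one 3×3 kernel, ReLU activation, 2×2 max pooling, three output classes, random weights); the function names are ours and do not belong to any library.

```python
import numpy as np

def conv2d(image, kernel, bias=0.0):
    """Valid 2-D convolution with stride 1 (computed as cross-correlation, as most CNN libraries do)."""
    kh, kw = kernel.shape
    out_h, out_w = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.empty((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # Scalar product between the kernel weights and the input region they cover.
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel) + bias
    return out

def relu(x):
    return np.maximum(x, 0.0)

def max_pool2d(x, size=2):
    """Non-overlapping max pooling: output the maximum of each size x size block."""
    h, w = x.shape[0] // size, x.shape[1] // size
    x = x[:h * size, :w * size]
    return x.reshape(h, size, w, size).max(axis=(1, 3))

def fully_connected(x, weights, bias):
    """Flatten the input and apply an affine map to get one score per class."""
    return weights @ x.ravel() + bias

def softmax(z):
    e = np.exp(z - z.max())  # subtract the max for numerical stability
    return e / e.sum()

rng = np.random.default_rng(0)
image = rng.random((6, 6))                                   # toy 6x6 single-channel "image"
feature_map = relu(conv2d(image, rng.normal(size=(3, 3))))   # -> (4, 4)
pooled = max_pool2d(feature_map)                             # -> (2, 2)
scores = softmax(fully_connected(pooled, rng.normal(size=(3, 4)), np.zeros(3)))  # 3 classes
```

For example, `max_pool2d(np.arange(16).reshape(4, 4))` keeps the largest value of each 2×2 block and returns `[[5, 7], [13, 15]]`.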

Figure 2. The architecture of a convolutional neural network. A CNN has three basic types of layers: convolutional layers, pooling layers, and fully connected layers.

CNNs differ from general ANNs in ways that make them better suited to the image-processing needs of BCI. In an image processing task, a CNN receives the raw image as input, learns from it, and finally outputs class scores. Inside a CNN, neurons are arranged in three dimensions (height, width, and depth), and the network progressively condenses the full input into a small output volume of class scores arranged along the depth dimension: for n classes, a 1×1×n volume. These hierarchical representations help meet BCI signal processing requirements such as feature extraction and classification.
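How a three-dimensional input volume shrinks to a 1×1×n block of class scores can be traced with the standard output-size relation for convolution and pooling layers, (W − F + 2P)/S + 1, where W is the input width, F the filter size, P the padding, and S the stride. The layer stack below (32×32×3 input, 5×5 convolutions, 2×2 pooling, four classes) is a hypothetical example, not an architecture from the cited works.

```python
def conv_output_size(w, f, p=0, s=1):
    """Spatial output size of a conv or pooling layer: (W - F + 2P) / S + 1."""
    size, remainder = divmod(w - f + 2 * p, s)
    if remainder:
        raise ValueError("filter does not tile the input evenly")
    return size + 1

def trace_shapes(input_shape, layers):
    """Return the (height, width, depth) volume after each layer."""
    h, w, d = input_shape
    shapes = [(h, w, d)]
    for kind, f, s, p, n_filters in layers:
        h = conv_output_size(h, f, p, s)
        w = conv_output_size(w, f, p, s)
        if kind == "conv":
            d = n_filters        # a conv layer's depth equals its number of filters
        # pooling leaves the depth unchanged
        shapes.append((h, w, d))
    return shapes

n_classes = 4
layers = [                       # (kind, filter size, stride, padding, filters)
    ("conv", 5, 1, 0, 16),
    ("pool", 2, 2, 0, None),
    ("conv", 5, 1, 0, 32),
    ("pool", 2, 2, 0, None),
    ("conv", 5, 1, 0, n_classes),
]
shapes = trace_shapes((32, 32, 3), layers)
```

The volumes go 32×32×3 → 28×28×16 → 14×14×16 → 10×10×32 → 5×5×32 → 1×1×4, i.e. one score per class along the depth axis.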

3. CNN Application

3.1. Classic model: AlexNet

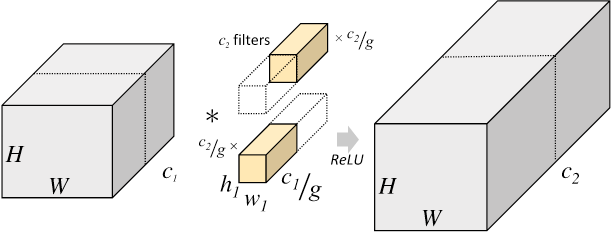

AlexNet [1] uses group convolution: its convolutional layers were split into groups trained on two GPUs, which allowed a wider network to be built with limited GPU resources and made CNN computation faster and more accurate. AlexNet consists of five convolutional layers and three fully connected layers. Each convolutional layer contains many kernels, also known as filters, which perform feature extraction; because of the grouping, the connections between input and output channels form a block-diagonal structure (Figure 3). However, AlexNet requires fixed-size RGB input: images are rescaled to 256×256 pixels and the network is trained on 224×224 crops, so all input data, including preprocessed data, must first be brought into this format before it can be processed. In 2017, S. Xie et al. [9] noted that merely increasing the depth of a network can lead to larger errors. They instead replaced ResNet's blocks with aggregations of smaller parallel transformations of identical structure, implemented as group convolutions, which keeps model complexity low while maintaining accuracy. This work builds directly on AlexNet's group convolution.

Models of this kind improve data processing mainly by changing the network structure itself, for example by increasing the number of layers. The methods described below instead improve system accuracy and performance by fusing in new models, algorithms, and techniques.

Figure 3. Group convolution module.

In group convolution, the convolutional layer is divided into G groups that are computed separately, and their outputs are concatenated to form the entire feature map [10].
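A minimal NumPy sketch of group convolution follows. The channel counts, kernel size, and the helper names `conv_multi`, `grouped_conv`, and `param_count` are our own illustrative choices. The last part checks the block-diagonal view: a grouped convolution gives the same result as an ordinary convolution whose weights are zero outside the diagonal channel blocks, while needing G times fewer weights.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def conv_multi(x, w):
    """Standard valid convolution. x: (C_in, H, W); w: (C_out, C_in, kh, kw) -> (C_out, H', W')."""
    kh, kw = w.shape[2:]
    windows = sliding_window_view(x, (kh, kw), axis=(1, 2))   # (C_in, H', W', kh, kw)
    return np.einsum("chwij,ocij->ohw", windows, w)

def grouped_conv(x, w, groups):
    """Group convolution: split input channels and filters into `groups` parts,
    convolve each part independently, then concatenate the outputs along channels.
    w has shape (C_out, C_in // groups, kh, kw)."""
    c_in, c_out = x.shape[0], w.shape[0]
    if c_in % groups or c_out % groups or w.shape[1] != c_in // groups:
        raise ValueError("channel counts must be divisible by the number of groups")
    x_parts = np.split(x, groups, axis=0)
    w_parts = np.split(w, groups, axis=0)
    return np.concatenate([conv_multi(xp, wp) for xp, wp in zip(x_parts, w_parts)], axis=0)

def param_count(c_in, c_out, k, groups=1):
    """Number of kernel weights (bias ignored); grouping divides it by `groups`."""
    return c_out * (c_in // groups) * k * k

rng = np.random.default_rng(0)
G, C_in, C_out, k = 2, 4, 6, 3
x = rng.normal(size=(C_in, 8, 8))
w = rng.normal(size=(C_out, C_in // G, k, k))
y = grouped_conv(x, w, G)                      # (6, 6, 6)

# Equivalent ordinary convolution with block-diagonal weights across the groups.
w_full = np.zeros((C_out, C_in, k, k))
og, cg = C_out // G, C_in // G
for g in range(G):
    w_full[g * og:(g + 1) * og, g * cg:(g + 1) * cg] = w[g * og:(g + 1) * og]
```

Here `param_count(4, 6, 3, groups=2)` is 108 weights against 216 for the ungrouped layer, which is the saving that lets grouped networks grow wider for the same cost.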

3.2. Problem with the commands

Commands are decoded from EEG and other brain signals and serve as the control signals in brain-computer interaction. Y. M. Saidutta et al. [3] added a hidden Markov model (HMM) to a mixed-dimensional CNN to increase the number of motor imagery (MI) commands a BCI can receive. In conventional MI-based BCIs, the number of commands is limited by the number of mental tasks a classifier can distinguish with high accuracy, so raising it requires ever more accurate classifiers. An alternative is to combine a limited set of brain activity patterns into sequences and associate each sequence with a command, but decoding such sequences introduces errors. The researchers therefore feed the CNN's output probabilities into the HMM to decode the EEG, while the CNN automatically learns the features used for classification, so that decoding errors are reduced even though the number of distinct mental tasks cannot be increased.
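The general idea of letting an HMM decode a sequence of CNN outputs can be sketched with the Viterbi algorithm. This is not the model of [3]: we simply assume per-window CNN class probabilities are available, use them as emission scores, and pick a "sticky" transition matrix (stay probability 0.9) by hand. The posterior values are made up for illustration.

```python
import numpy as np

def viterbi(log_emit, log_trans, log_start):
    """Most likely hidden-state sequence of an HMM.
    log_emit:  (T, S) log emission scores for each time step and state
    log_trans: (S, S) log transition probabilities, entry [i, j] = log P(j | i)
    log_start: (S,)   log initial-state probabilities
    """
    T, S = log_emit.shape
    score = log_start + log_emit[0]
    back = np.zeros((T, S), dtype=int)
    for t in range(1, T):
        cand = score[:, None] + log_trans          # cand[i, j]: best path ending in i, then i -> j
        back[t] = np.argmax(cand, axis=0)          # best predecessor for each state j
        score = cand[back[t], np.arange(S)] + log_emit[t]
    path = [int(np.argmax(score))]
    for t in range(T - 1, 0, -1):                  # follow back-pointers from the end
        path.append(int(back[t, path[-1]]))
    return path[::-1]

# Hypothetical per-window CNN outputs for two MI classes (0 = left hand, 1 = right hand).
posteriors = np.array([[0.9, 0.1], [0.8, 0.2], [0.4, 0.6],
                       [0.85, 0.15], [0.2, 0.8], [0.1, 0.9]])
stay = 0.9                                          # assumed probability of remaining in a state
trans = np.array([[stay, 1 - stay], [1 - stay, stay]])
path = viterbi(np.log(posteriors), np.log(trans), np.log([0.5, 0.5]))
frame_wise = np.argmax(posteriors, axis=1).tolist()
```

Taking the argmax window by window gives `[0, 0, 1, 0, 1, 1]`; the HMM's transition penalty smooths the isolated third window and yields `[0, 0, 0, 0, 1, 1]`.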

3.3. Changing the method of feature extraction

In the signal processing module, designers can also choose other feature extraction methods, such as the STFT. According to Yao Lu et al. [2], several methods can process EEG signals efficiently by extracting the most salient features of motor imagery (MI) and then classifying them into different classes (in their case, left-hand versus right-hand imagery): the Fourier transform, the wavelet transform (WT), Common Spatial Patterns (CSP), and others. MI, the mental rehearsal of a movement, is a dynamic process closely related to the sensorimotor cortex, and it is one way in which BCI users encode commands in their electrical brain signals. The researchers divided the EEG signal into five frequency bands, used picture cues to trigger MI events, applied the STFT to the EEG for each event to obtain its spectrum, and fed the result into a CNN for training. The STFT converts the time-domain EEG signal into a time-frequency representation, which the CNN then processes and classifies; the reported classification accuracy across different time periods reached 89%, with a maximum of 92.8%. This indicates that a signal processing module based on the STFT can effectively classify MI data.
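The STFT step can be sketched in NumPy to show how a one-dimensional EEG channel becomes a small time-frequency "image" that a CNN can take as input. The window length (128 samples), hop (32 samples), Hann window, 250 Hz sampling rate, and single 8-30 Hz band are assumptions for illustration; [2] uses its own five bands and parameters.

```python
import numpy as np

def stft_magnitude(x, fs, win_len=128, hop=32):
    """Magnitude STFT of a 1-D signal with a Hann window.
    Returns (freqs, times, mag) where mag has shape (n_freqs, n_frames)."""
    window = np.hanning(win_len)
    n_frames = 1 + (len(x) - win_len) // hop
    frames = np.stack([x[i * hop:i * hop + win_len] * window for i in range(n_frames)])
    mag = np.abs(np.fft.rfft(frames, axis=1)).T           # rows = frequency, columns = time
    freqs = np.fft.rfftfreq(win_len, d=1.0 / fs)
    times = (np.arange(n_frames) * hop + win_len / 2) / fs  # centre of each frame (s)
    return freqs, times, mag

def band_image(x, fs, lo, hi, **kwargs):
    """Keep only the STFT rows inside [lo, hi] Hz, giving a small time-frequency image."""
    freqs, times, mag = stft_magnitude(x, fs, **kwargs)
    keep = (freqs >= lo) & (freqs <= hi)
    return freqs[keep], times, mag[keep]

fs = 250                                                  # assumed sampling rate (Hz)
t = np.arange(4 * fs) / fs                                # 4 s synthetic single-channel signal
x = np.where(t < 2.0, np.sin(2 * np.pi * 10 * t), np.sin(2 * np.pi * 20 * t))
band_freqs, frame_times, img = band_image(x, fs, 8.0, 30.0)
dominant = band_freqs[np.argmax(img, axis=0)]             # strongest frequency per frame
```

`img` has 11 frequency rows and 28 time columns; the dominant frequency moves from about 10 Hz in the early frames to about 20 Hz in the late frames, which is the kind of time-frequency pattern the CNN learns to classify.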

3.4. Feature extraction by adding dimension

According to Li et al. [5], a one-dimensional CNN processes one-dimensional data, such as a time series, with one-dimensional convolution kernels, which makes it suitable for extracting features from fixed-length segments. Two-dimensional CNNs [10] are used for image processing, where significant progress has been made in recent years in image classification, image segmentation, and face recognition. Multidimensional CNNs can be used for human action recognition and related fields, which fits well with research on mapping brain activity through non-invasive brain-computer interaction (for example, by collecting EEG signals).
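A one-dimensional convolution over a fixed-length multichannel segment can be sketched as follows. Each kernel spans all channels but slides along time only. The segment length, channel count, number of filters, kernel length, and stride are hypothetical values chosen for the example.

```python
import numpy as np

def conv1d(x, kernels, stride=1):
    """Valid 1-D convolution over time.
    x: (C, T) multichannel signal; kernels: (F, C, K) -> output (F, T_out).
    Each kernel spans all C channels but slides along the time axis only."""
    n_filters, n_channels, k = kernels.shape
    if x.shape[0] != n_channels:
        raise ValueError("channel mismatch")
    t_out = (x.shape[1] - k) // stride + 1
    out = np.zeros((n_filters, t_out))
    for t in range(t_out):
        segment = x[:, t * stride:t * stride + k]           # (C, K) window
        out[:, t] = np.tensordot(kernels, segment, axes=([1, 2], [0, 1]))
    return out

# A fixed-length two-channel segment and four hypothetical temporal filters of length 5.
rng = np.random.default_rng(0)
segment = rng.normal(size=(2, 250))
features = conv1d(segment, rng.normal(size=(4, 2, 5)), stride=5)   # -> (4, 50)
```

With a single channel and a kernel of three equal weights of 1/3, `conv1d` reduces to a moving average: applied to `[0, 1, 2, 3, 4, 5]` it returns `[[1, 2, 3, 4]]`.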

3.4.1. Bi-dimensional CNN research. Hernández-González et al. [11] argue that conventional BCI design requires parameters such as signal conditioning, feature extraction, and classification to be re-tuned for each preprocessing setup, which does not take full advantage of CNNs. They therefore combined a CNN with a long short-term memory (LSTM) network and a fully connected (FC) network. In this design, frequencies that are useful for the task are arranged into the rows or columns of a two-dimensional representation that reflects both the time course of the EEG signal and its spatial distribution across the scalp electrodes from which it was collected. To build these images from the frequency and time information of the EEG, they used the STFT and the continuous wavelet transform (CWT) with a complex Morlet wavelet. Testing on several instruction sets gave different levels of accuracy, so the overall accuracy of the system must be considered when building such models. The results show that the combination of CNN, LSTM, and FC layers can effectively classify the target EEG signals, demonstrating that the hierarchical structure of CNNs can exploit bi-dimensional representations.
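One way to build such a bi-dimensional representation is sketched below: a complex-Morlet wavelet transform per electrode, with the per-electrode time-frequency maps stacked vertically so that rows index (electrode, frequency) and columns index time. The layout, the frequencies, the six-cycle wavelet, and the L1 normalisation are our assumptions and are not claimed to match [11].

```python
import numpy as np

def morlet_cwt(x, fs, freqs, n_cycles=6.0):
    """Magnitude of a complex-Morlet wavelet transform of a 1-D signal.
    Returns an array of shape (len(freqs), len(x)): rows = frequency, columns = time."""
    out = np.empty((len(freqs), len(x)))
    for row, f in enumerate(freqs):
        sigma_t = n_cycles / (2 * np.pi * f)               # std of the Gaussian envelope (s)
        t = np.arange(-3.5 * sigma_t, 3.5 * sigma_t, 1.0 / fs)
        if len(t) > len(x):
            raise ValueError("signal too short for the lowest frequency")
        wavelet = np.exp(2j * np.pi * f * t) * np.exp(-t ** 2 / (2 * sigma_t ** 2))
        wavelet /= np.abs(wavelet).sum()                   # L1 normalisation (an assumption)
        out[row] = np.abs(np.convolve(x, wavelet, mode="same"))
    return out

def eeg_to_image(eeg, fs, freqs):
    """Stack each electrode's time-frequency map: rows = (electrode, frequency), columns = time."""
    return np.vstack([morlet_cwt(channel, fs, freqs) for channel in eeg])

fs = 250
t = np.arange(500) / fs
freqs = np.array([8.0, 10.0, 12.0, 20.0])
rng = np.random.default_rng(0)
eeg = 0.1 * rng.normal(size=(3, t.size))
eeg[1] += np.sin(2 * np.pi * 10 * t)           # electrode 1 carries a 10 Hz rhythm
image = eeg_to_image(eeg, fs, freqs)           # (3 * 4, 500)
```

In the resulting 12×500 image the 10 Hz row of electrode 1 (row 5) stands out, so the rhythm is localised both in frequency and across electrodes, which a 2-D CNN can then pick up.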

3.4.2. Multidimensional CNN research. In 2018, Huang et al. [12] designed a 3D-CNN that learns from video by computing temporal human pose features for action recognition, an approach that may help researchers classify data when building BCIs. Maturana and Scherer [13] proposed VoxNet for object recognition from 3D data such as LiDAR point clouds and RGBD images. VoxNet is a 3D CNN with two convolutional layers, one pooling layer, and two fully connected (FC) layers, and it may likewise suggest directions for BCI research.
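The step from two to three dimensions can be sketched with a NumPy 3-D convolution and 3-D max pooling over an occupancy grid. The 32³ grid with 5×5×5 stride-2 filters loosely follows the kind of input VoxNet uses, but the grid contents, the filter count, and the helper names are assumptions for illustration only.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def conv3d(volume, kernels, stride=1):
    """Valid 3-D convolution. volume: (D, H, W); kernels: (F, k, k, k) -> (F, D', H', W')."""
    k = kernels.shape[1]
    win = sliding_window_view(volume, (k, k, k))[::stride, ::stride, ::stride]
    return np.einsum("dhwijk,fijk->fdhw", win, kernels)

def max_pool3d(x, size=2):
    """Non-overlapping 3-D max pooling applied to each feature map."""
    F, D, H, W = x.shape
    d, h, w = D // size, H // size, W // size
    x = x[:, :d * size, :h * size, :w * size]
    return x.reshape(F, d, size, h, size, w, size).max(axis=(2, 4, 6))

rng = np.random.default_rng(0)
volume = np.zeros((32, 32, 32))
volume[8:24, 8:24, 8:24] = 1.0                  # a solid cube in a hypothetical occupancy grid
kernels = rng.normal(size=(4, 5, 5, 5))         # four hypothetical 5x5x5 filters
fmap = conv3d(volume, kernels, stride=2)        # -> (4, 14, 14, 14)
pooled = max_pool3d(np.maximum(fmap, 0.0))      # ReLU, then -> (4, 7, 7, 7)
```

The same sliding-window idea as in 2-D now also runs along the depth axis, so each feature responds to local 3-D structure.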

3.5. Changing the method of classification to improve accuracy

EEG classification methods can suffer significant drops in accuracy because of problems such as the difficulty of interpreting what a neural network has learned and the loss of information. To address interpretability, Fatemeh Fahimi et al. [14] introduced a deep CNN framework that learns relevant information in a subject-specific manner to identify the most informative features for each user. To address information loss, they learned directly from raw EEG, building a network whose convolutional, max-pooling, and dropout layers carry out feature extraction and classification, which improved the accuracy of the model.
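Of the layers just mentioned, dropout is the least self-explanatory, so here is a minimal sketch of the common "inverted" formulation. The drop probability and array size are arbitrary example values; [14] does not specify this implementation.

```python
import numpy as np

def dropout(x, p, rng, training=True):
    """Inverted dropout: during training, zero each unit with probability p and
    scale the survivors by 1 / (1 - p) so the expected activation is unchanged.
    At inference time the input passes through untouched."""
    if not training or p == 0.0:
        return x
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    keep = rng.random(x.shape) >= p
    return x * keep / (1.0 - p)

rng = np.random.default_rng(0)
activations = np.ones(100_000)
dropped = dropout(activations, p=0.5, rng=rng)
```

With p = 0.5 each unit becomes either 0 or 2, and the mean stays close to 1, so no rescaling is needed when dropout is switched off at inference.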

S. Aggarwal and N. Chugh [6] note that changing the feature extraction method to gain performance and accuracy risks extracting many irrelevant input features. Because classification accuracy depends heavily on the amount of signal information available, limited data lead to low accuracy, which limits the effectiveness of CNNs. E. Lashgari et al. [15] studied data augmentation (DA) techniques, which enlarge the training set with modified copies of existing trials; this reduces the need for additional data acquisition, improves the accuracy and stability of classifiers, and so avoids classification inefficiency. Their results on different datasets show, however, that the parameters must be tuned for each dataset to keep the BCI classification system accurate and efficient.
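A simple form of data augmentation for EEG trials can be sketched as below. The three perturbations (a small circular time shift, a global amplitude scaling, and additive Gaussian noise) and their magnitudes are our own illustrative choices, not the specific techniques or settings evaluated in [15]. Labels are copied unchanged, since each perturbed trial is assumed to keep its class.

```python
import numpy as np

def augment_trial(trial, rng, noise_std=0.1, max_shift=10, scale_range=(0.9, 1.1)):
    """Return a perturbed copy of one EEG trial shaped (channels, samples)."""
    shift = rng.integers(-max_shift, max_shift + 1)
    out = np.roll(trial, shift, axis=-1)                           # small circular time shift
    out = out * rng.uniform(*scale_range)                          # global amplitude scaling
    return out + rng.normal(scale=noise_std, size=trial.shape)     # additive Gaussian noise

def augment_dataset(X, y, n_copies, rng):
    """Keep the originals and append `n_copies` perturbed versions of every trial."""
    X_aug = [X] + [np.stack([augment_trial(x, rng) for x in X]) for _ in range(n_copies)]
    y_aug = [y] * (n_copies + 1)
    return np.concatenate(X_aug), np.concatenate(y_aug)

rng = np.random.default_rng(0)
X = rng.normal(size=(5, 2, 100))                 # 5 hypothetical trials, 2 channels, 100 samples
y = np.array([0, 1, 0, 1, 1])
X_aug, y_aug = augment_dataset(X, y, n_copies=3, rng=rng)
```

The noise level, shift range, and scaling range are exactly the kind of parameters that, as noted above, have to be tuned per dataset.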

In classification, a target variable is predicted from a given input, so that feature-extracted data can be mapped to different motor tasks. Common Spatial Patterns (CSP) [16] have been widely applied to classifying EEG motor imagery. Typically, a band-pass filter for a specific frequency range is applied first, CSP then extracts features from the filtered EEG, and the features are passed to a classifier such as an SVM to achieve better classification accuracy and performance. H. Yang et al. [17] classified EEG signals by combining augmented CSP features with a CNN. On the BCI Competition IV dataset, they reported average cross-validation accuracies of 68.45% and 69.27%, which were 4.53 and 5.34 percentage points higher than those obtained by selecting feature maps at random, showing that combining CNN and CSP improves the discrimination of EEG features.
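The CSP step can be sketched in NumPy as a textbook two-class CSP: whiten the sum of the class covariances, then take the eigenvectors of one whitened class covariance as spatial filters, and use normalised log-variances of the filtered signals as features. This is not the exact variant used in [16] or [17]; the band-pass step and the SVM are omitted, and the synthetic three-channel data and the names `csp_filters` and `csp_features` are hypothetical.

```python
import numpy as np

def class_covariance(trials):
    """Average trace-normalised spatial covariance of trials shaped (n_trials, channels, samples)."""
    covs = [x @ x.T / np.trace(x @ x.T) for x in trials]
    return np.mean(covs, axis=0)

def csp_filters(trials_a, trials_b, n_pairs=1):
    """Return 2 * n_pairs spatial filters (rows): first favour class B, last favour class A."""
    ca, cb = class_covariance(trials_a), class_covariance(trials_b)
    evals, evecs = np.linalg.eigh(ca + cb)
    P = evecs @ np.diag(evals ** -0.5) @ evecs.T      # whitening matrix: P (ca + cb) P^T = I
    d, B = np.linalg.eigh(P @ ca @ P.T)               # eigenvalues in ascending order
    W = B.T @ P                                       # all spatial filters as rows
    idx = np.r_[np.arange(n_pairs), np.arange(len(d) - n_pairs, len(d))]
    return W[idx]

def csp_features(trials, W):
    """Normalised log-variance of each spatially filtered signal."""
    z = np.einsum("fc,ncs->nfs", W, trials)
    var = z.var(axis=-1)
    return np.log(var / var.sum(axis=1, keepdims=True))

rng = np.random.default_rng(0)
def make_trials(stds, n=20, n_samples=500):
    return rng.normal(size=(n, len(stds), n_samples)) * np.asarray(stds)[None, :, None]

trials_a = make_trials([2.0, 1.0, 1.0])   # class A: strong activity on channel 0
trials_b = make_trials([1.0, 1.0, 2.0])   # class B: strong activity on channel 2
W = csp_filters(trials_a, trials_b, n_pairs=1)
feats_a, feats_b = csp_features(trials_a, W), csp_features(trials_b, W)
```

For every class-A trial the second feature exceeds the first, and the reverse holds for class B, so even a linear classifier separates the two classes; this two-dimensional feature vector is what would be handed to the SVM.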

4. Conclusion

This paper first introduced BCI and CNN and explained the significance of CNN architectures in BCI design. It then discussed the application of CNN architectures in the BCI signal acquisition and signal processing modules. To solve specific problems in BCI design, researchers have improved models and algorithms in several ways: collecting and augmenting data, changing the feature extraction method, increasing the dimensionality of the CNN architecture, and changing the classification method, all in order to improve accuracy while maintaining performance. Nevertheless, many difficult problems remain in image-based processing. For example, noise in the training samples is hard to distinguish at the image level, can lead to misclassification, and is difficult to remove. The author believes that future work on CNNs in BCI needs to consider the impact of each new model or algorithm on the overall BCI design, since combining multiple solutions may introduce new problems into the architecture. Because new BCI techniques can achieve different accuracies on different samples, researchers also need to verify that the overall stability of a system meets the preprocessing requirements in order to build accurate and efficient BCI systems.

References

[1]. A. Krizhevsky, I. Sutskever, and G. E. Hinton, “ImageNet classification with deep convolutional neural networks,” in Proc. Adv. Neural Inf. Process. Syst., vol. 25, 2012, pp. 1097–1105.

[2]. Lu, Yao & Jiang, Huiping & Liu, Wenqiang. (2017). Classification of EEG Signal by STFT-CNN Framework: Identification of Right-/left-hand Motor Imagination in BCI Systems. 001. 10.22323/1.299.0001, pp. 2-3.

[3]. Saidutta, Y. M., Zou, J., & Fekri, F. (2018). “Increasing the learning capacity of BCI systems via CNN-HMM models.” Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), 2018, 1–4. https://doi.org/10.1109/EMBC.2018.8512714, pp. 1-2.

[4]. H. Yang, S. Sakhavi, K. K. Ang, and C. Guan, “On the use of convolutional neural networks and augmented CSP features for multi-class motor imagery of EEG signals classification,” in 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), IEEE, 2015, pp. 2620–2623.

[5]. Li, Z., Liu, F., Yang, W., Peng, S., & Zhou, J. (2021). “A Survey of Convolutional Neural Networks: Analysis, Applications, and Prospects.” IEEE transactions on neural networks and learning systems, PP: 2-21, 10.1109/TNNLS.2021.3084827. Advance online publication. https://doi.org/10.1109/TNNLS.2021.3084827, pp. 1-3, 12-14.

[6]. Aggarwal, Swati & Chugh, Nupur. (2019). Signal processing techniques for motor imagery brain computer interface: A review. Array. 1-2. 100003. 10.1016/j.array.2019.100003, pp.2-4.

[7]. Taheri, S., Ezoji, M. & Sakhaei, S.M. Convolutional neural network-based features for motor imagery EEG signals classification in brain–computer interface system. SN Appl. Sci. 2, 555 (2020). https://doi.org/10.1007/s42452-020-2378-z, pp. 1-2.

[8]. O’Shea, K., & Nash, R. (2015). An Introduction to Convolutional Neural Networks. ArXiv, abs/1511.08458, pp. 2-9.

[9]. S. Xie, R. Girshick, P. Dollár, Z. Tu, and K. He, “Aggregated residual transformations for deep neural networks,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit. (CVPR), Jul. 2017, pp. 1492–1500.

[10]. Wu, Y., Yang, F., Liu, Y., Zha, X., & Yuan, S. (2018). A Comparison of 1-D and 2-D Deep Convolutional Neural Networks in ECG Classification. Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), 2018, pp. 324-327.

[11]. E. Hernández-González, P. Gómez-Gil, E. Bojorges-Valdez and M. Ramírez-Cortés, "Bi-dimensional representation of EEGs for BCI classification using CNN architectures," 2021 43rd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), 2021, pp. 767-770, doi: 10.1109/EMBC46164.2021.9629958, pp. 1-4.

[12]. Y. Huang, S.-H. Lai, and S.-H. Tai, “Human action recognition based on temporal pose CNN and multi-dimensional fusion,” in Proc. Eur. Conf. Comput. Vis. (ECCV) Workshops, 2018, pp. 1-15.

[13]. D. Maturana and S. Scherer, “VoxNet: A 3D convolutional neural network for real-time object recognition,” in Proc. IEEE/RSJ Int. Conf. Intell. Robots Syst. (IROS), Sep. 2015, pp. 922–928.

[14]. Fahimi, F., Zhang, Z., Goh, W. B., Lee, T. S., Ang, K. K., & Guan, C. (2019). Inter-subject transfer learning with an end-to-end deep convolutional neural network for EEG-based BCI. Journal of neural engineering, 16(2), 026007. https://doi.org/10.1088/1741-2552/aaf3f6, pp. 2-6.

[15]. Lashgari, E., Ott, J., Connelly, A., Baldi, P., & Maoz, U. (2021). An end-to-end CNN with attentional mechanism applied to raw EEG in a BCI classification task. Journal of neural engineering, 18(4), 10.1088/1741-2552/ac1ade. https://doi.org/10.1088/1741-2552/ac1ade, pp. 1-11.

[16]. N. Korhan, Z. Dokur and T. Olmez, "Motor Imagery Based EEG Classification by Using Common Spatial Patterns and Convolutional Neural Networks," 2019 Scientific Meeting on Electrical-Electronics & Biomedical Engineering and Computer Science (EBBT), 2019, pp. 1-4, doi: 10.1109/EBBT.2019.8741832, pp. 1-4.

[17]. H. Yang, S. Sakhavi, K. K. Ang and C. Guan, "On the use of convolutional neural networks and augmented CSP features for multi-class motor imagery of EEG signals classification," 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), 2015, pp. 2620-2623, doi: 10.1109/EMBC.2015.7318929, pp. 1-4.

Cite this article

Xiao,Y. (2023). A review of CNN’s application on the BCI signal processing module. Applied and Computational Engineering,4,581-587.

Data availability

The datasets used and/or analyzed during the current study will be available from the authors upon reasonable request.

Disclaimer/Publisher's Note

The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of EWA Publishing and/or the editor(s). EWA Publishing and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

About volume

Volume title: Proceedings of the 3rd International Conference on Signal Processing and Machine Learning

© 2024 by the author(s). Licensee EWA Publishing, Oxford, UK. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license. Authors who publish this series agree to the following terms:

1. Authors retain copyright and grant the series right of first publication with the work simultaneously licensed under a Creative Commons Attribution License that allows others to share the work with an acknowledgment of the work's authorship and initial publication in this series.

2. Authors are able to enter into separate, additional contractual arrangements for the non-exclusive distribution of the series's published version of the work (e.g., post it to an institutional repository or publish it in a book), with an acknowledgment of its initial publication in this series.

3. Authors are permitted and encouraged to post their work online (e.g., in institutional repositories or on their website) prior to and during the submission process, as it can lead to productive exchanges, as well as earlier and greater citation of published work (See Open access policy for details).
