1. Introduction

Melanoma is the most serious type of skin cancer, and early detection is the most effective way to improve a patient's chance of survival. Statistics on melanoma in the USA are shown below [1, 2]. In the USA, one person dies of melanoma every hour. According to the report, about 87,110 new cases of melanoma were expected in 2018, and 9,730 deaths from melanoma (more than 11% of the number of new cases) were expected in the same year. Although melanoma accounts for only about 1% of skin cancer cases, it causes the large majority of skin cancer deaths. Most melanomas are caused by ultraviolet (UV) radiation from the Sun; according to a survey by a UK university, UV radiation is responsible for 86% of melanomas. In general, a person who has had more than five sunburns has double the risk of developing melanoma.

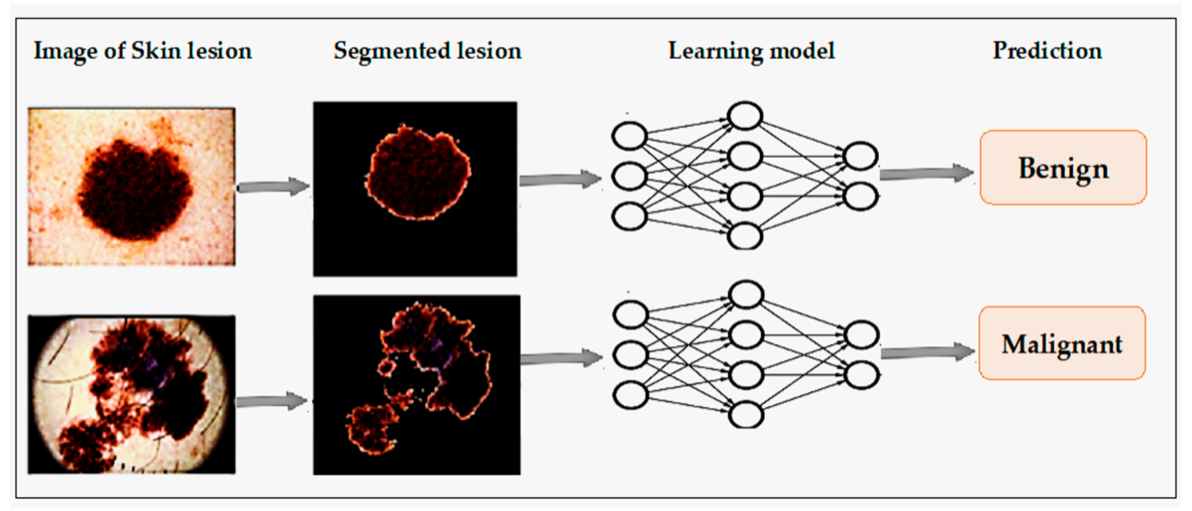

Melanoma is most common in people with fair skin, although it can occur in people of any skin type. Because early detection of melanoma and other skin cancers is the key to improving survival, there is great demand for accurate, automated skin cancer screening systems. Two kinds of images can be used to detect skin cancer: dermoscopy images and ordinary digital (clinical) images. A dermoscopy image is acquired with dedicated equipment at a pathology centre, using high magnification (e.g. 20x) focused on the region of concern, and must be evaluated by a specialist dermatologist to establish whether it is positive or negative. A semi-automated computer system can also be used to classify these images [3, 4]. The major disadvantage of this approach is that the patient must physically visit the pathology centre to consult a qualified dermatologist. The goals of this literature review are to understand the most recent advances in skin cancer detection tools and to build a deep learning-based automated system for detecting skin cancer from digital images. The steps of a deep learning method are shown in Figure 1.

Figure 1. Deep Learning process.

2. Related Work

Computer-based skin cancer detection involves image acquisition, pre-processing, segmentation, feature extraction, and classification of the segmented image into malignant and benign (non-cancerous) regions. Most of the literature builds on machine learning and deep learning classification algorithms to detect skin cancer.

2.1. Artificial Neural Networks (ANN)

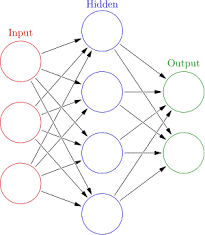

An artificial neural network (ANN) is a statistical method for non-linear predictive modelling that can learn complex relationships between inputs and outputs. Its structure is inspired by the biological organisation of neurons in the brain. An ANN, also simply called a neural network, has three kinds of nodes arranged in layers: the input layer comes first, each input node is connected to the hidden layer, and the final result is visible only at the output layer [5]. The network learns the weight of each connection using forward and backward propagation (back-propagation). Depending on the amount of input used, each ANN used to identify skin cancer may have one or several layers and nodes. The input layer receives the features of the data set and passes them to the hidden layers. Data sets may be trained (labelled) or untrained (unlabelled) [6], and accuracy is achieved by combining supervised and unsupervised learning methods with various network topologies, such as back-propagation and feed-forward networks, which use the data set at different stages. The ANN architecture is shown in Figure 2.

Figure 2. Artificial Neural Network Architecture.
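The flow from input layer to hidden layer to output layer described above can be illustrated with a minimal NumPy sketch of one forward pass. It assumes hypothetical lesion feature vectors, randomly initialised weights, sigmoid activations in both layers, and illustrative layer sizes; none of these values come from the cited studies.

```python
import numpy as np

def sigmoid(z):
    """Logistic activation: maps any real number into (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def forward(x, W1, b1, W2, b2):
    """One forward pass: input layer -> hidden layer -> output layer."""
    hidden = sigmoid(x @ W1 + b1)        # weighted sum of inputs, then activation
    return sigmoid(hidden @ W2 + b2)     # one output per sample, in (0, 1)

rng = np.random.default_rng(0)
n_inputs, n_hidden = 8, 5                # illustrative (hypothetical) layer sizes
W1 = rng.normal(scale=0.5, size=(n_inputs, n_hidden))
b1 = np.zeros(n_hidden)
W2 = rng.normal(scale=0.5, size=(n_hidden, 1))
b2 = np.zeros(1)

x = rng.random((3, n_inputs))            # three hypothetical lesion feature vectors
scores = forward(x, W1, b1, W2, b2)
print(scores.ravel())
```

Training consists of adjusting `W1`, `b1`, `W2`, and `b2` so that these scores match the known labels; back-propagation computes how to make those adjustments.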

2.2. Feed-Forward Neural Network (FNN)

A feed-forward neural network is an ANN in which the connections between nodes do not form a cycle. The ANN in [7] consists of eight input units, ten hidden layers, and one output layer, and a back-propagation method is used to train the classifier. Distinguishing characteristics were identified in 326 digital skin cancer photographs, including 87 intradermal nevus images, 136 melanoma images, 52 seborrheic keratosis images, and 43 dysplastic nevus images. A neural network with 12 input units, a hidden layer of 6 neurons, and an output layer was trained on these photographs. With all weights initialised randomly between 0 and 1, results were obtained for training/testing ratios of 20/80, 40/60, and 60/40 [8]. The 60/40 ratio produced the best results: with a 60/40 split and only melanoma photographs, the mean success rate was 86.0%. The output is thresholded at 0.5: if the probability of a match is at least 50%, the outcome is 1 (high risk); otherwise it is 0 (low risk).
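The two procedural details above, splitting the 326 photographs at different training/testing ratios and mapping the network output to a high- or low-risk label, can be sketched as follows. The random shuffling, the rounding of split sizes, and treating exactly 0.5 as high risk are assumptions made for illustration.

```python
import numpy as np

def split_indices(n_samples, train_fraction, seed=0):
    """Shuffle sample indices and split them into training and testing sets."""
    rng = np.random.default_rng(seed)
    idx = rng.permutation(n_samples)
    n_train = int(round(train_fraction * n_samples))
    return idx[:n_train], idx[n_train:]

def risk_label(probabilities, threshold=0.5):
    """Map network outputs to 1 (high risk) or 0 (low risk)."""
    return (np.asarray(probabilities) >= threshold).astype(int)

for frac in (0.2, 0.4, 0.6):             # the 20/80, 40/60 and 60/40 ratios
    train, test = split_indices(326, frac)
    print(f"{frac:.0%} training: {len(train)} train / {len(test)} test")

print(risk_label([0.12, 0.5, 0.87]))     # prints [0 1 1]
```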

2.3. Back Propagation Neural Network (BPN)

Back propagation is the technique neural networks use to determine how much each neuron contributed to the error after a batch of data (in image recognition, several images) has been processed. The weights of each neuron are then adjusted by an optimisation method, which completes the learning step for that batch. Back propagation computes the exact gradient of the loss function [9, 10] and is most commonly used with the gradient descent optimisation algorithm. The network in [11] receives seven features through seven input neurons and has a single hidden layer of four neurons. It classifies the images in the data set as benign or malignant; the error is estimated at the output and propagated backward through the network layers [12]. The output layer contains a single neuron with a sigmoid activation function.
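A minimal sketch of back-propagation for the 7-4-1 topology described above (seven inputs, four hidden neurons, one sigmoid output) follows. It assumes synthetic features and labels in place of real lesion data, a binary cross-entropy loss, sigmoid hidden units, and plain full-batch gradient descent; the loss function and learning rate are illustrative choices, not taken from [11].

```python
import numpy as np

def sigmoid(z):
    """Logistic activation used in both layers."""
    return 1.0 / (1.0 + np.exp(-z))

rng = np.random.default_rng(42)
# Hypothetical stand-in for seven extracted lesion features and benign (0) / malignant (1) labels.
X = rng.normal(size=(200, 7))
y = (X[:, 0] + X[:, 1] - X[:, 2] > 0).astype(float).reshape(-1, 1)

# 7 input neurons -> 4 hidden neurons -> 1 sigmoid output neuron.
W1 = rng.normal(scale=0.5, size=(7, 4))
b1 = np.zeros(4)
W2 = rng.normal(scale=0.5, size=(4, 1))
b2 = np.zeros(1)
lr = 1.0
losses = []

for epoch in range(1000):
    # Forward pass.
    h = sigmoid(X @ W1 + b1)
    p = sigmoid(h @ W2 + b2)
    eps = 1e-9
    losses.append(-np.mean(y * np.log(p + eps) + (1 - y) * np.log(1 - p + eps)))

    # Backward pass: error at the output, then propagated to the hidden layer.
    d_out = (p - y) / len(X)                   # dLoss/d(pre-activation) for sigmoid + cross-entropy
    dW2 = h.T @ d_out
    db2 = d_out.sum(axis=0)
    d_hidden = (d_out @ W2.T) * h * (1.0 - h)  # chain rule through the hidden sigmoid
    dW1 = X.T @ d_hidden
    db1 = d_hidden.sum(axis=0)

    # Gradient-descent update of every weight.
    W2 -= lr * dW2
    b2 -= lr * db2
    W1 -= lr * dW1
    b1 -= lr * db1

accuracy = np.mean((p >= 0.5) == (y == 1))
print(f"loss {losses[0]:.3f} -> {losses[-1]:.3f}, training accuracy {accuracy:.2f}")
```

Pairing a sigmoid output with cross-entropy keeps the output error term simply `p - y`, which is why that combination is a common choice for binary benign/malignant outputs.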

2.4. Convolutional Neural Network (CNN)

Convolutional neural networks (CNNs) are deep learning algorithms that, like a multilayer neural network, consist of alternating convolutional and pooling layers followed by fully connected layers at the end. CNNs are suited to any data with a two-dimensional grid structure, which makes them one of the most popular algorithms for image recognition; they have also shown promise in natural language processing tasks. A CNN exploits local features in an image to improve classification accuracy, and because its filter weights are shared across the image, it needs far fewer trainable parameters than a typical fully connected network. The CNN used in [12] begins with a 7×7 convolutional layer with 64 filters and stride 2, followed by a 3×3 max-pooling layer with stride 2. The FCRN8 network achieved an accuracy of 94.9%.
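The following NumPy sketch shows the two building blocks named above, a convolution with a shared filter and max pooling, together with the standard output-size formula. The 224×224 input size and the paddings of 3 and 1 used in the shape bookkeeping are assumptions (they are not stated in the source), and the edge filter is a hypothetical example. Weight sharing is also what keeps the parameter count low: a 3×3 filter has 9 weights regardless of image size, whereas a fully connected map from an 8×8 input to a 6×6 output would need 64 × 36 = 2,304 weights.

```python
import numpy as np

def out_size(n, kernel, stride, padding):
    """Spatial size after a convolution or pooling layer."""
    return (n + 2 * padding - kernel) // stride + 1

def conv2d(image, kernel, stride=1):
    """Naive single-channel 'valid' convolution (cross-correlation, as used in CNNs)."""
    k = kernel.shape[0]
    h = out_size(image.shape[0], k, stride, 0)
    w = out_size(image.shape[1], k, stride, 0)
    out = np.empty((h, w))
    for i in range(h):
        for j in range(w):
            patch = image[i * stride:i * stride + k, j * stride:j * stride + k]
            out[i, j] = np.sum(patch * kernel)   # the same weights are reused at every position
    return out

def max_pool(x, size=2, stride=2):
    """Keep the largest value in each size x size window."""
    h = out_size(x.shape[0], size, stride, 0)
    w = out_size(x.shape[1], size, stride, 0)
    return np.array([[x[i * stride:i * stride + size, j * stride:j * stride + size].max()
                      for j in range(w)] for i in range(h)])

# Shape bookkeeping for a 7x7/stride-2 convolution followed by 3x3/stride-2 max pooling.
n = out_size(224, kernel=7, stride=2, padding=3)   # 112
n = out_size(n, kernel=3, stride=2, padding=1)     # 56
print(n)

img = np.random.default_rng(0).random((8, 8))
edge = np.array([[1.0, 0.0, -1.0]] * 3)            # hypothetical vertical-edge filter
fmap = conv2d(img, edge)                           # 6x6 feature map
print(max_pool(fmap).shape)                        # (3, 3)
```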

2.5. Support Vector Machine (SVM)

A support vector machine (SVM) is a supervised classifier that splits the input data points into two classes by constructing an optimal hyperplane in an n-dimensional feature space. Among all hyperplanes that separate the training data, the SVM chooses the one that maximises the margin, that is, the distance to the nearest training points of each class; for this reason it is also called a maximum-margin classifier [13]. During training, the SVM keeps the separating hyperplane as far as possible from the closest points of both classes. SVMs can be used as both linear and nonlinear classifiers, and the classifier is trained by solving the Lagrangian dual of the margin-maximisation problem [14]. When the data cannot be separated linearly in the original feature space, the SVM uses a kernel function to map them implicitly into a higher-dimensional space in which a separating hyperplane can be found.
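As an illustration of the maximum-margin idea, the sketch below trains a linear soft-margin SVM on two synthetic clusters. Note that it deliberately swaps in a simpler technique than the one cited: it minimises the primal hinge-loss objective by subgradient descent instead of solving the Lagrangian dual, and it omits kernels. The data, the regularisation weight `lam`, and the learning rate are hypothetical.

```python
import numpy as np

def train_linear_svm(X, y, lam=0.01, epochs=200, lr=0.1):
    """Soft-margin linear SVM trained by full-batch subgradient descent on
    lam/2 * ||w||^2 + mean(max(0, 1 - y * (X @ w + b))), with labels y in {-1, +1}."""
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(epochs):
        margins = y * (X @ w + b)
        active = margins < 1                   # points inside or violating the margin
        grad_w = lam * w - (y[active][:, None] * X[active]).sum(axis=0) / len(X)
        grad_b = -y[active].sum() / len(X)
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

def predict(X, w, b):
    """Classify by which side of the hyperplane w.x + b = 0 each point falls on."""
    return np.where(X @ w + b >= 0, 1, -1)

rng = np.random.default_rng(1)
# Two hypothetical clusters standing in for benign (-1) and malignant (+1) feature vectors.
X = np.vstack([rng.normal(-2, 1, size=(50, 2)), rng.normal(2, 1, size=(50, 2))])
y = np.array([-1] * 50 + [1] * 50)

w, b = train_linear_svm(X, y)
acc = np.mean(predict(X, w, b) == y)
print(f"training accuracy: {acc:.2f}, margin width: {2 / np.linalg.norm(w):.2f}")
```

The margin width between the two supporting hyperplanes is `2 / ||w||`, so the `lam * w` term, which keeps `||w||` small, is what pushes the solution toward a wide margin.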

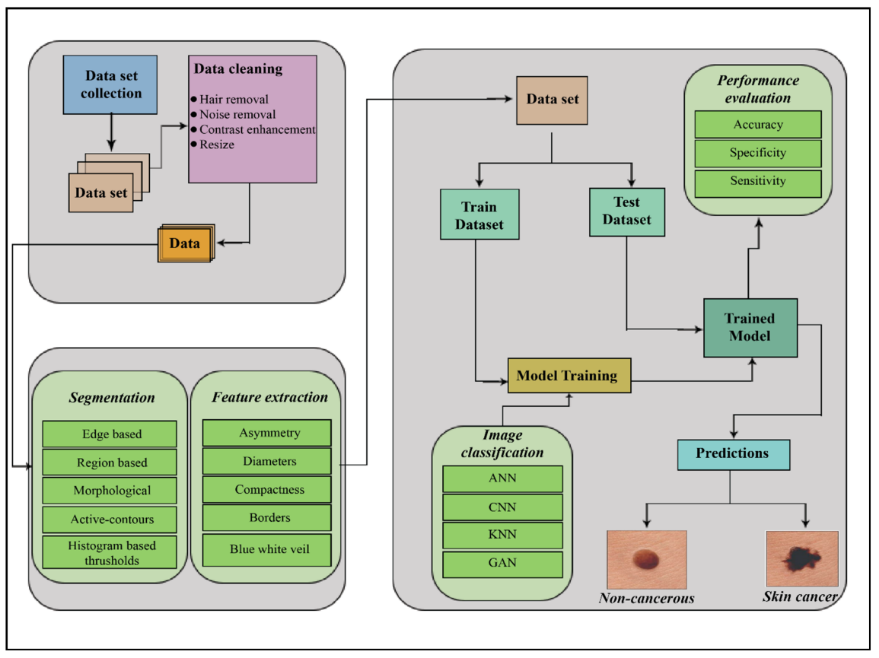

3. Methods Specific to Skin Cancer Classification

The general process for detecting skin cancer is shown in Figure 3. It consists of image acquisition, pre-processing, segmentation, feature extraction, and classification. In recent years, deep learning has profoundly changed the field of machine learning. It is regarded as the most advanced branch of machine learning and builds on ANN-related methods [15, 16, 17]. These algorithms were inspired mainly by the structure and operation of the human brain. Deep learning methods appear in a very large number of published studies, and deep learning systems have clearly outperformed conventional machine learning methods in many applications.

Figure 3. Skin cancer detection process.
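The stages in Figure 3 can be chained as in the following sketch. It is illustrative only: the synthetic image is hypothetical, pre-processing is reduced to intensity rescaling, segmentation uses Otsu's classical thresholding method as a stand-in for the learned segmenters discussed in this review, and the two features and the classification rule are placeholders rather than clinical criteria.

```python
import numpy as np

def preprocess(img):
    """Rescale intensities to [0, 1]; a stand-in for steps such as illumination
    correction and hair removal."""
    img = img.astype(float)
    return (img - img.min()) / (img.max() - img.min() + 1e-12)

def otsu_threshold(img, bins=256):
    """Otsu's method: pick the threshold that maximises between-class variance."""
    hist, edges = np.histogram(img, bins=bins, range=(0.0, 1.0))
    p = hist / hist.sum()                       # normalised histogram
    centers = (edges[:-1] + edges[1:]) / 2
    w0 = np.cumsum(p)                           # probability of the darker class
    mu = np.cumsum(p * centers)                 # cumulative first moment
    mu_t = mu[-1]                               # global mean
    denom = w0 * (1.0 - w0)
    between = np.zeros_like(w0)
    valid = denom > 1e-12
    between[valid] = (mu_t * w0[valid] - mu[valid]) ** 2 / denom[valid]
    return edges[np.argmax(between) + 1]        # upper edge of the best bin

def segment(img):
    """Lesions are usually darker than the surrounding skin, so keep pixels below the threshold."""
    return img < otsu_threshold(img)

def extract_features(img, mask):
    """Two hypothetical features: lesion area fraction and mean intensity inside the lesion."""
    area = mask.mean()
    mean_inside = img[mask].mean() if mask.any() else 0.0
    return np.array([area, mean_inside])

def classify(features, model):
    """`model` is any trained classifier mapping a feature vector to a label (hypothetical)."""
    return model(features)

# Synthetic 64x64 "skin" image containing a dark circular "lesion" (hypothetical data).
yy, xx = np.mgrid[:64, :64]
lesion = ((yy - 32) ** 2 + (xx - 32) ** 2) < 15 ** 2
img = 200 - 120 * lesion + np.random.default_rng(0).normal(0, 5, (64, 64))

x = preprocess(img)
mask = segment(x)
feats = extract_features(x, mask)
label = classify(feats, model=lambda f: int(f[0] > 0.1))   # placeholder rule, not a clinical criterion
print(feats.round(3), label)
```

In the systems surveyed here, the `segment` and `classify` steps would typically be replaced by trained neural networks while the overall flow stays the same.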

Numerous deep learning methods have recently been applied to improve computer-based skin disease diagnosis. In [18], cancer is identified using Artificial Neural Networks (ANN), Convolutional Neural Networks (CNN), Generative Adversarial Networks (GAN), and Kohonen self-organizing neural networks (KNN).

Segmenting skin lesions is a significant stage in the automated computer-aided diagnosis of melanoma. However, current segmentation techniques tend to over- or under-segment lesions that have fuzzy borders, low contrast with the background, homogeneous textures, or artefacts. These algorithms perform well only when a large number of parameters are precisely tuned and appropriate pre-processing, such as illumination correction and hair removal, is applied. Fully convolutional networks (FCNs) tend to produce coarse segmentation boundaries for challenging lesions, such as those with fuzzy boundaries or low textural contrast between foreground and background; this problem was addressed with a multistage segmentation technique. A novel parallel integration method, which combines the complementary information from each segmentation stage, produced a final segmentation with precise localization and clearly defined lesion boundaries, even for the most challenging lesions. Extensive experiments on two well-known public benchmark datasets show that this strategy outperforms current state-of-the-art skin lesion segmentation methods.
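The paragraph does not say how segmentation quality was measured. Two overlap metrics commonly used for lesion segmentation are the Dice coefficient and the Jaccard index (intersection over union); the sketch below assumes these metrics and uses small hypothetical masks to show how over- and under-segmentation are both penalised.

```python
import numpy as np

def dice(pred, truth):
    """Dice = 2|A ∩ B| / (|A| + |B|) for binary masks."""
    pred, truth = np.asarray(pred, bool), np.asarray(truth, bool)
    total = pred.sum() + truth.sum()
    return 1.0 if total == 0 else 2.0 * np.logical_and(pred, truth).sum() / total

def jaccard(pred, truth):
    """Jaccard (intersection over union) = |A ∩ B| / |A ∪ B|."""
    pred, truth = np.asarray(pred, bool), np.asarray(truth, bool)
    union = np.logical_or(pred, truth).sum()
    return 1.0 if union == 0 else np.logical_and(pred, truth).sum() / union

truth = np.zeros((10, 10), bool)
truth[2:8, 2:8] = True                    # hypothetical 36-pixel ground-truth lesion
under = np.zeros((10, 10), bool)
under[3:7, 3:7] = True                    # under-segmentation: 16 pixels, all inside the lesion
over = np.zeros((10, 10), bool)
over[1:9, 1:9] = True                     # over-segmentation: 64 pixels covering the lesion

for name, m in [("under", under), ("over", over)]:
    print(name, round(dice(m, truth), 3), round(jaccard(m, truth), 3))
```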

4. Comparative Study

Table 1 compares various image classification techniques. In each of these networks, an input image activates a set of input neurons, but processing can take considerable time.

Table 1. Comparative Study of various Image Classification Techniques.

| S.no | Classification Technique | Advantages | Disadvantages |
|---|---|---|---|
| 1 | Artificial Neural Network | An artificial neural network can learn any nonlinear function. | The number of trainable parameters grows rapidly as the image size increases. |
| 2 | Convolutional Neural Network | A CNN learns its filters automatically, without their being specified by hand. These filters help extract the correct and relevant features from the input data. | A large amount of training data is required. |
| 3 | Recurrent Neural Network | When making predictions, an RNN takes into account the dependencies between the words in the input text. | Deep RNNs (RNNs unrolled over many time steps) suffer from the vanishing and exploding gradient problem, which is common to many types of neural networks. |
| 4 | Generative Adversarial Networks | GANs generate data that closely resembles the original data. Given an image, a GAN can generate a new version that looks similar to the original; likewise, it can generate new versions of text, video, and audio. | Generating results from text or speech is very complex. |
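The vanishing and exploding gradient problem listed for RNNs in Table 1 follows from the chain rule: the gradient of the last hidden state with respect to the first is a product of one factor per time step. The sketch below uses a hypothetical scalar recurrence with no inputs, which is far simpler than a real RNN, to show the product shrinking toward zero when each factor is below 1 and growing without bound when it is above 1.

```python
import numpy as np

def gradient_through_time(w, steps, activation="linear", h0=0.5):
    """d h_T / d h_0 for the scalar recurrence h_t = f(w * h_{t-1}); by the chain
    rule this is the product of w * f'(w * h_{t-1}) over all steps."""
    h, grad = h0, 1.0
    for _ in range(steps):
        z = w * h
        if activation == "tanh":
            h = np.tanh(z)
            grad *= w * (1.0 - h ** 2)   # tanh'(z) = 1 - tanh(z)^2
        else:
            h = z
            grad *= w                    # linear activation: derivative is 1
    return grad

for w in (0.9, 1.1):
    print(w, [f"{gradient_through_time(w, T):.2e}" for T in (10, 50, 100)])
print("tanh, w=1.1:", f"{gradient_through_time(1.1, 100, 'tanh'):.2e}")
```

With the saturating tanh activation, even a recurrent weight above 1 leads to a vanishing gradient here, because each derivative factor `1 - h**2` pulls the product below 1.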

5. Conclusion

This review discussed many neural network approaches for detecting and classifying skin cancer, all of which are non-invasive techniques. Skin cancer diagnosis involves segmenting the images, extracting features, and classifying the results. The major objective of this review is the classification of lesion images using ANNs, CNNs, RNNs, and GANs. Each algorithm has benefits and drawbacks, so the proper classification approach must be chosen to obtain the best results. Because CNNs exploit the local spatial structure of images, they generally perform better on image data than other neural network types. At this critical stage of skin cancer, obtaining the correct diagnosis and treating any abnormalities are of the utmost importance, and expert help is needed to follow the proper protocols. Lesion segmentation, feature extraction, and classification should all be taken into account to protect a person's health.

References

[1]. Suganya, R. "An automated computer aided diagnosis of skin lesions detection and classification for dermoscopy images." In 2016 International Conference on Recent Trends in Information Technology (ICRTIT), pp. 1-5. IEEE, 2016.

[2]. "Cancer Facts and Statistics." http://www.skincancer.org/skin-cancer-information/skin-cancer-facts; Chiem, A., A. Al-Jumaily, and R. N. Khushaba. "A Novel Hybrid System for Skin Lesion Detection." Dec. 2007.

[3]. Farooq, Muhammad Ali, Muhammad Aatif Mobeen Azhar, and Rana Hammad Raza. "Automatic lesion detection system (ALDS) for skin cancer classification using SVM and neural classifiers." In 2016 IEEE 16th International Conference on Bioinformatics and Bioengineering (BIBE), pp. 301-308. IEEE, 2016.

[4]. Mhaske, H. R., and D. A. Phalke. "Melanoma skin cancer detection and classification based on supervised and unsupervised learning." In 2013 international conference on Circuits, Controls and Communications (CCUBE), pp. 1-5. IEEE, 2013.

[5]. Sundar, RS Shiyam, and M. Vadivel. "Performance analysis of melanoma early detection using skin lession classification system." In 2016 International Conference on Circuit, Power and Computing Technologies (ICCPCT), pp. 1-5. IEEE, 2016.

[6]. Rashad, M. W., and M. Takruri. "Automatic non-invasive recognition of melanoma using Support Vector Machines." In 2016 International Conference on Bio-engineering for Smart Technologies (BioSMART), pp. 1-4. IEEE, 2016.

[7]. Lau, Ho Tak, and Adel Al-Jumaily. "Automatically early detection of skin cancer: Study based on nueral netwok classification." In 2009 International Conference of Soft Computing and Pattern Recognition, pp. 375-380. IEEE, 2009.

[8]. Satheesha, T. Y., D. Satyanarayana, M. N. Giriprasad, and K. N. Nagesh. "Detection of melanoma using distinct features." In 2016 3rd MEC International Conference on Big Data and Smart City (ICBDSC), pp. 1-6. IEEE, 2016.

[9]. Jain, Yogendra Kumar, and Megha Jain. "Skin cancer detection and classification using Wavelet Transform and Probabilistic Neural Network." (2012): 250-252.

[10]. Jafari, M. H., S. Samavi, S. M. R. Soroushmehr, H. Mohaghegh, N. Karimi, and K. Najarian. "Set of descriptors for skin cancer diagnosis using non-dermoscopic color images." Sept. 2016.

[11]. Yu, Lequan, Hao Chen, Qi Dou, Jing Qin, and Pheng-Ann Heng. "Automated melanoma recognition in dermoscopy images via very deep residual networks." IEEE transactions on medical imaging 36, no. 4 (2016): 994-1004.

[12]. Hijmans, R. J., and J. van Etten. "Raster: Geographic analysis and modeling with raster data." R package version 2.0-12, Jan. 12, 2012. http://CRAN.R-project.org/package=raster

[13]. Csabai, D., K. Szalai, and M. Gyöngy. "Automated classification of common skin lesions using bioinspired features." In 2016 IEEE International Ultrasonics Symposium (IUS), pp. 1-4. IEEE, 2016.

[14]. Takruri, Maen, Maram W. Rashad, and Hussain Attia. "Multi-classifier decision fusion for enhancing melanoma recognition accuracy." In 2016 5th International Conference on Electronic Devices, Systems and Applications (ICEDSA), pp. 1-5. IEEE, 2016.

[15]. Afifi, Shereen, Hamid GholamHosseini, and Roopak Sinha. "A low-cost FPGA-based SVM classifier for melanoma detection." In 2016 IEEE EMBS Conference on Biomedical Engineering and Sciences (IECBES), pp. 631-636. IEEE, 2016.

[16]. Joseph, Supriya. "Skin Lesion Analysis System for Melanoma Detection with an Effective Hair Segmentation Method."

[17]. Udrea, Andreea, and George Daniel Mitra. "Generative adversarial neural networks for pigmented and non-pigmented skin lesions detection in clinical images." In 2017 21st international conference on control systems and computer science (CSCS), pp. 364-368. IEEE, 2017.

[18]. Jana, Enakshi, Ravi Subban, and S. Saraswathi. "Research on skin cancer cell detection using image processing." In 2017 IEEE International Conference on Computational Intelligence and Computing Research (ICCIC), pp. 1-8. IEEE, 2017.

Cite this article

Sivaranjani, M.; E., S. V.; Shanmugavadivel, K.; Subramanian, M. (2023). Melanoma skin cancer cell detection using image processing: A survey. Applied and Computational Engineering, 4, 249-254.

Data availability

The datasets used and/or analyzed during the current study will be available from the authors upon reasonable request.

Disclaimer/Publisher's Note

The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of EWA Publishing and/or the editor(s). EWA Publishing and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

About volume

Volume title: Proceedings of the 3rd International Conference on Signal Processing and Machine Learning

© 2024 by the author(s). Licensee EWA Publishing, Oxford, UK. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license. Authors who publish with this series agree to the following terms:

1. Authors retain copyright and grant the series right of first publication with the work simultaneously licensed under a Creative Commons Attribution License that allows others to share the work with an acknowledgment of the work's authorship and initial publication in this series.

2. Authors are able to enter into separate, additional contractual arrangements for the non-exclusive distribution of the series's published version of the work (e.g., post it to an institutional repository or publish it in a book), with an acknowledgment of its initial publication in this series.

3. Authors are permitted and encouraged to post their work online (e.g., in institutional repositories or on their website) prior to and during the submission process, as it can lead to productive exchanges, as well as earlier and greater citation of published work (see Open access policy for details).
