1. Introduction

The intersection of music and computation has a rich history dating back to ancient times when mathematicians like Pythagoras explored the relationship between numerical ratios and musical harmony. However, the formal concept of algorithmic composition—using well-defined procedures to compose music—emerged in the mid-20th century alongside the development of early computing systems. As Fernández and Vico note in their comprehensive survey, "Since the 1950s, different computational techniques related to Artificial Intelligence have been used for algorithmic composition, including grammatical representations, probabilistic methods, neural networks, symbolic rule-based systems, constraint programming and evolutionary algorithms" [1].

The evolution of computational music has been driven by both artistic exploration and scientific inquiry. Nierhaus describes how early experiments with algorithmic composition were often motivated by composers seeking new methods of musical expression, while computer scientists saw music as an ideal domain for testing artificial intelligence concepts [2]. This dual nature has led to a diverse field where artistic creativity and computational innovation continually influence each other.

Computational music serves multiple functions in the modern technological landscape. Beyond its obvious role in creating musical compositions, it has become a valuable tool for music education, analysis, and research. According to Carnovalini and Rodà, computational creativity in music "tries to obtain creative behaviors from computers" while simultaneously helping us understand human creativity through computational modeling [3]. This bidirectional relationship between human and machine creativity represents one of the most fascinating aspects of the field.

The development of algorithmic composition has progressed through several distinct phases, each characterized by different technological approaches and artistic goals. Early systems from the 1950s and 1960s often relied on stochastic processes and formal grammars. Pioneering works such as Iannis Xenakis's "Stochastic Music Program" and Lejaren Hiller and Leonard Isaacson's "Illiac Suite" demonstrated the potential of computer-assisted composition. The 1980s and 1990s saw the rise of rule-based systems and knowledge engineering approaches. As Papadopoulos and Wiggins explain, these systems attempted to codify musical knowledge and compositional rules explicitly, often drawing from established music theory and analysis [4]. While these systems produced interesting results, they were limited by the difficulty of formalizing the tacit knowledge that human composers employ.

The turn of the millennium brought significant advances in machine learning techniques, particularly neural networks, which have revolutionized algorithmic composition. According to Mycka and Mańdziuk, interest in music-related research has increased significantly with the emergence of artificial intelligence (AI) methods. These methods have demonstrated remarkable capabilities in generating music that captures stylistic elements of human compositions while introducing novel variations [5]. Most recently, transformer-based models have emerged as powerful tools for music generation. These models were originally developed for natural language processing, and they have proven remarkably effective at capturing long-range dependencies in musical sequences. In parallel, Anselmo and Pendergrass demonstrate how mathematical models can be applied to generate musical structures with coherent patterns and progressions [6]. This convergence of mathematical modeling and deep learning represents the cutting edge of algorithmic composition today.

This research paper is motivated by the need to understand and compare different approaches to algorithmic music composition, particularly focusing on three distinct methodological categories: translational models, mathematical models, and AI tools. Each of these approaches offers unique perspectives on how computational systems can generate music, and a comparative analysis can provide valuable insights for both researchers and practitioners in the field. Translational models focus on converting concepts or structures from one domain to another, such as transforming visual patterns into musical sequences or translating emotional states into sonic expressions. Mathematical models employ formal mathematical structures and operations to generate musical material, often drawing from fields like chaos theory, fractals, and combinatorics. AI tools leverage machine learning techniques to learn patterns from existing music and generate new compositions that reflect or extend these patterns. By examining these three approaches in depth, this paper aims to identify their respective strengths, limitations, and potential applications. The comparative analysis will consider factors such as musical quality, creative flexibility, technical requirements, and accessibility to musicians without extensive technical backgrounds.

The paper is organized as follows. After the introduction, this paper provides a general description of algorithmic composition methods currently in use. Then, the paper examines each of the three focal methods in detail, presenting their underlying principles, notable applications, and representative results from the literature. Following these detailed examinations, the paper offers a comparative analysis that highlights the strengths and weaknesses of each approach, discusses current limitations in the field, and suggests promising directions for future research. The paper concludes with a summary of the findings and their implications for the continued development of algorithmic music composition. Through this structured exploration, this paper contributes to the ongoing dialogue between music and computation, providing a valuable resource for those interested in the fascinating intersection of artistic creativity and technological innovation.

2. Descriptions of algorithmic composition methods

Algorithmic composition represents a diverse field where computational processes are employed to create musical works. This section provides an overview of the primary methods currently used in algorithmic music composition, highlighting their fundamental principles, historical development, and general applications. Algorithmic composition can be broadly defined as "the partial or total automation of the process of music composition by using computers" [1]. This definition encompasses a wide range of approaches, from systems that generate complete compositions autonomously to those that serve as creative tools for human composers. The scope of algorithmic composition has expanded significantly with advances in computing technology, now including methods that can generate complex musical structures across various genres and styles. As Nierhaus explains, algorithmic composition is not merely a modern phenomenon but has historical precedents in techniques like the medieval use of isorhythm or Mozart's musical dice games [2]. What distinguishes contemporary algorithmic composition is the explicit formalization of compositional processes through computational means, allowing for more complex and systematic exploration of musical possibilities.

The field of algorithmic composition encompasses numerous methodological approaches, which can be categorized in several ways. This study focuses on three primary categories that represent distinct conceptual frameworks for computational music creation, i.e., translational models, mathematical models, and AI tools. Translational models convert structures or patterns from non-musical domains into musical parameters. These approaches establish mappings between external data sources and musical elements such as pitch, rhythm, timbre, and dynamics. Examples include:

• Data sonification: Transforming scientific or statistical data into musical representations

• Visual-to-audio mapping: Converting visual patterns, images, or movements into sound

• Emotional mapping: Translating emotional states or narratives into musical expressions

• Cross-domain translation: Using linguistic structures to inform musical composition

These models often serve both artistic and analytical purposes, creating novel musical experiences while potentially revealing patterns in the source data that might not be apparent through visual or textual analysis.
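The data-sonification idea can be made concrete with the simplest kind of translational mapping. In the following Python sketch, each value in a numeric series is rescaled linearly and snapped to a degree of a C-major pentatonic scale. Several choices are illustrative assumptions rather than a method from the cited literature: the function name `sonify`, the scale, the two-octave range and the made-up temperature series.

```python
# Hypothetical direct mapping: each data value is scaled linearly onto the
# degrees of a C-major pentatonic scale spanning a chosen number of octaves.
PENTATONIC_C = [0, 2, 4, 7, 9]  # semitone offsets of C, D, E, G, A

def sonify(values, base_midi=60, octaves=2):
    """Map a numeric series to MIDI pitches (direct value-to-pitch mapping)."""
    lo, hi = min(values), max(values)
    span = (hi - lo) or 1.0                 # avoid division by zero for flat data
    n_steps = len(PENTATONIC_C) * octaves   # number of available scale degrees
    pitches = []
    for v in values:
        # normalize to [0, 1], then pick the nearest scale-degree index
        idx = round((v - lo) / span * (n_steps - 1))
        octave, degree = divmod(idx, len(PENTATONIC_C))
        pitches.append(base_midi + 12 * octave + PENTATONIC_C[degree])
    return pitches

# A short, made-up temperature series (not real data)
temps = [12.1, 13.4, 15.0, 14.2, 18.7, 21.3, 19.8]
print(sonify(temps))  # → [60, 62, 67, 64, 74, 81, 79]
```

Restricting the output to a pentatonic scale is one simple way to keep arbitrary data sounding consonant. A sonification aimed at analysis rather than art might instead use a continuous pitch mapping, so that small differences in the data remain audible.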

Mathematical models employ formal mathematical structures and operations to generate musical material. These approaches draw from various mathematical fields, including:

• Stochastic processes: Using probability distributions to make compositional decisions

• Fractal geometry: Generating self-similar patterns across different temporal scales

• Cellular automata: Creating evolving patterns based on simple rules and neighbor interactions

• Chaos theory: Exploring deterministic but unpredictable systems for musical material

• Combinatorial techniques: Systematically exploring permutations of musical elements

Mathematical models often produce music with distinctive structural properties that reflect their underlying mathematical principles, creating works that can range from highly ordered to seemingly random, depending on the specific mathematical approach employed.
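The cellular-automata approach listed above can be illustrated with a small example. The sketch below runs an elementary (one-dimensional, two-state) cellular automaton and reads each generation as one bar of a sixteen-step rhythm, with live cells as onsets. The use of Wolfram's rule 90, the single-cell seed and the bar-per-generation reading are assumptions chosen for clarity; other rules (e.g., rule 30) give less regular patterns.

```python
def step(cells, rule=90):
    """One update of an elementary cellular automaton with wrap-around edges;
    `rule` is the Wolfram rule number (0-255)."""
    n = len(cells)
    new = []
    for i in range(n):
        left, center, right = cells[i - 1], cells[i], cells[(i + 1) % n]
        neighborhood = (left << 2) | (center << 1) | right  # value 0..7
        new.append((rule >> neighborhood) & 1)               # look up the rule bit
    return new

def ca_rhythm(width=16, bars=4, rule=90):
    """Treat each generation as one bar of 16th-note steps: 1 = onset, 0 = rest."""
    cells = [0] * width
    cells[width // 2] = 1  # single seed cell
    pattern = []
    for _ in range(bars):
        pattern.append(cells)
        cells = step(cells, rule)
    return pattern

for bar in ca_rhythm():
    print("".join("x" if c else "." for c in bar))
# Output (rule 90, 4 bars):
# ........x.......
# .......x.x......
# ......x...x.....
# .....x.x.x.x....
```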

AI-based approaches use machine learning techniques to analyze existing music and generate new compositions that reflect or extend learned patterns. These methods include:

• Neural networks: Using various neural architectures to model musical sequences

• Transformer models: Leveraging attention mechanisms to capture long-range dependencies

• Generative adversarial networks (GANs): Creating music through competitive training processes

• Reinforcement learning: Optimizing composition through reward-based training

• Evolutionary algorithms: Developing musical solutions through simulated evolution

AI tools have gained significant prominence in recent years due to their ability to generate music that closely mimics human compositional styles while also producing novel variations that extend beyond direct imitation. The following sections will examine each of the three focal methods, i.e., translational models, mathematical models, and AI tools, in greater detail, exploring their specific implementations, applications, and contributions to the field of algorithmic music composition.

3. Translational models in algorithmic music composition

Translational models bridge non-musical domains (e.g., visual art, text, or scientific data) with musical parameters, framing cross-domain mapping as both a compositional technique and a mechanism for creative inspiration. Braga and Pinto argue that such mappings can "model inspiration" [7], reflecting cognitive theories of creativity that emphasize analogical thinking. The framework involves three stages: source domain analysis to extract features (e.g., shapes from sculptures or emotional valence from text), mapping strategies to establish meaningful correspondences (e.g., structural or semantic links), and musical realization to generate compositions that retain essential qualities of the source. This approach enables applications ranging from artistic expression to scientific data sonification.
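The three stages can be sketched in Python as three small functions. Here the "source" is a hypothetical list of heights sampled along a sculpture's profile. The contour-based features, the interval mapping and all names are illustrative assumptions and do not reproduce Braga and Pinto's system [7].

```python
from dataclasses import dataclass

@dataclass
class Note:
    pitch: int       # MIDI note number
    duration: float  # in beats

def extract_features(profile):
    """Stage 1 (source-domain analysis): reduce a sampled height profile to its
    contour, i.e. the direction (+1, 0, -1) and size of each successive change."""
    diffs = [b - a for a, b in zip(profile, profile[1:])]
    return [(1 if d > 0 else -1 if d < 0 else 0, abs(d)) for d in diffs]

def map_features(features, max_step=4):
    """Stage 2 (mapping): contour direction -> melodic direction;
    change magnitude -> interval in semitones, clipped to [1, max_step]."""
    return [direction * min(max_step, max(1, round(mag))) if direction else 0
            for direction, mag in features]

def realize(intervals, start_pitch=62, duration=0.5):
    """Stage 3 (musical realization): accumulate intervals into note events."""
    notes = [Note(start_pitch, duration)]
    for step in intervals:
        notes.append(Note(notes[-1].pitch + step, duration))
    return notes

profile = [0.0, 1.2, 3.5, 3.5, 2.1, 0.3]  # hypothetical heights (cm)
melody = realize(map_features(extract_features(profile)))
print([n.pitch for n in melody])  # → [62, 63, 65, 65, 64, 62]
```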

The process begins with feature extraction, where domain-specific attributes are identified—such as contours and textures in 3D sculptures [7], semantic content in text, or trends in datasets. These features inform mapping strategies, which can be direct (e.g., spatial height mapped to pitch), statistical, structural, or semantic. Recent advances explore bidirectional mappings, such as cross-modal systems where music influences non-musical outputs like text [8]. To refine outputs, algorithmic mediation techniques like constraint satisfaction, optimization, or machine learning are applied. For instance, Braga and Pinto used genetic algorithms to evolve musically coherent interpretations of sculptures while preserving source connections [7].
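The sketch below is not Braga and Pinto's method; their representation and fitness function are not reproduced here. It only illustrates the general idea of a genetic algorithm that trades source fidelity against musicality:

- Melodies are lists of scale-degree indices.
- Fidelity is the negative distance to a target contour derived from the source.
- "Musicality" is reduced to a hypothetical penalty on leaps larger than a third.

Population size, mutation rate and the penalty weight are arbitrary assumptions.

```python
import random

SCALE = [60, 62, 64, 65, 67, 69, 71, 72]  # C major, one octave (MIDI numbers)

def fitness(melody, target, leap_weight=2):
    """Higher is better. Melodies and targets are lists of indices into SCALE.
    Fidelity: negative distance from the target contour taken from the source.
    Musicality (a crude, hypothetical proxy): penalize leaps larger than a third."""
    fidelity = -sum(abs(m - t) for m, t in zip(melody, target))
    leaps = sum(1 for a, b in zip(melody, melody[1:]) if abs(a - b) > 2)
    return fidelity - leap_weight * leaps

def evolve(target, pop_size=40, generations=200, mutation_rate=0.1, seed=0):
    rng = random.Random(seed)
    n = len(target)
    pop = [[rng.randrange(len(SCALE)) for _ in range(n)] for _ in range(pop_size)]
    for _ in range(generations):
        pop.sort(key=lambda m: fitness(m, target), reverse=True)
        parents = pop[: pop_size // 2]        # truncation selection (elitist)
        children = []
        while len(children) < pop_size - len(parents):
            a, b = rng.sample(parents, 2)
            cut = rng.randrange(1, n)          # one-point crossover
            child = a[:cut] + b[cut:]
            # per-gene mutation: occasionally replace a degree at random
            child = [rng.randrange(len(SCALE)) if rng.random() < mutation_rate else g
                     for g in child]
            children.append(child)
        pop = parents + children
    best = max(pop, key=lambda m: fitness(m, target))
    return [SCALE[i] for i in best]

# Target contour as scale-degree indices, e.g. derived from a sculpture's profile
target = [0, 2, 4, 5, 4, 2, 1, 0]
print(evolve(target))
```

Raising `leap_weight` pushes results toward smoother, more conventional lines at the expense of fidelity to the source contour. This is a small-scale version of the tension between abstraction and recognizability that such systems must negotiate.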

In cross-domain art, systems like Braga and Pinto’s sculpture-to-music generator achieved 4/5 listener recognition of source-music relationships [7], demonstrating how abstract mappings (e.g., geometric contours to melodic contours) can create perceptible interdisciplinary links. Data sonification transforms datasets—environmental metrics or social trends—into auditory formats, balancing analytical utility with artistic expression. Interactive systems extend this to real-time contexts, such as biofeedback sonification (e.g., heart rate modulating harmony) or gesture-driven music generation, enabling dynamic, responsive compositions.
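A real-time biofeedback mapping can be as simple as a function called on each new sensor reading. In this hypothetical Python sketch, heart rate drives tempo directly and selects one of three chords by arousal band. The thresholds (70 and 100 bpm), the tempo clamp and the chord voicings are assumptions for illustration, not values from a published system.

```python
# Hypothetical arousal bands mapped to chord voicings (MIDI note numbers)
CHORDS = {
    "calm":    [57, 60, 64],      # A minor triad (A3 C4 E4)
    "neutral": [60, 64, 67],      # C major triad (C4 E4 G4)
    "aroused": [62, 65, 69, 72],  # D minor seventh (D4 F4 A4 C5), denser voicing
}

def map_heart_rate(bpm):
    """Return (tempo, chord) for one heart-rate reading in beats per minute."""
    tempo = max(60, min(160, bpm))  # the musical pulse follows the heart, clamped
    if bpm < 70:
        state = "calm"
    elif bpm < 100:
        state = "neutral"
    else:
        state = "aroused"
    return tempo, CHORDS[state]

for reading in [62, 85, 120]:
    print(reading, map_heart_rate(reading))
```

In a live system the readings would usually be smoothed (e.g., with a moving average) before mapping, so that momentary sensor noise does not cause rapid chord flicker.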

Success hinges on dual criteria: musical quality and source fidelity. Braga and Pinto’s evaluation highlighted the tension between abstraction and recognizability, using genetic algorithms to enhance musicality without erasing source connections [7]. Aesthetic challenges include balancing literal representation with creative reinterpretation, a negotiation between technical mapping accuracy and artistic intent. Advances in AI, particularly deep learning, enable sophisticated feature extraction and style-aware generation. Emerging trends include bidirectional mappings (e.g., music-to-visual feedback) and multimodal systems that combine multiple domains. Future research may focus on personalized mappings tailored to individual perception or collaborative human-AI systems that blend algorithmic precision with human creativity. As computational tools evolve, translational models are poised to expand algorithmic composition’s role in interdisciplinary exploration.

4. Mathematical models in algorithmic music composition

Mathematical models in algorithmic composition use formal structures, from stochastic processes to quantum mechanics, as generative engines, building on historical foundations like Pythagorean theory. As Yang and Lee emphasize, these models unlock creative potential, with quantum principles offering "unprecedented opportunities for music creation" [9]. The process involves selecting a mathematical framework (e.g., fractals or probability distributions), mapping its variables to musical parameters (pitch, rhythm), generating material through mathematical operations, and applying musical constraints to ensure coherence.
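The four steps above can be traced in a short Python sketch:

1. It chooses the logistic map, a standard example of deterministic chaos, as the mathematical framework.
2. It maps each iterate in [0, 1] onto a MIDI range.
3. It generates material by iterating the map.
4. It applies a simple musical constraint by snapping every value to the nearest C-major scale tone.

The parameter values (r = 3.9, the starting value and the pitch range) are illustrative assumptions.

```python
MAJOR = [0, 2, 4, 5, 7, 9, 11]  # pitch classes of the C-major scale

def logistic_orbit(r=3.9, x0=0.2, n=16):
    """Steps 1 and 3: the chosen framework is the logistic map
    x_{k+1} = r * x_k * (1 - x_k); iterating it generates the raw material."""
    xs, x = [], x0
    for _ in range(n):
        x = r * x * (1 - x)
        xs.append(x)
    return xs

def to_pitches(xs, low=55, high=79):
    """Step 2: map each value in [0, 1] linearly onto the MIDI range [low, high].
    Step 4: apply a musical constraint by snapping to the nearest scale tone."""
    scale_tones = [p for p in range(low, high + 1) if p % 12 in MAJOR]
    pitches = []
    for x in xs:
        raw = low + x * (high - low)
        pitches.append(min(scale_tones, key=lambda p: abs(p - raw)))
    return pitches

print(to_pitches(logistic_orbit()))
```

The same starting value always reproduces the same melody. Because the map is chaotic at r = 3.9, however, different starting values quickly lead to unrelated melodies, which is the "deterministic but unpredictable" character noted for chaos-based models.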

Stochastic and probability models employ statistical distributions to balance predictability and surprise. A recent study uses skewed distributions to clarify "the effect of pitch distributions on music perception" [10]. Fractal and chaos-based approaches leverage self-similarity and deterministic unpredictability, translating geometric dimensions into rhythmic or melodic structures. Combinatorial and set-theoretical models extend twelve-tone techniques through systematic operations such as permutations or group-theoretic transformations. Quantum computing models, in turn, exploit superposition and entanglement for novel sonic outcomes. For instance, quantum bits enable "complex musical patterns and sound effects" [9], as demonstrated in Eduardo Miranda's Spinnings (Q1Synth Trio), a landmark quantum computer music work.
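The combinatorial operations behind twelve-tone technique are compact enough to state directly. The Python sketch below represents a row as pitch classes 0 to 11 (C = 0). It derives the prime (P), inversion (I), retrograde (R) and retrograde-inversion (RI) forms, optionally transposed. Together with the twelve transpositions, these operations generate a group of 48 transformations acting on rows, which is the link to group theory mentioned above. The example row is arbitrary.

```python
def transpose(row, n):
    """Shift every pitch class up by n semitones (mod 12)."""
    return [(p + n) % 12 for p in row]

def invert(row):
    """Mirror every interval around the row's first pitch class."""
    first = row[0]
    return [(2 * first - p) % 12 for p in row]

def retrograde(row):
    return list(reversed(row))

def row_forms(row, n=0):
    """The four basic forms P, I, R and RI, transposed to start on pitch class row[0] + n."""
    p = transpose(row, n)
    i = invert(p)
    return {"P": p, "I": i, "R": retrograde(p), "RI": retrograde(i)}

# An arbitrary twelve-tone row (pitch classes, C = 0), chosen for illustration
row = [0, 11, 3, 4, 8, 7, 9, 5, 6, 1, 2, 10]
for name, form in row_forms(row).items():
    print(f"{name:>2}: {form}")
```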

Modern tools like Max/MSP and quantum algorithms have democratized access to these models. Notable applications include quantum music compositions, where quantum circuits generate "unique music through quantum computing characteristics" [9], and stochastic systems refined by skewed distributions that offer composers nuanced control over probabilistic outputs [10]. Fractal-based implementations often map iterative geometric transformations to evolving harmonic progressions, while combinatorial models algorithmically explore vast musical possibility spaces.
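The shape of a distribution can act as a compositional control. The sketch below draws pitches from a Beta distribution rescaled to a MIDI range and measures the sample skewness. It does not reproduce the method of the cited study [10]. The Beta distribution, its parameters and the pitch range are assumptions, chosen because the two shape parameters give a composer a single, intuitive skew control.

```python
import random
import statistics

def skewed_pitches(n, alpha, beta, low=48, high=84, seed=None):
    """Draw n MIDI pitches from a Beta(alpha, beta) distribution rescaled to
    [low, high]. alpha < beta piles notes into the low register (positive skew),
    alpha > beta into the high register (negative skew), alpha == beta is symmetric."""
    rng = random.Random(seed)
    return [round(low + rng.betavariate(alpha, beta) * (high - low)) for _ in range(n)]

def sample_skewness(xs):
    """Population (Fisher-Pearson) skewness g1 = m3 / m2**1.5."""
    mean = statistics.fmean(xs)
    m2 = statistics.fmean([(x - mean) ** 2 for x in xs])
    m3 = statistics.fmean([(x - mean) ** 3 for x in xs])
    return m3 / m2 ** 1.5

low_heavy = skewed_pitches(2000, alpha=2, beta=6, seed=1)
symmetric = skewed_pitches(2000, alpha=4, beta=4, seed=1)
print(round(sample_skewness(low_heavy), 2), round(sample_skewness(symmetric), 2))
```

With α = 2 and β = 6, most pitches cluster in the low register with occasional high outliers. This gives a clearly positive skewness (about 0.7 for the underlying Beta distribution). With α = β the distribution is symmetric and the sample skewness stays close to zero.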

Success hinges on balancing mathematical rigor with musical engagement. Internal coherence—ensuring mathematical relationships translate to meaningful musical structures—is critical. Aesthetic evaluation extends beyond traditional metrics; Yang and Lee note quantum models create "new possibilities for integrating music, art, and technology" [9], emphasizing interdisciplinary innovation. The interplay between predictability (e.g., fractal self-similarity) and surprise (e.g., chaotic deviations) often defines the listener’s experience [11].
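Self-similarity can be made audible with a Lindenmayer system (L-system), one of the geometric models listed in Table 1. In the hypothetical Python sketch below, the single rewriting rule F → F+F-F is applied repeatedly. The resulting string is then read as a melody: "F" plays a note, while "+" and "-" move the pitch up or down a whole tone. The rule, the step size and this musical reading are illustrative assumptions. The `depth` argument is the kind of recursion depth that an interactive system could expose as a complexity control.

```python
def expand(axiom, rules, depth):
    """Rewrite every symbol in parallel `depth` times (a deterministic L-system)."""
    s = axiom
    for _ in range(depth):
        s = "".join(rules.get(ch, ch) for ch in s)
    return s

def interpret(s, start=60, step=2):
    """Hypothetical musical reading: 'F' plays the current pitch; '+' / '-'
    move the current pitch up / down by `step` semitones."""
    pitch, notes = start, []
    for ch in s:
        if ch == "F":
            notes.append(pitch)
        elif ch == "+":
            pitch += step
        elif ch == "-":
            pitch -= step
    return notes

rules = {"F": "F+F-F"}  # each note spawns a small rise-and-return figure
for depth in range(3):
    print(depth, interpret(expand("F", rules, depth)))
```

At depth 2 the melody is 60, 62, 60, 62, 64, 62, 60, 62, 60. The three-note rise-and-return figure appears both note by note and at the level of the three groups, which is the predictable self-similarity described above.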

Hybrid approaches merge mathematical models with machine learning, for example by training stochastic systems on existing music so that their probability distributions adapt dynamically. Interactive implementations enable real-time manipulation of parameters, e.g., adjusting fractal recursion depth to modulate musical complexity during performances. Interdisciplinary applications bridge music with fields like data science, using mathematical abstractions to sonify complex datasets or drive generative visual-musical installations.

Table 1 and Table 2 illustrate the diverse mathematical frameworks employed in algorithmic composition, highlighting both traditional approaches like stochastic processes and emerging fields like quantum computing. Each branch represents a distinct mathematical paradigm with its own compositional affordances and aesthetic implications. From stochastic traditions to quantum frontiers, mathematical models continue to redefine algorithmic composition. As Yang and Lee assert, these frameworks are "poised to contribute a distinctive dimension to global musical culture" [9], blending computational precision with artistic exploration to expand creative boundaries.

Table 1: Taxonomy of mathematical models in algorithmic music composition: core approaches

| Stochastic Processes | Geometric Models | Combinatorial Models |
|---|---|---|
| Markov Chains | Fractal Models | Set Theory |
| Probability Distributions | Chaos Theory | Permutation Operations |
| Random Walks | L-Systems | Group Theory |

Table 2: Taxonomy of mathematical models in algorithmic music composition: advanced extensions

| Quantum Computing | Hybrid Approaches |
|---|---|
| Superposition | Stochastic Neural Nets |
| Entanglement | Evolutionary Mathematics |
| Quantum Algorithms | Quantum-Classical |
| Quantum Gates | |
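This section describes a hybrid idea: a stochastic model whose probabilities are learned from existing music. It can be sketched with a first-order Markov chain over pitches, as below. The toy corpus, function names and seed are assumptions. Passing an existing table back into `train` together with new melodies updates the transition counts, which is one simple way a distribution could adapt over time.

```python
import random
from collections import defaultdict

def train(melodies, counts=None):
    """Count first-order pitch transitions; pass an existing table to keep
    adapting it as new material arrives."""
    if counts is None:
        counts = defaultdict(lambda: defaultdict(int))
    for mel in melodies:
        for a, b in zip(mel, mel[1:]):
            counts[a][b] += 1
    return counts

def generate(counts, start, length, seed=None):
    """Sample a melody; each next pitch is drawn in proportion to how often
    it followed the current pitch in the training data."""
    rng = random.Random(seed)
    melody = [start]
    while len(melody) < length:
        followers = counts.get(melody[-1])
        if not followers:  # dead end: no observed continuation
            break
        pitches, weights = zip(*followers.items())
        melody.append(rng.choices(pitches, weights=weights)[0])
    return melody

corpus = [[60, 62, 64, 65, 67, 65, 64, 62, 60],
          [67, 65, 64, 62, 64, 65, 67]]  # toy melodies (MIDI)
model = train(corpus)
print(generate(model, start=60, length=12, seed=3))
```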

5. AI tools in algorithmic music composition

AI tools revolutionize algorithmic composition by learning musical patterns directly from data rather than relying on predefined rules. As Chen et al. note, these systems "drive innovation in music creation" through machine learning techniques that analyze and replicate stylistic nuances [11]. Core components include data representation (e.g., MIDI or audio formats), model architectures (neural networks), training processes (exposure to musical datasets), and generation mechanisms that produce novel compositions. This data-driven approach captures subtle musical relationships difficult to codify manually.
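The data-representation component can be made concrete by converting note events into a flat token sequence of the kind a sequence model can be trained on. This simplified NOTE_ON / NOTE_OFF / TIME_SHIFT vocabulary, the tick-based timing and the class and function names are assumptions for illustration. Practical systems use richer vocabularies (e.g., velocity tokens) and many alternative encodings.

```python
from dataclasses import dataclass

@dataclass
class NoteEvent:
    pitch: int  # MIDI note number
    start: int  # onset, in ticks (e.g. 16th-note steps)
    end: int    # offset, in ticks

def tokenize(notes):
    """Flatten notes into NOTE_ON / NOTE_OFF / TIME_SHIFT tokens (a simplified
    event-based representation for sequence models)."""
    events = []
    for n in notes:
        events.append((n.start, 1, f"NOTE_ON_{n.pitch}"))
        events.append((n.end, 0, f"NOTE_OFF_{n.pitch}"))
    events.sort()  # by time; at equal times NOTE_OFF (0) sorts before NOTE_ON (1)
    tokens, now = [], 0
    for time, _, name in events:
        if time > now:
            tokens.append(f"TIME_SHIFT_{time - now}")
            now = time
        tokens.append(name)
    return tokens

melody = [NoteEvent(60, 0, 4), NoteEvent(64, 4, 8), NoteEvent(67, 4, 8)]
print(tokenize(melody))
# → ['NOTE_ON_60', 'TIME_SHIFT_4', 'NOTE_OFF_60', 'NOTE_ON_64', 'NOTE_ON_67',
#    'TIME_SHIFT_4', 'NOTE_OFF_64', 'NOTE_OFF_67']
```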

Recurrent Neural Networks (RNNs), particularly LSTMs, excel at modeling temporal sequences like melodies by maintaining context across notes, as Liang highlights in their survey of machine learning applications in music [12]. Transformer architectures leverage attention mechanisms to process long-range dependencies, enabling coherent large-scale structures, a shift Mycka and Mańdziuk link to rising interest in AI’s creative potential [5]. Generative Adversarial Networks (GANs) pit generators against discriminators to produce realistic audio, while Variational Autoencoders (VAEs) learn latent spaces for controlled style interpolation. For instance, VAEs allow composers to morph between genres by manipulating encoded features.
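The attention mechanism that lets transformers model long-range dependencies can be written in a few lines of NumPy. The sketch below computes causal scaled dot-product attention, softmax(QKᵀ/√d_k)V, over six random stand-in "note embeddings". It omits learned projections, multiple heads and positional encodings, so it shows only the core operation, not a working music transformer. Because every position can attend directly to any earlier one, the distance between two notes does not limit how strongly they can interact. An RNN, by contrast, must carry that information forward step by step in its hidden state.

```python
import numpy as np

def attention(Q, K, V, causal=True):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V.
    With causal=True each position attends only to itself and earlier
    positions, as in autoregressive generation."""
    d_k = Q.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)                         # (T, T) similarities
    if causal:
        T = scores.shape[0]
        future = np.triu(np.ones((T, T), dtype=bool), k=1)  # positions after i
        scores = np.where(future, -np.inf, scores)
    scores = scores - scores.max(axis=-1, keepdims=True)    # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)          # each row sums to 1
    return weights @ V, weights

rng = np.random.default_rng(0)
T, d = 6, 8                    # 6 note tokens, 8-dimensional embeddings
x = rng.normal(size=(T, d))    # stand-in for learned token embeddings
out, w = attention(x, x, x)    # self-attention without learned projections
print(np.round(w, 2))
```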

Data representation choices balance symbolic precision (MIDI’s note events) with audio fidelity (waveforms capturing timbre). Training methodologies like transfer learning adapt pre-trained models to niche genres, while reinforcement learning steers outputs toward desired traits, such as emotional valence. Chen et al. emphasize AI’s versatility in handling both symbolic and audio data, enabling applications ranging from classical pastiche to experimental sound design [11].
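The contrast between symbolic and audio representations becomes visible when a few MIDI note numbers are rendered as a waveform. The sketch assumes standard equal temperament with A4 (MIDI 69) at 440 Hz, i.e. f = 440 · 2^((n − 69)/12). It also uses plain sine tones and an arbitrary sample rate; real audio models work with recorded or synthesized timbres rather than sine waves.

```python
import math

SAMPLE_RATE = 22050  # samples per second; an arbitrary choice for this sketch

def midi_to_hz(pitch):
    """Twelve-tone equal temperament with A4 (MIDI 69) tuned to 440 Hz."""
    return 440.0 * 2 ** ((pitch - 69) / 12)

def render(notes, seconds_per_note=0.25, amplitude=0.3):
    """Render MIDI note numbers as consecutive sine tones (a list of samples)."""
    n = int(SAMPLE_RATE * seconds_per_note)
    samples = []
    for pitch in notes:
        f = midi_to_hz(pitch)
        samples.extend(amplitude * math.sin(2 * math.pi * f * i / SAMPLE_RATE)
                       for i in range(n))
    return samples

melody = [60, 64, 67, 72]  # four symbols: a C-major arpeggio
wave = render(melody)      # about one second of audio as individual samples
print(round(midi_to_hz(60), 2), len(melody), len(wave))  # → 261.63 4 22048
```

Four integers become more than twenty thousand samples. This gap in sequence length is one reason symbolic models can capture longer musical structures more cheaply, while audio models capture timbre that MIDI cannot express.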

One key application is style transfer, where AI models emulate specific composers or genres, aiding creative exploration and music education. Interactive composition tools, such as AI-powered DAW plugins, suggest harmonies or variations in real time, augmenting human creativity rather than replacing it. In gaming and interactive media, adaptive scores dynamically shift based on player actions or narrative cues, enhancing immersion. Liang draws parallels to recommendation systems, where similar algorithms predict user listening preferences [12].

Assessment combines subjective listening tests (e.g., human-rated authenticity) with objective metrics like tonal consistency. Mycka and Mańdziuk underscore ethical challenges, including copyright disputes and cultural appropriation risks inherent in training data sourced from diverse musical traditions [5].
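Objective metrics vary between studies. One simple, hypothetical tonal-consistency measure reports the fraction of notes that fit the best-matching major scale across all twelve transpositions, as sketched below. It ignores minor modes, note durations and stylistically appropriate chromaticism, so it is a crude proxy rather than a standard benchmark.

```python
MAJOR_STEPS = {0, 2, 4, 5, 7, 9, 11}
NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]

def tonal_consistency(pitches):
    """Return (key, score): the major key whose scale contains the largest
    fraction of the given MIDI pitches, and that fraction (1.0 = fully diatonic)."""
    if not pitches:
        raise ValueError("need at least one pitch")
    best_key, best = None, -1.0
    for tonic in range(12):
        in_key = sum(1 for p in pitches if (p - tonic) % 12 in MAJOR_STEPS)
        score = in_key / len(pitches)
        if score > best:  # ties keep the earlier tonic
            best_key, best = NAMES[tonic], score
    return best_key, best

print(tonal_consistency([60, 62, 64, 65, 67, 69, 71, 72]))  # → ('C', 1.0)
print(tonal_consistency([60, 61, 62, 63, 64, 65]))          # → ('C', 0.6666666666666666)
```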

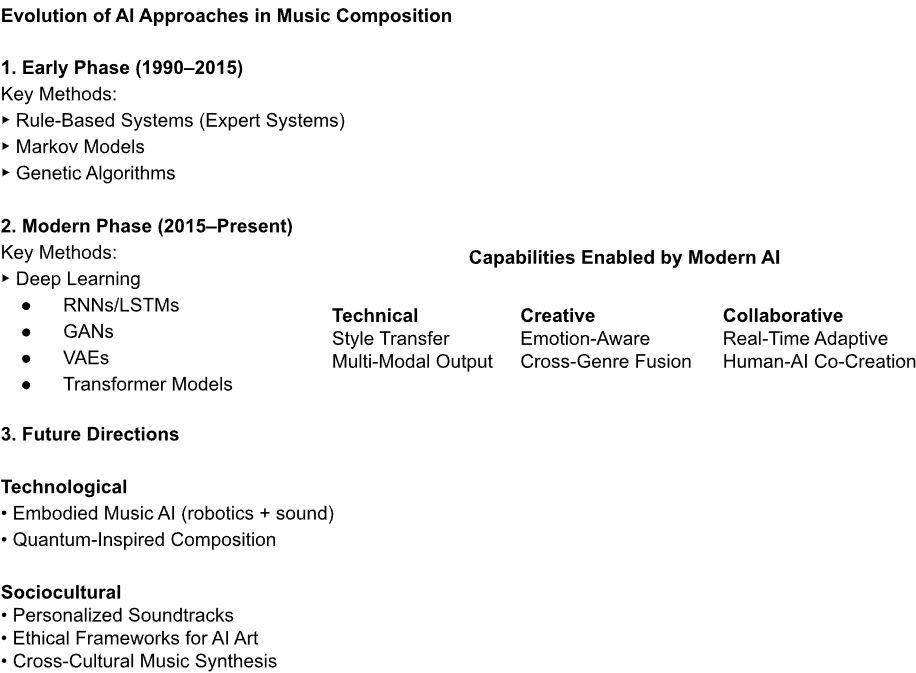

Emerging trends include multimodal systems that generate music from text prompts (e.g., “joyful orchestral piece”) or visual inputs, bridging auditory and visual creativity. Controllable generation techniques allow users to guide outputs via high-level parameters, such as adjusting “tension” or invoking “retro synthwave” aesthetics. Concurrently, researchers are developing ethical frameworks to address dataset biases and ensure fair attribution in AI-human collaborations. Figure 1 illustrates the evolution of AI approaches in music composition, from early rule-based systems to modern deep learning architectures. It highlights the expanding capabilities of these systems and points to future directions that emphasize collaboration, personalization, and ethical considerations. The transition from the early phase to the modern phase represents a paradigm shift from explicitly programmed rules to data-driven learning, enabling more sophisticated and nuanced musical generation. AI tools expand algorithmic composition’s creative scope, from emulating traditions to pioneering new forms. As Chen et al. assert, they "offer fresh perspectives on musical expression" while challenging notions of authorship [12]. Future advancements will likely prioritize user control, ethical practices, and seamless human-AI partnerships, reshaping music’s cultural and technical landscapes.

Figure 1: Evolution of AI approaches in music composition
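Sampling temperature is one common way to expose a high-level control over a generative model. It rescales the model's next-token scores before sampling, which gives a concrete picture of the controllable generation discussed in this section. The Python sketch below uses made-up scores for four candidate pitches. Treating temperature as a stand-in for a user-facing "tension" or "surprise" slider is an illustrative assumption, not a description of any particular system.

```python
import math
import random

def sample_with_temperature(logits, temperature, rng):
    """Draw an index from softmax(logits / temperature) and return it with the
    probabilities. Low temperature sharpens the distribution toward the top
    score (more predictable); high temperature flattens it (more surprising)."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [z / temperature for z in logits]
    top = max(scaled)
    weights = [math.exp(s - top) for s in scaled]  # numerically stable softmax
    total = sum(weights)
    probs = [w / total for w in weights]
    return rng.choices(range(len(logits)), weights=probs)[0], probs

# Hypothetical model scores for four candidate next pitches
candidates = [60, 62, 64, 66]
logits = [2.0, 1.0, 0.5, -1.0]
rng = random.Random(0)
for t in (0.5, 1.0, 2.0):
    idx, probs = sample_with_temperature(logits, t, rng)
    print(t, candidates[idx], [round(p, 2) for p in probs])
```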

6. Comparison, limitations and prospects

The three algorithmic composition methods, i.e., translational models, mathematical models, and AI tools, diverge in their conceptual foundations, technical demands, and creative outputs. Translational models are rooted in cross-domain mapping, establishing systematic connections between non-musical sources (e.g., visual art, text) and musical parameters. For instance, Braga and Pinto’s sculpture-to-music system [7] demonstrates how listeners perceive clear relationships between 3D forms and generated compositions, emphasizing multi-layered, interdisciplinary experiences. In contrast, mathematical models rely on formal structures such as stochastic processes or quantum computing to drive generation. Yang and Lee’s quantum music work [9], for example, leverages principles like superposition to produce patterns that defy traditional compositional logic, prioritizing structural elegance over external inspiration. AI tools, however, bypass explicit rules altogether, learning stylistic nuances directly from data. As Chen et al. highlight, their adaptability to both symbolic (MIDI) and audio data enables outputs ranging from classical pastiche to experimental soundscapes, blurring the line between imitation and innovation [11].

Translational models demand moderate technical skill, focusing on the design of intuitive mappings (e.g., color-to-timbre) rather than computational complexity. Mathematical models vary widely in accessibility: basic stochastic processes (e.g., Markov chains) are approachable for novices, but advanced frameworks like quantum computing, exemplified by Miranda’s Spinnings (Q1Synth Trio) [9], require expertise in niche domains. AI tools, meanwhile, necessitate substantial resources, including curated datasets and GPU power, as well as machine learning proficiency. While pre-trained models have lowered entry barriers, fine-tuning outputs for artistic intent remains resource-intensive.

Translational models grant creators high control through explicit mappings (e.g., sculptural contours shaping melody), though results hinge on the richness of the source material. Their aesthetic appeal lies in cross-modal associations, inviting listeners to “hear” visual or textual narratives. Mathematical models balance determinism and chaos: combinatorial systems (e.g., group theory) enable precise rule-based composition, while fractal or chaos-driven systems embrace unpredictability, yielding outputs with mathematical beauty like self-similar rhythms. AI tools, historically criticized for opaque decision-making, are evolving toward greater controllability. Techniques like reinforcement learning now allow parameter-driven steering (e.g., adjusting “emotional valence”), enabling outputs that straddle stylistic mimicry and human-like creativity, as seen in recent systems [12].

Translational models face challenges in balancing creativity and fidelity. Designing cross-domain mappings (e.g., sculpture textures to musical motifs) is inherently subjective, often requiring iterative refinement, as seen in Braga and Pinto’s use of genetic algorithms to optimize musicality [7]. Even with systematic approaches, a semantic gap persists: abstract concepts like color or texture may lose their essence when translated into sound, risking oversimplification. Furthermore, evaluating these systems demands dual criteria, assessing both musical coherence and fidelity to the source material, which is a nuanced task that lacks standardized metrics [7].

Mathematical models, while structurally rigorous, struggle with aesthetic flexibility. Over-reliance on formulas can produce compositions perceived as mechanical or emotionally sterile, prioritizing logical elegance over expressive depth. Accessibility is another barrier: basic stochastic methods (e.g., Markov chains) are widely adoptable, but advanced frameworks like quantum computing require specialized expertise, limiting their broader artistic utility even though they can generate novel patterns [9]. Additionally, outputs may clash with traditional musical conventions, such as harmony rules, creating tension between mathematical innovation and listener expectations.

AI tools, despite their versatility, grapple with ethical and technical constraints. Data bias remains pervasive: systems trained on Western classical or pop datasets often underrepresent non-Western genres, perpetuating cultural homogenization. The opacity of "black-box" models further complicates creative control, as artists cannot easily trace how inputs (e.g., emotional valence parameters) translate to outputs [12]. Ethical concerns also loom large, particularly around copyright (e.g., AI-generated music mimicking copyrighted styles) and cultural appropriation, as highlighted by Mycka and Mańdziuk’s analysis of uncredited folkloric motif usage [5].

Translational models hold promise through adaptive mapping systems that dynamically adjust cross-domain correspondences in real time, responding to shifts in source material (e.g., evolving visual inputs) or user feedback. Further enrichment could arise from multimodal integration, where combined domains (e.g., text and image) collaboratively inform musical parameters, deepening interdisciplinary resonance. For instance, a system might blend poetic meter and brushstroke dynamics to shape rhythm and timbre, offering more layered creative outputs.

Mathematical models are poised to bridge innovation and accessibility. Hybrid systems merging quantum principles (e.g., entanglement-driven harmony) with traditional structures like counterpoint could unlock novel expressive frontiers while retaining structural coherence. Simultaneously, user-friendly interfaces, such as fractal rhythm generators with intuitive sliders, could democratize advanced mathematics. Such interfaces would let composers without STEM expertise harness chaos theory or L-systems for organic, algorithmically textured works.

AI tools face dual imperatives: enhancing creative control and addressing ethical gaps. Advances in controllable generation may enable artists to fine-tune outputs through granular parameters (e.g., “increase polyphony” or “dissonance threshold”), marrying AI’s data-driven pattern recognition with human intentionality. Meanwhile, robust ethical frameworks could mitigate risks flagged by scholars [5], fostering equitable and transparent AI-aided composition. Examples include attribution protocols for training data (e.g., crediting cultural heritage samples) and bias audits for underrepresented genres.

The most promising frontier in algorithmic composition lies in the strategic integration of translational, mathematical, and AI-driven approaches, unlocking novel creative and technical potential. Hybrid systems exemplify this synergy. AI could dynamically optimize parameters in mathematical models (e.g., tuning chaos-theory-driven rhythms for emotional impact) or interpret ambiguous translational mappings (e.g., converting abstract poetry to melody). Chen et al.’s AI-augmented sonification framework, which transforms ecological data into musically coherent works, demonstrates this kind of integration [11].

Collaborative workflows further bridge human and machine creativity, positioning AI and mathematical systems as “creative partners” rather than mere tools. Composers might iteratively guide quantum algorithms to explore harmonic spaces or refine AI-generated motifs through intuitive interfaces, blending human intuition with computational scale. This mirrors collaborative art-science projects where human curation shapes algorithmic outputs into emotionally resonant narratives.

Beyond music, cross-disciplinary applications hint at broader cultural and scientific impacts. Quantum-generated compositions could drive adaptive visual installations in real time, creating immersive audiovisual ecosystems. AI models, for their part, might translate environmental sensor data (e.g., forest soundscapes) into therapeutic music for mental health interventions. Such integrations not only expand artistic possibilities but also deepen computational models’ responsiveness to human and ecological contexts.

Each method offers unique strengths: translational models bridge disciplines, mathematical models unveil structural elegance, and AI tools democratize stylistic versatility. Their limitations (technical complexity, opacity, ethical gaps) highlight areas for refinement. Future progress hinges on integrating these paradigms, fostering tools that balance computational power with artistic intuition. As Yang and Lee posit [9], such synthesis could redefine creativity itself, blurring lines between human and algorithmic authorship in music’s evolving landscape.

7. Conclusion

To sum up, this research paper has examined three distinct approaches to algorithmic music composition: translational models, mathematical models, and AI tools. Each method represents a unique paradigm for leveraging computational processes in musical creation, with its own conceptual foundations, technical requirements, and aesthetic characteristics. Translational models establish meaningful connections between non-musical domains and musical parameters, creating compositions that reflect patterns and structures from diverse sources. Mathematical models harness the inherent order and complexity of mathematical structures to generate musical material with distinctive organizational principles. AI tools learn patterns directly from musical data, capturing nuanced stylistic elements and generating compositions that can reflect or extend these learned patterns.

The comparative analysis has revealed that these approaches are not merely competing alternatives but complementary methodologies that illuminate different aspects of the relationship between computation and musical creativity. Each offers unique advantages while facing specific limitations in areas such as control, accessibility, and aesthetic flexibility.

Looking forward, the most promising direction appears to be the integration of these different approaches, combining the cross-domain connections of translational models, the structural elegance of mathematical approaches, and the pattern-learning capabilities of AI tools. Such hybrid systems could leverage the strengths of each method while mitigating their individual limitations. As algorithmic composition continues to evolve, these methods have the potential not only to generate new forms of musical expression but also to deepen the understanding of creativity itself. By exploring the computational dimensions of music creation, one gains new perspectives on how music can be conceived, structured, and experienced in the digital age, expanding the creative possibilities available to composers and enriching the musical landscape.

References

[1]. Fernández, J.D., and Vico, F. (2013) AI methods in algorithmic composition: A comprehensive survey. Journal of Artificial Intelligence Research, 48, 513-582.

[2]. Nierhaus, G. (2009) Algorithmic composition: Paradigms of automated music generation. Springer Science & Business Media.

[3]. Carnovalini, F., and Rodà, A. (2020) Computational creativity and music generation systems: An introduction to the state of the art. Frontiers in Artificial Intelligence, 3, 14.

[4]. Papadopoulos, G., and Wiggins, G. (1999) AI methods for algorithmic composition: A survey, a critical view and future prospects. In AISB Symposium on Musical Creativity, 110-117.

[5]. Mycka, J., and Mańdziuk, J. (2024) Artificial intelligence in music: recent trends and challenges. Neural Computing and Applications, 37, 801-839.

[6]. Anselmo, C. J., and Pendergrass, M. (2019) Algorithmic composition: The music of mathematics. H-SC Journal of Sciences, 8.

[7]. Braga, F., and Pinto, H. S. (2022) Composing Music Inspired by Sculpture: A Cross-Domain Mapping and Genetic Algorithm Approach. Entropy, 24(4), 468.

[8]. Mao, Z., Zhao, M., Wu, Q., Zhong, Z., Liao, W.H., Wakaki, H. and Mitsufuji, Y. (2025) Cross-Modal Learning for Music-to-Music-Video Description Generation. arXiv preprint arXiv:2503.11190.

[9]. Yang, W., and Lee, J. (2024) The application of quantum computing in music composition. Online Journal of Music Sciences, 9(2), 415-429.

[10]. Anonymous. (2024) Musical composition based on skewed statistical distributions of stochastic processes. Cogent Arts & Humanities, 11(1), 2351656.

[11]. Chen, Y., Huang, L., and Gou, T. (2024) Applications and Advances of Artificial Intelligence in Music Generation: A Review. arXiv preprint arXiv:2409.03715.

[12]. Liang, J. (2023) Harmonizing minds and machines: survey on transformative power of machine learning in music. Frontiers in Neurorobotics, 17, 1267561.

Cite this article

Lu, J. (2025). Comparison of Algorithmic Music Composition: Translational Models, Mathematical Models, and AI Tools. Applied and Computational Engineering, 160, 45-54.

Data availability

The datasets used and/or analyzed during the current study will be available from the authors upon reasonable request.

Disclaimer/Publisher's Note

The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of EWA Publishing and/or the editor(s). EWA Publishing and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

About volume

Volume title: Proceedings of CONF-SEML 2025 Symposium: Machine Learning Theory and Applications

© 2024 by the author(s). Licensee EWA Publishing, Oxford, UK. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license. Authors who publish in this series agree to the following terms:

1. Authors retain copyright and grant the series right of first publication with the work simultaneously licensed under a Creative Commons Attribution License that allows others to share the work with an acknowledgment of the work's authorship and initial publication in this series.

2. Authors are able to enter into separate, additional contractual arrangements for the non-exclusive distribution of the series's published version of the work (e.g., post it to an institutional repository or publish it in a book), with an acknowledgment of its initial publication in this series.

3. Authors are permitted and encouraged to post their work online (e.g., in institutional repositories or on their website) prior to and during the submission process, as it can lead to productive exchanges, as well as earlier and greater citation of published work (see the Open Access Policy for details).
