1. Introduction

In recent years, mental health problems have become a major public health challenge worldwide. According to the World Health Organization (WHO) [1], about 300 million people worldwide suffer from depression. WHO experts further predict that, if current trends continue, the number of people with depression could exceed the number of people with cardiovascular disease by 2030, making depression a major cause of disability worldwide.

Early screening, however, faces two challenges: depression is difficult to identify, and detection carries high technical barriers. Traditional diagnostic methods rely on clinicians' subjective judgment and on rating-scale assessment of patients' external manifestations [2]. This subjective evaluation model has limitations, especially for patients with hidden depression who deliberately conceal their negative emotions [3]. Studies have shown that when people become aware of their mental state, their depressive symptoms can be significantly alleviated [4].

The emergence of deep learning offers a way to address this problem. In recent years, a variety of neural network algorithms have been applied to emotion recognition, where their data processing and pattern recognition capabilities have opened up a wide range of applications [5]. For example, Priyadarshani et al. explored machine learning classifiers and proposed a high-accuracy EEG-LSTM model that outperformed a more basic machine learning model [6]. Ramzan et al. fused multiple classification models and achieved good results on EEG data [7]. However, although EEG emotion recognition shows great potential for mental health monitoring, most current research focuses on algorithm optimization and falls short in device convenience and intelligent deployment [8]. Huang et al. have begun to consider applications on resource-constrained portable/wearable (P/W) devices and have studied real-time EEG signal denoising [9], but the deployment of the corresponding classification models has not yet been fully explored.

To address the above challenges, this study constructed an STM32 microcontroller-based EEG deep learning emotion recognition system to extend depression screening from laboratory settings to daily life environments. The combined software and hardware microcontroller application makes the mood recognizer an affordable and convenient device to monitor the user's mood in near real time.

2. Methodology

2.1. Overall framework design

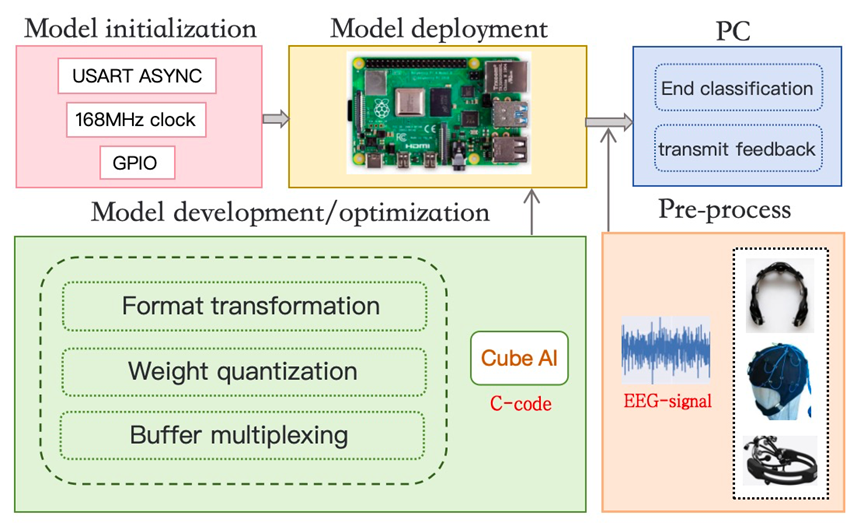

This study adopts a hybrid framework that combines model reprocessing with embedded optimization. The aim is to transform an existing CNN-based emotion classifier into a lightweight model that can be deployed on a resource-constrained microcontroller such as the STM32. The proposed framework processes EEG emotion signals in real time and returns a classification result within a short time.

2.2. Dataset acquisition

In this work, we used a publicly accessible dataset from GitHub. The dataset was recorded from two participants (one male and one female), whose emotional states were elicited by watching video clips with different emotional stimuli.

The EEG signals in this dataset were captured with a Muse headband, with the electrode layout conforming to the extended 10-20 system. Positive, neutral, and negative emotions were each recorded for three minutes, and six minutes of resting neutral data were also recorded.

2.3. Preprocessing

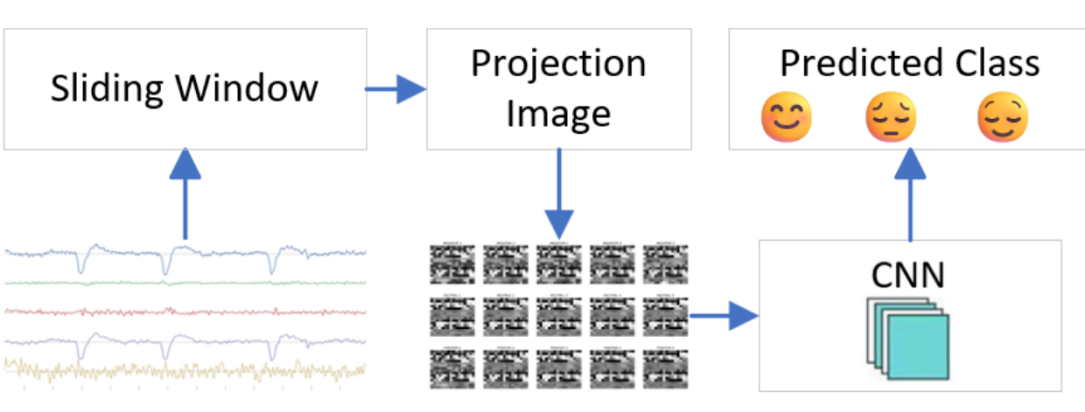

The EEG dataset was denoised and normalized as described in [10] to ensure data quality. Because EEG signals are autocorrelated and time-dependent, single-point features often do not carry enough information. To prepare the data for model training, we adopted a sliding-window feature extraction method [11] to extract time-domain and frequency-domain features of the EEG signal. Under this method, the EEG signal is divided into multiple consecutive time windows, each containing a fixed-length 4 ms segment, which lets us capture the dynamics of the signal over different time periods [12]. Within each window, signal fluctuation patterns are characterized by time-domain statistics (maximum, mean, and variance), while frequency-domain amplitude features are extracted with the Fast Fourier Transform to reveal underlying rhythmic patterns. This fusion of time-domain and frequency-domain features gives a joint representation of signal amplitude dynamics and spectral distribution, which has been shown to significantly improve the completeness of emotion features [13,14].
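The windowing step described above can be sketched as follows. This is a minimal illustration rather than the authors' pipeline: the function name `window_features`, the sampling rate, the window length, and the step are all assumptions chosen to keep the example small, and the input is a synthetic sine wave instead of recorded EEG.

```python
import numpy as np

def window_features(signal, window_len, step):
    """Return one feature row per window: max, mean, variance, then FFT magnitudes."""
    signal = np.asarray(signal, dtype=float)
    rows = []
    for start in range(0, len(signal) - window_len + 1, step):
        segment = signal[start:start + window_len]
        # Time-domain statistics named in the text.
        time_feats = [segment.max(), segment.mean(), segment.var()]
        # Frequency-domain amplitudes from the FFT of the same window.
        freq_feats = np.abs(np.fft.rfft(segment))
        rows.append(np.concatenate([time_feats, freq_feats]))
    return np.array(rows)

# Synthetic single-channel signal: a 10 Hz sine sampled at an assumed 256 Hz.
fs = 256
t = np.arange(2 * fs) / fs
eeg = np.sin(2 * np.pi * 10 * t)

# Illustrative window settings (in samples), not the paper's values.
features = window_features(eeg, window_len=64, step=32)
print(features.shape)
```

Each row holds three time-domain values followed by the magnitude spectrum of that window, so the two feature families end up side by side in one vector.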

Next, in the feature selection stage, we combined the time-domain statistics and frequency-domain components and selected the 256 most representative features from the original feature space based on estimated information gain [15]. This step reduces feature redundancy while preserving emotion-related physiological patterns. We then used a feature space reconstruction technique to reorganize the 256 features of each sample into a 16 × 16 matrix, so that the data can be fed to the CNN as images and the CNN's strengths can be applied to the emotion classification task.
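A rough sketch of this selection and reshaping step is given below. It assumes a simple histogram-based estimate of information gain; the paper does not state which estimator was used, nor how the selected features are ordered inside the 16 × 16 matrix, so the helper names, the bin count, and the ordering (original column order) are hypothetical. The data are random numbers with one artificially informative column.

```python
import numpy as np

def entropy(labels):
    """Shannon entropy (bits) of a label array."""
    _, counts = np.unique(labels, return_counts=True)
    p = counts / counts.sum()
    return -np.sum(p * np.log2(p))

def information_gain(feature, labels, bins=10):
    """Estimate H(labels) - H(labels | binned feature) with equal-width bins."""
    edges = np.histogram_bin_edges(feature, bins=bins)
    binned = np.digitize(feature, edges[1:-1])
    h_cond = 0.0
    for b in np.unique(binned):
        mask = binned == b
        h_cond += mask.mean() * entropy(labels[mask])
    return entropy(labels) - h_cond

def select_and_reshape(X, y, side=16):
    """Keep the side*side highest-gain columns and reshape each sample to side x side."""
    k = side * side
    gains = np.array([information_gain(X[:, j], y) for j in range(X.shape[1])])
    # Assumption: selected columns keep their original order in the matrix.
    top = np.sort(np.argsort(gains)[::-1][:k])
    return X[:, top].reshape(-1, side, side), top

# Synthetic data: 300 samples, 400 candidate features, 3 classes.
rng = np.random.default_rng(0)
y = rng.integers(0, 3, size=300)
X = rng.normal(size=(300, 400))
X[:, 5] += 3 * y  # make one column clearly class-dependent

images, kept = select_and_reshape(X, y)
print(images.shape, 5 in kept)
```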

2.4. Model adaptation

Balancing performance against complexity, we chose a convolutional neural network (CNN) for the emotion recognition task. The model is trained on the image-like data derived from the EEG signals, automatically extracts features from these images, and classifies them into three categories. To avoid unnecessary resource consumption, the number of parameters and computations in the convolutional and fully connected layers is reduced while maintaining an acceptable level of accuracy. Pooling layers are used for dimensionality reduction, which shrinks the input to subsequent layers. The data were split in a 7:3 ratio for training, and the trained model was saved for subsequent deployment.
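To make the effect of layer sizing and pooling concrete, the sketch below counts parameters and multiply-accumulate operations (MACCs) for a small CNN on a 16 × 16 single-channel input. The layer stack is hypothetical and is not the architecture used in this study, so the totals are not expected to match the figures reported for the deployed model.

```python
def conv2d_cost(h, w, c_in, c_out, k):
    """Parameters and MACCs of a stride-1, same-padded k x k convolution."""
    params = (k * k * c_in + 1) * c_out      # weights plus one bias per filter
    maccs = h * w * k * k * c_in * c_out     # one MACC per weight per output pixel
    return params, maccs

def dense_cost(n_in, n_out):
    """Parameters and MACCs of a fully connected layer."""
    return (n_in + 1) * n_out, n_in * n_out

# Hypothetical stack: conv -> 2x2 pool -> conv -> 2x2 pool -> dense -> dense.
layers = [
    ("conv 3x3, 16 filters on 16x16x1", conv2d_cost(16, 16, 1, 16, 3)),
    ("conv 3x3, 32 filters on 8x8x16", conv2d_cost(8, 8, 16, 32, 3)),
    ("dense 512 -> 64", dense_cost(4 * 4 * 32, 64)),
    ("dense 64 -> 3", dense_cost(64, 3)),
]

total_params = sum(p for _, (p, _) in layers)
total_maccs = sum(m for _, (_, m) in layers)

# Without the two pooling layers, the first dense layer would see 16*16*32 inputs.
dense_params_no_pool = dense_cost(16 * 16 * 32, 64)[0]

for name, (p, m) in layers:
    print(f"{name}: {p} params, {m} MACCs")
print(total_params, total_maccs, dense_params_no_pool)
```

In this toy configuration the first fully connected layer dominates the parameter count, which is why pooling before it has such a large effect on weight memory.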

2.5. Microcontroller deployment phase

For the hardware, we selected an STM32F4 series microcontroller, first because its computational capability and memory resources can fully support the quantized CNN model, and second because of its relatively affordable cost. Communication between the microcontroller and the computer is established through a USB-TTL serial port, enabling real-time transmission of EEG signals.

Software development utilized the CubeMX integrated environment for hardware configuration and framework construction. Implementation required installation of both the STM32F4 HAL library and X-CUBE-AI extension package. Our model was developed in Keras, then converted to TFLite format through TensorFlow to suit embedded deployment requirements.

To address resource constraints, we lightened the model through weight quantization with low compression, reducing memory requirements from 1.17 MB to 371 KB, a 67.4% reduction. We also employed activation buffer multiplexing, which allows the input and output buffers to share memory space. The X-CUBE-AI toolchain performed graph optimization and operator fusion and generated C code compliant with CMSIS-NN, enabling efficient model execution.
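As a generic illustration of how weight quantization trades precision for memory, the sketch below applies symmetric 8-bit quantization to a random weight matrix. It is not the compression scheme applied by X-CUBE-AI, whose internals are not described here, and it is not claimed to reproduce the reported 67.4% reduction; the function names and the weight shape are assumptions.

```python
import numpy as np

def quantize_int8(weights):
    """Symmetric per-tensor quantization: float32 weights -> int8 values plus one scale."""
    scale = float(np.max(np.abs(weights))) / 127.0
    if scale == 0.0:
        scale = 1.0  # all-zero tensor: any scale works
    q = np.clip(np.round(weights / scale), -127, 127).astype(np.int8)
    return q, scale

def dequantize(q, scale):
    """Recover approximate float32 weights from the int8 representation."""
    return q.astype(np.float32) * scale

# Hypothetical dense-layer weights.
rng = np.random.default_rng(0)
w = rng.normal(scale=0.1, size=(512, 64)).astype(np.float32)

q, scale = quantize_int8(w)
max_error = float(np.max(np.abs(w - dequantize(q, scale))))

print(w.nbytes, q.nbytes)   # storage before and after, in bytes
print(max_error, scale / 2)  # rounding error is bounded by half a quantization step
```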

As illustrated in Figure 3, our implementation achieves end-to-end real-time inference through optimized design. The system performs hardware initialization, loads the lightweight model, and employs an asynchronous communication protocol where the host sends data frames to the STM32, which processes them and returns classification results. Our validation experiments confirmed successful data transmission and prediction reception, with appropriate error handling for timeout situations.
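The host-to-microcontroller exchange can be pictured with a small framing sketch. The frame layout below (one header byte, 256 little-endian float32 values, one checksum byte) and the one-byte reply are hypothetical, since the actual protocol is not specified in the text; the code only packs and checks bytes and does not open a serial port.

```python
import struct

HEADER = 0xAA                                  # hypothetical start-of-frame marker
N_VALUES = 256                                 # one 16 x 16 feature matrix per frame
LABELS = ("negative", "neutral", "positive")   # index order is an assumption

def pack_frame(values):
    """Host side: header + 256 little-endian float32 values + 8-bit checksum."""
    if len(values) != N_VALUES:
        raise ValueError("expected %d values" % N_VALUES)
    payload = struct.pack("<%df" % N_VALUES, *values)
    checksum = sum(payload) & 0xFF
    return bytes([HEADER]) + payload + bytes([checksum])

def unpack_frame(frame):
    """Receiver side: validate header, length, and checksum, then decode the values."""
    if len(frame) != N_VALUES * 4 + 2 or frame[0] != HEADER:
        raise ValueError("malformed frame")
    payload, checksum = frame[1:-1], frame[-1]
    if sum(payload) & 0xFF != checksum:
        raise ValueError("checksum mismatch")
    return list(struct.unpack("<%df" % N_VALUES, payload))

def decode_reply(reply):
    """Map the one-byte reply to a label; an empty read is treated as a timeout."""
    if not reply:
        raise TimeoutError("no reply from the microcontroller")
    return LABELS[reply[0]]

frame = pack_frame([0.5] * N_VALUES)
print(len(frame), decode_reply(b"\x01"))
```

With a real serial link, the bytes returned by a read with a timeout would be passed to `decode_reply`, so that a missing answer surfaces as an explicit error rather than a stale prediction.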

The system used USB-TTL serial communication to establish bidirectional data flow between the microcontroller and the host computer, supporting real-time EEG data upload and emotion classification feedback (positive/neutral/negative). Validation followed a two-phase approach: we first tested the communication protocol with CubeMX virtual serial port tools, then conducted hardware-in-the-loop [16] validation by injecting simulated EEG signals into the system. This process confirmed that the complete data-to-decision pipeline works. We then compared the classification performance of the PC and microcontroller implementations using confusion matrices.

2.6. Evaluation metrics

For model optimization and compression, we evaluated weight memory and RAM usage, as detailed in Section 2.7. To assess how well the CNN model classifies the three emotion categories (Negative, Neutral, Positive) and to compare the computer and microcontroller platforms, we selected the following evaluation metrics: Accuracy, the proportion of correctly classified samples among all samples; Precision, the proportion of correctly predicted samples among all predictions for a category; Recall, the ratio of correctly predicted samples to the total number of true samples in a category; F1-Score, the harmonic mean of Precision and Recall; and the Confusion Matrix, which presents the model's predictions for each category in tabular form. Together, these metrics provide a quantitative basis for evaluating the model's classification capability and the platforms' performance in resource-constrained environments.
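These definitions can be written directly in terms of a confusion matrix whose rows are true classes and whose columns are predicted classes. The sketch below uses made-up counts purely for illustration (they are not results from this study) and assumes every class is predicted at least once, so no division by zero occurs.

```python
import numpy as np

def metrics_from_confusion(cm):
    """Accuracy plus per-class precision, recall, and F1 from a confusion matrix.

    Rows are true classes, columns are predicted classes.
    """
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)                     # correctly classified samples per class
    precision = tp / cm.sum(axis=0)      # divide by number predicted as each class
    recall = tp / cm.sum(axis=1)         # divide by number truly in each class
    f1 = 2 * precision * recall / (precision + recall)
    accuracy = tp.sum() / cm.sum()
    return accuracy, precision, recall, f1

# Made-up counts in the order Negative, Neutral, Positive.
cm = [[40, 2, 8],
      [1, 48, 1],
      [5, 5, 40]]

accuracy, precision, recall, f1 = metrics_from_confusion(cm)
print(round(accuracy, 3), np.round(precision, 3), np.round(recall, 3), np.round(f1, 3))
```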

2.7. Core effectiveness of embedded deployment

| Optimization Metric | Weight Memory | RAM Footprint | MACCs per Inference |
| --- | --- | --- | --- |
| Baseline Model | 1165.3 KB | >128 KB | 830,672 operations |
| Efficient_Deployment | 379.9 KB Flash | 29.7 KB | 830,672 operations (Lossless) |
| Hardware Constraints | 1 MB Flash | 128 KB RAM | <1M operations/inference |
| Achievement Ratio | 39.5% | 23.2% | 83.1% |

To fit the hardware limits of the STM32F4, the model was deployed after multi-strategy optimization. At runtime it requires 379.9 KB of Flash and only 29.7 KB of RAM, which account for 39.5% and 23.2% of the STM32F4 capacity (1 MB Flash, 128 KB RAM), respectively. Compared with the original model, this is an effective compression, indicating that the optimized model can run on the microcontroller within its resource limits.
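Several of the reported ratios can be checked with a few lines of arithmetic. The sketch below uses only numbers quoted in this section and treats the "<1M operations" budget as exactly 1,000,000, which is an assumption. The Flash ratio is not recomputed, since it depends on the capacity convention and on what is stored alongside the weights, which the text does not specify.

```python
# Figures quoted in this section.
baseline_kb = 1165.3     # weight memory of the baseline model
deployed_kb = 379.9      # weight memory after optimization
ram_used_kb = 29.7       # runtime RAM footprint
ram_total_kb = 128       # RAM available on the target
maccs = 830_672          # multiply-accumulate operations per inference
macc_budget = 1_000_000  # assumed value for the "<1M operations" limit

weight_reduction = 1 - deployed_kb / baseline_kb
ram_ratio = ram_used_kb / ram_total_kb
macc_ratio = maccs / macc_budget

print(f"weight memory reduction: {weight_reduction:.1%}")
print(f"RAM utilization: {ram_ratio:.1%}")
print(f"MACC budget used: {macc_ratio:.1%}")
```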

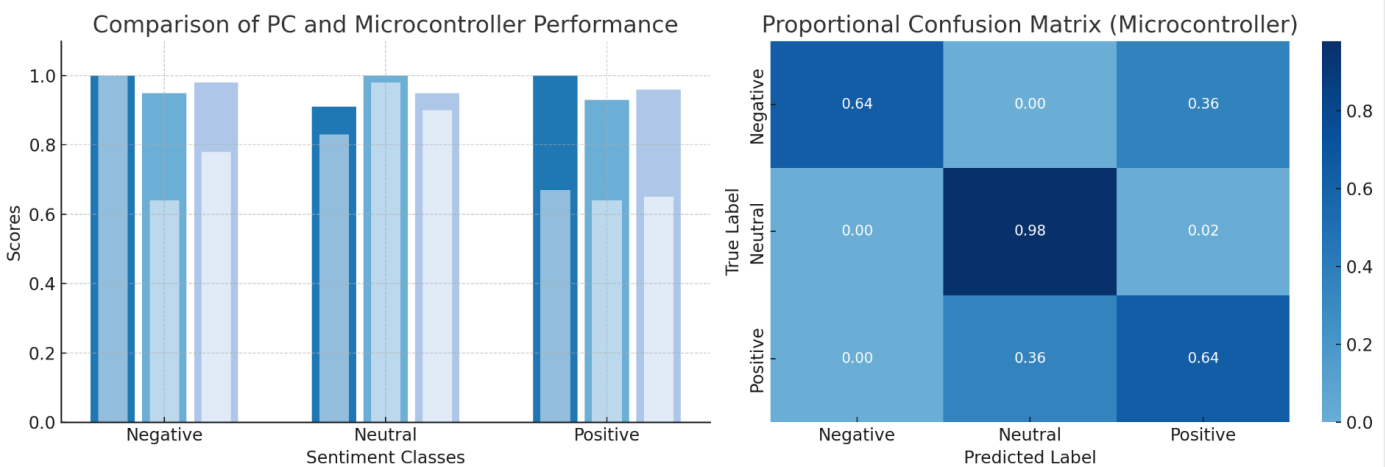

2.8. Classification performance in restricted environments vs. cross-platform model performance

The results show that the computer achieves higher precision, recall, and F1 scores across all emotion categories. In the Positive and Negative categories the computer clearly outperforms the microcontroller, whereas the gap in the Neutral category is smaller. The confusion matrix on the right also shows that the microcontroller is relatively accurate in the Neutral category, with almost all neutral samples correctly classified. The predictions for the Negative and Positive categories, however, show some bias: negative samples in particular are misclassified as positive, and some positive samples are misclassified as neutral. This indicates that the microcontroller's recognition accuracy decreases to some extent for categories with more pronounced emotional polarity.

The overall accuracy of the computer is 0.96, higher than the microcontroller's 0.81; the gap stems mainly from differences in hardware resources and computational power. Even so, reaching an accuracy of 0.81 in a resource-constrained embedded environment shows that the model adapts reasonably well to the platform, especially in the Neutral category, where its performance is close to that of the computer. This suggests that, despite the limitations of microcontrollers in complex emotion classification tasks, their performance in edge computing scenarios is of practical value and leaves room for further optimization when deploying neural networks on embedded devices.

3. Discussion

This study implemented an EEG-based emotion classification system on the STM32 microcontroller and encountered several issues worthy of in-depth discussion. Firstly, due to hardware limitations, we used an online dataset rather than actual data collection. Additionally, the dataset was collected using Muse headbands in a laboratory environment, which differs significantly from clinical or daily scenarios. Data collected in a controlled environment lacks the noise interference and signal variations present in real-world settings, potentially leading to suboptimal model performance in practical applications. Future research could integrate signal acquisition with subsequent deployment processes and consider collecting data across different environments, or directly integrate signal acquisition modules onto microcontrollers to reduce signal distortion caused by intermediate processes.

When the model was migrated from the computer platform to the STM32 microcontroller, classification accuracy decreased noticeably. This was primarily due to hardware resource limitations: operations such as pruning and quantizing the fully connected layers inevitably affected model performance. Future research should explore more efficient neural network architectures, such as depthwise separable convolutions or attention mechanisms optimized for low-resource environments, to enhance feature extraction while maintaining computational efficiency.

Additionally, signal transmission showed stability and latency issues during testing. Delays in data feedback affected the system's ability to reflect instantaneous emotional states accurately. These issues stem from multiple factors, including the data transmission protocol, model inference speed, and hardware processing capability. Although the system essentially achieved functional real-time emotion inference, it struggled to maintain stable performance when processing large volumes of EEG data. This is particularly critical for applications that require monitoring rapidly changing emotional states.

4. Conclusion

This study successfully deployed an EEG-based emotion recognition system on an STM32 microcontroller, achieving 0.81 classification accuracy across negative, neutral, and positive emotional states compared to 0.96 on computer platforms. Through weight quantization and buffer multiplexing, we reduced memory requirements by 67.4%, enabling efficient operation within the microcontroller's constraints (39.5% Flash, 23.2% RAM utilization). Despite performance gaps in highly polarized emotions, the system's reliable neutral emotion detection demonstrates the feasibility of embedded deep learning for accessible mental health monitoring. Future improvements will focus on model optimization and adaptability, advancing EEG-based emotion recognition for wearable and portable applications.

Acknowledgement

Chong Chen, Ziyi Zhu, Yongcheng He and Wen Xu contributed equally to this work and should be considered co-first authors.

References

[1]. World Health Organization. (2022). World mental health report: Transforming mental health for all. https://www.who.int/teams/mental-health-and-substance-use/world-mental-health-report

[2]. Xie, L. (2024). A review of diagnostic methods and related interventions for depression. Advances in Social Sciences, 13(1), 1-7. https://doi.org/10.12677/ass.2024.131001

[3]. Chen, B. (2017). Beware of hidden depression. Political Work Guide, 4, 49-50. https://doi.org/10.16687/j.cnki.61-1063/d.2017.04.035

[4]. Haenel, T. (2018). Die larvierte Depression. In Depression – das Leben mit der schwarz gekleideten Dame in den Griff bekommen. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-662-54417-4_4

[5]. Halkiopoulos, C., Gkintoni, E., Aroutzidis, A., et al. (2025). Advances in neuroimaging and deep learning for emotion detection: A systematic review of cognitive neuroscience and algorithmic innovations. Diagnostics (Basel, Switzerland), 15(4), 456. https://doi.org/10.3390/diagnostics15040456

[6]. Priyadarshani, M., Kumar, P., Babulal, K. S., et al. (2024). Human brain waves study using EEG and deep learning for emotion recognition. IEEE Access, 12, 101842-101850. https://doi.org/10.1109/ACCESS.2024.3427822

[7]. Ramzan, M., & Dawn, S. (2023). Fused CNN-LSTM deep learning emotion recognition model using electroencephalography signals. International Journal of Neuroscience, 133(6), 587-597. https://doi.org/10.1080/00207454.2021.1941947

[8]. Zhong, B., Wang, P. F., Wang, Y. Q., et al. (2024). A review of EEG data analysis techniques based on deep learning. Journal of Zhejiang University (Engineering Science), 58(5), 879-890. https://doi.org/10.3785/j.issn.1008-973X.2024.05.001

[9]. Huang, J., Wang, C., Zhao, W., et al. (2024). LTDNet-EEG: A lightweight network of portable/wearable devices for real-time EEG signal denoising. IEEE Transactions on Consumer Electronics, 70(3), 5561-5575. https://doi.org/10.1109/TCE.2024.3412774

[10]. Urigüen, J. A., & Garcia-Zapirain, B. (2015). EEG artifact removal-state-of-the-art and guidelines. Journal of Neural Engineering, 12(3), 031001. https://doi.org/10.1088/1741-2560/12/3/031001

[11]. Singh, K., Singha, N., Jaswal, G., et al. (2025). A novel CNN with sliding window technique for enhanced classification of MI-EEG sensor data. IEEE Sensors Journal, 25(3), 4777-4786. https://doi.org/10.1109/JSEN.2024.3515252

[12]. Han, S., Mao, H., & Dally, W. J. (2015). Deep compression: Compressing deep neural networks with pruning, trained quantization and Huffman coding. arXiv. https://arxiv.org/abs/1510.00149

[13]. Sharma, R., & Meena, H. K. (2025). Graph based novel features for detection of Alzheimer's disease using EEG signals. Biomedical Signal Processing and Control, 103. https://doi.org/10.1016/j.bspc.2024.107380

[14]. Asemi, H., & Farajzadeh, N. (2025). Improving EEG signal-based emotion recognition using a hybrid GWO-XGBoost feature selection method. Biomedical Signal Processing and Control, 99. https://doi.org/10.1016/j.bspc.2024.106795

[15]. Tan, P.-N. (2018). Introduction to data mining. Pearson Education India.

[16]. Junior, J. C. V. S., Brito, A. V., & Nascimento, T. P. (2015). Testing real-time embedded systems with hardware-in-the-loop simulation using high level architecture. In 2015 Brazilian Symposium on Computing Systems Engineering (SBESC) (pp. 142-147). Foz do Iguacu, Brazil. https://doi.org/10.1109/SBESC.2015.34

Cite this article

Chen, C.; Zhu, Z.; He, Y.; Xu, W. (2025). An EEG Emotion Recognition System Based on the STM32 Microcontroller. Applied and Computational Engineering, 157, 254-260.

Data availability

The datasets used and/or analyzed during the current study will be available from the authors upon reasonable request.


About volume

Volume title: Proceedings of CONF-CDS 2025 Symposium: Data Visualization Methods for Evaluation

© 2024 by the author(s). Licensee EWA Publishing, Oxford, UK. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.
