1. Introduction

As cities have evolved, most of them have adapted to the automobile to provide more sophisticated transportation systems. However, as more cars enter crowded urban areas, traffic congestion has become an increasingly serious problem for all nations. In some cases its costs begin to outweigh the benefits of car travel: congestion wastes time and can slow a country's economic development. To support economic growth, limit pollution, reduce the consumption of non-renewable resources such as gasoline, and protect the safety of road users, traffic forecasts that give precise estimates of traffic trends over the coming months are needed. The purpose of this study is to forecast traffic trends for the next two months from hourly counts of the vehicles passing through the road.

Some countries and cities, such as London and Norway, have tried to address congestion with road pricing systems. However, these attempts often failed because such systems were widely seen as a means of raising revenue rather than of achieving an optimal level of congestion [1]. Hong Kong also tried such a system in 1989, but it ultimately failed because the government did not specify how the funds would be used. Pricing-based methods of limiting congestion are therefore very difficult to implement, because vehicle owners will accept them only if they know both the costs and how the revenue will be spent.

A more practical alternative is to inform road users of the predicted number of vehicles so that they can plan their own trips. Machine learning, one of the most popular emerging fields today, is well suited to this task because it can predict traffic trends and thereby support the planning of road use.

In traffic prediction based on machine learning, researchers have already developed several approaches. For example, Chiou proposed a functional mixture prediction approach that combines functional prediction with probabilistic functional classification so that distinct traffic flow patterns are taken into account [2], which can help reduce traffic congestion. The Gated Recurrent Unit (GRU), however, uses less memory and is faster than both Long Short-Term Memory (LSTM) and functional mixture prediction [3-6], although LSTM tends to be more accurate on datasets with longer sequences. GRU also addresses the vanishing gradient problem from which vanilla recurrent neural networks suffer. On balance, this study adopts the GRU neural network, which we consider a fast and efficient way to estimate traffic trends over time.

In this paper, we use Python to build a GRU model to address the issue of traffic congestion. The model is trained on traffic data from Kaggle with the fields DateTime, Junction, Vehicles, and ID. Training the GRU network to predict traffic at four different junctions leads to two conclusions: the number of vehicles at Junction 1 is rising more rapidly than at Junctions 2 and 3, and the traffic at Junction 1 shows stronger weekly and hourly seasonality, whereas the other junctions follow a markedly linear trend.

2. Methodology

2.1. Dataset preparation

The dataset used in this study was obtained from Kaggle [7]. It contains 48,120 hourly observations of the number of vehicles at four different junctions, each with four fields: 1) DateTime, 2) Junction, 3) Vehicles, and 4) ID.

For data preprocessing, this study used the Pandas and NumPy libraries to process and convert the data. The historical traffic flow records were arranged into a time series for each junction and normalized to better suit model training.

At the beginning of the experiment, the required libraries were imported and the dataset was loaded. The data are then used to predict traffic trends for the next two months. The final output of the program is presented not as a table of numbers but as graphs showing the trends in the number of vehicles passing through the four junctions.
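The preprocessing described above can be sketched with pandas as follows. This is a minimal illustration under stated assumptions, not the authors' code: the tiny in-line table stands in for the Kaggle CSV (with the real file one would load it with `pd.read_csv`), the helper names `to_junction_series` and `zscore` are hypothetical, and z-score normalization is assumed because the paper does not say which normalization was used.

```python
import pandas as pd

def to_junction_series(df: pd.DataFrame) -> pd.DataFrame:
    """Reshape the long table (DateTime, Junction, Vehicles, ID) to one column per junction."""
    df = df.copy()
    df["DateTime"] = pd.to_datetime(df["DateTime"])
    # Rows: hourly timestamps; columns: junction numbers; values: vehicle counts.
    # Hours with no record for a junction become NaN.
    return df.pivot(index="DateTime", columns="Junction", values="Vehicles")

def zscore(series: pd.Series):
    """Scale to zero mean and unit (sample) standard deviation; keep the stats for inversion."""
    mean, std = series.mean(), series.std()
    return (series - mean) / std, mean, std

# Synthetic stand-in for the Kaggle file (values and IDs are made up).
demo = pd.DataFrame({
    "DateTime": ["2015-11-01 00:00:00", "2015-11-01 01:00:00",
                 "2015-11-01 00:00:00", "2015-11-01 01:00:00"],
    "Junction": [1, 1, 2, 2],
    "Vehicles": [15, 13, 6, 7],
    "ID": [1, 2, 3, 4],
})
wide = to_junction_series(demo)
j1_scaled, j1_mean, j1_std = zscore(wide[1])
```

Pivoting keeps every junction's counts aligned on a shared hourly index, so a junction whose records start later simply shows missing values (NaN) before its first observation; the stored mean and standard deviation are what the inverse transform needs later.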

2.2. Description of neural network

This study used a GRU model for traffic flow prediction. The GRU is a recurrent neural network suited to processing time series data. It uses two gates, a reset gate and an update gate, together with a candidate (new) hidden state to control the flow of information. The authors used a single-layer GRU with 128 units.
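To make the gate description concrete, one GRU time step can be written out in NumPy. This sketch follows the classic formulation of Cho et al.; it is not taken from the paper, the random weights are placeholders rather than trained values, and library implementations such as the Keras `GRU` layer differ in details (for example, in exactly where the reset gate is applied). The hidden size of 128 mirrors the single-layer model described above.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def gru_step(x, h_prev, p):
    """One GRU time step: returns the new hidden state."""
    # Update gate: how much of the candidate replaces the previous state.
    z = sigmoid(p["W_z"] @ x + p["U_z"] @ h_prev + p["b_z"])
    # Reset gate: how much of the previous state feeds into the candidate.
    r = sigmoid(p["W_r"] @ x + p["U_r"] @ h_prev + p["b_r"])
    # Candidate ("new") state built from the input and the reset-scaled history.
    h_tilde = np.tanh(p["W_h"] @ x + p["U_h"] @ (r * h_prev) + p["b_h"])
    # Blend the previous state and the candidate element-wise.
    return (1.0 - z) * h_prev + z * h_tilde

def init_params(n_in, n_hidden, rng):
    """Small random placeholder weights for the three weight groups (z, r, h)."""
    p = {}
    for g in ("z", "r", "h"):
        p[f"W_{g}"] = rng.normal(0.0, 0.1, (n_hidden, n_in))
        p[f"U_{g}"] = rng.normal(0.0, 0.1, (n_hidden, n_hidden))
        p[f"b_{g}"] = np.zeros(n_hidden)
    return p

rng = np.random.default_rng(0)
params = init_params(n_in=1, n_hidden=128, rng=rng)  # 128 units, as in the paper
h = np.zeros(128)
for value in [0.2, -0.1, 0.5]:  # a short, made-up normalized input sequence
    h = gru_step(np.array([value]), h, params)
```

Because the new state is a gate-weighted blend of the previous state and a tanh-bounded candidate, every component of `h` stays strictly between -1 and 1 when the initial state is zero.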

Before the data can be analyzed with the GRU, the amount of past data the model should draw on must be decided; the available records for the junctions span 2015-11-01 to 2017-06-30. Within the GRU, the reset gate, a sigmoid layer, decides how much of the previous hidden state is discarded at each time step. Next, a candidate state is computed by a tanh layer from the current input and the reset-scaled previous state, while the update gate, another sigmoid layer, determines how much of the previous state is carried forward and how much is replaced by the candidate. Unlike an LSTM, the GRU has no separate cell state or output gate: the updated hidden state itself is passed to the output layer, whose predictions are finally plotted as the trend in the number of vehicles.
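The amount of history the network "remembers" is, in practice, fixed by the length of its input window. A minimal sketch of turning one junction's normalized series into supervised (window, next value) pairs is shown below; the helper name `make_windows` and the lookback value are illustrative assumptions, since the paper does not report the window length it used.

```python
import numpy as np

def make_windows(series, lookback):
    """Turn a 1-D series into (samples, lookback, 1) inputs and next-step targets."""
    series = np.asarray(series, dtype=float)
    # Each input is `lookback` consecutive values; the target is the value right after.
    X = np.stack([series[i:i + lookback] for i in range(len(series) - lookback)])
    y = series[lookback:]
    # Recurrent layers expect 3-D input: (samples, time steps, features).
    return X[..., np.newaxis], y

hours = np.arange(10.0)  # stand-in for ten hourly (normalized) values
X, y = make_windows(hours, lookback=3)
```

With hourly data, a lookback of 24 would, for example, let each prediction see the previous day; a longer window gives more context at the cost of larger inputs and slower training.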

In this study, a GRU neural network was trained to predict the traffic at four junctions. Normalization and differencing transforms were employed to make the time series stationary. Since the trends and seasonality differ between junctions, a different transformation was applied at each junction. The root mean squared error (RMSE) was used as the evaluation metric. The model architecture consists of an input layer, an embedding layer, a GRU layer, and an output layer. The input layer takes the time series data as input; the embedding layer converts categorical data into numerical vectors; the GRU layer learns hidden temporal patterns from the input sequences; and the output layer generates the predictions.
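Differencing at a junction-specific lag is one common way to realize the stationarity step above. In the sketch below, the lag values in `LAGS` (168 hours for a weekly pattern, 1 hour for a roughly linear trend) are hypothetical choices for illustration, not values reported in the paper, and both series are synthetic.

```python
import numpy as np

def difference(series, lag):
    """Return y[t] - y[t - lag]; the first `lag` points have no predecessor and are dropped."""
    series = np.asarray(series, dtype=float)
    return series[lag:] - series[:-lag]

# Hypothetical per-junction lags: weekly (168 h) for a strongly seasonal series,
# plain first differences (lag 1) for a roughly linear one.
LAGS = {1: 168, 2: 1, 3: 1}

t = np.arange(400, dtype=float)
linear = 5.0 + 0.1 * t                                       # trend only
weekly = 20.0 + 0.05 * t + 3.0 * np.sin(2 * np.pi * t / 168)  # trend + weekly cycle

d_linear = difference(linear, LAGS[2])   # constant 0.1 (the slope)
d_weekly = difference(weekly, LAGS[1])   # constant 0.05 * 168 = 8.4
```

In both cases the differenced series is constant: first differences reduce a linear trend to its slope, and a 168-hour difference cancels a weekly cycle, leaving only the trend's weekly increment. Normalization can then be applied to the differenced series.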

2.3. Implementation details

For the loss function and optimizer, this study used the mean squared error (MSE) as the loss function to measure the model's prediction error during training, and the Adam optimizer to update the model weights, since both have been widely used in many studies [8-10].
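To show what the MSE loss and the Adam update do together, the sketch below fits a straight line with hand-written Adam steps in NumPy. It is a toy example, not the paper's training loop: the data, learning rate, and step count are illustrative assumptions, while the decay rates 0.9 and 0.999 are Adam's commonly used defaults. In Keras the same choice is usually expressed as `model.compile(optimizer="adam", loss="mse")`.

```python
import numpy as np

def mse(pred, target):
    """Mean squared error between predictions and targets."""
    return np.mean((pred - target) ** 2)

def adam_fit_line(x, y, lr=0.05, steps=2000, beta1=0.9, beta2=0.999, eps=1e-8):
    """Fit y ~ w*x + b by minimizing MSE with hand-written Adam updates."""
    theta = np.zeros(2)  # parameters [w, b]
    m = np.zeros(2)      # running mean of gradients (first moment)
    v = np.zeros(2)      # running mean of squared gradients (second moment)
    for t in range(1, steps + 1):
        err = theta[0] * x + theta[1] - y
        grad = np.array([2 * np.mean(err * x), 2 * np.mean(err)])  # dMSE/dw, dMSE/db
        m = beta1 * m + (1 - beta1) * grad
        v = beta2 * v + (1 - beta2) * grad ** 2
        m_hat = m / (1 - beta1 ** t)  # bias correction for the zero initialization
        v_hat = v / (1 - beta2 ** t)
        theta -= lr * m_hat / (np.sqrt(v_hat) + eps)
    return theta

x = np.linspace(-1, 1, 50)
y = 2 * x + 1
w, b = adam_fit_line(x, y)
```

Adam scales each parameter's step by its own gradient history, which is one reason it is a common default when, as here, different weights receive gradients of very different sizes.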

The model was trained with GPU acceleration, and the Keras EarlyStopping and ModelCheckpoint callbacks were used to monitor validation performance, stop training once it stopped improving, and save the best-performing model.
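The logic behind these two callbacks can be sketched in plain Python. The function below is a hypothetical, simplified analogue, not Keras code: it stops after `patience` consecutive epochs without improvement in validation loss and records the epoch whose weights a "save best only" checkpoint would keep. In Keras itself this roughly corresponds to `EarlyStopping(monitor="val_loss", patience=...)` together with `ModelCheckpoint(filepath, monitor="val_loss", save_best_only=True)`; the patience value and loss history here are made up.

```python
def simulate_early_stopping(val_losses, patience=3, min_delta=0.0):
    """Mimic patience-based early stopping plus best-only checkpointing.

    Returns (stop_epoch, best_epoch, best_loss), with epochs counted from 0.
    """
    best_loss, best_epoch, wait = float("inf"), -1, 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            # Improvement: a best-only checkpoint would save the weights here.
            best_loss, best_epoch, wait = loss, epoch, 0
        else:
            wait += 1
            if wait >= patience:
                return epoch, best_epoch, best_loss
    # Ran out of epochs without triggering the stop.
    return len(val_losses) - 1, best_epoch, best_loss

history = [1.00, 0.80, 0.70, 0.72, 0.71, 0.75, 0.90]
stop_epoch, best_epoch, best_loss = simulate_early_stopping(history, patience=3)
```

Here training halts at epoch 5 (counting from 0), after three epochs without beating the loss of 0.70 reached at epoch 2, so the checkpoint from epoch 2 is the one kept.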

In addition, R2 and RMSE were used to evaluate the model. R2 (the coefficient of determination) measures how well the model fits the data, and RMSE measures the typical magnitude of the model's prediction errors.
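Both metrics are straightforward to compute; a minimal NumPy version with a small hand-checkable example follows. The function names are illustrative, and the paper does not state which implementation it used.

```python
import numpy as np

def rmse(y_true, y_pred):
    """Root mean squared error, in the same units as the data (vehicles)."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))

def r2(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    ss_res = np.sum((y_true - y_pred) ** 2)         # variation the model leaves unexplained
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)  # total variation around the mean
    return float(1.0 - ss_res / ss_tot)

actual = [1, 2, 3, 4]
predicted = [1, 2, 3, 5]
print(rmse(actual, predicted))  # 0.5
print(r2(actual, predicted))    # 0.8
```

One error of 1 among four points gives a mean squared error of 0.25 and hence an RMSE of 0.5, while the total variation around the mean of 2.5 is 5, so R2 is 1 - 1/5 = 0.8.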

Overall, this study provides an example of using a GRU model for traffic flow prediction, with training efficiency improved by the Keras library and GPU acceleration. Callback functions were also used to monitor training and save the best model so that training could be better optimized.

3. Results and discussion

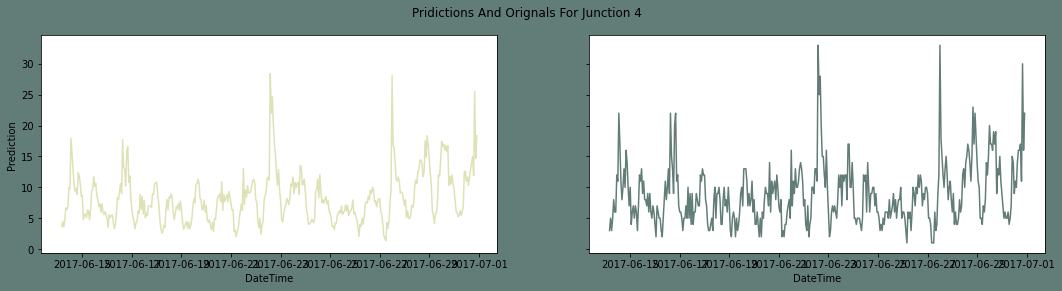

Figure 1. Predicted and original vehicle counts for Junction 1.

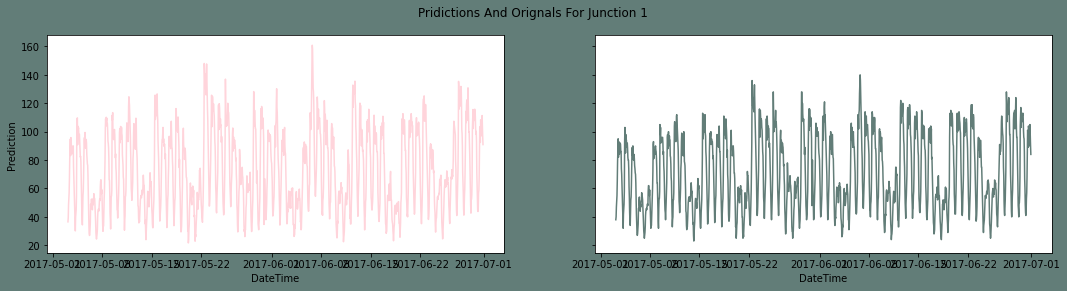

Figure 2. Predicted and original vehicle counts for Junction 2.

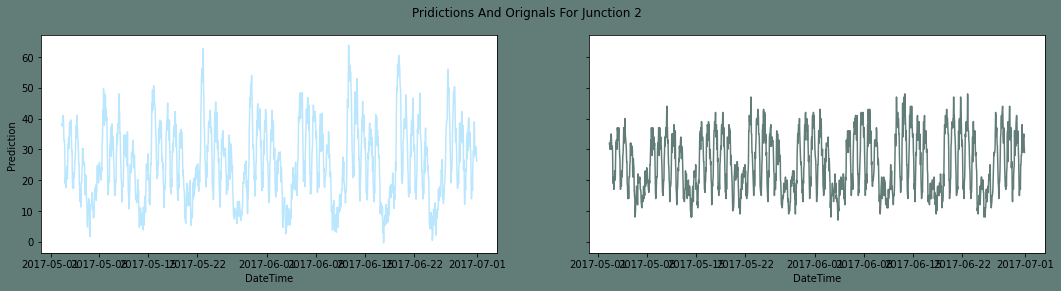

Figure 3. Predicted and original vehicle counts for Junction 3.

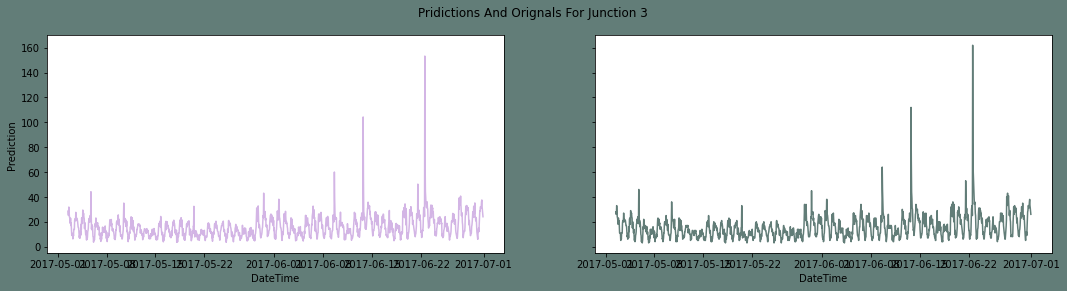

|

Figure 4. The predictions and originals for function 4. |

In this section, we apply inverse transforms to the model's predictions to restore the trend and seasonality that were removed during preprocessing, so that the predictions can be compared with the original data. We trained a GRU neural network to forecast traffic at four junctions. To obtain stationary time series, we used normalization and differencing transforms; because each junction shows different trends and seasonality, a junction-specific strategy was adopted to achieve stationarity. Model performance was evaluated with the root mean squared error, and the predicted values were plotted against the original test data.
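The inverse transforms undo the preprocessing in reverse order: first the normalization, then the differencing. The sketch below assumes z-score normalization and one-step-ahead forecasts, so each predicted difference is added back to the actual value observed `lag` steps earlier; the helper name `invert_forecast` and the sample numbers are hypothetical. Feeding it the exact scaled differences recovers the original series, which is a useful sanity check before applying it to model outputs.

```python
import numpy as np

def invert_forecast(pred_scaled, mean, std, lagged_actuals):
    """Map scaled, differenced one-step forecasts back to vehicle counts."""
    # Step 1: undo z-score normalization, giving predicted differences.
    pred_diff = np.asarray(pred_scaled, dtype=float) * std + mean
    # Step 2: undo differencing by adding the count observed `lag` steps earlier.
    return pred_diff + np.asarray(lagged_actuals, dtype=float)

# Round-trip check with a made-up series and a lag of 2.
counts = np.array([10.0, 12.0, 15.0, 13.0, 18.0, 21.0, 19.0])
lag = 2
diffs = counts[lag:] - counts[:-lag]   # forward differencing
mean, std = diffs.mean(), diffs.std()  # forward normalization statistics
scaled = (diffs - mean) / std
restored = invert_forecast(scaled, mean, std, counts[:-lag])
```

With real model outputs in place of `scaled`, the restored values are on the original vehicle-count scale, so RMSE computed on them is directly interpretable in vehicles per hour.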

Figures 1 to 4 show that the number of vehicles at Junction 1 is increasing considerably faster than at Junctions 2 and 3. Junction 1 also exhibits stronger weekly and hourly seasonality, whereas the other junctions display a more linear trend. The data for Junction 4, however, are relatively sparse, which precludes definitive conclusions about its traffic patterns.

A comparison of the forecast and actual traffic indicates that the prediction method is reasonably accurate, which suggests it could be used for traffic control at intersections in the future. The relatively high traffic volume at Junction 1 may be due to its greater connectivity and accessibility to public destinations, which would lead to increasing vehicular traffic over time. The government could therefore consider prioritizing the management of this junction over the others.

The model's performance can be attributed to the availability of sufficient data and to the clear seasonality in the series. Nonetheless, the model has some limitations, including long training times, which may affect its accuracy, and reduced accuracy of the predicted values during certain time periods.

4. Conclusion

In this project, we trained a GRU neural network to predict traffic flow at four junctions. To obtain stationary time series, we applied normalization and differencing transforms that take into account the different trends and seasonality at each junction. The model's performance was evaluated with the root mean squared error, and the predicted values were plotted alongside the original test data.

Analysis of the data revealed that the number of vehicles at Junction 1 is rising at a faster rate than that at Junctions 2 and 3, whereas data sparsity prevented us from drawing conclusions regarding Junction 4. Additionally, Junction 1 exhibited stronger weekly and hourly seasonality, while the other junctions displayed a more linear trend.

The findings of this study can support the prediction of vehicle flow rates. However, GRU models still face challenges such as slow convergence and low learning efficiency, which can lead to long training times and even underfitting. Nevertheless, given its accuracy and ease of implementation, the GRU remains a viable method for traffic flow prediction.

References

[1]. Thomson J M 1998 Reflections on the economics of traffic congestion Journal of Transport Economics and Policy 93-112

[2]. Chiou J M 2012 Dynamical functional prediction and classification, with application to traffic flow prediction

[3]. Dey R, Salem F M 2017 Gate-variants of gated recurrent unit (GRU) neural networks 2017 IEEE 60th International Midwest Symposium on Circuits and Systems (MWSCAS) IEEE 1597-1600

[4]. Fu R, Zhang Z, Li L 2016 Using LSTM and GRU neural network methods for traffic flow prediction 2016 31st Youth Academic Annual Conference of Chinese Association of Automation (YAC) IEEE 324-328

[5]. Bansal T, Belanger D, McCallum A 2016 Ask the GRU: Multi-task learning for deep text recommendations Proceedings of the 10th ACM Conference on Recommender Systems 107-114

[6]. Yu Y, Si X, Hu C et al. 2019 A review of recurrent neural networks: LSTM cells and network architectures Neural Computation 31(7): 1235-1270

[7]. Kaggle 2021 Traffic prediction dataset https://www.kaggle.com/datasets/fedesoriano/traffic-prediction-dataset

[8]. Köksoy O 2006 Multiresponse robust design: Mean square error (MSE) criterion Applied Mathematics and Computation 175(2): 1716-1729

[9]. Yu Q, Chang C S, Yan J L et al. 2019 Semantic segmentation of intracranial hemorrhages in head CT scans 2019 IEEE 10th International Conference on Software Engineering and Service Science (ICSESS) IEEE 112-115

[10]. Zhang Z 2018 Improved Adam optimizer for deep neural networks 2018 IEEE/ACM 26th International Symposium on Quality of Service (IWQoS) IEEE 1-2

Cite this article

Ding, H.; Li, Z.; Su, N. (2023). Traffic Prediction Based on the GRU Neural Network. Applied and Computational Engineering, 8, 287-291.

Data availability

The datasets used and/or analyzed during the current study will be available from the authors upon reasonable request.

Disclaimer/Publisher's Note

The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of EWA Publishing and/or the editor(s). EWA Publishing and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

About volume

Volume title: Proceedings of the 2023 International Conference on Software Engineering and Machine Learning

© 2024 by the author(s). Licensee EWA Publishing, Oxford, UK. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license. Authors who publish with this series agree to the following terms:

1. Authors retain copyright and grant the series right of first publication with the work simultaneously licensed under a Creative Commons Attribution License that allows others to share the work with an acknowledgment of the work's authorship and initial publication in this series.

2. Authors are able to enter into separate, additional contractual arrangements for the non-exclusive distribution of the series's published version of the work (e.g., post it to an institutional repository or publish it in a book), with an acknowledgment of its initial publication in this series.

3. Authors are permitted and encouraged to post their work online (e.g., in institutional repositories or on their website) prior to and during the submission process, as it can lead to productive exchanges, as well as earlier and greater citation of published work (see the Open access policy for details).
