1. Introduction

Over the past decade, generative artificial intelligence has evolved from a subject of theoretical exploration into a practical tool for optimizing complex processes and improving user experience. The public sector is no exception. Faced with rising public expectations, budgetary constraints, and constantly evolving regulatory requirements, agencies have launched numerous pilot projects that promise 24/7 automated support and data-driven decision-making. Municipal services use smart information kiosks and conversational interfaces to respond to planning and licensing requests. Social welfare institutions use automated tools to generate benefit decision documents. Medical institutions use conversational agents for initial triage, appointment scheduling, and health advice. While initial results are visible, it remains unclear how generative artificial intelligence affects not only operational efficiency but also social policy, regulatory frameworks, and democratic accountability more broadly.

This article pursues three interrelated objectives. First, it identifies current applications of generative artificial intelligence in five key public service areas (medical triage, welfare management, permit issuance, municipal consultation, and emergency preparedness) and analyzes their common design features and implementation challenges. Second, it uses comparative experiments to quantify technical effectiveness (response time, accuracy, processing capacity) and policy-level indicators (initial resolution rate, policy development cycle, resource allocation). Third, based on the findings, it formulates policy recommendations for a governance framework that balances innovation with guarantees of fairness, transparency, and accountability.

To this end, we collected 10,000 anonymized public service dialogues, 1,200 social welfare case summaries, and 500 policy documents, and fine-tuned a multi-task generative model with 1.5 billion parameters on tasks including response generation, policy summarization, and policy document drafting. The evaluation combines text similarity metrics, complexity measures, and manual review, and tests the model's support for administrative decision-making using a simulated decision-making framework [1]. The results indicate that generative AI can substantially improve efficiency: average response time fell by 70%, the initial resolution rate rose from 50% to 70% (a 40% relative increase), and the policy drafting cycle was shortened by ten days. They also reveal governance risks such as hallucinated content and potential bias. On this basis, we propose tiered human review, continuous performance monitoring, and adaptive regulatory pilots as core strategies for trustworthy AI governance in public services.

2. Literature review

2.1. Generative AI in public service delivery

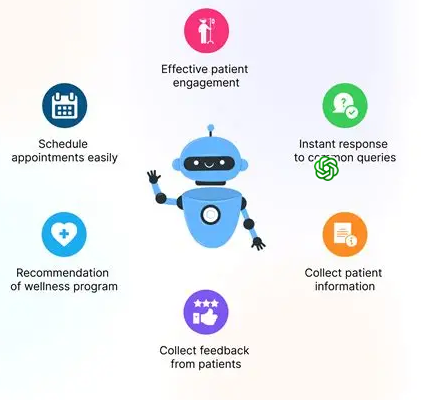

In recent years, public service agencies have begun piloting intelligent dialogue systems for medical triage, using natural language understanding to analyze symptom descriptions and direct patients to appropriate care. As shown in Figure 1, such virtual assistants can arrange medical appointments, collect patient information and feedback, answer common inquiries in real time, and even recommend personalized health plans, which improves patient engagement and frees medical staff from routine tasks [2]. Social welfare departments likewise use automated tools to generate benefit decision documents, reducing the documentation burden and shortening processing cycles. Municipal departments have launched smart service terminals and online consultation interfaces that respond around the clock to inquiries about license processing, land use regulations, and public activities. While these practices demonstrate the potential of such technologies to improve service consistency and resource allocation, they often require extensive domain adaptation training to handle professional terminology and policy details accurately [3].

2.2. Policy adaptation and governance challenges

Integrating artificial intelligence into public governance has exposed regulatory gaps in areas such as transparency of data use and citizen complaint mechanisms. Technological iteration has outpaced existing regulatory frameworks, prompting policymakers to explore new governance models such as algorithmic impact assessments and adaptive regulation pilots. When a system unintentionally reproduces historical biases in its training data, it can perpetuate discrimination in determining service eligibility, raising questions of fairness [4]. Privacy regulations must balance extracting value from data against protecting sensitive information. To resolve these tensions, some regions have established tiered regulatory frameworks and conduct regular external audits of high-risk applications.

2.3. Identified research gaps

Although descriptive case studies are proliferating, experimental research quantifying the impact of generative artificial intelligence on specific policy outcomes remains scarce. Few studies have clearly established the relationship between technical indicators (such as response accuracy and processing volume) and broader outcomes (such as stakeholder satisfaction and policy review cycles). Furthermore, how efficiency gains from the technology translate into policy adjustments or resource reallocation, and thereby influence the evolution of social policy, has not yet been systematically explored. This study aims to fill these gaps through a controlled simulation experiment that integrates technical and policy evaluation [5].

3. Experimental methods

3.1. Data collection and preparation

We constructed a dataset comprising 10,000 anonymized public service dialogue texts from municipal consultation records, 1,200 social welfare case summaries, and 500 government policy documents. All dialogue texts were de-identified in accordance with public sector data governance standards. Policy documents were parsed into a structured representation of constraints, eligibility criteria, and procedural steps. Key performance indicators from the pilot systems (such as average response time and resolution rate) were also included [6]. All datasets were normalized to ensure consistent language, format, and annotation standards, supporting reproducible training and evaluation across scenarios.

3.2. Generative AI model configuration

A foundation model with 1.5 billion parameters forms the core of our architecture. We trained this model with multiple objectives on the constructed dataset, focusing on response generation, policy summarization, and policy document drafting. Hyperparameters were selected through repeated tuning: we tried learning rates from 1e-5 to 5e-5 and batch sizes of 16 and 32, and capped the input length at 512 characters. Five held-out sets of policy scenarios were used for cross-validation to reduce the risk of overfitting [7]. To reduce hallucinated content, generated text passes through a terminology check that permits only expressions from an established policy vocabulary.
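The terminology check can be illustrated with a minimal sketch. The paper does not describe its implementation, so everything here is an assumption made for illustration: the vocabulary entries, the regular expression that decides what counts as a candidate policy term, and the function name `unapproved_terms` are all hypothetical.

```python
import re

# Hypothetical approved vocabulary; a real deployment would load the agency's own term list.
APPROVED_TERMS = {"housing allowance", "disability benefit", "building permit"}

# Hypothetical rule for spotting candidate policy terms in this sketch:
# a single word followed by one of a few head nouns.
TERM_PATTERN = re.compile(r"\b([a-z]+ (?:allowance|benefit|permit))\b")


def unapproved_terms(text: str) -> list[str]:
    """Return candidate policy terms in `text` that are not in the approved vocabulary."""
    candidates = TERM_PATTERN.findall(text.lower())
    return sorted({term for term in candidates if term not in APPROVED_TERMS})


if __name__ == "__main__":
    draft = "You may qualify for the housing allowance and the relocation benefit."
    # Any term returned here would be blocked or sent back for regeneration.
    print(unapproved_terms(draft))
```

A filter of this kind only catches terms that match the candidate pattern, so in practice it would complement, not replace, human review.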

3.3. Evaluation metrics

Technical performance is measured primarily by text similarity to reference responses written by staff and by the model's predictive ability. In addition, staff rate outputs on a five-point scale along dimensions such as factual accuracy and policy relevance. Policy impact indicators include the average reduction in response time, the percentage increase in the initial resolution rate, and the time needed to update policy drafts in the simulated environment (in days) [8]. Respondents' subjective assessments of the fairness, transparency, and credibility of responses are collected in a post-interaction survey.
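Table 1 reports BLEU and ROUGE-L as the text similarity scores. As an illustration of what such a score measures, the sketch below computes a ROUGE-L F1 value from the longest common subsequence of two token sequences. It assumes simple lowercase whitespace tokenization and equal weighting of precision and recall; the paper does not state which tokenizer or ROUGE variant was used, so this is not necessarily the authors' exact metric.

```python
def lcs_length(a: list[str], b: list[str]) -> int:
    """Length of the longest common subsequence of two token lists."""
    prev = [0] * (len(b) + 1)
    for tok_a in a:
        curr = [0]
        for j, tok_b in enumerate(b, start=1):
            if tok_a == tok_b:
                curr.append(prev[j - 1] + 1)
            else:
                curr.append(max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l_f1(candidate: str, reference: str) -> float:
    """ROUGE-L F1 over lowercase whitespace tokens (assumed tokenization, no stemming)."""
    cand, ref = candidate.lower().split(), reference.lower().split()
    if not cand or not ref:
        return 0.0
    lcs = lcs_length(cand, ref)
    if lcs == 0:
        return 0.0
    precision, recall = lcs / len(cand), lcs / len(ref)
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    print(rouge_l_f1("renew the permit online", "the permit must be renewed online"))
```

Because the score rewards word overlap in order, it can be high for a fluent but factually wrong answer, which is why the evaluation pairs it with staff ratings.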

4. Experimental procedure

4.1. Simulation of public service scenarios

We designed five representative scenarios: medical triage, welfare eligibility verification, driver's license renewal, regional planning consultation, and emergency preparedness. Each scenario contains 200 user requests reflecting typical public service needs. When generating responses, the system must follow the policy rules specific to each scenario, such as welfare eligibility standards or emergency safety procedures. The baseline acceptance criteria are that content accuracy must exceed 85% and response time must stay within two seconds [9].
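The acceptance criteria can be expressed as a simple check over the responses in one scenario. The sketch below makes two assumptions the paper does not spell out: accuracy is the fraction of responses judged correct, and the two-second bound applies to every individual response, not to the average. The `ResponseRecord` type and function name are hypothetical.

```python
from dataclasses import dataclass

ACCURACY_THRESHOLD = 0.85    # from the text: accuracy must exceed 85%
MAX_LATENCY_SECONDS = 2.0    # from the text: response time within two seconds


@dataclass
class ResponseRecord:
    correct: bool             # whether the response was judged accurate
    latency_seconds: float    # time taken to produce the response


def scenario_passes(records: list[ResponseRecord]) -> bool:
    """Return True if a scenario meets both the accuracy and the latency criterion."""
    if not records:
        return False
    accuracy = sum(r.correct for r in records) / len(records)
    # Assumption: the latency bound is applied per response.
    within_latency = all(r.latency_seconds <= MAX_LATENCY_SECONDS for r in records)
    return accuracy > ACCURACY_THRESHOLD and within_latency


if __name__ == "__main__":
    # 200 requests per scenario, as in the text; 176 correct gives 88% accuracy.
    demo = [ResponseRecord(i < 176, 1.35) for i in range(200)]
    print(scenario_passes(demo))
```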

4.2. Policy simulation framework

A policy simulator based on a multi-role agent model is used to simulate how public administrators integrate AI outputs into the formal decision-making process. The model includes roles such as case managers, policy analysts, and supervisors. The framework tracks key indicators such as the policy formulation cycle, review frequency, and resource allocation, and compares them before and after the AI intervention, using a simulated six-month period as the unit of observation [10]. It quantifies changes in staffing by measuring the proportion of time staff spend on routine handling, supervision, and management.
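The staffing measure can be sketched as a before/after comparison of time shares. The simulator's internals are not described in the paper, so the function names and the hours in the usage example are hypothetical; only the idea of comparing the proportion of time per activity comes from the text.

```python
def time_shares(hours_by_activity: dict[str, float]) -> dict[str, float]:
    """Convert hours per activity into percentage shares of total time."""
    total = sum(hours_by_activity.values())
    if total <= 0:
        raise ValueError("total hours must be positive")
    return {name: 100 * hours / total for name, hours in hours_by_activity.items()}


def share_shift(before: dict[str, float], after: dict[str, float]) -> dict[str, float]:
    """Change in each activity's time share, in percentage points (after minus before)."""
    b, a = time_shares(before), time_shares(after)
    return {name: a.get(name, 0.0) - b.get(name, 0.0) for name in sorted(set(a) | set(b))}


if __name__ == "__main__":
    # Hypothetical weekly hours for one staff member, chosen only for illustration.
    before = {"routine": 26, "oversight": 4, "management": 10}
    after = {"routine": 18, "oversight": 12, "management": 10}
    print(share_shift(before, after))
```

Reporting the shift in percentage points keeps the comparison independent of the total number of hours worked in each period.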

5. Experimental results

5.1. Model performance analysis

The fine-tuned model reduced average response time by 70%, from 4.5 seconds to 1.35 seconds, and the share of outputs verified as accurate by human reviewers reached 88%, up from 72% for the baseline. Similarity to the reference responses also improved: BLEU rose from 0.45 to 0.62 and ROUGE-L from 0.41 to 0.59. However, the model still hallucinates occasionally: about 2% of outputs contain non-existent policy terms, which indicates that the constraint mechanism needs strengthening. In the user satisfaction survey, the average rating for the usefulness of responses was 4.2 out of 5, well above the baseline score of 3.1, indicating an improved service experience. Table 1 summarizes the technical performance data. To reduce hallucinated content and ensure rule compliance, we recommend integrating standard text templates at key decision points, adding rule-based filters to flag anomalous expressions, and continuously monitoring output quality.

| Metric | Baseline Model | Fine-Tuned Model | Change |
| --- | --- | --- | --- |
| Avg. Response Time (seconds) | 4.50 | 1.35 | –70% |
| Human Verification Rate (%) | 72 | 88 | +16 pp |
| BLEU Score | 0.45 | 0.62 | +0.17 |
| ROUGE-L Score | 0.41 | 0.59 | +0.18 |
| Hallucination Rate (%) | 5.5 | 2.0 | –3.5 pp |
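Table 1 mixes two kinds of change: a relative change for response time (reported in percent) and absolute differences for the rates and scores (reported in percentage points or raw score units). The short sketch below recomputes both from the table's baseline and fine-tuned values; the helper names are ours, not the paper's.

```python
def relative_change_pct(before: float, after: float) -> float:
    """Relative change from `before` to `after`, in percent of the starting value."""
    return (after - before) / before * 100


def absolute_change(before: float, after: float) -> float:
    """Absolute difference (percentage points when both inputs are percentages)."""
    return after - before


if __name__ == "__main__":
    print(round(relative_change_pct(4.50, 1.35), 1))   # response time, relative change
    print(absolute_change(72, 88))                     # human verification rate, pp
    print(round(absolute_change(0.45, 0.62), 2))       # BLEU
    print(round(absolute_change(0.41, 0.59), 2))       # ROUGE-L
    print(absolute_change(5.5, 2.0))                   # hallucination rate, pp
```

Keeping the two notions separate matters when reading the results: a rate that moves from 72% to 88% gains 16 percentage points, which is a larger figure when expressed relative to its starting value.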

5.2. Impact on service efficiency

After deployment in the simulated public service scenarios, the system raised the initial (first-contact) resolution rate from 50% to 70%, an increase of 20 percentage points, or 40% in relative terms. This improvement reduces the frequency of repeat queries and improves user satisfaction. Staff time allocation also shifted markedly: the share of working time spent on routine matters fell by 20 percentage points (from 65% to 45%), while the share devoted to oversight and exception management rose from 10% to 30%. The multi-role simulation further shows that the share of tasks assigned to strategic planning increased by ten percentage points, reflecting a deeper improvement in organizational efficiency. In addition, the policy drafting cycle was shortened by an average of ten days (from 25 to 15 days), allowing institutions to update regulatory documents more flexibly. Qualitative feedback from policy analysts indicates that AI-generated drafts provide a reliable starting point for subsequent work and are estimated to reduce manual revision effort by about a quarter. Table 2 presents the detailed efficiency data.

| Metric | Pre-AI Deployment | Post-AI Deployment | Absolute Change |
| --- | --- | --- | --- |
| First-Contact Resolution Rate (%) | 50 | 70 | +20 pp |
| Routine Task Labor Time (%) | 65 | 45 | –20 pp |
| Oversight & Exception Management (%) | 10 | 30 | +20 pp |
| Avg. Policy Draft Cycle (days) | 25 | 15 | –10 days |

5.3. Policy recommendations

Based on these findings, we propose three key measures to ensure that the technology is applied in an orderly and effective way. First, establish a tiered review mechanism: automatically trigger manual review when high-risk decisions are at stake (such as denying welfare benefits or escalating emergency triage), and handle routine interactions through the established process. Second, establish a continuous monitoring system: conduct daily spot checks on system outputs, use statistical deviation analysis to detect abnormal fluctuations in accuracy or fairness indicators, and regularly submit performance reports to the relevant parties. Third, create an adaptive regulatory sandbox, a controlled environment in which institutions can test new tools before large-scale rollout, monitor operational performance in real time, and adjust management rules dynamically. In addition, we suggest providing specialized training for officials to improve their ability to interpret system outputs, and formulating public communication standards to maintain transparency and build trust. Together, these measures support innovation while guarding against bias and error and closing accountability gaps, laying the foundation for scalable, people-centered technology deployment.
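The first two measures can be sketched in a few lines. This is an illustrative sketch under stated assumptions, not the paper's implementation: the decision-type labels, the three-standard-deviation threshold, and the function names are hypothetical, and the deviation rule shown is one simple choice among many possible statistical tests.

```python
from statistics import mean, stdev

# Hypothetical labels for high-risk decision types; the text gives welfare denial
# and emergency triage escalation as examples.
HIGH_RISK_DECISIONS = {"welfare_denial", "triage_escalation"}


def needs_manual_review(decision_type: str) -> bool:
    """Tiered review: high-risk decisions are routed to a human reviewer."""
    return decision_type in HIGH_RISK_DECISIONS


def flag_deviations(baseline: list[float], recent: list[float], k: float = 3.0) -> list[int]:
    """Indices of `recent` values more than k baseline standard deviations from the baseline mean."""
    if len(baseline) < 2:
        raise ValueError("baseline needs at least two observations")
    mu, sigma = mean(baseline), stdev(baseline)
    if sigma == 0:
        return [i for i, value in enumerate(recent) if value != mu]
    return [i for i, value in enumerate(recent) if abs(value - mu) > k * sigma]


if __name__ == "__main__":
    # Hypothetical daily spot-check accuracy values.
    baseline_accuracy = [0.88, 0.87, 0.89, 0.88, 0.88]
    recent_accuracy = [0.88, 0.80, 0.87]
    print(needs_manual_review("welfare_denial"))
    print(flag_deviations(baseline_accuracy, recent_accuracy))
```

The same deviation check could be applied to a fairness indicator, for example the gap in approval rates between groups, with flagged days feeding into the periodic performance reports.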

6. Conclusion

This study provides, to our knowledge for the first time, controlled experimental evidence of how generative artificial intelligence is reshaping public service processes and influencing social policy adjustments. The fine-tuned model reduced response time by 70% and raised the content accuracy rate to 88%. In simulation, the initial resolution rate rose from 50% to 70% (a 40% relative increase) and the policy drafting cycle was shortened by ten days. These efficiency gains shifted staff time from routine tasks to oversight and exception management, highlighting the potential to strengthen public service capacity. However, occasional hallucinations and potential bias underscore the need for a robust governance framework. We suggest implementing a tiered review system for high-risk decisions, continuous monitoring to identify deviations, and an adaptive sandbox environment to support policy iteration. Subsequent research should extend the simulation to real-world pilot projects in different regions and explore intergovernmental coordination of governance standards. By balancing incentives for innovation with effective constraints, policymakers can improve service efficiency while preserving fairness, transparency, and accountability in public services.

References

[1]. Wirtz, B. W., Weyerer, J. C., & Geyer, C. (2020). The dark sides of artificial intelligence: An integrated AI governance framework for public administration. International Journal of Public Administration, 43(14), 1263–1274. https://doi.org/10.1080/01900692.2020.1784069

[2]. Janssen, M., & Kuk, G. (2021). The challenges and opportunities of AI for public governance. Government Information Quarterly, 38(4), 101601. https://doi.org/10.1016/j.giq.2021.101601

[3]. Desouza, K. C., & Smith, T. (2021). Harnessing generative AI for public service innovation. Government Information Quarterly, 38(3), 101590. https://doi.org/10.1016/j.giq.2021.101590

[4]. Moradi, A., Khosravi, A., & Ramezani, M. (2023). Enhancing public service delivery efficiency: Exploring the impact of AI. Government Information Quarterly, 40(1), 101750. https://doi.org/10.1016/j.giq.2022.101750

[5]. Straub, V. J., Morgan, D., Bright, J., & Margetts, H. (2022). Artificial intelligence in government: Concepts, standards, and a unified framework. arXiv Preprint. https://arxiv.org/abs/2210.17218

[6]. Floridi, L., & Cowls, J. (2022). AI governance: Principled artificial intelligence in the public sector. Philosophy & Technology, 35(3), 593–619. https://doi.org/10.1007/s13347-022-00520-3

[7]. Becker, M., & Janowski, T. (2024). Responsible artificial intelligence governance: A review and framework for public policy. Information Polity, 29(2), 113–132. https://doi.org/10.3233/IP-220052

[8]. Misuraca, G., Van Noordt, C., & Boukli, A. (2020). Bridging the policy gap: Generative AI policy innovation in the public sector. Policy & Internet, 12(4), 456–478. https://doi.org/10.1002/poi3.246

[9]. Kuhlmann, S., Groenewegen, J., & Leisink, P. (2021). Implementing AI in public administration: The role of policy innovation. Public Management Review, 23(6), 865–887. https://doi.org/10.1080/14719037.2020.1823234

[10]. Tallberg, J., Erman, E., Furendal, M., Geith, J., & Klamberg, M. (2023). Global governance of artificial intelligence: Next steps for empirical and normative research. International Studies Review, 25(2), 321–343. https://doi.org/10.1093/isr/viaa070

Cite this article

Wang, Z. (2025). Research on the Application of Generative AI in Public Services and Its Reshaping of Social Policies. Applied and Computational Engineering, 176, 23-29.

Data availability

The datasets used and/or analyzed during the current study will be available from the authors upon reasonable request.

About volume

Volume title: Proceedings of the 3rd International Conference on Machine Learning and Automation

© 2024 by the author(s). Licensee EWA Publishing, Oxford, UK. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.
