1. Introduction

The technologies that combine virtual, augmented, and mixed reality are now collectively described as Extended Reality (XR). XR is being adopted by a growing number of groups and industries because it can give users more realistic and more specific sensory stimulation than flat-screen interfaces. Alongside these advantages, XR raises challenges that need to be addressed. For instance, limited facial tracking, motion latency, and network instability degrade the user's experience. Under these conditions, players struggle to perceive micro-expressions and body motion accurately, which makes it difficult to build emotional resonance and empathy in the game. We therefore need to examine more closely how nonverbal cues affect players' empathy in order to find effective ways to improve the XR user experience. On the one hand, building on Ekman and Friesen's insights, this study treats micro-expressions as instantaneous signals [1] that operate across different dimensions. When people use these signals together with body motion, they project their emotions onto them, so it is vital for this study to capture those signals and convert them for the XR environment. On the other hand, some studies suggest that facial expressions may be more important than body language in most social environments [2]; however, other researchers disagree and argue that a more systematic and objective approach to collecting nonverbal messages is essential. They use facial and eye tracking technology to continuously monitor people's attention in XR scenes. By recording data from this technology, they can capture fluctuations in micro-expressions, which provides a new way to observe empathy [3-5]. Other studies have noted that body motion and micro-expressions are also important factors that may boost positive social outcomes [6].
Although these studies provide a foundation, the effects of micro-expressions and body movements in XR games are still not fully understood by researchers.

To better understand the limits of the above studies, this study uses the role-playing game Star Citizen and VRChat to explore how facial micro-expressions and body motion influence empathy in XR. The study discusses the following five questions in depth. First, it examines whether missing facial expressions weaken emotional bonds in the XR environment. Second, it examines whether expressive avatars enhance immersion and whether detailed expressions can cause misinterpretation. Finally, it investigates whether an empathy threshold exists and how body movements and facial expressions interact with this threshold.

2. Case analysis

In this study, psychological theory and techniques are important for converting the information we want to capture. Our hypothesis is therefore based mainly on mirror neuron theory, which holds that part of a user's actions or motions activates corresponding patterns in the observer's brain [7]. To capture and convert micro-expressions and motion, the XR avatar's expressions should therefore be made observable. Moreover, according to the theory of emotional contagion, the emotions expressed by an XR avatar can spread to another player [8]. This supports the study's aim of creating interactions that cultivate shared feelings.

2.1. Star Citizen

Star Citizen uses Face Over Internet Protocol (FOIP) technology, which maps players' real-time facial expressions onto their avatars in the game. When players engage in activities such as spacecraft communications or teaming up in public channels, their micro-expressions and movements are transmitted to others in real time. The game creates a low-trust, high-risk social environment governed by the "Dark Forest Law," where players cannot immediately judge others' intentions, and cooperation or betrayal can emerge at any time. Consequently, when players' micro-expressions are displayed realistically through high-fidelity emotion capture, they can easily cause misunderstandings and conflicts.

2.2. VRChat

Unlike Star Citizen, VRChat provides players with a more diverse environment and exaggerated avatar designs. Players can use everything from simple character movements to highly customized avatars within the game's social interactions. These avatars often originate from different countries, regions, and even faiths. Therefore, the avatars' exaggerated and diverse elements and movements may increase the risk of misinterpreting emotional cues.

Comparing these two games allows a more detailed account of this effect: precise emotion capture enhances immersion but demands greater cognitive effort, while expressive freedom fosters creativity in the XR environment but may lead to emotional incoherence [9,10].

3. Design methods and prototype

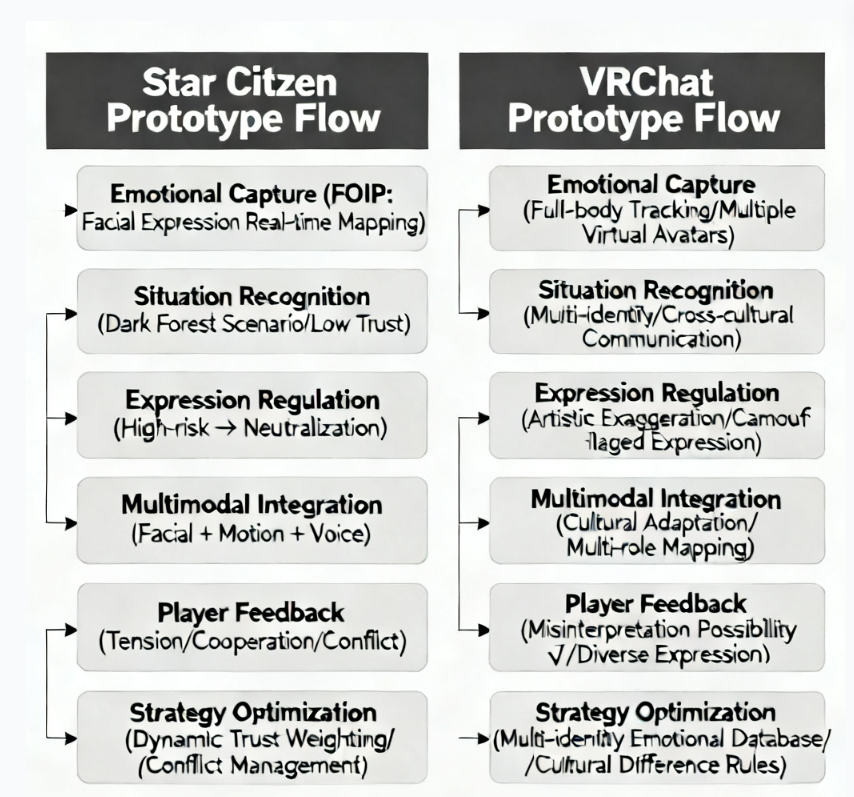

To expand this study, the author designed two different prototypes (Figure 1). The first prototype, based on Star Citizen, explores the connection between high-fidelity facial capture technology and high-risk social situations. Star Citizen uses Face Over Internet Protocol (FOIP) technology to enable high-precision, low-latency mapping of players' facial micro-expressions onto their virtual avatars. This technology allows the study to apply multimodal integration to record players' facial expressions, motion, and voice in real time. More importantly, Star Citizen provides a low-trust, high-risk social environment where players can decide at any time to cooperate with others or to betray them and fight for high-value game resources. In this XR environment, any micro-expression that is easily misinterpreted by others can become a trigger for conflict. The author will use participant observation to follow and document the micro-expressions and movements of ten experienced players during the test. The analysis will focus on high-risk scenarios, such as initial encounters with unfamiliar players and in-game item transactions.

The second prototype, based on VRChat, represents a social experience characterized by high freedom and low risk. Its core feature is its exceptional freedom of expression and exclusivity. Players can customize exaggerated character animations and avatars based on their interests. The study will observe ten players with social experience in the game and place them in public social settings (theme clubs, virtual nightclubs). By using full body tracking and a variety of avatars, it aims to observe how players use exaggerated movements as "identity cards" to connect with other players. This prototype's hypothesis is that exaggerated nonverbal language may lead to misunderstandings among culturally diverse groups. In contrast to the pursuit of realism in Star Citizen, these high-freedom, creative possibilities may result in misinterpretation of emotional cues.

4. Conclusion

Based on the research, the lack of facial micro-expressions can weaken emotional connection in the XR environment. Furthermore, the richer the micro-expressions of a player's avatar, the stronger the player's immersive experience. However, there are limits in social environments that contain competitive and cross-cultural elements. Overly detailed micro-expressions and body language can disrupt empathy and leave players confused. More specifically, in Star Citizen, the study showed that even when high-fidelity facial expression capture (such as the FOIP system) is technically achieved, overly realistic and subtle expressions can become the seeds of misunderstanding in a highly competitive and suspicious "dark forest" environment. In VRChat, on the other hand, deep empathy and immersion can also be achieved through highly stylized, even exaggerated self-expression, as long as it is consistent with the context and the player's identity. For future studies, it is important to recognize that the precision of micro-expressions and movements needs to be balanced to preserve an immersive experience while limiting misunderstanding. Immersion does not derive from visual realism, but from the consistency between expression and identity. Future research can further explore how to enhance this consistency through technological means.

References

[1]. Ekman, P., & Friesen, W. V. (1975). Unmasking the face: A guide to recognizing emotions from facial clues. Prentice-Hall.

[2]. Oh, C., Kruzic, D., Herrera, F., & Bailenson, J. (2020). Facial expressions contribute more than body movements to conversational outcomes in avatar-mediated virtual environments. Scientific Reports, 10(1), 20626. https://doi.org/10.1038/s41598-020-76672-4

[3]. Dzardanova, E., & McDuff, D. (2024). Exploring the impact of non-verbal cues on user experience in immersive virtual reality. Computer Animation and Virtual Worlds, 35(3), e2224. https://doi.org/10.1002/cav.2224

[4]. Linares-Vargas, B. G. P., & McDuff, D. (2024). Interactive virtual reality environments and emotions: A systematic review. Virtual Reality, 28(1), 1–17. https://doi.org/10.1007/s10055-024-01049-1

[5]. Poglitsch, C., Safikhani, S., List, E., & Pirker, J. (2024). XR technologies to enhance the emotional skills of people with autism spectrum disorder: A systematic review. Computer Animation and Virtual Worlds, 35(3), e103942. https://doi.org/10.1002/cav.103942

[6]. Li, P. F., & Wang, X. (2024). Influence of avatar identification on the attraction of virtual spaces. JMIR Formative Research, 8, e56704. https://doi.org/10.2196/56704

[7]. Rizzolatti, G., & Craighero, L. (2004). The mirror-neuron system. Annual Review of Neuroscience, 27, 169–192. https://doi.org/10.1146/annurev.neuro.27.070203.144230

[8]. Hatfield, E., Cacioppo, J. T., & Rapson, R. L. (1993). Emotional contagion. Current Directions in Psychological Science, 2(3), 96–100. https://doi.org/10.1111/1467-8721.ep10770953

[9]. Pan, X., & Hamilton, A. F. D. C. (2018). Why and how to use virtual reality to study human social interaction: The challenges of exploring a new medium. British Journal of Psychology, 109(3), 395–417. https://doi.org/10.1111/bjop.12311

[10]. Picard, R. W. (1997). Affective computing. MIT Press.

Cite this article

Tao, H. (2025). Building Connections Through Micro-Expressions and Body Motion Has an Impact in XR Social Environments: Case Studies of Star Citizen and VRChat. Applied and Computational Engineering, 210, 23-27.

Data availability

The datasets used and/or analyzed during the current study will be available from the authors upon reasonable request.

About volume

Volume title: Proceedings of CONF-MLA 2025 Symposium: Intelligent Systems and Automation: AI Models, IoT, and Robotic Algorithms

© 2024 by the author(s). Licensee EWA Publishing, Oxford, UK. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.
