1. Introduction

The history of computer music synthesis is relatively brief compared to the extensive history of human music, which spans over a thousand years, and the history of modern music, which dates back several centuries. A notable early example of computer-generated music can be traced back to the late 1940s and early 1950s, when Geoff Hill, working in Sydney, Australia, used the CSIR Mk1 computer to produce musical tunes derived from well-known songs [1]. During the late 1950s and early 1960s, subtractive synthesis, a fundamental technique for early electronic synthesizers, was introduced. This method, which was refined throughout the 1960s and 1970s, starts from a harmonically rich waveform, such as a sawtooth or square wave, and passes it through a filter that varies over time. The filter sculpts the desired sound by selectively attenuating or eliminating certain frequency components [2]. Bell Laboratories popularized computer music through its MUSIC I program and a 1963 article in Science [3]. Between 1964 and 1967, Robert (Bob) Arthur Moog developed the Moog synthesizer, often described as the first commercial synthesizer, which used subtractive synthesis and established the template for analog synthesizers. Meanwhile, John Chowning's experiments at Stanford University in 1967 led to the discovery of FM synthesis, in which the frequency of a simple waveform (the carrier) is modulated at audio rates by another waveform (the modulator), producing complex, harmonically rich spectra from as few as two sine waves [4]. Yamaha commercialized FM synthesis with the DX7, the first commercially successful synthesizer to implement frequency modulation; it offered greater sound versatility and became iconic across many music genres in the 1980s [5]. As software and hardware advanced in the 21st century, more production-friendly music software emerged, making computer music generation richer and easier.
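
The two synthesis techniques described above can be sketched in a few lines of Python. This is a minimal, illustrative sketch rather than a model of any historical instrument: the sample rate, the naive sawtooth, and the gentle one-pole low-pass filter are assumptions chosen for brevity (analog synthesizers typically used steeper filters), while `fm` follows the carrier-plus-sinusoidal-modulator form described by Chowning [4].

```python
import math

SAMPLE_RATE = 8000  # samples per second; kept low so the example stays small


def sawtooth(freq, n_samples, sr=SAMPLE_RATE):
    """Naive (non-band-limited) sawtooth wave with values in [-1, 1)."""
    return [2.0 * ((freq * i / sr) % 1.0) - 1.0 for i in range(n_samples)]


def subtractive(freq, n_samples, cutoff_start, cutoff_end, sr=SAMPLE_RATE):
    """Subtractive synthesis: a harmonically rich sawtooth is passed through a
    one-pole low-pass filter whose cutoff sweeps linearly over time."""
    out, y = [], 0.0
    last = max(n_samples - 1, 1)
    for i, x in enumerate(sawtooth(freq, n_samples, sr)):
        cutoff = cutoff_start + (cutoff_end - cutoff_start) * i / last  # Hz
        a = 1.0 - math.exp(-2.0 * math.pi * cutoff / sr)  # smoothing coefficient
        y += a * (x - y)  # components above the cutoff are attenuated
        out.append(y)
    return out


def fm(carrier, modulator, index, n_samples, sr=SAMPLE_RATE):
    """Two-operator FM of the form sin(2*pi*fc*t + I*sin(2*pi*fm*t)):
    a sine modulator at an audio rate varies the phase of a sine carrier,
    and the modulation index I controls how strong the sidebands are."""
    out = []
    for i in range(n_samples):
        t = i / sr
        out.append(math.sin(2 * math.pi * carrier * t
                            + index * math.sin(2 * math.pi * modulator * t)))
    return out


# Usage: a filter sweep from bright to dull, and a two-operator FM tone.
sweep = subtractive(220, 800, cutoff_start=3000, cutoff_end=200)
tone = fm(carrier=440, modulator=110, index=2.0, n_samples=800)
```

Setting `index` to 0 reduces `fm` to a plain sine at the carrier frequency; raising it spreads energy into sidebands spaced at multiples of the modulator frequency, which is what gave FM its timbral range.
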
In recent years, artificial intelligence (AI) music generation methods have evolved rapidly, using machine learning, and deep learning in particular, to analyze extensive datasets of existing music and learn their underlying patterns. Drawing on this learned knowledge, AI systems can generate new musical material, including melodies, harmonies, rhythms, and arrangements, assisting musicians and composers in the creative process while still requiring human involvement and creativity to refine and shape the final compositions [6]. A current example is the popularity of Suno AI, a model that enables music creators to generate their own compositions. Music therapy, by contrast, has existed far longer than algorithmically generated music, although as a professional therapy it is also relatively young. In 1995, music therapy was practiced in around 50 countries. The growth of the profession has been shaped by cultural attitudes towards music, historical contexts, influential figures in music therapy, political and financial factors, educational structures, and healthcare systems. It has become integral to many healthcare systems worldwide but has been criticized for the limited scientific research supporting its effectiveness.

Existing approaches to music generation fall mainly into neural network systems, such as Google's Magenta, and non-neural systems, such as the early GenJam. This section briefly traces the history of computer music generation, whose advances paved the way for current AI-based methods. Early systems such as the Illiac Suite used stochastic models (Markov chains) for composition, while MUSIC I pioneered digital sound synthesis [7]. Max Mathews' MUSIC-N family of programs established the unit-generator approach to sound synthesis, and GROOVE offered real-time interactive composition. Landmark research by John A. Biles in 1994 produced GenJam, an early application of genetic algorithms to generating jazz solos and a pioneering work at the intersection of computer music and artificial intelligence [8]. In 2016, Google's Magenta project showcased the potential of AI music generation by releasing its first AI-generated piano melody [9]. With the rapid development of artificial intelligence, current AI music generation relies heavily on deep learning. First, a large dataset of existing music is collected and preprocessed. Next, a deep learning model, such as a recurrent neural network (RNN) or a generative adversarial network (GAN), is designed and trained on the dataset to learn its underlying patterns. Once trained, the model generates new music by sampling from the learned distribution, optionally guided by input conditions or constraints. Evaluation and refinement then improve the output quality [10]. This approach has enabled more sophisticated and diverse AI-generated music, pushing the boundaries of computer music generation.
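
The generic pipeline above (collect and preprocess data, train, sample, evaluate) can be illustrated with the much simpler stochastic model used in early systems such as the Illiac Suite. The sketch below swaps in a first-order Markov chain for the RNN or GAN; the toy melodies, the function names, and the crude evaluation check are all hypothetical and only show the shape of each step.

```python
import random
from collections import defaultdict

# Step 1, collect and preprocess: hypothetical toy melodies already converted
# to MIDI pitch numbers (60 = middle C).
DATASET = [
    [60, 62, 64, 65, 67, 65, 64, 62, 60],
    [60, 64, 67, 64, 60, 62, 64, 62, 60],
    [67, 65, 64, 62, 60, 62, 64, 65, 67],
]


def train(melodies):
    """Step 2, train: count how often each pitch follows each other pitch."""
    counts = defaultdict(lambda: defaultdict(int))
    for melody in melodies:
        for current, nxt in zip(melody, melody[1:]):
            counts[current][nxt] += 1
    return counts


def sample(model, start, length, seed=None):
    """Step 3, generate: sample each next pitch in proportion to the learned
    counts, conditioned on a chosen starting pitch."""
    rng = random.Random(seed)
    melody = [start]
    while len(melody) < length:
        options = model.get(melody[-1])
        if not options:  # no continuation was observed in the data
            break
        pitches = list(options)
        melody.append(rng.choices(pitches, weights=[options[p] for p in pitches])[0])
    return melody


def transitions_seen(model, melody):
    """Step 4, a crude evaluation: was every generated step observed in training?"""
    return all(model.get(a, {}).get(b, 0) > 0 for a, b in zip(melody, melody[1:]))


model = train(DATASET)
new_melody = sample(model, start=60, length=16, seed=1)
print(new_melody, transitions_seen(model, new_melody))
```

A deep model replaces the transition table with learned parameters that can capture much longer-range structure, but the train-then-sample loop is the same.
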

Depression is a serious problem for the current generation, especially among adolescents. According to the World Health Organization, approximately one in seven adolescents aged 10 to 19 (about 14%) worldwide experiences a mental health condition, yet these conditions often go unrecognized and, consequently, untreated. Approximately 3.8% of the general population is affected by depression, including 5% of adults, with a difference between genders (4% of men and 6% of women), and a higher rate of 5.7% among adults over the age of 60. Medication combined with psychiatric counselling is one of the most common treatment combinations for depression, and psychotherapy has also been found to be effective. However, some people with depression are reluctant to communicate with psychotherapists, which makes verbal therapy difficult and ineffective. In such cases, effective non-verbal therapies, such as music therapy, become very important [11]. Music has been found to offer an alternative way of getting in touch with emotions [12]. Recent research, including randomized controlled trials (RCTs), a comprehensive Cochrane systematic review [13], and a meta-analysis [14], supports the efficacy of music therapy, particularly for severe mental health disorders. These findings point to music therapy as a valuable option for improving depressive symptoms in individuals facing significant mental health challenges, and one that holds promise for clinical practice. Whether assisting therapists or independently generating therapeutic music, AI generation has been shown to be highly viable [15].
This points to computer-generated music therapy, an efficient and highly flexible tool, as a way to address the difficult problem raised above: alleviating depressive symptoms in adolescents. The rest of this article reviews and evaluates existing treatments.

The subsequent sections of this paper will sequentially introduce fundamental aspects of music in mental health treatment, elucidate established principles of music therapy, review recent applications of therapeutic methods, analyze the limitations and deficiencies of existing therapeutic modalities, and offer an outlook on potential future developments. This comprehensive framework aims to provide a structured exploration of music therapy's role in mental health care, underscoring the significance of music in therapeutic settings. By examining foundational concepts, theoretical underpinnings, practical applications, and emerging challenges, this paper seeks to contribute to a deeper understanding of how music can be leveraged effectively in promoting well-being and addressing mental health disorders. Furthermore, it will highlight the transformative potential of AI-driven music interventions, emphasizing the importance of interdisciplinary collaboration, ethical considerations, and innovative approaches to enhance personalized treatment strategies for individuals with depression and other mental health conditions.

2. Basic description

Music therapy is one of the creative arts therapies; it involves creating musical experiences and fostering client-therapist relationships that facilitate therapeutic change [16]. According to the World Federation of Music Therapy (WFMT), music therapy is the therapeutic use of music and its elements in diverse settings, including medical, educational, and community contexts. Its objective is to improve the well-being and quality of life of individuals, groups, families, or entire communities, taking a holistic view of the many factors involved. Music therapy can be divided into two categories: receptive and active. Receptive music therapy involves attentive listening to music. The music can come from either the client or the therapist, and it can be live or recorded, composed or improvised, and can include commercial music of any genre. Active music therapy, on the other hand, entails making music, whether by playing or singing pre-composed music, improvising, or composing original music. The main way AI music generation can help the psychotherapeutic process is through collaboration with therapists, and this mechanism of collaboration is supported by evidence and strong arguments. Research has shown that AI-generated music can enhance therapeutic interventions by providing diverse, personalized compositions tailored to individual client needs. Studies have demonstrated the effectiveness of AI music in improving emotional expression, reducing negative emotions, and promoting relaxation. Automating certain tasks with AI also saves therapists time, allowing them to focus more on the therapeutic relationship. Additionally, integrating AI music can enrich the therapeutic process by offering new avenues for creative expression and exploration [17].
Looking more closely at human-AI collaboration, one study proposes a computer-based analysis of clinical improvisations that identifies individual musical profiles by extracting and analyzing relevant musical parameters. Although the study does not discuss specific therapeutic effects for treating depression in detail, music therapy has shown promise in enhancing cognitive function, emotional well-being, motor skills, social interaction, and overall quality of life. Its effectiveness depends on the specific client population and therapeutic approach. Further research is needed to establish the efficacy of this method in treating depression and other clinical conditions.
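
The study's specific parameters are not listed here, so the following is only a hypothetical illustration of what extracting musical parameters from an improvisation could look like. It assumes the improvisation has already been transcribed into notes (onset, duration, MIDI pitch, velocity) and computes a few simple descriptors; the `Note` class and the chosen features are assumptions, not the method of the cited work.

```python
from dataclasses import dataclass
from statistics import mean, pstdev


@dataclass
class Note:
    onset: float     # seconds from the start of the improvisation
    duration: float  # seconds
    pitch: int       # MIDI note number (60 = middle C)
    velocity: int    # MIDI velocity 1-127, a rough proxy for loudness


def musical_profile(notes):
    """Summarise one improvisation with a few illustrative descriptors."""
    if not notes:
        raise ValueError("need at least one note")
    pitches = [n.pitch for n in notes]
    velocities = [n.velocity for n in notes]
    span = max(n.onset + n.duration for n in notes) - min(n.onset for n in notes)
    return {
        "mean_pitch": mean(pitches),
        "pitch_range": max(pitches) - min(pitches),              # semitones
        "note_density": len(notes) / span if span > 0 else 0.0,  # notes per second
        "mean_velocity": mean(velocities),
        "velocity_spread": pstdev(velocities),
    }


improvisation = [Note(0.0, 0.5, 60, 64), Note(0.5, 0.5, 64, 80), Note(1.0, 1.0, 67, 96)]
print(musical_profile(improvisation))
```

Profiles like this, computed per session, are one way a therapist could compare a client's playing over time.
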

3. Principles

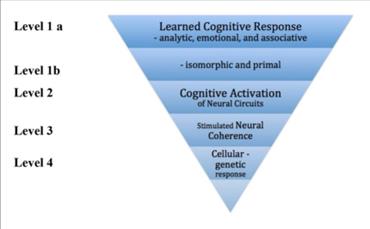

One current explanation of the mechanism of music therapy is that it activates brain areas involved in memory and autobiographical processes, resulting in functional changes and improved brain function [18]. Another mainstream explanation attributes its effects to synchronization and emotional resonance [19]. However, there is no definitive rationale for explaining and modelling the efficacy of music therapy, and categorizing responses to music therapy into the following levels is currently a more objective way of explaining it [20]. The four levels are as follows:

Level 1: Learned cognitive response - This level involves the learned associations and cultural context of music, where specific music can evoke emotional responses based on past experiences. It includes both conscious and unconscious learning processes, such as early exposure to music in infancy [21, 22].

Level 2: Neural circuitry activation - Music activates different neural circuits in the brain, which can have therapeutic effects on various cognitive processes, such as language, movement, and memory. Neurologic Music Therapy uses these neural mechanisms to facilitate rehabilitation [23].

Level 3: Neural oscillatory coherence - This level focuses on neural synchronization, or coherence, in which rhythmic stimulation of the senses (auditory, visual, tactile) can increase the synchrony of neuronal firing. This entrainment of neural activity can have beneficial effects [24].

Level 4: Cellular-level mechanisms - At this level, music and sound can activate mechanisms in cells ranging from neurons to bone cells to blood cells. These cellular responses to music may affect various physiological processes (see Fig. 1) [25].

Figure 1: Categories of responses to music therapy [25].
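
The entrainment idea in Level 3 is often illustrated with a phase-oscillator model. The sketch below uses a textbook sinusoidally coupled phase oscillator (an Adler-type equation); this choice is an assumption for illustration, not the specific model of [24]. An internal oscillator with its own natural rate is driven by a periodic stimulus, and it locks to the stimulus only when the coupling is strong enough relative to the mismatch in rates.

```python
import math


def slip_rate(natural_hz, stimulus_hz, coupling, seconds=20.0, dt=1e-3):
    """Forward-Euler simulation of a phase oscillator driven by a rhythmic
    stimulus theta(t) = 2*pi*stimulus_hz*t:

        d(phi)/dt = 2*pi*natural_hz + coupling * sin(theta - phi)

    Returns the mean rate (in Hz) at which the oscillator's phase slips
    relative to the stimulus over the second half of the run. A value near 0
    means the oscillator has phase-locked (entrained) to the rhythm."""
    steps = int(round(seconds / dt))
    half = steps // 2
    phi = 0.0
    psi_half = 0.0
    for step in range(steps):
        theta = 2 * math.pi * stimulus_hz * step * dt
        phi += dt * (2 * math.pi * natural_hz + coupling * math.sin(theta - phi))
        if step + 1 == half:  # phi now corresponds to time half * dt
            psi_half = 2 * math.pi * stimulus_hz * half * dt - phi
    psi_end = 2 * math.pi * stimulus_hz * steps * dt - phi
    return (psi_end - psi_half) / (2 * math.pi * (steps - half) * dt)


# A 2.0 Hz internal rhythm driven by a 2.2 Hz beat:
print(slip_rate(2.0, 2.2, coupling=3.0))  # close to 0: entrained
print(slip_rate(2.0, 2.2, coupling=0.5))  # clearly above 0: drifting
```

For this equation, locking occurs when the coupling exceeds the angular frequency mismatch, here 2π × 0.2 ≈ 1.26 rad/s, which is why a coupling of 3.0 entrains and 0.5 does not.
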

4. Applications

The study by Williams, Hodge, and Wu used a careful experimental design to evaluate the impact of AI-generated music on emotional states, with a particular focus on its potential benefits for individuals with depressive symptoms. A total of 53 participants took part in an online survey. Participants were exposed to four musical excerpts: two calm or positive (N1 and N2) and two anxious or negative (S1 and S2). Each participant ranked the excerpts in a bipolar fashion across all six possible pairs, yielding 318 pairwise comparisons (6 pairs × 53 participants). This process allowed the researchers to capture nuanced variations in emotional responses to different musical stimuli. The excerpts were analyzed using a dual representation that combined symbolic data from MIDI files with Mel-Frequency Cepstral Coefficients (MFCCs) computed from the audio signal. This analysis produced a multi-dimensional feature set for each piece, which then underwent feature selection to identify the features most predictive of emotional response. A supervised learning model trained on these features was then used to generate new musical sequences. The model's predictions were validated against the participants' self-reported emotional responses, collected through a post-listening questionnaire. The questionnaire responses were scored using the DES (Depression, Emotion, and Stress) evaluation, providing a standardized measure of emotional state. Physiological data collected via galvanic skin response (GSR) sensors revealed notable changes in skin conductance in response to the musical stimuli. For instance, 58.1% of participants exhibited a higher GSR response to the more negative stimulus (S2) than to the less negative stimulus (S1). Conversely, for the positive stimuli, 54.6% of participants rated N1 as more negative than N2, despite N1 having the lowest reported positivity.
These numerical findings point to an interaction between musical features and listeners' emotional responses, both in self-reported feelings and in physiological arousal as measured by GSR. The results suggest that AI-generated music could be systematically tailored to influence emotional states, offering a promising avenue for therapeutic applications in managing depression.
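
The count of 318 comparisons follows from choosing 2 of the 4 excerpts (C(4, 2) = 6 pairs) for each of the 53 participants. The sketch below enumerates the pairs and tallies simple win counts; the response format, the assumption that each comparison reduces to picking the more positive excerpt, and the synthetic data are all hypothetical and are not the study's data or analysis.

```python
from collections import Counter
from itertools import combinations

EXCERPTS = ["N1", "N2", "S1", "S2"]
PAIRS = list(combinations(EXCERPTS, 2))  # C(4, 2) = 6 unordered pairs


def tally(responses):
    """Count how often each excerpt was judged the more positive member of a
    pair. `responses` maps participant id -> {pair: chosen excerpt}."""
    wins = Counter({e: 0 for e in EXCERPTS})
    n_comparisons = 0
    for choices in responses.values():
        for pair in PAIRS:
            chosen = choices[pair]
            if chosen not in pair:
                raise ValueError(f"{chosen!r} is not part of {pair}")
            wins[chosen] += 1
            n_comparisons += 1
    return wins, n_comparisons


# Hypothetical data: all 53 participants share one preference order.
ORDER = {"N2": 0, "N1": 1, "S1": 2, "S2": 3}  # lower = judged more positive
responses = {p: {pair: min(pair, key=ORDER.get) for pair in PAIRS} for p in range(53)}

wins, n = tally(responses)
print(n)                   # 318, i.e. 6 pairs x 53 participants
print(wins.most_common())  # [('N2', 159), ('N1', 106), ('S1', 53), ('S2', 0)]
```

Real responses would disagree across participants; win counts are the simplest aggregate, and paired-comparison models such as Bradley-Terry are a common next step.
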

Depression affects both mental and physical health, and music therapy may not only relieve depression but also improve overall mental health, which suggests it could help reduce the incidence of depression. The research by Modran et al. investigates the potential therapeutic effects of music on mental health, focusing specifically on classifying emotional responses to music using deep learning techniques. Its objective is to build a machine learning model that can predict the potential therapeutic value of particular musical pieces for individuals, taking into account their emotional attributes, the specific characteristics of the music, and the inclusion of solfeggio frequencies. In the experiment, a multi-class neural network was used to classify songs into four emotion classes: "energetic," "calm," "happy," and "sad." The model was trained on a subset of the Million Song Dataset, a large collection of audio features and metadata for a wide range of contemporary popular music tracks. The network consisted of an input stage that combined various audio features, followed by a deep, fully connected hidden layer and an output stage with a Softmax activation function that produced the classification results.
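
A forward pass through the kind of network described above (audio features in, a fully connected hidden layer, a four-way Softmax out) can be sketched in plain Python. The layer sizes, the ReLU hidden activation, the random untrained weights, and the five input features are assumptions; the study's actual architecture details and Million Song Dataset features are not reproduced here.

```python
import math
import random

CLASSES = ["energetic", "calm", "happy", "sad"]


def dense(v, weights, bias):
    """Fully connected layer: one weight row per output unit."""
    return [sum(w * x for w, x in zip(row, v)) + b for row, b in zip(weights, bias)]


def relu(v):
    return [max(0.0, x) for x in v]


def softmax(z):
    m = max(z)  # subtracting the max avoids overflow in exp
    exps = [math.exp(x - m) for x in z]
    total = sum(exps)
    return [e / total for e in exps]


def init_layer(n_in, n_out, rng):
    """Small random weights and zero biases (untrained)."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def predict(features, params):
    """Audio features -> fully connected hidden layer (ReLU) -> 4-way softmax."""
    (w1, b1), (w2, b2) = params
    hidden = relu(dense(features, w1, b1))
    return dict(zip(CLASSES, softmax(dense(hidden, w2, b2))))


rng = random.Random(0)
params = (init_layer(5, 16, rng), init_layer(16, len(CLASSES), rng))
probs = predict([0.2, 0.8, 0.5, 0.1, 0.9], params)  # hypothetical scaled features
print(max(probs, key=probs.get))  # top class of an untrained network (not meaningful)
```

Training would adjust the weights, typically by minimizing cross-entropy between these probabilities and the labelled emotion classes.
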

During training, features were normalized with a MinMaxScaler, and K-fold cross-validation with K = 10 was used to ensure the robustness and generalizability of the model. The model was trained on 8000 samples and achieved an overall accuracy of 91.49%. Evaluation with a confusion matrix yielded a final accuracy score of 94%. Notably, the model classified "calm," "happy," and "energetic" songs with high accuracy, with slightly lower accuracy (85%) for "sad" songs. The study also developed a web application that lets users input their preferred music genre and current mood, so that the model can predict the potential therapeutic effect of selected songs. When a song was deemed unsuitable, the application recommended alternative music that might better suit the user's emotional state. Although the study did not specifically target depression, the results suggest that AI-based music analysis could contribute to emotional regulation and mental health support. The model's high emotion-classification accuracy, on which its predictions of therapeutic effect are based, underscores its potential utility in music therapy applications, including potential support for individuals experiencing depressive symptoms. This research lays the groundwork for further exploration of AI-driven music analysis as a tool for personalized music recommendations aimed at enhancing mental well-being.
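
The evaluation steps mentioned above (min-max normalization, 10-fold cross-validation, and a confusion matrix) can be sketched without external libraries. The helper names are hypothetical; `min_max_scale` applies the same formula as scikit-learn's `MinMaxScaler`, `k_fold_indices` uses contiguous, unshuffled folds for simplicity, and reading per-class figures such as the 85% for "sad" as per-class recall is an assumption.

```python
def min_max_scale(rows):
    """Rescale every feature column to [0, 1]: x' = (x - min) / (max - min).
    Constant columns are mapped to 0 to avoid dividing by zero."""
    cols = list(zip(*rows))
    lo = [min(c) for c in cols]
    hi = [max(c) for c in cols]
    return [[(x - l) / (h - l) if h > l else 0.0 for x, l, h in zip(row, lo, hi)]
            for row in rows]


def k_fold_indices(n, k=10):
    """Yield (train, test) index lists for k contiguous folds whose sizes
    differ by at most one; every index is tested exactly once."""
    start = 0
    for i in range(k):
        size = n // k + (1 if i < n % k else 0)
        test = list(range(start, start + size))
        train = list(range(start)) + list(range(start + size, n))
        yield train, test
        start += size


def confusion_matrix(y_true, y_pred, labels):
    """Rows are true classes, columns are predicted classes."""
    index = {label: i for i, label in enumerate(labels)}
    m = [[0] * len(labels) for _ in labels]
    for t, p in zip(y_true, y_pred):
        m[index[t]][index[p]] += 1
    return m


def accuracy(m):
    """Fraction of predictions on the diagonal (true class == predicted)."""
    return sum(m[i][i] for i in range(len(m))) / sum(map(sum, m))


def per_class_recall(m):
    """For each true class, the fraction of its samples predicted correctly."""
    return [row[i] / sum(row) if sum(row) else 0.0 for i, row in enumerate(m)]


# With 8000 samples and K = 10, each fold holds out 800 samples.
folds = list(k_fold_indices(8000, k=10))
print([len(test) for _, test in folds])  # [800, 800, 800, 800, 800, 800, 800, 800, 800, 800]
```

In a full pipeline, the scaling minimum and maximum would usually be computed on each training fold only and then applied to the held-out fold, so that no information from the test data leaks into training.
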

5. Conclusion

To sum up, the current research in AI-generated music therapy faces several limitations. Firstly, the reliance on machine learning models trained on existing music datasets may introduce biases and limitations in the representation of diverse musical styles and emotional contexts. These models often prioritize popular or commercially successful music, potentially overlooking culturally specific or niche musical expressions that could have unique therapeutic value for certain populations. Secondly, the evaluation of therapeutic effects based solely on emotional classification may oversimplify the complex interactions between music, emotions, and mental health. Emotional responses to music are highly subjective and can vary widely among individuals based on personal experiences, cultural backgrounds, and contextual factors. Existing AI models may struggle to capture and respond to this nuanced variability in emotional expression and interpretation. Furthermore, the generalizability of AI-generated music therapy interventions across diverse populations and clinical contexts remains uncertain. Music preferences and therapeutic responses are deeply personal and can be influenced by individual differences in personality, cognitive abilities, and psychosocial factors. Tailoring AI-generated interventions to accommodate these variations effectively requires ongoing refinement and adaptation of machine learning algorithms. Lastly, ethical considerations surrounding the use of AI in therapeutic settings warrant careful attention. The use of AI-generated music raises questions about privacy, informed consent, and the potential for unintended consequences or biases in algorithmic decision-making. Ensuring transparency, accountability, and ethical oversight in the development and deployment of AI-driven music therapy interventions is essential for safeguarding the well-being of individuals receiving such treatments.

Looking ahead, AI-driven music therapy holds great promise for advancing mental health interventions, especially for conditions such as depression. Future research should focus on addressing the key limitations above and exploring innovative applications. Efforts should prioritize developing inclusive and culturally sensitive AI models through collaboration between AI researchers, music therapists, and cultural experts. This approach would help ensure that datasets reflect diverse musical traditions and emotional expressions, enhancing therapeutic applicability. Advances in neuroscientific research can inform AI system designs that better mimic the neurobiological mechanisms underlying music perception and emotional regulation. Integrating insights from cognitive neuroscience and affective computing can make AI-generated music therapy interventions more precise. Exploring multimodal technologies, such as virtual reality and biofeedback systems, within AI-driven music therapy platforms can optimize therapeutic impact and personalization. Lastly, enhancing the transparency, interpretability, and ethical governance of AI algorithms is crucial for realizing the transformative potential of AI-driven music therapy in mental health care. Embracing responsible AI practices and interdisciplinary collaboration could transform personalized treatment strategies for individuals with depression and other mental health conditions.

References

[1]. Doornbusch P 2017 Organised Sound vol 22(2) pp 297–307

[2]. Pekonen J and Välimäki V 2011 Proc. 6th Forum Acusticum pp 461–466

[3]. Bogdanov V 2001 All Music Guide to Electronica: The Definitive Guide to Electronic Music (Backbeat Books) p 320

[4]. Chowning J M 1973 Journal of the Audio Engineering Society vol 21(7) pp 526–534

[5]. Johnstone R 1994 The sound of one chip clapping: Yamaha and FM synthesis (MIT thesis)

[6]. Civit M, Civit-Masot J, Cuadrado F and Escalona M J 2022 Expert Systems with Applications vol 209 p 118190

[7]. Briot J P 2020 Neural Computing and Applications vol 33(1) pp 39–65

[8]. Biles J A 1994 Proceedings of the International Computer Music Conference pp 131–137

[9]. Miller A 2019 Project Magenta: AI creates its own music, in The Artist in the Machine: The World of AI-Powered Creativity (MIT Press)

[10]. Briot J P and Pachet F 2017 Music generation by deep learning: challenges and directions arXiv preprint arXiv:1712.04371

[11]. ja Suomen S L D 2004 Duodecim vol 120 pp 744–764

[12]. De Backer J 2008 Nordic Journal of Music Therapy vol 17 pp 89–104

[13]. Maratos A S, Gold C, Wang X and Crawford M J 2008 Cochrane Database of Systematic Reviews vol 1 p CD004517

[14]. Gold C, Solli H, Krüger V and Lie S 2009 Clinical Psychology Review vol 29 pp 193–207

[15]. Rahman J S, Gedeon T, Caldwell S, et al. 2021 Journal of Artificial Intelligence and Soft Computing Research vol 11

[16]. Punkanen M 2011 Improvisational music therapy and perception of emotions in music by people with depression (University of Jyväskylä)

[17]. Sun J, Yang J, Zhou G, Jin Y and Gong J 2024 Understanding Human-AI Collaboration in Music Therapy Through Co-Design with Therapists arXiv preprint arXiv:2402.14503

[18]. Feng K, Shen C Y, Ma X Y, et al. 2019 Psychiatry Research vol 275 pp 86–93

[19]. Aalbers S, Vink A, Freeman R E, et al. 2019 Arts in Psychotherapy vol 65 pp 1–11

[20]. Clements-Cortes A and Bartel L 2018 Frontiers in Medicine vol 5 p 255

[21]. Standley J M 2008 Journal of Research in Music Education vol 56(1) pp 44–59

[22]. Partanen E, Kujala T, Näätänen R, et al. 2013 Proceedings of the National Academy of Sciences vol 110(37) pp 15145–15150

[23]. Thaut M H and Hoemberg V 2014 Handbook of Neurologic Music Therapy (Oxford University Press)

[24]. Large E W and Jones M R 1999 Psychological Review vol 106(1) pp 119–159

[25]. Koelsch S 2014 Nature Reviews Neuroscience vol 15(3) pp 170–180

Cite this article

Chen, H. (2024). The Possible Effects of Music Therapy Based on Computer Generation on Depression. Lecture Notes in Education Psychology and Public Media, 54, 190-196.

Data availability

The datasets used and/or analyzed during the current study will be available from the authors upon reasonable request.

Disclaimer/Publisher's Note

The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of EWA Publishing and/or the editor(s). EWA Publishing and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

About volume

Volume title: Proceedings of the 5th International Conference on Education Innovation and Philosophical Inquiries

© 2024 by the author(s). Licensee EWA Publishing, Oxford, UK. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license. Authors who publish this series agree to the following terms:

1. Authors retain copyright and grant the series right of first publication with the work simultaneously licensed under a Creative Commons Attribution License that allows others to share the work with an acknowledgment of the work's authorship and initial publication in this series.

2. Authors are able to enter into separate, additional contractual arrangements for the non-exclusive distribution of the series's published version of the work (e.g., post it to an institutional repository or publish it in a book), with an acknowledgment of its initial publication in this series.

3. Authors are permitted and encouraged to post their work online (e.g., in institutional repositories or on their website) prior to and during the submission process, as it can lead to productive exchanges, as well as earlier and greater citation of published work (See Open access policy for details).
