1. Introduction

Artificial intelligence (AI) plays an increasingly important role in shaping human interaction, decision-making, and ethical reasoning in today's rapidly developing technological environment. As AI systems are integrated into healthcare, law enforcement, and personal decision-making, the ethical challenges of human-AI cooperation have intensified. Under the influence of AI, the moral dilemmas that people face, especially conflicts among family, property, and fairness, have become more complex. In medicine, for example, AI can improve the accuracy of diagnosis and the efficiency of treatment, but its use must not infringe on patients' rights or cause accidental harm [1]. Such dilemmas usually involve decisions that must balance personal and social morality.

This article examines moral dilemmas in human society, particularly those that set family loyalty against fairness, which offer a key entry point for understanding broader psychological and moral challenges. Work in this area studies how individuals choose when moral principles conflict, such as loyalty to the family versus compliance with laws and ethical norms. The participation of AI in these situations, whether in an advisory role, in automated law enforcement, or in predictive analysis, adds a new dimension to the debate and highlights the interaction between human morality and machine-based decision-making systems.

Existing research on moral dilemmas usually centers on the psychological factors that affect human decision-making. Research shows that when protecting loved ones, individuals tend to prioritize family loyalty over fairness, even at the expense of personal or social justice. An experimental study in the Journal of Personality and Social Psychology confirmed that people usually favor members of their own group, especially close relatives [2]. As AI systems increasingly support decision-making, scholars have also explored the potential of AI to exacerbate or alleviate moral conflicts. One book chapter argues that realism is consistent with a moral view of artificial intelligence and presents such AI as a necessary means of alleviating these conflicts, while cautioning that it may also prove utopian; in the authors' words, it would be "a clear moral agent", a "human 2.0" [3].

This study extends the exploration of moral dilemmas to interaction between humans and AI. By investigating how individuals handle moral dilemmas involving family loyalty and fairness, and how their responses change when AI intervenes, the study provides new insights into the psychological and moral consequences of AI's role in society. Its novelty lies in treating AI as a variable in a traditional human moral dilemma, which offers a new perspective on how technology shapes moral decision-making.

2. The Role of Artificial Intelligence in Human Decision-Making

Artificial intelligence plays a significant role in assisting human decision-making. For example, research on the game of Go shows that since the AI Go Program (APG) defeated top human players in 2016, the quality of play among human players has improved significantly. An analysis of 750,990 moves in 25,033 games showed that APG reduced errors and major mistakes among 1,242 professional players. The improvement is most pronounced in the early stage of a game, when uncertainty is high. Younger players benefit more from APG because they are more adept at accepting and using new technologies [4].

This study suggests that AI can improve the quality of human decision-making not only in Go but also in a wider range of fields. In medical care, finance, and logistics, for example, AI algorithms can analyze massive amounts of data and recommend a course of action, reducing bias and error in human decision-making. The integration of AI can also help individuals make more rational decisions, particularly in situations marked by high uncertainty and complexity. However, studies indicate that access to and adoption of AI technology are uneven, with significant differences by age and by individuals' openness to new technologies. Such disparities can allow certain groups to monopolize the benefits of the technology, potentially deepening existing social inequalities and contributing to broader social injustice.

3. Research Problems and Methods

This study aims to solve the following problems:

1. When family loyalty conflicts with social equity, how do individuals deal with moral dilemmas?

2. Will the participation of artificial intelligence in the decision-making process affect the choice of individuals in moral dilemmas, especially when there is a conflict between family and fairness?

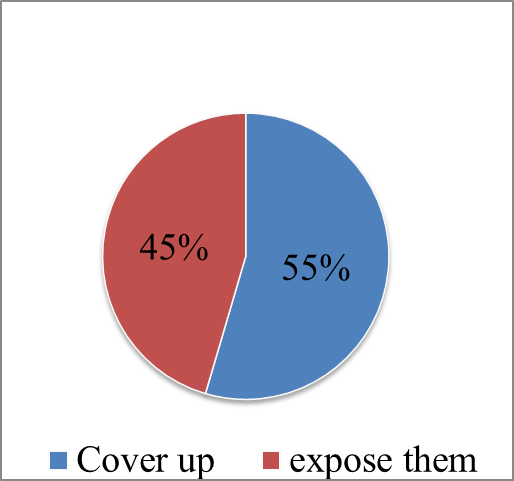

A survey was conducted with 117 participants who were presented with a question involving a moral dilemma. The results indicated that 66 participants chose to shelter their family members, while 58 participants opted to expose them. Although the difference is minimal, the data suggest a slight preference for protecting family members in such situations, as illustrated in Figure 1.

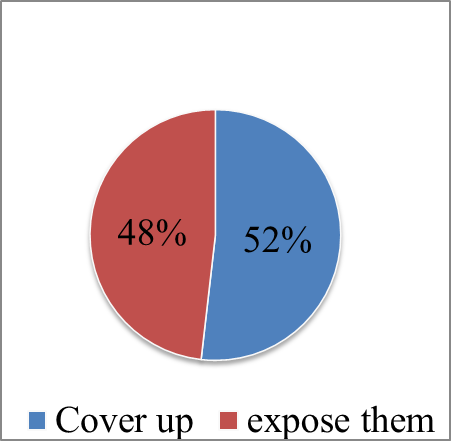

Subsequently, the same question was posed to ChatGPT, which provided an objective response emphasizing the importance of adhering to legal principles. The AI suggested encouraging family members to surrender voluntarily to mitigate legal risks associated with harboring them. It also emphasizes the importance of finding a balance between morality and emotion. I share the answers of ChatGPT with all participants to observe whether the answers of artificial intelligence will affect their judgment. However, as shown in Figure 2, the results demonstrated that the answers of artificial intelligence did not significantly alter participants' positions, with only two participants changing their original decisions.

Figure 1: Distribution of participants’ responses to the moral dilemma

This shows that in the face of this complex moral dilemma, people's choices are more driven by their inner emotions and moral concepts than by external objective suggestions. This study has contributed to the field of moral psychology and the emerging field of artificial intelligence ethics by providing

Figure 2: Influence of AI suggestions on participants’ moral decisions

Empirical data on how humans deal with moral dilemmas involving family and equity in the presence of artificial intelligence systems. These findings highlight the tension between human emotional bonds and objective legal and moral frameworks and provide new insights into how artificial intelligence can change this balance. Research results show that although artificial intelligence can be guided according to legal norms, human emotional attachment to family is often superior to these objective assessments, especially in less serious crimes.
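As a rough check on how small the reported difference is, the survey counts can be run through an exact two-sided binomial test against an even split. The sketch below is illustrative only and is not part of the original analysis. The function name `two_sided_binomial_p` is hypothetical, and the code takes the reported counts at face value: they sum to 124 rather than the stated 117 participants, so the denominators used here are assumptions.

```python
from math import comb


def two_sided_binomial_p(k: int, n: int) -> float:
    """Exact two-sided p-value for k successes in n trials under p = 0.5."""
    # With p = 0.5 the distribution is symmetric around n / 2, so the
    # two-sided p-value is the probability of any outcome at least as far
    # from n / 2 as the observed count k.
    distance = abs(k - n / 2)
    extreme = sum(comb(n, i) for i in range(n + 1) if abs(i - n / 2) >= distance)
    return extreme / 2 ** n


shelter, expose = 66, 58          # counts as reported in the text
responses = shelter + expose      # 124, not the stated 117 (assumption: use the sum)
share_shelter = shelter / responses
p_value = two_sided_binomial_p(shelter, responses)

changed = 2                       # participants who changed their decision
change_rate = changed / 117       # assumption: stated participant count as denominator

print(f"share choosing to shelter: {share_shelter:.3f}")
print(f"two-sided p-value vs. an even split: {p_value:.3f}")
print(f"share who changed after the AI answer: {change_rate:.3f}")
```

Under these assumptions, a p-value well above conventional thresholds would be consistent with the text's description of the preference as slight.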

4. Artificial Intelligence and Morality

With the rise of disruptive technologies such as big data and AI in the Fourth Industrial Revolution, discussions of AI’s role in human morality have intensified [5]. As the research article "Human-computer Ethical Decision-Making" points out, AI not only plays an auxiliary role in moral decision-making but may also become an important factor that influences and shapes human judgment [6]. However, as the survey results show, even when AI offers objective and rational advice on a family matter, people remain emotionally driven to protect their family members rather than follow the legal and ethical norms that AI sets out. This reveals the important role of emotion in moral decision-making and echoes the conclusion of Malle et al. that people tend to make choices based on emotional and social ties rather than pure rationality. Although AI systems can provide relatively objective advice grounded in legal and ethical frameworks, their actual impact on human behavior is limited. Even when AI weighs a range of moral factors in its advice, that advice cannot shake deeply held moral choices where family feeling is involved. The influence of AI as a tool or aid for moral judgment may therefore be offset by inherent human emotional tendencies.

This study also points to the potential limitations of AI in the field of ethics. Although AI's participation can raise human awareness of moral judgment to some extent, it cannot replace or completely change human judgment rooted in emotional and cultural background. A few years ago, the Allen Institute for Artificial Intelligence developed a chatbot called Delphi to judge whether behavior is right or wrong. Delphi performs well on straightforward issues; for instance, it labels 'exam cheating' as 'wrong' but finds 'cheating to save a life' 'acceptable'. It recognizes that using a lawn mower while the neighbor is sleeping is impolite but that doing so is fine if the neighbor is not at home. However, this kind of AI also has limitations. As cognitive scientist Tomer Ullman pointed out, a few misleading adverbs are enough to make its judgments go wrong. When asked to judge "gently and sweetly pressing the pillow on the face of a sleeping baby," Delphi replied, "This is allowed" [7]. This shows that AI's judgment is still flawed and unstable when faced with complex moral dilemmas. The inaccuracy stems mainly from AI's reliance on pre-trained data sets and its limited ability to understand context. Even if AI can simulate some degree of moral judgment, it remains unable to make thoughtful decisions in complex emotional and cultural contexts as human beings do. In ethical and moral decision-making, AI should therefore be regarded as an auxiliary tool rather than the primary basis for final judgments. Its role should be to provide supportive insights and perspectives, helping individuals consider various aspects of a dilemma without replacing human judgment or moral responsibility for the ultimate decision.

5. Moral Risk Factors in Artificial Intelligence Decision-Making

The article "Artificial Intelligence and Moral Dilemmas: Perception of ethical decision-making in AI" notes that decisions in moral dilemmas can be divided into utilitarian and deontological (duty-based) ones. It predicts that “emotional decision-makers”, who show high emotional engagement but limited analytical ability, are more likely to refuse to cause harm and to decide on the basis of responsibilities and obligations. In contrast, “cognitive decision-makers” show lower emotional engagement but higher analytical ability. If a harmful act brings greater benefits, they tend to accept the harm, which leads them to make more utilitarian decisions. This contrast highlights the different approaches of emotion-driven and cognition-driven individuals, so differences in individual cognition lead to different decisions. Compared with human beings, however, AI is more likely to be regarded as a less empathetic and more result-oriented entity [8]. In addition, because AI is good at using big data to solve specific problems, it faces more limitations on more complex moral problems. For example, as James Gondola pointed out in the article "AI and the Ethics of Decision-Making in Business," AI systems find it difficult to deal with problems that rely on scarce data or that require human intuition and experience. In many complex decision-making situations, emotional and moral factors play a key role, and machines cannot fully understand or explain them [9]. Although AI can make accurate calculations and predictions based on data, it cannot take part in judgments about emotions and values.

This means that when it comes to ethical judgment, AI depends on the training data it receives, which may contain biased or mistaken criteria, further limiting its application to complex ethical issues. Although AI can greatly improve the efficiency of decision-making in certain fields, it still shows obvious shortcomings in dealing with complex and emotional moral problems. AI should therefore be regarded as a tool, not an all-purpose replacement for human intuition and moral judgment. In decisions involving deep ethical issues, human emotions and values remain indispensable.

6. Prospects For Future Development

Future research should explore the integration of human emotions and cultural backgrounds into AI systems to improve their support in complex moral dilemmas. Researchers are currently working to develop AI that can identify and process emotions. Such systems can analyze human expression, language, and behavior and respond to the emotions they detect. For example, AI can analyze user-generated content (such as text and images) to identify emotional expressions [10]. However, AI's ability to deal with human emotions is still limited, especially on moral issues. It cannot make in-depth judgments based on culture, emotions, and values as human beings do [11].

With further development of these technologies, AI may better understand and handle complex emotional conflicts and provide more humane decision-making support in health care, psychological counseling, and other fields. However, this development also brings new moral challenges, such as privacy issues and possible social inequality. Future research should therefore not only focus on improving AI's emotion recognition but also establish a sound moral framework to ensure that these technologies have a positive social impact [11].

7. Conclusion

In summary, this research and the existing literature show that when humans face complex moral dilemmas, they still rely heavily on emotional ties and personal moral concepts. In moral matters, AI acts more as an auxiliary tool than as the ultimate decision-making authority. This poses new challenges and directions for the future development of AI in the field of ethics. As AI continues to evolve, it will be essential to explore how human emotional intelligence and cultural differences influence the way we interact with and trust these technologies. Moreover, the integration of moral reasoning into AI systems will require careful consideration of ethical frameworks that account for both universal and context-specific values. Moving forward, interdisciplinary collaboration between ethicists, psychologists, and AI developers will be crucial to ensuring that AI not only assists but also respects human autonomy in making moral decisions. Ultimately, a nuanced understanding of the relationship between human morality and AI will shape the future landscape of both technology and ethics.

References

[1]. Herring, J. (2020). 1. ethics and medical law. Medical Law and Ethics, 1–46. https://doi.org/10.1093/he/9780198846956.003.0001

[2]. Chen, Y. R., Brockner, J., & Katz, T. (1998). Toward an explanation of cultural differences in in-group favoritism: The role of individual versus collective primacy. Journal of Personality and Social Psychology, 75(6), 1490–1502. https://doi.org/10.1037//0022-3514.75.6.1490

[3]. Savulescu, J., & Maslen, H. (2015). Moral enhancement and Artificial Intelligence: Moral AI? SpringerLink. https://link.springer.com/chapter/10.1007/978-3-319-09668-1_6

[4]. University, S. C. (2019, May). Artificial Intelligence, decision-making, and moral deskilling. Markkula Center for Applied Ethics. https://www.scu.edu/ethics/focus-areas/technology-ethics/resources/artificial-intelligence-decision-making-and-moral-deskilling/

[5]. Chen, Z. (2023, September 13). Ethics and discrimination in artificial intelligence-enabled recruitment practices. Nature News. https://www.nature.com/articles/s41599-023-02079-x

[6]. Stahl, B. C. (2021, March 18). Ethical issues of ai. Artificial Intelligence for a Better Future: An Ecosystem Perspective on the Ethics of AI and Emerging Digital Technologies. https://pmc.ncbi.nlm.nih.gov/articles/PMC7968615/

[7]. Bloom, P. (2023, November 29). How moral can A.I. really be?. The New Yorker. https://www.newyorker.com/science/annals-of-artificial-intelligence/how-moral-can-ai-really-be

[8]. Zhang, Z., Chen, Z., & Xu, L. (2022). Artificial Intelligence and moral dilemmas: Perception of ethical decision-making in ai. Journal of Experimental Social Psychology, 101, 104327. https://doi.org/10.1016/j.jesp.2022.104327

[9]. Gondola, J. (2024, April 6). Ai and the ethics of decision-making in business. Medium. https://medium.com/@jamesgondola/ai-and-the-ethics-of-decision-making-in-business-22b7a57e8202

[10]. Tkalčič, M., & Kunaver, M. (2016). Recognizing emotions in user-generated content. Emotion-Aware Systems: Theories, Technologies, and Applications, 61-83. Springer.

[11]. Tretter, M. (2024, May 13). Equipping AI-decision-support-systems with emotional capabilities? ethical perspectives. Frontiers. https://www.frontiersin.org/journals/artificial-intelligence/articles/10.3389/frai.2024.1398395/full

Cite this article

Zhang, H. (2025). The Role of Artificial Intelligence in Human Moral Decision-Making: Navigating Family Loyalty and Social Justice. Lecture Notes in Education Psychology and Public Media, 80, 80-85.

Data availability

The datasets used and/or analyzed during the current study will be available from the authors upon reasonable request.


About volume

Volume title: Proceedings of the 2nd International Conference on Global Politics and Socio-Humanities

© 2024 by the author(s). Licensee EWA Publishing, Oxford, UK. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.
