1. Introduction

The rapid growth of social media has transformed how information is disseminated, with algorithmic recommendations playing a central role in shaping user experiences [1]. While these algorithms aim to personalize content, they often create echo chambers—environments where users are predominantly exposed to information that aligns with their existing views [2]. Research has extensively examined echo chambers in political contexts, but their impact on gender equality remains underexplored [3]. This gap is particularly concerning given the rise of polarized gender discourse online, where discussions about feminism, men's rights, and gender equity frequently become contentious. Despite awareness of algorithmic bias, little is known about how these systems differentially influence perceptions of gender based on user behavior and demographics.

This study investigates how echo chambers on social media platforms may exacerbate gender inequality through algorithmic recommendations. Specifically, it examines whether algorithms treat male and female accounts differently when they engage with gender-related content, and how exposure to filtered content shapes users' attitudes over time. The research focuses on Douyin, the Chinese counterpart of TikTok, a platform known for its sophisticated recommendation system and wide influence.

To address these questions, a mixed-methods approach combines controlled experiments with user interviews. Four experimental groups are designed: male and female accounts that either actively search for gender equality content or follow organic usage patterns. By analyzing the recommended content across these groups, the study identifies potential biases in algorithmic treatment. Additionally, interviews with users track shifts in attitudes, offering insights into the real-world impact of echo chambers.

The significance of this research lies in its potential to inform both platform design and public discourse. By revealing how algorithms may inadvertently reinforce gender stereotypes, the findings could guide improvements in recommendation systems to promote balanced content distribution. Furthermore, the study underscores the need for digital literacy initiatives that encourage users to critically evaluate their online ecosystems. Future work could expand to cross-platform comparisons, but this investigation provides a foundational understanding of how echo chambers operate in one of the world’s most influential social media environments.

2. Echo chambers and the perpetuation of gender inequality in digital spaces

2.1. Definition and characteristics of echo chambers

The term “echo chamber” describes a phenomenon in which social media users are predominantly exposed to information that reinforces their pre-existing beliefs, while dissenting viewpoints are systematically filtered out [2]. This occurs through three key mechanisms: content homogenization, in which algorithms prioritize content similar to what a user has already seen; selective exposure, in which users actively avoid challenging perspectives; and algorithmic amplification, in which engagement metrics such as likes and shares further narrow content diversity [4]. Over time, echo chambers can polarize public discourse, as seen in debates about gender equality.

2.2. Manifestations and causes of gender inequality in digital spaces

Gender inequality persists in digital spaces, mirroring offline disparities [5]. On social media, it manifests in several ways [5]. First, many videos present stereotyped portrayals: on Douyin, for example, women are often associated with domestic roles or appearance-based content, while men dominate leadership and technical discussions. Second, algorithmic bias reinforces these patterns, because the system is trained on users' historical data and therefore learns to reproduce gender stereotypes. This not only traps users in increasingly insular content bubbles but also intensifies gender inequality and polarization. The algorithm tends, for instance, to show more career-related content to male users and to prioritize parenting-related videos for female users; even the comment sections they see are filtered through this gendered lens, producing distinctly different online experiences for men and women. As a result, users of different genders become confined within their respective echo chambers and develop increasingly polarized perspectives on gender equality. Discussions about feminism or men's rights frequently devolve into adversarial debates, which engagement-driven algorithms further entrench. Structural factors, such as gendered socialization and platform design choices, compound these issues.

2.3. Algorithmic influence on information distribution

Social media algorithms prioritize engagement-maximizing content, which boosts platform profitability but inadvertently perpetuates gender inequality through several mechanisms [6]. First, they reward extreme content, such as polarizing gender-related posts framed as "all men are..." or "women should...", because such posts provoke strong emotional reactions and therefore gain higher visibility. Second, they create self-reinforcing feedback loops: users who interact with gender-stereotyped content receive progressively more similar recommendations, which steadily hardens their biases [4]. Third, these systems systematically overlook nuanced discussions of intersectionality, such as race-gender dynamics, in favor of simplistic binary narratives that drive more engagement [7]. Most importantly, this curation extends beyond video recommendations to comment sections: male users engaging with men's rights content mainly see either aggressively supportive or aggressively oppositional comments, while female users viewing feminist content encounter mirror-image polarization. The result is to homogenize discourse spaces and severely limit exposure to diverse perspectives.
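The first mechanism, rewarding emotionally charged content, can be made concrete with a minimal, hypothetical sketch of engagement-maximizing ranking. It is not Douyin's model: the `Post` fields, the linear score, the `intensity_weight` value, and the example titles are all illustrative assumptions. Predicted engagement is modeled as topical relevance plus a bonus proportional to emotional intensity, so polarizing posts can outrank more relevant but calmer ones.

```python
from dataclasses import dataclass


@dataclass
class Post:
    title: str
    relevance: float            # hypothetical topical match to the user, 0..1
    emotional_intensity: float  # hypothetical strength of emotional reaction, 0..1


def predicted_engagement(post: Post, intensity_weight: float = 2.0) -> float:
    """Toy engagement score: relevance plus a bonus for emotionally charged content."""
    return post.relevance + intensity_weight * post.emotional_intensity


def rank_by_engagement(posts: list[Post], k: int, intensity_weight: float = 2.0) -> list[Post]:
    """Return the k posts with the highest predicted engagement, best first."""
    return sorted(
        posts,
        key=lambda p: predicted_engagement(p, intensity_weight),
        reverse=True,
    )[:k]


if __name__ == "__main__":
    feed = [
        Post("Nuanced panel on workplace equity", 0.9, 0.1),
        Post('"All men are ..." rant', 0.7, 0.9),
        Post('"Women should ..." rant', 0.6, 0.8),
        Post("Research summary on parental leave", 0.8, 0.2),
    ]
    for post in rank_by_engagement(feed, k=2):
        print(post.title)
```

With these illustrative numbers, the two polarizing posts take the top two slots even though the nuanced panel is the most relevant; passing `intensity_weight=0.0` reduces the ranking to relevance alone.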

3. Research methodology and experimental design

3.1. Platform selection and account configuration

The experiment was conducted on Douyin, the Chinese counterpart of TikTok, which was selected for its sophisticated recommendation algorithm and dominant position in China's short-video market. To improve data reliability, sixteen test accounts were divided into four groups of four parallel accounts each: male accounts A1-A4 that actively searched for "gender equality" content, male accounts B1-B4 that followed normal browsing patterns, female accounts C1-C4 that actively searched for "gender equality" content, and female accounts D1-D4 that followed normal browsing patterns. Before formal data collection, all accounts were standardized with identical demographic parameters, including age and geographic location, and then underwent a 10-day initialization period intended to establish usage patterns that the platform's algorithm would recognize as authentic user behavior.
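The account setup is a 2 × 2 factorial design (account gender × engagement behavior) with four replicate accounts per cell. A minimal sketch of that roster follows; the class name, field names, and string labels are hypothetical, and only the group letters and their conditions come from the description above.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class StudyAccount:
    account_id: str  # e.g. "A1"
    gender: str      # configured account gender: "male" or "female"
    behavior: str    # "active" (searches for "gender equality") or "organic"


# Group letters follow the paper: A/B male, C/D female; A/C active search, B/D organic.
GROUP_CONDITIONS = {
    "A": ("male", "active"),
    "B": ("male", "organic"),
    "C": ("female", "active"),
    "D": ("female", "organic"),
}


def build_roster(accounts_per_group: int = 4) -> list[StudyAccount]:
    """Enumerate every account in the 2 x 2 (gender x behavior) design."""
    roster = []
    for group, index in product(GROUP_CONDITIONS, range(1, accounts_per_group + 1)):
        gender, behavior = GROUP_CONDITIONS[group]
        roster.append(StudyAccount(f"{group}{index}", gender, behavior))
    return roster


if __name__ == "__main__":
    for account in build_roster():
        print(account)
```

Keeping the two factors as explicit fields, rather than encoding them only in the group letter, makes it straightforward to tabulate outcomes by gender, by behavior, or by their combination.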

3.2. Data collection protocol

Building on this setup, the experiment examined two variables: account gender and engagement behavior. Over a 15-day observation window, several data streams were collected, including daily video recommendations, comment sections, and detailed engagement metrics. To maintain experimental integrity, control measures such as standardized usage durations and randomized interaction timing were implemented.

This study was guided by two key hypotheses examining how algorithmic recommendations vary based on user characteristics. The first hypothesis proposed that an account's perceived gender would significantly influence the type of content recommended by the platform's algorithm, with account gender (male or female) serving as the independent variable and content category distribution as the dependent variable. Specifically, we predicted that organic female accounts would receive more domestic and lifestyle content, while organic male accounts would be shown more entertainment and wealth-focused content, reflecting the algorithm's tendency to reinforce gender stereotypes. Building upon this, the second hypothesis posited that active search behavior for gender equality content would increase exposure to such content compared to organic usage patterns, with search behavior (active versus organic) as the independent variable and frequency of gender equality content as the dependent variable. Here, we anticipated that organic accounts would receive substantially less gender-equality content due to the echo chamber effects inherent in algorithmic recommendation systems.

To test these hypotheses, 800 video recommendations were analyzed (50 videos per account × 16 accounts). Gender-equality content was defined by three stringent criteria: (1) explicit reference to equal opportunities in workplace or societal settings; (2) substantive challenges to traditional gender roles; and (3) clear advocacy for mutual understanding between genders. This operational definition was intended to ensure consistent coding across all sampled videos.
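The coding rule can be expressed as a small function, which also exposes a detail the definition leaves open: whether a video must meet all three criteria or only one of them. The sketch below is hypothetical; the field names are illustrative, and the `require_all` switch is an assumption that makes both readings explicit rather than a description of the study's actual coding procedure.

```python
from dataclasses import dataclass


@dataclass
class VideoCoding:
    """Hypothetical coder judgments for one video on the three criteria."""
    equal_opportunity: bool        # (1) explicit reference to equal opportunities
    challenges_gender_roles: bool  # (2) substantive challenge to traditional gender roles
    mutual_understanding: bool     # (3) clear advocacy for mutual understanding


def is_gender_equality_content(coding: VideoCoding, require_all: bool = True) -> bool:
    """Apply the criteria; whether all or any must hold is an assumption here."""
    checks = (
        coding.equal_opportunity,
        coding.challenges_gender_roles,
        coding.mutual_understanding,
    )
    return all(checks) if require_all else any(checks)


if __name__ == "__main__":
    sample = VideoCoding(True, True, False)
    print(is_gender_equality_content(sample))                     # strict reading: False
    print(is_gender_equality_content(sample, require_all=False))  # lenient reading: True
```

Because the two readings can classify the same video differently, stating which one was applied matters for how the 310-video total should be interpreted.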

3.3. Mixed-methods approach: content analysis and user interviews

Beyond the experimental data, the study included in-depth interviews with all 16 participants to examine their firsthand experiences with the platform's recommendation system. After the experiment, participants were asked to report changes in their video recommendations, with particular attention to whether gender-equality content had become more prominent following their search behavior. In addition, participants completed a five-point Likert scale before and after the experiment to quantify changes in their awareness of algorithmic bias. The interviews also traced cognitive change by asking participants to reflect on whether repeated exposure to certain types of content had influenced their views on gender issues.

4. Experimental results and analysis

4.1. Algorithmic differential treatment by gender and search behavior

• The total number of gender-equality videos was 310, accounting for 38.75% of all 800 recommended videos.

• Active-search accounts received about five times as much gender-equality content as organic accounts (258 vs. 52 videos):

Group A (Male Active): 116 videos

Group C (Female Active): 142 videos

• Organic accounts showed minimal exposure:

Group B (Male Organic): 20 videos

Group D (Female Organic): 32 videos

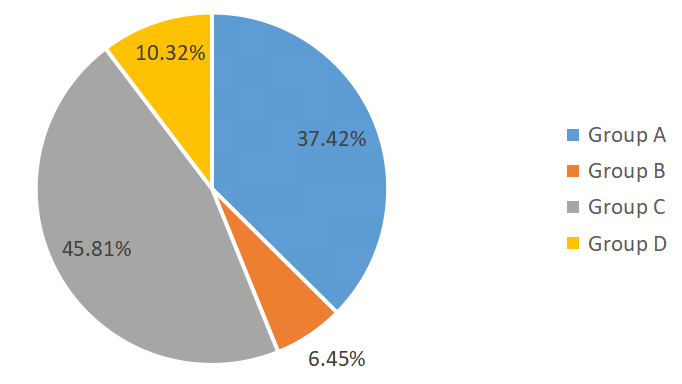

Figure 1 shows how the 310 gender-equality videos were distributed across the four experimental groups (A-D). Group C, consisting of four female accounts that actively searched for gender-equality content, received the largest share at 45.81%, followed by Group A (four male accounts actively searching for such content) at 37.42%. In contrast, the organically used accounts in Groups B and D received much smaller shares of 6.45% and 10.32%, respectively.

Content analysis confirmed gendered distribution patterns among the organic accounts (200 recommendations per group):

• Female organic accounts (Group D) received 168 lifestyle videos (84%) focused on domestic themes, fashion, and pets

• Male organic accounts (Group B) received 144 videos (72%) featuring entertainment and materialistic themes
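The percentages in this subsection rest on two different denominators. The Figure 1 percentages correspond to each group's share of the 310 gender-equality videos, while the lifestyle and entertainment percentages are shares of a single group's 200 recommendations (4 accounts × 50 videos). A short sketch that recomputes both from the reported counts is below; the constant and function names are illustrative.

```python
# Gender-equality video counts per group, as reported in Section 4.1.
GENDER_EQUALITY_COUNTS = {"A": 116, "B": 20, "C": 142, "D": 32}
# Dominant content type among organic accounts, as reported in Section 4.1.
LIFESTYLE_D = 168      # Group D: lifestyle videos
ENTERTAINMENT_B = 144  # Group B: entertainment and materialistic videos

VIDEOS_PER_ACCOUNT = 50
ACCOUNTS_PER_GROUP = 4
VIDEOS_PER_GROUP = VIDEOS_PER_ACCOUNT * ACCOUNTS_PER_GROUP     # 200
TOTAL_VIDEOS = VIDEOS_PER_GROUP * len(GENDER_EQUALITY_COUNTS)  # 800


def pct(part: float, whole: float) -> float:
    return 100.0 * part / whole


def summarize(counts: dict[str, int]) -> dict[str, float]:
    total_ge = sum(counts.values())
    active = counts["A"] + counts["C"]
    organic = counts["B"] + counts["D"]
    summary = {
        "total_gender_equality": total_ge,
        "share_of_all_videos_pct": pct(total_ge, TOTAL_VIDEOS),
        "active_to_organic_ratio": active / organic,
    }
    for group, n in counts.items():
        # Denominator 1: all gender-equality videos (the Figure 1 percentages).
        summary[f"{group}_share_of_gender_equality_pct"] = pct(n, total_ge)
        # Denominator 2: the group's own 200 recommendations.
        summary[f"{group}_share_of_group_feed_pct"] = pct(n, VIDEOS_PER_GROUP)
    return summary


if __name__ == "__main__":
    for key, value in summarize(GENDER_EQUALITY_COUNTS).items():
        print(f"{key}: {value:.2f}")
    print(f"D lifestyle: {pct(LIFESTYLE_D, VIDEOS_PER_GROUP):.0f}%")
    print(f"B entertainment: {pct(ENTERTAINMENT_B, VIDEOS_PER_GROUP):.0f}%")
```

The "about five times" figure is the ratio 258 / 52 ≈ 4.96 of active-search to organic gender-equality videos.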

4.2. Polarization patterns in recommended content

The study revealed three interrelated dimensions of polarization that together show how algorithmic systems shape user experiences. First, content analysis identified marked polarization in gender-related videos (n=310, 38.75% of the total): the active-search groups (A+C) received 258 balanced discussions, compared with only 52 videos, predominantly stereotypical portrayals, for the organic groups (B+D). Second, this divergence extended to comment sections, where participant reports indicated gendered patterns of exposure. Female participants consistently described encountering radical feminist rhetoric (e.g., "All men benefit from patriarchy"), with 13 of the 16 participants noting heightened emotional intensity, while male participants reported mostly seeing comments that framed equality as "female privilege" (e.g., "Modern feminism seeks dominance"), which prompted resentment in 11 of 16 cases.

Table 1. Pre- and post-test scores for awareness of algorithmic bias (5-point Likert scale)

| Participant | Group | Pre-test score | Post-test score | Change (Δ) |
|---|---|---|---|---|
| A1 | Group A (Male Active) | 2.1 | 4.3 | +2.2 |
| A2 | Group A (Male Active) | 1.8 | 4.0 | +2.2 |
| A3 | Group A (Male Active) | 2.0 | 4.1 | +2.1 |
| A4 | Group A (Male Active) | 1.9 | 3.9 | +2.0 |
| Mean | Group A (Male Active) | 1.95 | 4.08 | +2.13 |
| C1 | Group C (Female Active) | 2.2 | 4.4 | +2.2 |
| C2 | Group C (Female Active) | 1.7 | 3.8 | +2.1 |
| C3 | Group C (Female Active) | 2.3 | 4.3 | +2.0 |
| C4 | Group C (Female Active) | 1.9 | 4.0 | +2.1 |
| Mean | Group C (Female Active) | 2.03 | 4.13 | +2.10 |
| B1 | Group B (Male Organic) | 1.5 | 2.9 | +1.4 |
| B2 | Group B (Male Organic) | 1.3 | 2.5 | +1.2 |
| B3 | Group B (Male Organic) | 1.6 | 2.8 | +1.2 |
| B4 | Group B (Male Organic) | 1.4 | 2.7 | +1.3 |
| Mean | Group B (Male Organic) | 1.45 | 2.73 | +1.28 |
| D1 | Group D (Female Organic) | 1.6 | 2.8 | +1.2 |
| D2 | Group D (Female Organic) | 1.5 | 2.9 | +1.4 |
| D3 | Group D (Female Organic) | 1.4 | 2.7 | +1.3 |
| D4 | Group D (Female Organic) | 1.7 | 3.0 | +1.3 |
| Mean | Group D (Female Organic) | 1.55 | 2.85 | +1.30 |

Third, and most importantly, Table 1 presents pre- and post-test scores on a 5-point Likert scale that reveal measurable attitudinal shifts: the active-search groups showed a greater increase in awareness of algorithmic bias (Δ=+2.1) than the organic groups (Δ=+1.3). Participant testimonials captured these effects vividly, with one noting, "The algorithm's progressive narrowing of content made moderate positions invisible" (Participant A2), while another observed that their recommendations had become "an echo chamber of male grievance content" (Participant B4).
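The group means in Table 1 and the summary changes quoted above can be recomputed directly from the individual scores. The sketch below assumes that the condition-level figures (about +2.1 for active search and +1.3 for organic use) are unweighted averages of the two group means. The text does not state how they were pooled, but with four participants per group this gives the same result as pooling individuals.

```python
from statistics import mean

# Individual (pre, post) scores from Table 1 (5-point Likert scale).
TABLE_1 = {
    "A": [(2.1, 4.3), (1.8, 4.0), (2.0, 4.1), (1.9, 3.9)],  # male, active search
    "B": [(1.5, 2.9), (1.3, 2.5), (1.6, 2.8), (1.4, 2.7)],  # male, organic
    "C": [(2.2, 4.4), (1.7, 3.8), (2.3, 4.3), (1.9, 4.0)],  # female, active search
    "D": [(1.6, 2.8), (1.5, 2.9), (1.4, 2.7), (1.7, 3.0)],  # female, organic
}


def group_summary(scores: list[tuple[float, float]]) -> tuple[float, float, float]:
    """Return (mean pre-test, mean post-test, mean change) for one group."""
    pre = mean(p for p, _ in scores)
    post = mean(q for _, q in scores)
    change = mean(q - p for p, q in scores)
    return pre, post, change


def condition_change(groups: str) -> float:
    """Unweighted mean of group-level changes, e.g. "AC" for active search."""
    return mean(group_summary(TABLE_1[g])[2] for g in groups)


if __name__ == "__main__":
    for group, scores in TABLE_1.items():
        pre, post, change = group_summary(scores)
        print(f"Group {group}: pre {pre:.3f}, post {post:.3f}, change {change:+.3f}")
    print(f"Active search (A+C): {condition_change('AC'):+.2f}")
    print(f"Organic (B+D): {condition_change('BD'):+.2f}")
```

Unrounded, the group means are 4.075 (A, post-test), 2.025 (C, pre-test), 2.725 (B, post-test) and 1.275 (B, change), which Table 1 reports rounded up to two decimals.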

These findings reveal a three-pronged engine of algorithmic polarization. First, gender-based filtering systematically silences certain voices; second, engagement-driven ranking amplifies extreme positions; third, divergent information ecosystems harden intergroup misperceptions [8]. The study's controls (standardized accounts, fixed observation windows, and a mixed-methods design) support the conclusion that echo chambers do not merely mirror gender polarization but actively intensify it through these intertwined mechanisms.

5. Mechanisms of echo chambers' impact on gender equality

5.1. Algorithmic amplification of gender polarization

The experimental results indicate marked algorithmic bias in content distribution, with the analysis of 800 video recommendations revealing distinct patterns of gender-based filtering. Active-search accounts received about five times as much gender-equality content as organic accounts (258 vs. 52 videos). Content analysis further showed that organic female accounts were predominantly shown lifestyle content (84% of their recommendations), while organic male accounts mainly received entertainment and materialistic content (72%). This systematic differentiation in exposure creates segregated information environments in which male and female users encounter fundamentally different narratives about gender issues. The comment sections of recommended videos showed parallel polarization, with female-oriented content featuring mainly radical feminist perspectives and male-oriented content containing frequent anti-feminist rhetoric.

5.2. Interactive dynamics between user behavior and algorithmic feedback

The study identified a self-reinforcing cycle wherein initial algorithmic recommendations shape user engagement patterns, which in turn further train the recommendation system [4]. This behavioral pattern creates a positive feedback loop where algorithms progressively narrow content variety while amplifying extreme viewpoints [4]. Longitudinal tracking demonstrated that this process leads to measurable attitude shifts, with users developing increasingly polarized perspectives over the 15-day observation period. The mixed-methods approach captured both quantitative evidence of these patterns and qualitative insights into users' subjective experiences of this narrowing informational landscape.
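The self-reinforcing cycle can be illustrated with a deliberately simple, deterministic toy model. This is not the study's method and not Douyin's algorithm: the category names, engagement rates, learning rate, and multiplicative update rule are all assumptions chosen for illustration. When exposure drives engagement and engagement drives exposure, the category a user engages with most gradually crowds out the others, and feed diversity, measured here as Shannon entropy, falls.

```python
import math


def shannon_entropy(mix: dict[str, float]) -> float:
    """Entropy in bits of a category distribution; lower means a narrower feed."""
    total = sum(mix.values())
    return -sum((w / total) * math.log2(w / total) for w in mix.values() if w > 0)


def simulate_feedback_loop(
    initial_mix: dict[str, float],
    engagement_rate: dict[str, float],
    learning_rate: float = 0.5,
    rounds: int = 15,
) -> list[dict[str, float]]:
    """Toy loop: exposure -> engagement -> more exposure.

    Each round, expected engagement with a category is its exposure share times
    the user's fixed, hypothetical engagement rate for it; the recommender then
    boosts each category in proportion to that engagement and renormalizes.
    """
    total = sum(initial_mix.values())
    mix = {c: w / total for c, w in initial_mix.items()}
    history = [mix]
    for _ in range(rounds):
        engagement = {c: mix[c] * engagement_rate[c] for c in mix}
        boosted = {c: mix[c] * (1 + learning_rate * engagement[c]) for c in mix}
        norm = sum(boosted.values())
        mix = {c: w / norm for c, w in boosted.items()}
        history.append(mix)
    return history


if __name__ == "__main__":
    start = {"stereotyped": 0.25, "gender_equality": 0.25, "lifestyle": 0.25, "other": 0.25}
    rates = {"stereotyped": 0.6, "gender_equality": 0.2, "lifestyle": 0.4, "other": 0.1}
    history = simulate_feedback_loop(start, rates)
    for step in (0, 5, 10, 15):
        share = history[step]["stereotyped"]
        print(f"round {step:2d}: entropy {shannon_entropy(history[step]):.3f} bits, "
              f"stereotyped share {share:.3f}")
```

The default `rounds=15` only echoes the 15-day observation window; one simulated round is not meant to correspond to one day of real usage.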

5.3. Institutional responsibilities and reform directions

The findings underscore the need for both platform-level reforms and policy interventions to mitigate these polarization effects. Platform operators could adjust their algorithms to periodically introduce cross-cutting content that challenges users' existing preferences. Technical solutions might include diversity metrics in recommendation systems or explicit labeling of potentially polarizing content. At the policy level, regulatory frameworks could mandate greater transparency in recommendation algorithms and establish independent oversight mechanisms. Without such interventions, current platform architectures are likely to continue amplifying gender polarization through their core operational mechanisms.
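Two of the technical proposals above, periodically introducing cross-cutting content and tracking a diversity metric, can be sketched together. In this hypothetical example, items are represented only by a viewpoint label, a cross-cutting item is inserted after every fourth ranked item (the interval is an arbitrary assumption), and diversity is measured with the Gini-Simpson index. That index is one common diversity measure chosen here for illustration, not one the study prescribes.

```python
from collections import Counter


def gini_simpson(labels: list[str]) -> float:
    """Chance that two items drawn at random (with replacement) carry different labels."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def inject_cross_cutting(ranked: list[str], cross_cutting: list[str], every: int = 4) -> list[str]:
    """Insert the next cross-cutting item after every `every` items of the ranked feed."""
    if every < 1:
        raise ValueError("every must be at least 1")
    pool = iter(cross_cutting)
    feed = []
    for position, item in enumerate(ranked, start=1):
        feed.append(item)
        if position % every == 0:
            extra = next(pool, None)
            if extra is not None:
                feed.append(extra)
    return feed


if __name__ == "__main__":
    ranked = ["men's rights"] * 8  # viewpoint label of each item in an engagement-ranked feed
    diversified = inject_cross_cutting(ranked, ["feminist", "moderate"], every=4)
    print(diversified)
    print(f"diversity before: {gini_simpson(ranked):.2f}, after: {gini_simpson(diversified):.2f}")
```

A real system would still have to decide which items count as cross-cutting for a given user and how to avoid simply inserting equally polarizing content from the opposite side. The sketch leaves both questions open.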

6. Discussion

6.1. Implications of research findings

These experimental results provide empirical validation for theoretical concerns about algorithmic polarization, particularly in the context of gender discourse. The documented patterns of differential content distribution and their measurable effects on user perceptions demonstrate how platform architectures actively shape rather than passively reflect social discourse. The findings have significant implications for understanding contemporary challenges to gender equality movements, suggesting that structural features of digital platforms may be contributing to the intensification of gender conflicts.

6.2. Limitations of the current study

Several methodological constraints warrant consideration when interpreting these findings. The 15-day observation period, while sufficient to detect initial polarization patterns, may not capture longer-term dynamics. The sample composition, limited to urban Chinese young adults, raises questions about generalizability to other demographic groups or cultural contexts. Additionally, the experimental design's focus on binary gender categories excludes important variations in gender identity and expression. These limitations highlight the need for caution when extrapolating the results to broader populations or different platform ecosystems.

6.3. Directions for future research

Subsequent investigations should address these limitations through expanded sample diversity, longer observation periods, and cross-platform comparisons. Particularly promising avenues include experimental interventions testing various algorithmic modifications designed to reduce polarization and longitudinal studies tracking the cumulative effects of prolonged exposure to algorithmically curated content [9]. Additional research could also examine intersectional dynamics by incorporating variables such as socioeconomic status and sexual orientation into the experimental framework [10]. Such investigations would contribute to developing more nuanced understandings of digital media's role in shaping social attitudes toward gender equality.

7. Conclusion

This study provides evidence that social media algorithms actively worsen gender inequality by creating echo chambers. Through controlled experiments on Douyin, it found strong gender bias in recommendations: organic female accounts received 84% lifestyle content, while organic male accounts received 72% entertainment and materialistic content. Across 800 video recommendations, active accounts engaging with gender-equality content received 258 such videos compared with 52 for organic accounts, a roughly fivefold difference in exposure that nonetheless did not prevent extreme views from appearing in comments and related videos.

Pre- and post-experiment testing revealed concrete impacts: awareness of algorithmic bias rose by 2.1 points among active-search users, compared with 1.3 points among organic users. These results suggest that echo chambers amplify gender conflict through three key mechanisms: gender-based filtering, engagement-driven amplification of extreme content, and the creation of separate information bubbles. The findings confirm that algorithms treat male and female accounts differently with respect to gender content, with measurable effects on user attitudes over the observation period.

These results call for urgent practical responses. While current systems maximize engagement, they reinforce stereotypes and divide society [6]. Platforms should prioritize content diversity, possibly through features that occasionally show challenging perspectives. Equally important are digital literacy programs that help users recognize echo chambers. Although focused on Douyin, this research offers important insights into the algorithmic mediation of gender discourse. Its mixed-methods approach, combining quantitative analysis of 800 videos with qualitative interviews, offers a model for future cross-platform and cross-cultural studies. Next steps should test solutions such as transparent algorithms or customizable filters.

The study deepens our understanding of social media's role in gender equality. Platforms are not neutral information channels but active shapers of societal attitudes [11]. These findings highlight the critical need for both technical reforms and user education to address algorithmic bias and its real-world consequences for gender perceptions and relations.

References

[1]. Konstan, J.A., Riedl, J. (2012) Recommender systems: from algorithms to user experience. User Modeling and User-Adapted Interaction. 22: 101-123.

[2]. Bright, J., et al. (2020) Echo Chambers Exist! (But They’re Full of Opposing Views). arXiv preprint. https://doi.org/10.48550/arXiv.2001.11461.

[3]. Jamieson, K.H., Cappella, J.N. (2008) Echo Chamber: Rush Limbaugh and the Conservative Media Establishment. Oxford University Press. Oxford.

[4]. Cinelli, M., et al. (2021) The Echo Chamber Effect on Social Media. Proceedings of the National Academy of Sciences. 118: 1-8.

[5]. Ahmed, S., Morales, M.D. (2020) Is it still a man’s world? Social media news use and gender inequality in online political engagement. Information, Communication & Society. 24: 381-399.

[6]. Bouchaud, P. (2024) Algorithmic Amplification of Politics and Engagement Maximization on Social Media. Studies in Computational Intelligence, pp. 131-142.

[7]. Noble, S. U. (2018) Algorithms of Oppression: How Search Engines Reinforce Racism. NYU Press, New York.

[8]. Tufekci, Z. (2015) Algorithmic Harms Beyond Facebook and Google: Emergent Challenges of Computational Agency. Colorado Technology Law Journal. 13: 203.

[9]. Pennycook, G., Rand, D.G. (2019) Fighting misinformation on social media using crowdsourced judgments of news source quality. Proceedings of the National Academy of Sciences. 116: 2521–2526.

[10]. Collins, P.H., Bilge, S. (2020) Intersectionality. Polity Press. Cambridge, Malden.

[11]. Jenkins, H., Ford, S., Green, J. (2013) Spreadable Media: Creating Value and Meaning in a Networked Culture. New York University Press, New York.

Cite this article

Yao, Y. (2025). The Interactive Relationship Between Echo Chamber on the Internet and Gender Equality. Lecture Notes in Education Psychology and Public Media, 116, 8-16.

Data availability

The datasets used and/or analyzed during the current study will be available from the authors upon reasonable request.

Disclaimer/Publisher's Note

The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of EWA Publishing and/or the editor(s). EWA Publishing and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

About volume

Volume title: Proceeding of ICIHCS 2025 Symposium: Exploring Community Engagement: Identity, (In)equality, and Cultural Representation

© 2024 by the author(s). Licensee EWA Publishing, Oxford, UK. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license. Authors who publish this series agree to the following terms:

1. Authors retain copyright and grant the series right of first publication with the work simultaneously licensed under a Creative Commons Attribution License that allows others to share the work with an acknowledgment of the work's authorship and initial publication in this series.

2. Authors are able to enter into separate, additional contractual arrangements for the non-exclusive distribution of the series's published version of the work (e.g., post it to an institutional repository or publish it in a book), with an acknowledgment of its initial publication in this series.

3. Authors are permitted and encouraged to post their work online (e.g., in institutional repositories or on their website) prior to and during the submission process, as it can lead to productive exchanges, as well as earlier and greater citation of published work (See Open access policy for details).
