Volume 117

Published on October 2025

Volume title: Proceedings of the 6th International Conference on Educational Innovation and Psychological Insights

Music, a universal language understood all over the world, has a strong capacity to communicate the rich feelings and emotions of human beings. Melody can stimulate physiological reactions, such as a faster heartbeat or relaxed muscles, which in turn affect emotions. This paper therefore explores the influence of music on emotions from several angles. Drawing on research in music and neuroscience, it reviews the process by which music influences emotions, and it offers an exploratory analysis of the application of music in therapy. A deep understanding of these factors can help employees, and people in general, use music as an effective tool to improve their emotional state and mental health in the workplace and in daily life.

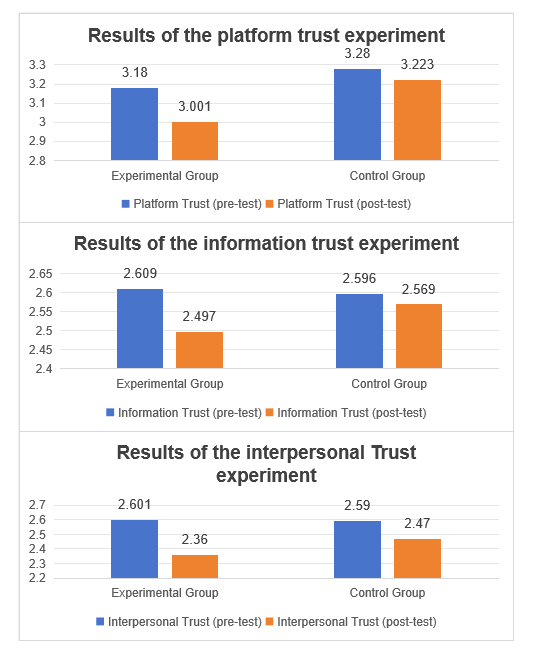

Deep-synthesis technology—an artificial intelligence technique used primarily for audiovisual content—has been misused in ways that are systematically deconstructing the trust ecosystem of social media. This study innovatively unpacks social media trust into three dimensions (platform trust, information trust, and user trust) and, using a survey-experiment design (n = 522), examines the differentiated erosive effects of deep-synthesis content within social media platforms. Taking Douyin (the Chinese version of TikTok) as the context, we employ five categories of deep-synthesis videos as stimuli and control for topic-related confounds. The findings show that deep-synthesis content exerts a negative impact on social media trust, with information trust being the most severely damaged, followed by platform trust; user/interpersonal trust exhibits a lagging and comparatively weak effect. These results reveal differentiated pathways through which technological alienation disrupts trust mechanisms and provide a theoretical basis for platform governance and user-level cognitive interventions.

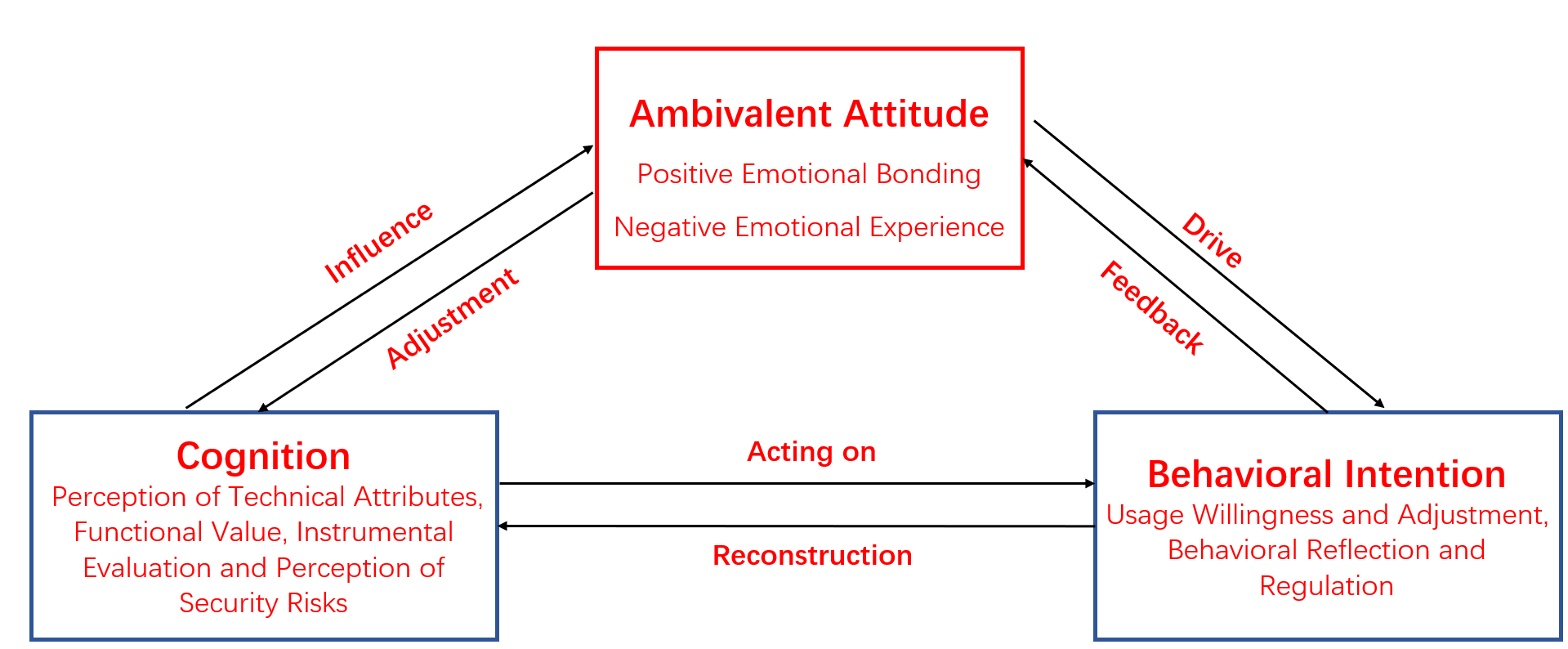

With the continuing rise in psychological stress among young people and the widespread adoption of artificial intelligence technologies, chatbots have gradually become a new channel for emotional expression and emotional support among youth. Drawing on the ABC Attitude Model, this study applies grounded theory to analyze semi-structured interview data from 17 young users aged 15–34, exploring their attitudinal structure and behavioral responses when using chatbots for emotional support. The findings show that young users exhibit both instrumental endorsement and emotional dependence on chatbots, while simultaneously maintaining vigilance and skepticism regarding their empathic capacity, response quality, and privacy/security—forming a contradictory attitude in which “dependence and vigilance” coexist. On this basis, users develop behavioral regulation strategies such as boundary setting and control of usage frequency. The article constructs an interactive mechanism of “cognition–ambivalent attitude–behavioral intention,” revealing a pattern of reflexive dependence in youths’ practices of digital emotional support, and providing theoretical references and practical implications for the design of AI affective products and for youth mental-health services.

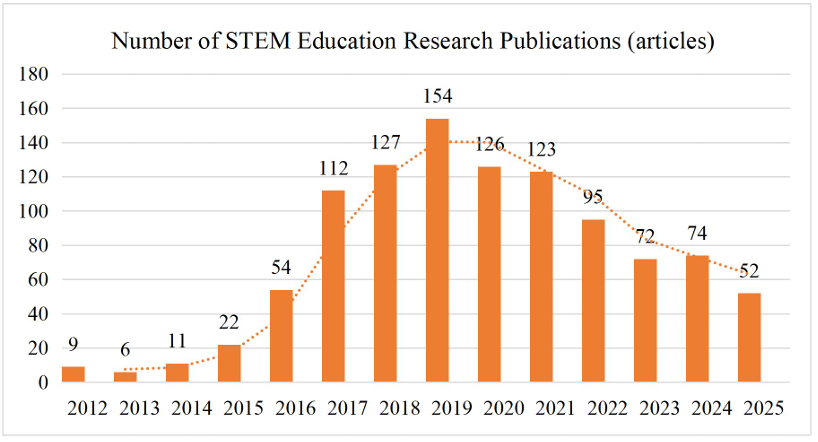

The rapid development of technologies such as the Internet, 5G, and artificial intelligence has not only changed the form of education but also made the advantages of STEM education, which aims to cultivate innovation and problem-solving abilities, increasingly apparent. Literature published in core journals on STEM education in China offers a view of the current research status, research issues, and development trends of the field. This is significant for the development of STEM education research in China and also provides supplementary data for international STEM education research. Using Peking University core and CSSCI journal articles from 2012 to 2025 in the CNKI database as the data source, this study applies the bibliometric method to analyze the overall publication trend, the distribution of high-frequency words, and clustering, and uses the visualization capabilities of CiteSpace to construct a knowledge map of the current situation, hot topics, and development trends of STEM education research in China. The results show that, against the background of the Internet and artificial intelligence, the number of STEM education studies in China has increased steadily overall. The hot topics cover three aspects: STEM education philosophy and education policy, STEM curriculum design and STEM literacy. The emerging research frontiers include curriculum design. However, the cooperation network of scholars and research institutions is scattered, and the depth of research needs to be strengthened.

This study investigates the equity issues in physical education (PE) and examines the role of leadership in addressing these challenges. Despite its significance for student development, PE faces substantial equity barriers, including gender discrimination, resource inequality, and cultural disparities. These issues directly impact student development and the overall educational environment. Using a comprehensive literature review and conceptual analysis, this study explores the multifaceted nature of equity in PE, highlighting the importance of leadership in creating inclusive and equitable PE environments. The study emphasizes the need for leaders to adopt deliberate and considerate approaches, including strategic planning, resource allocation, and professional development, to promote educational equity and enhance student participation. The findings provide actionable recommendations to improve the PE system and advocate for a more inclusive and supportive educational environment. Future research will integrate social surveys to offer more empirical data support and track the impact of educational policy reforms on PE practices in different regions.

The aim of this study was to investigate the relationship between working memory (WM) and fundamental movement skills (FMS) in preschool children and to examine the roles of age and gender in this context. A total of 131 preschool children were recruited, and their WM abilities and FMS levels were assessed using standardized WM tasks and the TGMD-3. The results revealed no significant gender differences in WM tasks and total FMS scores, with the exception of the backward digit span task. Weak to moderate significant positive correlations were found between total WM score and total locomotor skills score (r≈0.265) as well as total FMS score (r≈0.245). Furthermore, age significantly predicted the total score of object control skills (OCS), while the total WM score was a significant positive predictor of the total FMS score. These findings suggest an association between WM and FMS in preschoolers, with age being a significant influencing factor. The results have important implications for preschool educational practices and related intervention studies.

With the development of religious culture, an extreme form of religion, the cult, is becoming more rampant. Cults use specific means to brainwash believers and manipulate their behavior, which harms believers' physical and mental health. This paper analyzes the foot-in-the-door technique and deindividuation used by the cult the Movement for the Restoration of the Ten Commandments of God (MRTCG) to explain why believers, after joining the cult, end up in self-immolation. It also proposes a way to mitigate the social consequence, namely that more people fall into the cult and end up following the organization's instructions to commit suicide: placing more advertisements that explain the cult's brainwashing techniques to the public, so that forewarning helps people better resist the cult's persuasion. However, this method has the disadvantage of being ineffective when the target audience is distracted.
