1. Introduction

Autism Spectrum Disorder is a neurodevelopmental condition that has a profound impact on an individual's social interactions, communication abilities, and behavioural tendencies [1]. Countless individuals, both young and old, across the globe experience the consequences of this condition, which presents substantial obstacles to their everyday communication, education, and social involvement. Although Autism Spectrum Disorder can present in various ways, it often involves difficulties in interpreting facial expressions, a limited understanding of social cues, and struggles with non-verbal communication. At present, the main emphasis in addressing autism is on behavioural therapies and pharmacological treatments. While these methods can be effective to some degree, they typically necessitate ongoing involvement from professionals and have limitations in terms of offering immediate feedback and tailored assistance. Technological advancements have led to the emergence of assistive tools such as communication boards and electronic apps [2]. Nevertheless, these tools frequently lack the necessary level of interactivity and adaptability to adequately fulfil the ever-changing requirements of individuals with autism.

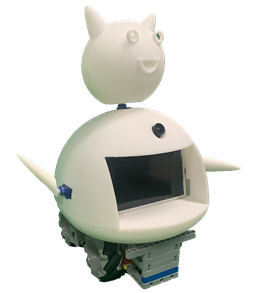

This study presents a new cat-like automated robotic communication system that aims to improve the social skills of individuals with autism by using interactive technology. As shown in Figure 1, the system combines sophisticated artificial intelligence, sensors, and image recognition technologies to imitate human social interactions, offering immediate feedback and a tailored interaction experience, offering new avenues for enhancing the quality of life for individuals with autism spectrum disorder. The structure of this study is organized as follows: the literature review in Section 2 discusses the evolution of technological aids for autism spectrum disorder, highlighting recent advancements in robotic technologies that support social interactions. Next, the methodology (Section 3) details the hardware design of the robot, its software functionalities, and the data processing mechanisms implemented. In Section 4, the real-world applications of the robotic system are evaluated, addressing the challenges encountered and potential improvements. Finally, the conclusion summarizes the findings and underscores the implications of this research within the broader context of assistive technologies for autism therapy.

Figure 1. Cat-like Automated Robot

2. Literature Review

Autism Spectrum Disorder causes difficulties in social communication and behavioural adaptability, greatly affecting the ability of affected individuals to interact with others in their communities. The intricacies of Autism Spectrum Disorder require creative interventions specifically designed to improve communication skills and social interactions. Over time, technological aids have advanced from basic visual tools to complex interactive systems that incorporate artificial intelligence (AI) and robotics [3].

Recent breakthroughs in robotic technologies have demonstrated encouraging outcomes in enhancing social interaction for individuals with Autism Spectrum Disorder. A study led by Yale researchers found that robots programmed to imitate human behaviours can effectively involve children with autism, facilitating crucial social abilities like taking turns and making eye contact [4]. These robots are frequently equipped with mechanisms that enable the adjustment of behaviours in accordance with the child's needs, which is crucial in establishing customised learning environments. Furthermore, the incorporation of artificial intelligence in autism therapy has revolutionised the flexibility of these interventions. Rudovic emphasises the potential of machine learning algorithms to customise interactions according to individual user responses, thereby improving the efficacy of therapeutic sessions [5]. Moreover, interactive systems that integrate real-time data processing and response mechanisms using sensors and image recognition technologies have proven to be highly successful in sustaining user involvement and evaluating advancement [6].

Nevertheless, despite these progressions, obstacles persist in the extensive implementation and customisation of these technologies. According to Iannone and Giansanti, incorporating these intricate systems into everyday use presents considerable difficulties, especially in terms of system design and customisation for individual users [7]. Further investigation should prioritise the development of more resilient adaptive algorithms [8] that have the ability to acquire knowledge from each interaction, thereby continuously enhancing the system to more effectively cater to the user's changing requirements.

3. Methodology

3.1. Hardware Design

The robot created in this research uses four Mecanum wheels [9], each carrying a series of rollers mounted at an angle to the wheel axis. Because each wheel is driven independently, the robot can translate in any direction on a flat surface or rotate in place without a conventional steering mechanism. This mobility allows it to navigate confined areas, maneuver around obstacles, and avoid collisions, which makes it well suited to intricate indoor environments.
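The relationship between a desired body motion and the four wheel speeds can be illustrated with the standard inverse kinematics of a four-wheel Mecanum platform. The sketch below is not taken from the robot's firmware: it assumes 45-degree rollers arranged in the common "X" layout, and the class name, parameter names, and dimensions are hypothetical values chosen for illustration only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MecanumBase:
    """Hypothetical geometry of a four-wheel Mecanum platform (assumed values)."""
    wheel_radius: float  # metres
    half_length: float   # half the front-to-rear wheel distance, metres
    half_width: float    # half the left-to-right wheel distance, metres

    def wheel_speeds(self, vx: float, vy: float, wz: float):
        """Map a body velocity to wheel angular speeds in rad/s.

        vx: forward speed (m/s), vy: leftward speed (m/s),
        wz: counter-clockwise rotation rate (rad/s).
        Returns (front_left, front_right, rear_left, rear_right).
        """
        k = self.half_length + self.half_width
        r = self.wheel_radius
        return (
            (vx - vy - k * wz) / r,  # front left
            (vx + vy + k * wz) / r,  # front right
            (vx + vy - k * wz) / r,  # rear left
            (vx - vy + k * wz) / r,  # rear right
        )


# Example with assumed dimensions: strafe to the left at 0.5 m/s.
base = MecanumBase(wheel_radius=0.05, half_length=0.10, half_width=0.10)
front_left, front_right, rear_left, rear_right = base.wheel_speeds(0.0, 0.5, 0.0)
```

For a pure sideways command the diagonal wheel pairs spin in opposite directions, which is what lets the platform translate without turning.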

To enhance its perception of the environment and facilitate interaction with users, the robot is equipped with a range of sensors. First, a high-resolution camera mounted on the robot's "head" records users' facial expressions and body language, as well as objects and obstacles in the surroundings. In addition, infrared and ultrasonic sensors detect nearby objects and measure the distance to them, improving the robot's ability to navigate in unfamiliar environments.
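As an illustration of how an ultrasonic reading becomes a distance, the sketch below applies the usual time-of-flight conversion. The paper does not specify the sensor model or how readings are processed, so the time-of-flight assumption, the function names, and the 0.30 m safety threshold are all hypothetical.

```python
SPEED_OF_SOUND_M_S = 343.0  # approximate speed of sound in dry air at about 20 °C


def echo_to_distance_m(echo_time_s: float) -> float:
    """Convert a round-trip echo time in seconds to a one-way distance in metres."""
    if echo_time_s < 0:
        raise ValueError("echo time must be non-negative")
    # The pulse travels to the obstacle and back, so halve the path length.
    return SPEED_OF_SOUND_M_S * echo_time_s / 2.0


def is_too_close(echo_time_s: float, threshold_m: float = 0.30) -> bool:
    """Return True when the obstacle is nearer than an assumed safety threshold."""
    return echo_to_distance_m(echo_time_s) < threshold_m
```

A check of this kind could, for instance, gate the drive commands so that the robot slows or stops before reaching an obstacle.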

Furthermore, the robot has a touch-screen display positioned on its "chest." The display presents system status information and menu options and gives immediate feedback on the robot's understanding of the user's emotions and behaviours. The interface is intentionally simple and intuitive so that users of all age groups can navigate it easily. It features large, easily discernible icons and buttons that let users adjust settings or choose among interaction modes directly on the touch screen.

By integrating these hardware components, the robot's functionality and interactivity are improved, while also guaranteeing operational safety and reliability. The robot is equipped with advanced sensors and drive systems that allow it to operate independently in intricate home or educational environments. Additionally, it has a powerful processor and sufficient memory to promptly respond to and process substantial amounts of input data.

Table 1. Hardware Specifications of the Robotic System.

| Component | Description | Specifications |
| --- | --- | --- |
| Wheels | Mecanum wheels for 360-degree movement | Four wheels, multi-directional |
| Sensors | Facilitate interaction and navigation | High-resolution camera, infrared, ultrasonic |
| Processor | Manage data processing and responses | Advanced, high-speed processor |

3.2. Software and Interactive Functions

The robot's core software relies on image recognition and natural language processing algorithms, which allow it to recognise and interpret users' non-verbal cues in real time. Specifically, the robot analyses the facial expressions, eye contact, and body posture captured by the high-resolution camera and uses this information to infer users' emotional states and intentions [10]. For example, if the facial recognition algorithm identifies expressions of sadness or anxiety, the robot automatically activates a soothing programme, which may include playing soft music or displaying encouraging messages on the screen, and speaks to the user in a gentle tone through its voice system to alleviate negative emotions. The software also contains interaction logic that modifies the robot's response patterns and behaviours according to the user's previous interactions with it. By updating its internal machine learning models in real time, the robot can gradually adapt to each user's behaviours and response patterns. For instance, if the robot notices that a particular interaction method increases a particular user's engagement, it gives priority to that method in subsequent interactions.
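The preference for interaction methods that have previously engaged a user can be sketched as a simple epsilon-greedy selection rule. The paper does not state which learning algorithm the robot uses, so this is only one plausible illustration; the class name, the method labels, and the engagement score in the range 0 to 1 are assumptions.

```python
import random


class InteractionSelector:
    """Epsilon-greedy choice among interaction methods (illustrative only)."""

    def __init__(self, methods, epsilon=0.1, seed=None):
        self.methods = list(methods)
        self.epsilon = epsilon  # probability of trying a random method
        self.rng = random.Random(seed)
        self.counts = {m: 0 for m in self.methods}
        self.mean_engagement = {m: 0.0 for m in self.methods}

    def choose(self):
        """Usually pick the best-scoring method, occasionally explore another."""
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.methods)
        return max(self.methods, key=lambda m: self.mean_engagement[m])

    def update(self, method, engagement):
        """Fold a new engagement score (assumed 0..1) into the running mean."""
        self.counts[method] += 1
        n = self.counts[method]
        self.mean_engagement[method] += (engagement - self.mean_engagement[method]) / n


# Hypothetical usage: after each session, record how engaged the user was.
selector = InteractionSelector(["music", "story", "game"], epsilon=0.1, seed=0)
selector.update("music", 0.9)
selector.update("story", 0.2)
next_method = selector.choose()
```

The small exploration probability keeps the robot from locking onto one method too early, which matters if a user's preferences change over time.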

The robot also incorporates a voice interaction system that not only understands users' spoken commands but also recognises the emotional nuances in their language, allowing for more adaptable and empathic conversations. To communicate more effectively, the robot modifies its speech rate, tone, and vocabulary. At the same time, the robot's display offers instant visual feedback: for example, it can present the results of the emotion analysis in a simple graphical format and show dialogue content and feedback on the screen, which helps to improve the efficiency of communication. Finally, the robot is equipped with an adaptive learning and feedback mechanism that automatically adjusts the educational content and difficulty of its exercises based on users' actions during interaction. The robot's built-in assessment tools continuously collect feedback and performance data, which are used to adjust future interactions and learning plans so that the educational process is personalised and meets users' actual needs. This feedback mechanism also incorporates long-term interaction data, enabling it to provide users and carers with comprehensive progress reports and behavioural analysis that support ongoing education and therapy.
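The adaptive adjustment of exercise difficulty can be illustrated with a rule based on the recent success rate. The paper does not describe the actual adjustment rule, so the window size, the thresholds, the level range, and all names below are assumptions made for the sake of a minimal sketch.

```python
from collections import deque


class DifficultyController:
    """Raise or lower the exercise level from recent outcomes (illustrative only)."""

    def __init__(self, level=1, min_level=1, max_level=5,
                 window=5, raise_at=0.8, lower_at=0.4):
        self.level = level
        self.min_level = min_level
        self.max_level = max_level
        self.raise_at = raise_at   # success rate at or above which the level rises
        self.lower_at = lower_at   # success rate at or below which the level drops
        self.results = deque(maxlen=window)

    def record(self, success: bool) -> int:
        """Store one exercise outcome and return the (possibly updated) level."""
        self.results.append(success)
        if len(self.results) == self.results.maxlen:
            rate = sum(self.results) / len(self.results)
            if rate >= self.raise_at and self.level < self.max_level:
                self.level += 1
                self.results.clear()  # start a fresh window at the new level
            elif rate <= self.lower_at and self.level > self.min_level:
                self.level -= 1
                self.results.clear()
        return self.level
```

Waiting for a full window before changing the level avoids reacting to a single success or failure, which keeps the experience predictable for the user.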

Table 2. Software Capabilities of the Robotic System.

| Feature | Technology Used | Functionality |
| --- | --- | --- |
| Facial Recognition | AI and image processing | Detects and analyzes user emotions |
| Emotional Response Analysis | Machine learning algorithms | Adapts interactions based on user mood |
| User Interface | Touchscreen technology | Simplifies setting adjustments and mode selections |

3.3. Data Processing

All interaction data generated during the robot's engagement with users, such as interpretations of facial expressions, feedback from verbal communications, and behavioral responses, are collected in real-time and uploaded to a specialized cloud computing platform known as "eider-cloud" for storage and analysis. This platform is equipped with the capability to manage large volumes of data securely and offers personalized data processing solutions based on the behavioral patterns of different users. Using advanced data analysis tools, eider-cloud thoroughly examines patterns and trends within the interaction data, including key metrics such as users' learning progress, emotional changes, and interaction preferences. The platform automatically converts this information into detailed behavioral and learning progress reports, which provide feedback to the users themselves and serve as a resource for therapists and educators to better understand the users' needs and progress.
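The conversion of raw interaction data into a progress report can be sketched as a small aggregation step. The record fields, the function name, and the 0.5 threshold for flagging a task type are hypothetical; the sketch runs locally and does not represent the eider-cloud interface, which the paper does not document.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean
from typing import Iterable


@dataclass(frozen=True)
class InteractionRecord:
    """One logged interaction (hypothetical schema)."""
    user_id: str
    task_type: str
    success: bool
    emotion: str


def progress_report(records: Iterable[InteractionRecord]) -> dict:
    """Summarise success rates per task type and observed emotion counts."""
    outcomes = defaultdict(list)
    emotions = defaultdict(int)
    for record in records:
        outcomes[record.task_type].append(1.0 if record.success else 0.0)
        emotions[record.emotion] += 1
    rates = {task: mean(values) for task, values in outcomes.items()}
    return {
        "success_rate_by_task": rates,
        "emotion_counts": dict(emotions),
        # Task types with a success rate below the assumed 0.5 threshold.
        "needs_support": sorted(task for task, rate in rates.items() if rate < 0.5),
    }
```

A summary of this kind could be shown to therapists and educators, and the flagged task types could inform which exercises are made easier or supplemented with additional material.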

Eider-cloud tailors highly individualised interaction and learning programmes based on thorough analyses. For example, if the data shows that a user is encountering difficulties with specific types of tasks, the system will automatically modify the difficulty level of those tasks or suggest learning materials that are more appropriate for the user's current level of skill. This mechanism ensures that the learning content is continuously adjusted to match the user's abilities, thereby improving learning efficiency. Furthermore, a crucial feature of the eider-cloud platform is its ability to provide remote monitoring and control. Researchers and therapists have the ability to observe the real-time interactions between the robot and users through the platform. They can make necessary adjustments to the robot's settings or directly intervene in the therapeutic process if required. This feature is especially crucial for managing complex circumstances, guaranteeing that every interaction takes place in a secure and nurturing setting.

Table 3. Data Processing Workflow.

| Step | Technology/Algorithm Used | Description |
| --- | --- | --- |
| Data Collection | Sensors, cameras | Collects real-time interaction data |
| Data Analysis | Machine learning, AI | Processes and analyzes behavioral data |
| Feedback Generation | AI algorithms | Provides tailored feedback to users |

4. Discussion and Limitations

The robotic system developed in this project offers autistic patients a novel mode of communication, significantly improving their social interaction abilities. By simulating human communication patterns and providing emotional feedback, the system enables patients to practice and improve their social skills in a safe and controlled environment, significantly reducing stress and anxiety in real-life social situations. The robot incorporates gamified learning elements, making the learning process enjoyable while also increasing patient engagement and motivation, allowing them to improve their verbal and nonverbal communication skills in a relaxed environment. Furthermore, the robot provides continuous support and feedback, constantly monitoring the patients' progress and automatically adjusting the therapeutic plan in response to their performance. This personalised and adaptable learning path allows patients to progress at their own pace, which improves intervention effectiveness and patient satisfaction. The robot's consistent and predictable interaction patterns also help patients develop trust and a sense of security, reducing anxiety caused by uncertainty and allowing them to interact more confidently with others, gradually improving their adaptability and social skills in real-world settings. The robotic system's highly integrated technological solution not only demonstrates technological innovation, but it also significantly improves the communication abilities and quality of life of autistic patients in therapy and in everyday life. This suggests that the use of advanced technology in special education and therapy has enormous potential, particularly in improving patients' social abilities and overall satisfaction with life.

Although the robotic system has significant advantages in practical applications, such as improving the social capabilities and quality of life of patients with autism, it still faces a number of challenges and limitations during deployment and operation. First, in terms of technological adaptability, the robot must be able to cater flexibly to the specific needs of various users, which requires a high level of customisation based on each user's individual needs. However, the current system design has limitations, particularly in the self-learning capabilities of its algorithms and behavioural logic, which fall short of providing optimal personalised therapy. Second, cost is a significant barrier to the widespread adoption of this technology. Although the robot provides complex interactive functions and advanced data processing capabilities, the development and maintenance costs of these technologies are relatively high, raising the overall system cost. This may impede the technology's adoption, particularly in resource-constrained environments. Third, the physical design of the robot presents certain limitations, particularly in terms of operational flexibility in different environments. While the robot is designed to function in a variety of settings, its physical dimensions and mode of operation may not be entirely suitable for specific physical spaces, such as narrow or complex home and educational environments. This could limit its effectiveness as an assistive tool in these settings.

In response to current challenges and limitations, future improvements will concentrate on three key areas: technological innovation, user interface optimisation, and cost control. First, on the technological front, more advanced sensors and deep learning algorithms will be introduced to improve the robot's precision in sensing user behaviours and environmental changes, resulting in a more personalised and adaptable interaction experience. In terms of the user interface, efforts will be made to simplify operational processes and incorporate graphical user interfaces (GUI) and touch technology, making the interface more intuitive and user-friendly, as well as providing customisable layouts and colour schemes to meet the unique needs of autistic patients. Furthermore, to encourage wider adoption of this technology, efforts will be made to cut costs, such as optimising manufacturing processes, using less expensive materials, and adopting a modular design to simplify production and maintenance. Through these comprehensive improvements, the future robotic system will not only be more technically mature, but it will also better meet user needs and be more competitive in the market, providing practical assistance to a larger user base.

5. Conclusion

The development and implementation of the robotic system discussed in this paper represent a significant advancement in the field of assistive technologies for autism therapy. The system has demonstrated considerable potential in enhancing the social interaction and communication skills of autistic individuals, thereby improving their quality of life and integration into society. By providing an innovative method of interaction, the robot facilitates more effective learning and adaptation among users, catering specifically to the unique challenges presented by autism.

Despite its many benefits, the system faces challenges related to adaptability, cost, and physical design that currently limit its wider application. For future research and development, it is imperative to focus on enhancing the system’s adaptability through more sophisticated machine learning algorithms that can learn and adjust in real-time to the user's behaviors and preferences. Additionally, improving the user interface to be more intuitive and accessible will help in reducing the learning curve and increasing acceptance among users. Economically, strategies to lower the cost of production and maintenance must be prioritized to make this technology accessible to a broader audience.

In summary, while the robotic system stands as a promising tool in the field of autism therapy, its full potential can only be realized through continuous technological refinements and strategic adjustments in design and implementation. This will not only broaden the impact of such systems on the autism community but also enhance their integration into mainstream therapeutic practices, thereby providing richer, more supportive environments for individuals with autism. This endeavor aligns with the broader goal of using innovative solutions to foster inclusivity and enhance the quality of life for those on the autism spectrum.

References

[1]. Lord, C., Elsabbagh, M., Baird, G. and Veenstra-Vanderweele, J. (2018). Autism Spectrum Disorder. The Lancet, 392(10146), pp.508–520. doi:https://doi.org/10.1016/s0140-6736(18)31129-2.

[2]. Allen, M.L., Hartley, C. and Cain, K. (2016). iPads and the Use of ‘Apps’ by Children with Autism Spectrum Disorder: Do They Promote Learning? Frontiers in Psychology, [online] 7(1305). doi:https://doi.org/10.3389/fpsyg.2016.01305.

[3]. Sundas, A. et al. (2023) ‘Evaluation of autism spectrum disorder based on the healthcare by using Artificial Intelligence Strategies’, Journal of Sensors, 2023, pp. 1–12. doi:10.1155/2023/5382375.

[4]. Weir, W. (2018) Robots help children with autism improve social skills, YaleNews. Available at: https://news.yale.edu/2018/08/22/robots-help-children-autism-improve-social-skills.

[5]. Rudovic, O. (2018) Personalized ‘Deep learning’ equips robots for autism therapy, MIT Media Lab. Available at: https://www.media.mit.edu/articles/personalized-deep-learning-equips-robots-for-autism-therapy/.

[6]. Cabibihan, J.-J., Javed, H., Aldosari, M., Frazier, T. and Elbashir, H. (2016). Sensing Technologies for Autism Spectrum Disorder Screening and Intervention. Sensors, [online] 17(1), p.46. doi:https://doi.org/10.3390/s17010046.

[7]. Iannone, A. and Giansanti, D. (2023). Breaking Barriers—The Intersection of AI and Assistive Technology in Autism Care: A Narrative Review. Journal of Personalized Medicine, 14(1), pp.41–41. doi:https://doi.org/10.3390/jpm14010041.

[8]. Yechiam, E. et al. (2010) ‘Adapted to explore: Reinforcement learning in autistic spectrum conditions’, Brain and Cognition, 72(2), pp. 317–324. doi:10.1016/j.bandc.2009.10.005.

[9]. Gfrerrer, A. (2008) ‘Geometry and kinematics of the Mecanum wheel’, Computer Aided Geometric Design, 25(9), pp. 784–791. doi:10.1016/j.cagd.2008.07.008.

[10]. Leo, M. et al. (2015) ‘Automatic emotion recognition in robot-children interaction for ASD treatment’, 2015 IEEE International Conference on Computer Vision Workshop (ICCVW) [Preprint]. doi:10.1109/iccvw.2015.76.

Cite this article

Yan,T. (2024). Robotic technology in Autism intervention: Design and application of the CARS cat-like robot. Applied and Computational Engineering,86,16-22.

Data availability

The datasets used and/or analyzed during the current study will be available from the authors upon reasonable request.


About volume

Volume title: Proceedings of the 6th International Conference on Computing and Data Science

© 2024 by the author(s). Licensee EWA Publishing, Oxford, UK. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.
