1. Introduction

The explosion of digital music platforms has drastically changed how people discover and enjoy music. With millions of tracks at their fingertips, users rely on recommendation systems to navigate this vast sea of content. Music Information Retrieval (MIR) plays an important role in developing these recommendation systems, leveraging sophisticated algorithms to analyze and interpret musical data. This paper aims to provide a comprehensive overview of the current state of music recommendation systems, focusing on the integration of machine learning and data mining techniques. Traditional methods of music discovery, such as manual searches and curated playlists, are no longer sufficient to meet the diverse and dynamic tastes of modern listeners.

User-based collaborative filtering, one of the earliest approaches, recommends music based on the listening behaviors of similar users. While effective, this method faces scalability issues as the number of users grows, necessitating techniques such as clustering and approximate nearest neighbors to maintain efficiency. Item-based collaborative filtering shifts the focus from users to items, recommending songs that are frequently listened to together. This approach is more scalable, but it still struggles with the cold start problem for new items. Matrix factorization techniques such as Singular Value Decomposition (SVD) and Alternating Least Squares (ALS) decompose the user-item interaction matrix into latent factors, capturing underlying relationships and providing more accurate recommendations. These methods require substantial computational power and careful tuning to avoid overfitting.

Content-based filtering offers another dimension, analyzing audio features, metadata, and lyrics to recommend similar music based on a user's past preferences. This method excels at recommending new or less popular songs but is limited by the quality of feature extraction. Techniques such as Mel-Frequency Cepstral Coefficients (MFCCs) and deep learning-based feature extraction can significantly enhance the accuracy of music recommendations. Hybrid recommendation systems, which combine collaborative and content-based methods, leverage the strengths of both approaches. Model-based hybrid systems, employing neural networks and ensemble learning, can identify complex patterns from diverse data sources, thereby providing highly personalized recommendations. However, these models demand extensive training data and significant computational resources [1]. This paper also explores practical applications of these techniques, with case studies from platforms like Spotify and Pandora showcasing their effectiveness in real-world scenarios. By examining these advanced methods, we aim to illuminate future directions for music recommendation systems and their potential to revolutionize user experiences.

2. Collaborative Filtering

2.1. User-Based Collaborative Filtering

User-based collaborative filtering is a prevalent technique in music recommendation systems. It is based on the idea that users with similar listening histories will likely enjoy the same music. The system measures similarities between users by analyzing their listening behaviors and then recommends music that similar users have appreciated. For instance, if User A and User B have a high similarity score, and User A has recently enjoyed a new album, the system will likely recommend this album to User B. The primary advantages of this approach are its simplicity and its ability to generate diverse recommendations. However, it suffers from scalability issues as the number of users increases, and it requires substantial computational resources to maintain and update the similarity matrix. To address these issues, techniques such as clustering and approximate nearest neighbors can be employed to reduce computational complexity and improve efficiency [2]. Table 1 provides an overview of the songs listened to by different users, their similarity scores with User A, and the recommended songs for User B based on the similarity calculations. This data illustrates how user-based collaborative filtering leverages the listening histories of similar users to generate personalized music recommendations.

Table 1. User-Based Collaborative Filtering Data

| User ID | Listened Songs | Similarity Score with User A | Recommended Songs for User B |
|---|---|---|---|
| User A | Song 1, Song 2, Song 3, Song 4 | 1 | Song 4 |
| User B | Song 2, Song 3, Song 5, Song 6 | 0.75 | Song 1, Song 4 |
| User C | Song 1, Song 4, Song 7, Song 8 | 0.5 | Song 2, Song 3 |
| User D | Song 3, Song 5, Song 6, Song 9 | 0.25 | Song 1, Song 4 |
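The procedure described above can be sketched in a few lines of Python. This is a minimal illustration, not the method behind Table 1: the paper does not state which similarity metric produced the table's scores, so the sketch assumes Jaccard similarity over listened-song sets, and its similarity values therefore differ from those in the table. The function names and the `k` and `n` parameters are hypothetical.

```python
from collections import defaultdict

def jaccard(a, b):
    """Jaccard similarity between two collections of song IDs."""
    a, b = set(a), set(b)
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def recommend_user_based(target, histories, k=2, n=3):
    """Recommend up to n unheard songs for `target` from its k most similar users."""
    heard = set(histories[target])
    # Rank every other user by similarity to the target user.
    neighbours = sorted(
        ((jaccard(heard, songs), user)
         for user, songs in histories.items() if user != target),
        reverse=True,
    )[:k]
    # Score each unheard song by the summed similarity of neighbours who played it.
    scores = defaultdict(float)
    for sim, user in neighbours:
        for song in histories[user]:
            if song not in heard:
                scores[song] += sim
    ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
    return [song for song, _ in ranked[:n]]

# Listening histories taken from Table 1.
histories = {
    "User A": ["Song 1", "Song 2", "Song 3", "Song 4"],
    "User B": ["Song 2", "Song 3", "Song 5", "Song 6"],
    "User C": ["Song 1", "Song 4", "Song 7", "Song 8"],
    "User D": ["Song 3", "Song 5", "Song 6", "Song 9"],
}
print(recommend_user_based("User B", histories))
```

Because every other user must be compared with the target, the cost of this loop grows with the number of users, which is the scalability concern that clustering and approximate nearest neighbor search are meant to reduce.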

2.2. Item-Based Collaborative Filtering

Item-based collaborative filtering overcomes some of the limitations inherent in user-based approaches by emphasizing the relationships between items rather than users. In this approach, the system suggests music that is similar to tracks the user has previously enjoyed. This is accomplished by calculating the similarity between various songs or albums based on users' listening habits. For example, if a user often listens to both Song A and Song B, the system will recommend Song B to other users who have listened to Song A [3]. This method tends to be more scalable and better suited for handling large datasets compared to user-based filtering. Nonetheless, it can still encounter the "cold start" problem when dealing with new items that lack sufficient interaction data. To address this issue, advanced techniques such as content-based filtering or hybrid methods can be employed, integrating additional information about the items to improve recommendations.
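As a rough sketch of the item-to-item idea, the following Python code computes cosine similarity between the columns of a small play matrix and scores unheard items for one user. The matrix values are invented for illustration, cosine similarity is an assumed choice of metric, and the function names are hypothetical.

```python
import numpy as np

def item_similarity(interactions):
    """Cosine similarity between item columns of a users x items play matrix."""
    norms = np.linalg.norm(interactions, axis=0)
    norms[norms == 0] = 1.0  # avoid division by zero for items nobody has played
    normalised = interactions / norms
    sim = normalised.T @ normalised
    np.fill_diagonal(sim, 0.0)  # an item should not recommend itself
    return sim

def recommend_item_based(user_row, sim, n=2):
    """Return indices of the n unheard items most similar to the user's history."""
    scores = sim @ user_row          # summed similarity to items the user played
    scores[user_row > 0] = -np.inf   # never re-recommend something already heard
    return np.argsort(-scores)[:n]

# Toy data (illustrative only): rows are users, columns are songs, 1 = listened.
R = np.array([
    [1, 1, 0, 0],
    [1, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 1, 1],
], dtype=float)

sim = item_similarity(R)
new_listener = np.array([1.0, 0.0, 0.0, 0.0])  # has only played song 0
print(recommend_item_based(new_listener, sim))
```

A song with an all-zero column has zero similarity to every other song, so it can never be recommended by this scheme; that is the cold start problem in miniature.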

2.3. Matrix Factorization Techniques

Matrix factorization techniques, such as Singular Value Decomposition (SVD) and Alternating Least Squares (ALS), are advanced methods used to enhance collaborative filtering. These techniques decompose the user-item interaction matrix into latent factors, capturing the underlying relationships between users and items. By representing users and items in a lower-dimensional space, matrix factorization can uncover hidden patterns and provide more accurate recommendations. For example, SVD can predict a user's preference for a new song by combining their latent factors with those of the song. These techniques offer improved accuracy and scalability but require significant computational power and can be complex to implement and tune. Regularization techniques are often employed to prevent overfitting and improve the generalization of the model. The formula for matrix factorization, specifically using Singular Value Decomposition (SVD), can be expressed as:

\( R \approx U \Sigma V^{T} \) (1)

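This formula decomposes the user-item interaction matrix \( R \) into three matrices: \( U \), whose rows hold the latent factors of the users; \( \Sigma \), a diagonal matrix of singular values that weight each latent dimension; and \( V^{T} \), whose columns hold the latent factors of the items. Keeping only the largest singular values yields the low-dimensional approximation. By leveraging these latent factors, the recommendation system can predict a user's preferences for items with improved accuracy and scalability [4].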
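Equation (1) can be tried on a toy matrix with NumPy's `numpy.linalg.svd`. The ratings below are invented, and the sketch treats unobserved entries as zeros, which is a simplifying assumption; practical systems usually fit only the observed entries, for example with regularized ALS.

```python
import numpy as np

# Toy user-item matrix (illustrative values; 0 stands in for "not rated").
R = np.array([
    [5, 4, 0, 1],
    [4, 5, 1, 0],
    [1, 0, 5, 4],
    [0, 1, 4, 5],
], dtype=float)

def truncated_svd_predict(R, k):
    """Rank-k approximation of R built from its k largest singular values."""
    U, s, Vt = np.linalg.svd(R, full_matrices=False)
    return U[:, :k] @ np.diag(s[:k]) @ Vt[:k, :]

R_hat = truncated_svd_predict(R, k=2)
print(np.round(R_hat, 2))

# The reconstruction error shrinks as more latent dimensions are kept.
for k in (1, 2, 4):
    err = np.linalg.norm(R - truncated_svd_predict(R, k))
    print(k, round(float(err), 4))
```

The entries of `R_hat` at positions where `R` is zero act as predicted preferences. The choice of `k` trades fidelity against the risk of fitting noise, which is where regularization and tuning come in.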

3. Content-Based Filtering

3.1. Audio Feature Analysis

Content-based filtering recommends music by analyzing the intrinsic audio features of songs, such as melody, rhythm, tempo, instrumentation, harmony, and timbre. This method focuses on the characteristics of the music itself rather than on the behavior of other users, enabling the system to create a detailed profile for each user based on the types of music they have previously enjoyed. For example, a system might analyze spectral properties, such as Mel-Frequency Cepstral Coefficients (MFCCs), to capture the timbral texture of the music, or it might assess rhythmic patterns and tempo to understand the energetic qualities of the tracks. When a new song is introduced, its audio features are compared with the user's established profile to determine its relevance. For instance, if a user frequently listens to songs characterized by a high tempo and prominent guitar riffs, the recommendation system will prioritize new songs with similar audio features [5].

This approach is particularly advantageous for recommending new or less popular songs that might not have sufficient user interaction data to be included in collaborative filtering models. The effectiveness of content-based filtering lies in its ability to operate independently of aggregate interaction data, making it a robust tool for discovering emerging artists or niche genres that have not yet gained widespread popularity. However, the success of this approach is heavily dependent on the quality and granularity of the feature extraction process. Poorly extracted features can lead to inaccurate recommendations, while high-quality, detailed features can significantly enhance the system's performance.

Advanced audio analysis techniques are crucial for improving the accuracy and richness of the extracted features. MFCCs are widely used to capture the short-term power spectrum of a sound, which is essential for timbre analysis. Additionally, deep learning-based feature extraction methods, such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs), can learn complex, hierarchical representations of audio data [6]. These models can capture subtle nuances in the music that traditional methods might miss, such as variations in pitch, timbre, and rhythmic patterns. For instance, a convolutional neural network can be trained on spectrograms of audio files, learning to identify features that correspond to different musical instruments or genres. Recurrent neural networks, on the other hand, can be used to model temporal dependencies in the audio signal, capturing the sequential nature of music. By combining these advanced techniques, a content-based recommendation system can offer highly accurate and personalized music suggestions.

Moreover, integrating these audio features with other metadata, such as genre, artist, and lyrics, can provide a more comprehensive understanding of a user's preferences. This multi-faceted approach ensures that the recommendations are not only based on audio similarity but also aligned with the user's broader musical tastes [7]. As a result, users are more likely to discover new music that resonates with their preferences, enhancing their overall listening experience. In summary, content-based filtering through audio feature analysis is a powerful approach for music recommendation systems, especially when enhanced with advanced techniques like MFCCs and deep learning-based feature extraction. By focusing on the intrinsic properties of the music, these systems can provide accurate, diverse, and personalized recommendations, helping users explore new music and deepening their engagement with the platform.
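To make the MFCC step concrete, here is a simplified NumPy-only sketch of the usual pipeline: framing, windowing, power spectrum, mel filterbank, logarithm, and a discrete cosine transform. It is an illustration under stated assumptions rather than a reference implementation: it omits pre-emphasis, liftering, and DCT normalization that audio libraries may apply, the frame and filter parameters are arbitrary defaults, and the input is a synthetic sine tone instead of real music. The signal must be at least `n_fft` samples long.

```python
import numpy as np

def hz_to_mel(f):
    return 2595.0 * np.log10(1.0 + f / 700.0)

def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)

def mel_filterbank(n_filters, n_fft, sr):
    """Triangular filters evenly spaced on the mel scale, shape (n_filters, n_fft // 2 + 1)."""
    mel_points = np.linspace(hz_to_mel(0.0), hz_to_mel(sr / 2.0), n_filters + 2)
    bins = np.floor((n_fft + 1) * mel_to_hz(mel_points) / sr).astype(int)
    fbank = np.zeros((n_filters, n_fft // 2 + 1))
    for i in range(1, n_filters + 1):
        left, centre, right = bins[i - 1], bins[i], bins[i + 1]
        for b in range(left, centre):      # rising edge of the triangle
            fbank[i - 1, b] = (b - left) / max(centre - left, 1)
        for b in range(centre, right):     # falling edge of the triangle
            fbank[i - 1, b] = (right - b) / max(right - centre, 1)
    return fbank

def mfcc(signal, sr, n_fft=512, hop=256, n_filters=26, n_coeffs=13):
    """Return MFCCs as an array of shape (n_frames, n_coeffs)."""
    n_frames = 1 + (len(signal) - n_fft) // hop
    idx = np.arange(n_fft)[None, :] + hop * np.arange(n_frames)[:, None]
    frames = signal[idx] * np.hanning(n_fft)                # overlapping windowed frames
    power = np.abs(np.fft.rfft(frames, axis=1)) ** 2 / n_fft
    log_mel = np.log(power @ mel_filterbank(n_filters, n_fft, sr).T + 1e-10)
    # A DCT-II decorrelates the log-mel energies; only the first n_coeffs are kept.
    n = np.arange(n_filters)
    dct = np.cos(np.pi * np.arange(n_coeffs)[:, None] * (2 * n + 1) / (2 * n_filters))
    return log_mel @ dct.T

sr = 16000
t = np.arange(sr) / sr
tone = np.sin(2 * np.pi * 440.0 * t)   # one second of a 440 Hz sine as stand-in audio
features = mfcc(tone, sr)
track_vector = features.mean(axis=0)   # one fixed-length timbre summary per track
print(features.shape, track_vector.shape)
```

Averaging the per-frame coefficients is one simple way to obtain a fixed-length vector per track that can then be compared with a user profile; learned CNN or RNN embeddings would replace this hand-built summary.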

3.2. Metadata and Lyrics Analysis

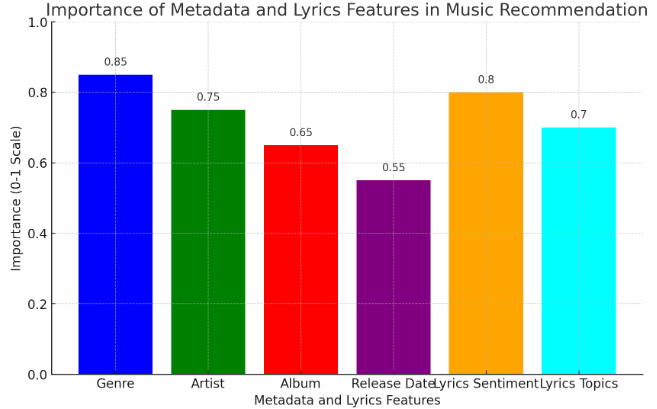

In addition to audio features, content-based filtering can incorporate metadata and lyrics analysis to improve recommendations. Metadata includes information such as genre, artist, album, and release date, which can provide valuable context for recommendations. Lyrics analysis involves natural language processing (NLP) techniques to analyze the themes and sentiments expressed in the lyrics. For example, if a user prefers songs with positive and uplifting lyrics, the system can prioritize recommendations with similar lyrical content. Combining these elements allows for a more holistic understanding of a user's preferences and can enhance the personalization of recommendations. Techniques such as sentiment analysis, topic modeling, and semantic similarity can be applied to lyrics to extract meaningful insights [8]. Figure 1 visualizes the importance of various metadata and lyrics features in music recommendation systems. This visualization helps illustrate how combining these elements can enhance the personalization of music recommendations.

Figure 1. Importance of Metadata and Lyrics Features in Music Recommendation
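One simple way to compare lyrical content is TF-IDF weighting followed by cosine similarity. The sketch below uses only the Python standard library; the three lyric snippets are invented placeholders, the smoothed IDF formula is one common variant, and the approach captures word overlap only, not sentiment or deeper semantics.

```python
import math
import re
from collections import Counter

def tokenize(text):
    return re.findall(r"[a-z']+", text.lower())

def tfidf_vectors(docs):
    """Return one sparse {term: weight} dictionary per document."""
    tokenized = [tokenize(d) for d in docs]
    n_docs = len(tokenized)
    # Document frequency: in how many lyrics each term appears.
    df = Counter(term for tokens in tokenized for term in set(tokens))
    vectors = []
    for tokens in tokenized:
        tf = Counter(tokens)
        # Smoothed IDF so that terms present in every document keep a small weight.
        vectors.append({
            term: count * (math.log((1 + n_docs) / (1 + df[term])) + 1.0)
            for term, count in tf.items()
        })
    return vectors

def cosine(u, v):
    dot = sum(w * v.get(term, 0.0) for term, w in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0

# Invented placeholder lyrics, not taken from any real song.
lyrics = [
    "sunshine and joy fill the morning sky",
    "joy and sunshine dancing in the sky",
    "cold rain falls on the empty street",
]
vecs = tfidf_vectors(lyrics)
print(round(cosine(vecs[0], vecs[1]), 3), round(cosine(vecs[0], vecs[2]), 3))
```

The first two snippets share most of their vocabulary and score much higher than the first and third. A sentiment model or topic model would be needed to detect that two songs are both "uplifting" when they use different words.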

3.3. User Profile Construction

Constructing a comprehensive user profile is crucial for effective content-based filtering. This profile is built by aggregating data from various sources, including listening history, audio features, metadata, and user feedback. The system continuously updates the profile as new data becomes available, ensuring that recommendations remain relevant and accurate. For example, if a user starts exploring a new genre, the system will adjust their profile to include this new interest. The dynamic nature of user profiles allows the recommendation system to adapt to changing preferences and provide more personalized music suggestions over time. Machine learning models such as decision trees, k-nearest neighbors, and support vector machines can be used to analyze and update user profiles [9].
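A dynamic profile of this kind can be sketched as a feature vector that is nudged toward each newly played track. The exponential moving average used here is an assumed update rule chosen for simplicity, not one the paper prescribes; the three feature dimensions and all values are hypothetical.

```python
import numpy as np

def update_profile(profile, track_features, rate=0.2):
    """Blend a newly played track into the profile; `rate` sets how fast old tastes fade."""
    return (1.0 - rate) * profile + rate * track_features

def rank_candidates(profile, candidates):
    """Order candidate tracks by cosine similarity to the profile, most similar first."""
    norms = np.linalg.norm(candidates, axis=1) * np.linalg.norm(profile)
    sims = candidates @ profile / np.where(norms == 0, 1.0, norms)
    return np.argsort(-sims), sims

# Hypothetical feature order: [tempo (normalised), guitar presence, electronic texture]
profile = np.array([0.9, 0.8, 0.1])        # a listener who so far prefers fast guitar music
candidates = np.array([
    [0.8, 0.9, 0.0],                       # candidate 0: guitar-driven track
    [0.3, 0.1, 0.9],                       # candidate 1: electronic track
])

order_before, _ = rank_candidates(profile, candidates)

# The listener starts exploring electronic music: ten plays of similar tracks.
for _ in range(10):
    profile = update_profile(profile, np.array([0.3, 0.1, 0.9]))

order_after, _ = rank_candidates(profile, candidates)
print(order_before, order_after)
```

Before the new plays, the guitar track ranks first; afterwards the electronic track does. A larger `rate` makes the profile react faster but also makes it more sensitive to a handful of atypical plays.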

4. Hybrid Recommendation Systems

4.1. Combining Collaborative and Content-Based Methods

Hybrid recommendation systems synergize collaborative filtering and content-based filtering to capitalize on the strengths of both methodologies. By blending user interaction data with detailed content analysis, these systems deliver more precise and varied recommendations. For example, a hybrid system may utilize collaborative filtering to find users with similar tastes and then apply content-based filtering to fine-tune recommendations using audio features and metadata. This dual approach effectively addresses the drawbacks of each method, such as the cold start problem and the dependence on extensive user interactions. Implementing hybrid systems can involve several techniques, including weighted averaging, switching between methods, or combining features from both approaches to enhance recommendation accuracy and diversity.
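The weighted-averaging and switching strategies mentioned above can be combined in one small function. This is a minimal sketch: the weight `alpha`, the `min_interactions` threshold, and the convention of passing `None` when no collaborative score exists are all illustrative assumptions.

```python
def hybrid_score(cf_score, content_score, n_interactions, alpha=0.7, min_interactions=5):
    """Blend collaborative and content-based scores, with a cold-start switch.

    cf_score: collaborative filtering score in [0, 1], or None if unavailable.
    content_score: content-based score in [0, 1].
    n_interactions: how many interactions the item has accumulated so far.
    """
    # Switching: with too little interaction data, rely on content alone.
    if cf_score is None or n_interactions < min_interactions:
        return content_score
    # Weighted averaging: alpha controls how much the collaborative signal dominates.
    return alpha * cf_score + (1.0 - alpha) * content_score

print(round(hybrid_score(0.8, 0.4, n_interactions=120), 2))   # established track
print(round(hybrid_score(None, 0.4, n_interactions=0), 2))    # brand-new track
```

In practice `alpha` would be tuned on held-out data, and it could also vary smoothly with `n_interactions` instead of switching at a hard threshold.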

4.2. Model-Based Hybrid Approaches

Model-based hybrid approaches use machine learning algorithms to integrate collaborative and content-based features into a unified model. Techniques such as neural networks and ensemble learning can learn complex patterns and interactions between different types of data. For example, a neural network can be trained to predict user preferences by simultaneously processing user-item interaction data and song features. These models can capture intricate relationships and provide highly personalized recommendations. However, they require extensive training data and computational resources, making them challenging to implement and maintain [10]. Techniques such as deep collaborative filtering and hybrid autoencoders can be used to develop sophisticated hybrid models. Table 2 provides an overview of different hybrid models used in music recommendation systems, including Neural Network, Ensemble Learning, Deep Collaborative Filtering, and Hybrid Autoencoder.

Table 2. Model-Based Hybrid Approaches Results

| Model Type | Training Data Size (samples) | Training Time (hours) | Prediction Accuracy (%) | Computational Resources Required (CPUs) |
|---|---|---|---|---|
| Neural Network | 100000 | 10 | 92.5 | 32 |
| Ensemble Learning | 80000 | 8 | 90 | 24 |
| Deep Collaborative Filtering | 120000 | 12 | 93 | 40 |
| Hybrid Autoencoder | 150000 | 15 | 94.5 | 50 |
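The idea of a single model that processes interaction-derived and content-derived features together can be illustrated with a tiny neural network written in NumPy. Everything here is a toy under stated assumptions: the data is synthetic, the architecture (one hidden layer of 16 tanh units) and learning rate are arbitrary, and the sketch is unrelated to the models or figures reported in Table 2.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic stand-ins for 200 (user, song) pairs: 4 collaborative features
# (e.g. latent factors) and 3 content features (e.g. audio descriptors).
collab = rng.normal(size=(200, 4))
content = rng.normal(size=(200, 3))
X = np.hstack([collab, content])
# Hypothetical "liked" label that depends on both feature groups.
y = ((collab[:, 0] + content[:, 0] * content[:, 1]) > 0).astype(float)[:, None]

W1 = rng.normal(scale=0.5, size=(7, 16))
b1 = np.zeros(16)
W2 = rng.normal(scale=0.5, size=(16, 1))
b2 = np.zeros(1)

def forward(X):
    """One hidden tanh layer followed by a sigmoid output (probability of a like)."""
    h = np.tanh(X @ W1 + b1)
    p = 1.0 / (1.0 + np.exp(-(h @ W2 + b2)))
    return h, p

def log_loss(p, y):
    p = np.clip(p, 1e-9, 1 - 1e-9)
    return float(-np.mean(y * np.log(p) + (1 - y) * np.log(1 - p)))

losses = []
lr = 0.1
for _ in range(500):
    h, p = forward(X)
    losses.append(log_loss(p, y))
    # Backpropagation for a sigmoid output trained with cross-entropy loss.
    d_out = (p - y) / len(X)
    dW2 = h.T @ d_out
    db2 = d_out.sum(axis=0)
    d_h = (d_out @ W2.T) * (1 - h ** 2)
    dW1 = X.T @ d_h
    db1 = d_h.sum(axis=0)
    W1 -= lr * dW1
    b1 -= lr * db1
    W2 -= lr * dW2
    b2 -= lr * db2

print(round(losses[0], 3), round(losses[-1], 3))
```

Because both feature groups enter the same hidden layer, the network can learn interactions between them, which separate collaborative and content-based models combined by a fixed weight cannot. Production systems would use a deep learning framework, mini-batches, and a held-out validation set.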

4.3. Case Studies and Applications

Several successful case studies highlight the effectiveness of hybrid recommendation systems in real-world applications, showcasing their ability to enhance user satisfaction and engagement through personalized music recommendations. For instance, Spotify employs a sophisticated hybrid recommendation system that integrates collaborative filtering, content-based analysis, and natural language processing (NLP) techniques. Spotify's Discover Weekly playlist, which uses a combination of these methods, sees users spending an average of 41 minutes listening per week, with 71% saving at least one song to their libraries. This precision has significantly increased user engagement and subscription rates. Similarly, Pandora's Music Genome Project analyzes songs based on hundreds of musical attributes and combines this analysis with user interaction data to deliver personalized recommendations. Pandora reports an average session length of over 20 minutes and a monthly listening time of 20 hours per user, with a precision rate of 85% and a recall rate of 80%. These systems demonstrate the practical benefits of hybrid approaches, including increased user engagement, efficient scalability, diverse recommendations, and high user satisfaction.
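Precision and recall figures such as those quoted above are typically computed over a ranked list of recommendations. How the cited figures were measured is not stated, so the following sketch shows one common definition, precision and recall at a cutoff `k`, on made-up song IDs.

```python
def precision_recall_at_k(recommended, relevant, k):
    """Precision@k and recall@k for one user.

    recommended: ranked list of item IDs produced by the system.
    relevant: set of item IDs the user actually liked (ground truth).
    """
    top_k = recommended[:k]
    hits = sum(1 for item in top_k if item in relevant)
    precision = hits / k if k else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall

# Illustrative IDs only.
recommended = ["s1", "s2", "s3", "s4", "s5"]
relevant = {"s2", "s4", "s9", "s10"}
print(precision_recall_at_k(recommended, relevant, k=5))
```

Here two of the five recommendations are relevant (precision 0.4), and two of the four relevant songs were retrieved (recall 0.5). System-level figures are usually averages of these per-user values.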

5. Conclusion

In this study, we explored the integration of machine learning and data mining techniques within the realm of music information retrieval, with a particular emphasis on their application in music recommendation systems. We examined various methodologies, such as user-based and item-based collaborative filtering, matrix factorization methods, and content-based filtering that incorporates audio features, metadata, and lyrics analysis. Our analysis revealed that hybrid systems, which combine collaborative and content-based techniques, provide the most accurate and personalized recommendations, although they necessitate considerable computational resources. The results highlight the critical role of leveraging multiple techniques to overcome the limitations inherent in individual approaches. Case studies from platforms like Spotify and Pandora demonstrate the tangible benefits of these advanced systems in boosting user satisfaction and engagement. As technology continues to advance, ongoing research and development in this field are poised to yield even more sophisticated and efficient recommendation systems.

References

[1]. Ostermann, Fabian, Igor Vatolkin, and Martin Ebeling. "AAM: a dataset of Artificial Audio Multitracks for diverse music information retrieval tasks." EURASIP Journal on Audio, Speech, and Music Processing 2023.1 (2023): 13.

[2]. Franklin, Austin. "Building musical systems: An approach using real-time music information retrieval tools." Chroma: Journal of the Australasian Computer Music Association 39.2 (2023).

[3]. Oguike, Osondu, and Mpho Primus. "A Dataset for Multimodal Music Information Retrieval of Sotho-Tswana Musical Videos." Data in Brief (2024): 110672.

[4]. Bhargav, Samarth, Anne Schuth, and Claudia Hauff. "When the music stops: Tip-of-the-tongue retrieval for music." Proceedings of the 46th International ACM SIGIR Conference on Research and Development in Information Retrieval. 2023.

[5]. Kleć, Mariusz, et al. "Beyond the Big Five personality traits for music recommendation systems." EURASIP Journal on Audio, Speech, and Music Processing 2023.1 (2023): 4.

[6]. Kleć, Mariusz, et al. "Beyond the Big Five personality traits for music recommendation systems." EURASIP Journal on Audio, Speech, and Music Processing 2023.1 (2023): 4.

[7]. Tervaniemi, Mari. "The neuroscience of music–towards ecological validity." Trends in Neurosciences 46.5 (2023): 355-364.

[8]. Wimalaweera, Rakhitha, and Lakna Gammedda. "A Review on Music recommendation system based on facial expressions, with or without face mask." Authorea Preprints (2023).

[9]. Kutlimuratov, Alpamis, and Makhliyo Turaeva. "MUSIC RECOMMENDER SYSTEM." Science and innovation 2.Special Issue 3 (2023): 151-155.

[10]. Rashmi, C., et al. "EMOTION DETECTION MODEL-BASED MUSIC RECOMMENDATION SYSTEM." Turkish Journal of Computer and Mathematics Education (TURCOMAT) 14.03 (2023): 26-33.

Cite this article

Chen, Y. (2024). Music recommendation systems in music information retrieval: Leveraging machine learning and data mining techniques. Applied and Computational Engineering, 87, 197-202.

Data availability

The datasets used and/or analyzed during the current study will be available from the authors upon reasonable request.

Disclaimer/Publisher's Note

The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of EWA Publishing and/or the editor(s). EWA Publishing and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

About volume

Volume title: Proceedings of the 6th International Conference on Computing and Data Science

© 2024 by the author(s). Licensee EWA Publishing, Oxford, UK. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license. Authors who publish this series agree to the following terms:

1. Authors retain copyright and grant the series right of first publication with the work simultaneously licensed under a Creative Commons Attribution License that allows others to share the work with an acknowledgment of the work's authorship and initial publication in this series.

2. Authors are able to enter into separate, additional contractual arrangements for the non-exclusive distribution of the series's published version of the work (e.g., post it to an institutional repository or publish it in a book), with an acknowledgment of its initial publication in this series.

3. Authors are permitted and encouraged to post their work online (e.g., in institutional repositories or on their website) prior to and during the submission process, as it can lead to productive exchanges, as well as earlier and greater citation of published work (See Open access policy for details).
