1. Introduction

Sensors are now widely used in daily life and manufacturing. They have made people’s lives more convenient and comfortable through lights, fans, televisions and autonomous vehicles [1]. Knowledge of sensors is therefore fundamental for engineering practitioners. Autonomous vehicles are currently one of the most active areas of development, and sensors have many applications in this field. For distance detection, engineers can use ultrasonic sensors, lidar and visual sensors; these can be grouped as localization sensors. Control of the vehicle is equally important, which gives rise to a second category of sensors: inertial sensors such as gyroscopes. Many algorithms have been developed for the control of robots and vehicles, and a suitable algorithm can significantly enhance the performance of the sensors. For example, Simultaneous Localization and Mapping (SLAM) is widely used with visual and lidar sensors. This article discusses two main kinds of sensors. The first is localization sensors, including visual, lidar, ultrasonic and infrared sensors. The second is robot control sensors, represented by the inertial sensor (gyroscope).

2. Localization

Localization sensors are a major part of a mobile robot. They include many different sensors, such as visual and infrared sensors, and provide the data needed to locate the robot. These sensors have already been employed in many areas, including engineering and architecture. This section discusses visual, infrared, lidar and ultrasonic sensors, followed by SLAM.

2.1. Visual sensors

2.1.1. Introduction to visual sensors. A visual sensor is essentially a device composed of a camera, a storage unit, an energy supply and a communication interface. It is popular and already employed in many areas, including engineering. For instance, a robot needs to detect and identify its environment to complete certain missions, and an efficient and effective visual sensor can readily meet these identification and detection requirements. Visual sensors have been developed in different ways that exploit the characteristics of their hardware platforms. Most visual sensor applications have been developed using “Construction Blocks” [2].

2.1.2. Estimation of the performance. Although building visual sensors involves many complexities, there are effective and practical ways to estimate their performance. Several aspects can be assessed [2]. The first and major concern is operation time: how long the sensor can operate is the most relevant parameter when choosing suitable sensor hardware. The second concern is energy consumption, which is a key requirement for modern applications. The third concern is data storage, which matters in many real-life applications; increasingly efficient ways of storing data locally have become available. The fourth concern is visual quality, which is important for the precise localization of robots and cars. If the camera can deliver precise images of objects, the performance of visual sensors in robots and cars improves significantly.

2.1.3. Advantages and disadvantages of applications. In most scenarios, a visual sensor is not very expensive, and its repair cost is low. It is small, lightweight and very easy to install on a robot. Visual sensors are needed in many different areas, where they detect and identify the robot’s current environment so that it can carry out specific missions. However, visual sensors usually cannot detect small flaws of a robot; a small flaw may require a more sensitive method.

2.2. Infrared sensor

2.2.1. Introduction to the infrared sensor. Infrared radiation is a common form of electromagnetic radiation. Infrared detectors for thermal imaging have numerous applications [3]. For example, they can sense an object’s temperature, geometry, composition and location in space and in the atmosphere. Their contribution is very important for the development of current and future technology. Engineers should also be familiar with infrared sensors because they are used in various scenarios, such as object tracking, in which an object is followed by tracking the infrared radiation it emits.

2.2.2. Types of infrared sensor. An active infrared sensor includes both an emitter and a receiver, so it can send and receive signals. Its basic function is to emit infrared radiation and detect the radiation that returns to the detector. Typical examples of this kind of sensor are reflectance sensors and break-beam sensors.

A passive infrared sensor includes only a detector. It does not emit radiation itself; it receives the infrared radiation coming from the target or from other infrared sources and detects it with an infrared receiver.

In this case, the data from the sensor describe the infrared sources in the scene. The best-known example of this kind of sensor is the bolometer. There are two major types of passive infrared sensors: thermal infrared sensors and quantum infrared sensors. Thermal infrared sensors respond largely independently of wavelength and use heat as the energy source. Both their response time and their detection time are slow.

Quantum infrared sensors react faster than thermal ones, and their response depends on wavelength. However, the device needs time to cool down before exact measurements can be made.

2.2.3. Advantages and shortcomings of infrared sensor. An infrared sensor can detect radiation over a large area and is used in many different applications. It can also detect infrared radiation in real time; in most scenarios the sensor receives the signal within a short time. This type of sensor is generally inexpensive, so most engineers can afford to use it, and many different types from different companies are available on the market. However, because of inherent limitations of the infrared sensor, the image it produces may be blurred. High accuracy still requires sophisticated algorithms, and these challenges remain to be overcome.

2.3. Lidar

2.3.1. Introduction to the Lidar. Lidar stands for light detection and ranging. It is one of the most popular sensors today. Its function is to use pulsed laser light to measure the distance from the sensor to a target, and it has been employed in many different areas. The technology can provide a precise map of the Earth’s surface, including buildings, underground and even underwater areas [4]. The basic principle of lidar is to send out laser pulses and measure the delay between emission and return. Lidar data can therefore provide an accurate image of the environment. Engineers use this type of sensor in many applications, including city planning.
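The time-of-flight principle described above can be sketched in a few lines. This is a minimal illustration rather than any vendor’s implementation: the function name `lidar_range_m` is hypothetical, and the sketch assumes a single clean return pulse travelling at the vacuum speed of light.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # defined value of c in vacuum


def lidar_range_m(round_trip_delay_s: float) -> float:
    """Distance to the target from the delay between pulse emission and return.

    Hypothetical helper for illustration; assumes one clean return pulse.
    """
    if round_trip_delay_s < 0:
        raise ValueError("round-trip delay must be non-negative")
    # The pulse travels to the target and back, so the one-way
    # distance is half of the total path length.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_delay_s / 2.0


# A return detected 200 ns after emission corresponds to roughly 30 m.
print(f"{lidar_range_m(200e-9):.2f} m")
```

Because light covers about 0.3 m per nanosecond, centimetre-level ranging requires timing resolution well below a nanosecond, which is one reason lidar hardware is demanding.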

2.3.2. Applications of Lidar. Lidar is popular in aviation, where it can give a clear three-dimensional map of the Earth’s surface. Airborne lidar measures the distance between the aircraft and the target by illuminating the target with pulsed laser light and measuring the reflected pulses with the lidar sensor. Lidar therefore plays a significant role in this area.

In autonomous vehicle navigation, lidar can provide accurate navigation and exact mapping, which allows engineers to work towards driverless operation. The role of lidar here is indispensable. Lidar can also work together with other sensors. For instance, it can be combined with a gyroscope sensor to complete an autonomous driving mission: the lidar provides exact data about the surrounding environment, while the gyroscope helps with the control of the vehicle.

By using highly accurate data from the lidar sensor, simulated images in virtual reality can be made extremely realistic and deliver optimal performance for users. In the future, lidar sensors will be employed in more scenarios.

2.3.3. Advantages and shortcomings of the Lidar. Lidar can provide extremely detailed and exact data, and it still works well at a great distance from the target. In most scenarios, it generates a large amount of data in a short period, which makes it significant in surveying and mapping applications. Lidar is more cost-efficient than human labor when mapping over a great distance, and most lidars offered by well-known companies are inexpensive. Although the cost of lidar may be higher for some small-scale measurements, this problem is expected to be addressed as the technology advances. For instance, iPhones and iPads have started to use small-scale lidar.

However, lidar still faces some challenges. For a given distance, its performance varies with the surface. Textured surfaces, such as asphalt and clothing, produce predictable reflections and good performance. Surfaces such as paint markings on roads and reflective road signs are also good targets. In contrast, surfaces such as mirrors, glass and smooth water disperse the reflection very poorly, so the receiver may miss the return signal, which leads to inaccurate data. Lidar is also very sensitive to rain, snow and other extreme weather, under which its data become inaccurate or incomplete; measurements taken in morning fog are likewise inaccurate. To address these problems, most engineers use multiple sensors to enhance the performance of the mobile robot and obtain more accurate data.

2.4. Ultrasonic sensor

2.4.1. Introduction to the ultrasonic sensor. The ultrasonic sensor is an electronic device that measures the distance to a target object by emitting ultrasonic sound waves and timing their echo. It is widely used in various applications because it operates independently of the target’s color and resists environmental factors. It is a powerful tool in robotics, automation and even medical imaging. As a key part of a localization system, the most widely used ultrasonic sensors are based on piezoelectric or Microelectromechanical Systems (MEMS) transducers [5]. From an engineering perspective, the ultrasonic sensor is indispensable in many scenarios. For instance, it is widely used for distance measurement in an enclosed field with walls on each side.

2.4.2. Applications of the ultrasonic sensor. Ultrasonic sensing is useful for positioning robotic arms and mobile robots. The ultrasonic sensor provides real-time data on the object, and engineers can use this positioning data to control the robot more precisely. In an enclosed environment, the ultrasonic sensor can perform proximity detection by estimating the distance to the object. The data can be used to avoid collisions and to measure the diameter of objects such as rolls more accurately.

2.4.3. Advantages and shortcomings of the ultrasonic sensor. The ultrasonic sensor is a high-precision device, which is the main reason it has been employed in so many engineering applications. In most scenarios, it gives engineers precise data in a short time. It resists interference and is not affected by the target’s color. It also provides consistent results, shows high reliability and reacts very quickly. It should be noted, however, that the speed of sound depends on humidity and temperature, so environmental conditions can affect the measured distance. The ultrasonic sensor may also not be the optimal choice for measuring very small objects, because the sensor itself may be too large in that case.
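To make the temperature dependence concrete, the following sketch converts an echo time into a distance. It is an illustration under stated assumptions: the function names are hypothetical, the speed of sound uses the common linear approximation for dry air (331.3 + 0.606 T m/s, with T in degrees Celsius), and humidity is ignored.

```python
def speed_of_sound_m_per_s(air_temp_c: float) -> float:
    """Approximate speed of sound in dry air (humidity is ignored here)."""
    return 331.3 + 0.606 * air_temp_c


def ultrasonic_distance_m(echo_time_s: float, air_temp_c: float = 20.0) -> float:
    """Distance to the target from the round-trip echo time.

    Hypothetical helper for illustration only.
    """
    if echo_time_s < 0:
        raise ValueError("echo time must be non-negative")
    # The sound wave travels to the target and back, hence the factor 1/2.
    return speed_of_sound_m_per_s(air_temp_c) * echo_time_s / 2.0


# The same 10 ms echo is interpreted differently at 0 °C and at 40 °C.
for temp_c in (0.0, 20.0, 40.0):
    print(f"{temp_c:4.1f} °C -> {ultrasonic_distance_m(0.010, temp_c):.4f} m")
```

Under this approximation, assuming 20 °C when the air is actually at 0 °C overestimates the distance by a few percent, which is why a temperature reading is often used to compensate the measurement.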

2.5. SLAM control

Simultaneous Localization and Mapping (SLAM) is a key technology in contemporary robotics and spatial computing. It uses data from an array of sensors, including cameras, lidar and inertial measurement units (IMUs). The technology has become prevalent in autonomous vehicles, navigation and autonomous robotics. A SLAM system offers high accuracy and better environment mapping. There are two major categories of SLAM methodologies: visual SLAM and lidar SLAM. Each approach has its advantages and disadvantages [6].

2.5.1. Visual SLAM. Visual SLAM benefits from the low cost and compact size of cameras. It can also produce accurate color images of the surrounding environment. Its principal shortcoming is its dependence on triangulating disparities from multi-view imagery [7]. Moreover, its estimation performance for distant environments and outdoor scenes is poor and easily disturbed.
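The weakness at long range follows from the triangulation geometry. The sketch below uses the simplest case, a rectified stereo pair, as an assumption for illustration; the source does not specify a camera model, and the function names and the example focal length and baseline are hypothetical.

```python
def depth_from_disparity_m(focal_length_px: float, baseline_m: float,
                           disparity_px: float) -> float:
    """Depth Z = f * B / d for a rectified stereo pair (illustrative model)."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


def depth_error_m(focal_length_px: float, baseline_m: float, depth_m: float,
                  disparity_error_px: float = 1.0) -> float:
    """First-order depth uncertainty: |dZ| ~= Z^2 / (f * B) * |dd|."""
    return depth_m ** 2 / (focal_length_px * baseline_m) * disparity_error_px


# Assumed camera: 700 px focal length, 12 cm baseline.
f_px, b_m = 700.0, 0.12
for depth in (2.0, 20.0):
    err = depth_error_m(f_px, b_m, depth)
    print(f"depth {depth:5.1f} m -> about {err:.2f} m error per pixel of disparity error")
```

Since the error grows with the square of the depth, a target ten times farther away is roughly one hundred times less certain in this model, which is consistent with the poor performance on distant scenes noted above.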

2.5.2. Lidar SLAM. Lidar SLAM exploits the precise and extensive measurement capabilities of lidar sensors. However, it may struggle in environments with limited geometric features. It also cannot capture the color images that a visual sensor can [8].

3. Robot control

For a mobile robot, control is as important as localization. This section mainly discusses the inertial sensor and PID control, which are also addressed later in the discussion of sensor-based algorithms.

3.1. Inertial sensor

An inertial sensor measures changes in linear velocity and angular motion relative to inertial space. The most frequently used inertial sensors are accelerometers, gyroscopic sensors and magnetic sensors, which can be embedded in the body [9]. The basic principle of the inertial sensor is to transduce inertial forces into electrical signals in order to measure specific quantities such as acceleration [10]. Accurate data from the inertial sensor, combined with suitable algorithms, can enhance the control of the robot. This section focuses mainly on gyroscope sensors.

3.1.1. The gyroscope sensor. The term “gyroscope”, which conventionally refers to the mechanical class of gyroscopes, derives from Ancient Greek; the underlying physics is that of “precession motion”, a phenomenon that was also observed in ancient Greek society. A gyroscope sensor is typically used to measure angular velocity and to maintain orientation [11]. It mainly consists of a spinning disc or wheel that can rotate freely. When the disc or wheel rotates, it exhibits the properties of angular momentum, which help the sensor resist changes in orientation. The basic principle of the gyroscope sensor is the conservation of angular momentum.

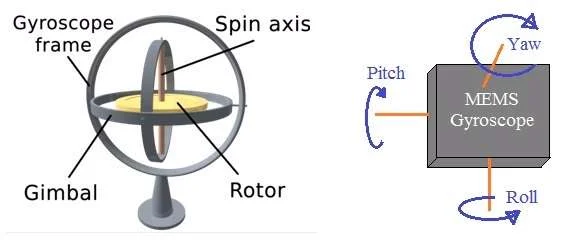

3.1.2. The components of the gyroscope sensor. The components of the gyroscope sensor are the spin axis, the rotor and the frame, as shown in Figure 1. This structure helps the gyroscope sensor maintain its orientation and resist changes in its position [12]. The spin axis is a major part of the gyroscope sensor. It is the primary axis of rotation, and it always follows the principle of conservation of angular momentum. The rotor is the spinning wheel or disc mounted on the spin axis; it is the key component that exhibits the angular momentum properties. The gyroscope frame is the outer structure that provides a reference frame for the gyroscope. It houses the basic mechanics of the gyroscope and connects it to external systems or devices.

Figure 1. The components of the gyroscope sensor.

3.1.3. Applications of the gyroscope sensor. The gyroscope sensor is essential for the stabilization and control of aircraft and satellites. During flight, the gyroscope helps the aircraft maintain stability. In drones, the gyroscope sensor ensures stability and an accurate response to the pilot’s commands. The gyroscope can also be combined with Proportional-Integral-Derivative (PID) control to improve the performance of a robot: with consistent and accurate data from the gyroscope, PID control can keep the robot more stable.

3.1.4. Advantages and shortcomings of the gyroscope. The gyroscope sensor is small and lightweight. Its reaction speed is very fast, and it can provide accurate data in a short time.

The gyroscope can measure all types of rotation and relative orientation in all three axes.
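Because a gyroscope reports angular velocity, an orientation angle is obtained by integrating its output over time. The sketch below shows this for a single axis only; the function name is hypothetical, the samples are assumed to arrive at a fixed interval, and three-axis attitude estimation (which needs a proper rotation representation) is deliberately left out.

```python
from typing import Iterable, List


def integrate_yaw_deg(rates_deg_per_s: Iterable[float], dt_s: float,
                      initial_yaw_deg: float = 0.0) -> List[float]:
    """Accumulate single-axis gyroscope rates into a yaw angle.

    Hypothetical helper for illustration; uses simple rectangular integration.
    """
    if dt_s <= 0:
        raise ValueError("sample interval must be positive")
    yaw = initial_yaw_deg
    history = []
    for rate in rates_deg_per_s:
        yaw += rate * dt_s  # angle change over one sample interval
        history.append(yaw)
    return history


# One second of a constant 10 deg/s turn, sampled at 100 Hz.
yaw_track = integrate_yaw_deg([10.0] * 100, dt_s=0.01)
print(f"final yaw: {yaw_track[-1]:.2f} deg")
```

Any constant error in the measured rate is integrated in the same way as the true signal, so the angle error accumulates with time unless it is corrected by another sensor.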

3.2. PID Control

Proportional-Integral-Derivative (PID) control is a commonly used control method in engineering. A PID controller has three basic coefficients, proportional, integral and derivative, which are tuned to obtain the optimal response. It can be combined with a gyroscope sensor, which gives the angle of the robot in real time. Based on the gyroscope data, the robot can be controlled more stably, and an engineer can use this to bring the robot to its optimal speed.
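A minimal discrete-time PID loop is sketched below as an illustration of the three terms. The class, the gain values and the toy plant (a robot whose heading changes at exactly the commanded turn rate) are assumptions made for this example and are not taken from any cited work; the measured heading stands in for the angle provided by the gyroscope.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PID:
    """Textbook PID controller (illustrative; gains are not tuned for real hardware)."""
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: Optional[float] = None

    def update(self, setpoint: float, measurement: float, dt: float) -> float:
        if dt <= 0:
            raise ValueError("dt must be positive")
        error = setpoint - measurement
        self.integral += error * dt  # integral term accumulates past error
        # No derivative on the first call because there is no previous error yet.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate_heading_hold(target_deg: float = 90.0, steps: int = 1000,
                          dt: float = 0.01) -> float:
    """Drive a toy robot heading towards target_deg and return the final heading."""
    pid = PID(kp=4.0, ki=0.5, kd=0.1)  # assumed gains for the toy plant
    heading_deg = 0.0  # stands in for the gyroscope-derived angle
    for _ in range(steps):
        turn_rate = pid.update(target_deg, heading_deg, dt)  # commanded deg/s
        heading_deg += turn_rate * dt  # toy plant: heading follows the command
    return heading_deg


print(f"heading after 10 s: {simulate_heading_hold():.2f} deg")
```

In practice the three gains are tuned on the real system; the proportional term reacts to the present error, the integral term removes steady offsets and the derivative term damps rapid changes.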

4. Future developments and suggestions

4.1. Combined use of sensors

Sensors are increasingly used in combination to achieve better robot performance. A single sensor may not meet the engineers’ requirements, and as technology has advanced, the combined use of sensors has emerged to solve problems that a single sensor cannot. This section covers some of these combinations. Three main kinds of sensors are addressed: cameras (visual sensors), IMUs and infrared sensors.
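One simple way to see why combining sensors helps is inverse-variance weighting of two independent distance readings. This is a generic textbook technique offered as an illustration, not a method described in the cited works; the function name and the example noise levels are assumptions, and the sketch assumes unbiased, independent errors.

```python
import math
from typing import List, Tuple


def fuse_ranges(measurements: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Fuse (range_m, std_dev_m) pairs by inverse-variance weighting.

    Returns the fused range and its standard deviation.
    Assumes independent, unbiased measurement errors.
    """
    if not measurements:
        raise ValueError("at least one measurement is required")
    weights = [1.0 / (std * std) for _, std in measurements]
    total_weight = sum(weights)
    fused = sum(w * r for w, (r, _) in zip(weights, measurements)) / total_weight
    fused_std = math.sqrt(1.0 / total_weight)
    return fused, fused_std


# Assumed readings: a lidar (2.00 m, 2 cm noise) and an ultrasonic sensor (2.10 m, 5 cm noise).
fused_range, fused_std = fuse_ranges([(2.00, 0.02), (2.10, 0.05)])
print(f"fused range: {fused_range:.3f} m, std: {fused_std:.4f} m")
```

The fused estimate sits closer to the more precise sensor, and its standard deviation is smaller than that of either sensor alone, which is the basic benefit of a multi-sensor system under these assumptions.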

4.2. Visual-inertial algorithm

The combined use of a camera and an IMU can improve the performance of the robot, especially when paired with a suitable algorithm. The IMU is a major help in estimating the degrees of freedom of motion. Because of its small size, light weight, low cost and, most importantly, its ability to measure at high frequency the three-axis angular velocities and linear accelerations of the sensing platform to which it is rigidly attached, the IMU has been widely used in navigation systems [13]. The camera provides tracking and mapping information about the environment around the sensing platform and can serve as an ideal complementary sensor to the IMU [13].
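The complementary roles of the two sensors can be illustrated with a simple complementary filter on a single heading angle. This is a deliberately simplified stand-in, not the visual-inertial algorithm of the cited work: the function name, the blending factor `alpha` and the assumption that the camera yields an absolute heading at every step are all hypothetical.

```python
from typing import List, Sequence


def complementary_filter(gyro_rates_deg_s: Sequence[float],
                         camera_headings_deg: Sequence[float],
                         dt_s: float, alpha: float = 0.98) -> List[float]:
    """Blend fast gyroscope integration with slower, drift-free camera headings."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    heading = camera_headings_deg[0]  # initialise from the camera
    fused = []
    for rate, cam_heading in zip(gyro_rates_deg_s, camera_headings_deg):
        predicted = heading + rate * dt_s  # short-term: trust the IMU
        heading = alpha * predicted + (1.0 - alpha) * cam_heading  # long-term: trust the camera
        fused.append(heading)
    return fused


# Stationary robot, gyroscope with an assumed 1 deg/s bias, camera reporting 0 deg.
steps, dt = 1000, 0.01
fused = complementary_filter([1.0] * steps, [0.0] * steps, dt)
gyro_only = sum(rate * dt for rate in [1.0] * steps)
print(f"gyro only: {gyro_only:.2f} deg, fused: {fused[-1]:.2f} deg")
```

In this toy case the integrated gyroscope bias grows without bound, while the fused heading stays bounded because the camera term keeps pulling it back towards the observed value.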

4.3. Infrared-inertial algorithm

The combined use of infrared and inertial sensors has become a prevailing topic, and each sensor plays an indispensable role. In this combination, the IMU measures three-axis angular velocity and linear acceleration and, combined with PID control, can perform well. The infrared sensor detects warm objects through their infrared radiation and thereby provides accurate data about the environment.

4.4. Machine learning

Machine learning algorithms have become increasingly prevalent. Traditional approaches to problem-solving and decision-making have many shortcomings compared with machine learning, which can process vast amounts of information and uncover insights. It is a pioneering approach that may unlock the path to intelligent automation. Machine learning can enable intelligent sensor design and can significantly improve the overall performance of a given sensor system [14]. Two emerging examples in which machine learning improves sensor design are described in the following sections.

4.5. Machine learning-based design of a point-of-care diagnostic sensor for Lyme disease

This is a computational point-of-care sensor for rapid Lyme disease testing [14]. The sensor measures eight immunoglobulin-M (IgM) and eight immunoglobulin-G (IgG) antibodies associated with Lyme disease. The computational sensing system demonstrated improved diagnostic sensitivity and specificity while lowering the cost per test.

4.6. Machine learning-based sensor design in the field of synthetic biology

A deep learning-based design framework has been demonstrated for sensing target proteins and small molecules, potentially enabling numerous next-generation biosensing technologies. To overcome the design hurdle, the engineers used a deep learning method to build a ‘sequence-to-function’ framework that predicts the real-world function and response of RNA toehold switches. This application of deep learning therefore represents a powerful computational tool for the sensing community [15].

5. Conclusion

In summary, this article discusses two major types of sensors in engineering and explores the applications, advantages and disadvantages of each. The first type is localization sensors, which include visual, lidar, infrared and ultrasonic sensors; SLAM-based sensor systems further enhance their performance. The second type is robot control sensors, represented by the inertial sensor (gyroscope), together with PID control, which is prevalent in robot control. Future improvements are also proposed. A single sensor still has performance problems in some environments, so the combined use of sensors has emerged to significantly enhance robot performance; a multi-sensor system can bring more accuracy and stability. Machine learning is also expected to become more significant in the future of engineering, and this article discusses two applications of machine learning-based sensor design. All in all, sensors play an indispensable role.

References

[1]. Patel, Bhagwati Charan; Sinha, G. R.; Goel, Naveen (2020) “Introduction to sensors”, in Advances in modern science of IOP science

[2]. D. G. Costa (2020) “Visual Sensors Hardware Platforms: A Review”, in IEEE Sensors Journal, vol. 20, no. 8, pp. 4025-4033

[3]. Amir Karim and Jan Y Andersson (2013) “Infrared detectors: Advances, challenges and new technologies” IOP Conf. Ser.: Mater. Sci. Eng. 51 012001

[4]. A. P. S and S. A (2023) “Advancements and Applications of LiDAR Technology in the Modern World: A Comprehensive Review”, in 2023 3rd International Conference on Electrical, Computer, Communications and Mechatronics Engineering (ICECCME)

[5]. A. Sun, Z. Wu, D. Fang, J. Zhang and W. Wang (2016) “Multimode Interference-Based Fiber-Optic Ultrasonic Sensor for Non-Contact Displacement Measurement”, in IEEE Sensors Journal, vol. 16, no. 14, pp. 5632-5635

[6]. Cai, Yiyi, Yang Ou, and Tuanfa Qin (2024) “Improving SLAM Techniques with Integrated Multi-Sensor Fusion for 3D Reconstruction”, in Sensors 24, no. 7: 2033. https://doi.org/10.3390/s24072033

[7]. J. Li et al. (2022) “A Hardware Architecture of Feature Extraction for Real-Time Visual SLAM”, in IECON 2022 – 48th Annual Conference of the IEEE Industrial Electronics Society

[8]. Zhao, Jianwei, Shengyi Liu, and Jinyu Li (2022) “Research and Implementation of Autonomous Navigation for Mobile Robots Based on SLAM Algorithm under ROS”, in Sensors 22, no. 11: 4172. https://doi.org/10.3390/s22114172

[9]. Ulkuhan Guler, Tuna B. Tufan, Aatreya Chakravarti, Yifei Jin and Maysam Ghovanloo (2023) “Implantable and Wearable Sensors for Assistive Technologies”, in Encyclopedia of Sensors and Biosensors (First Edition), ed. Roger Narayan, Elsevier, pp. 449-473

[10]. Vincenzo Pesce, Andrea Colagrossi and Stefano Silvestrini (2023) “Sensors”, in Modern Spacecraft Guidance, Navigation, and Control

[11]. NASA. Available online: https://solarsystem.nasa.gov/news/2005/03/14/brief-history-of-gyroscopes (accessed on 24 June 2017).

[12]. “Advantages of Gyroscope | disadvantages of Gyroscope”, rfwireless-world.com

[13]. Ballard, Z., Brown, C., Madni, A.M. et al. (2021) “Machine learning and computation-enabled intelligent sensor design”, in Nat Mach Intell 3, 556–565

[14]. Hyou-Arm Joung, Zachary S. Ballard, Jing Wu, Derek K. Tseng, Hailemariam Teshome, Linghao Zhang, Elizabeth J. Horn, Paul M. Arnaboldi, Raymond J. Dattwyler, Omai B. Garner, Dino Di Carlo, and Aydogan Ozcan (2020) “Point-of-Care Serodiagnostic Test for Early-Stage Lyme Disease Using a Multiplexed Paper-Based Immunoassay and Machine Learning”, in ACS Nano 2020, 229-240

[15]. Angenent-Mari, N.M., Garruss, A.S., Soenksen, L.R. et al. (2020) “A deep learning approach to programmable RNA switches”, in Nat Commun 11

Cite this article

Yang,Z. (2024). Review on sensor technology in robot positioning and control solution. Applied and Computational Engineering,93,7-14.

Data availability

The datasets used and/or analyzed during the current study will be available from the authors upon reasonable request.

Disclaimer/Publisher's Note

The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of EWA Publishing and/or the editor(s). EWA Publishing and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

About volume

Volume title: Proceedings of the 2nd International Conference on Machine Learning and Automation

© 2024 by the author(s). Licensee EWA Publishing, Oxford, UK. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license. Authors who publish this series agree to the following terms:

1. Authors retain copyright and grant the series right of first publication with the work simultaneously licensed under a Creative Commons Attribution License that allows others to share the work with an acknowledgment of the work's authorship and initial publication in this series.

2. Authors are able to enter into separate, additional contractual arrangements for the non-exclusive distribution of the series's published version of the work (e.g., post it to an institutional repository or publish it in a book), with an acknowledgment of its initial publication in this series.

3. Authors are permitted and encouraged to post their work online (e.g., in institutional repositories or on their website) prior to and during the submission process, as it can lead to productive exchanges, as well as earlier and greater citation of published work (See Open access policy for details).
