1. Introduction

Motor dysfunction is a widespread health problem that affects a large number of people worldwide, particularly people with spinal cord injury (SCI) or stroke. The incidence of SCI is rising year by year in many countries, and many patients permanently lose the ability to walk, which seriously affects their quality of life and mental health. According to recent data, there are about 12 million new stroke cases worldwide each year, with a disability rate of 50% to 70%, and most of these patients face serious motor dysfunction during rehabilitation [1]. Promoting neuroplasticity and motor relearning can improve motor ability, but this requires intensive, repetitive training of motor skills. Some rehabilitation equipment requires therapists to assist patients manually during training, which makes training inefficient. Experimental tests [2] indicate that applying exoskeleton robots to physical activity and gait training is effective in improving gait and physical function and in increasing cardiopulmonary metabolic efficiency. Rehabilitation exoskeleton robots have therefore received increasing attention for exercise training, since they can automate tedious, time-intensive rehabilitation exercises and achieve more beneficial therapeutic effects. Coordinated control of an exoskeleton depends on a variety of key technologies, and one of the main technical difficulties in the field is delivering rehabilitation training that improves patients' motor ability and assists their movement in real, complex environments.

This paper therefore discusses gait planning based on traditional visual models, gait coordination control that combines sEMG with visual models, and gait coordination control strategies that incorporate VR technology. It examines the effectiveness of these techniques for motor dysfunction, aiming to provide insights and a foundation for future research and development of rehabilitation exoskeleton robots.

2. Vision-based

Modeling the real environment with depth cameras, radar, or comparable sensors enables online gait planning and more complex functions such as ascending and descending stairs and crossing obstacles. It enhances the situational awareness of exoskeleton robots, helps them select footholds, and assists patients in navigating more complex environments. Nonetheless, current research still needs to improve real-time footstep planning as well as practicality and flexibility. Most popular lower limb exoskeleton robots currently concentrate on rehabilitation functions, and comparatively few studies address scene perception.

2.1. Stair detection

2.1.1. Line-based extraction methods. Line-based extraction methods treat the geometric features of stairs as a set of continuously distributed lines [3]. Conventional image-processing techniques are difficult to adapt to complex real-world scenes and work only in specific contexts. To address this issue, Wang et al. proposed StairNet [4-6], which has since reached its third generation. StairNet [4] detects stair lines end to end with a fully convolutional neural network, letting the deep learning model extract stair-line features directly and yielding significant gains in both accuracy and speed. StairNetV2 [5] addresses detection in low-visibility scenes with a binocular input network and a selection module that effectively fuses RGB and depth information. StairNetV3 [6] introduces a depth estimation network that combines the broad applicability of monocular StairNet with the robustness of binocular StairNetV2 in complex scenes.
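The geometric assumption behind conventional line-based pipelines can be sketched in a few lines. The following is a minimal illustration under stated assumptions, not StairNet: it takes line segments already produced by some edge or line detector (the input format is assumed), keeps the near-horizontal ones, and checks whether their image rows are roughly evenly spaced, as stair edges tend to be in a frontal view. The function names and both tolerances are hypothetical.

```python
import math
from typing import List, Tuple

# A line segment (x1, y1, x2, y2) in image pixels, assumed to come from any line detector.
Segment = Tuple[float, float, float, float]


def near_horizontal(seg: Segment, max_angle_deg: float = 10.0) -> bool:
    """True if the segment lies within max_angle_deg of the image horizontal."""
    x1, y1, x2, y2 = seg
    angle = math.degrees(math.atan2(abs(y2 - y1), abs(x2 - x1)))
    return angle <= max_angle_deg


def stair_edge_rows(segments: List[Segment], max_angle_deg: float = 10.0) -> List[float]:
    """Mean image row of every near-horizontal segment, sorted from top to bottom."""
    rows = [(s[1] + s[3]) / 2.0 for s in segments if near_horizontal(s, max_angle_deg)]
    return sorted(rows)


def looks_like_stairs(segments: List[Segment], min_edges: int = 3,
                      spacing_tol: float = 0.25) -> bool:
    """Hypothetical rule: enough horizontal edges whose vertical gaps are nearly equal."""
    rows = stair_edge_rows(segments)
    if len(rows) < min_edges:
        return False
    gaps = [b - a for a, b in zip(rows, rows[1:])]
    mean_gap = sum(gaps) / len(gaps)
    if mean_gap <= 0:
        return False
    # Every gap must stay within spacing_tol (relative) of the mean gap.
    return all(abs(g - mean_gap) <= spacing_tol * mean_gap for g in gaps)
```

Perspective foreshortening makes real stair-edge spacing non-uniform and clutter adds spurious lines, so hand-tuned rules like this one break easily; that fragility is the gap learned detectors such as StairNet aim to close.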

2.1.2. Plane-based extraction methods. Plane-based extraction methods treat stairs as a series of continuously distributed planes. After a point cloud is acquired with a vision sensor, segmentation and clustering techniques are used to identify the vertical and horizontal planes of the steps [3]. Cao [7] developed a deep learning recognition model based on RepVGG to identify exoskeleton walking scenarios. After generating a 3D point cloud from the RGB-D camera image and converting it into a binary image, Cao introduced a robust stair parameter estimation method based on the stair planes: the stair planes are first segmented with a region-growing segmentation method, and an improved RANSAC algorithm is then used to estimate the tread dimensions accurately.
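The RANSAC step can be illustrated with a plain (not improved) version. The sketch below is an assumption-laden simplification rather than Cao's method: it fits one plane at a time to a gravity-aligned point cloud (z pointing up is assumed), removes its inliers, fits the next tread, and takes the riser height as the difference of mean tread heights. The names, the 1 cm inlier threshold, and the iteration count are hypothetical.

```python
import random
from typing import List, Optional, Tuple

Point = Tuple[float, float, float]
# Plane (a, b, c, d) with unit normal (a, b, c): a*x + b*y + c*z + d = 0
Plane = Tuple[float, float, float, float]


def plane_from_points(p1: Point, p2: Point, p3: Point) -> Optional[Plane]:
    """Plane through three points, or None if they are (nearly) collinear."""
    u = [p2[i] - p1[i] for i in range(3)]
    v = [p3[i] - p1[i] for i in range(3)]
    n = [u[1] * v[2] - u[2] * v[1],
         u[2] * v[0] - u[0] * v[2],
         u[0] * v[1] - u[1] * v[0]]
    norm = sum(c * c for c in n) ** 0.5
    if norm < 1e-9:
        return None
    a, b, c = (ci / norm for ci in n)
    return (a, b, c, -(a * p1[0] + b * p1[1] + c * p1[2]))


def distance(p: Point, plane: Plane) -> float:
    a, b, c, d = plane
    return abs(a * p[0] + b * p[1] + c * p[2] + d)


def ransac_plane(points: List[Point], threshold: float = 0.01,
                 iterations: int = 200, seed: int = 0) -> Tuple[Plane, List[Point]]:
    """Plain RANSAC: keep the three-point plane hypothesis with the most inliers."""
    rng = random.Random(seed)
    best_plane: Optional[Plane] = None
    best_inliers: List[Point] = []
    for _ in range(iterations):
        plane = plane_from_points(*rng.sample(points, 3))
        if plane is None:
            continue
        inliers = [p for p in points if distance(p, plane) <= threshold]
        if len(inliers) > len(best_inliers):
            best_plane, best_inliers = plane, inliers
    if best_plane is None:
        raise ValueError("no non-degenerate plane hypothesis found")
    return best_plane, best_inliers


def mean_height(points: List[Point]) -> float:
    """Average z; assumes the z axis points up (gravity-aligned cloud)."""
    return sum(p[2] for p in points) / len(points)
```

For two synthetic treads at 0 m and 0.15 m, fitting the first plane, dropping its inliers, and fitting again recovers a riser height of about 0.15 m. A region-growing pre-segmentation, as described above, would restrict each RANSAC run to one candidate region instead of the whole cloud.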

Most research on plane-based extraction methods improves real-time performance through techniques such as point cloud downsampling, dimensionality reduction, optimized normal estimation, and more efficient clustering.
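Point cloud downsampling is usually the cheapest of these speed-ups. A common variant is the voxel-grid filter (point cloud libraries such as PCL ship one); the sketch below is a self-contained re-implementation for illustration, replacing all points in each cubic voxel with their centroid. The function name and voxel size are illustrative choices, not taken from the cited works.

```python
import math
from collections import defaultdict
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]


def voxel_downsample(points: List[Point], voxel_size: float) -> List[Point]:
    """Replace all points that fall into the same cubic voxel by their centroid."""
    if voxel_size <= 0:
        raise ValueError("voxel_size must be positive")
    buckets: Dict[Tuple[int, ...], List[Point]] = defaultdict(list)
    for p in points:
        # Integer voxel index along each axis.
        key = tuple(math.floor(c / voxel_size) for c in p)
        buckets[key].append(p)
    result = []
    for key in sorted(buckets):  # sorted only to make the output order deterministic
        pts = buckets[key]
        result.append(tuple(sum(p[i] for p in pts) / len(pts) for i in range(3)))
    return result
```

The voxel size trades speed against geometric detail: it should stay well below the smallest stair dimension of interest (for example, riser height) so that planes remain separable after downsampling.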

2.2. Obstacle detection

Obstacle detection typically uses a camera to capture a depth image of the walking environment, which is then converted into a 3D point cloud. To reduce the computational cost of point cloud segmentation, An et al. [8] used a convolutional neural network (CNN) to determine whether obstacles are present in the scene and to identify the region of interest (ROI) containing them; point cloud segmentation is then performed only within the ROI to extract the obstacle point cloud. Li et al. [9] developed an adaptive machine vision system that integrates a CNN with a biomimetic MoS₂/WSe₂ transistor to mitigate the effect of image brightness on CNN-based image recognition, enabling rapid adaptation and accurate recognition across varying brightness conditions. Identifying obstacles in real street environments is essential if exoskeleton robots are to help users adapt to their surroundings. Bi et al. [10] introduced Ct-Net, derived from PSMNet, which integrates components such as GFE, feature adapters, and DACOM for stereo matching in robotic applications across diverse scenes. With such depth estimates, an exoskeleton robot can assess the depth of its environment accurately, plan a route, and avoid obstacles.
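The saving from ROI-restricted segmentation comes from back-projecting only the pixels inside the detected box. The minimal sketch below assumes a pinhole camera with known intrinsics (fx, fy, cx, cy) and an ROI box already produced by some detector; it does not reproduce An et al.'s network. The second helper shows the standard rectified-stereo relation Z = f·B/d that turns a disparity map, such as the one a stereo matcher like Ct-Net estimates, into depth. All names are illustrative.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def roi_point_cloud(depth: Sequence[Sequence[float]],
                    fx: float, fy: float, cx: float, cy: float,
                    roi: Tuple[int, int, int, int]) -> List[Point]:
    """Back-project only pixels inside roi = (u_min, v_min, u_max, v_max), max exclusive.

    Pinhole model: X = (u - cx) * Z / fx, Y = (v - cy) * Z / fy, Z = depth[v][u].
    Pixels with non-positive depth (missing measurements) are skipped.
    """
    u_min, v_min, u_max, v_max = roi
    cloud = []
    for v in range(v_min, v_max):
        for u in range(u_min, u_max):
            z = depth[v][u]
            if z <= 0:
                continue
            cloud.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return cloud


def depth_from_disparity(disparity_px: float, focal_px: float, baseline_m: float) -> float:
    """Rectified stereo: Z = f * B / d (f and d in pixels, B in metres)."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px
```

Because cost grows with the number of back-projected pixels, a box covering a tenth of the image cuts the downstream segmentation workload by roughly the same factor.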

Semantic image synthesis methods have long benefited from CNN-based modeling, but because convolutions perceive only local context, their performance has improved only marginally in recent years. To address this, Ke et al. [11] proposed SC-UNet for semantic image synthesis, a UNet-like architecture that combines the Swin Transformer with a CNN. An exoskeleton robot can use semantic image synthesis to generate realistic images from an input semantic layout map, which improves its perception of semantic information about its surroundings, such as object locations and categories, and enables more accurate control and interaction.

2.3. Environment-oriented adaptive gait planning

Environment-oriented adaptive gait planning is a gait planning approach used in devices such as robots and exoskeletons. It incorporates environmental factors such as terrain and obstacles into gait planning, so that the device can adapt its joint positions and movement trajectories to environmental changes and achieve more efficient, stable, and natural locomotion. The approach typically embeds the geometric properties of the environment in the spatio-temporal trajectory planning of the device's end effector and computes the required joint angles with suitable methods, such as inverse kinematics.
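The inverse kinematics step can be shown for the simplest case, a planar two-link leg (thigh and shank) in the sagittal plane. This is a minimal sketch under assumed conventions: hip at the origin, x forward, z up, angles measured from the downward vertical, and a knee that only flexes backward. The segment lengths in the usage check are illustrative values, not taken from any cited exoskeleton.

```python
import math
from typing import Tuple


def leg_ik(x: float, z: float, thigh: float, shank: float) -> Tuple[float, float]:
    """Hip and knee flexion (rad) that place the ankle at (x, z) relative to the hip."""
    cos_knee = (x * x + z * z - thigh ** 2 - shank ** 2) / (2 * thigh * shank)
    if abs(cos_knee) > 1.0 + 1e-9:
        raise ValueError("target out of reach")
    knee = math.acos(max(-1.0, min(1.0, cos_knee)))  # clamp tiny rounding overshoot
    # Direction to the ankle, plus the offset the flexed knee introduces.
    hip = math.atan2(x, -z) + math.atan2(shank * math.sin(knee),
                                         thigh + shank * math.cos(knee))
    return hip, knee


def leg_fk(hip: float, knee: float, thigh: float, shank: float) -> Tuple[float, float]:
    """Forward kinematics: ankle position for the given joint angles (checks leg_ik)."""
    kx, kz = thigh * math.sin(hip), -thigh * math.cos(hip)
    return kx + shank * math.sin(hip - knee), kz - shank * math.cos(hip - knee)
```

In an environment-oriented planner, a stair or obstacle would set the sequence of ankle targets (x, z) along the swing trajectory, and each target is converted to joint angles this way; `leg_fk(*leg_ik(0.2, -0.7, 0.42, 0.40), 0.42, 0.40)` returns approximately (0.2, -0.7).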

Zou et al. proposed a terrain-adaptive gait planning method based on Kernelized Movement Primitives (KMP), which models the human-exoskeleton system as a cart-pendulum model (CPM) and measures terrain geometry parameters with a visual sensor. Using KMP, the exoskeleton can adjust gait characteristics such as step length and step height to generate suitable gait trajectories for different terrains, providing stability and smooth movement over diverse surfaces. This approach significantly improves the robustness of the exoskeleton in dynamic settings [12].

Xiang used Dynamic Movement Primitives (DMPs) to improve the degree of bionic imitation, which makes them well suited to environment-oriented adaptive gait planning [13]. However, DMPs cannot be adjusted at intermediate points of the trajectory. Cao therefore used Via-points Movement Primitives (VMPs). Stair descent is analyzed over a complete gait cycle that takes into account the stair parameters, thigh length, calf length, and gait cycle, among others; nonlinear equations describe the trajectories of the hip and ankle joints, and the angle formula for each joint is derived by inverse kinematics. Gait planning is then performed for each phase by modulating key points according to the stair dimensions, so the method can be applied to stairs of different sizes [7].
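To make the DMP idea concrete, the sketch below implements the common textbook discrete DMP for one joint: a critically damped spring toward the goal plus a learned forcing term that decays with a phase variable. It is not Xiang's implementation; the gains, the basis-width heuristic, and the class name are assumptions. Changing the goal rescales the whole learned motion, while nothing in the formulation lets one pin an intermediate point, which is the limitation noted above for DMPs.

```python
import math
from typing import List


class DMP1D:
    """Minimal discrete DMP for a single joint (textbook formulation, Euler-integrated)."""

    def __init__(self, n_basis: int = 20, alpha_z: float = 25.0, alpha_x: float = 4.0):
        self.alpha_z, self.beta_z, self.alpha_x = alpha_z, alpha_z / 4.0, alpha_x
        # Gaussian basis centres spread over the phase variable x = exp(-alpha_x * t / tau).
        self.c = [math.exp(-alpha_x * i / (n_basis - 1)) for i in range(n_basis)]
        self.h = [n_basis ** 1.5 / c / alpha_x for c in self.c]  # width heuristic
        self.w = [0.0] * n_basis
        self.tau = 1.0

    def _psi(self, x: float) -> List[float]:
        return [math.exp(-h * (x - c) ** 2) for h, c in zip(self.h, self.c)]

    def fit(self, demo: List[float], dt: float) -> None:
        """Learn forcing-term weights from one demonstrated trajectory sampled every dt."""
        n = len(demo)
        y0, g = demo[0], demo[-1]
        if abs(g - y0) < 1e-9:
            raise ValueError("start and goal must differ in this formulation")
        self.tau = dt * (n - 1)
        yd = [(demo[i + 1] - demo[i]) / dt for i in range(n - 1)] + [0.0]
        ydd = [(yd[i + 1] - yd[i]) / dt for i in range(n - 1)] + [0.0]
        num = [0.0] * len(self.w)
        den = [0.0] * len(self.w)
        for i in range(n):
            x = math.exp(-self.alpha_x * i * dt / self.tau)
            # Forcing needed to reproduce the demo: tau^2*ydd = alpha_z*(beta_z*(g-y) - tau*yd) + f
            f = self.tau ** 2 * ydd[i] - self.alpha_z * (
                self.beta_z * (g - demo[i]) - self.tau * yd[i])
            s = x * (g - y0)
            for k, p in enumerate(self._psi(x)):
                num[k] += p * s * f
                den[k] += p * s * s
        # Locally weighted regression: one independent weight per basis function.
        self.w = [a / b if b > 1e-12 else 0.0 for a, b in zip(num, den)]

    def rollout(self, y0: float, g: float, dt: float) -> List[float]:
        """Generate a trajectory from y0 to a (possibly new) goal g over the learned duration."""
        y, z, x = y0, 0.0, 1.0
        out = [y]
        for _ in range(int(round(self.tau / dt))):
            psi = self._psi(x)
            f = sum(p * w for p, w in zip(psi, self.w)) / (sum(psi) + 1e-12) * x * (g - y0)
            dz = (self.alpha_z * (self.beta_z * (g - y) - z) + f) / self.tau
            dy = z / self.tau
            dx = -self.alpha_x * x / self.tau
            z, y, x = z + dz * dt, y + dy * dt, x + dx * dt
            out.append(y)
        return out
```

Fitting a demonstrated knee trajectory once and calling `rollout` with a larger goal (for example, a taller step) produces a scaled version of the same movement shape.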

However, gait planning for ascending and descending stairs alone is insufficient in real environments that contain obstacles. Liu et al. determined the distance to and dimensions of floor obstacles from the depth image. Following predefined safety parameters, an autonomous decision-making model converts the obstacle distance and dimensions into the required step length and step height. Nonlinear equations describe the spatial trajectories of the hip and ankle joints, and the joint angle trajectories are obtained through factorial space placement and inverse kinematics. Several modes (initial, normal, transition, and terminal) are defined, each with associated constraints, to enable precise gait pattern planning [14].
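The decision step, turning obstacle distance and size into step parameters under safety margins, can be sketched as a simple rule. Everything below is hypothetical (the foot length, step limits, margins, and clearance are invented defaults, and Liu et al.'s actual model is not reproduced): the toe approaches to a fixed margin before the obstacle in equal normal-sized steps, then one crossing step clears the obstacle so that the heel lands a margin past its far edge.

```python
import math
from dataclasses import dataclass


@dataclass
class CrossingPlan:
    approach_steps: int     # normal steps taken before the crossing step
    approach_length: float  # length of each approach step (m)
    cross_length: float     # length of the crossing step (m)
    cross_height: float     # peak foot clearance during the crossing step (m)


def plan_crossing(distance: float, depth: float, height: float,
                  foot_length: float = 0.25, normal_step: float = 0.40,
                  max_step: float = 0.60, max_height: float = 0.20,
                  margin: float = 0.05, clearance: float = 0.05) -> CrossingPlan:
    """Map obstacle distance/size (e.g. from a depth image) to step parameters.

    distance: toe-to-obstacle distance along the walking direction (m)
    depth, height: obstacle extent along the walking direction and its height (m)
    All limits and margins are hypothetical safety parameters.
    """
    approach = distance - margin  # stop the toe `margin` before the obstacle
    if approach < 0:
        raise ValueError("too close to the obstacle to plan a safe crossing")
    steps = math.ceil(approach / normal_step) if approach > 0 else 0
    step_len = approach / steps if steps else 0.0
    # Toe travels past the obstacle so the heel lands `margin` beyond its far edge.
    cross_len = margin + depth + margin + foot_length
    cross_h = height + clearance
    if cross_len > max_step or cross_h > max_height:
        raise ValueError("obstacle exceeds the configured safety limits")
    return CrossingPlan(steps, step_len, cross_len, cross_h)
```

The resulting step length and height would then feed the joint-trajectory generation (for example, ankle targets converted by inverse kinematics); raising an error when limits are exceeded stands in for the stop or transition mode a real controller would select.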

The gait planning methods above drive the joints along a bionic gait. During real operation, however, human-exoskeleton interaction makes it difficult for the exoskeleton to execute the planned gait precisely. To address this, Yu and Yao developed an online correction method based on the zero-moment point (ZMP): the joint trajectories are first fitted with B-spline curves, and the error between the actual and desired ZMP trajectories is then corrected online to mitigate the influence of the wearer [15].
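The ZMP that such a correction compares against its reference can be computed from a point-mass model of the human-exoskeleton system. The sketch below uses the standard multi-mass sagittal ZMP formula, neglecting link rotational inertia, and pairs it with a hypothetical saturated proportional correction of the hip's horizontal position under a quasi-static assumption (moving the hip forward moves the ZMP forward). The gain and limit are invented, and this is not Yu and Yao's correction law.

```python
from typing import Sequence

GRAVITY = 9.81  # m/s^2


def zmp_x(masses: Sequence[float], x: Sequence[float], z: Sequence[float],
          x_acc: Sequence[float], z_acc: Sequence[float], g: float = GRAVITY) -> float:
    """Sagittal ZMP of a set of point masses (link rotational inertia neglected).

    x_zmp = sum m_i ((z_acc_i + g) x_i - x_acc_i z_i) / sum m_i (z_acc_i + g)
    """
    num = sum(m * ((za + g) * xi - xa * zi)
              for m, xi, zi, xa, za in zip(masses, x, z, x_acc, z_acc))
    den = sum(m * (za + g) for m, za in zip(masses, z_acc))
    return num / den


def corrected_hip_x(planned_hip_x: float, zmp_ref: float, zmp_measured: float,
                    gain: float = 0.5, max_shift: float = 0.03) -> float:
    """Hypothetical proportional correction: shift the hip to reduce the ZMP error."""
    shift = gain * (zmp_ref - zmp_measured)
    shift = max(-max_shift, min(max_shift, shift))  # saturate for safety
    return planned_hip_x + shift
```

For a static posture the ZMP reduces to the mass-weighted horizontal position (masses of 10 kg at x = 0 and 5 kg at x = 0.3 m give 0.1 m), while forward acceleration of the upper body pulls the ZMP backward.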

3. Gait coordination control based on visual models fused with sEMG

Although environmental data obtained by computer vision aids exoskeleton control, it cannot replace the information about the user's movement intentions supplied by other sensors; the two sources should complement each other to achieve more accurate and effective control. Deep neural networks (DNNs) have outperformed conventional approaches in advanced myoelectric control (MEC) systems. Integrating myoelectric data with visual models such as convolutional neural networks (CNNs) may therefore improve human-robot interaction and the coordinated control of exoskeleton robots, and this integration is a primary direction for future study.

Zhu et al. proposed a hybrid model that combines a convolutional neural network (CNN) with a long short-term memory (LSTM) network and uses an improved kernel-based principal component analysis (PCA) for dimensionality reduction and removal of redundant information. The proposed method achieves an accuracy of about 98.5% [16]. This work is a first step toward discrete decoding for myoelectric control of an assistive exoskeleton robot and is important for the future development of neuro-controlled exoskeletons: better perception of the user's lower limb movement intentions enables more precise control and support. In rehabilitation training, the exoskeleton robot can then provide tailored support based on the user's motions to facilitate recovery.
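The front end of such a pipeline can be sketched with classic sEMG time-domain features followed by kernel PCA. This is an illustration only: the feature set (MAV, RMS, waveform length, zero crossings), the RBF kernel, and gamma are common choices assumed here, Zhu et al.'s "improved" PCA variant is not reproduced, and the CNN-LSTM classifier that would consume the reduced features is omitted.

```python
import numpy as np


def emg_features(window: np.ndarray, zc_threshold: float = 0.01) -> np.ndarray:
    """Classic time-domain sEMG features: MAV, RMS, waveform length, zero crossings."""
    diffs = np.diff(window)
    mav = np.mean(np.abs(window))
    rms = np.sqrt(np.mean(window ** 2))
    wl = np.sum(np.abs(diffs))
    # Count sign changes whose amplitude step exceeds a small noise threshold.
    zc = np.sum((window[:-1] * window[1:] < 0) & (np.abs(diffs) >= zc_threshold))
    return np.array([mav, rms, wl, zc], dtype=float)


def windowed_features(signal: np.ndarray, win: int, step: int) -> np.ndarray:
    """Feature matrix (n_windows, 4) from overlapping windows of one channel."""
    starts = range(0, len(signal) - win + 1, step)
    return np.stack([emg_features(signal[s:s + win]) for s in starts])


def kernel_pca(X: np.ndarray, n_components: int, gamma: float = 1.0) -> np.ndarray:
    """RBF kernel PCA; returns projections of the training samples."""
    sq = np.sum(X ** 2, axis=1)
    d2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * X @ X.T, 0.0)
    K = np.exp(-gamma * d2)
    n = K.shape[0]
    one = np.full((n, n), 1.0 / n)
    Kc = K - one @ K - K @ one + one @ K @ one  # centre the data in feature space
    vals, vecs = np.linalg.eigh(Kc)             # eigenvalues in ascending order
    order = np.argsort(vals)[::-1][:n_components]
    vals, vecs = np.clip(vals[order], 0.0, None), vecs[:, order]
    return vecs * np.sqrt(vals)                 # projection_jk = sqrt(lambda_k) * v_jk
```

Features should be standardized per column before the RBF kernel, since waveform length and zero-crossing counts live on very different scales from MAV and RMS.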

Zhang et al. applied deep learning architectures, including CNN-RNN, CNN-GNN, and CNN models, to lower limb movement recognition. The models' feature extraction and fusion capabilities were strengthened through graph data construction, novel graph generation techniques, cross-modal information interaction, and embedded attention mechanisms. In experiments, these frameworks achieved high accuracy across several tasks, including a maximum average accuracy of 95.198% for lower limb movement recognition in healthy subjects, 99.784% for movement recognition in stroke patients, and 99.845% for phase recognition during the sit-to-stand (SitTS) process [17].
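One generic way an attention mechanism can fuse modalities is to score each modality's embedding against a query vector, normalize the scores with a softmax, and take the weighted sum. The sketch below shows that scaled dot-product pattern in plain Python; it is an assumption for illustration, not the architecture of Zhang et al., and in a trained model the query would be a learned parameter rather than a passed-in list.

```python
import math
from typing import Dict, List, Tuple


def softmax(scores: List[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_fuse(features: Dict[str, List[float]],
                   query: List[float]) -> Tuple[List[float], Dict[str, float]]:
    """Scaled dot-product attention over per-modality embeddings.

    features: one embedding per modality (e.g. sEMG, IMU), all as long as `query`.
    query: learned in a real model; here simply an input (hypothetical).
    """
    names = sorted(features)
    dim = len(query)
    scores = [sum(q * f for q, f in zip(query, features[n])) / math.sqrt(dim) for n in names]
    weights = softmax(scores)
    fused = [sum(w * features[n][i] for w, n in zip(weights, names)) for i in range(dim)]
    return fused, dict(zip(names, weights))
```

The returned weights show how much each modality contributed to a given decision, which is one reason attention-based fusion is attractive when one sensor (for example, a poorly attached sEMG electrode) becomes unreliable.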

Beyond lower limb motion prediction, sEMG has also been used for gesture recognition: Liu et al. introduced CNN-ViT, a model for surface electromyography (sEMG)-based gesture recognition. The model combines a convolutional neural network (CNN) with a Vision Transformer (ViT) and employs a weighting method, achieving an average accuracy of 86.15% across several datasets [18]. With such recognition, exoskeleton robots can assist according to the user's hand motions, improving exercise efficiency and comfort. The approach also offers insights for lower limb exoskeleton robots that must accurately identify the user's movement goals, respond promptly to commands, and improve the effectiveness of the human-machine interface.
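One plausible reading of "a weighting method" for combining two networks is weighted late fusion: a convex combination of the CNN's and the ViT's class probabilities, with the weight chosen on validation data. The sketch below assumes that reading (the source does not specify the scheme), and the helper names and grid resolution are hypothetical.

```python
from typing import List, Sequence


def fuse(p_cnn: Sequence[float], p_vit: Sequence[float], w_cnn: float) -> List[float]:
    """Convex combination of two class-probability vectors."""
    if not 0.0 <= w_cnn <= 1.0:
        raise ValueError("w_cnn must lie in [0, 1]")
    return [w_cnn * a + (1.0 - w_cnn) * b for a, b in zip(p_cnn, p_vit)]


def argmax(values: Sequence[float]) -> int:
    return max(range(len(values)), key=lambda i: values[i])


def pick_weight(val_cnn: Sequence[Sequence[float]], val_vit: Sequence[Sequence[float]],
                labels: Sequence[int], steps: int = 10) -> float:
    """Grid-search w_cnn on a validation set; ties keep the smallest weight."""
    best_w, best_acc = 0.0, -1.0
    for k in range(steps + 1):
        w = k / steps
        correct = sum(argmax(fuse(pc, pv, w)) == y
                      for pc, pv, y in zip(val_cnn, val_vit, labels))
        acc = correct / len(labels)
        if acc > best_acc:
            best_w, best_acc = w, acc
    return best_w
```

When the two models make different mistakes, an intermediate weight can outperform either model alone, which is the usual motivation for combining a local-feature CNN with a global-context Transformer.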

4. Combined with VR technology

The advancement of VR enables the simulation of real environments, offering users an immersive and interactive training setting that facilitates the convenient and effective acquisition of real-time data feedback, thereby enhancing gait control and rehabilitation training for lower limb exoskeleton robots.

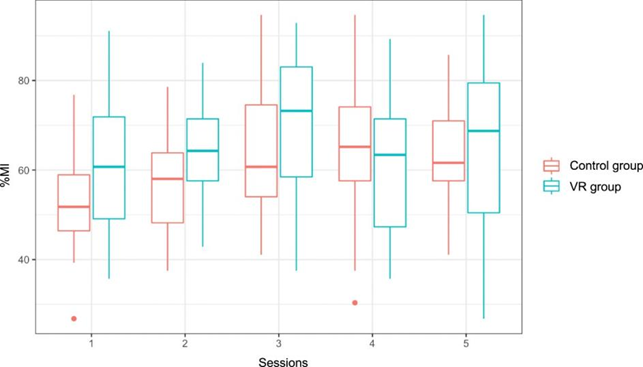

Ferrero et al. used a brain-computer interface (BCI) based on motor imagery (MI) to acquire and classify electroencephalography (EEG) data, identifying the subject's motor intention and translating it into control commands, which enables precise control of the exoskeleton robot's gait and supports the subject in gait training and rehabilitation [19]. The VR training system improves the subject's ability to perform motor imagery and hence the performance of the BCI, allowing the exoskeleton robot to follow the subject's intentions more closely and improving gait coordination and stability, as seen in Figure 1.
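The path from EEG to an exoskeleton command can be illustrated with a deliberately simple MI decoder. The sketch assumes trials are already band-pass filtered, uses per-channel log-variance (a standard band-power proxy) as features, and replaces the usual spatial filtering and linear classifier (for example, CSP with LDA) with a nearest-class-mean rule. It is not Ferrero et al.'s pipeline, and the label names and command mapping are hypothetical.

```python
import math
from typing import Dict, List, Sequence

Trial = Sequence[Sequence[float]]  # trial[channel][sample], assumed band-pass filtered EEG


def log_variance(trial: Trial) -> List[float]:
    """Log of per-channel variance, a common band-power feature for MI decoding."""
    feats = []
    for ch in trial:
        mean = sum(ch) / len(ch)
        var = sum((s - mean) ** 2 for s in ch) / len(ch)
        feats.append(math.log(var + 1e-12))
    return feats


class NearestMeanClassifier:
    """Assigns a trial to the class whose mean feature vector is closest (Euclidean)."""

    def fit(self, trials: Sequence[Trial], labels: Sequence[str]) -> "NearestMeanClassifier":
        sums: Dict[str, List[float]] = {}
        counts: Dict[str, int] = {}
        for trial, label in zip(trials, labels):
            f = log_variance(trial)
            acc = sums.setdefault(label, [0.0] * len(f))
            for i, v in enumerate(f):
                acc[i] += v
            counts[label] = counts.get(label, 0) + 1
        self.means = {lab: [v / counts[lab] for v in s] for lab, s in sums.items()}
        return self

    def predict(self, trial: Trial) -> str:
        f = log_variance(trial)
        return min(self.means,
                   key=lambda lab: sum((a - b) ** 2 for a, b in zip(f, self.means[lab])))


# Hypothetical mapping from decoded intention to an exoskeleton command.
COMMANDS = {"motor_imagery": "walk", "rest": "stop"}
```

In a closed-loop system the predicted label would be smoothed over several consecutive windows before a command is issued, since a single misclassified window should not start or stop the exoskeleton.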

Figure 1. Boxplot of accuracies obtained in trials in movement for each session and each group.

Similarly, Chen et al. developed an EMG-based gait pattern adaptation method that allows wearers to adjust the gait of a lower limb exoskeleton robot with EMG signals [20]. VR provides participants with real-time visual feedback, which improves the quality of the EMG signals and gait data collected during the experiment.
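A minimal version of EMG-driven gait adaptation extracts a muscle-activation envelope, normalizes it with per-user calibration levels, and maps it to a gait parameter. The sketch below assumes DC removal, full-wave rectification, and a causal moving average for the envelope, plus a linear mapping from activation to step length; the calibration values, step-length range, and function names are hypothetical and do not describe Chen et al.'s method.

```python
from typing import List, Sequence


def emg_envelope(raw: Sequence[float], window: int) -> List[float]:
    """Remove DC offset, full-wave rectify, then causal moving average over `window` samples."""
    if window < 1:
        raise ValueError("window must be >= 1")
    offset = sum(raw) / len(raw)
    rect = [abs(s - offset) for s in raw]
    env, running = [], 0.0
    for i, r in enumerate(rect):
        running += r
        if i >= window:
            running -= rect[i - window]  # drop the sample leaving the window
        env.append(running / min(i + 1, window))
    return env


def normalized_activation(level: float, rest: float, mvc: float) -> float:
    """Scale an envelope value to [0, 1] using per-user rest and MVC calibration levels."""
    if mvc <= rest:
        raise ValueError("mvc must exceed rest")
    return max(0.0, min(1.0, (level - rest) / (mvc - rest)))


def step_length(activation: float, min_len: float = 0.20, max_len: float = 0.50) -> float:
    """Hypothetical linear map from activation to step length (m)."""
    return min_len + activation * (max_len - min_len)
```

The per-user rest and maximum voluntary contraction (MVC) levels are where individual calibration enters; real-time visual feedback in VR can help the wearer produce more repeatable activation levels during such calibration.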

VR could serve as a dynamic and adaptable training environment to improve the accuracy, stability, and adaptability of gait control systems in lower limb exoskeletons. This could lead to more effective and efficient rehabilitation and assistance for users of such robotic systems.

5. Discussion

Traditional vision systems give lower limb exoskeleton robots substantial autonomy, enabling real-time environmental monitoring and gait adjustment that improve mobility for people with limited physical or cognitive capabilities. Their navigational precision supports careful planning of foot placement and joint motion, so the device can avoid obstacles and maintain stability. However, these systems are sensitive to environmental conditions and may perform poorly in low light, on reflective surfaces, or in visually cluttered scenes. Moreover, conventional vision systems focus mainly on external data and do not directly incorporate the user's physiological state or intentions, which can lead to control that does not match the user's needs.

A gait control system that combines surface electromyography (sEMG) inputs with visual models improves user engagement. sEMG signals give the system a better understanding of the user's muscle activity and intentions, resulting in more intuitive and responsive control of the exoskeleton and movement that more consistently matches the user's expectations. Real-time sEMG input provides high responsiveness, allowing the exoskeleton to adapt its movements dynamically to the user's goals and thereby improving safety and effectiveness. However, individual differences in muscle anatomy, skin properties, and physiological state can alter sEMG signal characteristics, so personalized signal processing and calibration are necessary; otherwise control performance may suffer. Moreover, sEMG signals are susceptible to noise and interference, particularly during movement, with changes in skin moisture, or with loosely attached electrodes, all of which can degrade signal quality and reduce the precision and reliability of gait control.

The research reviewed here indicates that these approaches are complementary. Combining vision-based gait planning, sEMG-based motion intention prediction, and virtual reality (VR) in a lower limb exoskeleton can create a cohesive system that substantially improves robot-assisted mobility. Vision-based gait planning provides accurate navigation and contextual awareness, which are crucial for safe and stable locomotion. sEMG-based motion intention prediction lets the exoskeleton adjust dynamically to the user's muscle activity, giving a more natural and responsive interaction. VR extends the system with an immersive, customizable training environment that can be tailored to each user's needs and progress. Integrating the three technologies is expected to markedly improve the intelligence and adaptability of exoskeleton robots.

6. Conclusion

This study focuses on gait control of lower limb exoskeletons, reviews recent studies and related work, and summarizes the advantages and disadvantages of these methods. By outlining a comprehensive vision that combines environmental awareness, user intention prediction, and immersive training, this work aims to pave the way for more effective and user-friendly exoskeleton systems. Future studies should focus on optimizing the integration of these systems to improve their performance, particularly their responsiveness and robustness in diverse environments.

References

[1]. American Heart Association Council on Epidemiology and Prevention Statistics Committee and Stroke Statistics Subcommittee 2024 2024 Heart Disease and Stroke Statistics: A Report of US and Global Data From the American Heart Association Circulation vol 149 no 8 p e347-e913

[2]. Sun M Cui S Wang Z et al 2024 A laser-engraved wearable gait recognition sensor system for exoskeleton robots Microsyst Nanoeng vol 10 p 50

[3]. Wang C Pei Z Fan Y Qiu S Tang Z 2024 Review of Vision-Based Environmental Perception for Lower-Limb Exoskeleton Robots Biomimetics vol 9 p 254

[4]. Wang C Pei Z Qiu S Tang Z 2022 Deep learning-based ultra-fast stair detection Sci Rep vol 12 p 16124

[5]. Wang C Pei Z Qiu S Tang Z 2023 RGB-D-Based Stair Detection and Estimation Using Deep Learning Sensors vol 23 p 2175

[6]. Wang C Pei Z Qiu S Tang Z 2024 StairNetV3 Depth-aware stair modeling using deep learning Vis Comput

[7]. Cao Z 2023 Research on Visual Perception and Gait Planning of Lower Extremity Exoskeletons Hangzhou Dianzi University

[8]. An D Zhu A Yue X Dang D Zhang Y 2022 Environmental obstacle detection and localization model for cable-driven exoskeleton UR p 64-69

[9]. Li L Li S Wang W et al 2024 Adaptative machine vision with microsecond-level accurate perception beyond human retina Nat Commun vol 15 p 6261

[10]. Bi Y Li C Tong X et al 2023 An application of stereo matching algorithm based on transfer learning on robots in multiple scenes Sci Rep vol 13 p 12739

[11]. Ke A Luo J Cai B 2024 UNet-like network fused swin transformer and CNN for semantic image synthesis Sci Rep vol 14 p 16761

[12]. Zou C Peng Z Shi K Mu F Huang R Cheng H 2023 Terrain-Adaptive Gait Planning for Lower Limb Walking Assistance Exoskeleton Robots CCC p 4773-4779

[13]. Xiang S 2020 Research and Implementation of Gait Planning Method for Walking Exoskeleton Ascend and Descend Stairs University of Electronic Science and Technology of China

[14]. Liu D-X Xu J Chen C Long X Tao D Wu X 2021 Vision-Assisted Autonomous Lower-Limb Exoskeleton Robot IEEE Transactions on Systems Man and Cybernetics Systems vol 51 no 6 p 3759-3770

[15]. Yu Z Yao J 2022 Gait Planning of Lower Extremity Exoskeleton Climbing Stair based on Online ZMP Correction J Mech Transm vol 44 p 62-67

[16]. Zhu M Guan X Li Z et al 2023 sEMG-Based Lower Limb Motion Prediction Using CNN-LSTM with Improved PCA Optimization Algorithm J Bionic Eng vol 20 p 612-627

[17]. Zhang C Yu Z Wang X Chen Z-J Deng C Xie S Q 2024 Exploration of deep learning-driven multimodal information fusion frameworks and their application in lower limb motion recognition Biomed Signal Process Control vol 96 p 106551

[18]. Liu X Hu L Tie L Jun L Wang X Liu X 2024 Integration of Convolutional Neural Network and Vision Transformer for gesture recognition using sEMG Biomed Signal Process Control vol 98 p 106686

[19]. Ferrero L Quiles V Ortiz M Iáñez E Gil-Agudo Á Azorín J M 2023 Brain-computer interface enhanced by virtual reality training for controlling a lower limb exoskeleton iScience vol 26 no 5 p 106675

[20]. Chen W Lyu M Ding X Wang J Zhang J 2023 Electromyography-controlled lower extremity exoskeleton to provide wearers flexibility in walking Biomed Signal Process Control vol 79 no 2 p 104096

Cite this article

Yang, L. (2024). A Review of Gait Coordination Control Methods for Lower Limb Exoskeleton Robots Based on Visual Models. Applied and Computational Engineering, 80, 168-174.

Data availability

The datasets used and/or analyzed during the current study will be available from the authors upon reasonable request.

Disclaimer/Publisher's Note

The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of EWA Publishing and/or the editor(s). EWA Publishing and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

About volume

Volume title: Proceedings of CONF-MLA Workshop: Mastering the Art of GANs: Unleashing Creativity with Generative Adversarial Networks

© 2024 by the author(s). Licensee EWA Publishing, Oxford, UK. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license. Authors who publish this series agree to the following terms:

1. Authors retain copyright and grant the series right of first publication with the work simultaneously licensed under a Creative Commons Attribution License that allows others to share the work with an acknowledgment of the work's authorship and initial publication in this series.

2. Authors are able to enter into separate, additional contractual arrangements for the non-exclusive distribution of the series's published version of the work (e.g., post it to an institutional repository or publish it in a book), with an acknowledgment of its initial publication in this series.

3. Authors are permitted and encouraged to post their work online (e.g., in institutional repositories or on their website) prior to and during the submission process, as it can lead to productive exchanges, as well as earlier and greater citation of published work (See Open access policy for details).
