1. Introduction

Since the 1990s, motion capture (MoCap) technology has developed rapidly and is now used in film and television production, game development, sports analysis, medical rehabilitation, virtual reality, and augmented reality. Its core principle is to digitally record the trajectories of moving objects or the human body so that dynamic behavior can be reproduced accurately. The technology enhances the realism of virtual character animation and provides valuable data for rehabilitation therapy and for behavioral analysis research in medicine, psychology, and sports science [1-4]. Its continued development has opened new avenues for interdisciplinary applications, advancing creativity in the entertainment industry and providing technical support for sports optimization and rehabilitation medicine [3,4]. In the entertainment sector, MoCap improves the authenticity of virtual characters; in the medical field, it is applied to optimize patient rehabilitation assessments and treatment plans [1,4]. Markerless motion capture, based on deep learning and computer vision, captures motion without physical markers, which makes the technology more flexible and intelligent [5,6]. This review covers both contact-based and non-contact MoCap technologies (inertial, mechanical, electromagnetic, optical, depth-camera, and LiDAR systems) and highlights the advantages and challenges encountered in various applications. The development of MoCap is not limited to the traditional entertainment and medical fields but is continuously expanding into emerging areas.
With advances in AI and deep learning, motion capture has become more intelligent and automated. In the future, MoCap is expected to be more widely applied in high-tech fields such as autonomous driving, intelligent robotics, and virtual reality, providing foundational support for innovation in these industries. As markerless technology spreads, MoCap systems should also become more flexible and user-friendly [5], with lower equipment costs that open up more everyday application scenarios. Overall, MoCap is expected to achieve broader interdisciplinary integration, driving technological progress and innovation across industries.

This paper systematically reviews how modern motion capture technology is implemented and adapted, and discusses its current applications and technical challenges in various industries. It evaluates the strengths and weaknesses of existing technologies and suggests potential directions for future development, such as the popularization of markerless motion capture and its integration with artificial intelligence, both of which may promote wider adoption. The study is based on a literature review and an analysis of existing technologies. It first introduces contact-based motion capture technologies: inertial, mechanical, and electromagnetic methods. Most of these rely on sensors and exoskeletons to capture motion by measuring the rotation angles of human joints [7]. It then turns to non-contact techniques: optical systems, depth cameras, and LiDAR. The technologies are compared across application scenarios in terms of cost, accuracy, occlusion handling, and device convenience. The analysis draws on the literature review and on typical application scenarios, focusing on healthcare, entertainment, and virtual reality.
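To make the joint-angle principle of contact-based capture concrete, the following is a minimal sketch rather than any cited system's method. It assumes a planar limb, joint angles reported relative to the previous segment, and known segment lengths; the function name and the numeric values are illustrative assumptions. Under those assumptions, joint positions follow from the measured angles by forward kinematics.

```python
import math

def forward_kinematics_2d(joint_angles_deg, segment_lengths):
    """Return the (x, y) position of each joint along a planar chain.

    joint_angles_deg: rotation of each joint relative to the previous
        segment, in degrees (what an angle sensor would report).
    segment_lengths: length of each segment in metres (assumed known).
    """
    x, y, heading = 0.0, 0.0, 0.0
    points = [(x, y)]  # the chain starts at the origin
    for angle_deg, length in zip(joint_angles_deg, segment_lengths):
        heading += math.radians(angle_deg)  # relative angles accumulate
        x += length * math.cos(heading)
        y += length * math.sin(heading)
        points.append((x, y))
    return points

# Hypothetical arm: shoulder at the origin, upper arm 0.30 m, forearm 0.25 m.
# The elbow ends up near (0, 0.30) and the wrist near (0.25, 0.30).
arm = forward_kinematics_2d([90.0, -90.0], [0.30, 0.25])
```

Real systems work in three dimensions with full rotations per joint, but the accumulation of relative rotations along the chain is the same idea.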

2. Motion Capture Technology (MoCap)

2.1. Basic Process of Motion Capture

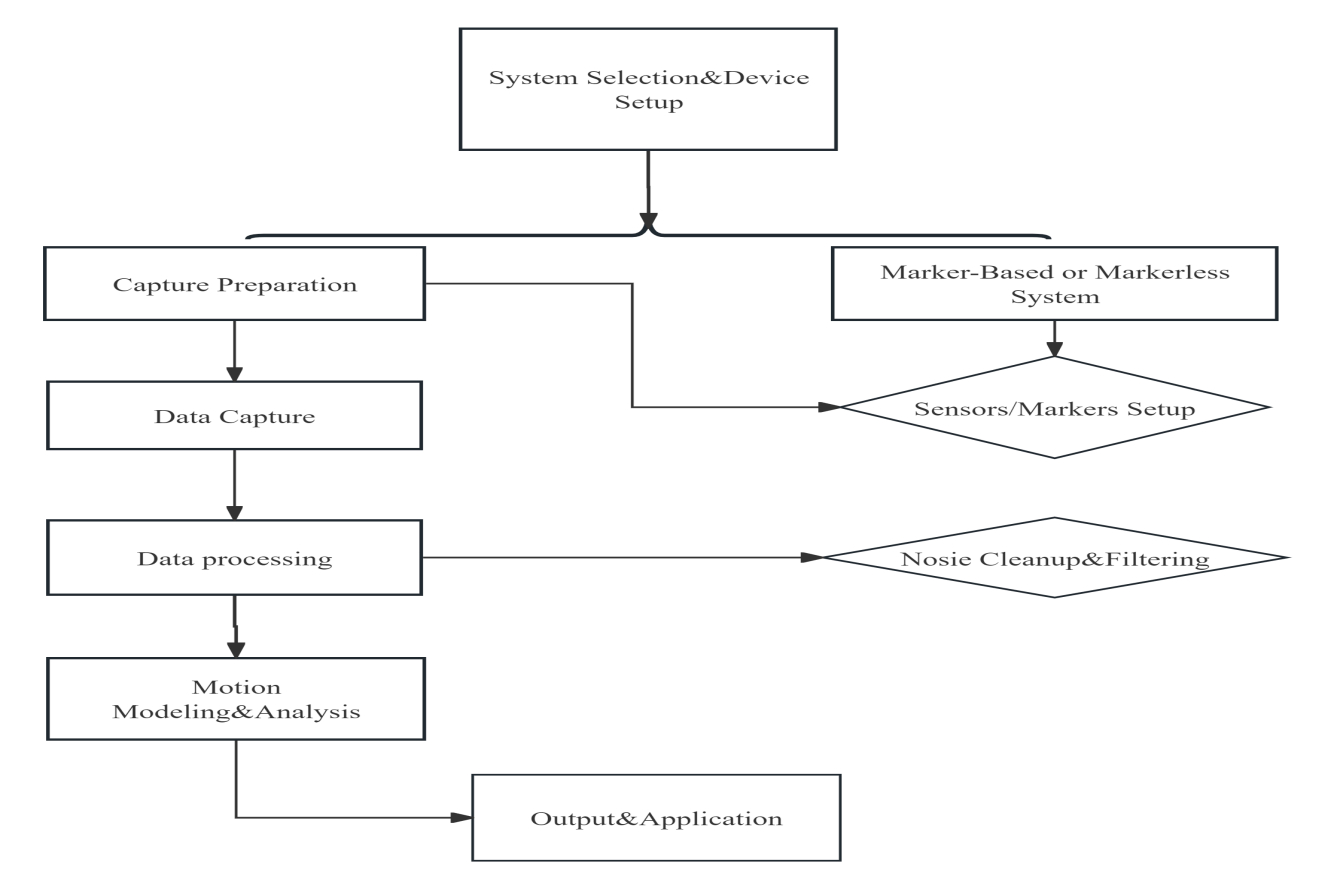

Figure 1: Process of Motion Capture

Figure 1 outlines the fundamental workflow of a motion capture (MoCap) system: the sequence of steps involved in capturing, processing, and analyzing motion data for various applications.

2.2. Motion capture based on sensor classification

Mainstream commercial motion capture technologies can be categorized by sensor type into contact-based and non-contact systems.

Contact-based Motion Capture: A technique that captures motion by placing sensors or markers at key points on the human body or object.

Non-contact Motion Capture: A technique that captures motion using remote sensors without the need to place any physical devices or markers on the human body.

Six widely used motion capture technologies fall under these two categories, as summarized in Table 1.

Table 1: A Comparative Overview of Motion Capture Technologies.

| Technology | Subtype | Advantages | Disadvantages | Applications | Authors |
|---|---|---|---|---|---|
| Inertial Motion Capture | Contact-based | Accurate, real-time prediction, complex | Sensor errors, magnetic interference, needs calibration | Real-time motion prediction in healthcare, virtual reality, and sports | Huailiang Xia et al. [8] |
| Mechanical Motion Capture | Contact-based | High precision, no camera needed, works despite obstacles | Bulky, restrictive, expensive setup | Precision joint data in rehabilitation and robotics | Nakashima M et al. [7] |
| Electromagnetic Motion Capture | Contact-based | Energy-efficient, low-frequency, wearable tech | Sensitive to magnetic fields, environmental interference | Wearable tech, energy harvesting | Ge Shi et al. [9] |
| Optical Motion Capture | Non-contact | High precision, accurate for full-body tracking | Requires multiple cameras, occlusion, lighting issues | Film production, clinical motion analysis | Dasgupta A et al. [5] |
| Depth Camera Motion Capture | Non-contact | Lighting-independent, 3D data, privacy protection | Expensive, complex tasks affect performance | Surgical skill assessment, hand movement tracking, VR simulations | Zuckerman, I. et al. [10] |
| LiDAR Motion Capture | Non-contact | Large-scale capture, accurate in outdoor environments | Sparse data, occlusion, complex data fusion | Outdoor multi-person tracking, robotics | Yiming Ren et al. [11] |

3. Challenges and Current Solutions

3.1. Challenges

Contact-based: These systems rely on multiple sensors, which makes them prone to interference and slower data transmission, especially in complex environments where they are susceptible to magnetic field disturbances [8]. The equipment is bulky, affecting the naturalness of movement and making it inconvenient to use in dynamic motion scenarios [7]. Although these systems offer high accuracy, the need for extensive cable connections significantly restricts the range of motion, and interference from magnetic fields remains a serious challenge [9].

Non-contact: These systems are prone to occlusion and line-of-sight limitations, particularly in multi-character or complex environments [11]. In complex postures or large-scale motion scenarios, point cloud data is prone to distortion, which reduces accuracy [10]. Markerless motion capture relies on computer vision, but its accuracy in complex movements still needs improvement, with errors being particularly significant during high-speed motions [6].

3.2. Current Solutions

Occlusion and data loss issues: Deep learning models such as U-Net and LSTM networks have been used to restore lost marker data, particularly in cases of prolonged data loss. By combining synchronized data from multiple cameras with adaptive regression models, reconstruction errors can be significantly reduced [12]. Real-time data completion techniques based on deep learning have enabled the rapid reconstruction of occluded motion segments. Additionally, multimodal fusion techniques have been employed to integrate different data sources (e.g., IMU and visual data), reducing the impact of occlusion in virtual environments [13].
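For orientation, the simplest form of marker gap recovery can be sketched as follows. This is plain linear interpolation, a classical baseline and not the U-Net or LSTM approach of [12]; it typically holds up only for short gaps, which is part of why learned models are used for prolonged data loss. The function name and the convention of marking missing samples with `None` are assumptions made for illustration.

```python
def fill_gaps_linear(track):
    """Fill missing samples (None) in one marker coordinate track.

    Each gap between two known samples is filled by linear interpolation.
    Gaps at the very start or end are left as None because there is no
    known sample on one side to interpolate from.
    """
    filled = list(track)
    known = [i for i, value in enumerate(track) if value is not None]
    for left, right in zip(known, known[1:]):
        span = right - left
        for i in range(left + 1, right):
            t = (i - left) / span  # fraction of the way across the gap
            filled[i] = track[left] + t * (track[right] - track[left])
    return filled

# One coordinate of a marker that was occluded for three frames.
x_track = [0.0, None, None, None, 4.0, None]
print(fill_gaps_linear(x_track))  # [0.0, 1.0, 2.0, 3.0, 4.0, None]
```

Applying the function to the x, y, and z tracks of a marker separately fills a 3D trajectory under the same assumption.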

Equipment costs and operational complexity: Integrating low-cost, portable sensors such as inertial measurement units (IMUs), ultra-wideband (UWB) devices, and radar with optical systems can improve capture flexibility and accuracy while reducing costs, which makes this approach particularly suitable for large-scale dynamic motion scenarios [14].
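One common way to combine an inertial sensor with an optical measurement is a complementary filter. The sketch below is a generic illustration, not the method of the cited works, and it assumes a single joint angle in degrees, a gyroscope rate in degrees per second, and an optical reading that is `None` whenever the marker is occluded. The function name and the weight `alpha` are hypothetical.

```python
def fuse_angle(prev_angle, gyro_rate, dt, optical_angle=None, alpha=0.98):
    """One complementary-filter step for a single joint angle (degrees).

    The IMU prediction integrates the gyroscope rate over dt. When an
    optical measurement is available, it pulls the estimate back toward
    a drift-free value; when the marker is occluded, the IMU prediction
    is used on its own.
    """
    predicted = prev_angle + gyro_rate * dt
    if optical_angle is None:
        return predicted
    return alpha * predicted + (1.0 - alpha) * optical_angle

# Hypothetical stream of (gyro rate, optical angle or None) at 100 Hz.
angle = 10.0
for rate, optical in [(5.0, 10.1), (5.0, None), (5.0, 10.2)]:
    angle = fuse_angle(angle, rate, dt=0.01, optical_angle=optical)
```

A weight close to 1 trusts the IMU for short-term motion and lets the optical reading correct slow drift; the right value depends on the sensors involved.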

4. Industry applications of motion capture

4.1. Medical applications

In medical rehabilitation, motion capture technology has been widely used to assess and analyze patient movements without the need for physical markers. This approach, known as markerless motion capture (MMC), uses computer vision to record natural human motion, supporting clinical assessments and rehabilitation training. MMC has been applied to evaluate motor functions in conditions such as Parkinson’s disease, cerebral palsy, and post-stroke recovery, providing reliable joint movement data, detecting gait abnormalities, and assessing treatment effectiveness. It serves as a non-invasive and efficient tool for tracking rehabilitation progress, playing a vital role in modern medical evaluations [4].
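As an illustration of the joint movement data such systems provide, a joint angle can be computed from three estimated keypoints. This is a minimal sketch assuming the markerless system already outputs 3D keypoint coordinates; the function name and the example coordinates are hypothetical and not taken from [4].

```python
import math

def joint_angle_deg(a, b, c):
    """Angle at keypoint b, in degrees, between segments b->a and b->c.

    For a knee angle, a, b, and c would be the hip, knee, and ankle.
    """
    v1 = [p - q for p, q in zip(a, b)]
    v2 = [p - q for p, q in zip(c, b)]
    n1 = math.sqrt(sum(p * p for p in v1))
    n2 = math.sqrt(sum(p * p for p in v2))
    if n1 == 0.0 or n2 == 0.0:
        raise ValueError("keypoints must not coincide with the joint")
    dot = sum(p * q for p, q in zip(v1, v2))
    # Clamp to [-1, 1] so rounding error cannot push acos out of range.
    cos_theta = max(-1.0, min(1.0, dot / (n1 * n2)))
    return math.degrees(math.acos(cos_theta))

# Hypothetical hip, knee, and ankle positions in metres.
hip, knee, ankle = (0.0, 0.9, 0.0), (0.0, 0.5, 0.05), (0.0, 0.1, 0.0)
knee_angle = joint_angle_deg(hip, knee, ankle)
```

Tracking this angle frame by frame gives the kind of time series from which gait features can be derived.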

4.2. Film

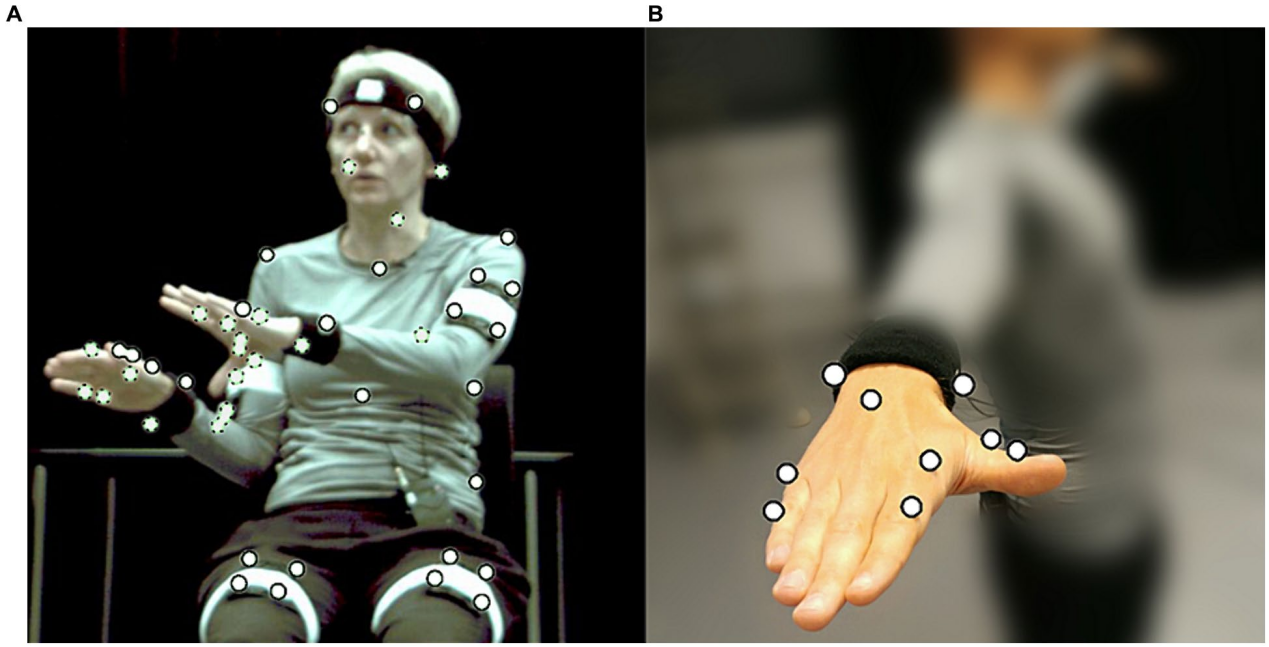

In film production, motion capture systems have been key tools for creating realistic character animations. Using specially designed suits fitted with markers, high-resolution cameras can accurately capture actors’ motion data. This technology allows filmmakers to reproduce complex movements and facial expressions, resulting in lifelike virtual characters. However, a major challenge for these systems has been occlusion, particularly when capturing fine movements, such as those of the fingers, or interactions involving multiple actors [15]. Figure 2 shows motion capture being applied in the Planet of the Apes film series.

Figure 2: Motion capture in film applications [15]

4.3. Game

In the gaming industry, motion capture has been widely used to create realistic character movements and enhance player immersion. Using specially designed suits equipped with sensors, actors’ movements are captured and converted into digital animations. This enables game developers to achieve smooth movements and realistic facial expressions for characters, as seen in games like The Last of Us and Assassin’s Creed. However, the technology still faces challenges in capturing complex interactions, such as combat or fine hand movements, primarily due to sensor occlusion and motion blur during fast actions [16]. Figure 3 shows an example of motion capture applied in game production.

Figure 3: Baby JJ motion capture split screen [16]

Table 2: The applications of motion capture

| Industry | Application | Specific Cases | Methods | Advantages | Authors |
|---|---|---|---|---|---|
| Healthcare | Rehabilitation training, clinical evaluation, AI-based analysis | Stroke recovery evaluation using Kinect, motion analysis for Parkinson’s | Markerless motion capture, Microsoft Kinect, AI and deep learning models | Natural, non-invasive, detailed data for individualized rehabilitation | Lam, W. W. T. et al. [3] |
| Film | Creating virtual characters, facial expression capture, real-time feedback | Avatar, Planet of the Apes (virtual character creation) | Marker-based optical capture, facial motion capture, multi-camera systems | Realistic movement, faster animation production, creative flexibility | Retinger, M. et al. [14] |
| Game | Character motion and expression, real-time capture, multiplayer interactions | Tomb Raider: Underworld, Hellblade: Senua's Sacrifice, Assassin’s Creed | Optical capture with markers, facial capture, real-time capture for VR | High realism in character actions, immersive experiences, multiplayer control | Lavrador, E. et al. [16] |

4.4. Applications of Motion Capture in Psychology

Motion capture has been widely used in the fields shown in Table 2 since the 1990s. More recent literature shows that it has also been applied in psychology.

Motion capture has proven to be valuable in research on gesture-speech synchronization, emotional expression, and the impact of nonverbal behavior on cognitive processes. By accurately recording human movements and converting them into digital models, researchers have been able to analyze the relationship between actions and psychological states in depth. For example, studies on gesture-speech synchronization have found that temporal coordination of gestures significantly enhances memory for verbal information. Additionally, motion capture technology can precisely capture facial and bodily movements associated with emotional expressions, revealing nonverbal behavior patterns under different emotional states. Experiments conducted in virtual environments have further expanded the application of this technology, allowing researchers to control experimental variables through virtual characters and simulate realistic social interactions to analyze participants’ psychological responses. The advantages of this technology include high-precision recording of subtle movements and real-time feedback, which greatly improve the accuracy and controllability of psychological experiments [2]. Figure 4 shows a speaker wearing markers during recording and the placement of the markers on the hands and fingers.

Figure 4: (A) Speaker during MOCAP recording wearing markers (white circles). Dotted outlines indicate markers concealed in the current view. (B) Placement of MOCAP markers on hands and fingers [2].
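One way the timing of a gesture relative to speech might be quantified from marker data is sketched below. It is an illustrative measure, not the analysis used in [2]: it assumes a single hand marker sampled at a fixed frame rate, takes the moment of peak marker speed as the gesture's reference point, and assumes the speech onset time is already known from the audio. All names are hypothetical.

```python
import math

def gesture_speech_offset(positions, fps, speech_onset_s):
    """Time of peak marker speed minus speech onset, in seconds.

    positions: list of (x, y, z) marker positions, one per frame.
    A negative result means the gesture peak precedes speech onset.
    """
    if len(positions) < 2:
        raise ValueError("need at least two frames to estimate speed")
    # Speed over each frame-to-frame interval, in position units per second.
    speeds = [math.dist(p, q) * fps for p, q in zip(positions, positions[1:])]
    peak_interval = max(range(len(speeds)), key=lambda i: speeds[i])
    # Attribute the interval's speed to the frame that ends it.
    peak_time_s = (peak_interval + 1) / fps
    return peak_time_s - speech_onset_s

# Hypothetical hand marker track at 10 frames per second.
track = [(0.0, 0.0, 0.0), (0.0, 0.0, 0.0), (0.1, 0.0, 0.0),
         (0.5, 0.0, 0.0), (0.6, 0.0, 0.0)]
offset = gesture_speech_offset(track, fps=10, speech_onset_s=0.5)
```

In practice the marker track would usually be smoothed before differentiation, since frame-to-frame noise can create spurious speed peaks.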

5. Future Prospects of Motion Capture

5.1. The Popularization of Markerless Motion Capture

Markerless motion capture technology relies on computer vision and artificial intelligence, enabling the capture of human dynamic information without the need for physical markers or devices. In the future, markerless motion capture is expected to achieve breakthroughs in accuracy, real-time processing, and naturalness, particularly in fields such as virtual reality, film production, and sports science. The popularization of this technology will reduce costs and simplify operations, making motion capture systems accessible to general users and small enterprises [5,15].

5.2. Deep Integration with Artificial Intelligence (AI)

The rapid advancement of AI and deep learning models has significantly enhanced the intelligent processing capabilities of motion capture, particularly in automated data analysis, motion prediction, and lost data recovery. AI-based motion capture systems are capable of autonomously learning and predicting complex human motion trajectories, handling more intricate multi-subject scenarios, and generating high-precision motion data in real time [1].

5.3. Capture in Multi-Subject Scenarios

The application of motion capture in multi-subject and complex scenarios is expected to become more widespread. With advancements in system processing capabilities and algorithms, future developments will enable more accurate capture of dynamic interactions among multiple subjects, providing precise data support for fields such as sports performance and medical rehabilitation [16].

5.4. Future Research Directions

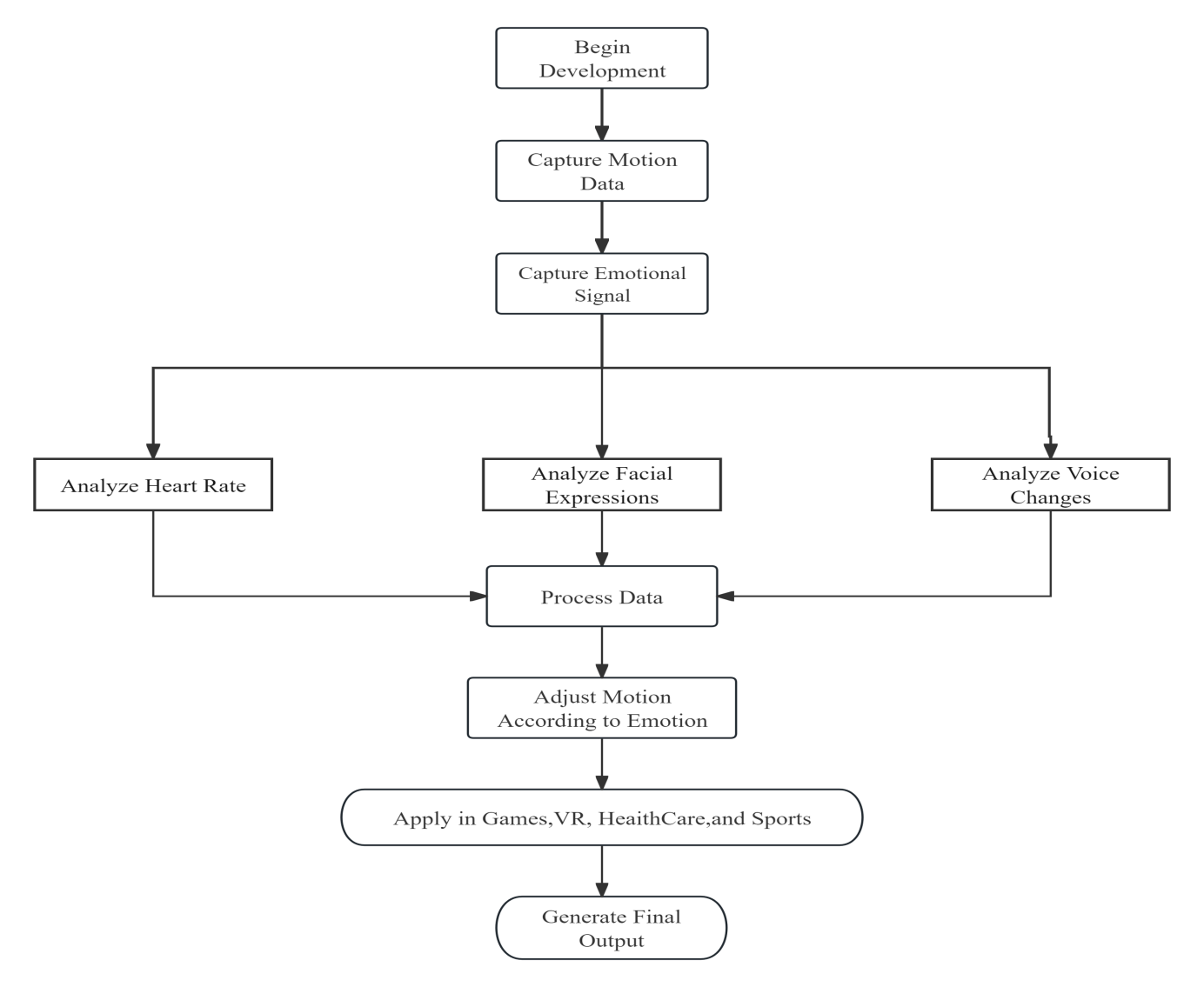

The development of emotion-responsive motion capture could represent a breakthrough in the field. This technology would not only record the subject’s physical movements but also adapt flexibly to the subject’s emotional state. By integrating real-time emotional feedback, derived from physiological signals such as heart rate, micro-expressions, and voice changes, the system would adjust the way movements are read and represented. The specific process is illustrated in Figure 5.

Figure 5: Emotion-Responsive Motion Capture: A New Breakthrough

6. Conclusion

This paper provides a systematic review of motion capture technology and its applications across various fields, with a focus on analyzing the advantages and disadvantages of contact-based and non-contact-based capture systems, particularly in medical, entertainment, and sports science applications. By examining multiple technologies, such as inertial, mechanical, electromagnetic, optical, depth cameras, and LiDAR, the study explores issues related to accuracy, data processing, and cost. The findings indicate that future developments in motion capture will move toward cost optimization, lightweight equipment, and markerless capture, with further integration with artificial intelligence (AI) driving increased system intelligence and adoption.

In the future, markerless motion capture is expected to be applied in a wider range of fields due to its convenience and low cost, particularly in virtual reality, film production, and sports analysis. With deeper integration of AI and deep learning, motion capture technology will achieve significant improvements in accuracy and real-time performance, enabling more complex and detailed motion prediction and data recovery. This will provide robust technical support for areas such as education, intelligent robotics, and autonomous driving, further advancing the broad adoption and development of motion capture technology.

References

[1]. Park, M., Cho, Y., Na, G., & Kim, J. (2023). Application of Virtual Avatar using Motion Capture in Immersive Virtual Environment. International Journal of Human–Computer Interaction, 1–15. https://doi.org/10.1080/10447318.2023.2254609.

[2]. Nirme, J., Gulz, A., Haake, M., Gullberg, M. (2024). Early or synchronized gestures facilitate speech recall-a study based on motion capture data. Front Psychol, 15: 1345906. doi: 10.3389/fpsyg.2024.1345906. PMID: 38596333; PMCID: PMC11002957.

[3]. Suo, X., Tang, W., Li, Z. (2024). Motion Capture Technology in Sports Scenarios: A Survey. Sensors. 24(9):2947. https://doi.org/10.3390/s24092947.

[4]. Lam, W. W. T., Tang, Y. M. & Fong, K. N. K. (2023). A systematic review of the applications of markerless motion capture (MMC) technology for clinical measurement in rehabilitation. J NeuroEngineering Rehabil 20, 57. https://doi.org/10.1186/s12984-023-01186-9.

[5]. Nakashima, M., Kanie, R., Shimana, T., Matsuda, Y., Kubo, Y. (2023). Development of a comprehensive method for musculoskeletal simulation in swimming using motion capture data. Proceedings of the Institution of Mechanical Engineers, Part P: Journal of Sports Engineering and Technology. 237(2):85-95. doi:10.1177/1754337119838395

[6]. Xia, H. L., Zhao, X. Y., Chen, Y., Zhang, T. Y., Yin, Y. G., Zhang, Z. H. (2023). Human Motion Capture and Recognition Based on Sparse Inertial Sensor, Journal of Advanced Computational Intelligence and Intelligent Informatics, Volume 27, Issue 5, Pages 915-922, Released on J-STAGE September 21, 2023, Online ISSN 1883-8014, Print ISSN 1343-0130, https://doi.org/10.20965/jaciii.2023.p0915

[7]. Shi, G., Liang, X., Xia, Y. S., Jia, S. Y., Hu, X. Z., Yuan, M. Z., Xia, H. K., Wang, B. R. (2024). A novel dual piezoelectric-electromagnetic energy harvester employing up-conversion technology for the capture of ultra-low-frequency human motion, Applied Energy, Volume 368, 123479, ISSN 0306-2619, https://doi.org/10.1016/j.apenergy.2024.123479.

[8]. Dasgupta, A., Sharma, R., Mishra, C., Nagaraja, V. H. (2023). Machine Learning for Optical Motion Capture-Driven Musculoskeletal Modelling from Inertial Motion Capture Data. Bioengineering. 10(5):510. https://doi.org/10.3390/bioengineering10050510

[9]. Zuckerman, I., Werner, N., Kouchly, J. et al. (2024). Depth over RGB: automatic evaluation of open surgery skills using depth camera. Int J CARS 19, 1349–1357. https://doi.org/10.1007/s11548-024-03158-3

[10]. Ren, Y. M., Zhao, C. F., He, Y. N., Cong, P. S., Liang, H., Yu, J. Y., Xu, L. and Ma, Y. X. (2023). LiDAR-aid Inertial Poser: Large-scale Human Motion Capture by Sparse Inertial and LiDAR Sensors. IEEE Transactions on Visualization and Computer Graphics 29, 5, 2337–2347. https://doi.org/10.1109/TVCG.2023.3247088

[11]. Ayane, K., Naoki, T., Kohei, M., Kazutoshi, K. (2023). Markerless motion capture of hands and fingers in high-speed throwing task and its accuracy verification, Mechanical Engineering Journal, 10(6), p.23-00220, Online ISSN 2187-9745, https://doi.org/10.1299/mej.23-00220.

[12]. Yuhai, O., Ahnryul, C., Yubin, C., Hyunggun, K. and Joung, H. M. (2024). Deep-Learning-Based Recovery of Missing Optical Marker Trajectories in 3D Motion Capture Systems. Bioengineering 11, no. 6: 560. https://doi.org/10.3390/bioengineering11060560

[13]. Park, M., Cho, Y., Na, G., & Kim, J. (2023). Application of Virtual Avatar using Motion Capture in Immersive Virtual Environment. International Journal of Human–Computer Interaction, 1–15. https://doi.org/10.1080/10447318.2023.2254609

[14]. Retinger, M., Michalski, J., Kozierski, P., & Giernacki, W. (2023, June). Toward improving tracking precision in motion capture systems. In 2023 International Conference on Unmanned Aircraft Systems (ICUAS) (pp. 919-925). IEE

[15]. Sharma, S., Verma, S., Kumar, M. and Sharma, L. (2019). Use of Motion Capture in 3D Animation: Motion Capture Systems, Challenges, and Recent Trends. 2019 International Conference on Machine Learning, Big Data, Cloud and Parallel Computing (COMITCon), Faridabad, India, pp. 289-294, doi: 10.1109/COMITCon.2019.886

[16]. Lavrador, E., Teixeira, L., Kunz, S. (2023). Motion Capture as a Tool of Empowerment for Female Main Characters. In: Brooks, A.L. (eds) ArtsIT, Interactivity and Game Creation. ArtsIT 2022. Lecture Notes of the Institute for Computer Sciences, Social Informatics and Telecommunications Engineering, vol 479. Springer, Cham. https://doi.org/10.1007/978-3-031-28993-4_35

Cite this article

Yang,J. (2024). Analysis of Motion Capture Technology Research and Typical Applications. Applied and Computational Engineering,112,130-138.

Data availability

The datasets used and/or analyzed during the current study will be available from the authors upon reasonable request.

About volume

Volume title: Proceedings of the 5th International Conference on Signal Processing and Machine Learning

© 2024 by the author(s). Licensee EWA Publishing, Oxford, UK. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.
