1. Introduction

The Artificial Intelligence (AI) revolution has brought about transformations in almost every industry across the globe. It has changed the way we live, work, and interact with the world forever. These improvements in healthcare, finance, marketing, and various other areas arise from the unprecedented data we are generating. AI learns from data, makes predictions from data, and optimises decision making to improve processes. While the benefits of this explosion in AI innovation and data are manifold, the collection, storage, and use of personal data raises many privacy concerns. Individuals inherently have little control over what, how, and with whom their data is shared and utilised by AI systems, which can ultimately lead to misuse or exploitation of sensitive information. The core question this research aims to answer is how AI innovation canarding of privacy. It will begin by highlighting the risks that massive data collection, cybersecurity, and lack of transparency in AI algorithms pose to personal privacy. It will also discuss the legal frameworks such as the General Data Protection Regulation (GDPR) trying to rein in the use of data [1]. The research will further explore technical solutions and strategies for development of AI in such a way that the privacy of endusers is enhanced without stifling innovation. The aim of the research is to address both the academic and practical concerns around the protection of personal information in the AI-driven digital world.

2. Key Challenges in AI and Personal Data Privacy

2.1. Massive Data Collection in AI Systems

One of the biggest problems associated with AI is the need for huge amounts of personal data for training AI systems. Because AI systems learn by observing patterns and making predictions, they need to gather large amounts of personal data to learn and improve. This data-dependent nature of AI systems raises significant privacy concerns. Our location, health data, search history, personal preferences and other personal information is often collected without permission or knowledge. The large-scale nature of this data collection allows us to create comprehensive profiles about individuals that, if obtained by a hacker or other bad actor, could be used for malevolent purposes. The problem is that we don’t have control over how such data is being used. The challenge, then, is to design AI systems that work without invading our personal privacy [2].

2.2. Data Breaches and Cybersecurity Risks

Even artificial intelligence systems are not exempt from cybersecurity risks. Dig new risks as the introduction of AI into legacy systems may create new vulnerabilities. For example, data breaches can potentially expose billions of personal data points that have been stored in AI systems. Weaknesses in the system’s security apparatus can be exploited by hackers to infiltrate sensitive data and expose individuals to potential harm. Such breaches are not only expensive in terms of monetary damages but are also worrisome for public trust in AI platforms. The protection of AI platforms against such risks is crucial because the consequences of data breaches can be detrimental to as identity theft, financial fraud and reputational damage to organisations [3].

2.3. Opaque Algorithms and Accountability

Algorithms are widely referred to as ‘black boxes’, as it’s often not clear how they reach their decisions. This opacity presents a serious obstacle to accountability. In contexts where Al systems process personal data, for example, individuals have a right to know how their data is being used, but this isn’t possible when there’s opacity in the algorithms. This lack of algorithmic accountability is ethically problematic. It also makes it difficult to hold Al systems responsible and introduces complications for regulatory efforts.If personal data is misused, or processed in ways that breach privacy regulations, it can be difficult to assign responsibility. A good way to illustrate the problems caused by opacity in Al is with the Accountability Formula (AF):

\( AF=T+E+R \) (1)

T stands for Transparency meaning that it is easy to explain how the data is processed and decisions made. E stands for Explainability meaning that it is easy to explain the logic behind Al outcomes, and R stands for Responsibility giving a clear definition of who is responsible to the data and to the decisions.AF stands for transparency, explainability and accountability, meaning a high AF value is a system with a high level of transparency, explainability and accountability [4].Creating transparent Al systems is crucial for achieving the goals of building trust and protecting privacy.

3. Legal Frameworks Governing Data Privacy

3.1. The Role of GDPR in AI Applications

The General Data Protection Regulation (GDPR) is the most well-known and comprehensive data protection legal framework in the world, and it covers all data collection and processing about individuals in the European Union (EU), especially in relation to AI. It obliges the companies to get consent from the individuals for processing their personal data, and it also includes the principles of data minimisation and security. In addition to a new right to privacy control, the compliance costs associated with GDPR for business are enormous, with investments required in legal expertise, technical infrastructure and operational modifications (eg, hiring data protection officers, upgrading data management systems). These costs are particularly onerous for smaller companies. For consumers, the legislation has raised awareness of data privacy rights, giving rise to a demand for transparency and control. Trust between the consumer and business has been developed, although the constant requests for consent can also lead to frustration. Outside the EU, GDPR has significantly affected companies that want to sell their goods and services in the EU, including internet giants such as Google and Amazon, which have had to change the way that they treat data, using GDPR-compatible tools; and it has become a reference point in debates about data privacy across the globe, with other regions following suit in drafting similar frameworks.[5] Table 1 illustrates a case study on the impacts of the GDPR on consent rates, privacy breaches and data minimisation, and how it serves two functions – as both a data protection mechanism and a broader operational environment for AI companies.

Table 1: GDPR Impact on AI Applications

Company Name | Compliance Date | Personal Data Processed (GB) | Data Collection Consent Rate (%) | Privacy Breach Incidents (Pre-GDPR) | Privacy Breach Incidents (Post-GDPR) | Data Minimization Achieved (%) |

Tech Solutions Inc. | 2023/1/15 | 500 | 88 | 5 | 1 | 80 |

AI Innovations Ltd. | 2023/3/22 | 1200 | 92 | 8 | 2 | 85 |

DataProtect Corp. | 2023/5/10 | 950 | 85 | 3 | 0 | 90 |

CyberNet AI | 2023/7/5 | 760 | 90 | 6 | 1 | 87 |

InnoAI Systems | 2023/9/18 | 1100 | 95 | 7 | 0 | 88 |

3.2. Comparing U.S. and EU Data Privacy Laws

In fact, there is a clear difference between the US and the EU in the attention given to regulating personal data as part of AI applications. In the EU, since 2018 the General Data Protection Regulation (GDPR) has supported the rights of individuals as the sovereign owners of their personal data. No such attentive overarching federal data privacy law exists in the US – and no move to establish one appears imminent. Instead, the US legal regime continues to erect sector-specific regulations, such as the Health Insurance Portability and Accountability Act (HIPAA) for healthcare data, the California Consumer Privacy Act (CCPA) for personal data in some sectors, etc. This patchwork of laws poses significant problems for expanding AI companies in navigating the regulatory landscape across sectors and jurisdictions, especially for those operating across borders. In some contexts, there is a regulatory vacuum, especially when it comes to generalised AI applications, where, for example in the tech world, companies actively operate with little to no regulation – guided primarily by corporate social responsibility.[6] In the US, worries about overregulation that would hold back innovation and economic growth have contributed to a more lenient regime. However, even within societies, attitudes tend to be complex: while the dominant interests in the tech sector push for a low-regulation approach, there’s also growing public demand for greater data privacy protections that could result in more regulation in the future. European AI companies, by contrast, are required to operate in the strict confines of GDPR.

3.3. Future Trends in Global Privacy Regulations

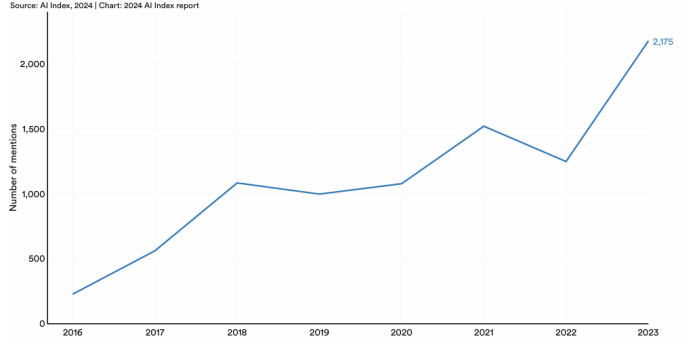

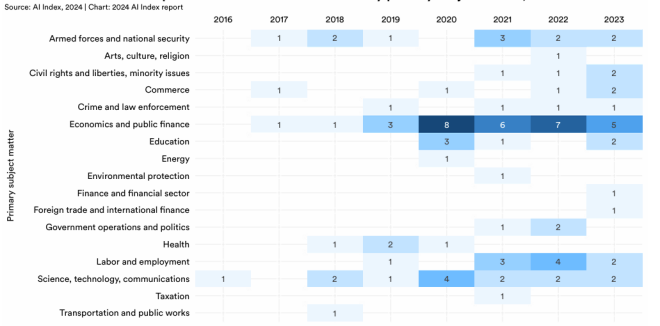

As shown in Figure 1 and Figure 2, on a global scale, the legal and regulatory landscape around privacy is responding to the AI boom. In the US, there is not yet a general federal law, but there are sector-specific laws proliferating at the state level (ie, Californian and Virginian laws), and the momentum for a federal law is increasing because of the increasing influence of AI.[7] Even outside of GDPR in Europe, new laws such as the EU Artificial Intelligence Act being proposed to regulate high-risk AI systems (which would include large models in the future, under the auspices of real world use) would require greater transparency – and accountability – from AI systems. It’s possible that the EU AI Act will serve as a new model for AI governance across the world. As AI continues to evolve, the US and Europe appear to be building systems of law to keep pace with its unique challenges.

Figure 1: Number of mentions of AI in legislative proceedings in 80 select countries, 2016–23 (source: HAI_AI-Index-Report-2024)

Figure 2: Number of AI-related bills passed into law in select countries by primary subject matter, 2016–23 (source: HAI_AI-Index-Report-2024)

4. Technical Solutions for Enhancing Privacy

4.1. Privacy-Preserving AI Techniques

Techniques such as differential privacy and federated learning, which allow AI models to be trained without revealing users’ sensitive information (such as their medical condition), are commonly required of US tech giants such as Apple and Google, but are also becoming much more widespread. Differential privacy keeps AI models performing well while adding ‘noise’ to datasets to ensure that they can be trained without any individual’s identity being compromised. Federated learning, in turn, allows for AI models to be trained across a large number of distributed devices such as those in mobile health without sending raw data to the central server, which would increase the possibility of data being breached. While these technologies are now most commonly associated with US companies, they are likely to become increasingly adopted around the world [8]. With these ‘privacy-preserving’ techniques that appear to enable advances in AI model development without compromising privacy, it’s no surprise that multinational companies will want to adopt them where regulation requires high levels of data privacy across jurisdictions. Sure, these techniques have first been adopted by US tech giants, but they are likely to become more relevant globally going forward. Notably, they are particularly suited for sectors that require the use of large amounts of personal information. And this could turn out to be the secret sauce for building advanced AI systems without individual users having to sacrifice fundamental rights to privacy. These technologies are likely to be the future of AI.

4.2. Data Encryption and Secure AI Systems

Encryption technologies are crucial to enable AI systems to secure personal data as raw data is unreadable to intruders. For example, end-to-end encryption for AI means that the data is encrypted when it leaves the user’s device, during transmission, when it is being stored, and when it is being processed by the AI system. More specifically, secure AI systems, with encryption technologies, can help organisations implement the consideration of privacy by design, aligning the AI system’s privacy practices with data protection regulation such as GDPR. This is important for personal information because it can be encrypted from the very beginning and then kept secure during the entire data lifecycle. As AI systems will inevitably have more personal data to process in the future, encryption will remain a vital tool to help improve privacy. Table 2 data from a GDPR simulation for case study purposes [9]. The impact of GDPR on various AI-powered companies after implementation is detailed and compared to the data before implementation – namely, the impact on data collection consent rates, privacy breaches, and data minimisation. It aims to present how GDPR can reshape the application of AI as a tool in personal data processing by strengthening privacy itself.

Table 2: GDPR Impact Case Study Data

Company Name | Year of GDPR Implementation | Pre-GDPR Consent Rate (%) | Post-GDPR Consent Rate (%) | Pre-GDPR Privacy Breaches (incidents) | Post-GDPR Privacy Breaches (incidents) | Pre-GDPR Data Minimization (%) | Post-GDPR Data Minimization (%) |

AI Vision Tech | 2018 | 65 | 88 | 12 | 2 | 50 | 80 |

NeuroNet Solutions | 2018 | 70 | 92 | 15 | 1 | 60 | 85 |

DeepData Analytics | 2019 | 62 | 85 | 8 | 3 | 55 | 88 |

Quantum AI Labs | 2019 | 68 | 90 | 10 | 1 | 58 | 90 |

NextGen AI | 2020 | 75 | 95 | 7 | 0 | 63 | 87 |

5. Strategies for Balancing Innovation and Privacy

5.1. Building Privacy-Centric AI Models

Innovation and privacy can coexist if the right safeguards are in place. AI developers need to design models with privacy in mind. Every new model should avoid data breach by deploying techniques such as differential privacy and federated learning. This ensures that every AI model is privacy respecting. More importantly, people will trust the AI technology once developers adhere to data privacy. Eventually, the use and adoption of AI will expand. To incentivise AI developers to design privacy-respecting models, governments should pass laws requiring transparency and demanding corporate accountability, and enforcing privacy by design in AI development. Alongside this, governments need to educate their citizenry on data privacy. This will empower individuals to demand accountability from AI providers. Once we achieve privacy-respecting AI design, appropriate regulation and greater public education, AI innovation becomes possible without undermining privacy.

5.2. Integrating Privacy Technologies in AI Systems

This is where we see the value of privacy-enhancing technologies, such as encryption and federated learning incorporated into AI systems. These technologies allow AI to function without compromising on privacy, by processing data in a protected manner. In addition, by embedding privacy technologies at various stages of the process – such as data collection, processing, storage etc – AI innovations can better meet desired privacy standards [10]. This reduces, but doesn’t eliminate, trade-offs. The aim is to achieve meaningful gains for both innovation and regulation. This approach promotes public confidence in AI and maintains ongoing community support for AI innovations. Companies that are serious about privacy and are being sensitive to it will also be less exposed to the fines and reputational damage associated with data breaches.

6. Conclusion

This data-rich era poses the ongoing challenge of providing the best service or product, while minimising the data drawn from users. What are the limits of AI innovation versus data protection? The promises of AI don’t come for free. AI’s reliance upon the collection of massive amounts of personal data triggers vulnerabilities and anxieties surrounding privacy, including data leakages, cyber security attacks, dark algorithms, algorithmic explanations, governability, transparency and accountability. Legal privacy frameworks such as the GDPR are important steps towards viewing data privacy as a human right. But so too are technical mechanisms that allow for the minimisation of data exposure important to maintain AI functionality – such as differential privacy, federated learning and encryption. Privacy should be considered at the design stage, to encourage public confidence and responsible AI. This approach serves corporate social responsibility and promotes cross-industry cooperation and international collaboration. As AI proliferates, greater transparency, accountability and privacy in AI systems may enhance AI adoption and innovation.

References

[1]. Humerick, Matthew. "Taking AI personally: how the EU must learn to balance the interests of personal data privacy & artificial intelligence." Santa Clara High Tech. LJ 34 (2017): 393.

[2]. Van den Hoven van Genderen, Robert. "Privacy and data protection in the age of pervasive technologies in AI and robotics." Eur. Data Prot. L. Rev. 3 (2017): 338.

[3]. Stahl, Bernd Carsten, and David Wright. "Ethics and privacy in AI and big data: Implementing responsible research and innovation." IEEE Security & Privacy 16.3 (2018): 26-33.

[4]. Onik, Md Mehedi Hassan, K. I. M. Chul-Soo, and Y. A. N. G. Jinhong. "Personal data privacy challenges of the fourth industrial revolution." 2019 21st International Conference on Advanced Communication Technology (ICACT). IEEE, 2019.

[5]. Meurisch, Christian, Bekir Bayrak, and Max Mühlhäuser. "Privacy-preserving AI services through data decentralization." Proceedings of The Web Conference 2020. 2020.

[6]. Elliott, David, and Eldon Soifer. "AI technologies, privacy, and security." Frontiers in Artificial Intelligence 5 (2022): 826737.

[7]. Liu, Yu-li, et al. "Privacy in AI and the IoT: The privacy concerns of smart speaker users and the Personal Information Protection Law in China." Telecommunications Policy 46.7 (2022): 102334.

[8]. Majeed, Abdul, and Seong Oun Hwang. "When AI meets information privacy: The adversarial role of AI in data sharing scenario." IEEE Access (2023).

[9]. Voigt, Paul, and Axel Von dem Bussche. "The eu general data protection regulation (gdpr)." A Practical Guide, 1st Ed., Cham: Springer International Publishing 10.3152676 (2017): 10-5555.

[10]. Hintze, Mike, and Khaled El Emam. "Comparing the benefits of pseudonymisation and anonymisation under the GDPR." Journal of Data Protection & Privacy 2.2 (2018): 145-158.

Cite this article

Xiao,H.;Li,J. (2024). Balancing Innovation and Privacy: Safeguarding Personal Information in the AI-Driven Digital Era. Applied and Computational Engineering,118,23-29.

Data availability

The datasets used and/or analyzed during the current study will be available from the authors upon reasonable request.

Disclaimer/Publisher's Note

The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of EWA Publishing and/or the editor(s). EWA Publishing and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

About volume

Volume title: Proceedings of the 3rd International Conference on Software Engineering and Machine Learning

© 2024 by the author(s). Licensee EWA Publishing, Oxford, UK. This article is an open access article distributed under the terms and

conditions of the Creative Commons Attribution (CC BY) license. Authors who

publish this series agree to the following terms:

1. Authors retain copyright and grant the series right of first publication with the work simultaneously licensed under a Creative Commons

Attribution License that allows others to share the work with an acknowledgment of the work's authorship and initial publication in this

series.

2. Authors are able to enter into separate, additional contractual arrangements for the non-exclusive distribution of the series's published

version of the work (e.g., post it to an institutional repository or publish it in a book), with an acknowledgment of its initial

publication in this series.

3. Authors are permitted and encouraged to post their work online (e.g., in institutional repositories or on their website) prior to and

during the submission process, as it can lead to productive exchanges, as well as earlier and greater citation of published work (See

Open access policy for details).

References

[1]. Humerick, Matthew. "Taking AI personally: how the EU must learn to balance the interests of personal data privacy & artificial intelligence." Santa Clara High Tech. LJ 34 (2017): 393.

[2]. Van den Hoven van Genderen, Robert. "Privacy and data protection in the age of pervasive technologies in AI and robotics." Eur. Data Prot. L. Rev. 3 (2017): 338.

[3]. Stahl, Bernd Carsten, and David Wright. "Ethics and privacy in AI and big data: Implementing responsible research and innovation." IEEE Security & Privacy 16.3 (2018): 26-33.

[4]. Onik, Md Mehedi Hassan, K. I. M. Chul-Soo, and Y. A. N. G. Jinhong. "Personal data privacy challenges of the fourth industrial revolution." 2019 21st International Conference on Advanced Communication Technology (ICACT). IEEE, 2019.

[5]. Meurisch, Christian, Bekir Bayrak, and Max Mühlhäuser. "Privacy-preserving AI services through data decentralization." Proceedings of The Web Conference 2020. 2020.

[6]. Elliott, David, and Eldon Soifer. "AI technologies, privacy, and security." Frontiers in Artificial Intelligence 5 (2022): 826737.

[7]. Liu, Yu-li, et al. "Privacy in AI and the IoT: The privacy concerns of smart speaker users and the Personal Information Protection Law in China." Telecommunications Policy 46.7 (2022): 102334.

[8]. Majeed, Abdul, and Seong Oun Hwang. "When AI meets information privacy: The adversarial role of AI in data sharing scenario." IEEE Access (2023).

[9]. Voigt, Paul, and Axel Von dem Bussche. "The eu general data protection regulation (gdpr)." A Practical Guide, 1st Ed., Cham: Springer International Publishing 10.3152676 (2017): 10-5555.

[10]. Hintze, Mike, and Khaled El Emam. "Comparing the benefits of pseudonymisation and anonymisation under the GDPR." Journal of Data Protection & Privacy 2.2 (2018): 145-158.